2013 edTPA Field Test: Summary Report | November 2013

Copyright © 2013 Stanford Center for Assessment, Learning and Equity. All rights reserved. The edTPA trademarks are owned by The

Board of Trustees of the Leland Stanford Junior University. Use of the edTPA trademarks is permitted only pursuant to the terms of a

written license agreement.

Preface and Acknowledgements

This report summarizes key data and information from technical reports that developers of edTPA, a performance

assessment for beginning teachers, have prepared based on several years of development and field testing.

These reports are shared with state education agencies and institutions of higher education that are considering

adoption of edTPA, with the understanding that they should decide whether and how to use edTPA based on their own

review of the available information. A full version of the edTPA Technical Report will be published later this academic

year. The edTPA Technical Advisory Committee will continue its review of the edTPA data from both the development

and operation phases and make recommendations for future research.

This summary report was authored by Raymond L. Pecheone, Executive Director, Stanford Center for Assessment,

Learning and Equity (SCALE); Benjamin Shear, Research Assistant, SCALE; Andrea Whittaker, Director, Teacher

Performance Assessment, SCALE; and Linda Darling-Hammond, Charles E. Ducommun Professor of Education at the

Stanford Graduate School of Education.

The analyses contained in this summary report were reviewed by technical committee members and advisors Lloyd

Bond, Professor of Educational Research Methodology (emeritus), University of North Carolina, Greensboro; Edward

Haertel, Jacks Professor of Education (emeritus), Stanford University Graduate School of Education; Stuart Kahl,

Founding Principal, Measured Progress; and Catherine Taylor, Professor of Psychology: Measurement, Statistics, and

Research, University of Washington and chair of the Washington State Technical Advisory Committee.

We are grateful to them for their advice and recommendations, which strengthened the development and analyses of

edTPA. We also are grateful to the funders of the research and development process, including the Ford Foundation, the

MetLife Foundation, the Morgan Family Foundation, the Stuart Foundation and NCS Pearson Corporation.

SCALE is the lead developer for edTPA, and Stanford University is the sole owner of edTPA. The university has an

agreement with Evaluation Systems, a unit of Pearson, licensing it to administer and distribute edTPA.

The developers of edTPA will continue to study data about edTPA; they welcome comments regarding this report and its

data and will carefully consider such comments as they continue to update and improve edTPA.

Contents

Executive Summary

Introduction

edTPA: Assessing Readiness to Teach

edTPA: Developed by the Profession for the Profession

States Participating in edTPA

edTPA as Support and Assessment

Assessment Design and Architecture

Design Rationale

Authentic Tasks

Alignment with Standards

Subject-Specific Assessments

Common Architecture and Shared Pedagogy

Scoring Rubrics

Development

Professional Involvement and Review

Major Milestones

Scoring

Scoring by the Profession for the Profession

Qualifications

Training

ePortfolio Scoring Management System

Scoring Process

Adjudication Process

Analyses

Descriptive Data

Validity

Reliability

Standard Setting

Use of the Total Score for Decisionmaking

Setting a Recommended Professional Performance Standard

Professional Performance Standard

Submitting, Reporting, and Retaking edTPA

Conclusion: Moving Forward with edTPA

Appendix A: edTPA Technical and Policy Advisors

Appendix B: Score Means and Standard Deviations

Appendix C: Factor Analyses

Appendix D: edTPA Standard-Setting Panels

Executive Summary

Overview: This report provides a summary of the design, development process, and validation results for a new

performance assessment for beginning teachers: edTPA.¹ edTPA is the first nationally available, educator-designed

performance assessment for teachers entering the profession. It provides a measure of teacher candidates’ readiness to

teach that can inform program completion, licensure, and accreditation decisions, while supporting candidate learning

and preparation program improvement.

Subject-Specific and Performance-Based: edTPA is a subject-specific assessment, which includes versions for 27

different teaching fields. The assessment systematically examines an authentic cycle of teaching aimed at specific

learning goals, using evidence about 1) planning, 2) instruction, and 3) student assessment derived from candidates’

practice in their student teaching or internship placement. This evidence includes lesson plans, instructional materials,

student assignments and assessments, feedback on student work, and unedited video recordings of instruction. Also

assessed through these three tasks are candidates’ abilities to develop academic language and to analyze teaching.

Aligned to Standards: edTPA is aligned with the newly revised Interstate Teacher Assessment and Support Consortium

(InTASC) standards for beginning teacher licensing, as well as the Common Core State Standards. edTPA also shares key

points of alignment with the Council for Accreditation of Educator Preparation (CAEP) standards.

Developed by the Profession: Modeled after the National Board for Professional Teaching Standards’ assessments of

accomplished veteran teachers, edTPA was created with input from teachers and teacher educators across the country

in a process led by Stanford University’s Center for Assessment, Learning and Equity and supported by the American

Association of Colleges for Teacher Education. More than 1,000 educators from 29 states and the District of Columbia

and more than 430 institutions of higher education participated in the design, development, piloting, and field testing of

edTPA.

Scored by the Profession: An additional 650 teachers and teacher educators have served as scorers of the assessments

for the 12,000 plus candidates who participated in the field tests. Scorers must be P-12 or higher education educators

with significant pedagogical content knowledge in the field in which they score, as well as experience in working as

instructors or mentors for novice teachers. In the field test, about half of the scorers were teacher educators and about

half were practicing classroom teachers, many of whom are National Board certified.

“The content of edTPA is related to the content of the job of a teacher and fits the requirements

for the job. Most stakeholders in public education—including legislators, policymakers,

philanthropists, and the general public—are demanding teacher accountability for student

learning outcomes. The same stakeholders are demanding that teacher educators are held

accountable for certifying that completers of preservice teacher preparation programs have

developed entry level competence for classroom teaching. The edTPA is designed to meet these

new demands for on-the-job teaching performance accountability.”

Etta R. Hollins, Ph.D., Professor and Kauffman Endowed Chair for Urban Teacher Education,

University of Missouri, Kansas City

¹ The analyses contained in this summary report, which are drawn from the full edTPA Technical Report, were reviewed by technical

advisors Lloyd Bond, Professor of Educational Research Methodology (emeritus), University of North Carolina, Greensboro; Edward

Haertel, Jacks Professor of Education (emeritus), Stanford University Graduate School of Education; Stuart Kahl, Founding Principal,

Measured Progress; and Catherine Taylor, Professor of Psychology: Measurement, Statistics, and Research, University of Washington

and chair of the Washington State Technical Advisory Committee.

Scorer training and certification is a rigorous process that takes about 20 hours to complete. Scoring is conducted using

15 analytic rubrics applied to the three major tasks in the assessment, each evaluated on a five-point scale. Thus total

scores on the assessment can range from 15 to 75. The scoring process and reporting are coordinated by Evaluation

Systems, a division of Pearson, which distributes the assessments and manages the electronic platform on which they

sit.
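
The total-score arithmetic described above can be sketched in a few lines of Python. This is an illustration only, not the scoring system's actual implementation: the function name `total_score` is hypothetical, and the sketch assumes that the five-point scale runs from 1 to 5, which is consistent with the stated total range of 15 to 75.

```python
# Illustrative sketch of the total-score arithmetic; not the operational scoring code.
NUM_RUBRICS = 15   # number of analytic rubrics, as stated in the report
SCALE_MIN = 1      # assumption: five-point scale runs 1..5 (consistent with totals of 15..75)
SCALE_MAX = 5


def total_score(rubric_scores):
    """Sum 15 rubric scores after checking each is on the five-point scale."""
    if len(rubric_scores) != NUM_RUBRICS:
        raise ValueError(f"expected {NUM_RUBRICS} rubric scores, got {len(rubric_scores)}")
    for score in rubric_scores:
        if not SCALE_MIN <= score <= SCALE_MAX:
            raise ValueError(f"rubric score {score} is outside {SCALE_MIN}-{SCALE_MAX}")
    return sum(rubric_scores)


# Lowest and highest possible totals
lowest = total_score([SCALE_MIN] * NUM_RUBRICS)
highest = total_score([SCALE_MAX] * NUM_RUBRICS)
```

Under these assumptions, a candidate scoring 3 on every rubric would receive a total of 45.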

Valid and Reliable: A set of validation studies was conducted to confirm the content validity, job relevance, and

construct validity of the assessments. In combination, these studies documented that the assessment is well aligned

to the professional standards it seeks to measure, that it reflects the actual work of teaching, and that the score measures a

primary characteristic of effective teaching. Inter-rater reliability was evaluated using several different statistical tests.

edTPA reliabilities reported here range from .83 to .92 (indicating the percentage of scorer agreement).

Score Distributions: This report analyzes more than 4,000 submissions from the 2013 field test by content field, area of

teaching, and candidate group. Score differentials across fields were relatively small. The scores were generally higher

in secondary teaching fields than in most elementary and middle childhood fields. Candidates varied in their mastery

of different teaching skills. As might be expected of pre-service teachers, candidates performed best on the

planning task, followed by the instruction task, and then the assessment task. This is consistent with other studies that have

found that learning to evaluate and respond to students’ learning is one of the more challenging elements of teaching,

one that eludes even some veteran teachers.

No noticeable differences were found across different genders or for the small number of candidates whose primary

language was not English. Comparatively small differences were obtained across ethnic subgroups, given the standard

deviation of mean scores. In other words, there was greater variation in scores within subgroups than there was variation

across subgroups. Because many of these comparisons are based on unequal sample sizes, and some subgroup sample

sizes are quite small, differences should be interpreted cautiously.

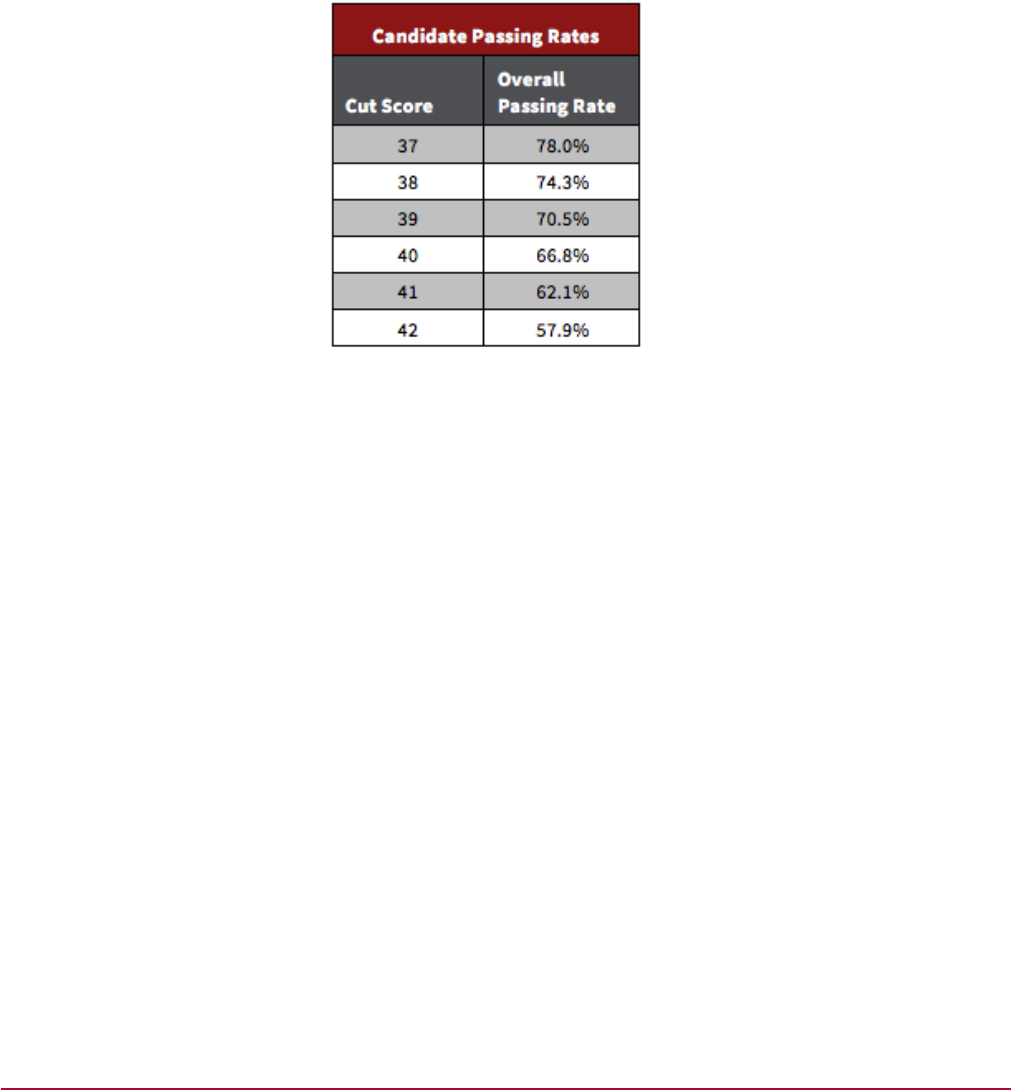

Standard Setting: Standard setting was conducted in August 2013 through a data-informed, standards-based

consensus process featuring three separate panels of experts. These included teachers and teacher educators with

relevant subject matter expertise, education organization representatives, and state education policymakers from

across the nation. The three panels independently agreed on a score target of no more than 42, to which developers

applied lower bounds based on the standard error of measurement in order to minimize erroneous decisions. Using a

half standard error of measurement results in a cut-score band ranging from a total score of 39 to 42. States set their own

passing scores based on state standard setting that takes into account state-specific data, measurement data, and policy

considerations. As discussed by the standard-setting committee members, states may consider setting their initial cut

score lower than the committee-suggested cut score to give programs time as they learn to deliver and support edTPA

activities and to support candidates’ preparation of their submissions. As warranted, the cut score can be raised over

time.

Based on the 2013 field test data, at a cut score of 42, 58% of candidates would have “passed” edTPA on their first

attempt. Field test data, of course, do not fully reflect operational results for several reasons: 1) Candidates are a

voluntary sample of prospective teachers who take the assessment in a non-consequential context; 2) Many programs

using the assessment for the first time have little experience designing support systems, coursework, or clinical

experiences to enable students to demonstrate the targeted skills. Under actual testing circumstances with programs

that have become experienced in supporting the assessment, pass rates would be expected to increase.

In the operational phase, all scores on or near the cut score are read and scored by two independent certified scorers.

If there are differences in the scores, a third reader independently scores the submission to adjudicate discrepancies in

scores. Candidates who do not pass on their initial attempt may retake one edTPA task or the entire edTPA assessment,

with advice and counsel of faculty from their teacher preparation program.

Conclusion: There is growing agreement that individuals entering teaching must be prepared to meet the academic

needs of all students. With new, higher standards and greater diversity among students, teachers must develop more

sophisticated teaching skills and be able to use them from the first day they enter the classroom. Preparation programs

must also learn how to cultivate this kind of practice, with useful tools to develop and assess new teachers’ abilities to

put their growing knowledge into action. The edTPA may be one component of a system that helps to accomplish these

goals.

Introduction

One of the most important challenges facing public education is to ensure that the nation’s teacher workforce is

prepared to meet the academic needs of all students. From the first day they enter the classroom, teachers must be

ready to teach with the necessary skills to support students’ learning. Moreover, while preparing their candidates to enter

the classroom, teacher preparation programs also need actionable information about their candidates’ performance and

data to support program renewal.

The development of edTPA follows the seminal work of the National Board for Professional Teaching Standards®

(NBPTS®) in its efforts to support professional practice. The hallmark of professionalizing teaching rests on three

foundational principles:

1. Teachers are morally committed to the health and welfare of all children.

2. Professions share a common body of knowledge and skills.

3. Professional practice is clearly defined, transmitted, and enforced based on common “standards of

practice.”

Like other professions, teacher preparation programs make a compact with the public that allows for professional

governance over the design and management of programs in exchange for holding themselves accountable for preparing

candidates with the knowledge and skills that they need to practice safely and effectively. Teacher preparation programs

are tasked with meeting parallel goals—preparing candidates to meet the learning needs of all students and informing

their own practices as teacher educators. edTPA is designed to be a common measure to assess a candidate’s readiness

to teach, and to assure the public that preparation programs are accountable for candidate performance.

edTPA: Assessing Readiness to Teach

edTPA is the first nationally available, educator-designed performance assessment for new teachers entering the

profession. It provides a measure of teacher candidates’ readiness to teach that informs program completion and

licensure decisions while supporting candidate learning and preparation program growth and renewal. edTPA is

designed to:

• Strengthen beginning teaching and teacher education

• Inform teacher licensure, in combination with other state and program requirements

• Provide actionable evidence that can guide program improvement

• Strengthen the information base to support accreditation and program evaluation

• Guide the support and induction of new teachers

• Improve P–12 student outcomes

“edTPA taught me to reflect, analyze, and examine where students were at that moment, so I

could understand what I was doing, how students were progressing, and adjust instruction

accordingly. At the end of the process, I submitted my video, work samples, and responses to

prompts that required me to justify and explain my teaching....More than ever, I agree that

we need a classroom-based pre-service assessment that focuses on pedagogy, not just content.

The field needs it and students deserve it.”

Stephanie Wittenbrink, Special Education Learning Support Biology Teacher and Learning

Support English Teacher, Mountlake Terrace High School; former Washington Education

Association Student Board Member

edTPA provides an opportunity for candidates to demonstrate their ability to effectively teach subject matter to all

students. It consists of 27 separate subject-specific assessments, each one representing a major teaching licensure/

credential field. The assessment systematically examines evidence derived from candidates’ clinical practice in their

student teaching or internship placement. These include lesson plans, instructional materials, student assignments and

assessments, feedback on student work, and unedited video recordings of instruction.

edTPA: Developed by the Profession for the Profession

Modeled after the National Board for Professional Teaching Standards’ path-breaking assessments of accomplished

veteran teachers, edTPA was created with input from teachers and teacher educators across the country in a process

led by Stanford University’s Center for Assessment, Learning and Equity and supported by the American Association of

Colleges for Teacher Education.

1

edTPA Developers and Partners

More than 1,000 educators from 29 states and the District of Columbia, and more than 430 institutions of higher

education participated in the development of edTPA.

An extensive, multi-year development process involved teachers and teacher educators in the assessment’s design,

review, piloting, and field tests. edTPA design and review team members included a wide range of university faculty, P–12

teachers, and representatives of national subject-matter organizations, such as the Specialized Professional Associations

associated with the National Council for Accreditation of Teacher Education. Involvement with edTPA is supported by the

Teacher Performance Assessment Consortium, a professional learning community of preparation programs, faculty and

state agency leaders.

With these educators, SCALE has developed the edTPA assessments and is responsible for scorer training materials,

including benchmarks and training curriculum. AACTE and SCALE collaborate with the field to develop the resources

that support educative implementation. Evaluation Systems of Pearson provides the infrastructure needed to deliver

assessment materials, including the electronic portfolio platform for candidate registration and submission, digital

distribution of submissions to scorers, scoring services, and score reporting.

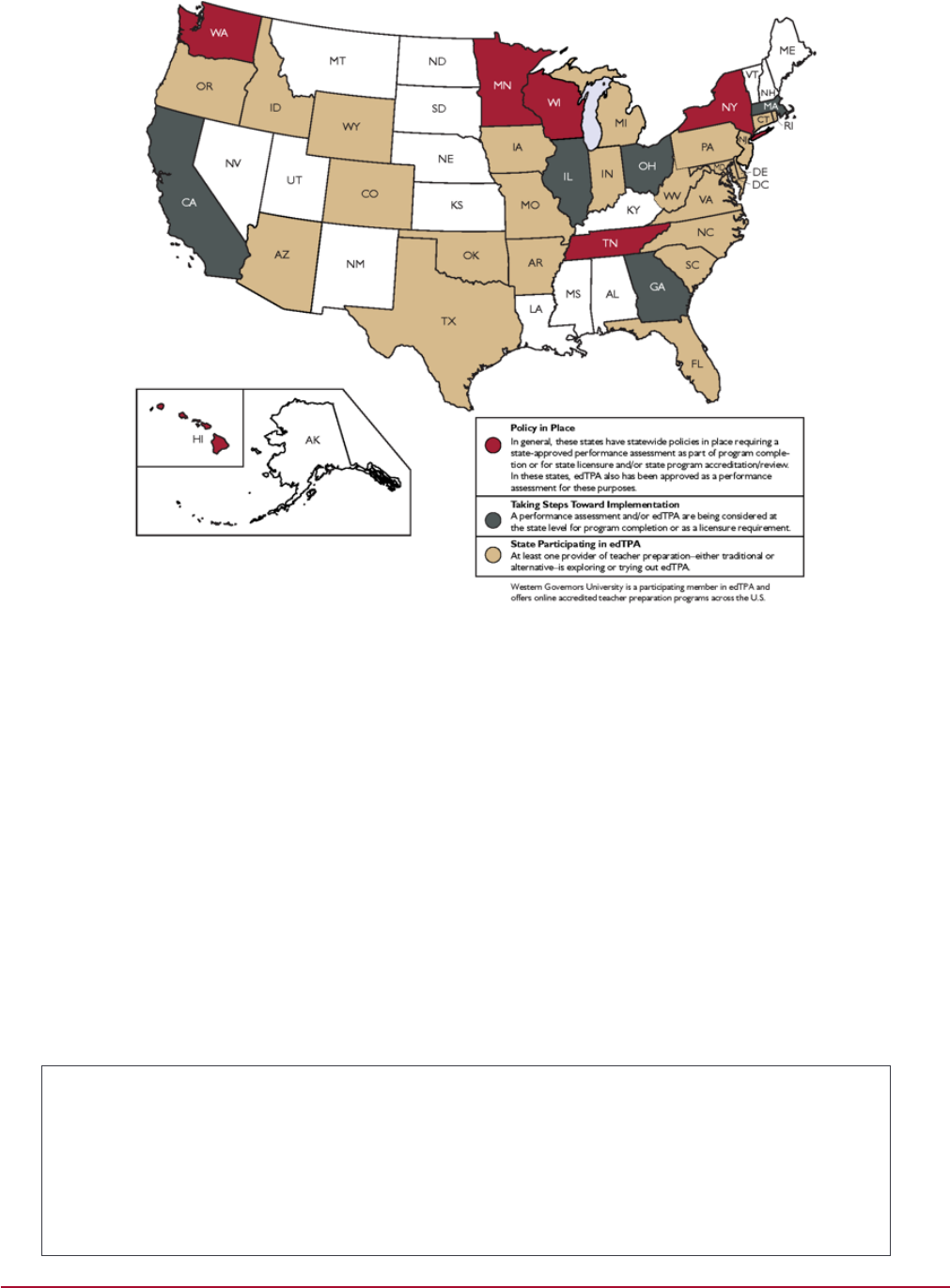

States Participating in edTPA

The map above shows the states currently participating in edTPA, signaling those with implementation policy in place

and those expecting to have an edTPA implementation policy in place soon. Visit edTPA online at edtpa.aacte.org for

up-to-date information.

edTPA as Support and Assessment

Unlike other licensure assessments, edTPA aims to support candidate and program learning, as well as to measure

outcomes. edTPA provides states and preparation programs with a rich array of resources that support its educative

implementation, including handbooks to guide implementation, extensive feedback to candidates and programs, and

scoring opportunities for school- and university-based faculty.

edTPA is designed to be more than a summative measure of teaching effectiveness. As an educative assessment of

teacher performance, candidate preparation for the assessment is embedded in multiple learning experiences across the

teacher preparation program, and the assessment provides actionable data designed to support candidate development

and program renewal. Formative learning experiences facilitated by the edTPA resources provide opportunities for

candidates to develop their practice within coursework and student teaching experiences.

“We learned that edTPA mirrors or enhances teaching tasks and makes more evident the thinking

used by practicing educators as they make their decisions. The cooperating teachers have commented

favorably on the clear and systematic nature of the assessment of important teaching skills.”

Cheryl Hanley-Maxwell, Ph.D., Associate Dean of the School of Education, University of Wisconsin,

Madison

Support for Teacher Preparation Programs

For teacher preparation programs, their faculty, and their teacher candidates, the authentic design and

curriculum-embedded approach of edTPA makes it an “assessment of, for, and as learning.” Key benefits of edTPA for teacher

preparation programs include:

• Ongoing professional development and resources to support implementation through an online

professional learning community and resource library, and through face-to-face training opportunities

• Measurement of candidates’ abilities to plan instruction to meet learning standards and to differentiate

instruction for diverse learners, including English language learners and special education students

• Formative use of rubrics in course assignments, locally developed curriculum-embedded assessments, and

clinical practice evaluations

• Actionable evidence of candidates’ performance over time to address the real-world needs and challenges

that new teachers face every day

• Opportunities for continuous program renewal based on meaningful performance data

• Support for state and national accreditation through easily accessible individual data that can be

aggregated to analyze performance within and across subject areas and program options.

In addition, edTPA supports teacher candidates in their process of learning to teach. The assessment tasks and rubrics

outline clearly the professional standards teachers are striving for. Through formative experiences embedded in program

preparation, candidates may receive real-time feedback from their faculty around standards of professional practice as

they build their understanding of planning, teaching, and student learning, and learn how to demonstrate their growing

abilities.

Support materials to facilitate this learning are available online at no cost for candidates and program faculty. Resources

informing candidates about how to prepare their portfolios include:

• edTPA electronic portfolio platform tips, guides, and videos, such as “Using the edTPA ePortfolio System,”

“Frequently Asked Questions,” and “Preparing Your Assessment with the edTPA ePortfolio System.”

• Assessment preparation guides and video tutorials, such as “Making Good Choices,” “Selecting a Learning

Segment,” “Requesting and Receiving Feedback,” and “Submitting Your Assessment.”

• Guidelines and tips for video submissions, such as “Guidelines for Video Confidentiality,” “Video Recording

Guidelines and Suggestions,” and “Tips for Uploading Video Files.”

“edTPA brings us an opportunity to talk about planning: what kind of skills we want our

candidates to have in terms of planning, assessment and instruction. We can fine tune those

things across our programs and give programs the opportunity to hear what is working well,

areas of weakness and opportunities to share. That’s been very positive.”

Diana Lys, Ed.D., Director of Assessment and Accreditation, East Carolina University

“The edTPA required us to reflect, in writing, every single day, but also over longer spans of

time. I’m talking about critical, objective reflection based on evidence. We had to ask and

answer: “What worked today? What didn’t? For whom? Why? What should I do about it? And

most importantly, how do I know?” We had to demonstrate our ability to make mid-course

corrections in response to student learning. For me, it was largely edTPA that provided me the

rapid reorientation toward student thinking and learning that I needed as a student teacher

and then as a novice in my own classroom.”

Nicole Barrick Renner, Teacher, Metro Nashville Public Schools, Former Teacher Candidate,

Vanderbilt University

Support for State Education Agencies

edTPA provides state agencies, including state standards boards and commissions, with a new performance-based

assessment for teacher licensure. States can use the performance results of edTPA as a key indicator for granting an

initial license to teacher candidates regardless of the path they take to become teachers. Key benefits of edTPA for states

include:

• Opportunity for the state, its school districts, and teacher preparation programs to share a common

framework and language for defining and measuring teaching performance

• Ability to evaluate the impact of teacher preparation on candidate knowledge and skills that lead to greater

student learning

• Ability to use a nationally available common measure that is valid and reliable to evaluate pre-service

teachers’ readiness to teach

• Use of a subject-specific, performance-based assessment system aligned to general and discipline-specific

teaching standards that were designed and developed by educators for educators nationwide

• Access to an array of educative implementation resources and protocols that support preparation program

renewal

Assessment Design and Architecture

Design Rationale

Drawing upon the experience gained from over 25 years of developing performance-based assessments of teaching,

including the National Board for Professional Teaching Standards, the Interstate Teacher Assessment and Support

Consortium (InTASC) Standards portfolio, and the Performance Assessment for California Teachers, edTPA was designed

with a focus on subject-specific student learning and principles from research and theory. As a performance-based

assessment system for learning, edTPA is designed to engage candidates in demonstrating their understanding of

teaching and student learning in authentic ways.

Authentic Tasks

State policymakers and teacher preparation programs understand that the future of the public education system resides

in its teachers and leaders. edTPA is designed around job-related practices that teachers employ throughout their

teaching career. The integrated task structure of edTPA draws on evidence of a candidate’s ability to teach his/her subject

matter by documenting a series of lessons from a unit of instruction taught to one group of students.

edTPA’s collection of authentic job-related materials is used to evaluate teaching practice and student learning. In

addition, edTPA systematically documents teachers’ thinking about their practices through analytic commentaries that

explain their teaching context and knowledge about diverse students and justify instructional practices that meet their

students’ varied strengths and needs. In short, edTPA is designed to demonstrate readiness to teach.

The focus of edTPA is on the systematic

collection of authentic teaching artifacts and

commentaries that demonstrate a candidate’s

readiness to teach.

“Those components of teaching—understanding context, developing a plan for learning,

engaging learners, assessing and analyzing artifacts of teaching, plotting next steps

accordingly—encompass the large measure of what practitioners need to know and be able to do

in order to earn their way to a first day of a first year of a teaching career.”

Desiree H. Pointer Mace, Ph.D., Associate Professor and Associate Dean, Alverno College School

of Education, Milwaukee

Alignment with Standards

edTPA is aligned with the Interstate Teacher Assessment and Support Consortium standards, and various professional

standards, including Common Core State Standards and national subject matter organizations’ content and pedagogical

standards. edTPA also shares points of alignment with the Council for the Accreditation of Educator Preparation standards.

Subject-Specific Assessments

Unlike generic evaluations of teaching, edTPA is not a “one size fits all” assessment system. It focuses on subject-matter

learning and pedagogy. In each discipline, candidates are required to support student learning of highly valued

knowledge and skills within that field. For example, in the elementary, middle childhood, and secondary mathematics

assessments of edTPA, candidates must design and teach a learning segment with a central focus that supports students’

development of conceptual understanding of a standards-based topic, procedural fluency, and problem solving/

reasoning skills. In addition, candidates must demonstrate subject-specific, grade-level appropriate pedagogy in

mathematics.

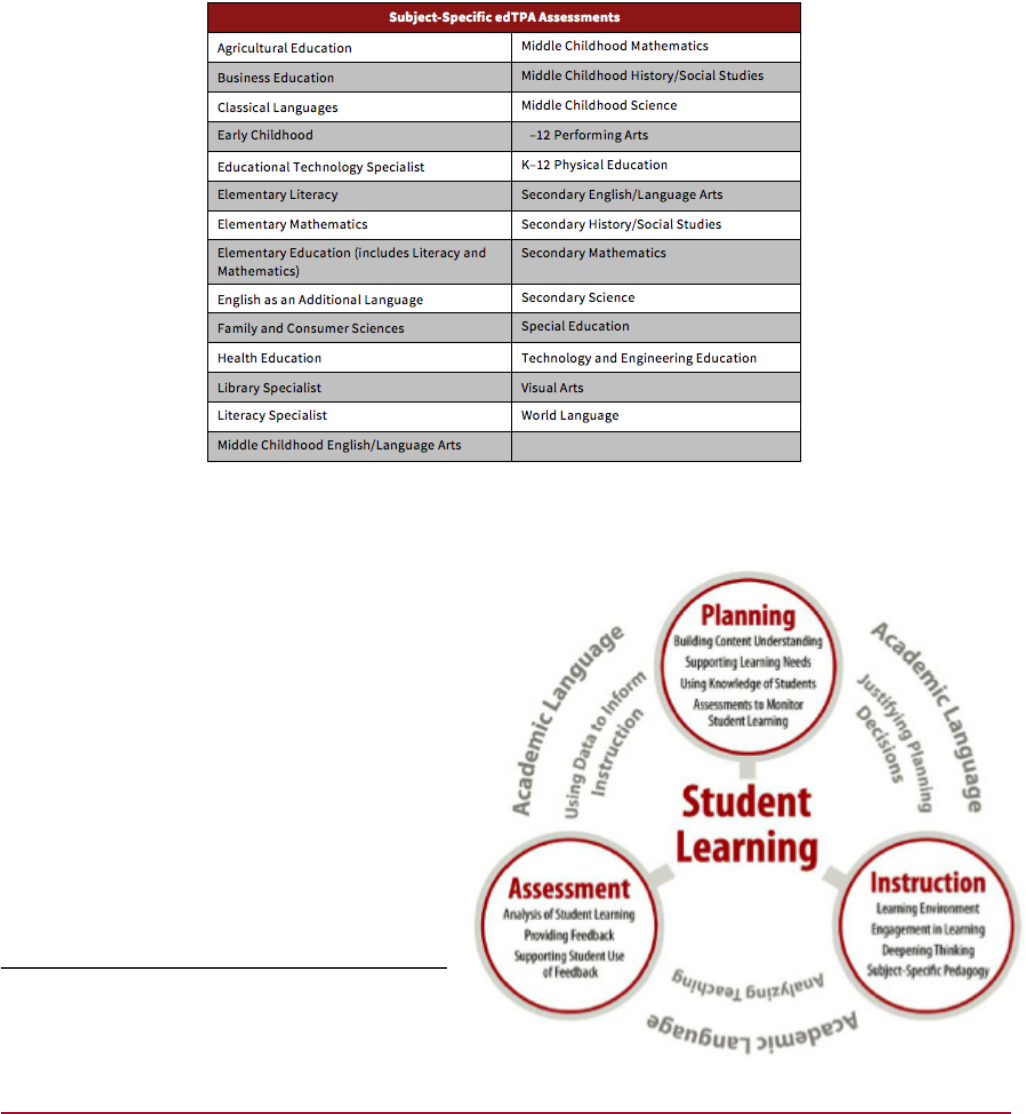

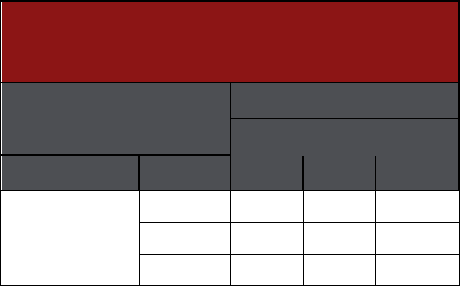

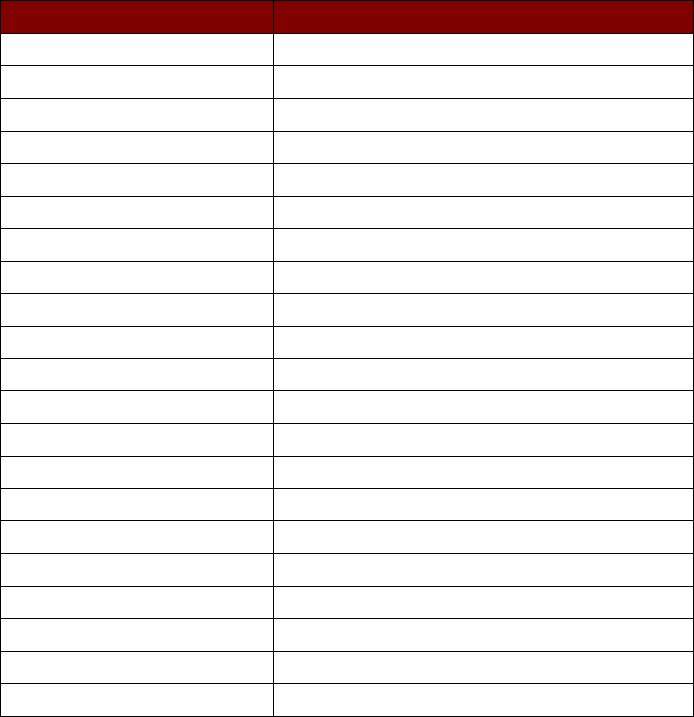

The following table lists the subject-specific assessments available for edTPA.

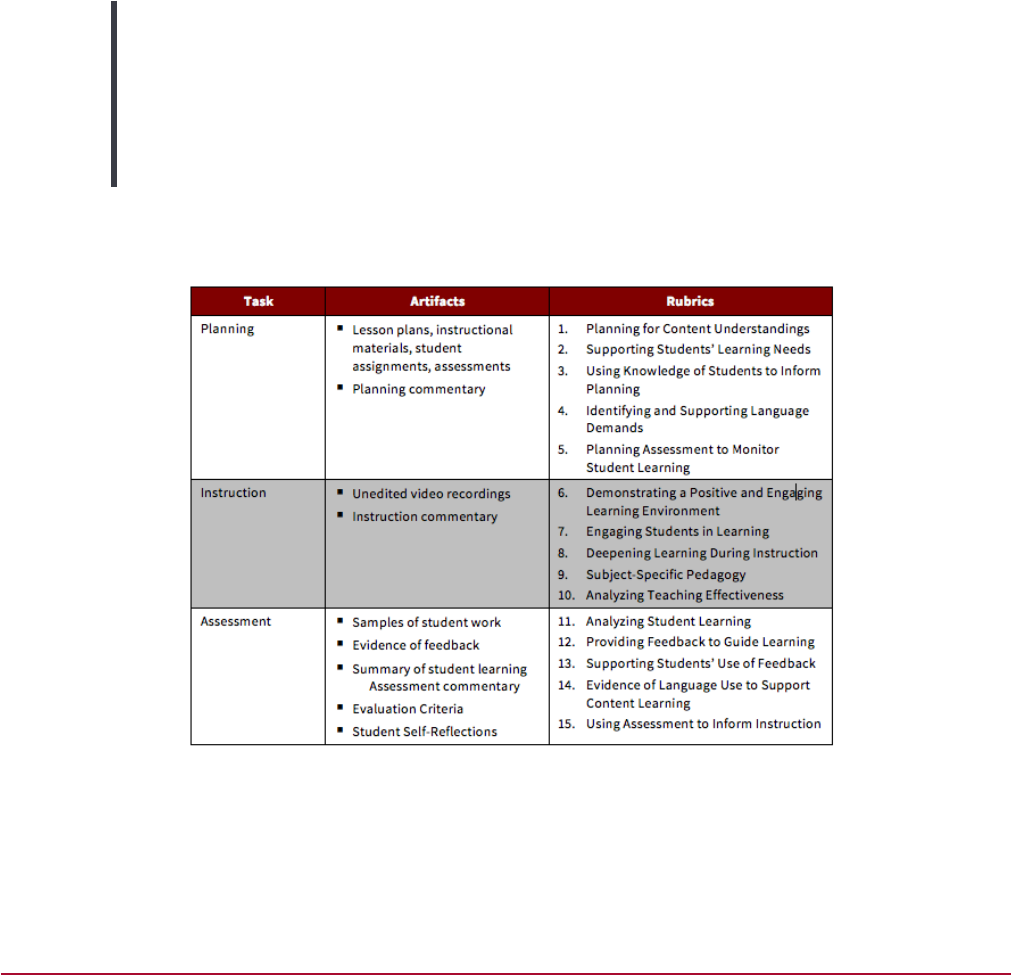

Common Architecture and Shared Pedagogy

While edTPA assessments are subject-specific and

capture pedagogical strategies that are focused on

specific disciplinary goals, they share a common

architecture across all 27 fields, which supports the

evaluation of a common set of teaching principles

and high-leverage teaching behaviors.

The edTPA assessment system contains an integrated

cycle of planning, instruction, and assessment

documenting and analyzing the candidate’s subject-

specific pedagogical practices. These tasks represent

a cycle of effective teaching that a teacher repeats

many times during an academic year. It is what real

teachers do on the job.

Common Architecture. edTPA contains an integrated cycle

of planning, instruction, and assessment documenting

and analyzing the candidate’s subject-specific pedagogical

practices, representing a cycle of effective teaching.


To complete edTPA, all candidates submit artifacts and analytic commentaries as evidence of how they planned and

implemented a segment of instruction to deepen student learning of a subject-specific objective.

• Artifacts represent authentic work completed by the teacher candidate and his/her students (e.g., lesson

plans, copies of instructional and assessment materials, unedited video recording(s) of the candidate’s

teaching, and student work samples).

• Commentaries require candidates to explain the artifacts, justify the rationale behind the choice of

artifact or instructional decision, and analyze what they have learned about students’ learning and the

effectiveness of their teaching practice.

The collection of evidence is organized around three key tasks that represent a cycle of effective teaching: A planning task

documents intended teaching, an instruction task documents enacted teaching, and an assessment task documents the

impact of teaching on student learning.

• Task 1 Planning: Planning for Instruction and Assessment

• Task 2 Instruction: Instructing and Engaging Students in Learning

• Task 3 Assessment: Assessing Student Learning

The deliberate focus on shared pedagogical

competency and knowledge across subject-specific

assessments reflects the universal “deep structure” of

teaching across content areas.

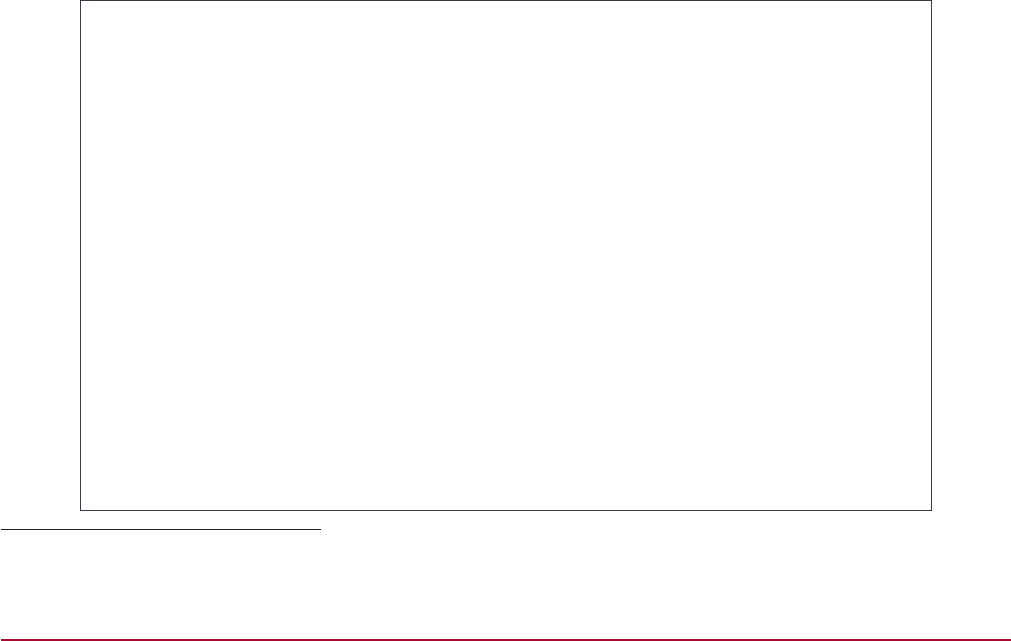

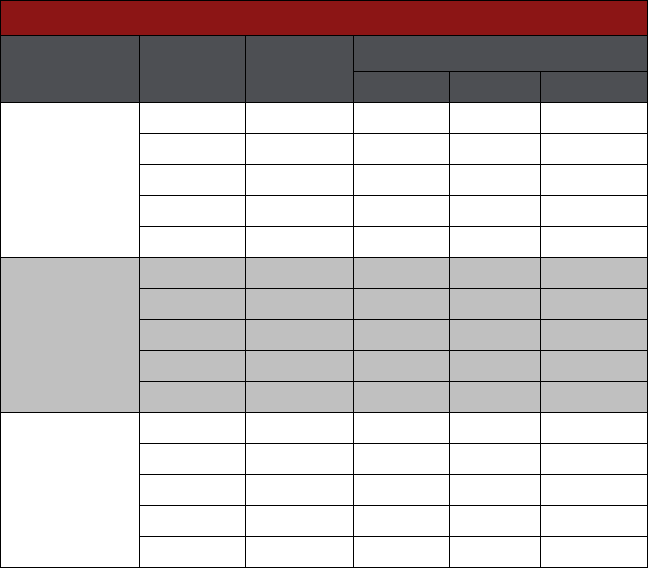

The following table shows the common architecture of edTPA, including how edTPA tasks and embedded components

map to the teaching artifacts required for submission and the scoring rubrics used to evaluate candidate performance.

Each scorer evaluates a candidate’s entire edTPA submission; discrete, isolated tasks are not divided among independent

scorers. This approach allows the scorer to effectively review the entirety of a candidate’s teaching evidence. In addition to

the three edTPA assessment tasks (Planning, Instruction, and Assessment), each candidate submission also is scored on

two key components, Analysis of Teaching and Academic Language, which are embedded in the three assessment tasks.

Scoring Rubrics

edTPA’s subject-specific approach is reflected in its scoring process. To evaluate candidate performance, each

qualified edTPA scorer has pedagogical and subject-matter knowledge specific to one of the 27 subject-specific edTPA

assessments. The edTPA scorer is assigned to score edTPA responses at the grade level and subject area for which he

or she has qualified. Each scorer evaluates a candidate’s entire portfolio by reviewing evidence from across the three

assessment tasks.

Each task is evaluated with five separate rubrics, representing distinctive elements of the task, as shown in the table

above. Thus, there are 15 different elements of teaching that are scored.²

A candidate receives a total score on the edTPA

that can range from 15 to 75.
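The arithmetic behind that range can be checked with a short sketch. The function name `total_score_range` is illustrative and not part of any edTPA tool; the sketch assumes every rubric is scored on the same five-level scale described in this section.

```python
from typing import Tuple


def total_score_range(num_rubrics: int, min_level: int = 1, max_level: int = 5) -> Tuple[int, int]:
    """Return the (lowest, highest) possible total when every rubric is
    scored on an integer scale from min_level to max_level."""
    if num_rubrics <= 0:
        raise ValueError("num_rubrics must be positive")
    return num_rubrics * min_level, num_rubrics * max_level


# Three tasks with five rubrics each give the 15 common rubrics.
print(total_score_range(3 * 5))  # (15, 75)
```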

The rubrics used to score performance—which address common outcomes across all fields and are uniquely adapted to

address student learning and pedagogy specific to each individual subject area—include descriptors for five levels that

address a wide range of performance:

• Level 1 represents the low end of the scoring spectrum, representing the knowledge and skills of a

struggling candidate who is not ready to teach.

• Level 2 represents the knowledge and skills of a candidate who is possibly ready to teach.

• Level 3 represents the knowledge and skills of a candidate who is ready to teach.

• Level 4 represents a candidate with a solid foundation of knowledge and skills for a beginning teacher.

• Level 5 represents the advanced skills and abilities of a candidate very well qualified and ready to teach.

The rubric criteria focus on practices long demonstrated by research to be associated with teacher effectiveness: how

teachers plan to support learning goals and student needs, create a positive learning environment, engage students

in ways that deepen their learning, create meaningful tasks and provide feedback on student work, support student

learning of language, and analyze their teaching in relation to students’ learning and make further plans to facilitate their

progress. The criteria emphasize how teachers support the learning of all students by understanding their students’ prior

knowledge, experiences, and cultural contexts, and by teaching with an eye toward equity.

I approached edTPA with a critical eye regarding the extent to which the instrument supports

or hinders equitable teaching practices. When I noticed that teacher candidates’ edTPA

commentaries revealed much about their grasp of equitable teaching practices, I was prompted

to work with colleagues to analyze how the edTPA process enables teacher candidates to provide

evidence of culturally relevant pedagogy.

Results show that almost 75% of the directions in the edTPA Handbook either directly prompt

or provide an opportunity for teacher candidates to provide evidence of culturally relevant

teaching practices. Because these opportunities are so pervasive, they provide an authentic

integration of culturally relevant practice throughout the process of teaching, rather than

treating it as an add-on or afterthought. Additionally, 8 of 15 edTPA scoring rubrics do not

allow a candidate to score above a 1 if he or she does not demonstrate some level of culturally

relevant pedagogy.

Source: Hyler, M.E., Yee, L.S., Barnes, S.R., & Carey, R.L. (forthcoming). Teacher Performance

Assessment and Culturally Relevant Pedagogy.

² Note that edTPA developed for use in Washington state shares the common architecture and 15 common rubrics and includes an

additional component on “Student Voice” embedded in the three tasks and scored with three additional rubrics.

Development

Professional Involvement and Review

A hallmark of edTPA is the principle that educators are crucial to the development process. The array of teaching

perspectives, backgrounds, and experience among the many educators who contributed to edTPA development

broadened the scope and sensitivity of the assessment, reflecting the variety of classroom settings and educational

contexts a teacher candidate may face.

• 12,000+ teacher candidates from 430 campuses in 29 states

• 100+ content validation reviewers

• 150+ benchmarkers and scoring trainers

• 120+ subject-specific design/review members

• 10 bias review members

• 650+ scorers

• 5,000+ online community members

Input from educators was structured throughout the extensive development process, including design teams composed

of educators with expertise in specific subject areas who reviewed handbooks and rubrics at multiple points. Through

their careful review and feedback, in conjunction with the results of two years of field testing edTPA nationwide, the

design of edTPA was adjusted accordingly. Input from the field was used to achieve the following:

• Clarify the conceptual focus of the assessment and reduce redundancy across prompts and artifacts.

• Modify commentary prompts and rubrics to ensure

-- attention to the wide range of student learning needs, including those of students with Individualized

Education Programs, English language learners, students with gaps in academic knowledge,

and struggling students as well as those who are advanced;

-- features of content-specific student learning and pedagogy are recognized; and

-- clear, comprehensible language is utilized.

• Refine the approach to assessing the development of academic language, including revising rubrics to

examine how the candidate identifies language demands, scaffolds student language use, and provides

evidence that students are using academic language to support deep content learning.

• Provide subject-specific examples of academic language constructs.

• Restructure handbooks for ease of use to help candidates consider what to think about, what to do, what to

write, and what to submit, and offer interactive features and links to templates and resources.

• Redesign rubrics to better represent the progression of beginning teacher development and a more even

distribution of performance levels.

“In designing the special education-specific aspects of the assessment, the design team used

five factors to guide the development of task components and associated rubrics: skills and

knowledge of common special educator instructional practices, the general education task

components of other handbooks, Council for Exceptional Children professional standards

for special educators, research-based effective practices and emphases, and special education

specific cycle components. These factors helped us create a handbook that is grounded in core

instructional practices that cut across disability categories, learner ages, and variations in

special educator teaching assignments.”

Cheryl Hanley-Maxwell, Ph.D., Associate Dean of the School of Education, University of

Wisconsin, Madison

In addition to the edTPA Design Teams, critical review has been provided by the:

• Bias Review Committee, which included 10 nationally representative educators and teacher educators who

reviewed draft edTPA materials for any potential bias and provided input for revision.

• Technical Advisory Committee, convened by SCALE, consisting of educators, psychometricians, and

researchers who recommended and reviewed research and advised on the technical features of the

assessment.

• National Advisory Board, which provides ongoing advice from teachers, teacher educators, education

organization leaders, and state representatives on edTPA policy and implementation decisions. (For a list of

members, refer to Appendix A of this report.)

Major Milestones

Major development milestones for edTPA include:

• 2009–2010: Initial design and small-scale tryout of assessment prototypes

• 2010–2011: Assessment revision with multi-state pilot test

• 2011–2012: First large-scale field test to inform revision of tasks, rubrics, scoring training, scoring, and reporting

systems

• 2012–2013: Revision followed by second large-scale field test to establish validity and reliability of scores

and conduct standard setting process

• 2013–2014: Operational launch of edTPA

Scoring

Scoring by the Profession for the Profession

Scoring is one of the potentially transformative elements of the edTPA assessment process. Scorers are drawn from

among teacher preparation program faculty, field supervisors, and P–12 educators who work with novice teachers. These

individuals’ engagement in scoring enables them to better support the formative development of prospective teachers

and to consider ongoing improvements within their preparation programs.

Qualifications

Because of the subject-specific nature of edTPA, trainers and scorers must have pedagogical and subject-matter

knowledge, including relevant experience in roles that support teaching and learning in the content area and grade-

level span in which they score. The pool from which trainers, scoring supervisors, and scorers are recruited includes

members of the following groups:

• University faculty and administrators

• Field supervisors

• Cooperating teachers

• Induction mentors / coaches

• School site principals

• National Board Certified Teachers®

• Subject matter organization members (e.g., NCTM, IRA)

• Retired teachers and principals who are current in their

content field

Scorers also must have experiences that make them aware of appropriate expectations for teacher candidates who are

in the early stages of learning to teach. University faculty and supervisors must have recent experience teaching methods

or clinical supervision responsibilities in the subject matter area they will score. P–12 educators must have recent

experience teaching P–12 learners the subject matter area they will score, as well as guiding or mentoring beginning

teachers.

During the field test, about half of the scorers were teacher preparation faculty and the other half were P–12 educators, of

whom approximately 50 percent were National Board Certified Teachers. These proportions are expected to be similar in

the operational phase of edTPA.

Training

Training for scorers comprises both individual online and interactive group sessions, totaling about 20 hours. The

individualized training includes a series of online training modules that orient scorers to the tasks, rubrics, and scoring

system and provides numerous opportunities to identify and evaluate evidence for each rubric. During training, scorers

review and analyze up to five edTPA submissions prior to qualifying to score (one embedded in individual online

modules, one interactive practice session, two or three qualifying submissions).

After completing the individual portion of the training materials, scorers independently score a sample edTPA submission

and then engage in a live (online) interactive group session with a trainer in that content area. The independent scoring

activity gives the scorers the opportunity to practice scoring a subject-specific submission that has been coded by

experienced scorers and trainers and then discuss evidence and score justifications with the trainer and other scorers in

the interactive session.

After completing the interactive training session, scorers score and identify evidence for at least two additional edTPA

submissions, previously coded by experienced scorers (i.e., “qualifying submissions”), within their specific content area

prior to scoring candidate submissions. The qualifying submissions verify the scorer’s understanding of the scoring

criteria and his or her ability to score candidate submissions accurately and consistently.

“The [scorer training] process was very intensive. It began with a lot of online materials and

modules to work through, then webinar conferences followed by practice portfolios followed

by live training. Then there were additional online and live training sessions to become a

supervisor. It’s very rare to see a program developed like this with so much thought.”

Gwynne Rife, Ph.D., Professor of Biology and Education, College of Education, University of

Findlay, Ohio, and Scoring Supervisor for edTPA

ePortfolio Scoring Management System

To score submissions, scorers use the edTPA electronic portfolio scoring management system, which allows scorers

to receive candidate materials, view them, tag evidence and record the scores they assign for each rubric. Scorers are

presented with specific subject-area portfolios to score based on their qualifications. The system efficiently collects the

scores assigned to each submission for results reporting and data analyses.

Scoring Process

The edTPA scorer is assigned to score responses at the grade level and subject area for which he or she has qualified.

The scorer evaluates a candidate’s entire portfolio across the three assessment tasks. The scorer utilizes a secure online

scoring platform to access each candidate’s materials and applies the rubric scores, viewing all evidence from artifacts,

commentaries, and video recording(s) submitted by the candidate.

Because each scorer scores an entire candidate submission (rather than independent scorers each scoring discrete, isolated tasks),

the scorer can effectively review the entirety of a candidate’s teaching evidence and ensure the components are

appropriately interrelated. The scorer evaluates how the candidate plans to support subject-specific student learning,

enacts those plans in ways that develop student learning, and analyzes the impact of that teaching on student learning.

Guided by 15 analytic rubrics that incorporate the criteria used to score each of the three major areas, the scorer assesses

the extent to which—and the areas in which—the candidate is ready to teach.

Adjudication Process

The edTPA scoring process has been designed to be fair to candidates and external stakeholders alike. All edTPA

submissions that are at or near the recommended professional performance standard for passing and failing are scored

by at least two, and sometimes three, scorers. This procedure increases the decision consistency of the final scores

assigned to edTPA candidates. In all such cases the final score is based on at least two scorers who agree on the pass/fail

decision.
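The adjudication rule above can be sketched as a small decision function. This is only an illustration under stated assumptions: the cut score, the width of the "near the standard" band, and the convention that a total at or above the cut counts as passing are all hypothetical, since this section does not specify them. The names `near_standard` and `adjudicate` are invented for the example.

```python
from typing import List, Optional


def near_standard(total: float, cut: float, band: float) -> bool:
    """True when a total score lies within `band` points of the cut score.
    Both `cut` and `band` are hypothetical parameters, not values from the report."""
    return abs(total - cut) <= band


def adjudicate(totals: List[float], cut: float, band: float) -> Optional[bool]:
    """Return True (pass) or False (fail) once a decision can be made,
    or None when another scorer is still needed.

    Assumption: a total at or above `cut` counts as passing.
    """
    if not totals:
        return None
    decisions = [t >= cut for t in totals]
    if len(totals) == 1:
        # A single score stands only when it is clear of the standard.
        return None if near_standard(totals[0], cut, band) else decisions[0]
    passes = sum(decisions)
    fails = len(decisions) - passes
    # The final decision rests on at least two scorers who agree.
    if passes >= 2 and passes > fails:
        return True
    if fails >= 2 and fails > passes:
        return False
    return None


# Illustrative values only: cut score 40, band of 3 points.
print(adjudicate([41], cut=40, band=3))          # None: needs a second scorer
print(adjudicate([41, 38], cut=40, band=3))      # None: scorers disagree, needs a third
print(adjudicate([41, 38, 39], cut=40, band=3))  # False: two scorers agree on fail
```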

Analyses

Validity and reliability are essential elements of any assessment program. Evaluating the validity and reliability of edTPA

as an instrument to measure teacher candidates’ readiness to teach has been a continuous part of its rigorous, multi-year

development process.

The assessments were developed within a technical framework of psychometric practice and principles guided by The

Standards for Educational and Psychological Testing (AERA, APA, & NCME, 1999). The edTPA development process yielded

important evidence to provide the foundation of the assessments’ validity, reliability, and usability for the purposes of

teacher licensure, accreditation of teacher preparation programs, and candidate completion of preparation programs.

This work has proceeded under the guidance of experts in psychometric practices and procedures. In addition,

participating states have subjected the analyses to further review by their own technical advisors. Advisors have held the

process to a high standard.

Descriptive Data

This section reports descriptive statistics about how candidates performed on edTPA in the 2013 field test sample. These

statistics provide a snapshot of performance on the 2013 field test of the edTPA across credential areas.

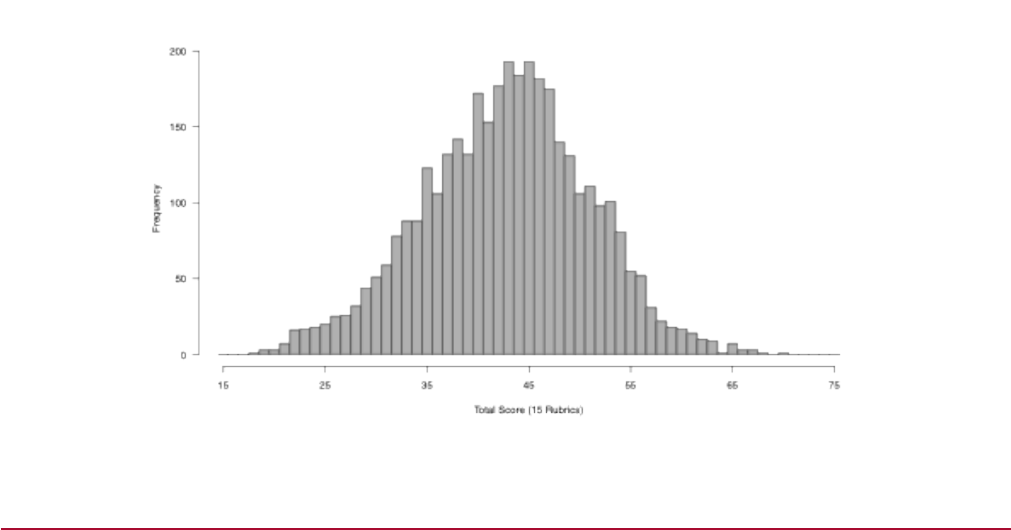

Distribution of Scores

The 2013 edTPA field test provided sample responses and data regarding edTPA’s statistical and qualitative

characteristics.

There were 4,055 submissions evaluated from the 2013 edTPA field test across 23 fields. Each submission was scored on

five separate rubrics within each of the three major edTPA tasks: planning, instruction, and assessment. With five levels

of possible performance for each rubric (and 15 rubrics total for most assessments), the total score could range from 15

to 75.

The following figure shows the distribution of total scores:

Total Score by Number of Submissions. Based on the 2013 edTPA field test, this chart shows

the number of candidate submissions and the total scores assigned. NOTE: Data reflect complete

submissions (i.e., no missing rubric scores). For candidates who received two scores, the average

score across the two was used.

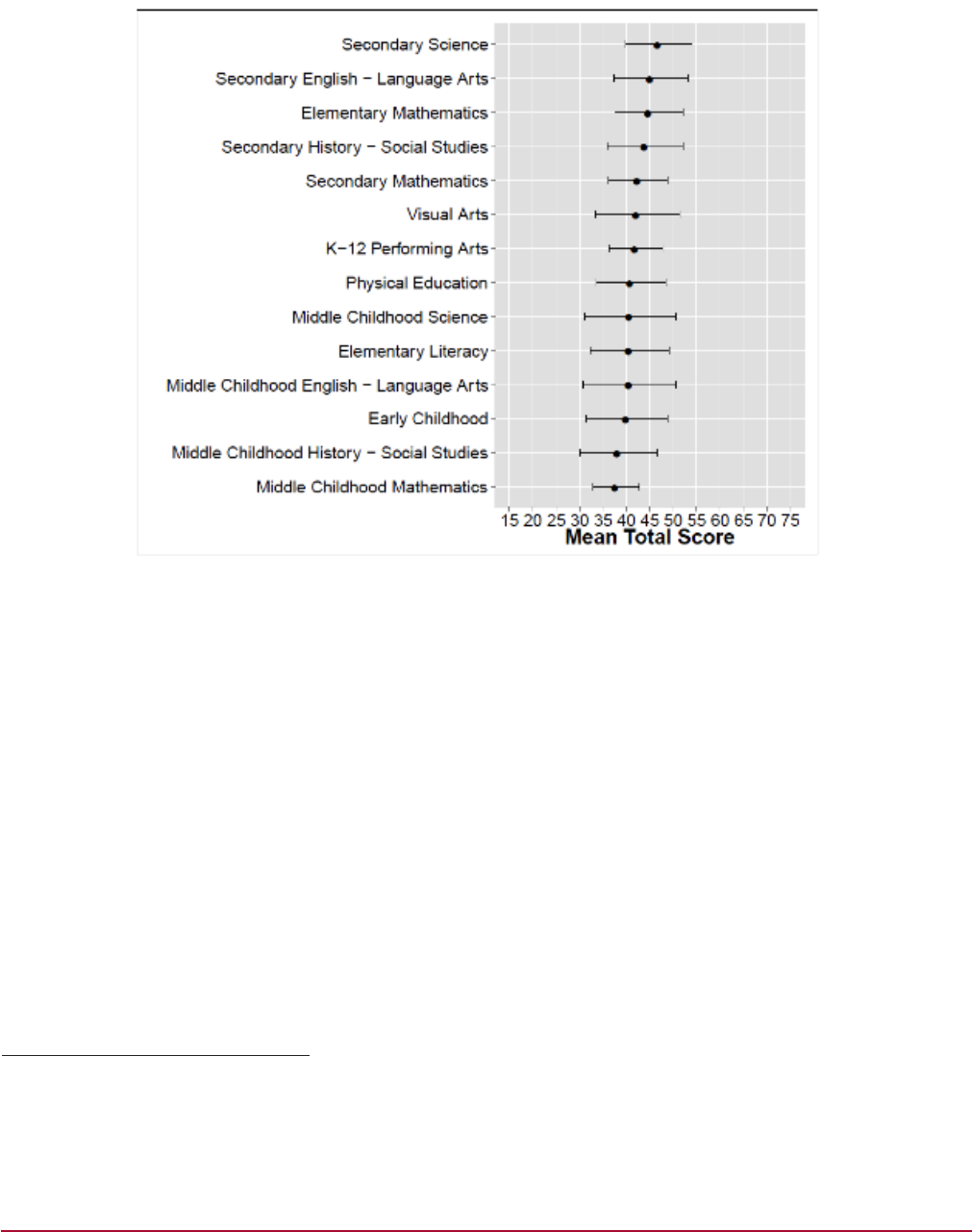

The score means and standard deviations for the 15 possible scores³ are reported for each credential area in Appendix B.

Summary score data for each of the content areas are presented in the following figure. The highest scores were obtained

in secondary science and the lowest in middle childhood mathematics.

Mean Total Scores across Content Areas

Mean Total Scores by Content Area. This figure provides a visual depiction of the total mean scores

by content area for the 2013 edTPA field test. NOTE: Data reflect complete submissions (i.e., no missing

rubric scores). For candidates who received two scores, the average score across the two was used.

³ There are 18 scores in Elementary Education, which includes assessments of teaching in both literacy and mathematics. World

Language and Classical Language have two fewer rubric scores because academic language is the content to be taught and

therefore is not addressed separately by rubrics in these fields. In Washington state, three additional rubrics are added to evaluate

“student voice.”

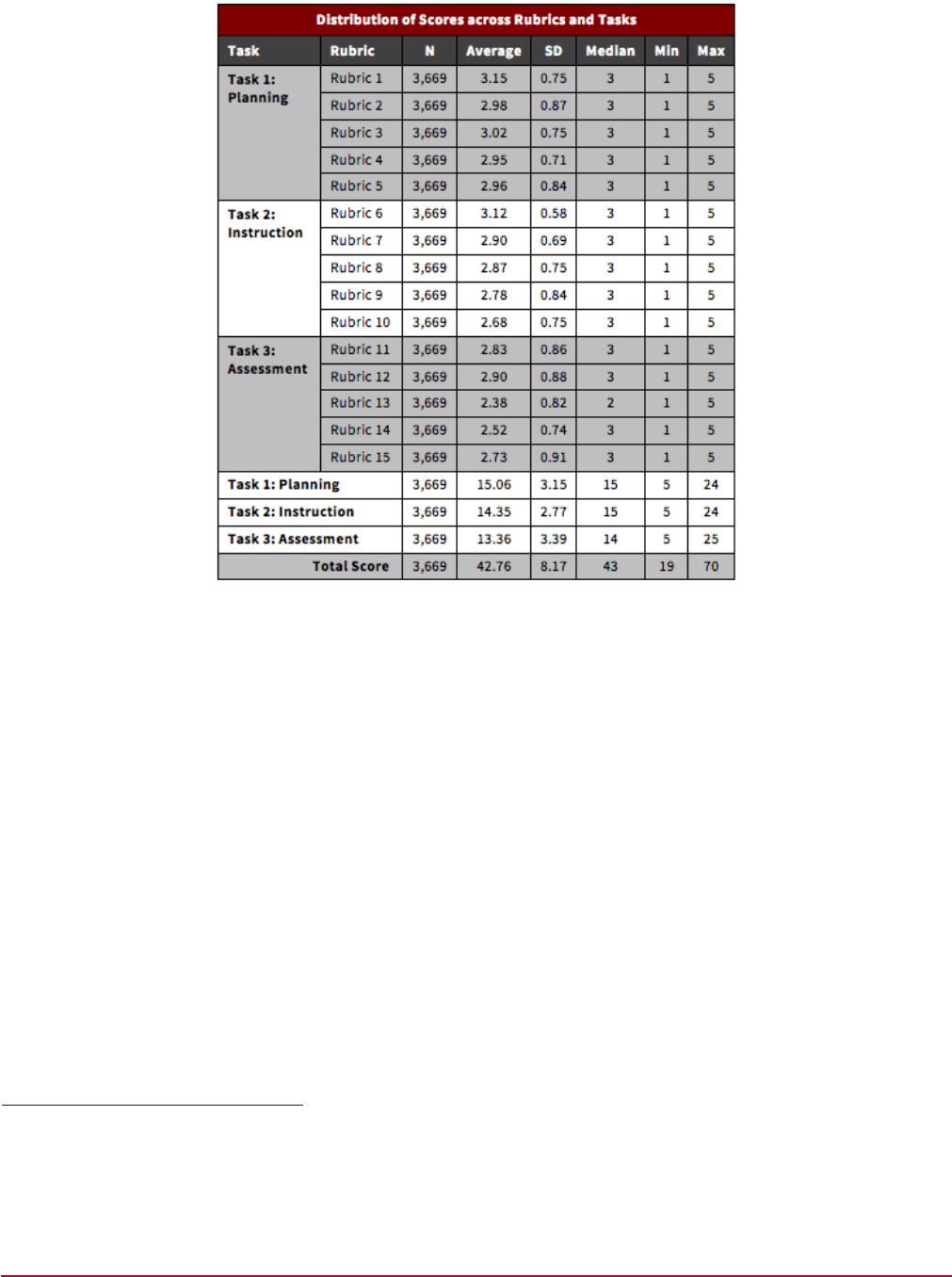

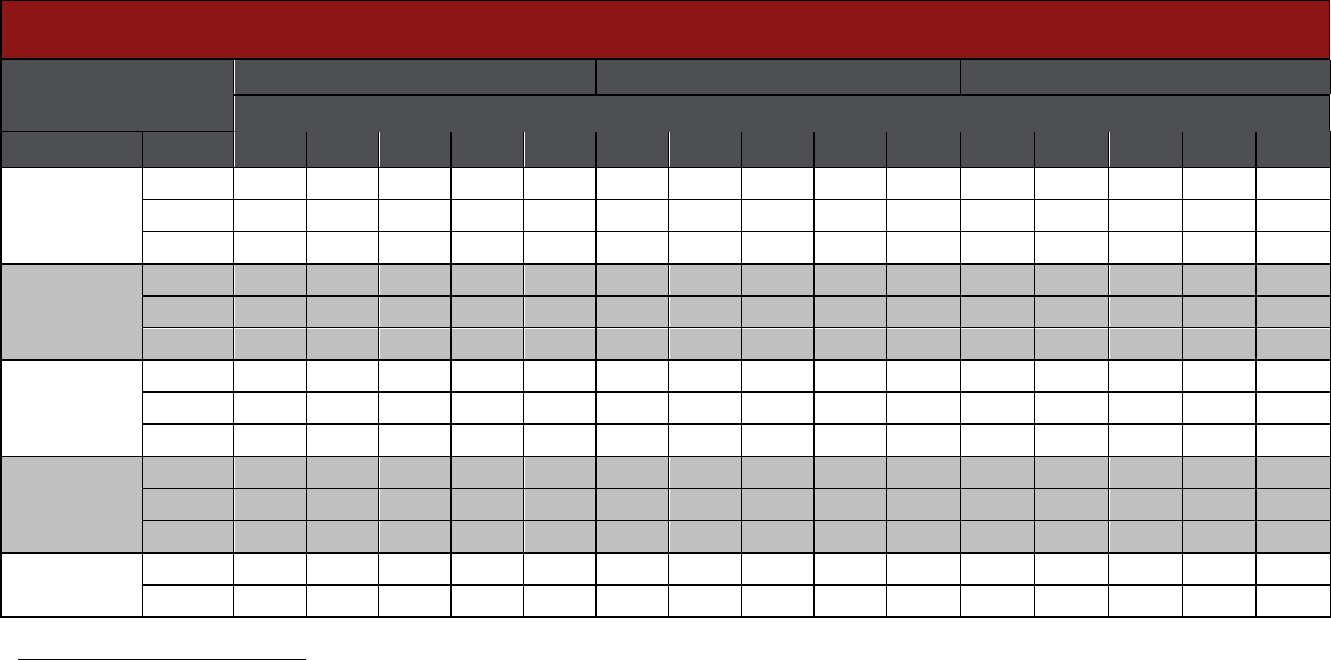

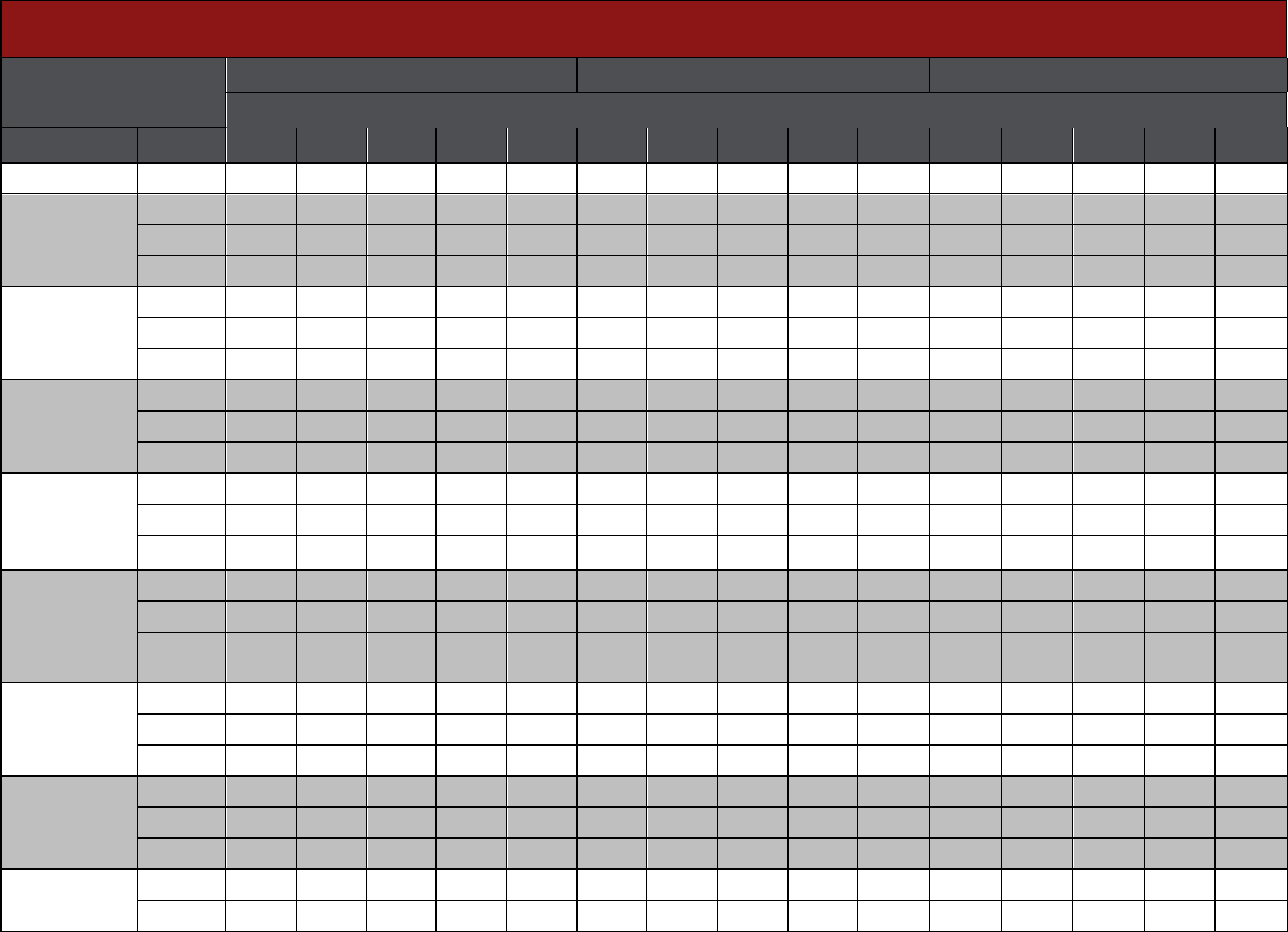

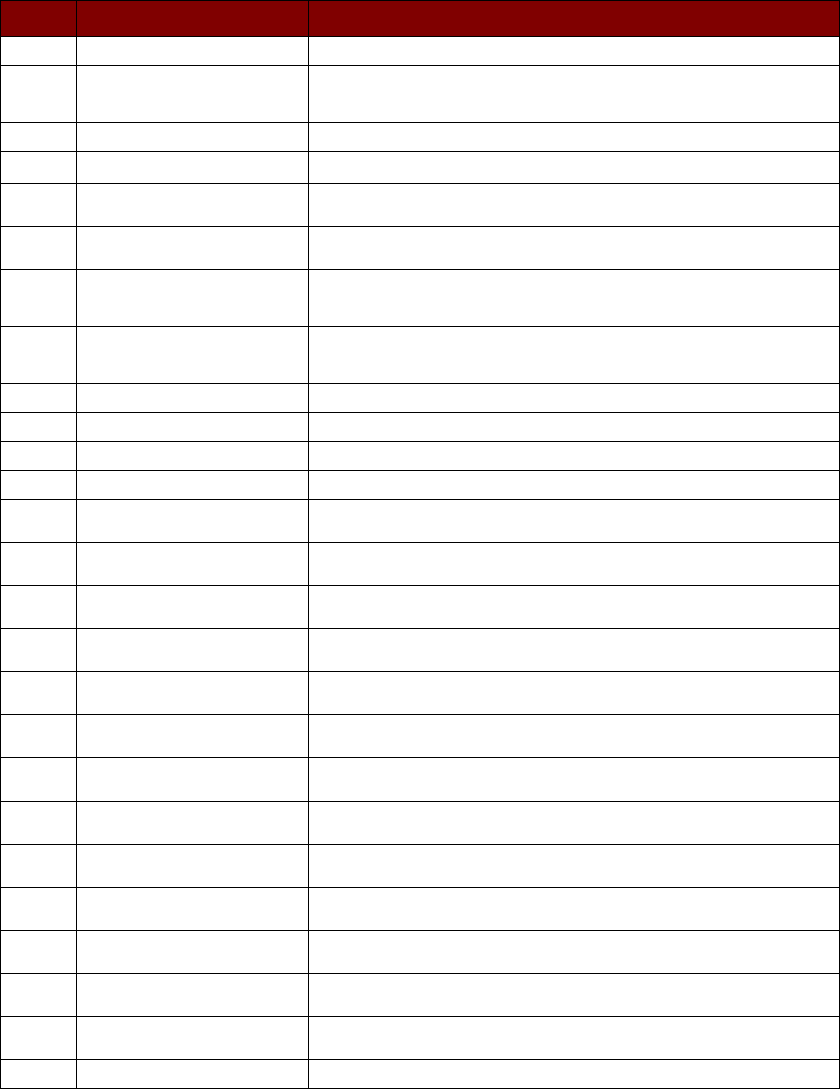

Scores also differed across each of the rubrics. Data for the score distribution from the 2013 edTPA field test rubrics are

presented in the following table:⁴

In general, as might be expected of pre-service teachers, candidates scored highest on the planning task,

followed by the instruction task, and then the assessment task. This is consistent with other studies that have found that

learning to evaluate and respond to students’ learning is one of the more challenging elements of teaching, and one that

even some veteran teachers have not always mastered in the past.

⁴ Data reflect complete submissions (i.e., no missing rubric scores). For candidates who received two scores, the average score across

the two was used. Candidates who completed edTPA in a credential area with fewer than 15 rubrics are excluded from the data, and

only the scores for rubrics 1 through 15 are used for candidates in an edTPA credential area where more than 15 rubrics are used.

Score Distribution across Rubrics and Tasks. This table displays the

overall distribution of scores by rubric for the 2013 edTPA field test.
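The data-preparation rules stated in the note above (complete submissions only, two scores averaged, areas with fewer than 15 rubrics excluded, only rubrics 1 through 15 used) can be sketched as follows. The function name and input layout are hypothetical, and the sketch assumes that "missing" is checked only within rubrics 1 through 15 and that double-scored submissions are averaged rubric by rubric.

```python
from statistics import mean
from typing import List, Optional, Sequence


def analysis_rubric_scores(
    scorings: Sequence[Sequence[Optional[int]]], n_common: int = 15
) -> Optional[List[float]]:
    """Return the per-rubric values used for analysis, or None if the
    submission is excluded.

    `scorings` holds one list of rubric scores per scorer (one or two
    scorers); a missing rubric score is represented as None.
    """
    if not scorings:
        return None
    # Credential areas with fewer than 15 rubrics are excluded.
    if any(len(s) < n_common for s in scorings):
        return None
    # Only rubrics 1 through 15 are used where more rubrics exist.
    trimmed = [list(s[:n_common]) for s in scorings]
    # Incomplete submissions are excluded.
    if any(v is None for s in trimmed for v in s):
        return None
    # With two scorers, average the two scores for each rubric.
    return [mean(column) for column in zip(*trimmed)]


double_scored = analysis_rubric_scores([[3] * 15, [4] * 15])
print(sum(double_scored))  # 52.5
```

Because the total is a sum of rubric scores, averaging rubric by rubric and then summing gives the same total as averaging the two scorers' totals.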

Validity

A valid performance assessment provides an accurate measurement of the important features of a performance that are

related to candidates’ abilities to succeed at that endeavor in a real-world context. Multiple sources of evidence from

the edTPA development process and data analyses collectively provide the foundation to support the validity of edTPA

scores for licensure and accreditation purposes. The following sections summarize validity evidence with respect to the

content of the assessment, its relationship to job requirements, bias and sensitivity, and its psychometric properties.

Content Validity

The authenticity and content relevance of edTPA were a continual focus throughout the development process. It is the key

feature that indicates that teacher candidates demonstrate pedagogical and subject-matter knowledge and skills on the

same tasks for which they will be responsible as licensed educators.

Content validation activities for edTPA consisted of gathering feedback from educators to rate the importance,

alignment, and representativeness of the knowledge and skills required for each rubric, and of the rubric itself, in relation

to national pedagogical and content-specific standards. The content validation activities provide critical evidence that

the content of the assessments is related to knowledge, skills, and abilities necessary for the job of teaching.

As a result of the initial round of content validation activities, all tasks and components were rated above 3 on a 5-point

scale, reflecting the judgments of reviewers that the knowledge and skills represented by tasks have “importance” to

“very great importance,” that they align to InTASC standards “well” to “very well,” and that the rubrics represent relevant

tasks of teaching.

To further support content validity, an additional round of activities was conducted to provide additional confirmation of

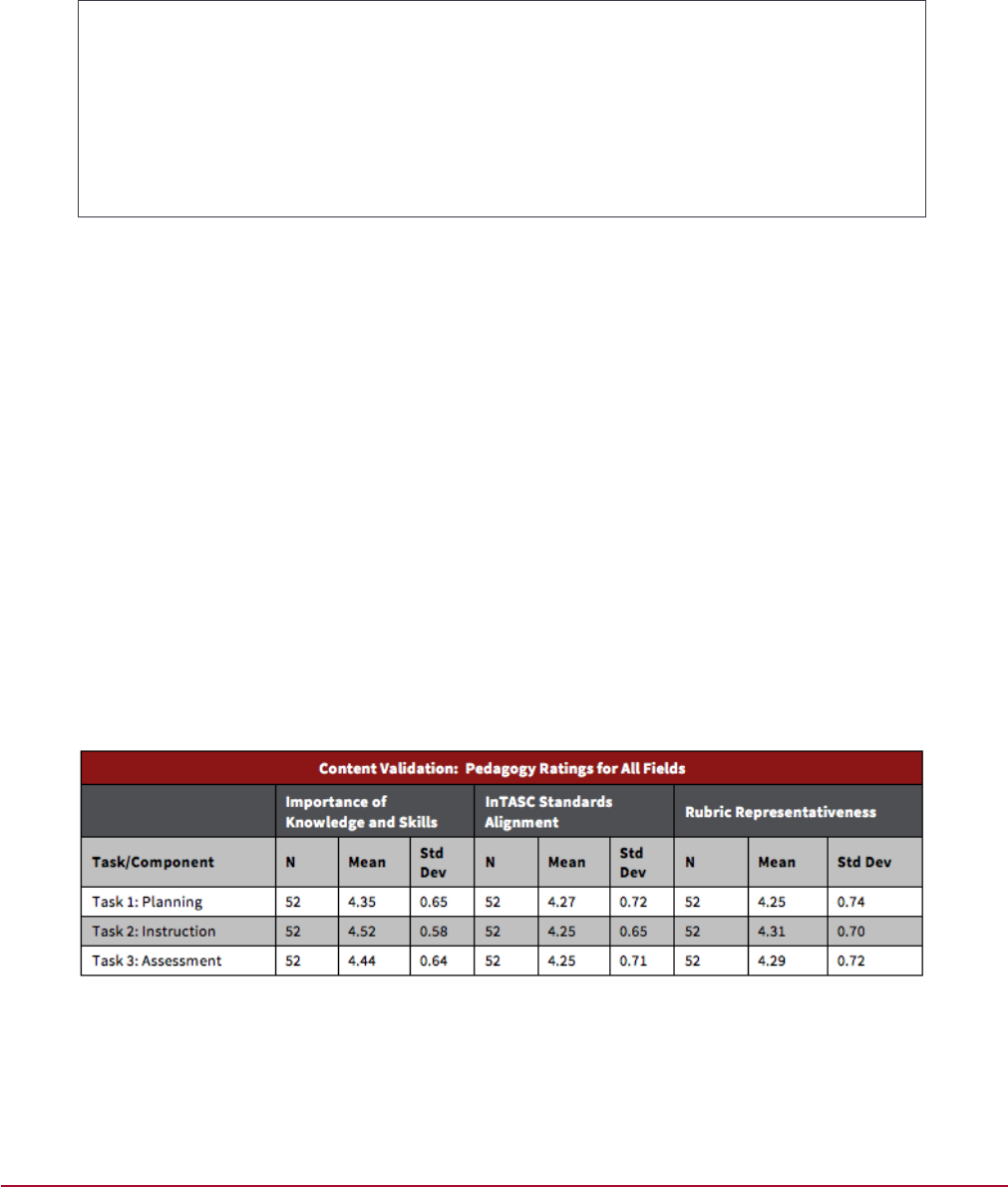

the importance, alignment, and representativeness of the edTPA tasks. The results are shown in the following table:

Content Validity Ratings. The table above displays content validity ratings (on a five-point scale with five

being the most positive rating) given by edTPA content validity committee members. The data indicate a strong

relationship between the assessment’s key tasks and the job of an entry-level teacher.

“If we identify lack of readiness in a candidate and do not recommend her for licensure as a

result, it’s also been the case that she hasn’t done well on the edTPA, and the reverse is true as

well. The assessment, in my view, is valid and predictive of candidates’ capacity to command

the major components of teaching work.”

Desiree H. Pointer Mace, Ph.D., Associate Professor and Associate Dean, Alverno College School

of Education, Milwaukee

Job Analysis Studies

The Job Analysis Studies conducted for edTPA focused on the knowledge, skills, and abilities (KSAs) identified as

necessary for successful teachers by the pool of subject-matter experts who were involved in edTPA development

processes. These experts were practicing classroom teachers or teacher educators in each of the content fields. The list of

KSAs generated by this panel informed the development of the edTPA rubrics.

The link between these KSAs and teachers’ actual work was then confirmed through Job Analysis Studies, which

included the following steps: First, a group of teachers identified a list of 105 tasks and behaviors that are critical to

teaching. These tasks then were taken to a national group of educators who rated several aspects of each identified task,

answering questions such as:

• Task Performed: Is the task performed on the job by a teacher?

• Task Importance: On a scale of one to five, how important is the task to effective teacher performance?

• Time Spent on Task: On a scale of one to five, how much time is spent on the task?

Responses related to each task were analyzed to identify the importance of each task to the job of teaching. From

these ratings, an overall “criticality” value of tasks was calculated (with a minimum possible value of 3.0 and maximum

possible value of 15.0). Of the 105 total behaviors and tasks, 86 of them met or exceeded the criticality threshold, which

meant that: 1) 90% or more of respondents agreed that they perform the task, and 2) each task’s mean criticality rating

was 8.0 or higher. Overall, the criticality value across the tasks had a mean of 10.35, maximum of 12.45, and minimum

of 8.38. A panel of educators confirmed that the 15 rubrics were strongly related to the critical tasks and behaviors.

Through this process, the 15 core edTPA rubrics were confirmed as representing knowledge, skills, and abilities that are

judged to be important or critically important to perform the job of a teacher.
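The two-part criticality threshold described above can be expressed as a simple filter. The sketch below takes each task's share of respondents who perform it and its mean criticality value as given inputs; how the criticality value itself is computed from the individual ratings is not detailed in this section, so that step is left out. The class name, field names, and sample tasks are invented for illustration and are not field-test data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskRating:
    name: str
    share_performing: float  # fraction of respondents who perform the task (0 to 1)
    mean_criticality: float  # mean criticality value on the 3.0 to 15.0 scale


def meets_threshold(
    task: TaskRating, min_share: float = 0.90, min_criticality: float = 8.0
) -> bool:
    """Apply both conditions: at least 90% of respondents perform the task
    and its mean criticality rating is 8.0 or higher."""
    return task.share_performing >= min_share and task.mean_criticality >= min_criticality


# Made-up example tasks, for illustration only.
tasks: List[TaskRating] = [
    TaskRating("plans lessons around learning goals", 0.97, 11.2),
    TaskRating("hypothetical rarely performed task", 0.72, 9.1),
    TaskRating("hypothetical low-criticality task", 0.93, 7.4),
]
critical = [t.name for t in tasks if meets_threshold(t)]
print(critical)  # ['plans lessons around learning goals']
```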

Bias and Sensitivity Review

State agencies and teacher preparation programs adopting edTPA benefit from an assessment system that has been

carefully reviewed for fairness and freedom from potential bias. This review was achieved through the structured

examination of handbook prompts, rubrics, and directions by a diverse and trained pool of professional teachers and

teacher educators from across the nation who provided feedback on the structure of prompts, phrasing of questions,

language of rubrics, and formatting of handbooks to ensure comprehensibility and equitable access and evaluation for

all candidates completing edTPA.

“edTPA is assessing candidates’ readiness for classroom teaching. Additionally, edTPA

assesses the extent to which candidates have developed sensibilities for differences among

students and the ability to accommodate differences in students’ needs based on prior academic

performance and cultural, experiential, and linguistic differences.”

Etta R. Hollins, Ph.D., Professor and Kauffman Endowed Chair for Urban Teacher Education,

University of Missouri, Kansas City

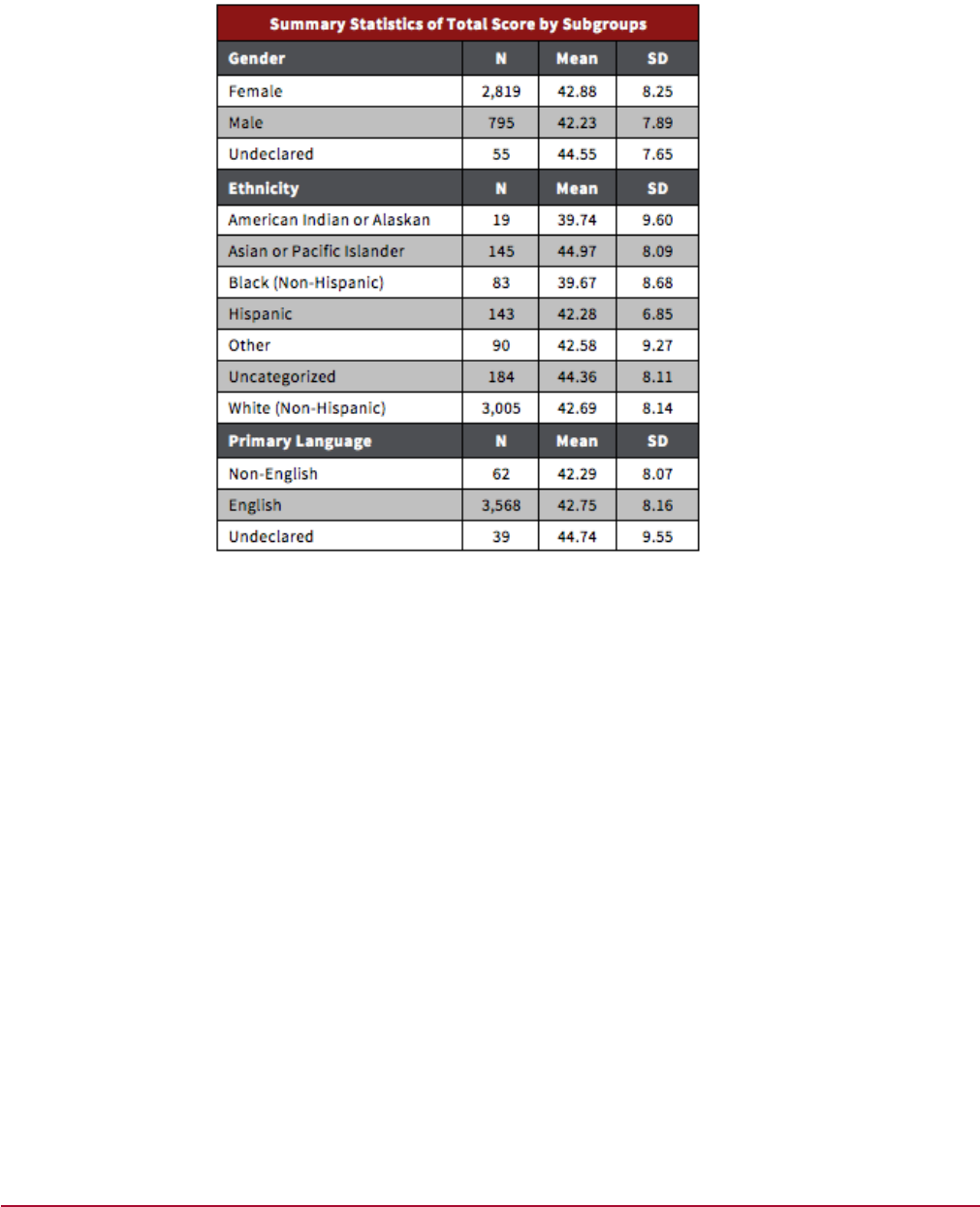

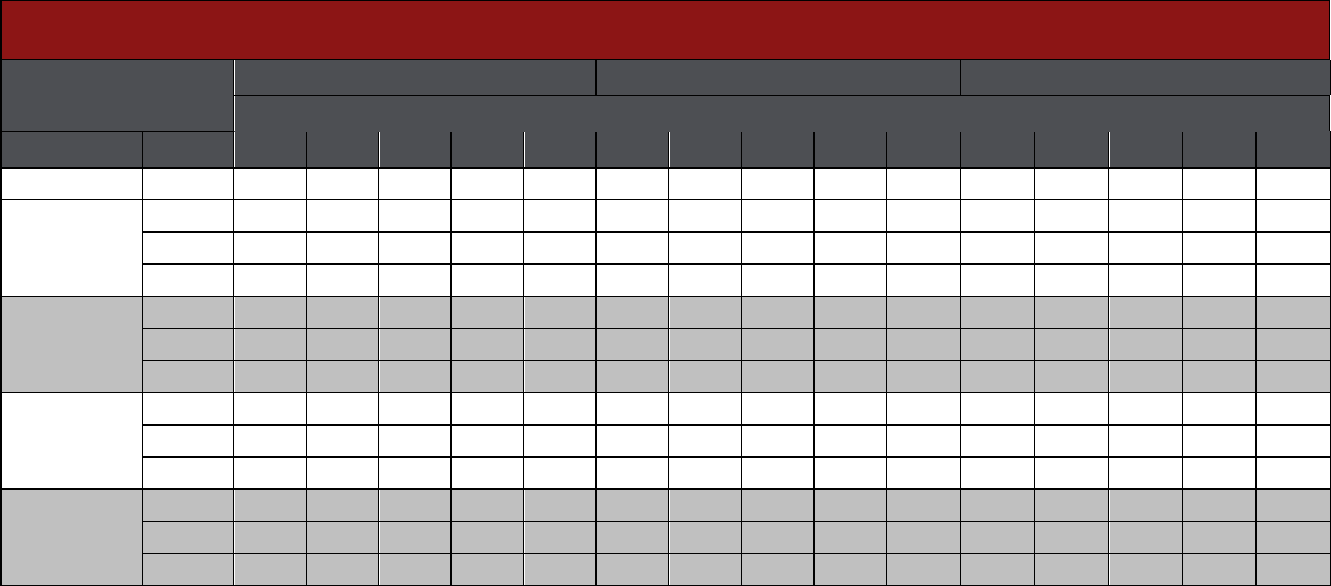

Subgroup Scores

As part of the bias and sensitivity review, average total scores across different subgroups were compared. For the 2013

edTPA field test participants, the following figure displays participation and performance data (including mean scores,

standard deviation, and submission volumes) for various participant groups.⁵

Gender, ethnicity, and primary language

are based on self-reported responses.

⁵ Candidates who completed edTPA in a credential area with fewer than 15 rubrics are excluded from the data, and only the scores

for rubrics 1 through 15 are used for candidates in an edTPA credential area where more than 15 rubrics are used. In addition,

candidates with missing rubric scores are excluded from the data.

No noticeable differences were found across different genders or for candidates whose primary language was not

English. (Note, however, that very few candidates reported that English was not their primary language.) Comparatively

small differences were obtained among the subgroups in ethnic categories, given the standard deviation of mean scores.

In other words, there was greater variation in scores within subgroups than there was variation across subgroups.

Because many of these comparisons are based on very unequal sample sizes, and some of the subgroup sample sizes are

very small, any differences should be interpreted cautiously.
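One way to make the within-versus-across comparison concrete is to compute a pooled within-group standard deviation and the spread of the subgroup means from summary statistics. The sketch below is a descriptive illustration only, not the analysis used in the field test, and the two subgroups in the example are made-up numbers. The function name `within_and_between_sd` is hypothetical.

```python
from math import sqrt
from typing import List, Tuple


def within_and_between_sd(groups: List[Tuple[int, float, float]]) -> Tuple[float, float]:
    """Given (n, mean, sd) for each subgroup, return
    (pooled within-group SD, size-weighted SD of the subgroup means)."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    # Pooled variance: each subgroup's variance weighted by its degrees of freedom.
    within_var = sum((n - 1) * sd ** 2 for n, _, sd in groups) / (total_n - len(groups))
    # Spread of subgroup means around the grand mean, weighted by subgroup size.
    between_var = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups) / total_n
    return sqrt(within_var), sqrt(between_var)


# Made-up summary statistics: (n, mean total score, standard deviation).
within_sd, between_sd = within_and_between_sd([(10, 40.0, 5.0), (10, 44.0, 5.0)])
print(within_sd, between_sd)  # 5.0 2.0
```

When the within-group value is much larger than the between-group value, scores vary more inside each subgroup than the subgroup averages differ from one another. With very small or unequal subgroup sizes, both quantities are imprecise.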

Construct Validity

Validity refers to the degree to which evidence and theory support proposed interpretations about uses of test scores.

In the case of edTPA, we make inferences about candidates’ readiness to teach based upon their total score across all

rubrics of the edTPA. The AERA, APA, and NCME Standards (1999) suggest that studying the internal structure of items

or tasks on an assessment is one of the primary sources of evidence that can be used to support construct-based score

inferences.

Factor analysis was used to provide support for the use of a total score on the edTPA and strengthen inferences about

candidate readiness to teach based on edTPA scores. Factor analysis is a psychometric method that evaluates patterns

in the scores of an assessment. It can be used to answer two important questions regarding the interpretation of edTPA

scores. First, is there support for using a single total score, summed across all 15 rubrics, to summarize a candidate’s

performance? Second, do the patterns of scores across rubrics support the theory underlying the development of the

edTPA tasks and rubrics? Finding one or a small number of related underlying dimensions in the data supports the

validity of inferences that edTPA measures a primary characteristic of effective teaching.

An exploratory factor analysis results in a set of estimated factor loadings. Such loadings can range from about -1.0 to

+1.0. We expect the factor loadings to be positive and approaching a score of 1 for all rubrics in order to support use of

a total score. To study whether the theoretical task structure is appropriate we conducted additional factor analyses to

determine which rubrics were most strongly related. We expect that rubrics within the same task will be more closely

related, and that performance across tasks also will be strongly related.

Summary Statistics by Subgroups. The table shows performance

data from the 2013 field test relative to the demographic

characteristics of ethnicity, gender, and primary language, based

on self-reported responses.

The data suggest that all factor loadings are positive and of moderate to large magnitude. These results support the use

of a single total score. The second factor analysis demonstrated that the hypothesized task structure of the edTPA is

supported by the patterns of candidates’ scores. Refer to Appendix C for detailed results and estimation methods of the

factor analyses.
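
To make the idea of a loading concrete, the sketch below estimates first-component loadings from a rubric correlation matrix. It is only an illustration: it uses a principal-component approximation computed by power iteration, not the estimation method documented in Appendix C, and the scores are synthetic values generated from a hypothetical single-ability model rather than field test data. The function names are ours.

```python
import math
import random


def correlation_matrix(rows):
    """Pearson correlations between the columns (rubrics) of a score table."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n)
           for j in range(k)]
    return [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows)
             / (n * sds[i] * sds[j])
             for j in range(k)]
            for i in range(k)]


def first_component_loadings(corr, iterations=200):
    """Loadings on the first principal component, found by power iteration.

    This is a stand-in for a one-factor solution, not a full factor analysis.
    """
    k = len(corr)
    v = [1.0 / math.sqrt(k)] * k
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k))
                     for i in range(k))
    return [x * math.sqrt(eigenvalue) for x in v]


# Synthetic data (an assumption for illustration only): 300 candidates,
# 15 rubrics scored 1 to 5, each driven by one shared ability plus noise.
random.seed(0)
rows = []
for _ in range(300):
    ability = random.gauss(0, 1)
    rows.append([min(5, max(1, round(3 + 0.7 * ability
                                     + 0.7 * random.gauss(0, 1))))
                 for _ in range(15)])

loadings = first_component_loadings(correlation_matrix(rows))
print([round(x, 2) for x in loadings])
```

With data generated this way, every rubric loads positively on the single component, which is the pattern the text describes as supporting a total score.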

Reliability

All measurement procedures have some amount of random measurement error. To provide evidence in support of

inferences based on edTPA scores it is important to evaluate the consistency, or reliability, of edTPA scores. Reliability

of edTPA scores in the field test sample was analyzed in two ways. First, the agreement rates between different scorers

evaluating the same candidate’s submission were analyzed. Second, the overall variability in a candidate’s scores due to

chance measurement error was evaluated using a Cohen Kappa statistical procedure.

Approximately 10% of all edTPA submissions are randomly selected to be scored by a second, independent scorer. This

provides a way to study how consistent edTPA scores are across different scorers. Ideally, a candidate would receive

the same score from two different scorers. In practice, the high complexity of the edTPA makes this unlikely. However, if

differences across scorers are small, this supports the consistency of edTPA scores.

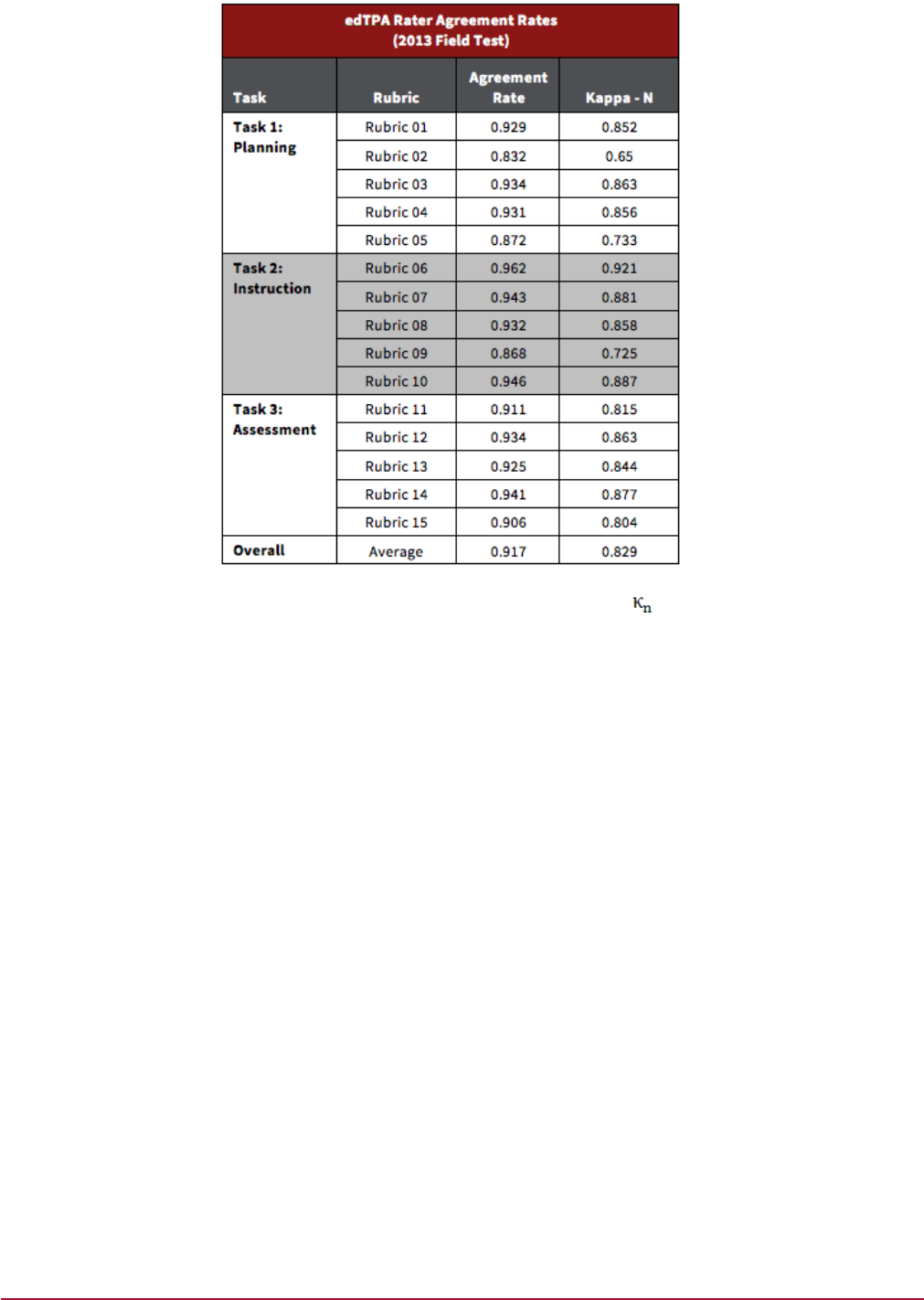

Inter-rater Agreement Rates

To study agreement rates between scorers, the research team conducted several kinds of reliability analyses. In the table

below, we report two kinds of reliability statistics. The first is the adjacent agreement rate. Adjacent agreement refers to

the proportion of cases in which two independent scorers assign either the exact same score or a score within 1 point of

each other. When scoring complex performance assessment tasks, this often is used as a measure of rater agreement.

In addition, a statistic called kappa-n (κn) is often reported to account for agreement by chance.[6] In some cases, scorers

will assign the same score to a submission simply by chance, rather than due to consistency of scoring procedure. The

κn statistic adjusts the adjacent agreement rate to take into account this chance agreement. While there are no clear

guidelines for interpreting κn values, it is better for these values to be closer to 1.0. The values reported here, which are

generally greater than 0.8 (minimum of 0.65 and maximum of 0.921), suggest scorers are nearly always within 1 point.

The table below shows the inter-rater reliabilities using both of these statistics. The average adjacent agreement rate

was .92 and the average kappa-n was .83. These reliability levels are relatively high, and are comparable to those found

for well-established performance assessments such as National Board Certification and scoring of open-ended tasks like

Advanced Placement essays and portfolios.

[6] The version of Cohen’s κ reported here is based on that suggested by Brennan and Prediger (1981). This adjusts for chance by

assuming that scorers are equally likely to assign any of the 5 score points to a portfolio, a priori. This is an appropriate adjustment

because scorers are encouraged to use all score points and there is no restriction on the number of scores at any level that must be

assigned across submissions.
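
As a rough illustration of the two statistics, the sketch below computes an adjacent agreement rate and a chance-adjusted value under the assumption stated in the footnote, namely that each of the 5 score points is equally likely a priori. The chance term (the probability that two independent, uniformly distributed scores fall within 1 point of each other, 13 of 25 combinations) is our reading of that footnote, not a formula given in this report. The function names and the example score pairs are hypothetical.

```python
def adjacent_agreement(score_pairs, tolerance=1):
    """Share of double-scored cases where the two scores differ by at most `tolerance`."""
    hits = sum(1 for a, b in score_pairs if abs(a - b) <= tolerance)
    return hits / len(score_pairs)


def chance_adjacent_agreement(n_levels=5, tolerance=1):
    """Agreement expected if both scorers picked any score level with equal probability."""
    hits = sum(1
               for a in range(n_levels)
               for b in range(n_levels)
               if abs(a - b) <= tolerance)
    return hits / n_levels ** 2


def kappa_n(observed, n_levels=5, tolerance=1):
    """Chance-adjusted agreement: (observed - chance) / (1 - chance)."""
    chance = chance_adjacent_agreement(n_levels, tolerance)
    return (observed - chance) / (1 - chance)


# Made-up pairs of (first scorer, second scorer) for one rubric.
pairs = [(3, 3), (2, 3), (4, 2), (3, 4), (5, 5)]
observed = adjacent_agreement(pairs)
print(observed, round(kappa_n(observed), 3))
```

Under this reading, an adjacent agreement rate of .92 corresponds to a kappa-n of about .83, which is consistent with the two averages reported above.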

edTPA Rater Agreement Rates. This table presents rater

agreement rates (exact plus adjacent agreement rates) and

kappa-n agreement rates for the 2013 edTPA field test.

Standard Setting

Use of the Total Score for Decisionmaking

The integrated three-task design of edTPA and factor analysis findings support the assignment of one total score to the

candidate upon which a pass/fail decision about readiness to teach is made. The total score is calculated as the sum of

the scores on all the rubrics associated with the full collection of artifacts and commentaries, where all rubrics are given

the same weight and contribute equally to the total score. Total scores can range from 15 to 75. In this compensatory

model, a candidate may “compensate” for lower scores on some rubrics with higher scores on other rubrics. The use of

compensatory scoring for professional certification systems is standard practice (see, for example, the National Board for

Professional Teaching Standards certification assessment and most state licensure tests).
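
The compensatory model can be sketched in a few lines. The example below assumes 15 rubrics, each scored from 1 to 5 with equal weight, which matches the stated total score range of 15 to 75. The function name and the two score profiles are hypothetical.

```python
def total_score(rubric_scores, n_rubrics=15, min_level=1, max_level=5):
    """Sum equally weighted rubric scores into a single total score."""
    if len(rubric_scores) != n_rubrics:
        raise ValueError(f"expected {n_rubrics} rubric scores")
    if any(not (min_level <= s <= max_level) for s in rubric_scores):
        raise ValueError("rubric score outside the allowed range")
    return sum(rubric_scores)


# Two made-up profiles: one uniform, one with lower scores on some rubrics
# offset by higher scores on others.
uniform_profile = [3] * 15
uneven_profile = [2] * 5 + [3] * 5 + [4] * 5

print(total_score(uniform_profile), total_score(uneven_profile))
```

Both profiles produce the same total, so both would be treated identically by a decision rule based on the total score alone.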

Setting a Recommended Professional Performance Standard

Standard setting is an evidence-based process for determining which score on a test or assessment demonstrates a

specified level of performance. The Briefing Book process for determining a passing score (documented by Dr. Edward

Haertel, in Haertel (2002, 2008) and Haertel & Lorie (2004)) was used for edTPA. Very broadly, the process begins with

a statement of the intended performance level description—that is, a description of what candidates meeting the

performance standard should know and be able to do. The goal is then to determine a cut score, or professional

performance (passing) standard (PPS) on an accompanying test or assessment that separates those who meet the

performance standard from those who do not.

A single recommended professional performance (passing) standard (PPS) was set for edTPA for use across all content

areas and grade levels. The single performance standard is a result of the integrative structure and evaluation process of

edTPA, in which a single total score is assigned to each candidate’s entire integrated edTPA submission. The candidate’s

single total score is then compared to the single recommended PPS, which is the same PPS applied to each of the 27

edTPA subject-specific assessments.

For states (e.g., Washington) or credential areas (e.g., World Language) that have more or fewer than the 15 rubrics, it is

necessary to use an adjusted PPS because the score scale (number of possible scores) differs. A proportional adjustment

is made such that the average rubric score corresponding to the total scores remains constant across fields. To achieve

this adjusted PPS, edTPA began with the PPS based on 15 rubrics set during a national standard setting event. This PPS

was adjusted upwards for areas with more than 15 rubrics (where higher total scores are possible) and downwards for

areas with fewer than 15 rubrics (where lower total scores are possible). This results in PPSs that are proportional to the

number of rubrics and maintain the same average rubric score.
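
The proportional adjustment can be written out as a short calculation. In the sketch below, the 15-rubric PPS of 45 and the rubric counts of 13 and 18 are placeholder values chosen only to make the arithmetic easy to follow; they are not taken from this report. The text does not say how a fractional result would be rounded, so the sketch returns an exact fraction.

```python
from fractions import Fraction


def adjusted_pps(pps_15, n_rubrics, base_rubrics=15):
    """Scale a 15-rubric PPS so the average rubric score at the cut stays constant."""
    return Fraction(pps_15) * n_rubrics / base_rubrics


hypothetical_pps_15 = 45  # placeholder value, not the recommended PPS

for n in (13, 15, 18):
    cut = adjusted_pps(hypothetical_pps_15, n)
    # The average rubric score at the cut is identical for every rubric count.
    print(n, cut, cut / n)
```

With these placeholder inputs the cut moves down for the area with fewer rubrics and up for the area with more, while the average rubric score at the cut is unchanged.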

The use of a common professional performance standard for performance-based assessments is standard practice in the

teacher certification industry, due in part to the assessment design used by the National Board.

edTPA Standard-Setting Event Overview

The edTPA standard-setting event was held over two days in August 2013. A group of subject-area experts and relevant

education policymakers from across the nation were convened for the standard setting session. (See Appendix D for a full

list of participants.) The goal of the session was to have panelists recommend (after thorough orientation and multiple

steps and discussions) an initial PPS, which was then discussed and evaluated prior to recommending a final PPS.

Key Panels

• Practitioner Panel. Panel group No. 1 included faculty from institutions of higher education and P–12

educators with varying levels of exposure and engagement with edTPA.

• Policy Panel. Panel group No. 2 was composed of members from various national organizations

and stakeholder groups including National Education Association (NEA), American Federation of Teachers

(AFT), and National Board, as well as state departments of education and/or standards boards within states

using edTPA.

• Final Panel to Establish the Recommended Professional Performance Standard. The Final panel

included representatives from both the practitioner panel and the policy panel.

edTPA Standard Setting Guiding Question

Throughout the standard-setting event and examination of sample edTPA submissions, a guiding question was used

and revisited to frame all discussions, which provided common ground on which all participants could anchor their

decisions.

• Think about a teacher candidate who is just at the level of knowledge and skills required to perform

effectively the job of a new teacher in U.S. public schools.

• Guiding question: What score (the sum of all of the rubric scores of the edTPA) represents the level of

performance that would be achieved by this individual?

The purpose of the edTPA standard setting guiding question and context was to identify the performance expectation

(on the 15-rubric scale) of an initially licensed, classroom-ready teacher. The step-by-step, standard-setting process of

examining actual candidate submissions and impact data guided participants to determine the candidate performance

on edTPA that, as stated in the Briefing Book Method, “just meets the definition of performing effectively the job of a

new teacher.”

Key Steps of the Standard Setting Process

• edTPA Standard Setting Pre-Activities (Homework). Prior to the meeting, each invited panelist received

edTPA handbooks, rubrics, scoring materials, and four previously scored edTPA submissions representing

different performance levels across various content areas. Panelists were asked to review materials

submitted by candidates and the scoring evidence identified by trained benchmarkers for the submissions

that were assigned to them.

• Day 1 – Policy Capture 1 Activity Overview/Instructions. Individuals were assigned to collaborate with

other panelists who reviewed the same edTPA materials for the homework assignment. Each panelist

recalled a specific edTPA submission that they reviewed for homework and then provided an individual

rating for that submission. In assigned groups, they discussed their ratings with other panelists with the goal

of arriving at a consensus on the rating. Panelists repeated the process three more times for the other edTPA

submissions they reviewed for homework.

• Day 1 – Policy Capture 2 Activity Overview/Instructions. Each panel determined a score range that would

include the potential cut score. Given this range, a set of “Candidate Score Profiles” (scores for each rubric

and total score) were identified for independent review by the panelists.

• Day 2. Each panel returned to the guiding question and framing for establishing a recommended