3DS.COM/EXALEAD

A PRACTICAL GUIDE TO BIG DATA

Opportunities, Challenges & Tools

“Give me a lever long enough and a fulcrum on which

to place it, and I shall move the world.”

1

Archimedes

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

2

ABOUT THE AUTHOR

Laura Wilber is the former founder and CEO of California-based

AVENCOM, Inc., a software development company specializing

in online databases and database-driven Internet applications

(acquired by Red Door Interactive in 2004), and she served as

VP of Marketing for Kintera, Inc., a provider of SaaS software

to the nonprofit and government sectors. She also developed

courtroom tutorials for technology-related intellectual property

litigation for Legal Arts Multimedia, LLC. Ms. Wilber earned

an M.A. from the University of Maryland, where she was also

a candidate in the PhD program, before joining the federal

systems engineering division of Bell Atlantic (now Verizon) in

Washington, DC. Ms. Wilber currently works as solutions ana-

lyst at EXALEAD. Prior to joining EXALEAD, Ms. Wilber taught

Business Process Reengineering, Management of Informa-

tion Systems and E-Commerce at ISG (l’Institut Supérieur de

Gestion) in Paris. She and her EXALEAD colleague Gregory

Grefenstette recently co-authored Search-Based Applications: At

the Confluence of Search and Database Technologies, published in

2011 by Morgan & Claypool Publishers.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

3

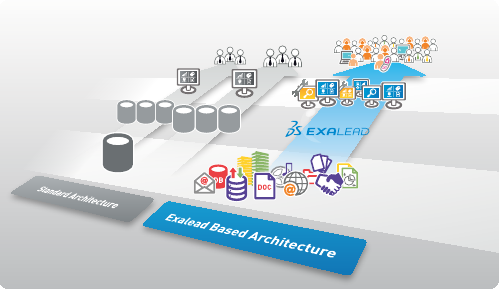

ABOUT EXALEAD

Founded in 2000 by search engine pioneers, EXALEAD®

is the leading Search-Based Application platform provider to

business and government. EXALEAD’s worldwide client base

includes leading companies such as PricewaterhouseCooper,

ViaMichelin, GEFCO, the World Bank and Sanofi Aventis

R&D, and more than 100 million unique users a month use

EXALEAD’s technology for search and information access.

Today, EXALEAD is reshaping the digital content landscape

with its platform, EXALEAD CloudView™, which uses advanced

semantic technologies to bring structure, meaning and

accessibility to previously unused or under-used data in the

new hybrid enterprise and Web information cloud. CloudView

collects data from virtually any source, in any format, and

transforms it into structured, pervasive, contextualized building

blocks of business information that can be directly searched

and queried, or used as the foundation for a new breed of lean,

innovative information access applications.

EXALEAD was acquired by Dassault Systèmes in June 2010.

EXALEAD has offices in Paris, San Francisco, Glasgow, London,

Amsterdam, Milan and Frankfurt.

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

4

EXECUTIVE SUMMARY

What is Big Data?

While a fog of hype often envelops the omnipresent discussions

of Big Data, a clear consensus has at least coalesced around the

definition of the term. “Big Data” is typically considered to be

a data collection that has grown so large it can’t be effectively

or affordably managed (or exploited) using conventional data

management tools: e.g., classic relational database manage-

ment systems (RDBMS) or conventional search engines,

depending on the task at hand. This can as easily occur at 1

terabyte as at 1 petabyte, though most discussions concern col-

lections that weigh in at several terabytes at least.

Familiar Challenges, New Opportunities

If one can make one’s way through the haze, it also becomes

clear that Big Data is not new. Information specialists in fields

like banking, telecommunications and the physical sciences

have been grappling with Big Data for decades.

2

These Big

Data veterans have routinely confronted data collections that

outgrew the capacity of their existing systems, and in such

situations their choices were always less than ideal:

• Need to access it? Segment (silo) it.

• Need to process it? Buy a supercomputer.

• Need to analyze it? Will a sample set do?

• Want to store it? Forget it: use, purge, and move on.

What is new, however, is that now new technologies have

emerged that offer Big Data veterans far more palatable op-

tions, and which are enabling many organizations of all sizes

and types to access and exploit Big Data for the very first time.

This includes data that was too voluminous, complex or fast-

moving to be of much use before, such as meter or sensor

readings, event logs, Web pages, social network content, email

messages and multimedia files. As a result of this evolution, the

Big Data universe is beginning to yield insights that are chang-

ing the way we work and the way we play, and challenging

just about everything we thought we knew about ourselves,

the organizations in which we work, the markets in which we

operate– even the universe in which we live.

The Internet: Home to Big Data Innovation

Not surprisingly, most of these game-changing technologies

were born on the Internet, where Big Data volumes collided

with a host of seemingly impossible constraints, including the

need to support:

• Massive and impossible to predict traffic

• A 99.999% availability rate

• Sub-second responsiveness

• Sub-penny per-session costs

• 2-month innovation roadmaps

To satisfy these imposing requirements constraints, Web entre-

preneurs developed data management systems that achieved

supercomputer power at bargain-basement cost by distributing

computing tasks in parallel across large clusters of commodity

servers. They also gained crucial agility – and further ramped

up performance – by developing data models that were far

more flexible than those of conventional RDBMS. The best

known of these Web-derived technologies are non-relational

databases (called “NoSQL” for “Not-Only-SQL,” SQL being the

standard language for querying and managing RDBMS), like

the Hadoop framework (inspired by Google; developed and

open-sourced to Apache by Yahoo!) and Cassandra (Facebook),

and search engine platforms, like CloudView (EXALEAD) and

Nutch (Apache).

Another class of solutions, for which we appropriate (and

expand) the “NewSQL” label coined by Matthew Aslett of the

451 Group, strives to meet Big Data needs without abandon-

ing the core relational database model.

4

To boost performance

and agility, these systems employ strategies inspired by the

Internet veterans (like massive distributed scaling, in-memory

processing and more flexible, NoSQL-inspired data models), or

they employ strategies grown closer to (RDBMS) home, like in-

memory architectures and in-database analytics. In addition, a

new subset of such systems has emerged over the latter half of

2011 that goes one step further in physically combining high

performance RDMBS systems with NoSQL and/or search plat-

forms to produce integrated hardware/software applicances for

deep analytics on integrated structured and unstructured data.

The Right Tool for the Right Job

Together, these diverse technologies can fulfill almost any Big

Data access, analysis and storage requirement. You simply need

to know which technology is best suited to which type of task,

and to understand the relative advantages and disadvantages of

particular solutions (usability, maturity, cost, security, etc.).

Complementary, Not Competing Tools

In most situations, NoSQL, Search and NewSQL technologies

play complementary rather than competing roles. One excep-

tion is exploratory analytics, for which you may use a Search

“In the era of Big Data, more isn’t just more.

More is different.”

3

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

5

platform, a NoSQL database, or a NewSQL solution depending

on your needs. A search platform alone may be all you need if

1) you want to offer self-service exploratory analytics to gen-

eral business users on unstructured, structured or hybrid data,

or 2) if you wish to explore previously untapped resources like

log files or social media, but you prefer a low risk, cost-effective

method of exploring their potential value.

Likewise, for operational reporting and analytics, you could use

a Search or NewSQL platform, but Search may once again be all

you need if your analytics application targets human decision-

makers, and if data latency of seconds or minutes is sufficient

(NoSQL systems are subject to batch-induced latency, and

few situations require the nearly instanteous, sub-millisecond

latency of expensive NewSQL systems).

While a Search platform alone may be all you need for analyt-

ics in certain situations, and it is a highly compelling choice

for rapidly constructing general business applications on top

of Big Data, it nonetheless makes sense to deploy a search

engine alongside a NoSQL or NewSQL system in every Big Data

scenario, for no other technology is as effective and efficient as

Search at making Big Data accessible and meaningful to human

beings.

This is, in fact, the reason we have produced this paper. We

aim to shed light on the use of search technology in Big Data

environments – a role that’s often overlooked or misunderstood

even though search technologies are profoundly influencing

the evolution of data management – while at the same time

providing a pragmatic overview of all the tools available to meet

Big Data challenges and capitalize on Big Data opportunities.

Our own experience with customers and partners has shown

us that for all that has been written about Big Data recently, a

tremendous amount of confusion remains. We hope this paper

will dispel enough of this confusion to help you get on the road

to successfully exploiting your own Big Data.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

7

TABLE OF CONTENTS

1. Crossing the Zetta Frontier………………………………..8

A. What is Big Data?…………………………………………………….8

B. Who is Affected by Big Data?…………………………………….8

C. Big Data: Boon or Bane?……………………………………………8

2. Big Data Opportunities………………………………………9

A. Faceted Search at Scale…………………………………………….9

B. Multimedia Search…………………………………………………10

C. Sentiment Analysis……………………………………………..…10

D. Database Enrichment…………………………………………..…11

E. Exploratory Analytics……………………………………………..11

F. Operational Analytics……………………………………………...13

3. Breakthrough Innovation from the Internet…………14

A. Distributed Architectures & Parallel Processing…………..15

B. Relaxed Consistency & Flexible Data Models………………15

C. Caching & In-Memory Processing…………..…………………16

4. Big Data Toolbox……………………………………………..16

A. Data Capture & Preprocessing…………..….………………….16

1) ETL Tools.…………..........………………………………………….16

2) APIs (Application Programming Interfaces) / Connectors…....17

3) Crawlers…………………………………….…………………………17

4) Messaging Systems………………………..……………………..19

B. Data Processing & Interaction…………..….…………………..20

1) NoSQL Systems……………………………..….………………….20

2) NewSQL…………………………..….………………………………23

3) Search Platforms…………………………..……………………….24

C. Auxiliary Tools…………..….…………….…………………………27

1) Cloud Services…………..….…………….…………………………27

2) Visualization Tools…………..….…………………………………28

5. Case Studies………………………….………………………..28

A. GEFCO: Breaking through Performance Barriers…………28

B. Yakaz: Innovating with Search + NoSQL……………………29

C. La Poste: Building Business Applications on Big Data…………30

D. …And Many Others………..……………………………………...31

6. Why EXALEAD CloudView

TM

?……………………………32

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

8

1) CROSSING

THE ZETTA FRONTIER

Fueled by the pervasiveness of the Internet, unprecedented

computing power, ubiquitous sensors and meters, addictive

consumer gadgets, inexpensive storage and (to-date) highly

elastic network capacity, we humans and our machines are

cranking out digital information at a mind-boggling rate.

IDC estimates that in 2010 alone we generated enough digital

information worldwide to fill a stack of DVDs reaching from

the earth to the moon and back. That’s about 1.2 zettabytes,

or more than one trillion gigabytes—a 50% increase over 2009.

5

IDC further estimates that from 2011 on, the amount of data

produced globally will double every 2 years.

No wonder then scientists coined a special term – ”Big Data”

– to convey the extraordinary scale of the data collections now

being amassed inside public and private organizations and out

on the Web.

A. What Exactly is ”Big Data”?

Big Data is more a concept than a precise term. Some apply the

”Big Data” label only to petabyte-scale data collections (> one

million GB). For others, a Big Data collection may house ‘only’

a few dozen terabytes of data. More often, however, Big Data is

defined situationally rather than by size. Specifically, a data col-

lection is considered “Big Data” when it is so large an organiza-

tion cannot effectively or affordably manage or exploit it using

conventional data management tools.

B. Who Is Affected By Big Data?

Big Data has been of concern to organizations working in select

fields for some time, such as the physical sciences (meteorol-

ogy, physics), life sciences (genomics, biomedical research),

government (defense, treasury), finance and banking (transac-

tion processing, trade analytics), communications (call records,

network traffic data), and, of course, the Internet (search engine

indexation, social networks).

Now, however, due to our digital fecundity, Big Data is becom-

ing an issue for organizations of all sizes and types.

In fact, in 2008 businesses were already managing on average

100TB or more of digital content.

6

Big Data has even become

a concern of individuals as awareness grows of the depth and

breadth of personal information being amassed in Big Data

collections (in contrast, some, like LifeLoggers,

7

broadcast their

day-to-day lives in a Big Data stream of their own making).

C. Big Data: Boon or Bane?

For some, Big Data simply means Big Headaches, raising

difficult issues of information system cost, scaling and perfor-

mance, as well as data security, privacy and ownership.

But Big Data also carries the potential for breakthrough insights

and innovation in business, science, medicine and govern-

ment—if we can bring humans, machines and data together

to reveal the natural information intelligence locked inside our

mountains of Big Data.

BIG DATA

A data collection that is too large to be effectively

or affordably managed using conventional

technologies.

1000 Gigabytes (GB) ≈ 1 Terabyte (To)

1000 Terabytes ≈ 1 Petabyte (Po)

1000 Petabytes ≈ 1 Exabyte (Eo)

1000 Exabytes ≈ 1 Zettabyte (Zo)

1000 Zettabytes ≈ 1 Yottabyte (Yo)

Measuring Big Data

Disk Storage*

* For Processor or Virtual Storage, replace 1000 with 1024.

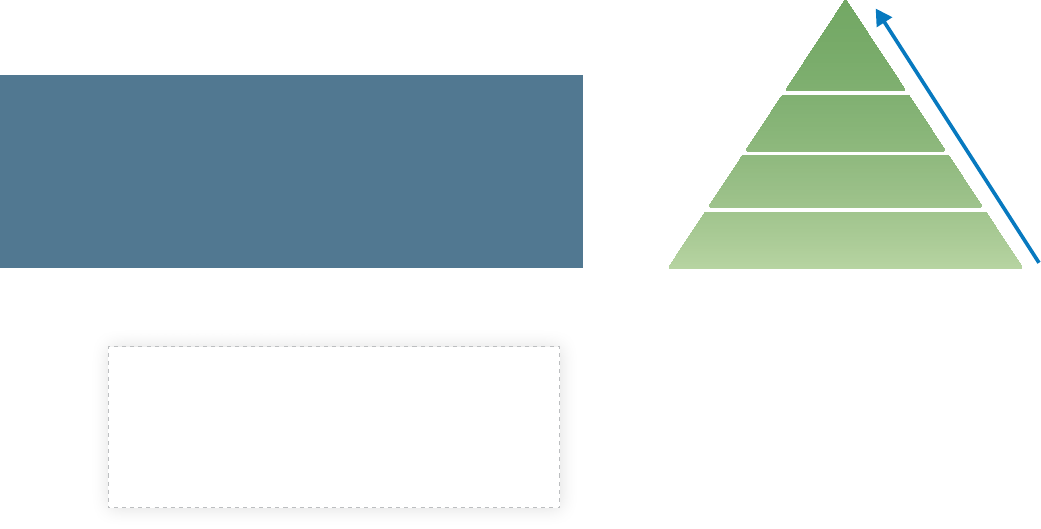

Knowledge

Information

Data

Decision Making

Synthesizing

Analyzing

Summarizing

Organizing

Collecting

Wisdom

The classic data management mission: transforming raw data into action-

guiding wisdom. In the era of Big Data, the challenge is to find automated,

industrial-grade methods for accomplishing this transformation.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

9

2) BIG DATA

OPPORTUNITIES

Innovative public and private organizations are already demon-

strating that transforming raw Big Data collections into action-

able wisdom is possible. They are showing in particular that

tremendous value can be extracted from the ”grey” data that

makes up the bulk of Big Data, that is to say data that

is unused (or under-used) because it has historically been:

1) Too voluminous, unstructured and/or raw (i.e., minimally

structured) to be exploited by conventional information

systems, or

2) In the case of highly structured data, too costly or complex

to integrate and exploit (e.g., trying to gather and align data

from dozens of databases worldwide).

These organizations are also opening new frontiers in opera-

tional and exploratory analytics using structured data (like

database content), semi-structured data (such as log files or

XML files) and unstructured content (like text documents or

Web pages).

Some of the specific Big Data opportunities they are capitaliz-

ing on include:

• Faceted search at scale

• Multimedia search

• Sentiment analysis

• Automatic database enrichment

• New types of exploratory analytics

• Improved operational reporting

We’ll now look more closely at these opportunities, with each

accompanied by a brief example of an opportunity realized

using a technology whose role is often overlooked or misunder-

stood in the context of Big Data: the search engine. We’ll then

review the full range of tools available to organizations seek-

ing to exploit Big Data, followed by further examples from the

search world.

A. Faceted Search at Scale

Faceted search is the process of iteratively refining a search

request by selecting (or excluding) clusters or categories of

results. In contrast to the conventional method of paging

through simple lists of results, faceted search (also referred to

as parametric search and faceted navigation) offers a remark-

ably effective means of searching and navigating large volumes

of information—especially when combined with user aids like

type-ahead query suggestions, auto-spelling correction and

fuzzy matching (matching via synonyms, phonetics and ap-

proximate spelling).

Until recently, faceted search could only be provided against

relatively small data sets because the data classification and

descriptive meta-tagging upon which faceted search depends

were largely manual processes. Now, however, industrial-grade

natural language processing (NLP) technologies are making it

possible to automatically classify and categorize even Big Data-

size collections of unstructured content, and hence to achieve

faceted search at scale.

NATURAL LANGUAGE PROCESSING (NLP)

Rooted in artificial intelligence, NLP—also referred

to as computational linguistics—uses tools like

statistical algorithms and machine learning to

enable computers to understand instances of

human language (like speech transcripts, text

documents and SMS messages). While NLP

focuses on the structural features of an utterance,

semantics goes beyond form in seeking to identify

and understand meanings and relationships.

FACETED SEARCH EXAMPLE:

EXALEAD CloudView

TM

uses industrial-grade semantic

and statistical processing to automatically cluster and

categorize search results for an index of 16 billion Web

pages (approx. 6 petabytes of raw data).

Facets hide the scale and complexity of Big Data

collections from end users, boosting search success and

making search and navigation feel simple and natural.

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

10

You can see industrial faceting at work in the dual Web/enter-

prise search engine EXALEAD CloudView

TM

, in other public Web

search engines like Google, Yahoo! and Bing, and, to varying

degrees of automation and scale, in search utilities from organi-

zations like HP, Oracle, Microsoft and Apache.

Look for this trend to accelerate and to bring new accessibility

to unstructured Big Data.

B. Multimedia Search

Multimedia content is the fastest growing type of user-gen-

erated content, with millions of photos, audio files and videos

uploaded to the Web and enterprise servers daily. Exploiting

this type of content at Big Data scale is impossible if we must

rely solely on human tagging or basic associated metadata like

file names to access and understand content.

However, recent technologies like automatic speech-to-text

transcription and object-recognition processing (called Content-

Based Image Retrieval, or CBIR) are enabling us to structure this

content from the inside out, and paving the way toward new

accessibility for large-volume multimedia collections. Look for

this trend to have a significant impact in fields like medicine,

media, publishing, environmental science, forensics and digital

asset management.

C. Sentiment Analysis

Sentiment analysis uses semantic technologies to automati-

cally discover, extract and summarize the emotions and at-

titudes expressed in unstructured content. Semantic analysis

is sometimes applied to behind-the-firewall content like email

messages, call recordings and customer/constituent surveys.

More commonly, however, it is applied to the Web, the world’s

first and foremost Big Data collection and the most comprehen-

sive repository of public sentiment concerning everything from

ideas and issues to people, products and companies.

Sentiment analysis on the Web typically entails collecting data

from select Web sources (industry sites, the media, blogs, fo-

rums, social networks, etc.), cross-referencing this content with

target entities represented in internal systems (services, prod-

ucts, people, programs, etc.), and extracting and summarizing

the sentiments expressed in this cross-referenced content.

Multimedia Search Example:

FRANCE 24 is a 24/7 international news channel broadcasting in French,

English and Arabic. In partnership with EXALEAD, Yacast Media and Vecsys,

FRANCE 24 is automatically generating near real-time transcripts of its broad-

casts, and using semantic indexation of these transcripts to offer “full text“

search inside videos. Complementary digital segmentation technology enables

users to jump to the precise point in the broadcast where their search term is

used.

The Web: The world’s first and foremost

Big Data collection.

SENTIMENT ANALYSIS EXAMPLE:

A large automotive vehicle manufacturer uses Web

sentiment analysis to improve product quality

management. The application uses the EXALEAD

CloudView

TM

platform to extract, analyze and

organize pertinent quality-related information from

consumer car forums and other resources so the

company can detect and respond to potential issues

at an early stage. Semantic processors automatically

structure this data by model, make, year, type of

symptom and more.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

11

This type of Big Data analysis can be a tremendous aid in

domains as diverse as product development and public policy,

bringing unprecedented scope, accuracy and timeliness to ef-

forts such as:

• Monitoring and managing public perception of an issue,

brand, organization, etc. (called “reputation monitoring”)

• Analyzing reception of a new or revamped service or

product

• Anticipating and responding to potential quality, pricing or

compliance issues

• Identifying nascent market growth opportunities and

trends in customer demand

D. Database Enrichment

Once you can collect, analyze and organize unstructured

Big Data, you can use it to enhance and contextualize exist-

ing structured data resources like databases and data ware-

houses. For instance, you can use information extracted from

high-volume sources like email, chat, website logs and social

networks to enrich customer profiles in a Customer Relation-

ship Management (CRM) system. Or, you can extend a digital

product catalog with Web content (like, product descriptions,

photos, specifications, and supplier information). You can even

use such content to improve the quality of your organization’s

master data management, using the Web to verify details or fill

in missing attributes.

E. Exploratory Analytics

Exploratory analytics has aptly been defined as “the process of

analyzing data to learn about what you don’t know to ask.”

8

It

is a type of analytics that requires an open mind and a healthy

sense of curiosity. In practice, the analyst and the data engage

in a two-way conversation, with researchers making discover-

ies and uncovering possibilities as they follow their curiosity

from one intriguing fact to another (hence the reason exploratory

analytics are also called “iterative analytics”).

In short, it is the opposite of conventional analytics, referred

to as Online Analytical Processing (OLAP). In classic OLAP, one

seeks to retrieve answers to precise, pre-formulated questions

from an orderly, well-known universe of data. Classic OLAP is

also sometimes referred to as Confirmatory Data Analysis (CDA)

as it is typically used to confirm or refute hypotheses.

Discovering Hidden Meanings & Relationships

There is no doubt that the Big Data collections we are now

amassing hold the answers to questions we haven’t yet

thought to ask. Just imagine the revelations lurking in the

100 petabytes of climate data at the DKRZ (German Climate

Computing Center), or in the 15 petabytes of data produced an-

nually by the Large Hadron Collider (LHC) particle accelerator, or

in the 200 petabytes of data Yahoo! has stocked across its farm

of 43,000 (soon to be 60,000) servers.

An even richer vein lies in cross-referencing individual collec-

tions. For example, cross-referencing Big Data collections of

genomic, demographic, chemical and biomedical information

DATABASE ENRICHMENT EXAMPLE:

The travel and tourism arm of France’s high speed

passenger rail service, Voyages-SNCF, uses unstructured

Web data (like local events and attractions and travel

articles and news) to enhance the content in its internal

transport and accommodation databases. The result

is a full-featured travel planning site that keeps the

user engaged through each stage of the purchase

cycle, boosting average sales through cross-selling,

and helping to make Voyages-SNCF.com a first-stop

reference for travel planning in France.

“Big Data will become a key basis of competition,

underpinning new waves of productivity growth,

innovation and consumer surplus—as long as the

right policies and enablers are in place.”

McKinsey Global Institute

9

“[Exploratory analytic] techniques make it

feasible to look for more haystacks, rather than

just the needle in one haystack.”

10

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

12

might move us closer to a cure for cancer. At a more mundane

level, such large scale cross-referencing may simply help us bet-

ter manage inventories, as when Wal-Mart hooked up weather

and sales data and discovered that hurricane warnings trigger

runs not just on flashlights and batteries (expected), but also on

strawberry Pop-Tarts breakfast pastries (not expected), and that

the top-selling pre-hurricane item is beer (surprise again).

However, Wal-Mart’s revelation was actually not the result of

exploratory analytics (as is often reported), but rather conven-

tional analytics. In 2004, with Hurricane Frances on the way,

Wal-Mart execs simply retrieved sales data for the days before

the recently passed Hurricane Charley from their then-460TB

data warehouse, and fresh supplies of beer and pastries were

soon on their way to stores in Frances’ path.

11

What’s important about the Wal-Mart example is to imagine

what could happen if we could turn machines loose to discover

such correlations on their own. In fact, we do this now in two

ways: one can be characterized as a ”pull” approach, the other

a ”push” strategy.

In the “pull” method, we can turn semantic mining tools loose

to identify the embedded relationships, patterns and mean-

ings in data, and then use visualization tools, facets (dynamic

clusters and categories) and natural language queries to explore

these connections in a completely ad hoc manner. In the second

“push” method, we can sequentially ask the data for answers

to specific questions, or instruct it to perform certain operations

(like sorting), to see what turns up.

Improving the Accuracy and Timeliness

of Predictions

The goal of exploratory, ”let’s see what turns up” analytics is

almost always to generate accurate, actionable predictions.

In traditional OLAP, this is done by applying complex statisti-

cal models to clean sample data sets within a formal, scientific

“hypothesize, model, test” process.

Exploratory analytics accelerate this formal process by deliver-

ing a rich mine of ready-to-test models that may never have

otherwise come to light. And, though conventional predictive

analytics are in no danger of being sidelined, running simple

algorithms against messy Big Data collections can produce

forecasts that are as accurate as complex analytics on well-

scrubbed, statistically-groomed sample data sets.

For example, real estate services provider Akerys uses the

EXALEAD CloudView

TM

platform to aggregate, organize and

structure real estate market statistics extracted daily from the

major real estate classifieds websites. As a result, Akerys’ public

Labo-Immo project (labo-immo.org) enables individuals to accu-

rately identify and explore market trends two-to-three months

in advance of the official statistics compiled by notaries and

other industry professionals.

In another example drawn from the world of the Web, Google

analyzed the frequency of billions of flu symptom-related

Web searches and demonstrated that it was possible to predict

flu outbreaks with as much accuracy as the U.S. Centers for

Disease Control and Prevention (CDC), whose predictions were

based on a complex analytics applied to data painstakingly

compiled from clinics and physicians. Moreover, as people tend

to conduct Internet research before visiting a doctor, the Web

search data revealed trends earlier, giving health care communi-

ties valuable lead time in preparing for outbreaks. Now the CDC

and other health organizations like the World Health Organiza-

tion use Google Flu Trends as an additional disease monitoring

tool.

13

“Business decisions will increasingly be made, or

at least corroborated, on the basis of computer

algorithms rather than individual hunches.“

12

Search-based analytics offers an effective means of distilling information intel-

ligence from large-volume data sets, especially un- or semi-structured corpora

such as Web collections.

Trends as an additional disease monitoring tool.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

13

Of note, too, is the fact that neither the CDC nor clinic directors

care why Web searches so closely mirror—and anticipate—CDC

predictions: they’re just happy to have the information. This is

the potential of exploratory Big Data analytics: sample it all in,

see what shows up, and, depending on your situation, either

act on it—or relay it to specialists for investigation or validation.

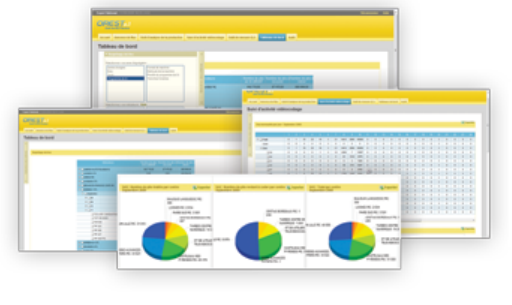

F. Operational Analytics

While exploratory analytics are terrific for planning, operational

analytics are ideal for action. The goal of such analytics is to de-

liver actionable intelligence on meaningful operational metrics

in real or near-real time.

This is not easy as many such metrics are embedded in massive

streams of small-packet data produced by networked devices

like ‘smart’ utility meters, RFID readers, barcode scanners, web-

site activity monitors and GPS tracking units. It is machine data

designed for use by other machines, not humans.

Making it accessible to human beings has traditionally not been

technically or economically feasible for many organizations.

New technologies, however, are enabling organizations to over-

come technical and financial hurdles to deliver human-friendly

and analysis of real-time Big Data streams (see Chapter 4).

As a result, more organizations (particularly in sectors like tele-

communications, logistics, transport, retailing and manufactur-

ing) are producing real-time operational reporting and analytics

based on such data, and significantly improving agility, opera-

tional visibility, and day-to-day decision making as a result.

Consider, for example, the case of Dr. Carolyn McGregor of the

University of Ontario. Conducting research in Canada, Australia

and China, she is using real-time, operational analytics on Big

Data for early detection of potentially fatal infections in prema-

ture babies. The analytics platform monitors real-time streams

of data like respiration, heart rate and blood pressure readings

captured by medical equipment (with electrocardiograms alone

generating 1,000 readings per second).

The system can detect anomalies that may signal the onset

of an infection long before symptoms emerge, and well in ad-

“Invariably, simple models and a lot of data

trump more elaborate models based on less

data.”

14

Alon Halevy, Peter Norvig & Fernando Pereira

vance of the legacy approach of having a doctor review limited

data sets on paper every hour or two. As Dr. McGregor notes,

“You can’t see it with the naked eye, but a computer can.”

15

EXPLORATORY ANALYTICS EXAMPLE:

In an example of exploratory analytics inside the

enterprise, one of the world’s largest global retailers

is using an EXALEAD CloudView

TM

Search-Based

Application (SBA) to enable non-experts to use natural

language search, faceted navigation and visualization

to explore the details of millions of daily cash register

receipts. Previously, these receipts, which are stored

in an 18TB Teradata data warehouse, could only

be analyzed by Business Intelligence system users

executing canned queries or complex custom queries.

A second SBA further enables users to perform

exploratory analytics on a cross-referenced view of

receipt details and loyalty program data (also housed

in a Teradata data warehouse). Users can either enter

a natural language query like “nutella and paris” to

launch their investigations, or they can simply drill

down on the dynamic data clusters and categories

mined from source systems to explore potentially

significant correlations.

Both of these SBAs are enabling a wide base of

business users to mine previously siloed data for

meaningful information. They are also improving the

timeliness and accuracy of predictions by revealing

hidden relationships and trends.

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

14

3) BREAKTHROUGH INNOVATION

FROM THE INTERNET

As the examples in Chapter 2 demonstrate, it is possible to

overcome the technical and financial challenges inherent in

seizing Big Data opportunities. This capability is due in large

part to tools and technologies forged over the past 15 years by

Internet innovators including:

• Web search engines like EXALEAD, Google and Yahoo!,

who have taken on the job of making the ultimate Big Data

collection, the Internet, accessible to all.

• Social networking sites like LinkedIn and Facebook.

• eCommerce giants like Amazon.

These organizations and others like them found that conven-

tional relational database technology was too rigid and/or

costly for many data processing, access and storage tasks in the

highly fluid, high-volume world of the Web.

Relational database management systems (RDBMS) were,

after all, initially designed (half a century ago) to accurately and

reliably record transactions like payments and orders for brick-

and-mortar businesses. To protect the accuracy and security

of this information, they made sure incoming data adhered to

elaborate, carefully-constructed data models and specifications

through processing safeguards referred to as ACID constraints

(for data Atomicity, Consistency, Isolation and Durability).

These ACID constraints proved to be highly effective at ensur-

ing data accuracy and security, but they are very difficult to

scale, and for certain types of data interaction—like social net-

working, search and exploratory analytics—they are not even

wholly necessary. Sometimes, maximizing system availability

and performance are higher priorities than ensuring full data

consistency and integrity.

Accordingly, Internet businesses developed new data manage-

ment systems that relaxed ACID constraints and permitted

them to scale their operations massively and cost-effectively

while maintaining optimal availability and performance.

OPERATIONAL ANALYTICS EXAMPLE:

A leading private electric utility and the world’s largest

renewable energy operator has deployed a CloudView

Search-Based Application (SBA) to better manage its

wind power production. Specifically, they are using

CloudView to automate cumbersome analytic processes

and deliver timelier production forecasts.

The CloudView SBA works by allowing a quasi-real-

time comparison of actual production data from

metering equipment (fed into an Oracle system)

and forecastdata produced by an MS SQL Server

application. Prior to deploying CloudView, separately

stored production and forecast data had to be manually

compared – an inefficient and error-prone process with

undesirable lag time.

The new streaming predictive analytics capability is

boosting the company’s ability to achieve an optimal

balance between actual and forecast production to

minimize costly surpluses or deficits. The use of an

SBA also offers unlimited, ad-hoc drill down on all

data facets maintained in source systems, including

reporting and analytics by geographic location (country,

region, city, etc.) and time period (hour, day, week,

month, etc.). Historical data is retained for long-range

analytics.

As an added benefit, the platform is improving overall

information systems responsiveness by offloading

routine information requests from the Oracle and MS

SQL Server systems. The Proof-of-Concept (POC) for

this SBA was developed in just 5 days.

See the GEFCO and La Poste case studies in Chapter 5

for additional examples of operational reporting and

analytics on Big Data.

“Reliability at massive scale is one of the biggest

challenges we face at Amazon.com… Even

the slightest outage has significant financial

consequences and impacts customer trust.”

16

Amazon

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

15

A. Distributed Architectures & Parallel Processing

One of the most important ways they achieved this was by

distributing processing and access tasks in parallel across large

(and often geographically dispersed) grids of loosely coupled,

inexpensive commodity servers.

Working in parallel, these collections of low-end servers can

rival supercomputers in processing power at a fraction of the

cost, and ensure continuous service availability in the case of

inevitable hardware failures.

It is an architecture inspired by symmetric multi-processing

(SMP), massively parallel processing (MPP) and grid computing

strategies and technologies.

B. Relaxed Consistency & Flexible Data Models

In addition to distributed architectures and parallel processing,

these Internet innovators also achieved greater performance,

availability and agility by designing systems that can ingest

and process inconsistent, constantly evolving data. These flex-

ible models, together with semantic technologies, have also

played a primary role in making grey data exploitable (these

models are discussed in Chapter 4, Section B, Data Processing

& Interaction).

SOME COMMON RDBMS

MS SQL Server

MySQL

PostgreSQL

Oracle 11g

IBM DB2 & Informix

ACID CONSTRAINTS

Atomicity

Consistency

Isolation

Durability

Match low revenue

model

($0,0001/session)

Follow a 2-month

innovation roadmap

Handle 100s of

millions

of database records

Offer 99,999%

availability

Be usable without

training

Support impossible

to forecast traffic

Present up-to-date

information

Provide sub-second

response times

Dynamo

SimpleDB

Cassandra

CloudView

MapReduce

BigTable

Hadoop

Voldemort

Internet Drives Data Management Innovation

TYPES OF PARALLEL PROCESSING

In parallel processing, programming tasks are broken

into subtasks and executed in parallel across multiple

computer processors to boost computing power and

performance. Parallel processing can take place in

a single multiple processor computer, or across

thousands of single- or multi-processor machines.

SMP is parallel processing across a small number of

tightly-coupled processors (e.g., shared memory, data

bus, disk storage (sometimes), operating system (OS)

instance, etc.).

MPP is parallel processing across a large number of

loosely-coupled processors (each node having its

own local memory, disk storage, OS copy, etc.). It is a

“shared nothing” versus “shared memory” or “shared

disk” architecture. MMP nodes usually communicate

across a specialized, dedicated network, and they are

usually homogeneous machines housed in a single

location.

Grid Computing also employs loosely-coupled nodes

in a shared-nothing framework, but, unlike SMP and

MPP, a grid is not architected to act as a single computer

but rather to function like individual collaborators

working together to solve a single problem, like

modeling a protein or refining a climate model.

Grids are typically inter-organizational collaborations

that pool resources to create a shared computing

infrastructure. They are usually heterogeneous, widely

dispersed, and communicate using standard WAN

technologies. Examples include on-demand grids (e.g.,

Amazon EC2), peer-to-peer grids (e.g., SETI@Home),

and research grids (e.g., DutchGrid).

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

16

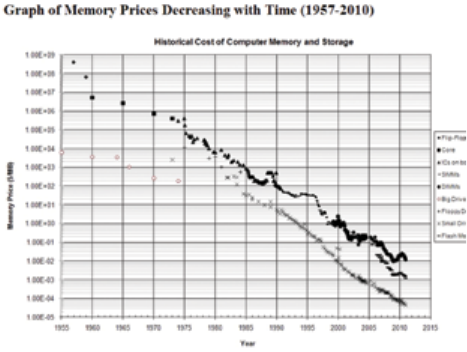

C. Caching & In-Memory Processing

Most further developed systems that make heavy use of data

caching, if not full in-memory storage and processing. (In

in-memory architectures, data is stored and processed in high

speed RAM, eliminating the back-and-forth disk input/output

(I/O) activity that can bottleneck performance.) This evolution

is due in equal parts to innovation, a dramatic decrease in the

cost of RAM (see chart below), and to the rise of distributed

architectures (even though the price of RAM has dropped, it’s

still far less expensive to buy a batch of commodity computers

whose combined RAM is 1TB than to buy a single computer

with 1TB RAM).

While few organizations deals with Internet-scale data manage-

ment challenges, these Web-born innovations have nonethe-

less spawned pragmatic commercial and open source tools and

technologies anyone can use right now to address Big Data

challenges and take advantage of Big Data opportunities.

Let’s look at that toolbox now.

Data/chart copyright 2001, 2010, John C. McCallum.

See www.jcmit.com/mem2010.htm.

4) THE BIG DATA TOOLBOX

While some research organizations may rely on supercomputers

to meet their Big Data needs, our toolbox is stocked with tools

accessible to organizations of all sizes and types.

These tools include:

A.Data Capture & Preprocessing

1.ETL (Extract, Transform and Load) Tools

2.APIs (Application Programming Interfaces) / Connectors

3.Crawlers

4.Messaging Systems

B.Data Processing & Interaction

1.NoSQL Systems

2.NewSQL Systems

3.Search Engines

C.Auxiliary Tools

1.Cloud Services

2.Visualization Tools

Each has a different role to play in capturing, processing, ac-

cessing or analyzing Big Data. Let’s look first at data capture

and preprocessing tools.

A. Data Capture & Preprocessing

1.ETL TOOLS

Primary Uses

• Data consolidation (particularly loading data warehouses)

• Data preprocessing/normalization

Definition

ETL (Extract, Transform and Load) tools are used to map and

move large volumes of data from one system to another.

They are most frequently used as data integration aids. More

specifically, they are commonly used to consolidate data from

multiple databases into a central data warehouse through bulk

data transfers. ETL platforms usually include mechanisms for

”normalizing” source data before transferring it, that is to say,

for performing at least the minimal processing needed to align

incoming data with the target system’s data model and specifi-

cations, and removing duplicate or anomalous data.

Examples

Solutions range from open source platforms to expensive

commercial offerings, with some ETLs available as embedded

modules in BI and database systems. Higher-end commercial

solutions are most likely to offer features useful in Big Data

contexts, like data pipelining and partitioning, and compatibility

with SMP, MPP and grid environments.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

17

Some ETL examples include:

• Ab Initio

• CloverETL (open source)

• IBM Infosphere DataStage

• Informatica PowerCenter

• Jasper ETL (open source – Talend-powered)

• MS SQL Server Integration Services

• Oracle Warehouse Builder (embedded in Oracle 11g)

& Oracle Data Integrator

• Talend Open Studio (open source)

Caveats

In Big Data environments, the Extract process can sometimes

place an unacceptable burden on source systems, and the

Transform stage can be a bottleneck if the data is minimally

structured or very raw (most ETL platforms require an exter-

nal or add-on module to handle unstructured data). The Load

process can also be quite slow even when the code is optimized

for large volumes. This is why ETL transfers, which are widely

used to feed data warehouses, tend to be executed during

off-hours—usually overnight—resulting in unacceptable data

latency in some situations. Note, however, that many ETL

vendors are developing - or have already developed - special

editions to address these limitations, such as the Real Time Edi-

tion of Informatica’s PowerCenter (in fact, their new 9.1 release

is specially tailored for Big Data environments).

2.APIS

Primary Use

• Data exchange/integration

Definition

An Application Programming Interface (API) is a software-to-

software interface for exchanging almost every type of service

or data you can conceive, though we focus here on the use of

APIs as tools for data exchange or consolidation. In this context,

an API may enable a host system to receive (ingest) data from

other systems (a “push” API), or enable others to extract data

from it (a publishing or ”pull” API). APIs typically employ

standard programming languages and protocols to facilitate

exchanges (e.g., HTTP/REST, Java, XML). Specific instances of

packaged APIs on a system are often referred to as ”connec-

tors,“ and may be general in nature, like the Java Database Con-

nectivity (JDBC) API for connecting to most common RDBMS,

or vendor/platform specific, like a connector for IBM Lotus

Notes.

Examples

APIs are available for most large websites, like Amazon, Google

(e.g., AdSense, Maps), Facebook, Flickr, Twitter, and MySpace.

They are also available for most enterprise business applica-

tions and data management systems. Enterprise search engines

usually offer packaged connectors encompassing most com-

mon file types and enterprise systems (e.g., XML repositories,

file servers, directories, messaging platforms, and content and

document management systems).

Caveats

With Big Data loads, APIs can cause bottlenecks due to poor

design or insufficient computing or network resources, but

they’ve generally proven to be flexible and capable tools for

exchanging large-volume data and services. In fact, you could

argue the proliferation of public and private APIs has played an

important role in creating today’s Big Data world.

Nonetheless, you can still sometimes achieve better perfor-

mance with an embedded ETL tool than an API, or, in the case

of streaming data, with a messaging architecture (see Messag-

ing Systems below).

Moreover, APIs are generally not the best choice for collecting data

from the Web. A crawler is a better tool for that task (see Crawlers

below). There are three main drawbacks to APIs in the Web context:

• In spite of their proliferation, only a tiny percentage of

online data sources are currently accessible via an API.

• APIs usually offer access to only a limited portion of

a site’s data.

• Formats and access methods are at the owner’s discretion,

and may change at any time. Because of this variability

and changeability, it can take a significant amount of time

to establish and maintain individual API links, an effort

that can become completely unmanageable in Big Data

environments.

3.CRAWLERS

Primary Use

• Collection of unstructured data (often Web content) or

small packet data

Definition

A crawler is a software program that connects to a data source,

methodically extracts the metadata and content it contains, and

sends the extracted content back to a host system for index-

ation.

One type of crawler is a file system crawler. This kind of crawler

works its way recursively through computer directories, subdi-

rectories and files to gather file content and metadata (like file

path, name, size, and last modified date). File system crawlers

are used to collect unstructured content like text documents,

semi-structured content like logs, and structured content like

XML files.

Another type of crawler is a Web (HTTP/HTTPS) crawler. This

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

18

type of crawler accesses a website, captures and transmits the

page content it contains along with available metadata (page

titles, content labels, etc.), then follows links (or a set visitation

list) to proceed to the next site.

Typically a search engine is used to process, store and access

the content captured by crawlers, but crawlers can be used

with other types of data management systems (DMS).

Examples

File system crawlers are normally embedded in other software

programs (search engines, operating systems, databases, etc.).

However, there are a few available in standalone form: River-

Glass EssentialScanner, Sonar, Methabot (these are also Web

crawlers).

Web crawlers are likewise usually embedded, most often in

search engines, though there are standalone open source

crawlers available as well. The best-known Web crawlers are

those employed by public WWW search engines. Web crawler

examples include:

• Bingbot

• crawler4j

• EXALEAD Crawler

• Googlebot

• Heritrix

• Nutch

• WebCrawler

• Yahoo! Slurp

Caveats

As with other data collection tools, one needs to configure

crawls so as not to place an undue load on the source system –

or the crawler. The quality of the crawler determines the extent

to which loads can be properly managed.

It should also be kept in mind that crawlers recognize only a

limited number of document formats (e.g., HTML, XML, text,

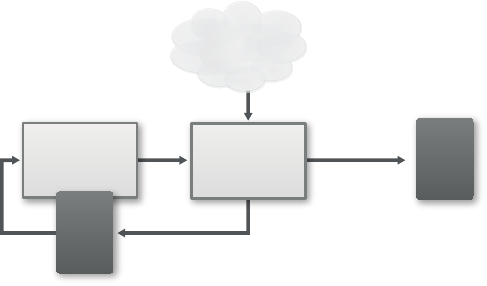

World Wide

Web

Web pages

Scheduler

Multi-threaded

downloader

Text and

metadata

URLs

URLs

Storage

Queue

Basic architecture of a standard Web crawler. Source: Wikipedia.

PDF, etc.).If you want to use a crawler to gather non-supported

document formats, you’ll need to convert data into an ingest-

ible format using tools like API connectors (standard with most

commercial search engines), source-system export tools, ETL

platforms or messaging systems.

You should also be aware of some special challenges associated

with Web crawling:

• Missed Content

Valuable data on the Web exists in unstructured, semi-struc-

tured and structured form, including Deep Web content that

is dynamically generated as a result of form input and/or

database querying. Not all engines are capable of accessing this

data and capturing its full semantic logic.

• Low Quality Content

While crawlers are designed to cast a wide net, with backend

search engines (or other DMS) being responsible for separat-

ing the wheat from the chaff, overall quality can nevertheless

be improved if a crawler can be configured to do some pre-

liminary qualitative filtering, for example, excluding certain

document types, treating the content of a site as a single page

to avoid crowding out other relevant sources (website collaps-

ing), detecting and applying special rules for duplicate and near

duplicate content, etc.

• Performance Problems

Load management is especially important in Web crawling.

If you don’t (or can’t) properly regulate the breadth and depth

of a crawl according to your business needs and resources, you

can easily encounter performance problems. You can likewise

encounter performance issues if you don’t (or can’t) employ a

refined update strategy, zeroing in on pertinent new or modi-

fied content rather than re-crawling and re-indexing all content.

Of course, regardless of the size of the crawl, you also should avoid

placing an undue load on the visited site or violating data owner-

ship and privacy policies. These infractions are usually inadvertent

and due to weaknesses in the crawler used, but they can nonethe-

less result in your crawler being blocked, or ”blacklisted,” from

public websites. For internal intranet crawls, such poor manage-

ment can cause performance and security problems.

In the case of the public Web, an RSS (“Really Simple Syndica-

tion” or “Rich Site Summary”) feed for delivering authorized,

regularly changing Web content may be available to help you

avoid some of these pitfalls. But they are not available for all

sites, and they may be incomplete or out of date.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

19

MAKING SENSE OF THE WEB

A search engine sometimes views HTML content as

an XML tree, with HTML tags as branches and text

as nodes, and uses rules written in the standard

XML query language, XPath, to extract and structure

content. This is a strategy in which the crawler plays

an important role in pre-processing content. A search

platform may also view HTML as pure text, relying on

semantic processing within the core of the engine to

give the content structure.

The first approach can produce high quality results, but

it is labor-intensive, requiring specific rules to be drafted

and monitored for each source (in the fast-changing

world of the Web, an XPath rule has an average lifespan

of only 3 months). The second approach can be applied

globally to all sites, but it is complex and error prone. An

ideal strategy balances the two, exploiting the patterns

of structure that do exist while relying on semantics to

verify and enrich these patterns.

4.MESSAGING SYSTEMS

Primary Uses

• Data exchange (often event-driven, small-packet data)

• Application/systems integration

• Data preprocessing and normalization (secondary role)

Definition

Message-Oriented Middleware (MOM) systems provide an

enabling backbone for enterprise application integration. Often

deployed within service-oriented architectures (SOA), MOM

solutions loosely couple systems and applications through a

bridge known as a message bus. Messages (data packets) man-

aged by the bus may be configured for point-to-point delivery

(message queue messaging) or broadcast to multiple subscrib-

ers (publish-subscribe messaging). They vary in their level of

support for message security, integrity and durability.

Exchanges between disparate systems are possible because

all connected systems (“peers”) share a common message

schema, set of command messages and infrastructure (often

dedicated). Data from source systems is transformed to the

degree necessary to enable other systems to consume it,

for example, binary values may need to be converted to their

textual (ASCII) equivalents, or session IDs and IP addresses may

be extracted from log files and encoded as XML records. APIs for

managing this data processing may be embedded in individual

systems connected to the bus, or they may be embedded in the

MOM platform.

Complex Event Processing (CEP)

MOM systems are often used to manage the asynchronous

exchange of event-driven, small-packet data (like barcode scans,

stock quotes, weather data, session logs and meter readings)

between diverse systems. In some instances, a Complex Event

Processing (CEP) engine may be deployed to analyze this data

in real time, applying complex trend detection, pattern match-

ing and causality modeling to streaming information and

taking action as prescribed by business rules. For instance, a

CEP engine may apply complex algorithms to streaming data

like ATM withdrawals and credit card purchases to detect and

report suspicious activity in real time or near real time. If a CEP

offers historical processing, data must be captured and stored

in a DMS.

Examples

MOM platforms may be standalone applications or they may

be bundled within broader SOA suites. Examples include:

• Apache ActiveMQ

• Oracle/BEA MessageQ

• IBM WebSphere MQ Series

• Informatica Ultra Messaging

• Microsoft Message Queuing (MSMQ)

• Solace Messaging & Content Routers

• SonicMQ from Progress Software

• Sun Open Message Queue (OpenMQ)

• Tervela Data Fabric HW & SW Appliances

• TIBCO Enterprise Message Service & Messaging Appliance

Most of the organizations above also offer a CEP engine. There

are also a number of specialty CEP vendors, including Steam-

Base Systems, Aleri-Coral8 (now under the Sybase umbrella),

UC4 Software and EsperTech. In addition, many of the NewSQL

platforms discussed in the next section incorporate CEP tech-

nology, creating uncertainty as to whether CEP will continue as

a standalone technology.

Caveats

Messaging systems were specifically designed to meet the

high-volume, high-velocity data needs of industries like fi-

nance, banking and telecommunications. Big Data volumes can

nonetheless overload some MOM systems, particularly

if the MOM is performing extensive data processing—filtering,

aggregation, transformation, etc—at the message bus level.

In such situations, performance can be improved by offloading

processing tasks to either source or destination systems. You

could also upgrade to an extreme performance solution like IBM

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

20

WebSphere MQ Low Latency Messaging or Informatica Ultra

Messaging, or to a hardware-optimized MOM solution like the

Solace, Tervela or TIBCO messaging appliances (TIBCO’s appli-

ance was developed in partnership with Solace).

B. Data Processing & Interaction

Today, classic RDBMS are complemented by a rich set of alter-

native DMS specifically designed to handle the volume, variety,

velocity and variability of Big Data collections (the so-called

“4Vs” of Big Data). These DMS include NoSQL, NewSQL and

Search-based systems. All can ingest data supplied by any of

the capture and preprocessing tools discussed in the last section

(ETLs, APIs, crawlers or messaging systems).

• NoSQL

NoSQL systems are distributed, non-relational databases

designed for large-scale data storage and for massively-parallel

data crunching across a large number of commodity servers.

They can support multiple activities, including exploratory and

predictive analytics, ETL-style data transformation, and non-

mission-critical OLTP (for example, managing long-duration or

inter-organization transactions). Their primary drawbacks are

their unfamiliarity, and, for the youngest of these largely open-

source solutions, their instability.

• NewSQL

NewSQL systems are relational databases designed to provide

ACID-compliant, real-time OLTP and conventional SQL-based

OLAP in Big Data environments. These systems break through

conventional RDBMS performance limits by employing NoSQL-

style features such as column-oriented data storage and distrib-

uted architectures, or by employing technologies like in-mem-

ory processing, SMP or MPP (some go further and integrate

NoSQL or Search components to address the 4V challenges of

Big Data). Their primary drawback is cost and rigidity (most are

integrated hardware/software appliances).

• Search-Based Platforms

As they share the same Internet roots, Big Data-capable search

platforms naturally employ many of the same strategies and

technologies as their NoSQL counterparts (distributed architec-

tures, flexible data models, caching, etc.) – in fact, some would

argue they are NoSQL solutions, but this classification would

obscure their prime differentiator: natural language processing

(NLP). It is NLP technology that enables search platforms to

automatically collect, analyze, classify and correlate diverse col-

lections of structured, unstructured and semi-structured data.

NLP and semantic technologies also enable Search platforms

to do what other systems cannot: sentiment analysis, machine

learning, unsupervised text analysis, etc. Search platforms are

deployed as a complement to NoSQL and NewSQL systems,

giving users of any skill level a familiar, simple way to search,

analyze or explore the Big Data collections they house. In

some situations, Search-Based Applications (SBAs) even offer

an easier, more affordable alternative to NoSQL and NewSQL

deployments.

As noted in the Executive Summary, the challenge with these

technologies is determining which is best suited to a particular

type of task, and to understand the relative advantages and

disadvantages of particular solutions (usability, maturity, cost,

security, technical skills required, etc.). Based on such consider-

ations, the chart below summarizes general best use (not only

use!) scenarios.

For the two categories with multiple options checked–explor-

atory and operational analytics–the choice of NoSQL, Search or

NewSQL depends on whether your target user is 1) a machine,

or 2) a human, and if it is a human being, whether that user is a

business user or an expert analyst, statistician or programmer.

The second factor is whether batch-processing or streaming

analytics are right for your needs, and if streaming, whether

your latency requirements are real-time, quasi-real-time or

simply right-time.

To learn more, let’s look more closely now at these three types

of DMS.

1.NOSQL SYSTEMS

Primary Uses

• Large-scale data processing (parallel processing over dis-

tributed systems)

• Embedded IR (basic machine-to-machine information look-

up & retrieval)

• Exploratory analytics on semi-structured data (expert level)

• Large volume data storage (unstructured, semi-structured,

small-packet structured)

Definition

NoSQL, for “Not Only SQL,” refers to an eclectic and increasing-

Big Data Task

Big Data Tool

NoSQL Search NewSQL

Storage

Structured Data X

Unstructured, Semi-structured, & Small-packet Structured Data X

Processing

Basic Data Transformation/Crunching X

Natural Language/Semantic Processing, Sentiment Analysis X

Transaction Processing (ACID OLTP & Event Stream Processing) X

Access & Interaction

Machine-to-Machine Information Retrieval (IR) X

Human-to-Machine IR/Exploration X

Agile Development of Business Applications X

Analytics

Conventional Analytics (OLAP) X

Exploratory Analytics X X X

Operational Reporting/Analytics X X

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

21

ly familiar group of non-relational data management systems

(e.g., Hadoop, Cassandra and BerkeleyDB). Common features

include distributed architectures with parallel processing across

large numbers of commodity servers, flexible data models that

can accommodate inconsistent/changeable data, and the use

of caching and/or in-memory strategies to boost performance.

They also use non-SQL languages and mechanisms to interact

with data (though some now feature APIs that convert SQL

queries to the system’s native query language or tool).

Accordingly, they provide relatively inexpensive, highly scalable

storage for high-volume, small-packet historical data like logs,

call-data records, meter readings, and ticker snapshots (i.e.,

“big bit bucket” storage), and for unwieldy semi-structured or

unstructured data (email archives, xml files, documents, etc.).

Their distributed framework also makes them ideal for massive

batch data processing (aggregating, filtering, sorting, algorith-

mic crunching (statistical or programmatic), etc.). They are good

as well for machine-to-machine data retrieval and exchange,

and for processing high-volume transactions, as long as ACID

constraints can be relaxed, or at least enforced at the applica-

tion level rather than within the DMS.

Finally, these systems are very good exploratory analytics

against semi-structured or hybrid data, though to tease out

intelligence, the researcher usually must be a skilled statistician

working in tandem with a skilled programmer.

If you want to deploy such a system in a standalone version

on commodity hardware, and you want to be able to run full-

text searches or ad-hoc queries against it, or to build business

applications on top of it, or in general simply make the data it

contains accessible to business users, then you need to deploy

a search engine along with it.

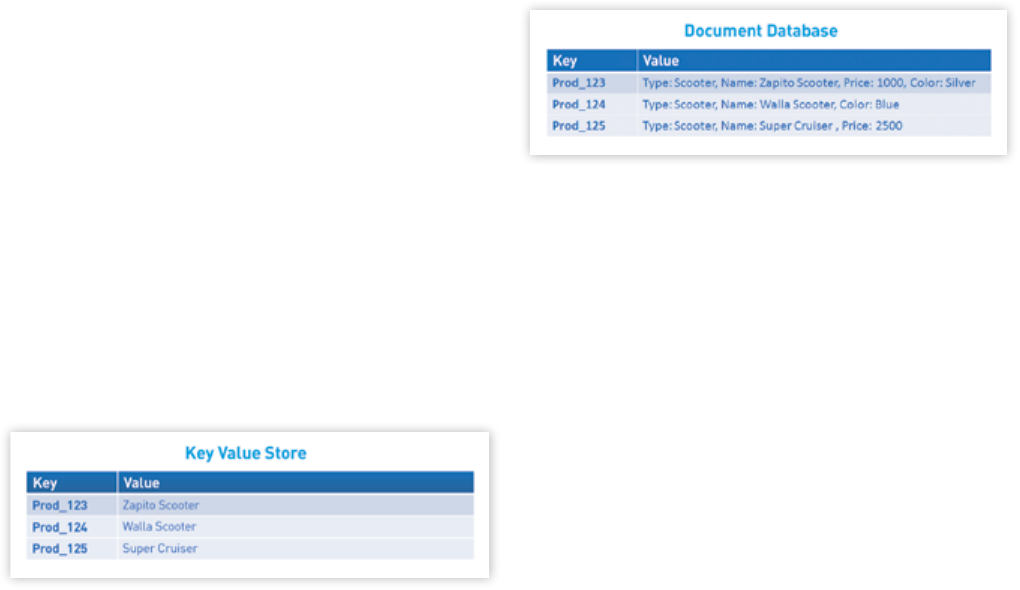

NoSQL DMS come in four basic flavors, each suited to different

kinds of tasks:

17

• Key-Value stores

• Document databases (or stores)

• Wide-Column (or Column-Family) stores

• Graph databases

Most Key-Value Stores pair simple string keys with string values for fast infor-

mation retrieval.

Key-Value Stores

Typically, these DMS store items as alpha-numeric identi-

fiers (keys) and associated values in simple, standalone tables

(referred to as “hash tables”). The values may be simple text

strings or more complex lists and sets. Data searches can usu-

ally only be performed against keys, not values, and are limited

to exact matches.

Primary Use

The simplicity of Key-Value Stores makes them ideally suited

to lightning-fast, highly-scalable retrieval of the values needed

for application tasks like managing user profiles or sessions or

retrieving product names. This is why Amazon makes extensive

use of its own K-V system, Dynamo, in its shopping cart.

Examples: Key-Value Stores

• Dynamo (Amazon)

• Voldemort (LinkedIn)

• Redis

• BerkeleyDB

• Riak

• MemcacheDB

Document Databases

Inspired by Lotus Notes, document databases were, as their

name implies, designed to manage and store documents. These

documents are encoded in a standard data exchange format

such as XML, JSON (Javascript Option Notation) or BSON (Bi-

nary JSON). Unlike the simple key-value stores described above,

the value column in document databases contains semi-struc-

tured data – specifically attribute name/value pairs. A single

column can house hundreds of such attributes, and the number

and type of attributes recorded can vary from row to row. Also,

unlike simple key-value stores, both keys and values are fully

searchable in document databases.

Document Databases contain semi-structured values that can be queried. The

number and type of attributes per row can vary, offering greater flexibility than

the relational data model.

© 2012 Dassault SystèmesA Practical Guide to Big Data: Opportunities, Challenges & Tools

22

Primary Use

Document databases are good for storing and managing Big

Data-size collections of literal documents, like text documents,

email messages, and XML documents, as well as conceptual

”documents” like de-normalized (aggregate) representations of

a database entity such as a product or customer. They are also

good for storing “sparse” data in general, that is to say irregular

(semi-structured) data that would require an extensive use of

“nulls” in an RDBMS (nulls being placeholders for missing or

nonexistent values).

Document Database Examples

• CouchDB (JSON)

• MongoDB (BSON)

• MarkLogic (XML database)

• Berkeley DB XML (XML database)

Wide-Column (or Column-Family) Stores

Like document databases, Wide-Column (or Column-Family)

stores (hereafter WC/CF) employ a distributed, column-oriented

data structure that accommodates multiple attributes per key.

While some WC/CF stores have a Key-Value DNA (e.g., the

Dynamo-inspired Cassandra), most are patterned after Google’s

Bigtable, the petabyte-scale internal distributed data storage

system Google developed for its search index and other collec-

tions like Google Earth and Google Finance.

These generally replicate not just Google’s Bigtable data stor-

age structure, but Google’s distributed file system (GFS) and

MapReduce parallel processing framework as well, as is the

case with Hadoop, which comprises the Hadoop File System

(HDFS, based on GFS) + Hbase (a Bigtable-style storage sys-

tem) + MapReduce.

Primary Uses

This type of DMS is great for:

COLUMN-ORIENTED ADVANTAGE

In row-oriented RDBMS tables, each attribute is

stored in a separate column, and each row - and

every column in that row - must be read sequen-

tially to retrieve information – a slower method

than in the column-oriented NoSQL model,

wherein large amounts of information can be

extracted from a single wide column in a single

“read” action.

• Distributed data storage, especially versioned data because

of WC/CF time-stamping functions.

• Large-scale, batch-oriented data processing: sorting, pars-

ing, conversion (e.g., conversions between hexadecimal,

binary and decimal code values), algorithmic crunching,

etc.

• Exploratory and predictive analytics performed by expert

statisticians and programmers.

If you are using a MapReduce framework, keep in mind that

MapReduce is a batch processing method, which is why Google

reduced the role of MapReduce in order to move closer to

streaming/real-time index updates in Caffeine, its latest search

infrastructure.

Wide-Column/Column-Family Examples

• Bigtable (Google)

• Hypertable

• Cassandra (Facebook; used by Digg, Twitter)

• SimpleDB (Amazon)

• Hadoop (specifically HBase database on HDFS file system;

Apache, open sourced by Yahoo!)

• Cloudera, IBM InfoSphere BigInsights, etc. (i.e., vendors

offering commercial and non-commercial Hadoop distribu-

tions, with varying degrees of vendor lock-in)

Graph Databases

Graph databases replace relational tables with structured

relational graphs of interconnected key-value pairings. They

are similar to object-oriented databases as the graphs are rep-

resented as an object-oriented network of nodes (conceptual

objects), node relationships (“edges”) and properties (object at-

tributes expressed as key-value pairs). They are the only of the

four NoSQL types discussed here that concern themselves with

relations, and their focus on visual representation of informa-

tion makes them more human-friendly than other NoSQL DMS.

Primary uses

Graph databases are more concerned with the relationships between data entities

than with the entities themselves.

A Practical Guide to Big Data: Opportunities, Challenges & Tools© 2012 Dassault Systèmes

23

In general, graph databases are useful when you are more inter-

ested in relationships between data than in the data itself: for

example, in representing and traversing social networks, gener-

ating recommendations (e.g., upsell or cross-sell suggestions),

or conducting forensic investigations (e.g., pattern-detection).

Note these DMS are optimized for relationship “traversing,”

not for querying. If you want to explore relationships as well

as querying and analyzing the values embedded within them

(and/or to be able to use natural language queries to analyze

relationships), then a search-based DMS is a better choice.

Graph Database Examples

• Neo4j

• InfoGrid

• Sones GraphDB

• AllegroGraph

• InfiniteGraph

Caveats

NoSQL systems offer affordable and highly scalable solutions

for meeting particular large-volume data storage, processing

and analysis needs. However, the following common con-

straints should be kept in mind in evaluating NoSQL solutions:

•Inconsistent maturity levels

Many are open source solutions with the normal level of volatil-

ity inherent in that development methodology, and they vary

widely in the degree of support, standardization and packaging

offered. Therefore, what one saves in licensing can sometimes

be eaten up in professional services.

• A lack of expertise

There is a limited talent pool of engineers who can deploy

and manage these systems. There are likewise relatively few