NATIONAL STRATEGY TO ADVANCE PRIVACY-PRESERVING DATA SHARING AND ANALYTICS

A Report by the

FAST-TRACK ACTION COMMITTEE ON ADVANCING PRIVACY-PRESERVING DATA SHARING AND ANALYTICS

NETWORKING AND INFORMATION TECHNOLOGY RESEARCH AND DEVELOPMENT SUBCOMMITTEE

of the

NATIONAL SCIENCE AND TECHNOLOGY COUNCIL

March 2023

About the Office of Science and Technology Policy

The Office of Science and Technology Policy (OSTP) was established by the National Science and Technology Policy, Organization, and Priorities Act of 1976 to provide the President and others within the Executive Office of the President with advice on the scientific, engineering, and technological aspects of the economy, national security, health, foreign relations, the environment, and the technological recovery and use of resources, among other topics. OSTP leads interagency science and technology policy coordination efforts, assists the Office of Management and Budget with an annual review and analysis of Federal research and development budgets, and serves as a source of scientific and technological analysis and judgment for the President concerning major policies, plans, and programs of the Federal Government. More information is available at https://www.whitehouse.gov/ostp.

About the National Science and Technology Council

The National Science and Technology Council (NSTC) is the principal means by which the Executive Branch coordinates science and technology policy across the diverse entities that make up the Federal research and development enterprise. A primary objective of the NSTC is to ensure science and technology policy decisions and programs are consistent with the President's stated goals. The NSTC prepares research and development strategies coordinated across Federal agencies to accomplish multiple national goals. The work of the NSTC is organized under committees that oversee subcommittees and working groups focused on different aspects of science and technology. More information is available at https://www.whitehouse.gov/ostp/nstc.

About the Subcommittee on Networking & Information Technology Research & Development

The Networking and Information Technology Research and Development (NITRD) Program has been the Nation's primary source of federally funded work on pioneering information technologies (IT) in computing, networking, and software since it was first established as the High-Performance Computing and Communications program following passage of the High-Performance Computing Act of 1991. The NITRD Subcommittee of the NSTC guides the multiagency NITRD Program in its work to provide the research and development foundations for ensuring continued U.S. technological leadership and for meeting the Nation's needs for advanced IT. The National Coordination Office (NCO) supports the NITRD Subcommittee and its Interagency Working Groups (IWGs) (https://www.nitrd.gov/about/).

About the Fast-Track Action Committee on Advancing Privacy-Preserving Data Sharing and Analytics

The Fast-Track Action Committee on Advancing Privacy-Preserving Data Sharing and Analytics is a multi-agency venue for building a whole-of-government approach, from research and development to pilot projects and adoption, to advance privacy-preserving data sharing and analytics technologies that enable collective data sharing and analysis while maintaining disassociability and confidentiality.

About This Document

This National Strategy to Advance Privacy-Preserving Data Sharing and Analytics is a cohesive national strategy to advance the research, development, and adoption of privacy-preserving data sharing and analytics technologies. A list of acronyms and abbreviations used in the document is included as a reference in Appendix A.

Copyright

This document is a work of the United States Government and is in the public domain (see 17 U.S.C. §105). As a courtesy, we ask that copies and distributions include an acknowledgment to OSTP. Published in the United States of America, 2023.

Note: Any mention in the text of commercial, non-profit, academic partners, or their products, or references is for information only; it is not intended to imply endorsement or recommendation by any U.S. Government agency.

National Strategy to Advance Privacy-Preserving Data Sharing and Analytics


NATIONAL SCIENCE AND TECHNOLOGY COUNCIL

Chair

Arati Prabhakar, Director, Office of Science and Technology Policy (OSTP), Assistant to the President for Science and Technology

Acting Executive Director

Kei Koizumi, Principal Deputy Director for Policy, OSTP

SUBCOMMITTEE ON NETWORKING AND INFORMATION TECHNOLOGY RESEARCH AND DEVELOPMENT (NITRD)

Co-Chairs

Margaret Martonosi, Assistant Director for Computer and Information Science and Engineering, National Science Foundation (NSF)

Kathleen (Kamie) Roberts, NITRD National Coordination Office (NCO)

Executive Secretary

Nekeia Butler, NITRD NCO

FAST-TRACK ACTION COMMITTEE (FTAC) ON ADVANCING PRIVACY-PRESERVING DATA SHARING AND ANALYTICS

Co-Chairs

Tess DeBlanc-Knowles, Senior Policy Advisor for Artificial Intelligence, OSTP

James Joshi, Program Director, Computer and Information Science and Engineering, NSF

Naomi Lefkovitz, Senior Privacy Policy Advisor, National Institute of Standards and Technology (NIST)

Katelyn McCall-Kiley, Program Director, xD, U.S. Census Bureau

Technical Coordinator

Tomas Vagoun, NITRD NCO

Writing Team Members

Tess DeBlanc-Knowles, OSTP

Dylan Gilbert, NIST

James Joshi, NSF

Naomi Lefkovitz, NIST

Aaron Mannes, DHS

Katelyn McCall-Kiley, Census Bureau

Angela Robinson, NIST

Lisa Wolfisch, GSA

FTAC Members

Gil Alterovitz, VA

Lindsey Barrett, NTIA

Tim Bond, DARPA

Rafael Fricks, VA

Simson Garfinkel, DHS

Elena Ghanaim, NIH

Karyn Gorman, DOT

Michael Hawes, Census Bureau

Jeri Hessman, NITRD NCO

Kevin Herms, ED

Wu He, NSF

Brian Ince, ODNI

Luke Keller, Census Bureau

Brian Lee, CDC

Steven Lee, DOE

Nicholas Mancini, DOS

Kathryn Marchesini, HHS

Mark Przybocki, NIST

Andrew Regenscheid, NIST

Scott Sellars, DOS

Heidi Sofia, NIH


Table of Contents

Executive Summary

Introduction

Applications of PPDSA Technologies

Privacy Risks and Harms in the Context of PPDSA

The Need for a National Strategy

1: Vision and Guiding Principles

Vision

Guiding Principles

Participants in the PPDSA Ecosystem

2: Current State

Legal and Regulatory Environment

Key Challenges

Overview of PPDSA Capabilities

3: Strategic Priorities and Recommended Actions

Strategic Priority 1: Advance Governance and Responsible Adoption

Recommendation 1.a. Establish a steering group to support PPDSA guiding principles and strategic priorities

Recommendation 1.b. Clarify the use of PPDSA technologies within the statutory and regulatory environments

Recommendation 1.c. Develop capabilities and procedures to mitigate privacy incidents

Strategic Priority 2: Elevate and Promote Foundational and Use-inspired Research

Recommendation 2.a. Develop a holistic scientific understanding of privacy threats, attacks, and harms

Recommendation 2.b. Invest in foundational and use-inspired R&D for PPDSA technologies

Recommendation 2.c. Expand and promote interdisciplinary R&D at the intersection of science, technology, policy, and law

Strategic Priority 3: Accelerate Translation to Practice

Recommendation 3.a. Promote applied and translational research and systems development

Recommendation 3.b. Pilot implementation activities within the Federal Government

Recommendation 3.c. Establish technical standards for PPDSA technologies

Recommendation 3.d. Accelerate efforts to develop standardized taxonomies, tool repositories, measurement methods, benchmarking, and testbeds

Recommendation 3.e. Improve usability and inclusiveness of PPDSA solutions

Strategic Priority 4: Build Expertise and Promote Training and Education

Recommendation 4.a. Expand institutional expertise in PPDSA technologies

Recommendation 4.b. Educate and train participants on the appropriate use and deployment of PPDSA technologies

Recommendation 4.c. Expand privacy curricula in academia

Strategic Priority 5: Foster International Collaboration on PPDSA

Recommendation 5.a. Foster bilateral and multilateral engagements related to a PPDSA ecosystem

Recommendation 5.b. Explore the role of PPDSA technologies to enable cross-border collaboration

Conclusion

Appendix A: Abbreviations and Acronyms

Endnotes


Executive Summary

Data are vital resources for solving society’s biggest problems. Today, significant amounts of data are accumulated every day, fueled by widespread data generation methods, new data collection technologies, faster means of communication, and more accessible cloud storage. Advances in computing have significantly reduced the cost of data analytics and artificial intelligence, making it even easier to use these data to derive valuable insights and enable new possibilities. However, this potential is often limited by legal, policy, technical, socioeconomic, and ethical challenges involved in sharing and analyzing sensitive information. These opportunities can only be fully realized if strong safeguards that protect privacy,[1] a fundamental right in democratic societies, underpin data sharing and analytics.

Privacy-preserving data sharing and analytics (PPDSA) methods and technologies can unlock the beneficial power of data analysis while protecting privacy. PPDSA solutions include methodological, technical, and sociotechnical approaches that employ privacy-enhancing technologies to derive value from, and enable analysis of, data to drive innovation while also providing privacy and security. However, adoption of PPDSA technologies has been slow because of challenges related to inadequate understanding of privacy risks and harms, limited access to technical expertise, trust and transparency among participants with regard to data collection and use,[2] uncertainty about legal compliance, financial cost, and the usability and technical maturity of solutions.[3]

PPDSA technologies have enormous potential, but their benefit is tied to how they are developed and used. Existing confidentiality and privacy laws and policies provide important protections to individuals and communities, and attention is needed to determine how to uphold these protections through the use of PPDSA technologies and maintain commitments to equity, transparency, and accountability. Consideration of how individuals may control the collection, linking, and use of their data should also factor into the design and use of PPDSA technologies.

Recognizing the untapped potential of PPDSA technologies, the White House Office of Science and Technology Policy (OSTP) initiated a national effort to advance PPDSA technologies.

This National Strategy to Advance Privacy-Preserving Data Sharing and Analytics (Strategy) lays out a path to advance PPDSA technologies to maximize their benefits in an equitable manner, promote trust, and mitigate risks. This Strategy takes great care to incorporate socioeconomic and technological contexts that are vital to responsible use of PPDSA technologies, including their impact on equity, fairness, and bias, as well as how they might introduce privacy harms, especially to disadvantaged groups.

This Strategy first sets out a vision for a future data ecosystem that incorporates PPDSA approaches:

Privacy-preserving data sharing and analytics technologies help advance the well-being and prosperity of individuals and society, and promote science and innovation in a manner that affirms democratic values.

This Strategy then lays out the following foundational guiding principles to achieve this vision:

• PPDSA technologies will be created and used in ways that protect privacy, civil rights, and civil liberties.

• PPDSA technologies will be created and used in a manner that stimulates responsible scientific research and innovation, and enables individuals and society to benefit equitably from the value derived from data sharing and analytics.


• PPDSA technologies will be trustworthy, and will be created and used in a manner that upholds accountability.

• PPDSA technologies will be created and used to minimize the risk of harm to individuals and society arising from data sharing and analytics, with explicit consideration of impacts on underserved, marginalized, and vulnerable communities.

Based on the guiding principles, this Strategy identifies the following strategic priorities for the public and private sectors to progress toward the vision of a future data ecosystem that effectively incorporates PPDSA technologies:

• Advance governance and responsible adoption through the establishment of a multi-partner steering group to help develop and maintain a healthy PPDSA ecosystem, greater clarity on the use of PPDSA technologies within the statutory and regulatory environments, and proactive risk mitigation measures.

• Elevate and promote foundational and use-inspired research through investments in multidisciplinary research that will advance practical deployment of PPDSA approaches and exploratory research to develop the next generation of PPDSA technologies.

• Accelerate translation to practice through pilot implementations, development of consensus technical standards, and creation of user-focused tools, decision aids, and testbeds.

• Build expertise and promote training and education through concerted efforts to expand PPDSA expertise across the public and private sectors and foster privacy education opportunities from K-12 through higher education, with particular attention to capacity building in underserved communities.

• Foster international collaboration on PPDSA through promotion of partnerships and an international policy environment that furthers the development and adoption of PPDSA technologies and supports common values while protecting national and economic security.

PPDSA technologies have the potential to catalyze American innovation and creativity by facilitating data sharing and analytics while protecting sensitive information and individuals’ privacy. Leveraging data at scale holds the power to drive transformative innovation to address climate change, financial crime, public health, human trafficking, social equity, and other challenges, yet it also holds the potential to violate privacy and undercut the fundamental rights of individuals and communities. PPDSA technologies, coupled with strong governance, can play a critical role in protecting democratic values and mitigating privacy risks and harms while enabling data sharing and analytics that will contribute to improvements in the quality of life of the American people. This Strategy serves as a roadmap for both the public and private sectors to responsibly harness the potential of PPDSA technologies and move together toward the vision that anchors this Strategy.

OSTP, in partnership with the National Economic Council, will focus and coordinate Federal activities to advance the priorities put forward in this Strategy.


Introduction

Data drive scientific and technological breakthroughs, underpin policymaking, and power the global economy. Clinicians use data to identify the best treatments for their patients, farmers use data to predict and improve farm yields, researchers use data to generate new knowledge about natural and social phenomena, and public servants use data to create evidence-based policies. Artificial Intelligence (AI) and other emerging analytics techniques are amplifying the power of data, making it easier to discover new patterns and insights ranging from better prediction models to understand and mitigate the impacts of climate change to new methods for detecting financial crime.

Although data enable science, innovation, and insights, balancing the benefits of these data-derived insights with the imperatives of privacy, security, and other values is a longstanding challenge. For example, when developing new treatment options, medical researchers may benefit from broad access to electronic health records. However, those records may contain personal health information about individual patients, and broad access to them could compromise the privacy and safety of those patients as well as rights under health privacy laws and regulations on the protection of human subjects. Similarly, when researchers access authorized data without safeguards on how they access the data, privacy-sensitive information such as their location or the specific type of information they are accessing may be revealed. In many domains, collaborations that could improve AI model training and accelerate progress must be balanced against ethical and legal privacy concerns and intellectual property protection concerns.

Over several decades, privacy researchers, including statisticians and cryptographers, have been adapting various anonymization, statistical disclosure limitation, and private computation techniques to address privacy or confidentiality needs. As demand for granular data has grown and the amount of publicly available data on individuals has proliferated, so have the challenges of protecting against re-identification and record-linkage risks in protected datasets and against membership inference attacks on analytical models built on sensitive data. New solutions to address these threats are increasingly needed.
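To make the record-linkage risk concrete, the following minimal sketch, built entirely on hypothetical records, shows how a dataset with names removed can still be re-identified by joining it with a public dataset on shared quasi-identifiers (assumed here to be ZIP code, birth date, and sex). The field names and the helper `link` are illustrative, not drawn from any real system.

```python
from collections import defaultdict

# Hypothetical "de-identified" release: names removed, quasi-identifiers kept.
released = [
    {"zip": "02139", "birth": "1980-04-12", "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth": "1975-09-30", "sex": "M", "diagnosis": "diabetes"},
    {"zip": "02140", "birth": "1980-04-12", "sex": "F", "diagnosis": "flu"},
]

# Hypothetical public dataset (e.g., a roster) that still includes names.
public = [
    {"name": "A. Smith", "zip": "02139", "birth": "1980-04-12", "sex": "F"},
    {"name": "B. Jones", "zip": "02139", "birth": "1975-09-30", "sex": "M"},
    {"name": "C. Brown", "zip": "02141", "birth": "1990-01-01", "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth", "sex")


def key(record):
    """Tuple of quasi-identifier values used as the join key."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def link(released, public):
    """Return (name, diagnosis) pairs where exactly one public record matches."""
    index = defaultdict(list)
    for person in public:
        index[key(person)].append(person["name"])
    matches = []
    for row in released:
        names = index.get(key(row), [])
        if len(names) == 1:  # a unique match re-identifies the released record
            matches.append((names[0], row["diagnosis"]))
    return matches


print(link(released, public))
```

Records whose quasi-identifier combination is unique in the public data are re-identified, which is why removing names alone is generally not sufficient protection.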

Privacy-preserving data sharing and analytics (PPDSA) solutions include technical and sociotechnical approaches that employ certain types of privacy-enhancing technologies (PETs) to generate value from and enable analytics on data while protecting privacy and security. Some PPDSA approaches allow users (e.g., researchers and physicians) to gain insights from sensitive data without exposing the original data itself, or to access shared data without being tracked or identified. Other PPDSA approaches enable data sharing by obscuring personal data or by generating synthetic versions of the original data that preserve the properties of interest while protecting individual privacy.

What is meant by data?

For the purposes of this strategy, data are elements or values that convey information. Data can range from abstract concepts to physical measurements. They exist in a wide variety of types (e.g., text, image, audio, video) and contexts (e.g., location, time), including digital and non-digital forms. Data can vary in complexity from basic representations of information and graphs to multimodal data, including data generated by complex human-computer interactions. Data that convey information about other data are known as metadata. Data may represent aggregate information or derived data. Numerous data operations, including those involving metadata, can have privacy implications for individuals and groups. Examples include data collection, access, analysis, sharing, transmission, and retention.


PPDSA technologies can unlock new forms of collaboration and new norms in the responsible use of personal data. By enabling the use of more comprehensive and diverse datasets, PPDSA technologies can help the global community tackle shared challenges and drive solutions in areas such as healthcare, climate change, financial crime, human trafficking, and pandemic response, and achieve more equitable outcomes for underserved, marginalized, and vulnerable populations.

The Fast-Track Action Committee (FTAC) on Advancing Privacy-Preserving Data Sharing and Analytics was chartered under the National Science and Technology Council’s (NSTC)[4] Networking and Information Technology Research and Development (NITRD)[5] Subcommittee in January 2022 to develop this National Strategy to Advance Privacy-Preserving Data Sharing and Analytics (Strategy) to advance the research, development, and adoption of PPDSA technologies.[6] This Strategy intends to chart a path forward for the Nation to steward responsible and accountable development and adoption of these transformative technologies.

Applications of PPDSA Technologies

Deployments of PPDSA technologies have begun to show promising results. For example, the Boston Women's Workforce Council initiated a study of the Boston-area gender wage gap to assess the compliance of Boston-area employers with the Equal Pay Act.[7] A PPDSA technique, secure multiparty computation, was used to enable the joint analysis of sensitive salary data across Boston-area employers without disclosing those data to any party external to the employer. The 2020 U.S. Census used differential privacy, another PPDSA technique, to publish aggregate statistical data while protecting the privacy of individuals.[8] In another example, researchers at the University of Pennsylvania collaborated with nine other institutions using the PPDSA technique of federated learning to develop a machine learning (ML) model to analyze magnetic resonance imaging scans of brain tumor patients and distinguish healthy brain tissue from cancerous regions.[9]
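Differential privacy, used in the 2020 Census example above, works by adding carefully calibrated random noise to published statistics so that the output reveals little about whether any one individual's record is included. As a minimal sketch, assuming a single counting query over hypothetical microdata, the classic Laplace mechanism looks like this. The Census Bureau's production system used a considerably more elaborate algorithm, and the names `laplace_noise` and `dp_count` are illustrative only.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two i.i.d.
    exponential draws, each with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1 (its
    sensitivity), so Laplace noise with scale 1/epsilon suffices.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical microdata: ages of residents in one small area.
ages = [34, 71, 68, 12, 45, 80, 66, 29, 90, 55]
rng = random.Random(2023)
print(dp_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng))
```

Smaller values of `epsilon` mean more noise and stronger privacy; larger values mean more accurate but less protective releases. The sketch ignores issues, such as floating-point side channels, that production differential privacy libraries must address.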

Case Study: Boston Women’s Workforce Council Wage Gap Study

Challenge: The Boston Women’s Workforce Council (BWWC) sought to assess the compliance of Boston-area employers with the Equal Pay Act by computing the Boston-area gender and race wage gaps. The assessment required employers to share privacy-sensitive payroll data.

Approach: A traditional approach would have required Boston-area employers to release sensitive information about employees to a trusted entity for statistical analysis. Instead, the BWWC used secure multiparty computation. Boston-area employers were able to provide the salary information of their employees in a privacy-preserving way so that accurate wage statistics were computed even though the underlying salary information was never disclosed.

Impact: Sixty-nine employers participated in the 2016 BWWC wage gap report, contributing wage information on 113,000 employees. By 2021, 134 employers contributed information on 156,000 employees' wages. This adoption of secure multiparty computation has allowed the BWWC to determine whether the gender wage gap is closing in Boston and to track the progress each year.
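Secure multiparty computation can be built from several primitives. One of the simplest is additive secret sharing, sketched here under stated assumptions: each hypothetical employer splits its private payroll total into random shares, one per computing party, so no single party learns any employer's figure, yet the parties' partial sums combine to the correct overall total. The modulus, the number of parties, and the function names are assumptions for illustration; this is not the protocol the BWWC actually deployed.

```python
import secrets

MODULUS = 2**61 - 1  # assumed public modulus; all arithmetic is mod this value


def share(value, n_parties):
    """Split `value` into n additive shares that sum to it mod MODULUS.
    Any n-1 of the shares are uniformly random and reveal nothing about it."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values, n_parties=3):
    """Simulate, in one process, n non-colluding computing parties summing
    private values that none of them sees in the clear."""
    # Each contributor splits its value and sends share j to party j.
    held = [[] for _ in range(n_parties)]
    for value in private_values:
        for j, s in enumerate(share(value, n_parties)):
            held[j].append(s)
    # Each party adds up only the shares it received...
    partial_sums = [sum(shares) % MODULUS for shares in held]
    # ...and only these partial sums are combined to reveal the total.
    return sum(partial_sums) % MODULUS


payrolls = [4_200_000, 3_150_000, 5_875_000]  # hypothetical employer totals
print(secure_sum(payrolls))  # prints 13225000
```

A wage-gap analysis could, in principle, run several such sums (for example, total salary and headcount per gender and job category) and compute averages only from the revealed totals. The sketch assumes the computing parties do not collude and follow the protocol honestly.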

What are PETs and PPDSA technologies?

For the purposes of this strategy, PETs refer to a broad set of technologies that protect privacy by removing personal information, by minimizing or reducing personal data, or by preventing undesirable processing of data, while maintaining the functionality of a system. PPDSA technologies refer to a subset of PETs that are essential for enabling data sharing and analytics in a privacy-preserving manner, such as secure multiparty computation, homomorphic encryption, differential privacy, zero-knowledge proofs, synthetic data, federated learning, and trusted execution environments, which are discussed further in the document.
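Federated learning, named in the list above and used in the University of Pennsylvania brain tumor collaboration, trains a shared model by exchanging model parameters rather than raw data. The following is a minimal, hypothetical illustration of federated averaging (FedAvg) on a one-variable linear model; the study described earlier trained far more complex models, and its actual procedure is not reproduced here. The site data and the hyperparameters `lr`, `epochs`, and `rounds` are assumptions.

```python
def local_update(weights, data, lr=0.1, epochs=50):
    """Run gradient descent on one site's data for the model y = w*x + b,
    minimizing mean squared error. Only the returned weights leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(sites, rounds=20, init=(0.0, 0.0)):
    """FedAvg-style training: send the global weights to every site, train
    locally, then average the returned weights by each site's sample count."""
    global_weights = init
    total = sum(len(data) for data in sites)
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data)) for data in sites]
        w = sum(wb[0] * n for wb, n in updates) / total
        b = sum(wb[1] * n for wb, n in updates) / total
        global_weights = (w, b)
    return global_weights


# Hypothetical sites whose private points all lie on y = 3x + 1.
site_a = [(-1.0, -2.0), (0.0, 1.0), (1.0, 4.0)]
site_b = [(0.5, 2.5), (1.5, 5.5)]
site_c = [(-2.0, -5.0), (2.0, 7.0), (0.0, 1.0), (1.0, 4.0)]
w, b = federated_averaging([site_a, site_b, site_c])
print(f"w={w:.3f}, b={b:.3f}")  # prints w=3.000, b=1.000
```

Exchanging parameters alone does not guarantee privacy, because model updates can still leak information about training data; federated learning is therefore often combined with other PPDSA techniques such as differential privacy or secure aggregation.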


In the public sector, PPDSA technologies are advancing the Federal Statistical System’s ability to safely expand access to some of the Nation’s most sensitive data as intended under the Foundations for Evidence-Based Policymaking Act of 2018 (Evidence Act).[10] They can also advance the mission articulated in the Federal Data Strategy[11] “to fully leverage the value of federal data for mission, service, and the public good”[12] by enabling Federal data to be safely and responsibly shared and analyzed across agencies for research. They can support national priorities to advance open science by enabling federally funded researchers to maximize sharing of scientific data while respecting potential legal and privacy limitations.[13] The CHIPS and Science Act of 2022[14] includes a provision that establishes a National Secure Data Service (NSDS) demonstration that will, in concert with the statistical system’s implementation of the Evidence Act, pilot a tiered access system, leveraging PPDSA technologies to facilitate access to Federal statistical data for evidence-based policymaking. There are also PPDSA applications in public health, real-time data for emergency response, traffic analysis, improved quality of human resources information, and urban planning, all of which can benefit from the analysis of sensitive data.

In the private sector, PPDSA approaches can, for example, enable companies to share data on cyber incidents without disclosing the identity of the company or competitive details of the company's operations. Banks can improve fraud detection by using PPDSA technologies for information sharing across organizational boundaries to identify suspicious patterns while better protecting customers' privacy. To enable more efficient and climate-friendly management of electricity or traffic through smart grids and smart cities, PPDSA technologies can facilitate understanding of consumption and travel patterns while not revealing privacy-sensitive information.
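For the smart grid example, one way to learn aggregate consumption without seeing any single household's reading is pairwise masking, the idea behind secure aggregation protocols. In this minimal sketch, each pair of hypothetical meters is assumed to already share a random mask; each meter reports its reading plus or minus those masks, and the masks cancel when the utility sums the reports. Real protocols also need key agreement and must handle meters that drop out, which this sketch omits; all names and values are illustrative.

```python
import secrets
from itertools import combinations

MODULUS = 2**32  # assumed; readings and masks are integers modulo this value


def pairwise_masks(n):
    """Hypothetical setup step: each pair of meters (i, j) with i < j agrees on
    a shared random mask (in practice, e.g., derived from a key exchange)."""
    return {(i, j): secrets.randbelow(MODULUS) for i, j in combinations(range(n), 2)}


def masked_reading(i, reading, masks, n):
    """Meter i adds the masks it shares with higher-numbered meters and
    subtracts those it shares with lower-numbered ones."""
    value = reading
    for j in range(n):
        if i < j:
            value += masks[(i, j)]
        elif j < i:
            value -= masks[(j, i)]
    return value % MODULUS


def aggregate(masked_values):
    """Every mask is added once and subtracted once, so only the total survives."""
    return sum(masked_values) % MODULUS


readings = [312, 287, 455, 198]  # hypothetical hourly readings in watt-hours
n = len(readings)
masks = pairwise_masks(n)
masked = [masked_reading(i, r, masks, n) for i, r in enumerate(readings)]
print(aggregate(masked))  # prints 1252
```

Each masked report on its own looks uniformly random to the aggregator, assuming the aggregator does not collude with the other meters.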

However, challenges related to inadequate understanding of how PPDSA technologies mitigate privacy risks and harms, limited access to technical expertise, a lack of trust, uncertainty about legal and policy compliance, financial cost, the usability and technical maturity of solutions, and how solutions map to different use cases have slowed adoption of PPDSA technologies.

PPDSA technologies have enormous potential to enable data collaboration, but strong policies and governance will be needed to ensure that they are developed and used in ways that protect multiple aspects of privacy, security, civil rights, and civil liberties. Many such safeguards are built into U.S. confidentiality and privacy laws and policies that apply to the Federal Government’s use of data, and attention is needed to determine how to advance these protections and the use of PPDSA technologies together (see endnotes 15 and 16 for examples). Notably, PPDSA technologies may not fully resolve questions of whether data have been legitimately obtained or used and whether the nature or quality of the data could create harmful bias in the results.

Consideration of how individuals may control the collection and use of their data, including data deletion requests, should also factor into the design and use of PPDSA technologies. PPDSA technologies that are poorly designed or deployed could undermine the potential benefits. It is essential that solutions be applied in a manner that accomplishes the desired privacy goals. If employed without verifiable performance evaluations, transparency, and accountability, PPDSA approaches could create a false sense of privacy. In the private sector, deploying PPDSA technologies without appropriate safeguards could further empower data monopolies, undermining fair markets and increasing inequity in access. It is imperative that laws, regulations, and policy keep pace with innovations in PPDSA technologies to ensure that adequate protections are upheld as PPDSA technologies are implemented and used.


Privacy Risks and Harms in the Context of PPDSA

Establishing a precise definition of privacy is difficult because privacy is inherently multidisciplinary and

encompasses diverse concepts, including confidentiality, consent, and control over multiple facets of

data and identity.

17

Privacy needs shift with context, time, and individual or cultural differences.

Understanding and defining privacy involves interdisciplinary research that brings together

technologists, social scientists, economists, and privacy and legal professionals, among others. At its

foundation, privacy safeguards basic human values such as autonomy, dignity, freedom of expression,

freedom of association, and freedom to engage in intellectual exploration. Lack of privacy can

undermine individual rights and freedoms and can worsen discrimination against marginalized groups. Ensuring security is also critical to achieving privacy protection goals.

To improve the ability of organizations to assess privacy risks, the National Institute of Standards and

Technology (NIST) has defined privacy risk as the likelihood that individuals will experience problems

resulting from data processing, and the impact should they occur.¹⁸ These problems can be expressed in

various ways, but NIST describes them as ranging from dignity-type losses, such as embarrassment or long-term reputational harm, to more tangible harms, such as discrimination; financial harm, which could result from identity theft; and losses in self-determination, which could include life-altering or life-threatening situations such as imprisonment or domestic violence.¹⁹
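NIST's definition combines two factors: how likely a data action is to cause a problem for individuals, and how severe that problem would be. The minimal sketch below scores hypothetical data actions by multiplying the two, in the spirit of NIST's Privacy Risk Assessment Methodology. The action names, the 0-to-1 likelihood scale, and the 1-to-10 impact scale are illustrative assumptions, not values NIST prescribes.

```python
from dataclasses import dataclass


@dataclass
class DataAction:
    """A hypothetical data processing step being assessed (illustrative only)."""
    name: str
    likelihood: float  # assumed probability (0 to 1) that a problem for individuals arises
    impact: int        # assumed ordinal impact if it does: 1 (minor) to 10 (severe)


def privacy_risk(action: DataAction) -> float:
    """Score privacy risk as likelihood multiplied by impact."""
    if not 0.0 <= action.likelihood <= 1.0:
        raise ValueError("likelihood must be between 0 and 1")
    if not 1 <= action.impact <= 10:
        raise ValueError("impact must be between 1 and 10")
    return action.likelihood * action.impact


def rank_by_risk(actions: list[DataAction]) -> list[tuple[str, float]]:
    """Return (name, score) pairs ordered from highest to lowest risk."""
    scored = [(a.name, privacy_risk(a)) for a in actions]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


example_actions = [
    DataAction("publish aggregate counts", likelihood=0.1, impact=3),
    DataAction("share record-level health data", likelihood=0.4, impact=9),
    DataAction("release pseudonymized access logs", likelihood=0.5, impact=5),
]
for name, score in rank_by_risk(example_actions):
    print(f"{score:4.2f}  {name}")
```

In this made-up example, the health-data action ranks first even though it is less likely than the log release, because its assumed impact is much higher. An organization would substitute its own calibrated scales.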

Privacy protection includes addressing various objectives, such as confidentiality, disassociability,

predictability, and manageability.²⁰ Data confidentiality is well understood as a means of managing

privacy risk and applies to the protection of data from unauthorized access or use, whether about an

individual, group, or entity. Confidentiality protections are written into U.S. statistical laws and are

accompanied by a suite of statistical disclosure limitation methods and tiered data access solutions. Many of the

PETs that are used for PPDSA are cryptographic techniques that inherently meet the objective of

confidentiality. The distinguishing characteristic of PPDSA approaches is their capability to meet the privacy engineering objective of disassociability, preventing even authorized entities from linking data to people's identities and thereby enhancing privacy even during authorized use of the data.

For example, these include methods that encrypt data so that a third party can perform analytics on the

encrypted information, those that add “noise” to real data or create synthetic datasets to allow for

broad public release and analysis of the data, and those that use hardware-based solutions to protect

data during analysis. Such technologies currently include, but are not limited to, secure multiparty

computation, homomorphic encryption, zero-knowledge proofs, federated learning, secure enclaves,

differential privacy, and synthetic data generation tools. When deploying PPDSA techniques, it is also

critical to protect against privacy-eroding inferences that could be drawn from the output of the secure analysis.²¹
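Secure multiparty computation is among the techniques listed above. The sketch below shows additive secret sharing, a common building block, under simplifying assumptions: honest-but-curious computing parties, a fixed public modulus, and Python's `secrets` module as the randomness source. Each data holder splits its value into random shares, so no single computing party sees an individual input, yet the parties can jointly reconstruct the total. The function names and inputs are hypothetical. The revealed total is still an output from which inferences can be drawn, which is the concern raised in the paragraph above.

```python
import secrets

MODULUS = 2**61 - 1  # assumed public modulus; all share arithmetic is done mod this value


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n_parties additive shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: list[int], n_parties: int = 3) -> int:
    """Sum private_values so that no computing party sees any individual input.

    Each data holder sends one share to each computing party. Any subset of
    fewer than n_parties shares is uniformly random, so it reveals nothing
    about the input. Each party adds the shares it received and publishes that
    partial sum; combining the partial sums reveals only the total.
    """
    received = [[] for _ in range(n_parties)]  # received[p]: shares sent to party p
    for value in private_values:
        for party, s in enumerate(share(value, n_parties)):
            received[party].append(s)
    partial_sums = [sum(shares) % MODULUS for shares in received]
    return sum(partial_sums) % MODULUS


salaries = [52_000, 61_500, 48_250]  # hypothetical private inputs from three data holders
print(secure_sum(salaries))  # the total, 161750
```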

Privacy risks can arise when privacy objectives are not appropriately met during data sharing or analysis. For example, loss of confidentiality may expose privacy-sensitive information, such as an individual's identifying information or a privacy-sensitive attribute of a record or person.

Various re-identification, data reconstruction, record linkage, or association attacks are possible even when some form of de-identification or anonymization technique is used, particularly when attackers combine the data with readily available auxiliary information.
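A record linkage attack can be sketched in a few lines. The example below uses entirely made-up data: a "de-identified" table with names removed still carries ZIP code, birth date, and sex, and an attacker joins it with a hypothetical public roster on those quasi-identifiers. A record is treated as re-identified only when its combination of quasi-identifiers matches exactly one person in the auxiliary data.

```python
# Hypothetical "de-identified" release: direct identifiers removed, quasi-identifiers kept.
deidentified = [
    {"zip": "11111", "birth_date": "1961-08-04", "sex": "F", "diagnosis": "asthma"},
    {"zip": "11111", "birth_date": "1975-02-11", "sex": "M", "diagnosis": "diabetes"},
    {"zip": "22222", "birth_date": "1961-08-04", "sex": "F", "diagnosis": "hypertension"},
]

# Hypothetical auxiliary data an attacker can obtain, such as a public roster.
auxiliary = [
    {"name": "Alice Example", "zip": "11111", "birth_date": "1961-08-04", "sex": "F"},
    {"name": "Bob Example", "zip": "11111", "birth_date": "1975-02-11", "sex": "M"},
    {"name": "Carol Example", "zip": "33333", "birth_date": "1990-01-01", "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def link_records(released, aux, keys=QUASI_IDENTIFIERS):
    """Map names to sensitive values for released records with a unique aux match."""
    index = {}
    for person in aux:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = {}
    for record in released:
        candidates = index.get(tuple(record[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match is a likely re-identification
            matches[candidates[0]] = record["diagnosis"]
    return matches


print(link_records(deidentified, auxiliary))
# {'Alice Example': 'asthma', 'Bob Example': 'diabetes'}
```

Removing names alone therefore does not prevent re-identification when quasi-identifiers remain. Techniques such as k-anonymity generalize or suppress those attributes so that each combination matches several people, which defeats this unique-match step.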

Similarly, insufficient disassociability creates the potential for inferring privacy-sensitive information

related to an individual. For example, from a published ML model, it may be possible to infer that a

particular individual's data was included in the model's training dataset. An adversary may also observe


a data user’s pattern of access to determine what type of information is being accessed and from where.

In addition, although Tor (The Onion Router) browsers provide anonymous communication, website fingerprinting attacks can predict which website a user visited based on observed traffic patterns.²² PPDSA solutions are typically designed by considering multiple

threat models that characterize how an adversary may compromise a system. Addressing the possible

risks often includes a combination of PPDSA techniques to safely carry out the analytics and to protect

privacy in released results.
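Inferences from released results do not require access to the underlying records. The sketch below shows a differencing attack on exact aggregate outputs: an adversary who knows that a target joined a dataset on day 2 subtracts two published totals to recover the target's value. It then adds random Laplace noise to each release, in the style of differential privacy, which makes the difference only a noisy estimate. The records, the query function, and the noise scale of 20,000 are illustrative assumptions, not a calibrated privacy parameter.

```python
import random

# Hypothetical records: (person_id, joined_day, income). Person 4 joins on day 2.
RECORDS = [(1, 1, 40_000), (2, 1, 55_000), (3, 1, 72_000), (4, 2, 38_000)]


def total_income(as_of_day, noise_scale=0.0, rng=None):
    """Release the total income of everyone enrolled by as_of_day, optionally noised."""
    total = sum(income for _, day, income in RECORDS if day <= as_of_day)
    if noise_scale > 0:
        rng = rng or random.Random()
        # The difference of two i.i.d. exponentials with mean noise_scale is Laplace(0, noise_scale).
        total += rng.expovariate(1 / noise_scale) - rng.expovariate(1 / noise_scale)
    return total


# Exact releases: subtracting two totals reveals person 4's income exactly.
print(total_income(2) - total_income(1))  # 38000

# Noisy releases: the same subtraction now yields only a noisy estimate.
rng = random.Random(7)
print(total_income(2, 20_000, rng) - total_income(1, 20_000, rng))
```

In a real differentially private system, the noise scale would be derived from a bound on each person's contribution and a chosen privacy parameter, and repeated queries would consume a privacy budget.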

The Need for a National Strategy

As PPDSA technologies begin making the transition to practice and the understanding of their value

grows, it is imperative that the Nation move forward in a manner that harnesses the opportunities of

these technologies and is grounded in a commitment to uphold democratic values. This Strategy sets

out a vision for the future that responsible research, development, and adoption of PPDSA technologies

can enable, and details a series of priorities and actions the public and private sectors can take to move

toward that vision. Section 1

of the Strategy lays out the vision for a future data sharing and analytics

ecosystem enabled by PPDSA technologies as well as the principles that should guide design,

development, and implementation efforts. Section 2 provides context on the current state of PPDSA

technology development and adoption, including the key challenges that must be overcome for the

broader adoption of such technologies. Section 3 presents five strategic priorities and recommends

actions within each that should be advanced to achieve the vision for the future of the PPDSA ecosystem

defined in

Section 1.

In developing the Strategy, the FTAC consulted a diverse group of participants and experts in the field

from across government, academia, the private sector, non-profit, and civil society entities. Engagement

activities included the following:

• A series of virtual roundtables, open to the public, to provide an opportunity for broad input.

Three sessions were attended by nearly 300 total participants.

• A request for information²³ was issued on June 9, 2022, and garnered 77 responses²⁴ from the public.

• A data call to Federal agencies seeking information related to their use of PPDSA technologies.

• Informational presentations from a broad range of invited researchers, developers, and

practitioners from government, academia, the private sector, and civil society.

The Strategy integrates this broad set of perspectives and experiences to set out an inclusive path

toward a future data ecosystem enabled by PPDSA technologies.


1: Vision and Guiding Principles

Vision

This Strategy strives to set the country on a path to a future where:

Privacy-preserving data sharing and analytics technologies help advance the well-being and

prosperity of individuals and society, and promote science and innovation in a manner that

affirms democratic values.

This vision of the future applies broadly to individuals, groups, and society at large, including industry,

civil society, academia, and government at all levels.

Guiding Principles

PPDSA technologies offer novel approaches to sharing and processing data to advance the physical and

emotional well-being and prosperity of individuals and groups, as well as innovation-driven economic

growth. However, on their own, PPDSA technologies cannot prevent the results of data analytics from

being used in ways that may undermine democratic values, such as privacy, freedom, equity,

accountability, and civil rights and liberties. These tenets must be reflected in all aspects of the

development life cycle of PPDSA technologies if such technologies are to realize the vision of this

Strategy.

The following principles are intended to guide the development and deployment of PPDSA technologies

in support of progress toward achieving the vision:

• PPDSA technologies will be created and used in ways that protect privacy, civil rights, and civil

liberties.

• PPDSA technologies will be created and used in a manner that stimulates responsible scientific

research and innovation and enables individuals and society to benefit equitably from the value

derived from data sharing and analytics.

• PPDSA technologies will be trustworthy and will be created and used in a manner that upholds

accountability.

• PPDSA technologies will be created and used to minimize the risk of harm to individuals and

society arising from data sharing and analytics, with explicit consideration of impacts on

underserved, marginalized, and vulnerable communities.

PPDSA technologies will be created and used in ways that protect privacy, civil rights, and civil

liberties.

While PPDSA technologies can play an important role in protecting privacy, including confidentiality of

data, they are not a complete solution. Without appropriate safeguards, the results from data sharing

and analytics could be used in ways that degrade or infringe upon the privacy of individuals and groups

or infringe upon core democratic values. Indeed, the confidentiality capabilities of PPDSA technologies

may also make them more difficult to audit than conventional system designs because they restrict the

information that parties can access or obtain. Furthermore, PPDSA technologies may not fully resolve

questions of whether the data have been legitimately obtained or used (e.g., with meaningful individual

consent) and whether the nature or quality of the data could create harmful bias in the results.


Public trust will hinge on the justified assurance that government, academic, and industry use of PPDSA

solutions will respect privacy, civil liberties, and civil rights. The future PPDSA ecosystem must be

transparent and inclusive and reflect privacy principles and preferences (e.g., individual participation

and consent management). Achieving these outcomes will require developing systems informed by

ethical, social, behavioral, and economic factors as well as human-centered design principles.

Governance around the design, development, and deployment of PPDSA technologies will provide

guardrails and accountability for responsible and ethical use. The Federal Chief Data Officers Council's

adoption of the Data Ethics Framework with civil liberties and privacy inputs provides an encouraging

way forward and helps Federal leaders and data users make privacy-conscious and ethical decisions as

they acquire, manage, and use technology and data in support of their agencies' missions.²⁵ The Blueprint for an AI Bill of Rights,²⁶ released by OSTP in October 2022, similarly sets out principles that should guide the design, use, and deployment of automated systems, including in terms of data privacy, data collection, and data use.

Globally, the Federal Government can leverage partnerships to advocate

for privacy-protecting technical standards and norms within and across international bodies.

PPDSA technologies will be created and used in a manner that stimulates responsible scientific

research and innovation and enables individuals and society to benefit equitably from the value

derived from data sharing and analytics.

This Strategy envisions a future in which PPDSA technologies enable data-driven scientific research and

innovations that will improve health, well-being, and prosperity. Individuals are not the only

beneficiaries. Competing organizations in the market sector will be able to share and process data in

ways not possible today without undermining individual privacy and intellectual property protections.

However, it is imperative that everyone benefits equitably from the insights gleaned from the data. In

keeping with the democratic values of equity and fairness, the entities creating and using these PPDSA

technologies will prioritize equitable access to these benefits.

Ensuring that everyone benefits equitably requires the proper alignment of incentives to foster a data-enabled inclusive economy. When combined, the power of economies of scale, AI, and network effects

could lead to market concentration or anticompetitive practices that undermine innovation or

incentives for the responsible use of data. PPDSA technologies have the potential to enable smaller

businesses or would-be market entrants to harness data to fuel responsible business models without

being reliant on directly collecting, accessing, or licensing large amounts of data. Appropriate policy and

technical guardrails and approaches to democratize PPDSA technologies and enhance equitable access

to data can address these risks and foster innovation and robust market competition.

PPDSA technologies will be trustworthy and will be created and used in a manner that upholds

accountability.

The use of PPDSA technologies will be built on a foundation of trust. PPDSA technologies need to be

trustworthy, meaning that from a technical perspective, they need to be able to perform as intended. As

PPDSA technologies evolve, it will be necessary to establish a framework for independent verification of

these systems even as the technology continues to mature. To mitigate risk, organizations will need to

have a means to rigorously test, evaluate, and continuously monitor the performance of PPDSA

technologies. The development of risk and performance metrics and diverse testbeds will support such

evaluation. Standards, certifications, frameworks, guidelines, and tools will further support the

trustworthiness of PPDSA technologies, and in turn, provide accountability for their use.

However, accountability for the use of PPDSA technologies requires more than trustworthiness. As

noted, the implementation of PPDSA technologies alone may not prevent the shared data and analytic


outputs from being used for non-democratic or otherwise harmful purposes. Entities engaging in data

sharing and analytics must be accountable for their decisions and actions. Embedding the design,

development, and deployment of PPDSA technologies in a larger framework that encompasses legal,

regulatory, ethical, and policy mechanisms will help to create this level of accountability. This larger

frame of accountability includes employing environmentally sustainable best practices in the design,

development, and deployment of PPDSA technologies to minimize associated harms such as

unsustainable energy use.

Technologies, laws, and policies that are developed in an open, inclusive, and transparent manner and

leverage the expertise of a broad set of actively engaged collaborators, including the public sector,

industry, academia, and civil society, will support accountability.²⁷ Design, development, and

deployment of mechanisms to maximize openness and transparency within the technologies themselves

(e.g., open-source software) should be prioritized. Organizations will also need the capabilities and

procedures to respond to incidents such as sensitive data disclosures or re-identification.

Risk management can be used to support ethical decision-making to derive the benefits described in this

Strategy while minimizing harmful impacts on individuals and society at large. Users of PPDSA

technologies need the requisite knowledge, skills, and techniques to identify, prioritize, and respond to

risks associated with data sharing and analytics activities. Therefore, effective education, awareness,

and training for the responsible and ethical use of data and technologies, including applicable academic

programs, workforce hiring pathways, and relevant government procurement activities will be needed.

Conducting and publishing privacy impact assessments is another way to support transparency,

demonstrate risk mitigation strategies, and maintain public trust. Existing risk management frameworks,

such as the NIST Privacy Framework,²⁸ Cybersecurity Framework,²⁹ and AI Risk Management Framework,³⁰ can be used to support these needs.

PPDSA technologies will be created and used to minimize the risk of harm to individuals and society

arising from data sharing and analytics, with explicit consideration of impacts on underserved,

marginalized, and vulnerable communities.

As noted previously, the use of PPDSA technologies should not contribute disproportionately to

unintended or harmful outcomes for underserved, marginalized, and vulnerable communities. Such

harms may arise in diverse ways. In some cases, the implementation of the technology may create

harm. For instance, adding noise in data to protect privacy while training an ML model typically reduces

the accuracy of the trained model; such accuracy loss can be disproportionately worse for the

underrepresented classes and subgroups in the training data.³¹ In addition, when such noising techniques are used, the bias already present in the original data may be further amplified, which can lead to disproportionately increased harm to some groups represented in the data.
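The uneven accuracy loss described above shows up even in a setting far simpler than model training. The sketch below assumes counts for two hypothetical subgroups, one large and one small, each released with Laplace noise of the same scale (1/ε for a counting query, with an assumed ε = 0.5). Because the absolute noise does not depend on group size, the relative error is far larger for the small group. This is an illustration of the mechanism, not a model of differentially private ML training.

```python
import random

EPSILON = 0.5          # assumed privacy-loss parameter
SCALE = 1.0 / EPSILON  # Laplace scale for a counting query (sensitivity 1)


def laplace(rng: random.Random, scale: float) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def mean_relative_error(true_count: int, trials: int, rng: random.Random) -> float:
    """Average |noisy - true| / true over many independent noisy releases."""
    errors = [abs(laplace(rng, SCALE)) / true_count for _ in range(trials)]
    return sum(errors) / trials


rng = random.Random(2023)
subgroups = {"majority group": 10_000, "small subgroup": 20}  # hypothetical counts
for name, count in subgroups.items():
    err = mean_relative_error(count, 10_000, rng)
    print(f"{name:>15}: mean relative error {err:.4%}")
```

The expected absolute error here is the same for both groups (the Laplace scale, 2), so the small subgroup's relative error is roughly 500 times that of the majority group.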

Ensuring equitable benefits from PPDSA technologies will require the inclusion of diverse viewpoints,

expertise, and perspectives in PPDSA research and development (R&D). This includes domain experts who can best identify the statistics and insights that must be preserved or generated from the data, so that a PPDSA technology can be applied in a way that maintains the necessary integrity of those measures.

Taxonomies and methodologies for formal analysis of relevant risks and harms based on empirical

studies will help provide foundations for advances that avoid negative outcomes. Auditing requirements

and criteria, as well as rigorous benchmarks for success, will help facilitate meaningful and accountable

implementation of this principle into the design, development, and deployment of PPDSA technologies.


Participants in the PPDSA Ecosystem

As noted, organizations that will benefit from an improved ability to share and analyze data include all

levels of government, academia, and all sectors of industry. Relevant parties also include developers of

PPDSA technologies, policymakers, and individuals who are the subject of or affected by data sharing

and analytics. Many contributors within the PPDSA ecosystem can and should partner to design,

develop, and deploy these technologies effectively and responsibly.

With the use of PPDSA technologies, individuals can feel more confident that their privacy will be

maintained and may thus be more likely to participate in data sharing and analysis activities. PPDSA

technology developers and deployers have a responsibility to communicate the technology performance

capabilities and limitations in their systems, including their determinations about acceptable privacy-accuracy or functionality tradeoffs and any supporting measures to address or help mitigate these

tradeoffs. Researchers, data scientists, or other third-party entities using the data and individuals or

groups who could be harmed, especially already marginalized or vulnerable groups, must have a role in

the design, development, and deployment process to communicate concerns or expose areas where

more research is needed to improve the technologies or implementation solutions. This level of public

participation can be facilitated through capacity building and the provision of tools and frameworks that

facilitate communication and collaboration across technical and non-technical communities.

Civil society organizations and institutions can offer multidisciplinary subject matter expertise and

diverse perspectives to support the activities necessary for achieving the vision put forward in this

Strategy. Organizations dedicated to pertinent issues such as consumer rights, civil and human rights,

privacy, data science, and emerging technologies can provide guidance to help ensure that the

development and deployment of PPDSA solutions follow the guiding principles defined above and move

the ecosystem toward the envisioned future. Civil society organizations also stand to benefit greatly

from the insights that can be gleaned from PPDSA solutions to further public interest missions and goals,

and it will be important to build their capacity to employ and engage with PPDSA technologies.


2: Current State

The theoretical foundations for many PPDSA tools and techniques (e.g., secure multiparty computation,

homomorphic encryption) appeared in the late 1970s and 1980s alongside many of the advancements in

computer science, networking, and the internet, often supported by Federal research funding. In

parallel, statistical disclosure limitation techniques were advancing within the statistical profession, led by Federal statistical agencies.³² As the global internet and computing technologies continued to mature

throughout the 1990s and 2000s, techniques to prevent the identification or re-identification of data

subjects became more relevant. Statistical disclosure limitation techniques are widely used today to

manage the re-identification risk of publicly available data products in statistical organizations within

and outside of government, along with a series of restricted access modalities such as physical and

virtual data enclaves to provide secure access to data when public products are too risky.³³ In pursuit of techniques that provide stronger, provable privacy guarantees, those same organizations are also investing in PPDSA-related technologies to expand their toolset.
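Cell suppression is one long-standing statistical disclosure limitation technique for tabular releases. The sketch below applies only primary suppression: any published count below an assumed threshold (3 here; actual rules vary by agency) is withheld. The table, the threshold, and the function name are hypothetical.

```python
THRESHOLD = 3  # assumed minimum publishable cell count; real rules vary by agency


def suppress_small_cells(table: dict[tuple[str, str], int], threshold: int = THRESHOLD):
    """Return a copy of table with counts below threshold replaced by None."""
    return {cell: (count if count >= threshold else None) for cell, count in table.items()}


# Hypothetical counts of program participants by county and age band.
counts = {
    ("County A", "18-34"): 120,
    ("County A", "65+"): 2,
    ("County B", "18-34"): 45,
    ("County B", "65+"): 7,
}

for (county, age), value in suppress_small_cells(counts).items():
    print(county, age, "D" if value is None else value)  # "D" marks a withheld cell
```

Primary suppression alone is not sufficient in practice: if row or column totals are also published, a withheld cell can be recovered by subtraction, so complementary cells must be suppressed as well. This is one reason statistical organizations are looking to techniques with formal guarantees.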

Recent years have seen a dramatic shift in the role of PPDSA technologies more broadly, from generally

beneficial to essential, although the relative maturity of each PPDSA approach varies considerably. As

the value and demand for data continue to rapidly expand, so do privacy risks, creating a need for new

solutions. Further, advances in AI systems have driven demand for data exponentially, with large

language models and generative AI models reaching unprecedented performance through

computationally intensive approaches based on large corpora of training data. Legislation and Federal

initiatives have also elevated and prioritized the use of data for evidence-based policymaking and the

sharing of scientific data from federally funded research. This landscape of technical capabilities,

foundationally based on data, evolves daily and presents a unique moment for the convergence of

techniques that fundamentally enhance privacy with those that are designed to derive insights from

data. At the same time, the proliferation of data protection and privacy regulations and laws around the

world and within the United States creates a complex regulatory environment, which may not account

for new technologies.

The following examination of the current state captures some of the critical aspects of the legal

environment, key challenges in adoption, and capabilities of specific tools and techniques.

Legal and Regulatory Environment

At the Federal level, the use and protection of personal data in the United States are governed by a set

of risk-based, sector-specific laws and regulations that extend to different data types or uses. Some,

such as the Privacy Act of 1974³⁴ and the Confidential Information Protection and Statistical Efficiency Act of 2002,³⁵ apply to how the Federal Government handles certain information. Others, such as the Health Insurance Portability and Accountability Act (HIPAA) of 1996,³⁶ the Children's Online Privacy Protection Act of 1998,³⁷ the Gramm-Leach-Bliley Act,³⁸ and the Family Educational Rights and Privacy Act of 1974,³⁹ expand the scope of application to specified private-sector entities to protect specific types of personal data. Following the enactment of the California Consumer Privacy Act of 2018,⁴⁰ a growing number of state laws also provide more general protections.

Governing a key area for adoption of PPDSA approaches, the HIPAA Privacy Rule protects the privacy of

individually identifiable health information held by covered entities, such as health care providers. The

Privacy Rule generally requires individuals' authorization, or a waiver of authorization approved by an Institutional Review Board (IRB) or Privacy Board, to use or disclose individually identifiable health information for research.⁴¹


More broadly, the Federal Policy for the Protection of Human Subjects,⁴² referred to as the “Common Rule,” sets forth requirements for the protection of human subjects in research.

Globally, following the introduction of the European Union's General Data Protection Regulation (GDPR),⁴³ data protection regulations have proliferated in the last few years, including in countries such as Brazil,⁴⁴ China,⁴⁵ Canada,⁴⁶ and Singapore.⁴⁷ These create a complex regulatory landscape and cross-jurisdictional issues that add to the challenges of developing and deploying effective PPDSA technologies for cross-border research and applications.

In response to recent growth in AI and ML research and data-driven consumer products, several regulatory agencies, such as the Food and Drug Administration,⁴⁸ the Consumer Financial Protection Bureau,⁴⁹ and the Federal Trade Commission, have announced guidance and plans for advanced rulemaking⁵⁰ to address issues related to safety and privacy in the context of AI- and data-based systems

and practices. However, similar regulatory efforts to clarify how PPDSA technologies do or do not meet

various legal restrictions related to data sharing and protection have not yet been announced.

Key Challenges

While some PPDSA technologies have seen initial deployments in commercial products and public sector

uses, others still have technical challenges to overcome to enable broader use (discussed in detail

below). In addition, a set of common challenges has hindered widespread adoption, including:

Limited awareness. Knowledge and understanding about PPDSA technologies in general are sparse

among potential users in the public and private sectors. The capabilities afforded by PPDSA technologies

are not widely known, understood, or appreciated outside of the research community. Furthermore,

many potential users who have heard of the technologies are under the impression that there are no

mature, usable PPDSA technologies.

Inconsistent definitions and taxonomy. An absence of standard definitions or taxonomies related to

privacy and PPDSA technologies makes understanding privacy risks and considerations difficult and

inconsistent among the parties. This has resulted in a lack of clarity about the appropriateness and use

of available PPDSA technologies for various scenarios and how to manage privacy alongside

functionality.

Inadequate understanding of privacy technology risks and benefits. Potential users of PPDSA

technology need a way of understanding and evaluating the risks and benefits that the technology

entails, including potential harms, and mapping technologies to relevant threat models. Few techniques

offer absolute (information-theoretic) security, meaning that they cannot be compromised by an adversary who has unlimited computational resources and time. Instead, most practical techniques offer computational security: they are designed so that an adversary with realistic resources cannot compromise them in a reasonable amount of time. Mitigating risk, therefore, involves

understanding the threat models and adversarial capabilities.
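The difference between absolute and computational security can be made concrete with a back-of-the-envelope calculation. The sketch below estimates the expected time to brute-force a random secret key, assuming the attacker must try half of all possible keys on average. The attacker speed of 10^12 guesses per second is an assumed, illustrative figure, and the estimate ignores any cryptanalytic shortcuts.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def expected_years_to_brute_force(key_bits: int, guesses_per_second: float) -> float:
    """Expected years to find a random key, searching half the key space on average."""
    expected_guesses = 2 ** (key_bits - 1)
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR


for bits in (40, 64, 128):
    years = expected_years_to_brute_force(bits, guesses_per_second=1e12)
    print(f"{bits:>3}-bit key: about {years:.3g} years")
```

Under these assumptions, a 40-bit key falls in well under a second and a 64-bit key in a few months, while a 128-bit key would take on the order of 10^18 years. A technique of this kind is breakable in principle but not within any reasonable time, which is what computational security means.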

Lack of consensus standards. Few widely adopted standards specifying the mechanisms or use of PPDSA

technologies exist at this time. Although an open initiative led by industry and academia has established a homomorphic encryption standard⁵¹ and a standard for zero-knowledge proofs⁵² is underway, more

standards that specify foundational cryptographic primitives and other PPDSA technologies would

facilitate adoption and trust in solutions. In addition, there are no widely adopted standards for data

formats, application programming interfaces, or system architectures that are necessary to facilitate the

interoperability and deployment of PPDSA technologies. Promotion of consensus standards, however,

should not dissuade those considering PPDSA solutions from leveraging the tremendous flexibility that


many PPDSA techniques offer in terms of customizing implementation to best suit their data and use

cases.

Varying stages of maturity. Existing PPDSA technologies are at varying levels of maturity, with some

having achieved initial success in terms of real-world adoption, particularly for simpler applications.

Years of theoretical research in cryptography, for instance, have led to secure multiparty computation

and homomorphic encryption techniques becoming more practical. However, these approaches still

have scalability and efficiency challenges that need to be addressed in the context of a broader set of

threat models to enable wider adoption. This is further complicated by a global regulatory environment with varying compliance requirements for personal data protection. In many cases, a

combination of various PPDSA techniques needs to be employed to ensure end-to-end privacy that is

measurable or formally provable, which remains a research challenge.

Insufficient usability of solutions. Current PPDSA approaches must be tailored to each specific

deployment and require significant technical expertise to implement. There is not an appropriate set of

usable tools and interfaces whose design is informed by human-centric design principles and social,

economic, and behavioral factors that make the solutions easily deployable, configurable, accessible,

and manageable by a diverse set of users with varying levels of capabilities. This hinders broader

adoption among organizations that do not have specialized expertise and creates high deployment

burdens, particularly in sectors that are already overtaxed or have low technical maturity. Even if

significant specialized expertise is used to deploy such a technology, it can become ineffective if it is not

used properly due to usability challenges, inconsistent assumptions about behavioral and economic

issues, or misaligned incentives.

Inadequate implementation assessment capabilities and management of tradeoffs between privacy

and other issues. Approaches that address privacy in a broader context of competing issues are

underdeveloped. For instance, techniques such as differential privacy provide formally provable privacy

guarantees but require more analysis to assess potential impacts on fairness and bias, as well as

tradeoffs with accuracy in different implementations, which can affect the degree of utility for data users. Effectively assessing the implementation of PPDSA solutions in terms of vulnerability in real-world deployment is another challenge, and the lack of standards makes it difficult for third-party auditors to evaluate the strength of privacy protections. There is also a lack of mature approaches to measuring privacy risks and harms, including metrics that could help assess the efficacy of solutions and devise risk-aware solutions.
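The privacy-accuracy tradeoff mentioned for differential privacy can be quantified for the standard Laplace mechanism, shown here as a minimal illustration for a single numeric query f. Here x ≃ x′ ranges over neighboring datasets that differ in one individual's record, and Δf is the most that one record can change f. Releasing f(x) plus Laplace noise of scale Δf/ε satisfies ε-differential privacy, and the expected absolute error grows as ε shrinks:

```latex
\begin{aligned}
M(x) &= f(x) + Z, \qquad Z \sim \operatorname{Lap}\!\left(0,\ \frac{\Delta f}{\varepsilon}\right),
\qquad \Delta f = \max_{x \simeq x'} \lvert f(x) - f(x') \rvert, \\
\mathbb{E}\,\lvert M(x) - f(x) \rvert &= \mathbb{E}\,\lvert Z \rvert = \frac{\Delta f}{\varepsilon}.
\end{aligned}
```

For a counting query, Δf = 1, so ε = 1 gives an expected absolute error of 1, while the stronger setting ε = 0.1 gives an expected error of 10. The same absolute error is proportionally larger for small counts, which connects this tradeoff to the fairness concerns noted above.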

Lack of clarity around regulatory compliance. Cross-functional partners (i.e., security, privacy, and

general counsel) may struggle to determine whether the use of PPDSA technology is consistent with legal or regulatory requirements. Current laws and regulations do not adequately envision a role for PPDSA technologies, and neither legal standards for privacy protection nor interpretations of those standards directly address whether the use of PPDSA technologies is sufficient to satisfy their requirements.

Impact on individuals, marginalized groups, and society. The use of PPDSA technology does not guarantee the safe use of data, nor can it guard against the illegitimate collection of data. Furthermore, certain approaches could produce analytical results that amplify or introduce bias, and such results could lead to decisions that are discriminatory or disproportionately harmful to certain subgroups represented in the data.

PPDSA technologies in the early stages of development may be poorly understood and may be deployed or adopted in privacy-invasive and potentially unsafe ways that risk re-identification.


The above list is not meant to be exhaustive, and these challenges must also be addressed in the context of today's fast-evolving technological landscape.

Overview of PPDSA Capabilities

As a result of years of research, various PPDSA technologies have emerged that show promise for

helping achieve the vision put forward by this Strategy. These include cryptographic and non-

cryptographic techniques and span hardware- and software-based approaches. They address various

privacy priorities, such as pseudonymization or anonymization of sensitive data and statistical disclosure

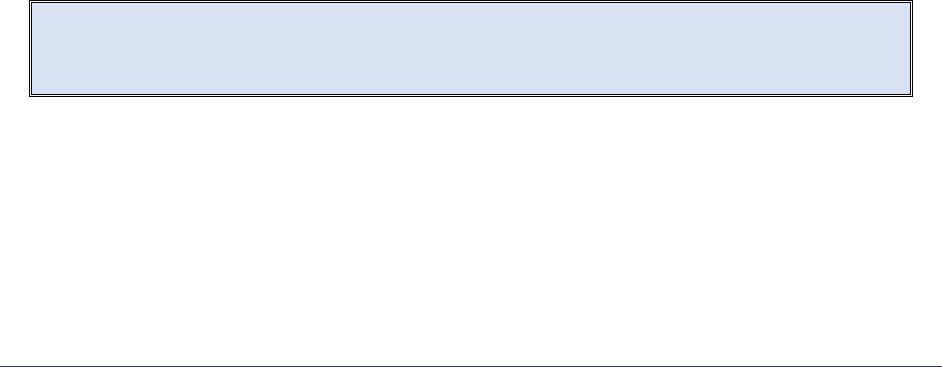

control and promote confidentiality and disassociability. Table 1 reviews the key existing technical

approaches that are essential for PPDSA and an in-depth discussion follows.

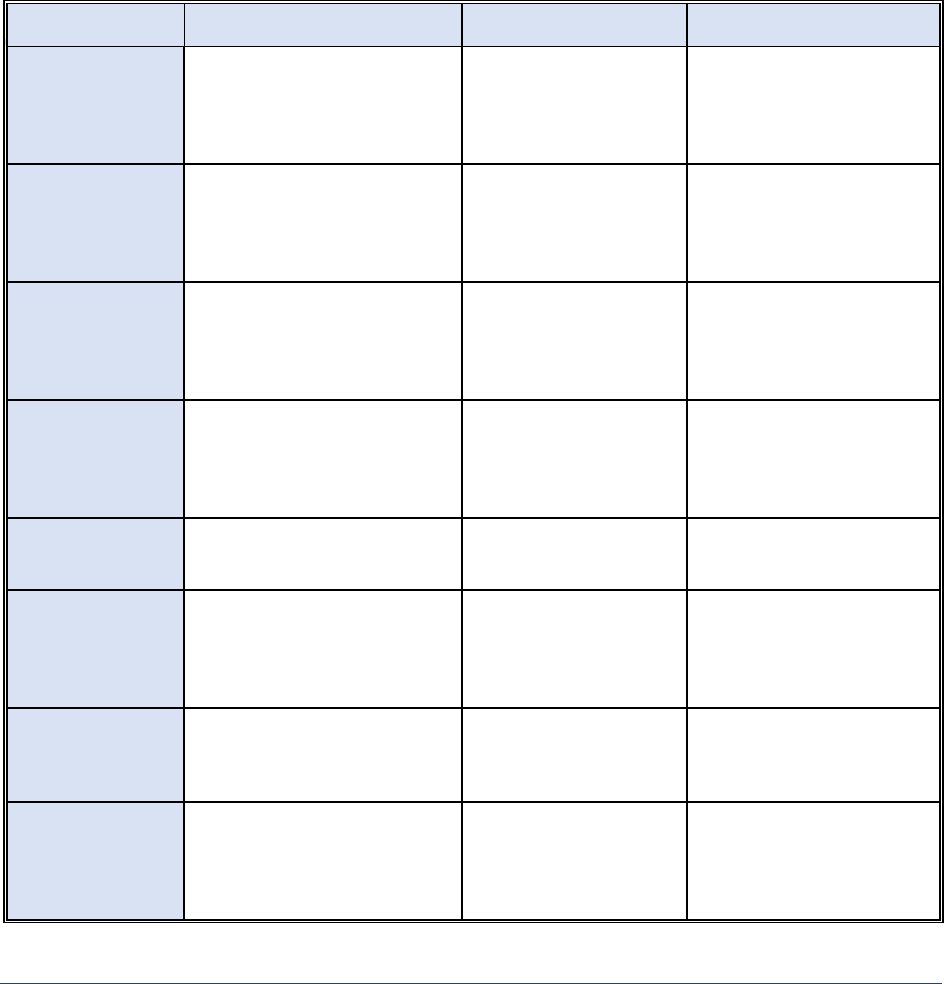

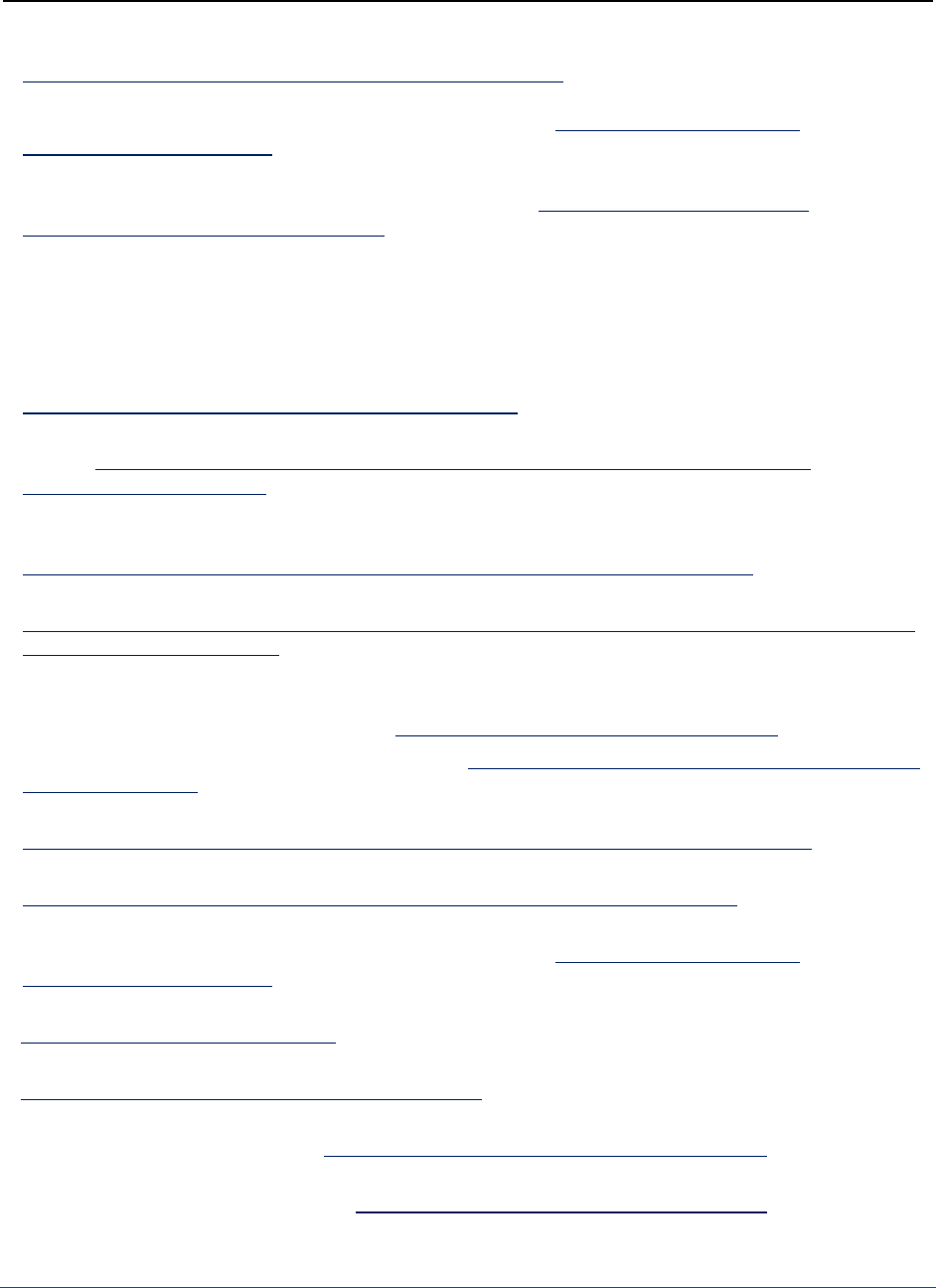

Table 1. Overview of Key Technical Approaches Essential for PPDSA.

| Technique | Description | Value | Limitations |
|---|---|---|---|
| K-anonymity | Transforms a dataset so that, in the published version, each individual is indistinguishable from at least k - 1 others | Reduces the risk of re-identification | Vulnerable to re-identification attacks if additional public information is available |
| Differential Privacy | Adds noise to the original data in such a way that an adversary cannot tell whether any individual's data was or was not included in the original dataset | Provides a formal guarantee of privacy by reducing the likelihood of data reconstruction or linkage attacks | Limited to simpler data types; challenge in managing the tradeoff between privacy, accuracy, and utility of data |
| Synthetic Data | Information that is artificially manufactured as an alternative to real-world data | Preserves the overall properties or characteristics of the original dataset | May still disclose privacy-sensitive information contained in the original dataset; difficult to mirror real-world data |
| Secure Multiparty Computation | Allows multiple parties to jointly perform an agreed computation over their private data, while allowing each party to learn only the final computational output | Increases the ability to compute over distributed datasets without revealing original data | Higher computational and communication costs/burdens, and difficult to scale |
| Homomorphic Encryption | Allows computing over encrypted data to produce results in an encrypted form | Only authorized users can see original and/or computed data | Higher computational cost and time |
| Zero-Knowledge Proof | Allows one party to prove to another party that a particular statement is true without revealing privacy-sensitive information | Increases ability to validate information without disclosing sensitive information | Cost and scalability |
| Trusted Execution Environment | Creates a secure, isolated execution environment parallel to the main operating system to process sensitive data | Allows faster secure analytics on data compared to encryption-based techniques | Introduces other ways sensitive data can leak |
| Federated Learning | Allows multiple entities to collaborate in building an ML model on distributed data without sharing original data | Minimizes data sharing while training a combined model | Various data reconstruction or inference attacks are still possible; requires consistency across datasets held by multiple entities |
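Federated learning is the only approach in Table 1 that this section does not discuss further, so the sketch below illustrates the idea in the style of federated averaging: each client trains on its own data and shares only an updated model parameter, which the server averages weighted by dataset size. The one-parameter linear model, learning rate, round counts, and client datasets are all hypothetical; real deployments often add protections such as secure aggregation, since, as the table notes, shared parameters can still leak information.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave their owner


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 20) -> float:
    """Gradient descent on mean squared error for the one-parameter model y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients: List[Dataset], rounds: int = 10) -> float:
    """Each round: clients train locally, then the server averages their parameters."""
    w_global = 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Only the updated parameter is sent to the server, never the raw records.
        local = [local_update(w_global, d) for d in clients]
        # Weight each client's parameter by the size of its local dataset.
        w_global = sum(len(d) * w for d, w in zip(clients, local)) / total
    return w_global


# Hypothetical clients whose private data all follow y = 3x.
CLIENTS: List[Dataset] = [
    [(x, 3 * x) for x in (1.0, 2.0, 3.0)],
    [(x, 3 * x) for x in (4.0, 5.0)],
    [(x, 3 * x) for x in (0.5, 1.5)],
]

if __name__ == "__main__":
    print(round(federated_averaging(CLIENTS), 4))
```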


Data anonymization and statistical disclosure limitation techniques. These techniques address privacy risks in publishing data by transforming the original data to limit the disclosure of sensitive information or prevent the re-identification of individuals or groups represented in the data. A challenge inherent in these approaches is balancing privacy goals against the accuracy of the published data when it is used for various types of analysis. The capabilities of anonymization and disclosure limitation techniques are currently limited to simpler types of data, such as tabular data.
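A common transformation behind these techniques is generalization: coarsening attributes that could be combined to identify someone (often called quasi-identifiers). The sketch below assumes hypothetical toy records in which age and ZIP code are the quasi-identifiers; the band width and number of retained ZIP digits are illustrative parameters, not recommended settings.

```python
from typing import Dict, List

# Hypothetical toy records; "age" and "zip" act as quasi-identifiers.
RECORDS: List[Dict] = [
    {"age": 34, "zip": "20500", "diagnosis": "flu"},
    {"age": 37, "zip": "20502", "diagnosis": "asthma"},
    {"age": 31, "zip": "20510", "diagnosis": "flu"},
    {"age": 52, "zip": "20910", "diagnosis": "diabetes"},
    {"age": 58, "zip": "20912", "diagnosis": "flu"},
]


def generalize_age(age: int, width: int = 10) -> str:
    """Replace an exact age with a band, e.g. 34 -> '30-39' for width 10."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalize_zip(zip_code: str, keep: int = 3) -> str:
    """Keep the leading digits of a ZIP code and mask the rest, e.g. '20500' -> '205**'."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)


def generalize(records: List[Dict], width: int = 10, keep: int = 3) -> List[Dict]:
    """Coarsen the quasi-identifiers; the sensitive attribute is left unchanged."""
    return [
        {
            "age": generalize_age(r["age"], width),
            "zip": generalize_zip(r["zip"], keep),
            "diagnosis": r["diagnosis"],
        }
        for r in records
    ]


def distinct_quasi_ids(records: List[Dict]) -> int:
    """Count distinct (age, zip) combinations; fewer means records are harder to single out."""
    return len({(r["age"], r["zip"]) for r in records})


if __name__ == "__main__":
    published = generalize(RECORDS)
    print(distinct_quasi_ids(RECORDS), "->", distinct_quasi_ids(published))
```

In this toy data, every original record is unique on (age, ZIP), while the generalized version collapses them into two groups. The cost is accuracy: an analyst can no longer compute, for example, an exact mean age from the published table.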

• k-anonymity is an anonymization technique that transforms a dataset so that, in the published version, each individual is indistinguishable from at least k - 1 others with respect to attributes that could identify them. Extensive research on k-anonymity and its extensions has shown that such techniques are vulnerable to reconstruction or linkage attacks[53] that can lead to the re-identification of a data subject if an adversary has relevant auxiliary information. k-anonymity has recently been used for the publication of COVID-19[54] datasets by the U.S. Centers for Disease Control and Prevention.[55]

• Differential privacy, a data perturbation approach, adds noise to the original data in such a way that an adversary cannot tell whether any individual's data was or was not included in the original dataset. Such approaches aim to ensure that statistical information computed from the published data remains statistically valid. Differential privacy has gained popularity because of its rigorous mathematical foundation. However, initial deployments have not yet produced a generalizable method for setting the privacy parameter, which controls the strength of the privacy guarantee, while optimizing for accurate analytic results. Differential privacy was used by the Census Bureau in the publication of 2020 Census data (see case study below).

• The synthetic data approach involves generating simulated data that preserves the overall statistical properties or characteristics of the original dataset without revealing its sensitive information. This approach is increasingly popular for training AI-based applications for image recognition[56] and healthcare,[57] as large volumes of synthetic data can easily be made available for training AI models. Research shows that synthetic data may still disclose privacy-sensitive information contained in the original dataset unless techniques such as differential privacy are used.[58]
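The k-anonymity property described above can be checked mechanically: group records that share the same quasi-identifier values and take the size of the smallest group. The sketch below does this for a hypothetical, already generalized table, and also flags groups whose members all share one sensitive value. That is one simple way auxiliary information can defeat k-anonymity (often called a homogeneity attack): anyone known to fall in such a group has their sensitive value revealed. All names and data here are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Hypothetical published table, already generalized on "age" and "zip".
PUBLISHED: List[Dict] = [
    {"age": "30-39", "zip": "205**", "diagnosis": "flu"},
    {"age": "30-39", "zip": "205**", "diagnosis": "asthma"},
    {"age": "30-39", "zip": "205**", "diagnosis": "flu"},
    {"age": "50-59", "zip": "209**", "diagnosis": "diabetes"},
    {"age": "50-59", "zip": "209**", "diagnosis": "diabetes"},
]


def equivalence_classes(records: List[Dict], quasi_ids: Sequence[str]) -> Dict[Tuple, List[Dict]]:
    """Group records that share the same values on every quasi-identifier."""
    classes: Dict[Tuple, List[Dict]] = defaultdict(list)
    for r in records:
        classes[tuple(r[q] for q in quasi_ids)].append(r)
    return classes


def k_anonymity(records: List[Dict], quasi_ids: Sequence[str]) -> int:
    """Largest k for which the table is k-anonymous: the size of its smallest class."""
    return min(len(g) for g in equivalence_classes(records, quasi_ids).values())


def homogeneous_classes(records: List[Dict], quasi_ids: Sequence[str], sensitive: str) -> List[Tuple]:
    """Classes whose members all share one sensitive value.

    Auxiliary knowledge that places a person in such a class reveals that
    person's sensitive value, even though the table is k-anonymous.
    """
    return [
        key
        for key, g in equivalence_classes(records, quasi_ids).items()
        if len({r[sensitive] for r in g}) == 1
    ]


if __name__ == "__main__":
    qi = ["age", "zip"]
    print("k =", k_anonymity(PUBLISHED, qi))
    print("homogeneous:", homogeneous_classes(PUBLISHED, qi, "diagnosis"))
```

Here the table is 2-anonymous, yet an adversary who knows a person is in their 50s and lives in a 209xx ZIP code still learns that person's diagnosis.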

Cryptographic techniques. Years of research have resulted in the development of various cryptographic techniques that support computation over private data. Scalability and efficiency remain key challenges for the practical deployment of many cryptographic techniques: computation and communication costs become challenging for complex analytics (e.g., deep learning) and as the number of collaborating parties or the size of the data increases.
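A simple way to see how communication grows with the number of parties is a secure sum built on additive secret sharing, one of the basic building blocks of secure multiparty computation. The sketch below assumes semi-honest parties (they follow the protocol but are curious) and private channels between every pair; the modulus and function names are hypothetical, and the "messages" are only counted, not actually sent over a network.

```python
import secrets
from typing import List, Tuple

MODULUS = 2**61 - 1  # hypothetical public modulus; must exceed any possible total


def share(secret: int, n_parties: int) -> List[int]:
    """Split a secret into n additive shares that sum to it modulo MODULUS.

    Any n - 1 of the shares are uniformly random and reveal nothing about the secret.
    """
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares


def secure_sum(inputs: List[int]) -> Tuple[int, int]:
    """Compute sum(inputs) without any party seeing another party's input.

    Returns (total, messages), where messages counts point-to-point transfers.
    """
    n = len(inputs)
    # all_shares[i][j] is the share of party i's input held by party j.
    all_shares = [share(x, n) for x in inputs]
    messages = n * (n - 1)  # round 1: each party sends one share to every other party
    # Each party j adds up the shares it holds; together these only encode the total.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    messages += n * (n - 1)  # round 2: each party broadcasts its partial sum
    total = sum(partial_sums) % MODULUS
    return total, messages


if __name__ == "__main__":
    print(secure_sum([52_000, 61_000, 75_000]))
    for n in (3, 10, 100):
        print(n, "parties:", secure_sum([1] * n)[1], "messages")
```

The total is exact, but the number of messages grows as 2n(n - 1), so going from 10 to 100 parties multiplies communication roughly a hundredfold, in line with the scaling concern above. Dividing the total by the number of parties gives a shared average, as in the average-salary example in this section.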

• Secure multiparty computation allows multiple parties to jointly perform an agreed computation over their private data while allowing each party to learn only the final computational output. Computations can be agreed for a common (public) output, such as everyone learning an average salary, or can be tailored to distinct private outputs per party.[59] After over 30 years of theoretical research, secure multiparty computation is increasingly seen as a viable PPDSA solution that can support simple privacy-preserving computational tasks such as computing statistics or regression analysis. A special case of secure multiparty computation is private set intersection, which identifies overlapping elements from multiple datasets without revealing any other sensitive data.

• Homomorphic encryption allows computing over encrypted data to produce results in an encrypted form; only users with the appropriate keys can then decrypt the result. For example, additive homomorphic encryption allows computing, from the encryptions of two values, the encryption of the sum of their original values. Fully homomorphic encryption supports arbitrary computation over encrypted data. Recent efforts led to the development of a homomorphic encryption standard in 2018.[60]

• Zero-knowledge proof is another well-known technique that allows one party, called a prover, to convince another party, a verifier, that a particular statement is true without revealing privacy-sensitive information.[61] For example, some digital assets use zero-knowledge proofs to prove statements about transactions and balances without revealing additional metadata.[62] Zero-knowledge proofs can thus be used to support auditability or compliance checks. More recently, zero-knowledge proof schemes have been applied to convolutional neural networks[63] to prove that a prediction was actually computed by the model without disclosing any information about the model.

• Functional encryption allows a party holding an appropriate key to compute the value of a mathematical function over some data from the encrypted form of that data, without learning the data itself. Special cases of functional encryption include identity-based encryption and attribute-based encryption, which allow encrypted data to be decrypted only by parties that satisfy specific criteria (identity or attributes).
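The additive homomorphic property described above can be demonstrated with the textbook Paillier cryptosystem, a well-known additively homomorphic scheme chosen here only for brevity; it does not support the arbitrary computation of fully homomorphic encryption. The primes below are hypothetical toy parameters (two Mersenne primes) that are far too small and structured for real use, and the function names are illustrative.

```python
import math
import secrets

# Toy parameters: insecure by design; real keys use large random primes.
P, Q = 2**31 - 1, 2**61 - 1  # both Mersenne primes
N = P * Q
N2 = N * N
LAM = math.lcm(P - 1, Q - 1)  # Carmichael function of N
MU = pow(LAM, -1, N)          # valid because the generator is g = N + 1


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N as g^m * r^N mod N^2 with g = N + 1 and fresh randomness r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    """Recover m = L(c^LAM mod N^2) * MU mod N, where L(x) = (x - 1) // N."""
    x = pow(c, LAM, N2)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts yields an encryption of the sum of the plaintexts (mod N)."""
    return (c1 * c2) % N2


if __name__ == "__main__":
    c = add_encrypted(encrypt(20), encrypt(22))
    print(decrypt(c))
```

The party adding the ciphertexts never sees 20 or 22; only the key holder (who knows LAM and MU) can decrypt the sum. Because encryption is randomized, encrypting the same value twice gives different ciphertexts.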

Trusted execution environments. A trusted execution environment creates a secure, isolated execution environment parallel to the main operating system[64] to process sensitive data. It provides verifiable assurance that sensitive data processing and code execution are secure, along with trusted input-output operations and secure storage whose data can be accessed only by authorized entities. Trusted execution environments may use hardware- or virtualization-based isolation techniques, and they can be made available on cloud platforms. Trusted execution environments provide a faster alternative to cryptographic approaches but bring additional privacy risks, such as side-channel leakage of information.