National Telecommunications and Information Administration

Artificial Intelligence

Accountability Policy Report

MARCH 2024

With thanks to Ellen P. Goodman,

principal author, and the NTIA staff for

their efforts in drafting this report.

Contents

Executive Summary

1. Introduction

2. Requisites for AI Accountability: Areas of Significant Commenter Agreement

2.1. Recognize potential harms and risks

2.2. Calibrate accountability inputs to risk levels

2.3. Ensure accountability across the AI lifecycle and value chain

2.4. Develop sector-specific accountability with cross-sectoral horizontal capacity

2.5. Facilitate internal and independent evaluations

2.6. Standardize evaluations as appropriate

2.7. Facilitate appropriate access to AI systems for evaluation

2.8. Standardize and encourage information production

2.9. Fund and facilitate growth of the accountability ecosystem

2.10. Increase federal government role

3. Developing Accountability Inputs: A Deeper Dive

3.1. Information flow

3.1.1. AI system disclosures

3.1.2. AI output disclosures: use, provenance, adverse incidents

3.1.3. AI system access for researchers and other third parties

3.1.4. AI system documentation

3.2. AI System evaluations

3.2.1. Purpose of evaluations

3.2.2. Role of standards

3.2.3. Proof of claims and trustworthiness

3.2.4. Independent evaluations

3.2.5. Required evaluations

3.3 Ecosystem requirements

3.3.1. Programmatic support for auditors and red-teamers

3.3.2. Datasets and compute

3.3.3. Auditor certification

4. Using Accountability Inputs

4.1 Liability rules and standards

4.2. Regulatory enforcement

4.3. Market development

5. Learning From Other Models

5.1 Financial assurance

5.2 Human rights and Environmental, Social, and Governance (ESG) assessments

5.3 Food and drug regulation

5.4 Cybersecurity and privacy accountability mechanisms

6. Recommendations

6.1 Guidance

6.1.1 Audits and auditors: Federal government agencies should work with stakeholders as appropriate to create guidelines for AI audits and auditors, using existing and/or new authorities.

6.1.2 Disclosure and access: Federal government agencies should work with stakeholders to improve standard information disclosures, using existing and/or new authorities.

6.1.3 Liability rules and standards: Federal government agencies should work with stakeholders to make recommendations about applying existing liability rules and standards to AI systems and, as needed, supplementing them.

6.2. Support

6.2.1 People and tools: Federal government agencies should support and invest in technical infrastructure, AI system access tools, personnel, and international standards work to invigorate the accountability ecosystem.

6.2.2 Research: Federal government agencies should conduct and support more research and development related to AI testing and evaluation, tools facilitating access to AI systems for research and evaluation, and provenance technologies, through existing and new capacity.

6.3. Regulatory Requirements

6.3.1. Audits and other independent evaluations: Federal agencies should use existing and/or new authorities to require as needed independent evaluations and regulatory inspections of high-risk AI model classes and systems.

6.3.2 Cross-sectoral governmental capacity: The federal government should strengthen its capacity to address cross-sectoral risks and practices related to AI.

6.3.3. Contracting: The federal government should require that government suppliers, contractors, and grantees adopt sound AI governance and assurance practices for AI used in connection with the contract or grant, including using AI standards and risk management practices recognized by federal agencies, as applicable.

Appendix A: Glossary of Terms

In April 2023, the National Telecommunications and In-

formation Administration (NTIA) released a Request for

Comment (“RFC”) on a range of questions surrounding AI

accountability policy. The RFC elicited more than 1,400

distinct comments from a broad range of stakeholders.

In addition, we have met with many interested parties

and participated in and reviewed publicly available dis-

cussions focused on the issues raised by the RFC.

Based on this input, we have derived eight major policy

recommendations, grouped into three categories: Guid-

ance, Support, and Regulatory Requirements. Some of

these recommendations incorporate and build on the

work of the National Institute of Standards and Tech-

nology (NIST) on AI risk management. We also propose

building federal government regulatory and oversight

capacity to conduct critical evaluations of AI systems

and to help grow the AI accountability ecosystem.

While some recommendations are closely linked to oth-

ers, policymakers should not hesitate to consider them

independently. Each would contribute to the AI account-

ability ecosystem and mitigate the risks posed by accel-

erating AI system deployment. We believe that providing

targeted guidance, support, and regulations will foster

an ecosystem in which AI developers and deployers

can properly be held accountable, incentivizing the ap-

propriate management of risk and the creation of more

trustworthy AI systems.

Independent evaluation, including red-teaming, au-

dits, and performance evaluations of high-risk AI sys-

tems can help verify the accuracy of material claims

made about these systems and their performance

against criteria for trustworthy AI. Creating evaluation

standards is a critical piece of auditing, as is trans-

parency about methodology and criteria for auditors.

Much more work is needed to develop such standards

and practices; near-term work, including under the

AI EO, will contribute to developing these standards

and methodologies.

Consequences for responsible parties, building on in-

formation sharing and independent evaluations, will

require the application and/or development of levers

– such as regulation, market pressures, and/or legal lia-

bility – to hold AI entities accountable for imposing un-

acceptable risks or making unfounded claims.

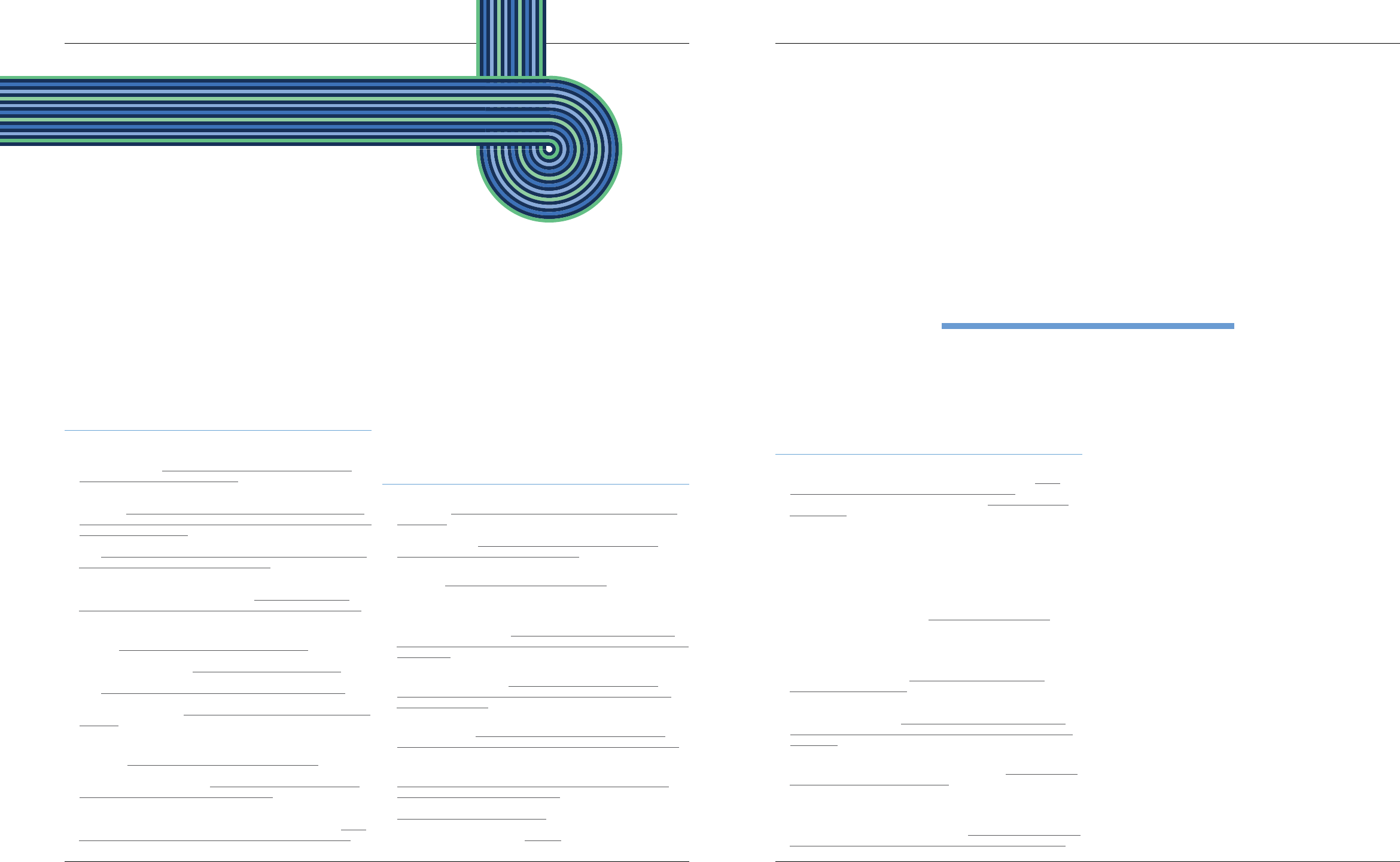

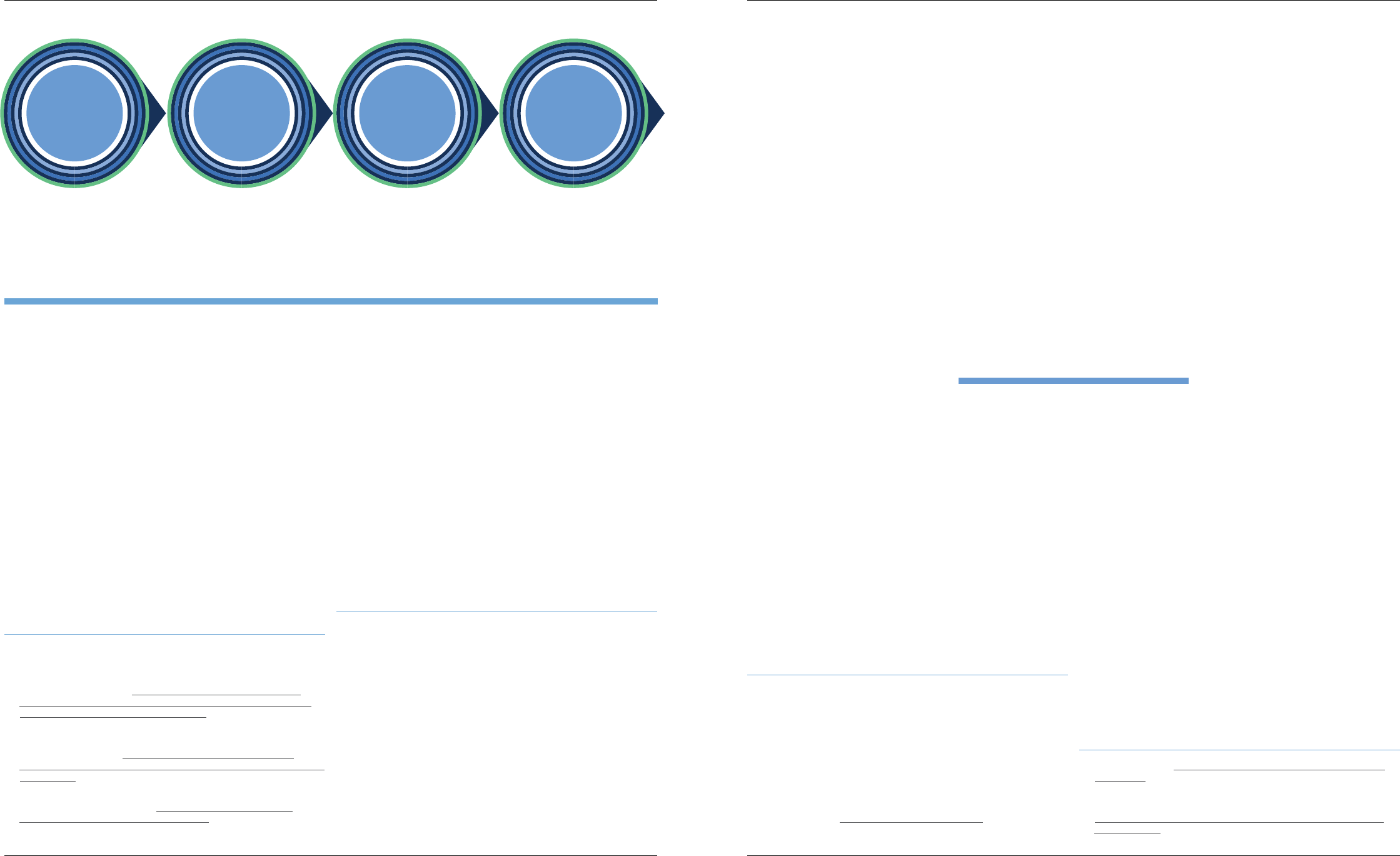

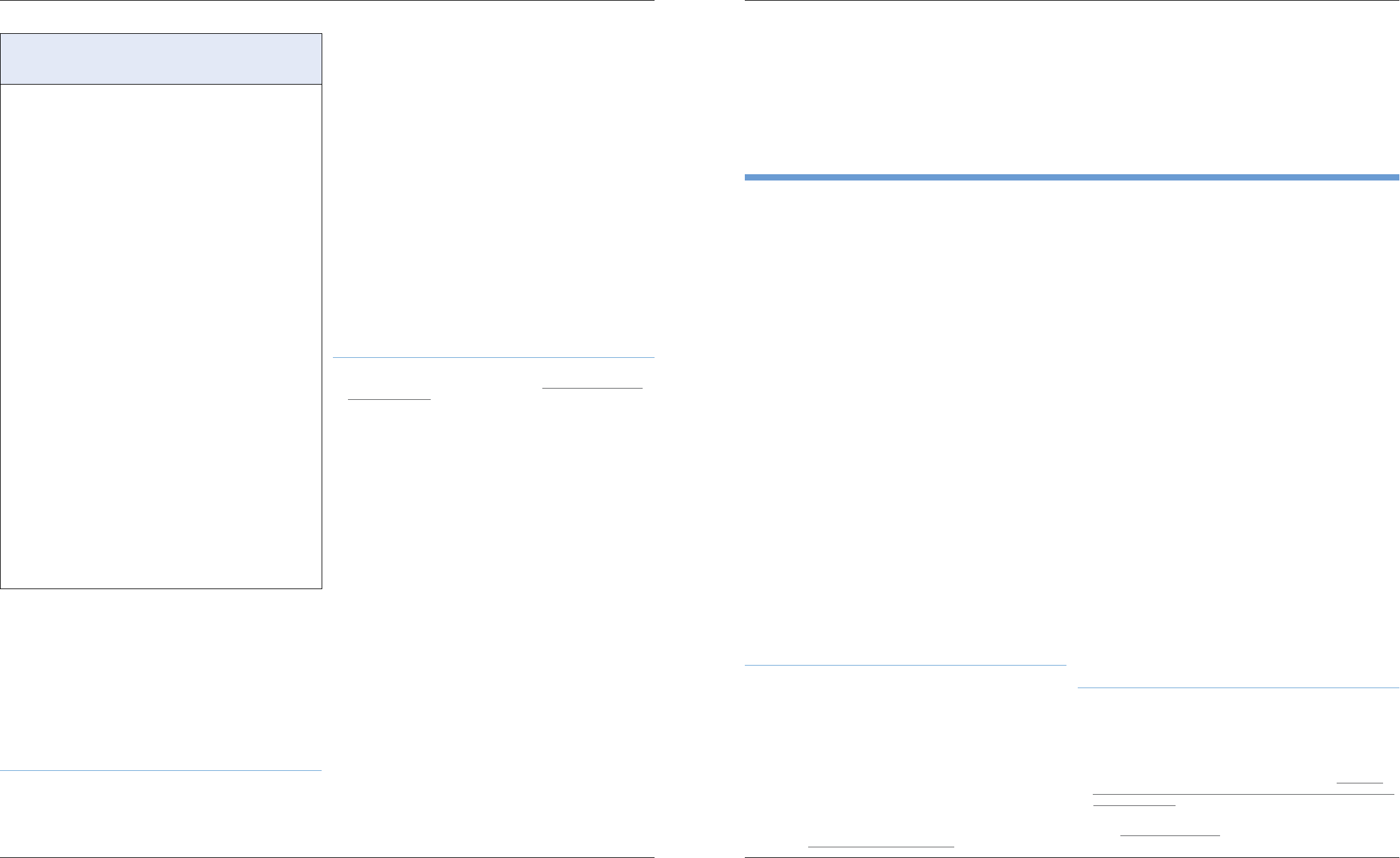

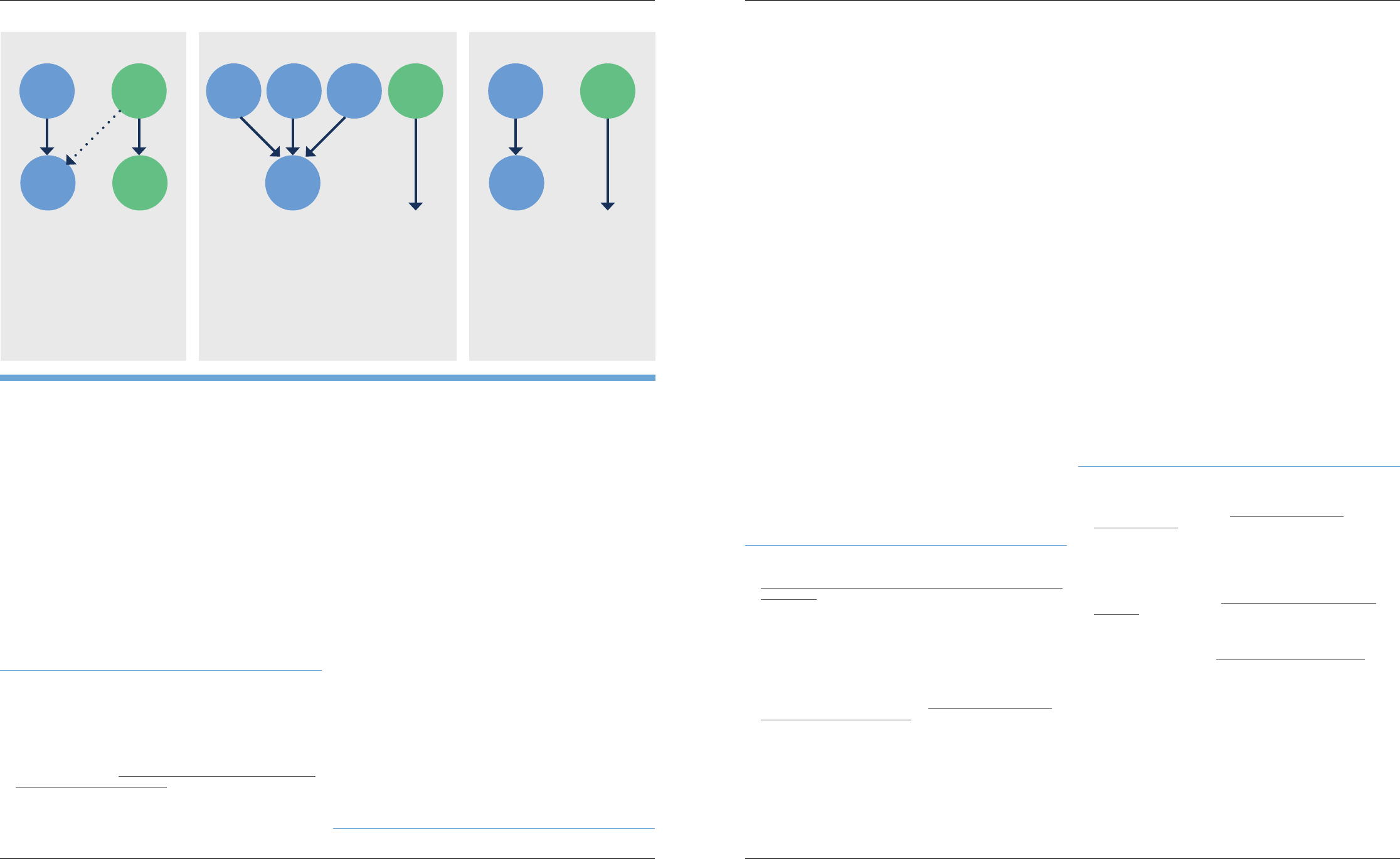

This Report conceives of accountability as a chain of inputs linked to consequences. It focuses on how information flow (documentation, disclosures, and access) supports independent evaluations (including red-teaming and audits), which in turn feed into consequences (including liability and regulation) to create accountability. It concludes with recommendations for federal government action, some of which elaborate on themes in the AI EO, to encourage and possibly require accountability inputs.

ways. Such competition, facilitated by information, en-

courages not just compliance with a minimum baseline

but also continual improvement over time.

To promote innovation and adoption of trustworthy AI, we need to incentivize and support pre- and post-release evaluation of AI systems, and require more information about them as appropriate. Robust evaluation of AI capabilities, risks, and fitness for purpose is still an emerging field. To achieve real accountability and harness all of AI’s benefits, the United States – and the world – needs new and more widely available accountability tools and information, an ecosystem of independent AI system evaluation, and consequences for those who fail to deliver on commitments or manage risks properly.

Access to information by appropriate means and parties is important throughout the AI lifecycle, from early development of a model to deployment and successive uses, as recognized in federal government efforts already underway pursuant to President Biden’s Executive Order Number 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence of October 30, 2023 (“AI EO”). This information flow should include documentation about AI system models, architecture, data, performance, limitations, appropriate use, and testing. AI system information should be disclosed in a form fit for the relevant audience, including in plain language. There should be appropriate third-party access to AI system components and processes to promote sufficient actionable understanding of machine learning models.

Executive Summary

Artificial intelligence (AI) systems are rapidly becoming part of the fabric of everyday American life. From customer service to image generation to manufacturing, AI systems are everywhere.

Alongside their transformative potential for good, AI systems also pose risks of harm. These risks include inaccurate or false outputs; unlawful discriminatory algorithmic decision making; destruction of jobs and the dignity of work; and compromised privacy, safety, and security. Given their influence and ubiquity, these systems must be subject to security and operational mechanisms that mitigate risk and warrant stakeholder trust that they will not cause harm.

Commenters emphasized how AI accountability policies

and mechanisms can play a key part in getting the best

out of this technology. Participants in the AI ecosystem

– including policymakers, industry, civil society, work-

ers, researchers, and impacted community members –

should be empowered to expose problems and potential

risks, and to hold responsible entities to account.

AI system developers and deployers should have mech-

anisms in place to prioritize the safety and well-being

of people and the environment and show that their AI

systems work as intended and benignly. Implemen-

tation of accountability policies can contribute to the

development of a robust, innovative, and informed AI

marketplace, where purchasers of AI systems know what

they are buying, users know what they are consuming,

and subjects of AI systems – workers, communities, and

the public – know how systems are being implement-

ed. Transparency in the marketplace allows companies

to compete on measures of safety and trustworthiness,

and helps to ensure that AI is not deployed in harmful

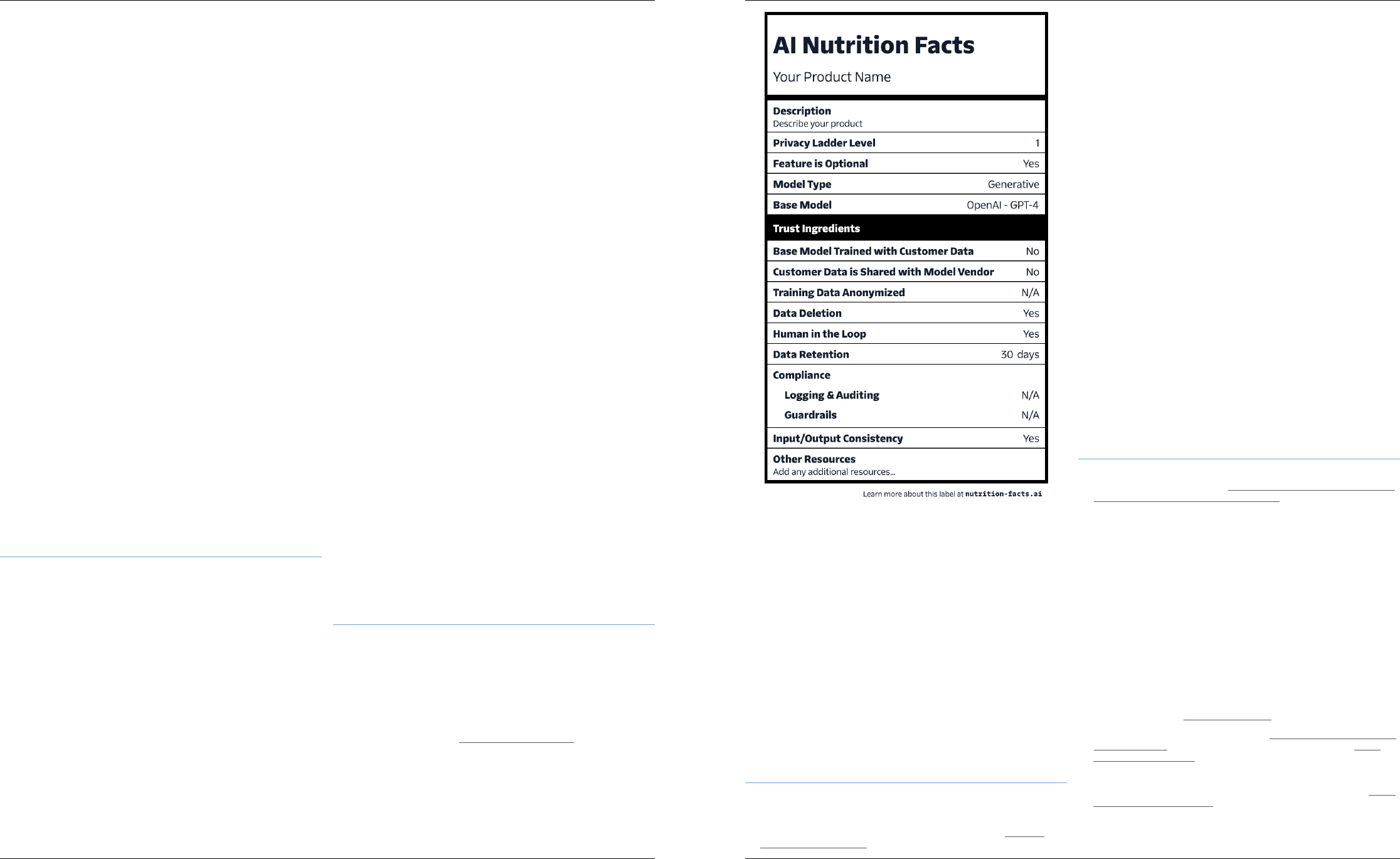

Figure: AI Accountability Chain. System or model → Disclosures, Documentation, Access → Evaluations, Audits, Red Teaming → Liability, Regulation, Market → Accountability.

Source: NTIA

7. Cross-sectoral governmental capacity: The feder-

al government should strengthen its capacity to

address cross-sectoral risks and practices related

to AI. Whether located in existing agencies or new

bodies, there should be horizontal capacity in gov-

ernment to develop common baseline requirements

and best practices, and otherwise support the work

of agencies. These cross-sectoral tasks could in-

clude:

• Maintaining registries of high-risk AI deployments,

AI adverse incidents, and AI system audits;

• With respect to audit standards and/or auditor certifications, advocating for the needs of federal agencies and coordinating with audit processes undertaken or required by federal agencies themselves; and

• Providing evaluation, certification, documentation, coordination, and disclosure oversight, as needed.

8. Contracting: The federal government should re-

quire that government suppliers, contractors,

and grantees adopt sound AI governance and as-

surance practices for AI used in connection with

the contract or grant, including using AI stan-

dards and risk management practices recognized

by federal agencies, as applicable. This would

ensure that entities contracting with the federal

government or receiving federal grants are enacting

sound internal AI system assurances. Such practices

in this market segment could accelerate adoption

more broadly and improve the AI accountability eco-

system throughout the economy.

5. Research: Federal government agencies should

conduct and support more research and develop-

ment related to AI testing and evaluation, tools

facilitating access to AI systems for research and

evaluation, and provenance technologies, through

existing and new capacity. This investment would

move towards creating reliable and widely applica-

ble tools to assess when AI systems are being used,

on what materials they were trained, and the capabil-

ities and limitations they exhibit. The establishment

of the U.S. AI Safety Institute at NIST in February 2024

is an important step in this direction.

REGULATORY REQUIREMENTS

6. Audits and other independent evaluations: Federal agencies should use existing and/or new authorities to require as needed independent evaluations and regulatory inspections of high-risk AI model classes and systems. AI systems deemed to present a high risk of harming rights or safety – according to holistic assessments tailored to deployment and use contexts – should in some circumstances be subject to mandatory independent evaluation and/or certification. For some models and systems, that process should take place both before release or deployment, as is already the case in some sectors, and on an ongoing basis. To perform these assessments, agencies may need to require other accountability inputs, including documentation and disclosure relating to systems and models. Some government agencies already have authorities to establish risk categories and require independent evaluations and/or other accountability measures, while others may need new authorities.

SUPPORT

4. People and tools: Federal government agencies

should support and invest in technical infra-

structure, AI system access tools, personnel, and

international standards work to invigorate the

accountability ecosystem. This means building

the resources necessary, through existing and new

capacity, to meet the national need for independent

evaluations of AI systems, including:

• Datasets to test for equity, efficacy, and other attributes and objectives;

• Computing and cloud infrastructure required to

conduct rigorous evaluations;

• Legislative establishment and funding of a Nation-

al AI Research Resource;

• Appropriate access to AI systems and their compo-

nents for researchers, evaluators, and regulators,

subject to intellectual property, data privacy, and

security- and safety-informed protections;

• Independent evaluation and red-teaming support,

such as through prizes, bounties, and research

support;

• Workforce development;

• Federal personnel with the appropriate socio-

technical expertise to design, conduct, and review

evaluations; and

• International standards development (including

broad stakeholder participation).

GUIDANCE

1. Audits and auditors: Federal government agencies should work with stakeholders as appropriate to create guidelines for AI audits and auditors, using existing and/or new authorities. This includes NIST’s tasks under the AI EO concerning AI testing and evaluation and other efforts in the federal government to refine guidance on such matters as the design of audits, the subject matter to be audited, evaluation standards for audits, and certification standards for auditors.

2. Disclosure and access: Federal government agen-

cies should work with stakeholders to improve

standard information disclosures, using exist-

ing and/or new authorities. Greater transparency

about, for example, AI system models, architecture,

training data, input and output data, performance,

limitations, appropriate use, and testing should be

provided to relevant audiences, including in some

cases to the public via model or system cards, data-

sheets, and/or AI “nutrition labels.” Standardization

of accessible formats and the use of plain language

can enhance the comparability and legibility of dis-

closures. Legislation is not necessary for this activity

to advance, but it could accelerate it.

3. Liability rules and standards: Federal govern-

ment agencies should work with stakeholders to

make recommendations about applying existing

liability rules and standards to AI systems and, as

needed, supplementing them. This would help in

determining who is responsible and held account-

able for AI system harms throughout the value chain.

1. Introduction

managed.

3

To be clear, trust and assurance are not products that AI actors generate. Rather, trustworthiness involves a dynamic between parties; it is in part a function of how well those who use or are affected by AI systems can interrogate those systems and make determinations about them, either themselves or through proxies.

AI assurance efforts, as part of a larger accountability ecosystem, should allow government agencies and other stakeholders, as appropriate, to assess whether the system under review (1) has substantiated claims made about its attributes and/or (2) meets baseline criteria for “trustworthy AI.” The RFC asked about the evaluations entities should conduct prior to and after deploying AI systems; the necessary conditions for AI system evaluations and certifications to validate claims and provide other assurance; different policies and approaches suitable for different use cases; helpful regulatory analogs in the development of an AI accountability ecosystem; regulatory requirements such as audits or licensing; and the appropriate role for the federal government in connection with AI assurance and other accountability mechanisms.

Over 1,440 unique comments from diverse stakeholders

were submitted in response to the RFC and have been

posted to Regulations.gov.

4

An NTIA employee read ev-

ery comment. Approximately 1,250 of the comments

were submitted by individuals in their own capacity.

Approximately 175 were submitted by organizations or

3 National Institute of Standards and Technology (NIST), Artificial Intelligence Risk Management Framework (AI RMF 1.0) (Jan. 2023), https://doi.org/10.6028/NIST.AI.100-1 [hereinafter “NIST AI RMF”]. The later-adopted AI EO uses the term “safe, secure, and trustworthy” AI. Because safety and security are part of NIST’s definition of “trustworthy,” this Report uses the “trustworthy” catch-all. Other policy documents use “responsible” AI. See, e.g., Government Accountability Office (GAO), Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities (GAO Report No. GAO-21-519SP), at 24 n.22 (June 30, 2021), https://www.gao.gov/assets/gao-21-519sp.pdf (citing U.S. government documents using the term “responsible use” to entail AI system use that is responsible, equitable, traceable, reliable, and governable).

4 Regulations.gov, NTIA AI Accountability RFC (2023), https://www.regulations.gov/document/NTIA-2023-0005-0001/comment. Comments in this proceeding are accessible through this link; an index linking each commenter name to its regulations.gov commenter number is available here: https://www.regulations.gov/document/NTIA-2023-0005-1452.

NTIA issued a Request for Comment on AI Accountabil-

ity Policy on April 13, 2023 (RFC).

1

The RFC included 34 questions about AI governance methods that could be employed to hold relevant actors accountable for AI system risks and harmful impacts. It specifically sought feedback on what policies would support the development of AI audits, assessments, certifications, and other mechanisms to create earned trust in AI systems – which practices are also known as AI assurance. To be accountable, relevant actors must be able to assure others that the AI systems they are developing or deploying are worthy of trust, and face consequences when they are not.

2

The RFC relied on the NIST delineation of “trustworthy

AI” attributes: valid and reliable, safe, secure and resil-

ient, privacy-enhanced, explainable and interpretable,

accountable and transparent, and fair with harmful bias

1 National Telecommunications and Information Administration (NTIA), AI Accountability Policy Request for Comment, 88 Fed. Reg. 22433 (April 13, 2023) [hereinafter “AI Accountability RFC”].

2 See Claudio Novelli, Mariarosaria Taddeo, and Luciano Floridi, “Accountability in Artificial Intelligence: What It Is and How It Works,” (Feb. 7, 2023), AI & Society: Journal of Knowledge, Culture and Communication, https://doi.org/10.1007/s00146-023-01635-y (stating that AI accountability “denotes a relation between an agent A and (what is usually called) a forum F, such that A must justify A’s conduct to F, and F supervises, asks questions to, and passes judgment on A on the basis of such justification. . . . Both A and F need not be natural, individual persons, and may be groups or legal persons.”) (italics in original).

ident Biden issued an Executive Order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (“AI EO”), which advances and coordinates the Administration’s efforts to ensure the safe and secure use of AI; promote responsible innovation, competition, and collaboration to create and maintain the United States’ leadership in AI; support American workers; advance equity and civil rights; protect Americans who increasingly use, interact with, or purchase AI and AI-enabled products; protect Americans’ privacy and civil liberties; manage the risks from the federal government’s use of AI; and lead global societal, economic, and technical progress.

8

Administration efforts to advance trustworthy AI prior to the release of the RFC in April 2023 include most notably the NIST AI Risk Management Framework (NIST AI RMF)

9

and the White House Blueprint for an AI Bill of Rights

(Blueprint for AIBoR).

10

Manage the Risks Posed by AI (December 14, 2023), https://www.hhs.gov/about/news/2023/12/14/fact-sheet-biden-harris-administration-announces-voluntary-commitments-leading-healthcare-companies-harness-potential-manage-risks-posed-ai.html.

8 Executive Order No. 14110, Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, 88 Fed. Reg. 75191 [hereinafter “AI EO”] (2023) at Sec. 2, https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/.

9 NIST AI RMF; see also U.S.-E.U. Trade and Technology Council (TTC), TTC Joint Roadmap on Evaluation and Measurement Tools for Trustworthy AI and Risk Management (Dec. 1, 2022), https://www.nist.gov/system/files/documents/2022/12/04/Joint_TTC_Roadmap_Dec2022_Final.pdf, at 9 (“The AI RMF is a voluntary framework seeking to provide a flexible, structured, and measurable process to address AI risks prospectively and continuously throughout the AI lifecycle. […] Using the AI RMF can assist organizations, industries, and society to understand and determine their acceptable levels of risk. The AI RMF is not a compliance mechanism, nor is it a checklist intended to be used in isolation. It is law- and regulation-agnostic, as AI policy discussions are live and evolving.”).

10 The White House, Blueprint for an AI Bill of Rights: Making Automated Systems Work for the American People (Oct. 2022), https://www.whitehouse.gov/wp-content/uploads/2022/10/Blueprint-for-an-AI-Bill-of-Rights.pdf [hereinafter “Blueprint for AIBoR”].

individuals in their institutional capacity. Of this latter group, industry (including trade associations) accounted for approximately 48%, nonprofit advocacy for approximately 37%, and academic and other research organizations for approximately 15%. There were a few comments from elected and other governmental officials.

Since the release of the RFC, the Biden-Harris Administra-

tion has worked to advance trustworthy AI in several ways.

In May 2023, the Administration secured commitments

from leading AI developers to participate in a public

evaluation of AI systems at DEF CON 31.

5

The Administra-

tion also secured voluntary commitments from leading

developers of “frontier” advanced AI systems (“White

House Voluntary Commitments”) to advance trust and

safety, including through evaluation and transparency

measures that relate to queries in the RFC.

6

In addition,

the Administration secured voluntary commitments from

healthcare companies related to AI.

7

Most recently, Pres-

5 See The White House, FACT SHEET: Biden-Harris Administration Announces New Actions to Promote Responsible AI Innovation that Protects Americans’ Rights and Safety (May 4, 2023), https://www.whitehouse.gov/briefing-room/statements-releases/2023/05/04/fact-sheet-biden-harris-administration-announces-new-actions-to-promote-responsible-ai-innovation-that-protects-americans-rights-and-safety/ (allowing “AI models to be evaluated thoroughly by thousands of community partners and AI experts to explore how the models align with the principles and practices outlined in the Biden-Harris Administration’s Blueprint for an AI Bill of Rights and AI Risk Management Framework”).

6 See The White House, FACT SHEET: Biden-Harris Administration Secures Voluntary

Commitments from Leading Artificial Intelligence Companies to Manage the

Risks Posed by AI (July 21, 2023), https://www.whitehouse.gov/briefing-room/

statements-releases/2023/07/21/fact-sheet-biden-harris-administration-secures-

voluntary-commitments-from-leading-artificial-intelligence-companies-to-manage-

the-risks-posed-by-ai/; The White House, Ensuring Safe, Secure and Trustworthy

AI (July 21, 2023), https://www.whitehouse.gov/wp-content/uploads/2023/07/

Ensuring-Safe-Secure-and-Trustworthy-AI.pdf [hereinaer “First Round White House

Voluntary Commitments”] (detailing the commitments to red-team models, sharing

information among companies and the government, investment in cybersecurity,

incentivizing third-party issue discovery and reporting, and transparency through

watermarking, among other provisions); The White House, FACT SHEET: Biden-Harris

Administration Secures Voluntary Commitments from Eight Additional Artificial

Intelligence Companies to Manage the Risks Posed by AI (Sept. 12, 2023), https://www.

whitehouse.gov/briefing-room/statements-releases/2023/09/12/fact-sheet-biden-

harris-administration-secures-voluntary-commitments-from-eight-additional-artificial-

intelligence-companies-to-manage-the-risks-posed-by-ai/; The White House, Voluntary

AI Commitments (September 12, 2023), https://www.whitehouse.gov/wp-content/

uploads/2023/09/Voluntary-AI-Commitments-September-2023.pdf [hereinafter

“Second Round White House Voluntary Commitments”].

7 The White House, FACT SHEET: Biden-Harris Administration Announces Voluntary

Commitments from Leading Healthcare Companies to Harness the Potential and


country have passed bills that affect AI,

14

and localities

are legislating as well.

15

The United States has collaborated with international

partners to consider AI accountability policy. The U.S. –

EU Trade and Technology Council (TTC) issued a joint AI

Roadmap and launched three expert groups in May 2023,

of which one is focused on “monitoring and measuring

AI risks.”

16

These groups have issued a list of 65 key terms,

wherever possible unifying disparate definitions.

17

Par-

ticipants in the 2023 Hiroshima G7 Summit have worked

to advance shared international guiding principles and

a code of conduct for trustworthy AI development.

18

The

Intelligence: Advancing Innovation Towards the National Interest (committee hearing)

(June 22, 2023), https://science.house.gov/hearings?ID=441AF8AB-7065-45C8-81E0-

F386158D625C; U.S. Senate Committee on the Judiciary Subcommittee on Privacy,

Technology, and the Law, Oversight of A.I.: Rules for Artificial Intelligence (committee

hearing) (May 16, 2023), https://www.judiciary.senate.gov/committee-activity/

hearings/oversight-of-ai-rules-for-artificial-intelligence.

14 See Katrina Zhu, The State of State AI Laws: 2023, Electronic Privacy Information Center

(Aug. 3, 2023), https://epic.org/the-state-of-state-ai-laws-2023/ (providing an inventory

of state legislation).

15 See, e.g., The New York City Council, A Local Law to Amend the Administrative Code

of the City of New York, in Relation to Automated Employment Decision Tools, Local

Law No. 2021/144 (Dec. 11, 2021), https://legistar.council.nyc.gov/LegislationDetail.

aspx?ID=4344524&GUID=B051915D-A9AC-451E-81F8-6596032FA3F9&Options=ID%7CTe

xt%7C&Search=.

16 See The White House, FACT SHEET: U.S.-EU Trade and Technology Council Deepens

Transatlantic Ties (May 31, 2023), https://www.whitehouse.gov/briefing-room/

statements-releases/2023/05/31/fact-sheet-u-s-eu-trade-and-technology-council-

deepens-transatlantic-ties/.

17 See The White House, U.S.-EU Joint Statement of the Trade and Technology

Council (May 31, 2023), https://www.whitehouse.gov/briefing-room/statements-

releases/2023/05/31/u-s-eu-joint-statement-of-the-trade-and-technology-council-2/;

supra note 9, U.S.-E.U. Trade and Technology Council (TTC).

18 The White House, G7 Leaders’ Statement on the Hiroshima AI Process (Oct. 30, 2023),

https://www.whitehouse.gov/briefing-room/statements-releases/2023/10/30/g7-

leaders-statement-on-the-hiroshima-ai-process/; Hiroshima Process International

Guiding Principles for Organizations Developing Advanced AI System (Oct. 30, 2023),

https://www.mofa.go.jp/files/100573471.pdf; Hiroshima Process International Code

of Conduct for Organizations Developing Advanced AI Systems (Oct. 30, 2023), https://

www.mofa.go.jp/files/100573473.pdf (mofa.go.jp).

Federal regulatory and law enforcement agencies have

also advanced AI accountability efforts. A joint statement

from the Federal Trade Commission, the Department of

Justice’s Civil Rights Division, the Equal Employment

Opportunity Commission, and the Consumer Finan-

cial Protection Bureau outlined the risks of unlawfully

discriminatory outcomes produced by AI and other au-

tomated systems and asserted the respective agencies’

commitment to enforcing existing law.

11

Other federal

agencies are examining AI in connection with their mis-

sions.

12

A number of different Congressional committees

have held hearings, and members of Congress have in-

troduced bills related to AI.

13

State legislatures across the

11 See Rohit Chopra, Kristen Clarke, Charlotte A. Burrows, and Lina M. Khan, Joint

Statement on Enforcement Efforts Against Discrimination and Bias in Automated

Systems (April 25, 2023), https://www.ftc.gov/system/files/ftc_gov/pdf/EEOC-CRT-

FTC-CFPB-AI-Joint-Statement%28final%29.pdf [hereinafter “Joint Statement on

Enforcement Efforts”]; Consumer Financial Protection Circular, 2023-03, Adverse action

notification requirements and the proper use of the CFPB’s sample forms provided in

Regulation B, https://www.consumerfinance.gov/compliance/circulars/circular-2023-

03-adverse-action-notification-requirements-and-the-proper-use-of-the-cfpbs-sample-

forms-provided-in-regulation-b/. See also, Consumer Financial Protection Bureau,

CFPB Issues Guidance on Credit Denials by Lenders Using Artificial Intelligence (Sept.

2023), https://www.consumerfinance.gov/about-us/newsroom/cfpb-issues-guidance-

on-credit-denials-by-lenders-using-artificial-intelligence/; Equal Employment

Opportunity Commission, Select Issues: Assessing Adverse Impact in Software,

Algorithms, and Artificial Intelligence Used in Employment Selection Procedures Under

Title VII of the Civil Rights Act of 1964 (May 18, 2023), https://www.eeoc.gov/laws/

guidance/select-issues-assessing-adverse-impact-software-algorithms-and-artificial.

12 See, e.g., U.S. Department of Education Office of Educational Technology, Artificial

Intelligence and the Future of Teaching and Learning: Insights and Recommendations

(May 2023), https://www2.ed.gov/documents/ai-report/ai-report.pdf; Engler, infra note

359 (referring to initiatives by the U.S. Food and Drug Administration); U.S. Department

of State, Artificial Intelligence (AI), https://www.state.gov/artificial-intelligence/; U.S.

Department of Health and Human Services, Trustworthy AI (TAI) Playbook (September

2021), https://www.hhs.gov/sites/default/files/hhs-trustworthy-ai-playbook.pdf;

U.S. Department of Homeland Security Science & Technology Directorate, Artificial

Intelligence (September 2023), https://www.dhs.gov/science-and-technology/artificial-

intelligence.

13 See, e.g., Laurie A. Harris, Artificial Intelligence: Overview, Recent Advances, and

Considerations for the 118th Congress, Congressional Research Service (Aug. 4,

2023), at 9-10, https://crsreports.congress.gov/product/pdf/R/R47644/2; Anna

Lenhart, Roundup of Federal Legislative Proposals that Pertain to Generative AI:

Part II, Tech Policy Press (Aug. 9, 2023), https://techpolicy.press/roundup-of-federal-

legislative-proposals-that-pertain-to-generative-ai-part-ii/; see also, e.g., U.S. House

of Representatives Committee on Oversight and Accountability Subcommittee on

Cybersecurity, Information Technology, and Government Innovation, Advances in AI:

Are We Ready For a Tech Revolution? (subcommittee hearing) (March 8, 2023), https://

oversight.house.gov/hearing/advances-in-ai-are-we-ready-for-a-tech-revolution/; U.S.

House of Representatives Committee on Science, Space, and Technology, Artificial

latory, and other measures and policies that are designed

to provide assurance to external stakeholders that AI systems are legal and trustworthy. More specifically, this Report focuses on information flow, system evaluations, and

ecosystem development

which, together with regu-

latory, market, and liability

functions, are likely to pro-

mote accountability for AI

developers and deployers

(collectively and individual-

ly designated here as “AI ac-

tors”). There are many other

players in the AI value chain

traditionally included in the

designation of AI actors, in-

cluding system end users.

Any of these players can cause harm, but this Report fo-

cuses on developers and deployers as the most relevant

entities for policy interventions. This Report concentrates

further on the cross-sectoral aspects of AI accountability,

while acknowledging that AI accountability mechanisms

are likely to take different forms in different sectors.

Multiple policy interventions may be necessary to

achieve accountability. Take, for example, a policy pro-

moting the disclosure to appropriate parties of training

data details, performance limitations, and model char-

acteristics for high-risk AI systems. Disclosure alone

does not make an AI actor accountable. However, such

information flows will likely be important for internal

accountability within the AI actor’s domain and for ex-

ternal accountability as regulators, litigators, courts, and

the public act on such information. Disclosure, then, is

an accountability input whose effectiveness depends on

other policies or conditions, such as the governing lia-

bility framework, relevant regulation, and market forces

(in particular, customers’ and consumers’ ability to use

the information disclosed to make purchase and use

decisions). This report touches on how accountability

inputs feed into the larger accountability apparatus and

considers how these connections might be developed in

further work.

Our final limitations on scope concern matters that are

Organization for Economic Cooperation and Develop-

ment is working on accountability in AI.

19

In Europe, the

EU AI Act – which includes provisions addressing pre-release conformity certifications for high-risk systems, as

well as transparency and

audit provisions and spe-

cial provisions for founda-

tion models

20

or general

purpose AI – has continued

on the path to becoming

law.

21

The EU Digital Ser-

vices Act requires audits of

the largest online platforms

and search engines,

22

and a

recent EU Commission del-

egated act on audits indi-

cates that it is important in

this context to analyze algorithmic systems and technol-

ogies such as generative models.

23

In light of all this activity, it is important to articulate the

scope of this Report. Our attention is on voluntary, regu-

19 See, e.g., OECD ADVANCING ACCOUNTABILITY IN AI GOVERNING AND MANAGING

RISKS THROUGHOUT THE LIFECYCLE FOR TRUSTWORTHY AI (Feb. 2023), https://

www.oecd.org/sti/advancing-accountability-in-ai-2448f04b-en.htm. See also United

Nations, High-level Advisory Body on Artificial Intelligence, https://www.un.org/en/

ai-advisory-body (calling for “[g]lobally coordinated AI governance” as the “only way

to harness AI for humanity, while addressing its risks and uncertainties, as AI-related

applications, algorithms, computing capacity and expertise become more widespread

internationally” and describing the mandate of the new High-level Advisory Body on

Artificial Intelligence to “analysis and advance recommendations for the international

governance of AI”).

20 We use the term “foundation model” to refer to models which are “trained on broad

data at scale and are adaptable to a wide range of downstream tasks”, like “BERT,

DALL-E, [and] GPT-3”. See Rishi Bommasani et al., On the Opportunities and Risks of

Foundation Models, arXiv (July 12, 2022), https://arxiv.org/pdf/2108.07258.pdf.

21 See European Parliament, European Parliament legislative resolution of 13 March

2024 on the proposal for a regulation of the European Parliament and of the Council

on laying down harmonised rules on Artificial Intelligence (Artificial Intelligence

Act) and amending certain Union Legislative Acts (COM(2021)0206 – C9-0146/2021

– 2021/0106(COD)) (March 13, 2024), https://www.europarl.europa.eu/doceo/

document/TA-9-2024-0138_EN.pdf (containing the text of the proposed EU AI Act as

adopted by the European Parliament) [hereinafter “EU AI Act”]; European Parliament,

Artificial Intelligence Act: Deal on Comprehensive Rules for Trustworthy AI, European

Parliament News (Dec. 12, 2023), https://www.europarl.europa.eu/news/en/press-

room/20231206IPR15699/artificial-intelligence-act-dealon-comprehensive-rules-for-

trustworthy-ai.

22 See European Commission, Digital Services Act: Commission Designates First Set of

Very Large Online Platforms and Search Engines (April 25, 2023), https://ec.europa.eu/

commission/presscorner/detail/en/ip_23_2413.

23 See European Commission, Commission Delegated Regulation (EU) Supplementing

Regulation (EU) 2022/2065 of the European Parliament and of the Council, by Laying

Down Rules on the Performance of Audits for Very Large Online Platforms and Very

Large Online Search Engines, (Oct. 20, 2023), at 2, 14, https://digital-strategy.ec.europa.

eu/en/library/delegated-regulation-independent-audits-under-digital-services-act.



of AI accountability: (1) information flow, including documentation of AI system development and deployment; relevant disclosures appropriately detailed to the stakeholder audience; and provision to researchers and evaluators of adequate access to AI system components; (2) AI system evaluations, including government requirements for independent evaluation and pre-release certification (or licensing) in some cases; and (3) government support for an accountability ecosystem that widely distributes effective scrutiny of AI systems, including within government itself.

Section 4 shows how accountability inputs intersect with

liability, regulatory, and market-forcing functions to en-

sure real consequences when AI actors forfeit trust.

Section 5 surveys lessons learned from other account-

ability models outside of the AI space.

Section 6 concludes with recommendations for govern-

ment action.

Appendix A is a glossary of terms used in this Report.

Finally, open-source AI models, AI models with widely

available model weights, and components of AI systems

generally are of tremendous interest and raise distinct

accountability issues. The AI EO tasked the Secretary

of Commerce with soliciting input and issuing a report

on “the potential benefits, risks, and implications, of

dual-use foundation models for which the weights are

widely available, as well as policy and regulatory recom-

mendations pertaining to such models,”

31

and NTIA has

published a Request for Comment for the purpose of in-

forming that report.

32

The remainder of this Report is organized as follows:

Section 2 of the Report outlines significant commenter

alignment around cross-cutting issues, many of which

are covered in more depth later. Such issues include

calibrating AI accountability policies to risk, assuring AI

systems across their lifecycle, standardizing disclosures

and evaluations, and increasing the federal role in sup-

porting and/or requiring certain accountability inputs.

Section 3 of the Report dives deeper into these issues,

organizing the discussion around three key ingredients

31 AI EO at Sec. 4.6.

32 National Telecommunications and Information Administration, Dual Use Foundation

Artificial Intelligence Models With Widely Available Model Weights, 89 Fed. Reg. 14059

(Feb. 26, 2024), https://www.federalregister.gov/documents/2024/02/26/2024-03763/

dual-use-foundation-artificial-intelligence-models-with-widely-available-model-

weights.

Similarly, the role of privacy and the use of personal

data in model training are topics of great interest and

signicance to AI accountability. More than 90% of all or-

ganizational commenters noted the importance of data

protection and privacy to trustworthy and accountable

AI.

28

AI can exacerbate risks to Americans’ privacy, as rec-

ognized by the Blueprint for an AI Bill of Rights and the AI

EO

.29

Privacy protection is not only a focus of AI account-

ability, but importantly privacy also needs to be consid-

ered in the development and use of accountability tools.

Documentation, disclosures, audits, and other forms of

evaluation can result in the collection and exposure of

personal information, thereby jeopardizing privacy if not

properly designed and executed. Stronger and clearer

rules for the protection of personal data are necessary

through the passage of comprehensive federal privacy

legislation and other actions by federal agencies and the

Administration. The President has called on Congress to

enact comprehensive federal privacy protections.

30

of AI. See 17 U.S.C. § 1201(a)(1)(C); NTIA, Recommendations of the National

Telecommunications and Information Administration to the Register of Copyrights in

the Eighth Triennial Section 1201 Rulemaking at 48-58 (Oct. 1, 2021), https://www.ntia.

gov/sites/default/files/publications/ntia_dmca_consultation_2021_0.pdf.

28 See, e.g., Data & Society Comment at 7; Google DeepMind Comment at 3; Global

Partners Digital Comment at 15; Hitachi Comment at 10; TechNet Comment at 4;

NCTA Comment at 4-5; Centre for Information Policy Leadership (CIPL) Comment

at 1; Access Now Comment at 3-5; BSA | The Software Alliance Comment at 12;

U.S. Chamber of Commerce Comment at 9 (discussing the need for federal privacy

protection); Business Roundtable Comment at 10 (supporting a passage of a federal

privacy/consumer data security law to align compliance efforts across the nation); CTIA

Comment at 1, 4-7 (declaring that federal privacy legislation is necessary to avoid the

current fragmentation); Salesforce Comment at 9 (“The lack of an overarching Federal

standard means that the data which powers AI systems could be collected in a way that

prevents the development of trusted AI. Further, we believe that any comprehensive

federal privacy legislation in the United States should include provisions prohibiting

the use of personal data to discriminate on the basis of protected characteristics”).

29 See AI EO at Sec. 2(f)(“Artificial Intelligence is making it easier to extract, re-identify,

link, infer, and act on sensitive information about people’s identities, locations, habits,

and desires. Artificial Intelligence’s capabilities in these areas can increase the risk that

personal data could be exploited and exposed.”); Sec. 9.

30 See The White House, Readout of White House Listening Session on Tech Platform

Accountability (Sept. 8, 2022) [hereinafter “Readout of White House Listening Session”],

https://www.whitehouse.gov/briefing-room/statements-releases/2022/09/08/readout-

of-white-house-listening-session-on-tech-platform-accountability.

the focus of other federal government inquiries. Although

NTIA received many comments related to intellectual

property, particularly on the role of copyright in the de-

velopment and deployment of AI, this Report is largely

silent on intellectual property issues. Mitigating risks to

intellectual property (e.g. infringement, unauthorized

data transfers, unauthorized disclosures) are certainly

recognized components of AI accountability.

24

These is-

sues are of ongoing consideration at the U.S. Patent and

Trademark Office (USPTO)

25

and at the U.S. Copyright Office.

26

We look forward to working with these agencies

and others on these issues as warranted to help ensure

that AI accountability and related transparency, safety,

and other considerations relevant to the broader digital

economy and Internet ecosystem are represented.

27

24 See, e.g., NIST AI RMF at 16, 24 (recognizing that training data should follow applicable

intellectual property rights laws, that policies and procedures should be in place to

address risks of infringement of a third-party’s intellectual property or other rights);

Hiroshima Process International Code of Conduct for Organizations Developing

Advanced AI Systems, supra note 18, at 8 (calling on organizations to “implement

appropriate data input measures and protections for personal data and intellectual

property” and encouraging organizations “to implement appropriate safeguards,

to respect rights related to privacy and intellectual property, including copyright-

protected content.”).

25 The USPTO will clarify and make recommendations on key issues at the intersection

of intellectual property and artificial intelligence. See AI EO Section 5.2. See also U.S.

Patent and Trademark Office, Request for Comments Regarding Artificial Intelligence

and Inventorship, 88 Fed. Reg. 9492 (Feb. 14, 2023), https://www.federalregister.gov/

documents/2023/02/14/2023-03066/request-for-comments-regarding-artificial-

intelligence-and-inventorship; U.S. Patent and Trademark Office, Public Views on

Artificial Intelligence and Intellectual Property Policy (Oct. 2020), https://www.uspto.

gov/sites/default/files/documents/USPTO_AI-Report_2020-10-07.pdf; U.S. Patent and

Trademark Office, Artificial Intelligence, https://www.uspto.gov/initiatives/artificial-

intelligence.

26 See, e.g., U.S. Copyright Office, Notice of Inquiry and Request for Comments on Artificial

Intelligence and Copyright, 88 Fed. Reg. 59942 (Aug. 30, 2023) [hereinafter “Copyright

Office AI RFC”], https://www.federalregister.gov/documents/2023/08/30/2023-18624/

artificial-intelligence-and-copyright; U.S. Copyright Office Comment at 2 (describing

the Copyright Office’s ongoing work at the intersection of AI and copyright law and

policy); U.S. Copyright Office, Copyright and Artificial Intelligence, https://www.

copyright.gov/ai/.

27 See U.S. Copyright Office Comment at 2 (“We are, however, cognizant that the

policy issues implicated by rapidly developing AI technologies are bigger than any

individual agency’s authority, and that NTIA’s accountability inquiries may align

with our work.”); see also Copyright Office AI RFC at 59,944 n.22 (mentioning the U.S.

Copyright Office’s consideration of AI in the regulatory context of the Digital Millennium

Copyright Act rulemaking). By law, NTIA plays a consultation role in the rulemaking and

has previously commented on petitions for exemptions that involve considerations

[Figure: AI Accountability Chain. A system or model is linked to accountability through three groups of inputs: Disclosures, Documentation, Access; Evaluations, Audits, Red Teaming; and Liability, Regulation, Market. Source: NTIA]


2. Requisites for AI Accountability: Areas of Significant Commenter Agreement


2.1. RECOGNIZE POTENTIAL HARMS AND RISKS

Many commenters, especially individual commenters,

expressed serious concerns about the impact of AI. AI

system potential harms and risks have been well-doc-

umented elsewhere.

33

The following are representative

examples, which also appeared in comments:

• Inefficacy and inadequate functionality.

• Inaccuracy, unreliability, ineffectiveness, insufficient robustness.

• Unfitness for the use case.

• Lowered information integrity.

• Misleading or false outputs, sometimes coupled

with coordinated campaigns.

• Opacity around use.

• Opacity around provenance of AI inputs.

• Opacity around provenance of AI outputs.

• Safety and security concerns.

• Unsafe decisions or outputs that contribute to

harmful outcomes.

• Capacities falling into the hands of bad actors who

intend harm.

• Adversarial evasion or manipulation of AI.

• Obstacles to reliable control by humans.

• Harmful environmental impact.

33 Many of these risks are recognized in the AI EO, the AIBoR, and in the Office of

Management and Budget, Proposed Memorandum for the Heads of Executive

Departments and Agencies, “Advancing Governance, Innovation, and Risk

Management for Agency Use of Artificial Intelligence” (Nov. 2023), https://ai.gov/wp-

content/uploads/2023/11/AI-in-Government-Memo-Public-Comment.pdf at 24-25.


The comments submitted to the RFC compose a large and diverse corpus of policy ideas to advance AI accountability. While there were significant disagreements, there was also a fair amount of support among stakeholders from different constituencies for making AI systems more open to scrutiny and more accountable to all. This section provides a brief overview of significant plurality (if not majority) sentiments in the comments relating to AI accountability policy, along with NTIA reflections. Section 3 provides a deeper treatment of these positions; most are congruent with the Report’s recommendations in Section 6.

Individual commenters reflected misgivings in the

American public at large about AI.

34

Three major themes

emerged from many of the individual comments:

• The most significant by the numbers was concern

about intellectual property. Nearly half of all individual

commenters (approximately 47%) expressed alarm

that generative AI

35

was ingesting as training materi-

al copyrighted works without the copyright holders’

consent, without their compensation, and/or without

attribution. They also expressed worries that AI could

supplant the jobs of creators and other workers. Some

of these commenters supported new forms of regu-

lation for AI that would require copyright holders to

opt-in to AI system use of their works.

36

• Another significant concern was that malicious actors would exploit AI for destructive purposes and develop their own systems for those ends. A related concern was that AI systems would not be subject to sufficient controls and would be used to harm individuals and communities, including through unlawfully discriminatory impacts, privacy violations, fraud, and a wide array of safety and security breaches.

• A final theme concerned the personnel building and deploying AI systems, and the personnel making AI policy. Individual commenters questioned the credibility of the responsible people and institutions and doubted whether they had sufficiently diverse experiences, backgrounds, and inclusive practices to foster appropriate decision-making.

34 See Alec Tyson and Emma Kikuchi, Growing Public Concern About the Role of

Artificial Intelligence in Daily Life, Pew Research Center (Aug. 28, 2023), https://www.

pewresearch.org/short-reads/2023/08/28/growing-public-concern-about-the-role-of-

artificial-intelligence-in-daily-life/.

35 “The term ‘generative AI’ means the class of AI models that emulate the structure and

characteristics of input data in order to generate derived synthetic content. This can

include images, videos, audio, text, and other digital content.” AI EO at Sec. 3(p).

36 Stakeholders are deeply divided on some of these policy issues, such as the

implications of “opt-in” or “opt-out” systems, or compensation for authors, which

are part of the U.S. Copyright Office’s inquiry and USPTO ongoing work. This report

recognizes the importance of these issues to the overall risk management and

accountability framework without touching on the merits.

• Violation of human rights.

• Discriminatory treatment, impact, or bias.

• Improper disclosure of personal, sensitive, confidential, or proprietary data.

• Lack of accessibility.

• The generation of non-consensual intimate imag-

ery of adults and child sexual abuse material.

• Labor abuses involved in the training of AI data.

• Impacts on privacy.

• Exposure of non-public information through AI

analytical insights.

• Use of personal information in ways that are con-

trary to the contexts in which they are collected.

• Overcollection of personal information to create

training datasets or to unduly monitor individuals

(such as workers and trade unions).

• Potential negative impact to jobs and the economy.

• Infringement of intellectual property rights.

• Infringements on the ability to form and join

unions.

• Job displacement, reduction, and/or degradation

of working conditions, such as increased moni-

toring of workers and the potential mental and

physical health impacts.

• Undue concentration of power and economic

benefits.


safety-impacting or rights-impacting AI systems deserve

extra scrutiny because of the risks they pose of causing

serious harm. Another kind of tiering ties AI accountabil-

ity expectations to how capable a model or system is.

Commenters suggested that highly capable models and

systems may deserve extra scrutiny, which could include

requirements for pre-release certification and capability disclosures to government.

39

This kind of tiering ap-

proach is evident, for example, in the AI EO requirement

that developers of certain “dual-use foundation models”

more capable than any yet released would have to make

disclosures to the federal government.

40

2.3. ENSURE ACCOUNTABILITY ACROSS THE

AI LIFECYCLE AND VALUE CHAIN

Various actors in the AI value chain exercise different degrees and kinds of control throughout the lifecycle of an AI system. Upstream developers design and create AI models and/or systems. Downstream deployers then deploy those models and/or systems (or use the models as part of other systems) in particular contexts. The downstream deployers may also fine-tune a model, thereby acting as downstream developers of the deployed systems. Both upstream developers and downstream deployers of AI systems should be accountable; existing laws and regulations may already specify accountability mechanisms for different actors.

Commenters laid out good reasons to vest accountability

with AI system developers who make critical upstream deci-

sions about AI models and other components. These actors

have privileged knowledge to inform important disclosures

and documentation and may be best positioned to man-

age certain risks. Some models and systems should not be

deployed until they have been independently evaluated.

41

39 See, e.g., Center for AI Safety Comment Appendix A – A Regulatory Framework for

Advanced Artificial Intelligence (proposing regulatory regime for frontier models that

would require pre-release certification around information security, safety culture, and

technical safety); OpenAI Comment at 6 (considering a requirement of pre-deployment

risk assessments, security and deployment safeguards); Microsoft Comment at 7

(regulatory framework based on the AI tech stack, including licensing requirements for

foundation models and infrastructure providers); Anthropic at 12 (confidential sharing

of large training runs with regulators); Credo AI Comment at 9 (Special foundation

model and large language model disclosures to government about models and

processes, including AI safety and governance); Audit AI Comment at 8 (“High-risk AI

systems should be released with quality assurance certifications based on passing

and maintaining ongoing compliance with AI accountability regulations.”); Holistic AI

Comment at 9 (high-risk systems should be released with certifications).

40 AI EO at Sec. 4.2(i).

41 See Office of Management and Budget, Proposed Memorandum for the Heads of

Potential AI system risks and harms inform NTIA’s consideration of accountability measures. AI system developers and deployers should be responsible for managing the risks of their systems. As AI systems multiply and diffuse into society and the marketplace, customers, workers, consumers, and those affected by AI need assurance that these systems work as claimed and without causing harm. This is especially important for high-risk systems that are rights-impacting or safety-impacting.

2.2. CALIBRATE ACCOUNTABILITY INPUTS TO

RISK LEVELS

Commenters generally support calibrating AI account-

ability inputs to scale with the risk of the AI system or

application.

37

As many acknowledge, existing work from NIST, the Organization for Economic Cooperation and Development (OECD), the Global Partnership on Artificial Intelligence, and the European Union (e.g., the EU AI Act), among others, has established robust frameworks to map, measure, and manage risks. In the interest of risk-based accountability, one commenter, for example, suggested a “baseline plus” approach: all models and applications are subject to some baseline standard of assurance practices across sectors, and higher-risk models or applications have an additional set of obligations.

38

NTIA concludes that a tiered approach to AI accountability has the benefit of scoping expectations and obligations proportionately to AI system risks and capabilities.

As discussed below, many commenters argued that

37 See, e.g., University of Illinois Urbana-Champaign School of Information Sciences

Researchers (UIUC) Comment at 8 (“…tiered systems match an AI system’s risk with

an appropriate level of oversight… The result is a more tailored and proportionate

regulation of fast evolving AI systems…”); Przemyslaw Grabowicz et al., Comment

at 11 (“AI systems represent too many applications for a single set of rules. Just as

different FDA restrictions are applied to different medications, AI controls should be

tailored to the application.”); Institute of Electrical and Electronics Engineers (IEEE)

Comment at 13 (“When the integrity level increases, so too does the intensity and

rigor of the required verification and validation tasks”); AI & Equality Comment at 3

(“The transparency and accountability requirements should also be tailored and

calibrated according to the amount of risk presented by the specific sector or domain

in which the AI system is being deployed...”); Palantir Comment at 7 (appropriate

accountability mechanisms depend on the AI use context and risk profile); Securities

Industry and Financial Markets Association (SIFMA) Comment at 4 (focus auditing

on high-risk AI applications such as “hiring, lending, insurance underwriting, and

education admissions”); Bipartisan Policy Center (BPC) Comment at 2 (urging

risk-based accountability systems); NCTA Comment at 6; Consumer Technology

Association Comment at 2; Centre for Information Policy Leadership Comment at 4;

Workday Comment at 1; Adobe Comment at 7; BSA | The Software Alliance Comment

at 2; Intel Comment at 5-7; Developers Alliance Comment at 6; Salesforce Comment

at 4; Guardian Assembly Comment at 12-14; American Property Casualty Insurance

Association Comment at 2; Samuel Hammond, Foundation for American Innovation

Comment at 2; Anan Abrar Comment at 1.

38 Guardian Assembly Comment at 12.

Just as AI actors share responsibility for the trustworthiness of AI systems, we think it clear from the comments that they must share responsibility for providing accountability inputs. As part of the chain of accountability, there should be information sharing from upstream developers to downstream deployers about intended uses, and from downstream deployers back to upstream developers about refinements and actual impacts so that systems can be adjusted appropriately. Mechanisms discussed below such as adverse AI incident reports, AI system audits, public disclosures, and other forms of information flow and evaluation could all help with allocations of responsibility for trustworthy AI – allocations that will require attention and elaboration elsewhere.

2.4. DEVELOP SECTOR-SPECIFIC ACCOUNTABILITY WITH CROSS-SECTORAL HORIZONTAL CAPACITY

The application of sector-specific laws, rules, and enforcement obligations is being considered by government agencies and courts in the context of AI systems. Regulatory agencies are determining their powers to evaluate and demand information about some AI systems from the earliest stages of design.

45

Commenters thought that additional accountability mechanisms should be tailored to the sector in which the system is deployed.

46

AI deployment in sectors such as health, education, employment, finance, and transportation involves particular risks, the identification and mitigation

45 See, e.g., supra note 11.

46 See, e.g., MITRE Comment at 17 (“The U.S. should rely on existing sector-specific

regulators, equipping them to address new AI-related regulatory needs.”); HR Policy

Association (HRPA) Comment at 4 (policymakers should “align, when possible, any

new guidelines or standards for AI with existing government policies and commonly

adopted employer best practices”); Johnson & Johnson Comment at 2 (recommending

“regulatory approaches to AI that are contextual, proportional and use-case specific”);

SIFMA Comment at 5 (supporting a “flexible, and principles-based approach to third-

party AI risk management, with the applicable sectoral regulators providing additional

specific requirements as needed” similar to cybersecurity and pointing to NYDFS Part

500.11(a) as instructive); Morningstar, Inc. Comment at 1-3 (financial regulations apply

to AI systems); Intel Comment at 3 (identifying existing sectoral laws that apply to AI

harms); Ernst and Young Comment at 11 (uniformity of accountability requirements

might not be practical across sectors or even within the same sector); see also, e.g., Eric

Schmidt Comment (arguing in an individual comment that “AI accountability should

depend on business sector.”).

At the same time, there are also good reasons to vest accountability with AI system deployers because context and mode of deployment are important to actual AI system impacts.

42

Not all risks can be identified pre-deployment, and downstream developers/deployers may fine-tune AI systems either to ameliorate or exacerbate dangers present in artifacts from upstream developers. Actors may also deploy and/or use AI systems in unintended ways.

Recognizing the fluidity of AI system knowledge and control, many commenters argued that accountability should run with the AI system through its entire lifecycle and across the AI value chain,

43

lodging responsibility with AI system actors in accordance with their roles.

44

This value chain of course includes actors who may be neither developers nor deployers, such as users, and many others including vendors, buyers, evaluators, testers, managers, and fiduciaries.

Executive Departments and Agencies, “Advancing Governance, Innovation, and Risk

Management for Agency Use of Artificial Intelligence” (Nov. 2023), at 16, https://ai.gov/wp-content/uploads/2023/11/AI-in-Government-Memo-Public-Comment.pdf [hereinafter “OMB Draft Memo”]. Some commenters focused particularly on pre-release evaluation for emergent risks. See, e.g., ARC Comment at 8 (“It is insufficient

to test whether an AI system is capable of dangerous behavior under the terms of its

intended deployment. Thorough dangerous capabilities evaluation must include

full red-teaming, with access to fine-tuning and other generally available specialized

tools.”); SaferAI Comment at 2 (Some of the measures that AI labs should conduct to

help mitigate AI risks are: “pre-deployment risk assessments; dangerous capabilities

evaluations; third-party model audits; safety restrictions on model usage; red-

teaming”).

42 See, e.g., Center for Data Innovation Comment at 7 (“[R]egulators should focus their

oversight on operators, the parties responsible for deploying algorithms, rather than

developers, because operators make the most important decisions about how their

algorithms impact society.”).

43 NIST AI RMF, Second Draft, at 6 Figure 2 (Aug. 18, 2022) (describing the AI lifecycle in seven stages: planning and design, collection and processing of data, building and training the model, verifying and validating the model, deployment, operation and monitoring, and use of the model/impact from the model), https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf.

44 See, e.g., ARC Comment at 8 (suggesting that because an AI system’s risk profile

changes with actual deployments “[i]t is insufficient to test whether an AI system is capable of dangerous behavior under the terms of its intended deployment.”); Boston

University and University of Chicago Researchers Comment at 1 (“mechanisms for

AI monitoring and accountability must be implemented throughout the lifecycle

of important AI systems…”); see also Center for Democracy & Technology (CDT) Comment at 26 (“Pre-deployment audits and assessments are not sufficient because they may not fully capture a model or system’s behavior after it is deployed and used in

particular contexts.”). See also, e.g., Murat Kantarcioglu Comment (individual comment

suggesting that “AI accountability mechanisms should cover the entire lifecycle of any

given AI system”).



2.5. FACILITATE INTERNAL AND INDEPENDENT EVALUATIONS

Commenters noted that self-administered AI system assessments are important for identifying risks and system