EC10CH18_Abadie ARI 28 June 2018 11:30

Annual Review of Economics

Econometric Methods

for Program Evaluation

Alberto Abadie

1

and Matias D. Cattaneo

2

1

Department of Economics, Massachusetts Institute of Technology, Cambridge,

2

Department of Economics and Department of Statistics, University of Michigan,

Annu. Rev. Econ. 2018. 10:465–503

The Annual Review of Economics is online at

economics.annualreviews.org

https://doi.org/10.1146/annurev-economics-

080217-053402

Copyright

c

2018 by Annual Reviews.

All rights reserved

JEL codes: C1, C2, C3, C54

Keywords

causal inference, policy evaluation, treatment effects

Abstract

Program evaluation methods are widely applied in economics to assess the

effects of policy interventions and other treatments of interest. In this article,

we describe the main methodological frameworks of the econometrics of

program evaluation. In the process, we delineate some of the directions

along which this literature is expanding, discuss recent developments, and

highlight specific areas where new research may be particularly fruitful.

465

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

1. INTRODUCTION

The nature of empirical research in economics has profoundly changed since the emergence in the

1990s of a new way to understand identification in econometric models. This approach emphasizes

the importance of heterogeneity across units in the parameters of interest and of the choice of the

sources of variation in the data that are used to estimate those parameters. Initial impetus came from

empirical research by Angrist, Ashenfelter, Card, Krueger and others, while Angrist, Heckman,

Imbens, Manski, Rubin, and others provided many of the early methodological innovations (see, in

particular, Angrist 1990, Angrist & Krueger 1991, Angrist et al. 1996, Card 1990, Card & Krueger

1994, Heckman 1990, Manski 1990; for earlier contributions, see Ashenfelter 1978, Ashenfelter

& Card 1985, Heckman & Robb 1985).

While this greater emphasis on identification has permeated all brands of econometrics and

quantitative social science, nowhere have the effects been felt more profoundly than in the field

of program evaluation—a domain expanding the social, biomedical, and behavioral sciences that

studies the effects of policy interventions. The policies of interest are often governmental pro-

grams, like active labor market interventions or antipoverty programs. In other instances, the term

policy is more generally understood to include any intervention of interest by public or private

agents or by nature.

In this article, we offer an overview of classical tools and recent developments in the economet-

rics of program evaluation and discuss potential avenues for future research. Our focus is on ex

post evaluation exercises, where the effects of a policy intervention are evaluated after some form

of the intervention (such as a regular implementation of the policy, an experimental study, or a

pilot study) is deployed and the relevant outcomes under the intervention are measured. Many

studies in economics and the social sciences aim to evaluate existing or experimentally deployed

interventions, and the methods surveyed in this article have been shown to have broad applicability

in these settings. In many other cases, however, economists and social scientists are interested in

ex ante evaluation exercises, that is, in estimating the effect of a policy before such policy is im-

plemented on the population of interest (e.g., Todd & Wolpin 2010). In the absence of measures

of the outcomes under the intervention of interest, ex ante evaluations aim to extrapolate outside

the support of the data. Often, economic models that precisely describe the behavior of economic

agents serve as useful extrapolation devices for ex ante evaluations. Methods that are suitable for

ex ante evaluation have been previously covered in the Annual Review of Economics (Arcidiacono &

Ellickson 2011, Berry & Haile 2016).

This distinction between ex ante and ex post program evaluations is closely related to often-

discussed differences between the so-called structural and causal inference schools in econometrics.

The use of economic models as devices to extrapolate outside the support of the data in ex ante

program evaluation is typically associated with the structural school, while ex post evaluations

that do not explicitly extrapolate using economic models of behavior are often termed causal or

reduced form.

1

Given that they serve related but quite distinct purposes, we find the perceived

conflict between these two schools to be rather artificial, and the debates about the purported

superiority of one of the approaches over the other largely unproductive.

Our goal in this article is to provide a summary overview of the literature on the econometrics

of program evaluation for ex post analysis and, in the process, to delineate some of the directions

1

As argued by Imbens (2010), however, reduced form is a misnomer relative to the literature on simultaneous equation models,

where the terms structural form and reduced form have precise meanings. Moreover, terms like structural model or causal

inference are nomenclature, rather than exclusive attributes of the literatures and methods that they refer to.

466 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

along which it is expanding, discussing recent developments and the areas where research may be

particularly fruitful in the near future.

Other recent surveys on the estimation of causal treatment effects and the econometrics of

program evaluation from different perspectives and disciplines include those by Abadie (2005a),

Angrist & Pischke (2008, 2014), Athey & Imbens (2017c), Blundell & Costa Dias (2009), DiNardo

& Lee (2011), Heckman & Vytlacil (2007), Hern

´

an & Robins (2018), Imbens & Rubin (2015),

Imbens & Wooldridge (2009), Lee (2016), Manski (2008), Pearl (2009), Rosenbaum (2002, 2010),

Van der Laan & Robins (2003), and VanderWeele (2015), among many others.

2. CAUSAL INFERENCE AND PROGRAM EVALUATION

Program evaluation is concerned with the estimation of the causal effects of policy interven-

tions. These policy interventions can be of very different natures depending on the context of the

investigation, and they are often generically referred to as treatments. Examples include condi-

tional transfer programs (Behrman et al. 2011), health care interventions (Finkelstein et al. 2012,

Newhouse 1996), and large-scale online A/B studies in which IP addresses visiting a particular

web page are randomly assigned to different designs or contents (see, e.g., Bakshy et al. 2014).

2.1. Causality and Potential Outcomes

We represent the value of the treatment by the random variable W. We aim to learn the effect of

changes in treatment status on some observed outcome variable, denoted by Y. Following Neyman

(1923), Rubin (1974), and many others, we use potential outcomes to define causal parameters:

Y

w

represents the potential value of the outcome when the value of the treatment variable, W,is

set to w. For each value of w in the support of W, the potential outcome Y

w

is a random variable

with a distribution over the population. The realized outcome, Y, is such that, if the value of the

treatment is equal to w for a unit in the population, then for that unit, Y = Y

w

, while other

potential outcomes Y

w

with w

= w remain counterfactual.

A strong assumption lurks implicit in the last statement. Namely, the realized outcome for

each particular unit depends only on the value of the treatment of that unit and not on the

treatment or on outcome values of other units. This assumption is often referred to as the stable

unit treatment value assumption (SUTVA) and rules out interference between units (Rubin 1980).

SUTVA is a strong assumption in many practical settings; for example, it may be violated in an

educational setting with peer effects. However, concerns about interference between units can

often be mitigated through careful study design (see, e.g., Imbens & Rubin 2015).

The concepts of potential and realized outcomes are deeply ingrained in economics. A demand

function, for example, represents the potential quantity demanded as a function of price. Quantity

demanded is realized for the market price and is counterfactual for other prices.

While, in practice, researchers may be interested in a multiplicity of treatments and outcomes,

we abstract from that in our notation, where Y and W are scalar random variables. In addition

to treatments and potential outcomes, the population is characterized by covariates X,a(k × 1)

vector of variables that are predetermined relative to the treatment. That is, while X and W may

not be independent (perhaps because X causes W, or perhaps because they share common causes),

the value of X cannot be changed by active manipulation of W. Often, X contains characteristics

of the units measured before W is known.

Although the notation allows the treatment to take on an arbitrary number of values, we

introduce additional concepts and notation within the context of a binary treatment, that is,

W ∈{0, 1}.Inthiscase,W = 1 often denotes exposure to an active intervention (e.g., participation

www.annualreviews.org

•

Econometric Methods for Program Evaluation 467

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

in an antipoverty program), while W = 0 denotes remaining at the status quo. For simplicity of

exposition, our discussion mostly focuses on the binary treatment case. The causal effect of the

treatment (or treatment effect) can be represented by the difference in potential outcomes, Y

1

−Y

0

.

Potential outcomes and the value of the treatment determine the observed outcome

Y = WY

1

+ (1 − W )Y

0

. 1.

Equation 1 represents what is often termed the fundamental problem of causal inference (Holland

1986). The realized outcome, Y, reveals Y

1

if W = 1andY

0

if W = 0. However, the unit-level

treatment effect, Y

1

−Y

0

, depends on both quantities. As a result, the value of Y

1

−Y

0

is unidentified

from observing (Y, W, X).

Beyond the individual treatment effects, Y

1

− Y

0

, which are identified only under assumptions

that are not plausible in most empirical settings, the objects of interest, or estimands, in program

evaluation are characteristics of the joint distribution of (Y

1

, Y

0

, W, X) in the sample or in the

population. For most of this review, we focus on estimands defined for a certain population of

interest from which we have extracted a random sample. Like in the work of Abadie et al. (2017a),

we say that an estimand is causal when it depends on the distribution of the potential outcomes,

(Y

1

, Y

0

) beyond its dependence on the distribution of (Y, W, X). This is in contrast to descriptive

estimands, which are characteristics of the distribution of (Y, W, X).

2

The average treatment effect (ATE),

τ

ATE

= E[Y

1

− Y

0

],

and the average treatment effect on the treated (ATET),

τ

ATET

= E[Y

1

− Y

0

|W = 1],

are causal estimands that are often of interest in the program evaluation literature. Under SUTVA,

ATE represents the difference in average outcomes induced by shifting the entire population from

the inactive to the active treatment. ATET represents the same object for the treated. To improve

the targeting of a program, researchers often aim to estimate ATE or ATET after accounting for a

set of units’ characteristics, X. Notice that ATE and ATET depend on the distribution of (Y

1

, Y

0

)

beyond their dependence on the distribution of (Y, W, X). Therefore, they are causal estimands

under the definition of Abadie et al. (2017a).

2.2. Confounding

Consider now the descriptive estimand,

τ = E[Y |W = 1] − E[Y |W = 0],

which is the difference in average outcomes for the two different treatment values. ATE and ATET

are measures of causation, while the difference in means, τ , is a measure of association. Notice

that

τ = τ

ATE

+ b

ATE

= τ

ATET

+ b

ATET

,2.

2

Abadie et al. (2017a) provide a discussion of descriptive and causal estimands in the context of regression analysis.

468 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

where b

ATE

and b

ATET

are bias terms given by

b

ATE

=

(

E[Y

1

|W = 1] − E[Y

1

|W = 0]

)

Pr(W = 0) 3.

+

(

E[Y

0

|W = 1] − E[Y

0

|W = 0]

)

Pr(W = 1) 4.

and

b

ATET

= E[Y

0

|W = 1] − E[Y

0

|W = 0]. 5.

Equations 2–5 combine into a mathematical representation of the often-invoked statement that

association does not imply causation, enriched with the statement that lack of association does

not imply lack of causation. If average potential outcomes are identical for treated and nontreated

units, an untestable condition, then the bias terms b

ATE

and b

ATET

disappear. However, if potential

outcomes are not independent of the treatment, then, in general, the difference in mean outcomes

between the treated and the untreated is not equal to the ATE or ATET. Lack of independence

between the treatment and the potential outcomes is often referred to as confounding. Confound-

ing may arise, for example, when information that is correlated with potential outcomes is used for

treatment assignment or when agents actively self-select into treatment based on their potential

gains.

Confounding is a powerful notion, as it explains departures of descriptive estimands from causal

ones. Directed acyclic graphs (DAGs; see Pearl 2009) provide graphical representations of causal

relationships and confounding.

A DAG is a collection of nodes and directed edges among nodes, with no directed cycles. Nodes

represent random variables, while directed edges represent causal effects not mediated by other

variables in the DAG. Moreover, a causal DAG must contain all causal effects among the variables

in the DAG, as well as all variables that are common causes of any pair of variables in the DAG

(even if common causes are unobserved).

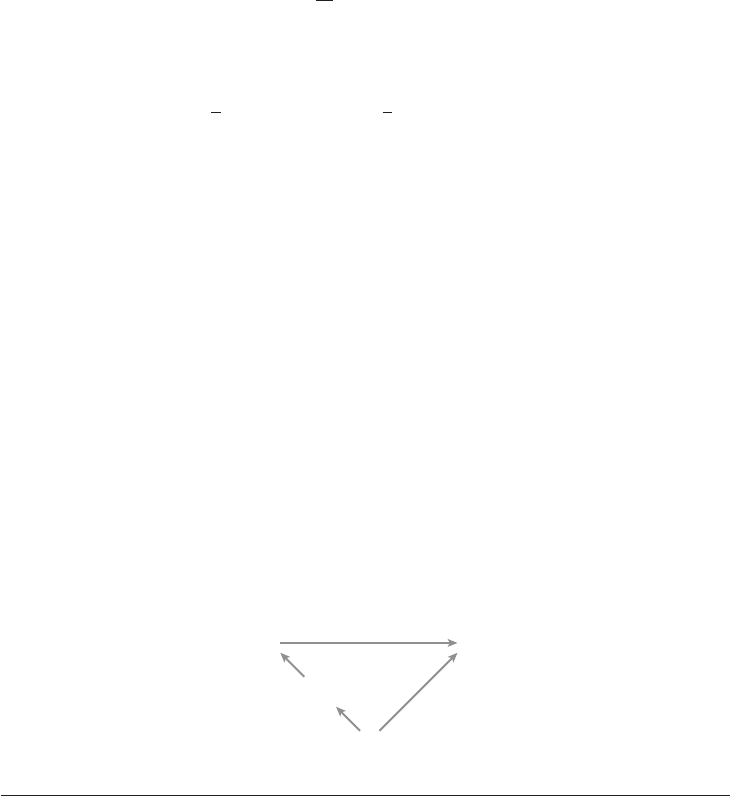

Consider the DAG in Figure 1. This DAG includes a treatment, W, and an outcome of interest,

Y.InthisDAG,W is not a cause of Y, as there is no directed edge from W to Y. The DAG also

includes a confounder, U. This confounder is a common cause of W and Y. Even when W does not

cause Y, these two variables may be correlated because of the confounding effect of U. A familiar

example of this structure in economics is Spence’s (1973) job-market signaling model, where W

is worker education; Y is worker productivity; and there are other worker characteristics, U,that

cause education and productivity (e.g., worker attributes that increase productivity and reduce

the cost of acquiring education). In this example, even when education does not cause worker

productivity, the two variables may be positively correlated.

The DAG in Figure 1 implies that Y

w

does not vary with w because W does not cause Y.

However, Y and W are not independent, as they share a common cause, U. Confounding may

arise in more complicated scenarios. In Section 4, we cover other forms of confounding that may

arise in DAGs. Confounding, of course, often coexists with true causal effects. That is, the DAG

in Figure 1 implies the presence of confounding regardless of whether there is a directed edge

from W to Y.

UW

Y

Figure 1

Confounding in a directed acyclic graph. The graph includes a treatment, W ; an outcome of interest, Y ;and

a confounder, U.

www.annualreviews.org

•

Econometric Methods for Program Evaluation 469

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

Beyond average treatment effects, other treatment parameters of interest depend on the dis-

tribution of (Y

1

, Y

0

). These include treatment effects on characteristics of the distribution of the

outcome other than the average (e.g., quantiles), as well as characteristics of the distribution of

the treatment effect, Y

1

− Y

0

(e.g., variance), other than its average. In Section 3, we elaborate

further on the distinction between treatment effects on the distribution of the outcome versus

characteristics of the distribution of the treatment effect.

3. RANDOMIZED EXPERIMENTS

Active randomization of the treatment provides the basis of what is arguably the most successful and

extended scientific research design for program evaluation. Often referred to as the gold standard

of scientific evidence, randomized clinical trials play a central role in the natural sciences, as well

as in the drug approval process in the United States, Europe, and elsewhere (Bothwell et al. 2016).

While randomized assignment is often viewed as the basis of a high standard of scientific evidence,

this view is by no means universal. Cartwright (2007), Heckman & Vytlacil (2007), and Deaton

(2010), among others, challenge the notion that randomized experiments occupy a preeminent

position in the hierarchy of scientific evidence, while Angrist & Pischke (2010), Imbens (2010),

and others emphasize the advantages of experimental designs.

3.1. Identification Through Randomized Assignment

Randomization assigns treatment using the outcome of a procedure (natural, mechanical, or elec-

tronic) that is unrelated to the characteristics of the units and, in particular, unrelated to potential

outcomes. Whether randomization is based on coin tossing, random number generation in a

computer, or the radioactive decay of materials, the only requirement of randomization is that

the generated treatment variable is statistically independent of potential outcomes. Complete (or

unconditional) randomization implies

(Y

1

, Y

0

) ⊥⊥ W,6.

where the symbol ⊥⊥ denotes statistical independence. If Equation 6 holds, we say that the treat-

ment assignment is unconfounded. In Section 4, we consider conditional versions of uncon-

foundedness where the value of the treatment W for each particular unit may depend on certain

characteristics of the units, and Equation 6 holds conditional on those characteristics.

The immediate consequence of randomization is that the bias terms in Equations 4 and 5 are

equal to zero:

b

ATE

= b

ATET

= 0,

which, in turn, implies

τ

ATE

= τ

ATET

= τ = E[Y |W = 1] − E[Y |W = 0]. 7.

Beyond average treatment effects, randomization identifies any characteristic of the marginal

distributions of Y

1

and Y

0

. In particular, the cumulative distributions of potential outcomes

F

Y

1

(y) = Pr(Y

1

≤ y)andF

Y

0

(y) = Pr(Y

0

≤ y) are identified by

F

Y

1

(y) = Pr(Y ≤ y|W = 1)

and

F

Y

0

(y) = Pr(Y ≤ y|W = 0).

470 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

While the average effect of a treatment can be easily described using τ

ATE

, it is more complicated

to establish a comparison between the entire distributions of Y

1

and Y

0

. To be concrete, suppose

that the outcome variable of interest, Y, is income. Then, an analyst may want to rank the income

distributions under the active intervention and in the absence of the active intervention. For that

purpose, it is useful to consider the following distributional relationships: (a) equality of distribu-

tions, F

Y

1

(y) = F

Y

0

(y) for all y ≥ 0; (b) first-order stochastic dominance (with F

Y

1

dominating F

Y

0

),

F

Y

1

(y) − F

Y

0

(y) ≤ 0 for all y ≥ 0; and (c) second-order stochastic dominance (with F

Y

1

dominating

F

Y

0

),

y

0

(F

Y

1

(z) − F

Y

0

(z)) dz ≤ 0 for all y ≥ 0.

Equality of distributions implies that the treatment has no effect on the distribution of the

outcome variable, in this case income. It implies τ

ATE

= 0 but represents a stronger notion of

null effect. Under mild assumptions, first- and second-order stochastic dominance imply that the

income distribution under the active treatment is preferred to the distribution of income in the

absence of the active treatment (see, e.g., Abadie 2002, Foster & Shorrocks 1988).

Equivalently, the distributions of Y

1

and Y

0

can be described by their quantiles. Quantiles of

Y

1

and Y

0

are identified by inverting F

Y

1

and F

Y

0

or, more directly, by

Q

Y

1

(θ) = Q

Y |W =1

(θ),

Q

Y

0

(θ) = Q

Y |W =0

(θ),

with θ ∈ (0, 1), where Q

Y

(θ) denotes the θ quantile of the distribution of Y. The effect of the

treatment on the θ quantile of the outcome distribution is given by the quantile treatment effect,

Q

Y

1

(θ) − Q

Y

0

(θ). 8.

Notice that, while quantile treatment effects are identified from Equation 6, quantiles of the

distribution of the treatment effect, Q

Y

1

−Y

0

(θ), are not identified. Unlike expectations, quantiles

are nonlinear operators, so in general, Q

Y

1

−Y

0

(θ) = Q

Y

1

(θ) − Q

Y

0

(θ). Moreover, while quantile

treatment effects depend only on the marginal distributions of Y

1

and Y

0

, which are identified

from Equation 6, quantiles of the distribution of treatment effects are functionals of the joint

distribution of (Y

1

, Y

0

), which involves information beyond the marginals. Because, even in a

randomized experiment, the two potential outcomes are never both realized for the same unit, the

joint distribution of (Y

1

, Y

0

) is not identified. As a result, quantiles of Y

1

− Y

0

are not identified

even in the context of a randomized experiment.

To gain intuitive understanding of this lack of identification result, consider an example where

the marginal distributions of Y

1

and Y

0

are identical, symmetric around zero, and nondegenerate.

Identical marginals are consistent with a null treatment effect, that is, Y

1

− Y

0

= 0 with probability

one. In this scenario, all treatment effects are zero. However, identical marginals and symmetry

around zero are also consistent with Y

1

=−Y

0

or, equivalently, Y

1

− Y

0

=−2Y

0

with probability

one, which leads to positive treatment effects for half of the population and negative treatment

effects for the other half. In the first scenario, the treatment does not change the value of the

outcome for any unit in the population. In contrast, in the second scenario, the locations of units

within the distribution of the outcome variable are reshuffled, but the shape of the distribution

does not change. While these two different scenarios imply different distributions of the treatment

effect, Y

1

− Y

0

, they are both consistent with the same marginal distributions of the potential

outcomes, Y

1

and Y

0

, so the distribution of Y

1

− Y

0

is not identified.

Although the distribution of treatment effects is not identified from randomized assignment

of the treatment, knowledge of the marginal distributions of Y

1

and Y

0

can be used to bound

functionals of the distribution of Y

1

− Y

0

(see, e.g., Fan & Park 2010, Firpo & Ridder 2008). In

www.annualreviews.org

•

Econometric Methods for Program Evaluation 471

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

particular, if the distribution of Y is continuous, then for each t ∈ R,

max

sup

y∈R

F

Y

1

(y) − F

Y

0

(y − t)

,0

≤ F

Y

1

−Y

0

(t) ≤ 1 + min

inf

y∈R

F

Y

1

(y) − F

Y

0

(y − t)

,0

9.

provides sharp bounds on the distribution of Y

1

− Y

0

. This expression can be applied to bound

the fraction of units with positive (or negative) treatment effects. B ounds on Q

Y

1

−Y

0

(θ)canbe

obtained by inversion.

3.2. Estimation and Inference in Randomized Studies

So far, we have concentrated on identification issues. We now turn to estimation and infer-

ence. Multiple modes of statistical inference are available for treatment effects (see Rubin 1990),

and our discussion mainly focuses on two of them: (a) sampling-based frequentist inference and

(b) randomization-based inference on a sharp null. We also briefly mention permutation-based

inference. However, because of space limitations, we omit Bayesian methods (see Rubin 1978,

2005) and finite-sample frequentist methods based on randomization (see Abadie et al. 2017a,

Neyman 1923). Imbens & Rubin (2015) give an overview of many of these methods.

3.2.1. Sampling-based frequentist inference. In the context of sampling-based frequentist

inference, we assume that data consist of a random sample of treated and nontreated units, and

that the treatment has been assigned randomly. For each unit i in the sample, we observe the value

of the outcome, Y

i

; the value of the treatment, W

i

; and, possibly, the values of a set of covariates,

X

i

. Consider the common scenario where randomization is carried out with a fixed number of

treated units, n

1

, and untreated units, n

0

, such that n = n

1

+ n

0

is the total sample size. We

construct estimators using the analogy principle (Manski 1988). That is, estimators are sample

counterparts of population objects that identify parameters of interest. In particular, Equation 7

motivates estimating τ = τ

ATE

= τ

ATET

using the difference in sample means of the outcome

between treated and nontreated,

τ =

1

n

1

n

i=1

W

i

Y

i

−

1

n

0

n

i=1

(1 − W

i

)Y

i

.

Under conventional regularity conditions, a large sample approximation to the sampling distri-

bution of τ is given by

τ − τ

se(τ )

d

−→ N (0, 1), 10.

where

se(τ ) =

σ

2

Y |W =1

n

1

+

σ

2

Y |W =0

n

0

1/2

, 11.

and σ

2

Y |W =1

and σ

2

Y |W =0

are the sample counterparts of the conditional variance of Y given W = 1

and W = 0, respectively.

The estimator τ coincides with the coefficient on W from a regression of Y on W and a con-

stant, and se(τ ) is the corresponding heteroskedasticity-robust standard error. Inference based on

Equations 10 and 11 has an asymptotic justification but can perform poorly in finite samples, espe-

cially if n

1

and n

0

differ considerably. Imbens & Koles

´

ar (2016) discuss small sample adjustments

to the t-statistic and its distribution and demonstrate that such adjustments substantially improve

coverage rates of confidence intervals in finite samples. In addition, more general sampling pro-

cesses (e.g., clustered sampling) or randomization schemes (e.g., stratified randomization) are

472 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

possible. They affect the variability of the estimator and, therefore, the standard error formulas

(for details, see Abadie et al. 2017b, Imbens & Rubin 2015).

Estimators of other quantities of interest discussed in Section 2 can be similarly constructed

using sample analogs. In particular, for any θ ∈ (0, 1), the quantile treatment effects estimator is

given by

Q

Y |W =1

(θ) −

Q

Y |W =0

(θ),

where

Q

Y |W =1

(θ)and

Q

Y |W =0

(θ) are the sample analogs of Q

Y |W =1

(θ)andQ

Y |W =0

(θ), respectively.

Sampling-based frequentist inference on quantile treatment effects follows from the results of

Koenker & Bassett (1978) on quantile inference for quantile regression estimators. Bounds on the

distribution of F

Y

1

−Y

0

can be computed by replacing the cumulative functions F

Y |W =1

and F

Y |W =0

in Equation 9 with their sample analogs,

F

Y |W =1

and

F

Y |W =0

, respectively. The estimators of the

cumulative distribution functions F

Y

1

and F

Y

0

(or, equivalently, estimators of the quantile functions

Q

Y

1

and Q

Y

0

) can also be applied to test for equality of distributions and first- and second-order

stochastic dominance (see, e.g., Abadie 2002).

3.2.2. Randomization inference on a sharp null. The testing exercise in Section 3.2.1 is based

on sampling inference. That is, it is based on the comparison of the value of a test statistic for the

sample at hand to its sampling distribution under the null hypothesis. In contrast, randomization

inference takes the sample as fixed and concentrates on the null distribution of the test statistic

induced by the randomization of the treatment. Randomization inference originated with Fisher’s

(1935) proposal to use the physical act of randomization as a reasoned basis for inference.

Consider the example in Panel A of Table 1. This panel shows the result of a hypothetical

experiment where a researcher randomly selects four individuals out of a sample of eight individuals

to receive the treatment and excludes the remaining four individuals from the treatment. For each

of the eight sample individuals, i = 1, ..., 8, the researcher observes treatment status, W

i

,and

the value of the outcome variable, Y

i

. For concreteness, we assume that the researcher adopts

the difference in mean outcomes between the treated and the nontreated as a test statistic for

randomization inference. The value of this statistic in the experiment is τ = 6.

The sample observed by the researcher is informative only about the value of τ obtained under

one particular realization of the randomized treatment assignment: t he one observed in practice.

There are, however, 70 possible ways to assign four individuals out of eight to the treatment group.

Let be the set of all possible randomization realizations. Each element ω of has probability

1/70. Consider Fisher’s null hypothesis that the treatment does not have any effect on the outcomes

of any unit, that is, Y

1i

= Y

0i

for each experimental unit. Notice that Fisher’s null pertains to the

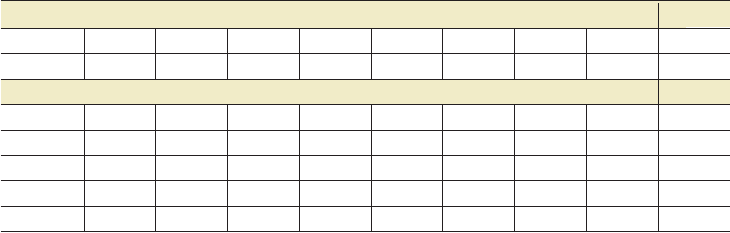

Table 1 Randomization distribution of a difference in means

Panel A: Sample and sample statistic

Y

i

12 4 6 10 6 0 1 1

W

i

1 1 1 1 0 0 0 0 τ = 6

Panel B: Randomization distribution τ (ω)

ω = 1 1 1 1 1 0 0 0 0 6

ω = 2 1 1 1 0 1 0 0 0 4

ω = 3 1 1 1 0 0 1 0 0 1

ω = 4 1 1 1 0 0 0 1 0 1.5

...ω = 70 0 0 0 0 1 1 1 1 −6

www.annualreviews.org

•

Econometric Methods for Program Evaluation 473

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

−8−6−4−202468

0

2

4

6

8

10

12

Dierence

in means

Frequency

τ(ω)

Figure 2

Randomization distribution of the difference in means. The vertical line represents the sample value of τ.

sample and not necessarily to the population. Under Fisher’s null, it is possible to calculate the value

τ (ω) that would have been observed for each possible realization of the randomized assignment,

ω ∈ . The distribution of τ (ω)inPanelBofTable 1 is called the randomization distribution

of τ. A histogram of the randomization distribution of τ is depicted in Figure 2. p-values for

one-sided and two-sided tests are calculated in the usual fashion: Pr(τ (ω) ≥ τ ) = 0.0429 and

Pr(|τ (ω)|≥|τ |) = 0.0857, respectively. These probabilities are calculated over the randomization

distribution. Because the randomization distribution under the null can be computed without

error, these p-values are exact.

3

Fisher’s null hypothesis of Y

1i

− Y

0i

= 0 for every experimental unit is an instance of a sharp

null, that is, a null hypothesis under which the values of the treatment effect for each unit in the

experimental sample are fixed. Notice that this sharp null, if extended to every population unit,

implies but is not implied by Neyman’s null, E[Y

1

]− E[Y

0

] = 0. However, a rejection of Neyman’s

null, using the t-statistic discussed above in the context of sampling-based frequentist inference,

does not imply that Fisher’s test will reject. This is explained by Ding (2017) using the power

properties of the two types of tests and illustrated by Young (2017).

3.2.3. Permutation methods. Some permutation methods are related to randomization tests

but rely on a sampling interpretation. They can be employed to evaluate the null hypothesis that

Y

1

and Y

0

have the same distribution. Notice that this is not a sharp hypothesis and that it pertains

to the population rather than to the sample at hand. These tests are based on the observation

that, under the null hypothesis, the distribution of the vector of observed outcomes is invariant

3

In a setting with many experimental units, it may be computationally costly to calculate the value of the test statistic under

all possible assignments. In those cases, the randomization distribution can be approximated from a randomly selected subset

of assignments.

474 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

under permutations. Ernst (2004) provides more discussion and comparisons between Fisher’s

randomization inference and permutation methods, and Bugni et al. (2018), who also include

further references on this topic, describe a recent application of permutation-based inference in

the context of randomized experiments.

3.3. Recent Developments

Looking ahead, the literature on the analysis and interpretation of randomized experiments re-

mains active, with several interesting developments and challenges still present beyond what is

discussed in this section. Athey & Imbens ( 2017a) provide a detailed introduction to the econo-

metrics of randomized experiments. Some recent topics in this literature include (a) decision

theoretic approaches to randomized experiments and related settings (Banerjee et al. 2017);

(b) design of complex or high-dimensional experiments accounting for new (big) data environments

such as social media or networks settings (Bakshy et al. 2014); (c) the role of multiple hypothesis

testing and alternative inference methods, such as model selection, shrinkage, and empirical Bayes

approaches (see, e.g., Abadie & Kasy 2017); and (d ) subgroup, dynamic, and optimal treatment

effect analysis, as well as related issues of endogenous stratification (see, e.g., Abadie et al. 2017c,

Athey & Imbens 2017b, Murphy 2003).

4. CONDITIONING ON OBSERVABLES

4.1. Identification by Conditional Independence

In the absence of randomization, the independence condition in Equation 6 is rarely justified,

and the estimation of treatment effect parameters requires additional identifying assumptions.

In this section, we discuss methods based on a conditional version of the unconfoundedness

assumption in Equation 6. We assume that potential outcomes are independent of the treatment

after conditioning on a set of observed covariates. The key underlying idea is that confounding, if

present, is fully accounted for by observed covariates.

Consider an example where newly admitted college students are awarded a scholarship on

the basis of the result of a college entry exam, X, and other student characteristics, V.Weusethe

binary variable W to code the receipt of a scholarship award. To investigate the effect of the award

on college grades, Y, one may be concerned about the potential confounding effect of precollege

academic ability, U, which may not be precisely measured by X. Confounding may also arise if V

has a direct effect on Y.

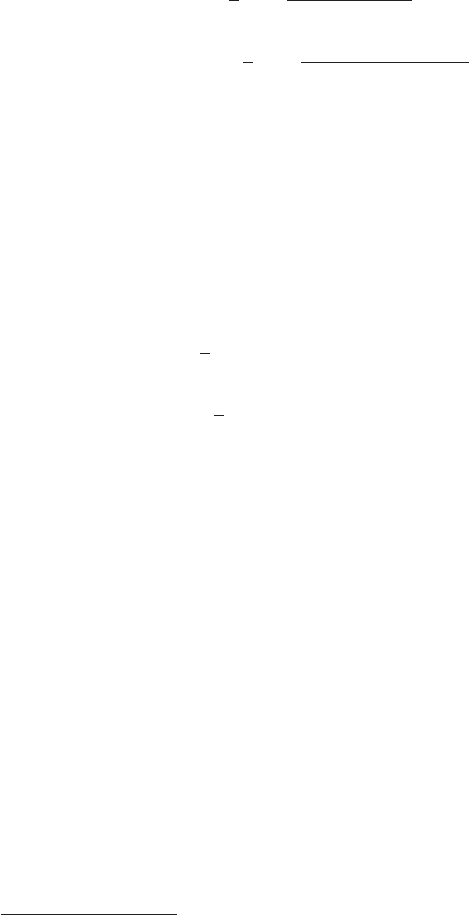

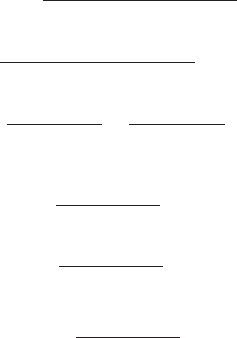

This setting is represented in the DAG in Figure 3a. Precollege academic ability, U, is a cause

of W, the scholarship award, through its effect on the college entry exam grade, X. Precollege

ability, U, is also a direct cause of college academic grades, Y. The confounding effect of U induces

statistical dependence between W and Y that is not reflective of the causal effect of W on Y.

In a DAG, a path is a collection of consecutive edges (e.g., X → W → Y ), and confounding

can generate from backdoor paths, that is, paths from the treatment, W, to the outcome, Y,that

start with incoming arrows. The path W ← X ← U → Y is a backdoor path in Figure 3a.An

additional backdoor path, W ← V → Y, emerges if other causes of the award, aside from the

college entry exam, also have a direct causal effect on college grades. The presence of backdoor

paths may create confounding, invalidating Equation 6.

Consider a conditional version of unconfoundedness,

(Y

1

, Y

0

) ⊥⊥ W |X. 12.

www.annualreviews.org

•

Econometric Methods for Program Evaluation 475

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

V

UXWY

a Confounding by U and V

UXWY

b Confounding by U only

Figure 3

Causal effect and confounding. The graphs show the result of a college entrance exam, X; other student

characteristics affecting college admission, V ; receipt of a scholarship award, W ; precollege academic ability,

U; and college grades, Y.(a) Confounding by U and V.(b) Confounding by U alone.

Equation 12 states that (Y

1

, Y

0

) is independent of W given X.

4

This assumption allows identification

of treatment effect parameters by conditioning on X. That is, controlling for X makes the treatment

unconfounded.

5

Along with the common support condition

0 < Pr(W = 1|X) < 1, 13.

Equation 12 allows identification of treatment effect parameters. Equation 12 implies

E[Y

1

− Y

0

|X] = E[Y |X , W = 1] − E[Y |X, W = 0].

Then, the common support condition in Equation 13 implies

τ

ATE

= E

E[Y |X, W = 1] − E[Y |X, W = 0]

14.

and

τ

ATET

= E

E[Y |X, W = 1] − E[Y |X, W = 0]|W = 1

. 15.

Other causal parameters of interest, like the quantile treatment effects in Equation 8, are identified

by the combination of unconfoundedness and common support (Firpo 2007).

The common support condition can be directly assessed in the data, as it depends on the

conditional distribution of X given W, which is identified by the joint distribution of (Y, W, X).

Unconfoundedness, however, is harder to assess, as it depends on potential outcomes that are

not always observed. Pearl (2009) and others have investigated graphical causal structures that

allow identification by conditioning on covariates. In particular, Pearl’s backdoor criterion (Pearl

1993) provides sufficient conditions under which treatment effect parameters are identified via

conditioning.

6

In our college financial aid example, suppose that other causes of the award, aside from the

college entry exam, do not have a direct effect on college grades (that is, all the effect of V on Y

is through W ). This is the setting in Figure 3b.

7

Using Pearl’s backdoor criterion, and provided

that a common support condition holds, it can be shown t hat the causal structure in Figure 3b

4

For the identification results stated below, it is enough to have unconfoundedness in terms of the marginal distributions of

potential outcomes, that is, Y

w

⊥⊥ W |X for w ={0, 1}.

5

The unconfoundedness assumption in Equation 12 is often referred to as conditional independence, exogeneity, selection

on observables, ignorability, or missing at random.

6

Pearl (2009) and Morgan & Winship (2015) provide detailed introductions to identification in DAGs, and Richardson

& Robins (2013) discuss the relationship between identification in DAGs and statements about conditional independence

between the treatment and potential outcomes similar to that in Equation 12.

7

Notice that the node representing V disappears from the DAG in Figure 3b. Because V causes only one variable in the

DAG, it cannot create confounding. As a result, it can be safely excluded from the DAG.

476 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

implies that treatment effect parameters are identified by adjusting for X, as in Equations 14 and

15. The intuition for this result is rather immediate. Precollege ability, U, is a common cause of W

and Y, and the only confounder in this DAG. However, conditioning on X blocks the path from

U to X. Once we condition on X, the variable U is no longer a confounder, as it is not a cause of

W. In contrast, if other causes of the award, aside from the college entry exam, have a direct effect

on college grades, as in Figure 3a, then Pearl’s backdoor criterion does not imply Equations 14

or 15. Additional confounding, created by V, is not controlled for by conditioning on X alone.

4.2. Regression Adjustments

There exists a wide array of methods for estimation and inference under unconfoundedness.

However, it is important to notice that, in the absence of additional assumptions, least squares

regression coefficients do not identify ATE or ATET. Consider a simple setting where X takes

on a finite number of values x

1

, ..., x

m

. Under Equations 12 and 13, ATE and ATET are given

by

τ

ATE

=

m

k=1

E[Y |X = x

k

, W = 1] − E[Y |X = x

k

, W = 0]

Pr(X = x

k

)

and

τ

ATET

=

m

k=1

E[Y |X = x

k

, W = 1] − E[Y |X = x

k

, W = 0]

Pr(X = x

k

|W = 1),

respectively. These identification results form the basis for the subclassification estimators of ATE

and ATET in the work of Cochran (1968) and Rubin (1977). One could, however, attempt to

estimate ATE or ATET using least squares. In particular, one could consider estimating τ

OLS

by

least squares in the regression equation

Y

i

= τ

OLS

W

i

+

m

k=1

β

OLS,k

D

ki

+ ε

i

,

under the usual restrictions on ε

i

,whereD

ki

is a binary variable that takes value one if X

i

= x

k

and zero otherwise. It can be shown that, in the absence of additional restrictive assumptions, τ

OLS

differs from τ

ATE

and τ

ATET

. More precisely, it can be shown that

τ

OLS

=

m

k=1

(

E[Y |X = x

k

, W = 1] − E[Y |X = x

k

, W = 0]

)

w

k

where

w

k

=

var(W |X = x

k

)Pr(X = x

k

)

m

r=1

var(W |X = x

r

)Pr(X = x

r

)

.

That is, τ

OLS

identifies a variance-weighted ATE (see, e.g., Angrist & Pischke 2008).

8

Even in this

simple setting, with a regression specification that is fully saturated in all values of X (including

a dummy variable for each possible value), τ

OLS

differs from τ

ATE

and τ

ATET

except for special cases

(e.g., when E[Y |X = x

k

, W = 1] − E[Y |X = x

k

, W = 0] is the same for all k).

8

It can be shown that var(W |X = x

r

) is maximal when Pr(W = 1|X = x

r

) = 1/2 and is decreasing as this probability moves

toward zero or one. That is, τ

OLS

weights up groups with X = x

k

, where the size of the treated and untreated populations are

roughly equal, and weights down groups with large imbalances in the sizes of these two groups.

www.annualreviews.org

•

Econometric Methods for Program Evaluation 477

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

4.3. Matching Estimators

Partly because of the failure of linear regression to estimate conventional treatment effect param-

eters like ATE and ATET, researchers have resorted to flexible and nonparametric methods like

matching.

4.3.1. Matching on covariates. Matching estimators of ATE and ATET can be constructed in

the following manner. First, for each sample unit i, select a unit j(i) in the opposite treatment

group with similar covariate values. That is, select j (i) such that W

j(i)

= 1 − W

i

and X

j(i)

X

i

.

Then, a one-to-one nearest-neighbor matching estimator of ATET can be obtained as the average

of the differences in outcomes between the treated units and their matches,

τ

ATET

=

1

n

1

n

i=1

W

i

(Y

i

− Y

j(i)

). 16.

An estimator of ATE can be obtained in a similar manner, but using the matches for both treated

and nontreated:

τ

ATE

=

1

n

n

i=1

W

i

(Y

i

− Y

j(i)

) −

1

n

n

i=1

(1 − W

i

)(Y

i

− Y

j(i)

). 17.

Nearest-neighbor matching comes in many varieties. It can be done with replacement (that is,

potentially using each unit in the control group as a match more than once) or without, with only

one or several matches per unit (in which case, the outcomes of the matches for each unit are

usually averaged before inserting them into Equations 16 and 17), and there exist several potential

choices for the distance that measures the discrepancies in the values of the covariates between

units [like the normalized Euclidean distance and the Mahalanobis distance (see Abadie & Imbens

2011, Imbens 2004)].

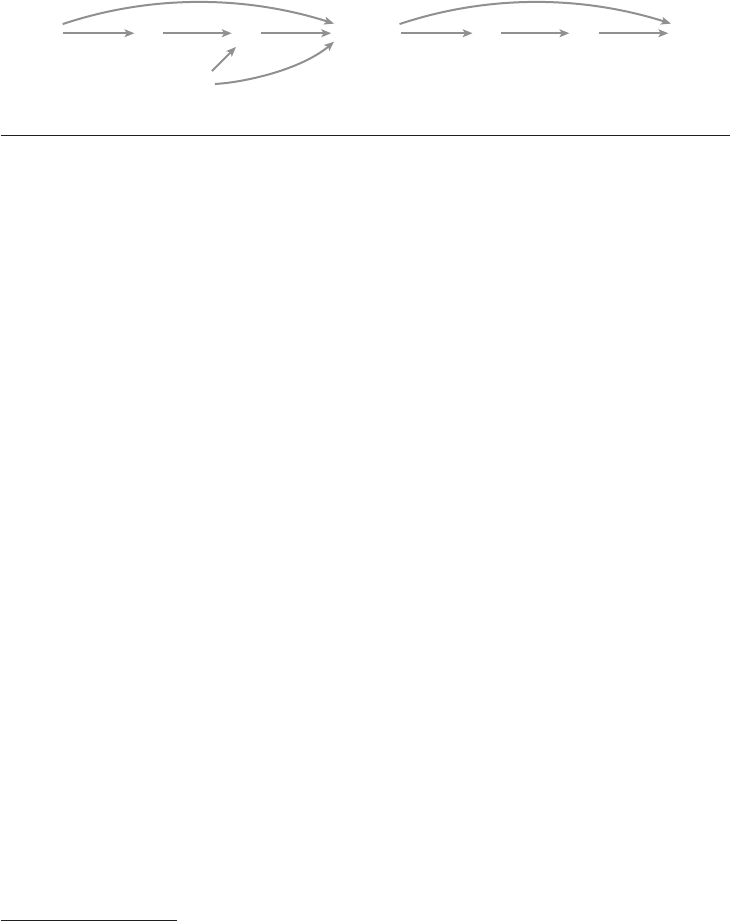

4.3.2. Matching on the propensity score. Matching on the propensity score is another com-

mon variety of matching method. Rosenbaum & Rubin (1983) define the propensity score as the

conditional probability of receiving the treatment given covariate values

p(X) = Pr(W = 1|X).

Rosenbaum & Rubin (1983) prove that, if the conditions in Equations 12 and 13 hold, then the

same conditions hold after replacing X with the propensity score p(X). An implication of this

result is that, if controlling for X makes the treatment unconfounded, then controlling for p(X)

makes the treatment unconfounded, as well. Figure 4 provides intuition for this result. The entire

effect of X on W is mediated by the propensity score. Therefore, after conditioning on p(X ), the

covariate X is no longer a confounder. In other words, conditioning on the propensity score blocks

W

X

p(X)

Y

Figure 4

Identification by conditioning on the propensity score, p(X).

478 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

the backdoor path between W and Y. Therefore, any remaining statistical dependence between

W and Y is reflective of the causal effect of W on Y. The propensity score result of Rosenbaum

& Rubin (1983) motivates matching estimators that match on the propensity score, p(X), instead

of matching on the entire vector of covariates, X. Matching on the propensity score reduces the

dimensionality of the matching variable, avoiding biases generated by curse of dimensionality

issues (see, e.g., Abadie & Imbens 2006). In most practical settings, however, the propensity score

is unknown. Nonparametric estimation of the propensity score brings back dimensionality issues.

In some settings, estimation of the propensity score can be guided by institutional knowledge about

the process that produces treatment assignment (elicited from experts in the subject matter). In

empirical practice, the propensity score is typically estimated using parametric methods like probit

or logit.

Abadie & Imbens (2006, 2011, 2012) derive large sample results for estimators that match

directly on the covariates, X. Abadie & Imbens (2016) provide large sample results for the case

where matching is done on an estimated propensity score (for reviews and further references on

propensity score matching estimators, see I mbens & Rubin 2015, Rosenbaum 2010).

4.4. Inverse Probability Weighting

Inverse probability weighting (IPW) methods (see Hirano et al. 2003, Robins et al. 1994) are also

based on the propensity score and provide an alternative to matching estimators. This approach

proceeds by first obtaining estimates of propensity score values, p(X

i

),andthenusingthose

estimates to weight outcome values. For example, an IPW estimator of ATE is

τ

ATE

=

1

n

n

i=1

W

i

Y

i

p(X

i

)

−

1

n

n

i=1

(1 − W

i

) Y

i

1 − p(X

i

)

.

This estimator uses 1/p(X

i

) to weigh observations in the treatment group and 1/(1 − p(X

i

)) to

weigh observations in the untreated group. Intuitively, observations with large p(X

i

) are overrep-

resented in the treatment group and thus weighted down when treated. The same observations

are weighted up when untreated. The opposite applies to observations with small p(X

i

). This

estimator can be modified so that the weights in each treatment arm sum to one, which produces

improvements in finite sample performance (see, e.g., Busso et al. 2014).

4.5. Imputation and Projection Methods

Imputation and projection methods (e.g., Cattaneo & Farrell 2011, Heckman et al. 1998, Im-

bens et al. 2006, Little & Rubin 2002) provide an alternative class of estimators that rely on the

assumptions in Equations 12 and 13. Regression imputation estimators are based on prelimi-

nary estimates of the outcome process, that is, the conditional distribution of Y given (X, W ).

Let μ

1

(x)andμ

0

(x) be parametric or nonparametric estimators of E[Y |X = x, W = 1] and

E[Y |X = x, W = 0], respectively. Then, an empirical analog of Equation 14 provides a projec-

tion and imputation estimator of ATE:

τ

ATE

=

1

n

n

i=1

(

μ

1

(X

i

) − μ

0

(X

i

)

)

.

Similarly, a projection and imputation estimator of ATET is given by the empirical analog of

Equation 15.

www.annualreviews.org

•

Econometric Methods for Program Evaluation 479

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

4.6. Hybrid Methods

Several hybrid methods combine matching and propensity score weighting with projection and

imputation techniques. Among them are the doubly robust estimators (or, more generally, locally

robust estimators) of Van der Laan & Robins (2003), Bang & Robins (2005), Cattaneo (2010),

Farrell (2015), Chernozhukov et al. (2016), and Sloczynski & Wooldridge (2017). In the case of

ATE estimation, for example, they can take the form

τ

ATE

=

1

n

n

i=1

W

i

(Y

i

− μ

1

(X

i

))

p(X

i

)

+ μ

1

(X

i

)

−

1

n

n

i=1

(1 − W

i

)(Y

i

− μ

0

(X

i

))

1 − p(X

i

)

+ μ

0

(X

i

)

.

This estimator is doubly robust in the sense that consistent estimation of p(·) or consistent estima-

tion of μ

1

(·)andμ

0

(·) is required for the validity of the estimator. Part of the appeal of doubly and

locally robust methods comes from the efficiency properties of the estimators. However, relative

to other methods discussed in this section, doubly robust methods require estimating not only p(·)

or μ

1

(·)andμ

0

(·), but all of these functions. As a result, relative to other methods, doubly robust

estimators involve additional tuning parameters and implementation choices. Closely related to

doubly and locally robust methods are the bias-corrected matching estimators of Abadie & Imbens

(2011). These estimators include a regression-based bias correction designed to purge matching

estimators from the bias that arises from imperfect matches in the covariates,

τ

ATE

=

1

n

n

i=1

W

i

(Y

i

− Y

j(i)

) − (μ

0

(X

i

) − μ

0

(X

j(i)

))

−

1

n

n

i=1

(1 − W

i

)

(Y

i

− Y

j(i)

) − (μ

1

(X

i

) − μ

1

(X

j(i)

))

.

4.7. Comparisons of Estimators

Busso et al. (2014) study the finite sample performance of matching and IPW estimators, as well

as some of their variants. Kang & Schafer (2007) and the accompanying discussions and rejoinder

provide finite sample evidence on the performance of double robust estimators and other related

methods.

9

As these and other studies demonstrate, the relative performance of estimators based on con-

ditioning on observables depends on the features of the data generating process. In finite samples,

IPW estimators become unstable when the propensity score approaches zero or one, while regres-

sion imputation methods may suffer from extrapolation biases. Estimators that match directly on

covariates do not require specification choices but may incorporate nontrivial biases if the quality

of the matches is poor. Hybrid methods, such as bias-corrected matching estimators or doubly ro-

bust estimators, include safeguards against bias caused by imperfect matching and misspecification

but impose additional specification choices that may affect the resulting estimate.

Apart from purely statistical properties, estimators based on conditioning on covariates differ

in the way they are related to research transparency. In particular, during the design phase of

a study (i.e., sample, variable, and model selection), matching and IPW methods employ only

9

Dehejia & Wahba (1999), Smith & Todd (2005), and others evaluate the performance of some of the estimators in this

section against an experimental benchmark.

480 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

information about treatment, W, and covariates, X. That is, the matches and the specification

for the propensity score can be constructed without knowledge or use of the outcome variable.

This feature of matching and IPW estimators provides a potential safeguard against specification

searches and p-hacking, a point forcefully made by Rubin (2007).

4.8. Recent Developments and Additional Topics

All of the estimation and inference methods discussed above take the covariates, X, as known and

small relative to the sample size, although, in practice, researchers often use high-dimensional

models with the aim of using a setting where the conditional independence assumption is deemed

appropriate. In most applications, X includes both raw preintervention covariates and transfor-

mations thereof, such as dummy variable expansions of categorical variables, power or other series

expansions of continuous variables, higher-order interactions, or other technical regressors gen-

erated from the original available variables. The natural tension between standard theory (where

the dimension of X is taken to be small) and practice (where the dimension of X tends to be large)

has led to a new literature that aims to develop estimation and inference methods in program

evaluation that account and allow for high-dimensional X.

Belloni et al. (2014) and Farrell (2015) develop new program evaluation methods employing

machinery from the high-dimensional literature in statistics. This methodology, which allows for

very large X (much larger than the sample size), proceeds in two steps. First, a parsimonious

model is selected from the large set of preintervention covariates employing model selection

via least absolute shrinkage and selection operator (LASSO). Then, treatment effect estimators

are constructed using only the small (usually much smaller than the sample size) set of selected

covariates. More general methods and a review of this literature are given by Belloni et al. (2017)

and Athey et al. (2016), among others. Program evaluation methods proposed in this literature

employ modern model selection techniques, which require a careful analysis and interpretation but

often ultimately produce classical distributional approximations (in the sense that these asymptotic

approximations do not change relative to results in low-dimensional settings).

Cattaneo et al. (2017a; 2018c,d) develop program evaluation methods where the distributional

approximations do change relative to low-dimensional results due to the inclusion of many covari-

ates in the estimation. These results can be understood as giving new distributional approximations

that are robust to (i.e., also valid in) cases where either the researcher cannot select out covariates

(e.g., when many multiway fixed effects are needed) or many covariates remain included in the

model even after model selection. These high-dimensional methods not only are valid when many

covariates are included, but also continue to be valid in cases where only a few covariates are used,

thereby offering demonstrable improvements for estimation and inference in program evaluation.

Related kernel-based methods are developed by Cattaneo & Jansson (2018).

All of the ideas and methods discussed above are mostly concerned with average treatment

effects in the context of binary treatments. Many of these results have been extended to multivalued

treatments (Cattaneo 2010, Farrell 2015, Hirano & Imbens 2004, Imai & van Dyk 2004, Imbens

2000, Lechner 2001, Yang et al. 2016), to quantiles and related treatment effects (Cattaneo 2010,

Firpo 2007), and to the analysis and interpretation of counterfactual distributional treatment

effects (Chernozhukov et al. 2013, DiNardo et al. 1996, Firpo & Pinto 2016). We do not discuss

this work due to space limitations.

Space restrictions also prevent us from discussing in detail other strands of the literature on es-

timation of treatment effects based on conditioning on covariates. In particular, in the biostatistics

literature, structural nested models, marginal structural models, and optimal treatment regimes

estimators are employed to estimate treatment effects in contexts with time-varying treatments and

www.annualreviews.org

•

Econometric Methods for Program Evaluation 481

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

confounders (see, e.g., Lok et al. 2004, Murphy 2003, Robins 2000). Also, a large literature on sen-

sitivity to unobserved confounders analyzes the impact of departures from the unconfoundedness

assumption in Equation 12 (see, e.g., Altonji et al. 2005, Imbens 2003, Rosenbaum 2002).

5. DIFFERENCE IN DIFFERENCES AND SYNTHETIC CONTROLS

5.1. Difference in Differences

In the previous section, we consider settings where treatment assignment is confounded but where

there exists a set of observed covariates, X, such that treatment assignment becomes unconfounded

after conditioning on X. In many applied settings, however, researchers confront the problem of the

possible existence of unobserved confounders. In these settings, difference-in-differences models

aim to attain identification by restricting the way in which unobserved confounders affect the

outcome of interest over time. Consider the following panel data regression model,

Y

it

= W

it

τ

it

+ μ

i

+ δ

t

+ ε

it

,

where only Y

it

and W

it

are observed. We regard the mapping represented in this equation as

structural (or causal). That is, the equation describes potential outcomes, which are now indexed

by time period, t,

Y

0it

= μ

i

+ δ

t

+ ε

it

,

Y

1it

= τ

it

+ μ

i

+ δ

t

+ ε

it

. 18.

Then, we have τ

it

= Y

1it

− Y

0it

. To simplify the exposition, and because it is a common setting in

empirical practice, we assume that there are only two time periods. Period t = 0 is the pretreatment

period, before the treatment is available, so W

i0

= 0 for all i. Period t = 1 is the posttreatment

period, when a fraction of the population units are exposed to the treatment. δ

t

is a time effect,

common across units. We treat μ

i

as a time invariant confounder, so μ

i

and W

i1

are not inde-

pendent. In contrast, we assume that ε

it

are causes of the outcome that are unrelated to selection

for treatment, so E[ε

it

|W

it

] = E[ε

it

]. This condition can be weakened to E[ε

i1

|W

it

] = E[ε

i1

],

where is the first difference operator, so ε

i1

= ε

i1

− ε

i0

. This type of structure is the same as

the widespread linear fixed effect panel data model (see, e.g., Arellano 2003). Then, we have

E[Y

i1

|W

i1

= 1] = E[τ

i1

|W

i1

= 1] + E[μ

i

|W

i1

= 1] + δ

1

+ E[ε

i1

]

E[Y

i0

|W

i1

= 1] = E[μ

i

|W

i1

= 1] + δ

0

+ E[ε

i0

]

E[Y

i1

|W

i1

= 0] = E[μ

i

|W

i1

= 0] + δ

1

+ E[ε

i1

]

E[Y

i0

|W

i1

= 0] = E[μ

i

|W

i1

= 0] + δ

0

+ E[ε

i0

].

In this model, the effect of the unobserved confounders on the average of the outcome variable

is additive and does not change in time. It follows that

τ

ATET

= E[τ

i1

|W

i1

= 1]

=

E[Y

i1

|W

i1

= 1] − E[Y

i1

|W

i1

= 0]

−

E[Y

i0

|W

i1

= 1] − E[Y

i0

|W

i1

= 0]

=

E[Y

i1

|W

i1

= 1] −

E

[

Y

i1

|W

i1

= 0

]

. 19.

The reason for the name difference in differences is apparent from Equation 19. Notice that τ

ATET

is defined here for the posttreatment period, t = 1. Intuitively, identification in the difference-

in-differences model comes from a common trends assumption implied by Equation 18. It can be

482 Abadie

·

Cattaneo

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

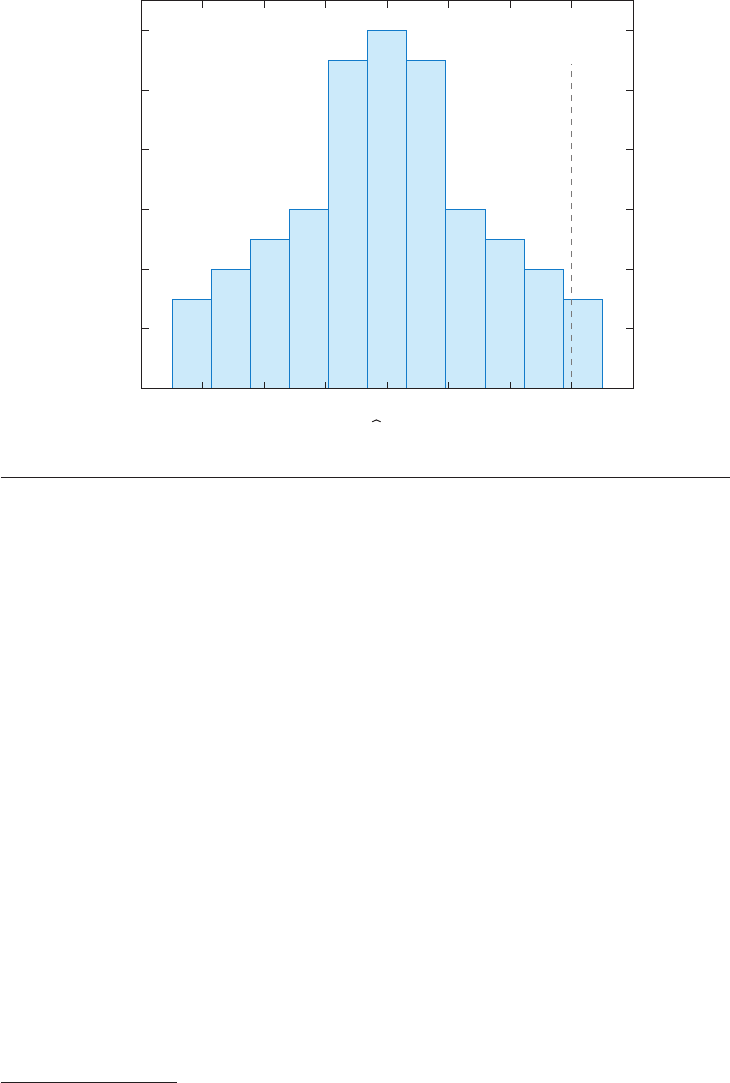

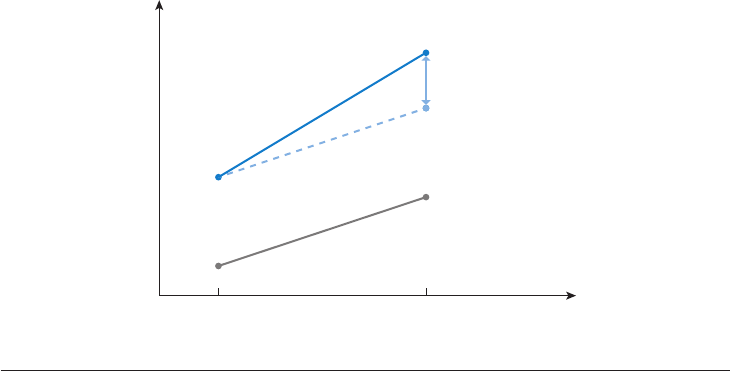

EC10CH18_Abadie ARI 28 June 2018 11:30

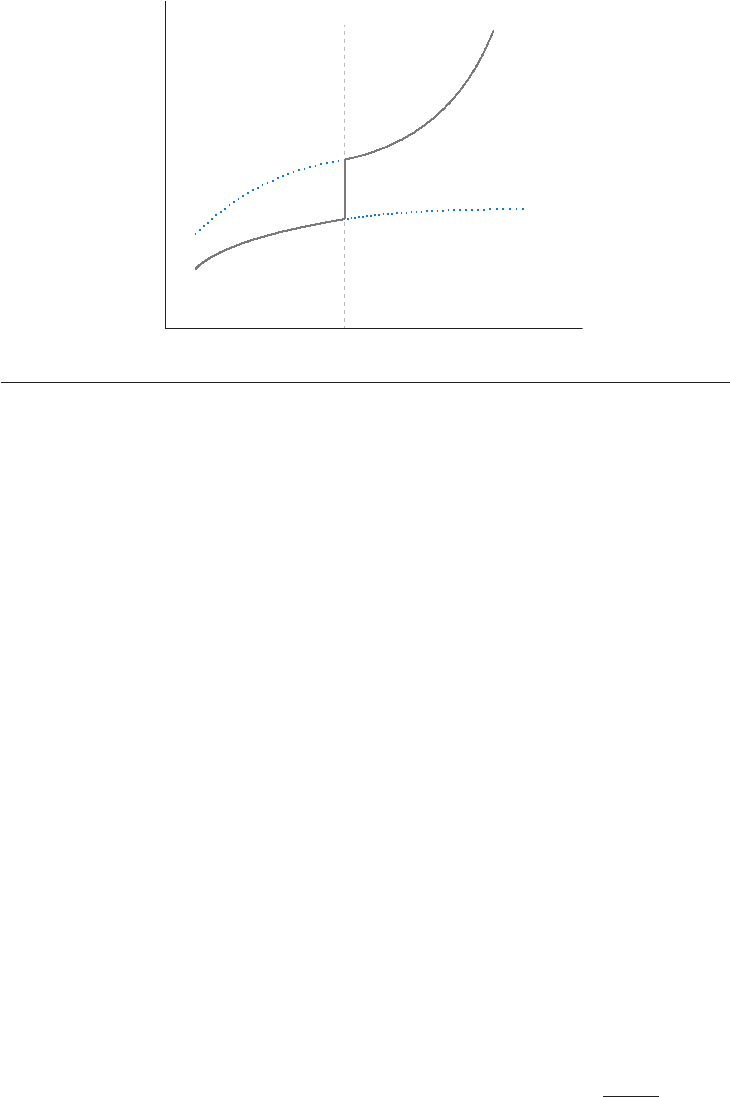

t = 0

Time

t = 1

E[τ

i1

|W

i1

= 1]

E[Y

it

|W

i1

= 1]

E[Y

it

|W

i1

= 0]

Figure 5

Identification in a difference-in-differences model. The dashed line represents the outcome that the treated

units would have experienced in the absence of the treatment.

easily seen that Equation 18, along with E[ε

i1

|W

it

] = E[ε

i1

], implies

E[Y

0i1

|W

it

= 1] = E[Y

0i1

|W

it

= 0]. 20.

That is, in the absence of the treatment, the average outcome for the treated and the average

outcome for the nontreated would have experienced the same variation over time.

Figure 5 illustrates how identification works in a difference-in-differences model. In this set-

ting, a set of untreated units identifies the average change that the outcome for the treated units

would have experienced in the absence of the treatment. The set of untreated units selected to

reproduce the counterfactual trajectory of the outcome for the treated is often called the control

group in this literature (borrowing from the literature on randomized controlled trials).

Notice also that the common trend assumption in Equation 20 is not invariant to nonlinear

transformations of the dependent variable. For example, if Equation 20 holds when the outcome is a

wage rate measured in levels, then the same equation will not hold in general for wages measured

in logs. In other words, identification in a difference-in-differences model is not invariant to

nonlinear transformations in the dependent variable.

In a panel data regression (that is, in a setting with repeated pre- and posttreatment observations

for t he same units), the right-hand side of Equation 19 is equal to the regression coefficient on W

i1

in a regression of Y

i1

on W

i1

and a constant. Consider, instead, a regression setting with pooled

cross-sections for the outcome variable at t = 0andt = 1 and information on W

i1

for all of the

units in each cross-section. In this setting, one cross-section contains information on (Y

i0

, W

i1

)

and the other cross-section contains information on (Y

i1

, W

i1

). Let T

i

be equal to zero if unit i

belongs to the pretreatment sample and equal to one if it belongs to the posttreatment sample.

Then, the right-hand side of Equation 19 is equal to the coefficient on the interaction W

i1

T

i

in

a regression of the outcome on a constant, W

i1

, T

i

, and the interaction W

i1

T

i

for a sample that

pools the preintervention and postintervention cross-sections.

Several variations of the basic difference-in-differences model have been proposed in the lit-

erature. In particular, the model naturally extends to a fixed-effects regression in settings with

more than two periods or models with unit-specific linear trends (see, e.g., Bertrand et al. 2004).

www.annualreviews.org

•

Econometric Methods for Program Evaluation 483

Annu. Rev. Econ. 2018.10:465-503. Downloaded from www.annualreviews.org

Access provided by Massachusetts Institute of Technology (MIT) on 03/19/19. For personal use only.

EC10CH18_Abadie ARI 28 June 2018 11:30

Logically, estimating models with many time periods or trends imposes greater demands on the

data.

The common trends restriction in Equation 20 is clearly a strong assumption and one that

should be assessed in empirical practice. The plausibility of this assumption can sometimes be

evaluated using (a) multiple preintervention periods (like in Abadie & Dermisi 2008) or (b) popu-

lation groups that are not at risk of being exposed to the treatment (like in Gruber 1994). In both

cases, a test for common trends is based on the difference-in-differences estimate of the effect of

a placebo intervention, that is, an intervention that did not happen. In the first case, the placebo

estimate is computed using the preintervention data alone and evaluates the effect of a nonexistent

intervention taking place before the actual intervention period. Rejection of a null effect of the

placebo intervention provides direct evidence against common trends before the intervention.

The second case is similar but uses an estimate obtained for a population group known not to be

amenable to receiving the treatment. For example, Gruber (1994) uses difference in differences to

evaluate the effect of the passage of mandatory maternity benefits in some US states on the wages

of married women of childbearing age. In this context, Equation 20 means that, in the absence of

the intervention, married women of childbearing age would have experienced the same increase in

log wages in states that adopted mandated maternity benefits and states that did not. To evaluate

the plausibility of this assumption, Gruber (1994) compares the changes in log wages in adopting

and nonadopting states for single men and women over 40 years old.

The plausibility of Equation 20 may also be questioned if the treated and the control groups

are different in the distribution of attributes that are known or suspected to affect the outcome

trend. For example, if average earnings depend on age or on labor market experience in a nonlinear

manner, then differences in the distributions of these two variables between treated and nontreated

in the evaluation of an active labor market program pose a threat to the validity of the conventional

difference-in-differences estimator. Abadie (2005b) proposes a generalization of the difference-in-

differences model for the case when covariates explain the differences in the trends of the outcome

variable between treated and nontreated. The resulting estimators adjust the distribution of the

covariates between treated and nontreated using propensity score weighting.

Finally, Athey & Imbens (2006) provide a generalization of the difference-in-differences model

to the case when Y

0it

is nonlinear in the unobserved confounder, μ

i

. Identification in this model

comes from strict monotonicity of Y

0it

with respect to μ

i

and from the assumption that the distri-

bution of μ

i

is time invariant for the treated and the nontreated (although it might differ between

the two groups). One of the advantages of their approach is that it provides an identification result

that is robust to monotonic transformations of the outcome variable [e.g., levels, Y

it

, versus logs,

log(Y

it

)].

5.2. Synthetic Controls

Difference-in-differences estimators are often used to evaluate the effects of events or interventions

that affect entire aggregate units, such as states, school districts, or countries (see, e.g., Card 1990,

Card & Krueger 1994). For example, Card (1990) estimates the effect of the Mariel Boatlift, a

large and sudden influx of immigrants to Miami, on the labor market outcomes of native workers

in Miami. In a difference-in-differences design, Card (1990) uses a combination of four other cities

in the south of the United States (Atlanta, Los Angeles, Houston, and Tampa-St. Petersburg) to

approximate the change in native unemployment rates that would have been observed in Miami in

the absence of the Mariel Boatlift. Studies of this type are sometimes referred to as comparative case

studies because a comparison group is selected to evaluate an instance or case of an event or policy

of interest. In the work of Card (1990), the case of interest is the arrival of Cuban immigrants to

484 Abadie

·

Cattaneo