PATIENT-FOCUSED DRUG DEVELOPMENT

GUIDANCE PUBLIC WORKSHOP

Incorporating Clinical Outcome Assessments into Endpoints for Regulatory Decision-Making

Workshop Date: December 6, 2019

Discussion Document for Patient-Focused Drug Development Public Workshop on Guidance 4:

INCORPORATING CLINICAL OUTCOME ASSESSMENTS INTO ENDPOINTS FOR REGULATORY DECISION-MAKING

TABLE OF CONTENTS

I. INTRODUCTION............................................................................................................. 1

A. Guidance Series ................................................................................................................. 1

B. Document Summary ......................................................................................................... 2

II. ESTIMAND FRAMEWORK OVERVIEW .................................................................. 3

A. COA Research Objective: Foundation for Your Work ................................................ 4

B. Target Study Population: In Whom Are You Going to Do the Research and Which

Subject Records Are in the Analysis? ............................................................................. 5

C. Endpoint of Interest: What Are You Testing or Measuring in the Target Study

Population? ........................................................................................................................ 6

1. Endpoint Definition(s) ............................................................................................... 6

2. Pooling Different Tools and/or Different Concepts to Construct the Endpoint ........ 6

Correlation of Subcomponents and Effect on Power and Type 1 Error ................ 6

Multidomain Responder Index .............................................................................. 7

Personalized Endpoints ......................................................................................... 8

Pooling Scores Across Reporters .......................................................................... 8

Pooling Across Delivery Modes (Same Tool, Same Reporter) ............................. 8

3. Timing of Assessments ............................................................................................... 9

4. Defining Improvement and Worsening .................................................................... 10

5. Clinical Trial Duration and COA-Based Endpoints ................................................ 10

D. Intercurrent Events: What Can Affect Your Measurement’s Interpretation? ........ 11

1. Use of Assistive Devices, Concomitant Medications, and Other Therapies ............ 11

2. Impact of Disease/Condition Progression, Treatment, and Potential Intercurrent

Events ....................................................................................................................... 12

3. Practice Effects ........................................................................................................ 12

4. Participant Burden................................................................................................... 14

5. Mode of Administration ........................................................................................... 15

6. Missing Data and Event-Driven COA Reporting .................................................... 15

7. Missing Scale-Level Data ........................................................................................ 15

E. Population-Level Summary: What Is the Final Way All Data Are Summarized and

Analyzed?......................................................................................................................... 16

1. Landmark Analysis................................................................................................... 16

2. Analyzing Ordinal Data ........................................................................................... 16

3. Time-to-Event Analysis ............................................................................................ 16

4. Responder Analyses and Percent Change From Baseline ....................................... 17

III. MEANINGFUL WITHIN-PATIENT CHANGE ........................................................ 18

A. Anchor-Based Methods to Establish Meaningful Within-Patient Change ................ 19

B. Using Empirical Cumulative Distribution Function and Probability Density

Function Curves to Supplement Anchor-Based Methods ........................................... 20

C. Other Methods ................................................................................................................ 22

1. Potentially Useful Emerging Methods ..................................................................... 22

2. Distribution-Based Methods .................................................................................... 22

3. Receiver Operator Characteristic Curve Analysis .................................................. 22

D. Applying Within-Patient Change to Clinical Trial Data............................................. 22

IV. ADDITIONAL CONSIDERATIONS ........................................................................... 25

A. Other Study Design Considerations .............................................................................. 26

B. Formatting and Submission Considerations ................................................................ 27

APPENDIX 1: CASE STUDY OF ESTIMAND FRAMEWORK ......................................... 29

A. Example Research Objective ......................................................................................... 29

1. Define COA Scientific Research Question A Priori ................................................ 30

2. Define Target Study Population Based on the Research Question A Priori ........... 30

3. Define Endpoint of Interest Based on the Research Question A Priori ................... 31

4. Address Intercurrent Events in Alignment with the Research Question .................. 32

5. Define Population-Level Summary Based on Research Question A Priori ............. 33

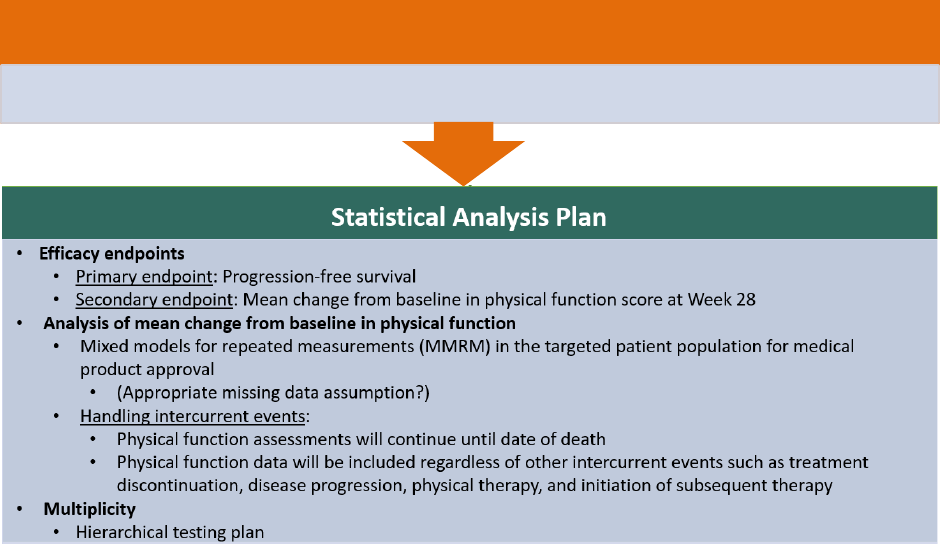

6. Prespecify Statistical Analysis Plan......................................................................... 34

B. Summary of Decisions Made in This Case Study ........................................................ 35

APPENDIX 2: EXAMPLE FROM GENE THERAPY .......................................................... 36

APPENDIX 3: REFERENCES .................................................................................................. 42

APPENDIX 4: GLOSSARY ...................................................................................................... 44

TABLE OF FIGURES

Figure 1: Attributes of an Estimand Placed in Context .................................................................. 4

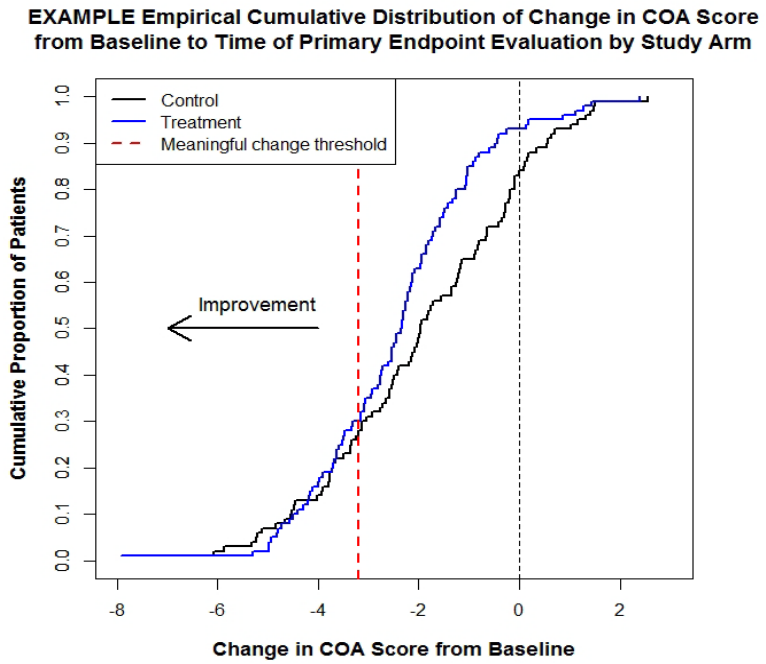

Figure 2: Example of Empirical Cumulative Distribution Function Curves of Change in COA

Score from Baseline to Primary Time Point by Change in PGIS Score ....................................... 21

Figure 3: Example of Density Function Curves of Change in COA Score from Baseline to

Primary Time Point by Change in PGIS Score ............................................................................. 21

Figure 4: An eCDF Curve by Treatment Arm Showing Consistent Separation Between Two

Treatment Arms ............................................................................................................................ 23

Figure 5: An eCDF Curve Where Treatment Effect Is Not in Range Considered Clinically

Meaningful by Patients ................................................................................................................. 24

Figure 6: MLMT Scores in Phase 3 Trial ..................................................................................... 40

TABLE OF TABLES

Table 1: Considerations of Defining a COA Target Study Population
Table 2: Considerations When Defining a COA-Based Endpoint
Table 3: Considerations When Addressing Intercurrent Events
Table 4: Considerations When Defining a COA Population-Level Summary
Table 5: Summary of Estimand Decisions Made
Table 6: MLMT Illuminance Level, Score Code, and Real-World Examples

I. INTRODUCTION

A. Guidance Series

The Food and Drug Administration (FDA) recognizes the need to obtain meaningful patient experience data[1] to understand patients’ experience with their disease and its treatment. This can help inform development of endpoint measures to assess clinical outcomes of importance to patients and caregivers in medical product development. To ensure a patient-focused approach to medical product[2] development and regulation, FDA is developing guidance on methods to identify what matters most to patients for measurement in clinical trials; specifically, how to design and implement studies to capture the patient’s voice in a robust manner. FDA created this Discussion Document to facilitate discussions at the December 6, 2019, public meeting that will inform FDA’s development of a patient-focused drug development (PFDD)[3] guidance on incorporating clinical outcome assessments (COAs) into endpoints for regulatory decision-making.

This public workshop will inform the development of the fourth in a series of four methodological PFDD guidance documents[4] that FDA is developing to describe in a stepwise manner how stakeholders (patients, researchers, medical product developers, and others) can collect and submit patient experience data and other relevant information from patients and caregivers for medical product development and regulatory decision-making. The topics that each guidance document will address are:

• Methods to collect patient experience data that are accurate and representative of the intended patient population (Guidance 1)[5]
• Approaches to identify what is most important to patients with respect to their experience as it relates to disease burden and treatment burden (Guidance 2)[6]
• Approaches to identify and develop methods to measure impacts in clinical trials (Guidance 3)
• Methods, standards, and technologies to collect and analyze COA data for regulatory decision-making (Guidance 4)

[1] The Glossary defines many of the terms used in this Discussion Document. Words or phrases found in the Glossary appear in bold italics at first mention.

[2] A drug, biological product, or medical device.

[3] See https://www.fda.gov/Drugs/NewsEvents/ucm607276.htm.

[4] The four guidance documents that will be developed correspond to commitments under section I.J.1 associated with the sixth authorization of the Prescription Drug User Fee Amendments (PDUFA VI) under Title I of FDA Reauthorization Act of 2017. The projected time frames for public workshops and guidance publication reflect FDA’s published plan aligning the PDUFA VI commitments with some of the guidance requirements under section 3002 of the 21st Century Cures Act (available at https://www.fda.gov/downloads/forindustry/userfees/prescriptiondruguserfee/ucm563618.pdf).

[5] See draft guidance for industry, FDA staff, and other stakeholders Patient-Focused Drug Development: Collecting Comprehensive and Representative Input (June 2018). When final, this guidance will represent FDA’s current thinking on this topic. For the most recent version of a guidance, check the FDA guidance web page at https://www.fda.gov/RegulatoryInformation/Guidances/default.htm.

All documents in the series encourage stakeholders to obtain feedback from FDA during the study and trial development period when considering collection of patient experience data. FDA encourages engagement of broader disciplines during clinical development (e.g., qualitative researchers, survey methodologists, statisticians, psychometricians, patient preference researchers, data managers) when designing and implementing studies, because the logistics of collecting even a seemingly simple piece of patient data to address a simple research objective can be daunting.

B. Document Summary

The purpose of this Discussion Document is to help stakeholders understand what FDA considers when a COA in a clinical study will be used to eventually support medical product regulatory decision-making.

The document first lays out a framework that aims to align the clinical study objective with the study design, endpoint, and analysis to improve study planning and the interpretation of analyses. Several examples are provided to help illustrate the framework.

The document then describes methods to aid in the interpretation of study results to evaluate what constitutes a meaningful within-patient change (i.e., improvement and deterioration from the patients’ perspective) in the concepts assessed by COAs. This information is important because statistical significance can be achieved for small differences between comparator groups, but this finding does not indicate whether individual patients have experienced meaningful clinical benefit.

A list of considerations when developing an endpoint from a COA is included in Section IV of this Discussion Document.

[6] See draft guidance for industry, FDA staff, and other stakeholders Patient-Focused Drug Development: Methods to Identify What Is Important to Patients, https://www.fda.gov/media/131230/download. When final, this guidance will represent FDA’s current thinking on this topic.

II. ESTIMAND FRAMEWORK OVERVIEW

Section Summary

An estimand is a quantity used to define a treatment effect in a clinical study. The estimand framework aims to align the clinical study objective with the study design, endpoint, and analysis to improve study planning and the interpretation of analyses. The attributes of an estimand include specifically defining:

• Who is the target population for the study?
• What is the endpoint (e.g., what variables will be used, including which time points)?
• How will events precluding observation or affecting interpretation be accounted for in the analyses (e.g., dropouts, use of rescue medication, not following the prescribed regimen)?
• What is the population-level summary (e.g., comparing means, hazard ratios)?

Decisions for all the attributes, implicitly or explicitly, are currently present in every data analysis that is performed. The choices made strongly impact interpretation of the analysis, power, and data collected.

Technical Summary: Key Messages in This Section

To develop endpoints from COAs, a fundamental issue must first be addressed: What is the clinical question or research objective that the clinical study should be designed to answer? The estimand framework based on International Council for Harmonisation (ICH) E9(R1) aims to improve clinical studies by putting the focus on a set of attributes to ensure they align with the study research objectives. This section discusses four attributes:

Target Study Population
• Patients who are targeted by the scientific question; who will be included in the analysis
• A different population may be appropriate for each scientific question

Endpoint of Interest
• Outcome obtained for each patient that will be statistically analyzed to address the scientific question; this may include data from multiple variables
• Research protocols should define the concept, COA instrument, score/summary score, type of endpoint, and thresholds/estimates for clinical interpretation

Intercurrent Events
• Events that occur after randomization, treatment initiation, or study start that could preclude observation of the variable or affect its interpretation
    • An example of an intercurrent event: taking subsequent therapy beyond treatment discontinuation with an endpoint of physical function measured at a time point several weeks after treatment discontinuation
    • For nonrandomized trials or trials borrowing data, intercurrent events could occur at any time a subject is considered ‘on study’
• Protocols should specify intercurrent events and how they will be accounted for in analyses to address the scientific question of interest

Population-Level Summary
• Basis for comparison between treatment arms, treatment conditions, or other groups, or a summary of information
    • Examples include a) mean physical function score at baseline for everyone in an observational research study and b) difference compared to control of a new medical product’s median time to pain resolution
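As an illustration only, the four attributes can be written down as one structured record so that the protocol and SAP draw on the same definitions. The following is a minimal Python sketch, not an FDA-specified format: the class name `EstimandSpec`, its field names, and the example values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EstimandSpec:
    """Hypothetical container for the estimand attributes (illustrative only)."""
    research_objective: str
    target_population: str
    endpoint: str
    # Maps each anticipated intercurrent event to its planned handling.
    intercurrent_events: dict = field(default_factory=dict)
    population_summary: str = ""

    def missing_attributes(self):
        """Return the names of attributes that have not been specified yet."""
        missing = []
        for name in ("research_objective", "target_population",
                     "endpoint", "population_summary"):
            if not getattr(self, name).strip():
                missing.append(name)
        if not self.intercurrent_events:
            missing.append("intercurrent_events")
        return missing


# Example values are invented for illustration.
spec = EstimandSpec(
    research_objective="Does the product improve physical function at week 12?",
    target_population="All randomized patients (ITT)",
    endpoint="Change from baseline in physical function score at week 12",
    intercurrent_events={"rescue medication": "handling to be justified in the SAP"},
    population_summary="Difference in means between arms",
)
print(spec.missing_attributes())
```

A check such as `missing_attributes()` is one possible way to flag an attribute that has been left implicit before the protocol is finalized.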

The attributes listed above should be clearly defined prior to developing a protocol and included in both the protocol and Statistical Analysis Plan (SAP). They will determine the data collected, procedures, and other sections of the protocol beyond statistical methods. The attributes also drive the SAP and communication of trial results, as highlighted in Figure 1.

Figure 1: Attributes of an Estimand Placed in Context

[Figure 1 diagram text: Research Objective; Estimand (Target Study Population, Endpoint of Interest, Intercurrent Events, Population-Level Summary); Statistical Analysis Plan; Communication of Results]

A. COA Research Objective: Foundation for Your Work

The essence of clinical research is to ask important questions and answer them with appropriate studies (ICH E8(R1)). The research objective should be clearly and explicitly stated. To develop the objective, both the natural history of the disease and the treatment goal for the intended product must be considered. For example, the choice of an endpoint will likely be very different between a product intended to treat an acute disease, where the symptoms of many patients will likely resolve within several weeks, versus a product intended to be used for patients living with a chronic disease. Even for a chronic disease, endpoint selection could vary depending on whether the disease is degenerative/progressive, relapsing and remitting, episodic, or relatively stable. Heterogeneity of symptoms or functional status of patients with the disease is also a crucial issue. As an example, relating to the intended goal of the treatment, a product intended to cure a disease is likely to have a different research objective from a product designed to decrease the symptom severity of a chronic disease.

B. Target Study Population: In Whom Are You Going to Do the Research and Which Subject Records Are in the Analysis?

The target study population used to address a COA research objective (the COA ‘analysis population’) may vary based on the COA-derived endpoint and scientific research question. There may be multiple COA analysis populations in a single trial. The COA analysis population(s) should be defined a priori in the protocol and SAP, with clear justification made for each COA analysis population. The choice of COA analysis population will affect the COA-related estimand and interpretation of patient experience.

Table 1 presents COA target study population examples. There may be other target study populations of interest depending on your research objective.

Table 1: Considerations of Defining a COA Target Study Population

Target Study Population (Examples)
• ITT: All patients randomized according to assigned treatment arm, regardless of adherence
• Safety: All patients who received at least one dose of product, regardless of randomization
• Analysis populations are often defined based on their availability of COA data:
    • All patients who are eligible for the COA
    • Completed the COA at baseline
    • Completed baseline and at least one postbaseline assessment
    • COA data are available at any trial timepoint

Abbreviations: ITT = intent-to-treat; COA = clinical outcome assessment

For sponsors considering an effectiveness claim from a COA-derived endpoint in a randomized trial, the intent-to-treat (ITT) population generally should be used to preserve the benefits of randomization. Justification should be provided if treatment comparisons are made using a COA analysis population different from the ITT population. Any justification should incorporate discussion of trial blinding[7] procedures and their potential impact on data interpretation. If the COA objective is safety or tolerability, including patients who received at least one dose of the investigational product, regardless of randomization, may be more appropriate. Consider how interpretation of the COA-derived endpoint changes if not all patients in a trial are eligible for the COA. For example, generalizability of the results may be narrowed if some patients do not have access to a COA because it is unavailable in a language in which they are fluent. Additionally, depending on the trial protocol, eligibility to complete a COA may change over time.
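To make the distinction between the example populations in Table 1 concrete, the sketch below derives three of them from the same set of patient records. It is a simplified illustration under stated assumptions: the `PatientRecord` class, its field names, and the sample records are hypothetical, and a real analysis would also track assigned treatment arm.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    """Hypothetical per-patient record; field names are illustrative."""
    patient_id: str
    randomized: bool
    doses_received: int
    has_baseline_coa: bool
    n_postbaseline_coa: int


def itt(records):
    """All randomized patients, regardless of adherence."""
    return [r.patient_id for r in records if r.randomized]


def safety(records):
    """All patients who received at least one dose, regardless of randomization."""
    return [r.patient_id for r in records if r.doses_received >= 1]


def coa_evaluable(records):
    """Patients with a baseline and at least one postbaseline COA assessment."""
    return [r.patient_id for r in records
            if r.has_baseline_coa and r.n_postbaseline_coa >= 1]


records = [
    PatientRecord("P1", True, 3, True, 2),
    PatientRecord("P2", True, 0, True, 0),    # randomized, never dosed
    PatientRecord("P3", False, 1, False, 1),  # dosed without randomization
    PatientRecord("P4", True, 2, False, 1),   # no baseline COA
]

print(itt(records))
print(safety(records))
print(coa_evaluable(records))
```

In this invented data set the three populations contain different patients, which is why the choice of COA analysis population needs to be prespecified and justified.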

Every effort should be made to have high completion rates throughout the study. This is especially important at baseline; otherwise, all postbaseline assessments will be difficult to put into context without a reference value. Because there is the potential for patients to have missing assessments, sponsors should clearly specify in the SAP how missing observations will be dealt with for clear interpretation. Removing subjects without a baseline measurement is common, but depending on the research question, it may not be the best option.

[7] Blinding is sometimes referred to as “masking”; for purposes of this document, we will use blinding.

C. Endpoint of Interest: What Are You Testing or Measuring in the Target Study Population?

1. Endpoint Definition(s)

An endpoint is a precisely defined variable intended to reflect an outcome of interest that is statistically analyzed to address a specific research question. An endpoint definition typically specifies the type of assessments made, the timing of those assessments, the tools used, and possibly other details, as applicable, such as how multiple assessments within an individual are to be combined. Measurement properties remain crucial in the context of developing useful endpoints, as endpoints (as well as COAs) should be understood as imperfectly measuring concepts. Hence, assessing an endpoint’s reliability, content validity, construct validity, and ability to detect change is important (refer to FDA PFDD G3 Public Workshop Discussion Document for details). Within the protocol, the specific COA concept(s) should be assessed by fit-for-purpose COA(s) and should be incorporated into a corresponding clinical trial objective or hypothesis and reflected in the endpoint definition and positioning in the testing hierarchy.

A multidomain COA may successfully support claims based on one or a subset of the domains measured if an analysis plan prespecifies (1) the domains that will be targeted for supporting endpoints and (2) the method of analysis that will adjust for the multiplicity of tests for the specific claim. The use of domain subsets to support clinical trial endpoints assumes the COA was adequately developed and validated to measure the subset of domains independently from the other domains. A complex, multidomain claim cannot be substantiated by instruments that do not adequately measure the individual components of the domain.
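The text above does not name a particular multiplicity method. Purely as an illustration of what adjusting for several prespecified domain tests can look like, the sketch below applies Holm's step-down procedure, one widely known approach; the domain names and p-values are invented, and the appropriate method for a given trial should be prespecified and discussed with FDA.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm step-down procedure.

    p_values: dict mapping a prespecified domain name to its p-value.
    Returns a dict mapping each domain to True (null rejected) or False.
    """
    ordered = sorted(p_values.items(), key=lambda kv: kv[1])
    m = len(ordered)
    decisions = {}
    still_rejecting = True
    for i, (name, p) in enumerate(ordered):
        # Compare the (i+1)-th smallest p-value with alpha / (m - i);
        # once one comparison fails, no later hypothesis is rejected.
        if still_rejecting and p <= alpha / (m - i):
            decisions[name] = True
        else:
            still_rejecting = False
            decisions[name] = False
    return decisions


# Invented p-values for three prespecified domains.
domain_p = {"pain": 0.010, "fatigue": 0.030, "physical_function": 0.040}
print(holm_reject(domain_p))
```

With these invented values only the smallest p-value clears its adjusted threshold, even though all three are below 0.05 when taken individually.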

2. Pooling Different Tools and/or Different Concepts to Construct the Endpoint

Correlation of Subcomponents and Effect on Power and Type 1 Error

Since most diseases have more than one relevant clinical outcome, trials can be designed to examine the effect of a medical product on more than one endpoint (i.e., multiple endpoints). For example, a COA with multiple domains may be used in a clinical trial to assess the clinical outcomes most relevant and meaningful to patients (i.e., each domain corresponds to one clinical outcome). In such a case, a multiple endpoint approach would be of clinical interest, specifically a multicomponent endpoint approach (refer to FDA draft guidance Multiple Endpoints in Clinical Trials (January 2017)).

Other analytical methods, such as global tests, could potentially be used to pool scores from different tools of a similar type, e.g., patient-reported outcomes (PROs). The use of these methods should be discussed with the FDA.

Some researchers have considered combining different scores from different measurement tools that evaluate different parts of the latent construct to create a new endpoint using item response theory and other methods to take into account the different potential dimensions of the COAs. FDA is open to discussion of well-defined and reliable endpoints.

For a COA composed of multiple domains, with each domain measured using either an ordinal or a continuous response scale, a within-patient combination (e.g., sum or average) of all the individual domain (i.e., component) scores to calculate a single overall rating creates a type of multicomponent endpoint. Other types of multicomponent endpoints may include a dichotomous (event) endpoint corresponding to an individual patient achieving prespecified criteria on each individual component. Careful consideration should be given to the choice of individual components, to whether all components have reasonably similar clinical importance, and, if the algorithm combining the scores uses differential weighting, to how those weights are determined. Since multicomponent endpoints are constructed as a single endpoint, no multiplicity adjustment is necessary to control Type I error. In addition, multicomponent endpoints may provide gains in efficiency if the components are not highly correlated. Regardless of how a multicomponent endpoint is constructed, the choice of the endpoint needs to be clinically relevant and interpretable.
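The within-patient combination described above can be sketched as a weighted average of domain scores. This is a minimal illustration, not a recommended scoring algorithm: the function name `composite_score`, the domain names, the scores, and the weights are all hypothetical, and any real weighting scheme would need clinical justification.

```python
def composite_score(domain_scores, weights=None):
    """Within-patient weighted average of domain scores.

    With weights=None every domain counts equally, which presumes the
    domains are of reasonably similar clinical importance.
    """
    if weights is None:
        weights = {domain: 1.0 for domain in domain_scores}
    if set(weights) != set(domain_scores):
        raise ValueError("weights must cover exactly the scored domains")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    weighted_sum = sum(domain_scores[d] * weights[d] for d in domain_scores)
    return weighted_sum / total_weight


# Invented scores for one patient on three domains.
patient = {"pain": 4.0, "fatigue": 6.0, "sleep": 2.0}

equal = composite_score(patient)
weighted = composite_score(patient, {"pain": 1.0, "fatigue": 2.0, "sleep": 1.0})
print(equal, weighted)
```

The same patient receives a different overall rating under the two weighting schemes, which is why the source of the weights matters for interpretation.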

Multidomain Responder Index

For some rare diseases with heterogeneous patient populations and variable disease manifestations, it may be challenging to assess a single concept of interest across all patients. Stakeholders occasionally propose combining multiple measurement concepts (e.g., a variety of individual COA-based endpoints) into a single dichotomous (event) endpoint. While FDA regulations allow for flexibility and judgement in considering multicomponent endpoints, the selection of these endpoints faces challenges similar to those described for responder analyses (refer to Section II.E.4). In addition, the choice of the individual components relies on the requirement that all components are of reasonably similar clinical importance (Multiple Endpoints in Clinical Trials (January 2017)). An example dichotomous (event) endpoint is the multidomain responder index (MDRI) approach, which thus far has not been demonstrated as a viable approach based on evidence submitted to FDA.

In general, the MDRI approach combines multiple individual domains or endpoints with a prespecified responder threshold for each endpoint. Various methods have been proposed to construct an MDRI endpoint. For example, each domain score is presented as +1 for improvement, 0 for no change, and -1 for decline, and an overall MDRI response for a patient is defined based on the individual scores (e.g., if any domain shows improvement). It is important to note that successful creation of an MDRI requires clearly defined and clinically relevant endpoints with appropriate responder thresholds (i.e., what constitutes a clinically meaningful within-patient change score) established a priori for those endpoints. In practice, these responder thresholds are often hard to establish, especially for rare diseases with small patient populations and limited natural history data. Additionally, defining an endpoint score as +1, 0, or -1 relies on the assumption that the degree of improvement and deterioration in a concept of interest is symmetric, which often is not a valid assumption. Another important consideration for the MDRI approach is the amount of missing data for each domain, component, or individual endpoint of the MDRI. Large amounts of missing data will impede the interpretation of the endpoint results.
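The +1/0/-1 coding and the "any domain shows improvement" rule mentioned above can be sketched as follows. This is a hypothetical illustration of one proposed construction, not an endorsed method: the function names, domains, thresholds, and change scores are invented, higher scores are assumed to be better, and a single threshold is applied in both directions, which is exactly the symmetry assumption the text cautions about.

```python
def domain_code(change, threshold):
    """Code a change score as +1 (improved), -1 (declined), or 0 (no change).

    Returns None when the change score is missing, so that missing data
    stay visible instead of being silently coded as "no change".
    """
    if change is None:
        return None
    if change >= threshold:
        return 1
    if change <= -threshold:
        return -1
    return 0


def mdri_responder(changes, thresholds):
    """Example rule: a patient is a responder if any domain is coded +1."""
    codes = {d: domain_code(changes.get(d), thresholds[d]) for d in thresholds}
    n_missing = sum(code is None for code in codes.values())
    responder = any(code == 1 for code in codes.values())
    return responder, codes, n_missing


# Invented prespecified thresholds and one patient's change scores.
thresholds = {"mobility": 2.0, "pain": 2.0, "vision": 1.0}
changes = {"mobility": 3.0, "pain": -1.0, "vision": None}

responder, codes, n_missing = mdri_responder(changes, thresholds)
print(responder, codes, n_missing)
```

Under this particular rule, a patient who improves in one domain and declines in another is still counted as a responder, which illustrates why the rule, the thresholds, and the handling of missing domains all need to be justified a priori.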

Personalized Endpoints

Similar concerns exist with personalized or individualized endpoints, which often are analyzed descriptively as exploratory endpoints. The process to construct a personalized endpoint should be standardized, and the criteria for selecting the outcome assessments should be consistent across sites and patients. The same set of outcome assessments should be assessed for all patients, regardless of their own personalized endpoint, to allow for an assessment of any new or worsening symptoms and/or functional limitation(s) during the trial duration. Certain outcome assessments may not be applicable to all trial patients. However, if an outcome is not assessed in a patient at a given time point, the reason for the assessment not being performed should be noted, included in the analysis data set, and used as part of the analysis.

Pooling Scores Across Reporters

To evaluate the treatment benefit of a medical product, sometimes it may be necessary to use different types of COAs to assess the same construct(s) in the same clinical trial (e.g., in a pediatric trial in which a PRO measure is used for older children who can reliably self-report and an observer-reported outcome (ObsRO) measure is used by caregivers to report signs and behaviors of younger children who are unable to reliably self-report). In general, scores generated by different types of COAs (i.e., PROs, ObsROs, clinician-reported outcomes (ClinROs), and performance outcomes (PerfOs)) cannot be pooled to form a single clinical trial endpoint, even if they are developed to assess the same construct(s), in analyses submitted to FDA to support a medical product application. Because these different types of COAs are developed for different contexts of use (e.g., PRO measures to report direct experiences of symptoms by the patients themselves and ObsRO measures to report observable signs and behaviors of the patients by their caregivers), they are distinct outcome assessments, and it is therefore inappropriate to pool the resulting sets of scores.

Scores generated by different types of COAs should be analyzed separately, with enough reporters included in each group, where feasible, to support any subsequent inferences to the target population. The fact that each tool has the same score range (e.g., 0 to 10) does not mean the data can be pooled.
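A minimal sketch of keeping the scores apart is shown below: observations are summarized within each COA type and treatment arm, and no statistic is ever computed across COA types. The function name, the tuple layout, and the sample scores are hypothetical and serve only to illustrate the separation.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_coa_type(observations):
    """Return {(coa_type, arm): (n, mean score)} without pooling COA types.

    observations: iterable of (coa_type, arm, score) tuples.
    """
    groups = defaultdict(list)
    for coa_type, arm, score in observations:
        groups[(coa_type, arm)].append(score)
    return {key: (len(values), mean(values)) for key, values in groups.items()}


# Invented scores on a shared 0 to 10 range; the shared range does not
# make PRO and ObsRO scores interchangeable.
observations = [
    ("PRO", "treatment", 3), ("PRO", "control", 5), ("PRO", "treatment", 4),
    ("ObsRO", "treatment", 6), ("ObsRO", "control", 7),
]

summary = summarize_by_coa_type(observations)
print(summary)
```

Reporting the group sizes alongside each mean makes it easier to judge whether each reporter group is large enough to support inference.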

Pooling Across Delivery Modes (Same Tool, Same Reporter) 233

Scores generated by the same tool administered (“delivered”) via different modes (e.g., 234

interactive voice response; interview; paper-based; electronic device) may be pooled under very 235

specific and limited conditions. Although scores yielded by different modes are generally 236

considered to be comparable when there is no difference between modes in terms of the wording 237

of item stems and response options, item formats, the appearance and usage of graphics or other 238

visuals, or order of the items (see FDA PFDD G3 Public Workshop Discussion Document for 239

further discussion), administering a tool using more than one mode or method per study can 240

introduce noise (i.e., construct-irrelevant variance in COA score) that may not be completely 241

random and may make it more difficult to discern treatment effects. 242

243

3. Timing of Assessments 244

Clinical trials using COAs should include a schedule of COA administration as part of the 245

overall study assessment schedule in the protocol. The timing of assessments plays a vital role in 246

gaining reliable and meaningful information on the concept(s) of interest and should be selected 247

carefully and supported by adequate rationale for the choice of assessment time points. The COA 248

schedule should correspond directly with the natural course of the disease or condition (i.e., 249

acute, chronic, or episodic), research questions to be addressed, trial duration, disease stage of 250

the target patient population, and current treatment of patients, and be administered within the 251

expected time frame for observing changes in the concept(s) of interest. Other important 252

considerations for determining the most appropriate timing of assessments for COA-based 253

endpoints include, but are not limited to, the following: 254

255

• Recall period: A COA should not be administered more frequently than the recall period 256

allows (refer to FDA PFDD G3 Public Workshop Discussion Document Section VI.B.7 257

for in-depth discussion of considerations regarding an instrument’s recall period). For 258

example, an instrument with a 1-week recall period should be administered no more 259

frequently than once per week (i.e., at least 7 days after the previous administration). If the recall period 260

implies assessment at a specific time of day (e.g., in the morning, at night) or at a specific 261

time relative to treatment (e.g., since last dose) or relative to some other event (e.g., since 262

waking up today, since going to bed last night, since last bowel movement), assessments 263

should be timed accordingly. This issue also arises in wording of COAs administered 264

using ecological momentary assessment. 265

• Anticipated rate of change in the underlying construct to be measured: The timing of 266

assessments should align with the anticipated rate of change in the underlying construct 267

to be measured (but, as mentioned above, should be no more frequent than what the 268

instrument’s recall period allows). For example, if the construct to be measured is 269

expected to change rapidly over the course of the study period, assessments should be 270

placed closer together. If the construct is expected to change slowly, one might place 271

assessments further apart. Note that rate and direction of change in the underlying 272

construct are linked to the rate and direction of change in the underlying disease/condition 273

to be treated (i.e., linked to the pace of improvement or deterioration/progression in the 274

underlying disease), but the two may not move together in lock-step (i.e., they probably 275

would move in the same direction but may move at different rates). 276

• COA administration burden: The length and frequency of COA administration should 277

take into consideration patient burden, which may result in patient fatigue, lead to an 278

increase in missing data, and reduce data quality. 279

• COA administration schedule: The schedule of COA administration should align with 280

the administration of other prespecified endpoints (i.e., primary and secondary) and 281

the proposed SAP. 282

• Collect COA data at baseline: The COAs should be administered at baseline. If the trial 283

includes a run-in period during which the effect on the COA might be expected to change 284

(e.g., medication washout, patient behavior modification), this should be taken into account 285

when determining the timing of assessments. Note that some diseases, conditions, or 286

clinical trial designs may necessitate more than one baseline assessment and several COA 287

administrations during treatment. 288

• Align anchor administration time: The timing of anchor scale administration should 289

align with both the recall period and the administration of the corresponding COA (e.g., 290

patient global impression of severity (PGIS) with PRO timing; clinician global 291

impression of severity with ClinRO timing). 292

• Use same COA administration order: The order of COA administration should be 293

standardized to help reduce measurement error. 294

• Timing of treatment administration: If treatment is administered repeatedly over the 295

clinical trial period and change in the target construct(s) is to be assessed repeatedly over 296

the trial period, it may be sensible to measure the construct at the same time relative to 297

treatment administration throughout the trial—unless treatment considerations dictate 298

otherwise. 299
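
For illustration only, the recall-period consideration above can be checked against a planned assessment schedule. The sketch below is not part of any FDA tool; the function name, the dates, and the 7-day recall period are hypothetical values chosen for the example.

```python
from datetime import date


def schedule_violations(admin_dates, recall_days):
    """Return pairs of consecutive administrations spaced closer than the recall period."""
    ordered = sorted(admin_dates)
    return [
        (prev, curr)
        for prev, curr in zip(ordered, ordered[1:])
        if (curr - prev).days < recall_days
    ]


# Hypothetical schedule for an instrument with a 1-week (7-day) recall period.
planned = [date(2020, 1, 6), date(2020, 1, 13), date(2020, 1, 17), date(2020, 1, 27)]
violations = schedule_violations(planned, recall_days=7)
# Only the administrations on January 13 and January 17 are fewer than 7 days apart.
```

A check of this kind addresses only the recall period; the other considerations listed above (e.g., anticipated rate of change, patient burden) still require clinical judgment.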

300

4. Defining Improvement and Worsening 301

Clinically relevant within-patient thresholds for improvement and worsening should be 302

predefined and justified. A few suitable supplementary analyses may be conducted to evaluate a 303

range of thresholds when appropriate. See Section III of this Discussion Document for additional 304

information. 305

306

Superiority versus noninferiority or equivalence testing of a COA-based endpoint must be 307

predefined in the SAP. It is inappropriate to conclude “no worsening” when there is a 308

nonsignificant test of superiority (e.g., p > 0.05). Trials with small sample sizes yield wide 309

confidence intervals for the treatment effect on the COA-based endpoint and are therefore unlikely to 310

demonstrate superiority. 311
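
The distinction between a nonsignificant superiority test and evidence of "no worsening" can be sketched numerically. The example below assumes a normal-approximation (Wald) confidence interval, a symptom score on which higher values are worse, and a hypothetical noninferiority margin of 2.0 points; the estimate and standard error are invented for illustration.

```python
from statistics import NormalDist


def wald_ci(estimate, std_error, level=0.95):
    """Two-sided normal-approximation confidence interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * std_error, estimate + z * std_error


# Hypothetical small trial: treatment minus control difference in symptom score.
diff, se = 0.5, 1.5
margin = 2.0  # hypothetical prespecified noninferiority margin

lower, upper = wald_ci(diff, se)
nonsignificant = lower < 0 < upper    # interval includes zero
worsening_ruled_out = upper < margin  # noninferiority criterion
```

Here the interval runs from roughly -2.44 to 3.44. The superiority test is nonsignificant, yet the upper bound exceeds the margin, so the data are also compatible with meaningful worsening.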

312

5. Clinical Trial Duration and COA-Based Endpoints 313

Generally, the duration a COA is collected should be the same duration as indicated for other 314

measures of effectiveness or safety in the clinical trial protocol. It is important to consider 315

whether the clinical trial’s duration is of adequate length to assess a durable COA-based endpoint 316

in the disease or condition being studied. Determination of the clinical trial duration should be 317

driven by the disease course as well as treatment and endpoint objectives outlined in the clinical 318

trial protocol. 319

320

D. Intercurrent Events: What Can Affect Your Measurement’s Interpretation? 321

Intercurrent events are events that occur after randomization, treatment initiation, or trial start that 322

either preclude observation of the variable (and potentially subsequently the endpoint) of interest 323

or affect its interpretation (e.g., taking rescue medication). Although events that lead to missing data fall within 324

this definition, they are not the only kind of intercurrent event. 325

326

1. Use of Assistive Devices, Concomitant Medications, and Other Therapies 327

It is important to consider what other activities may impact the COA score and endpoint value, 328

such as use of assistive devices (e.g., walkers), concomitant medications including rescue 329

therapies (e.g., bronchodilators or pain medication), and other therapies (e.g., physical therapy). 330

For example: 331

332

• Use of assistive devices may particularly impact PerfO assessment of mobility and can 333

impact other types of COAs 334

• If a specific published administrator’s manual is selected for a performance-based test, it 335

is important to conduct the test in accordance with the selected manual, including the use 336

of standardized assistive devices, if allowed 337

• If study procedures are not aligned with the instrument’s user manual, changes should be 338

detailed in the study documents and training should occur specific to the changes 339

• Case report forms (CRFs) for data collection should include information on whether an 340

assistive device (and what type) was used during the test 341

342

For diseases where patients’ underlying disease status is expected to change during the trial, with 343

corresponding changes in the use and the type of assistive device, it would be informative to 344

incorporate the information on the assistive device into the COA-based endpoint construction, as 345

the change in assistive device may reflect either an improvement or a deterioration in the 346

patient’s disease status. 347

348

Two other examples of intercurrent events: 349

350

• If an item assesses difficulty buying groceries and wording does not account for use of a 351

food delivery service, an intercurrent event could occur. 352

• If a patient in a trial breaks their leg in a car accident, that likely impacts the physical 353

function PRO instrument’s score. 354

355

Use of other supportive therapies that may impact the interpretation of the endpoint should be 356

assessed consistently. Data should be collected and recorded in a standardized manner, and 357

incorporated into the endpoint model and supplementary analyses. A discussion with study 358

coordinators, statisticians, clinicians, and patients will result in a list of likely intercurrent events 359

to include in study planning. 360

361

2. Impact of Disease/Condition Progression, Treatment, and Potential Intercurrent Events 362

In the planning stages of a clinical study, it is important to consider how both the 363

disease/condition and treatment may impact a patient’s ability to function cognitively and 364

physically over the course of the study as the disease/condition progresses or as treatment side 365

effects manifest, including ability to communicate, follow instructions (verbal and written), 366

receive and understand information, and complete the assessment.⁸ Missed or incomplete 367

assessments due to disease progression or treatment side effects “may provide meaningful 368

information on the effect of a treatment and hence may be incorporated into a variable [(or 369

endpoint)], with appropriate summary measure, that describes a meaningful treatment effect” 370

(ICH E9(R1)). 371

372

Since model-based estimates generally tend to be “very sensitive” to model misspecification, it is 373

recommended that supplementary and sensitivity analyses be conducted to examine how much 374

the results/findings change under various assumptions about the missing data mechanism 375

(National Research Council, 2010). Principles and methods for sensitivity analyses are discussed 376

further in ICH E9(R1) and Chapter 5 of the National Research Council’s 2010 report on The 377

Prevention and Treatment of Missing Data in Clinical Trials (National Research Council, 2010). 378

379

Changes in physical or cognitive function due to disease/condition progression and/or treatment 380

effects are important outcomes to be measured and either incorporated into the study endpoint 381

structure or reported as safety findings. 382

383

For some risk factors of cognitive or physical change unrelated to disease or treatment (such as 384

advancing age), the chances of a patient’s cognitive or physical function changing over the 385

course of the study may increase with study duration. Use of appropriate inclusion and exclusion 386

criteria may help mitigate some potential causes of cognitive and physical change. However, 387

restrictive criteria can impact the ability to recruit and the generalizability of study results. 388

Because changes in cognitive and physical function may still occur during the study, it is 389

important to note sources of competing risks and other intercurrent events in the SAP and Study 390

Report. 391

392

3. Practice Effects 393

A practice effect⁹ (sometimes also called a learning effect) is any change that results from 394

practice or repetition of completing particular tasks or activities including repeated exposure to 395

an instrument. A simple example is taking a math test. After completing the same test three times 396

your speed (and maybe accuracy in answering) likely will improve because you recognize the 397

questions and have ‘learned’ the test. While potentially an issue for any COA, practice effects 398

may be of particular concern in studies utilizing PerfOs with within-subject designs in which 399

repeated measurements are taken over time, i.e., over the course of the study period (American 400

Psychological Association, 2018; Shadish, Cook, & Campbell, 2002). 401

⁸ When disease progression or treatment side effects result in missed or incomplete assessments, those missing COA

data are considered to be informatively missing or missing not at random (MNAR). Missing observations (e.g.,

missing COA data) are considered to be informatively missing or MNAR “when there is some association between

whether or not an observation is missing (or observed) and the status of the patient’s underlying disease” (Lachin,

1999). Failing to incorporate both observed and unobserved (i.e., missing but potentially observable) COA data from

the entire ITT population in analyses involving the COA-based endpoint will likely yield biased (erroneous;

misleading) results.

⁹ Note that practice effects may be referred to using different terminology in different disciplines.

402

Practice effects may be problematic for studies conducted to support a medical product 403

regulatory application. Practice effects, by definition, lead to improvement in the score of the 404

assessment. This score improvement confounds score changes attributable to the clinical trial 405

intervention. In randomized controlled trials, if practice effects are constant across trial arms, 406

they will not bias the difference of the outcomes between arms. However, if practice effects 407

interact with clinical trial intervention such that the magnitude and direction of practice effects 408

differ by trial arm, the treatment effects may be deflated or inflated (Song & Ward, 2015). 409

Deflation of treatment effects may result in delayed patient access to effective treatment options, 410

and inflation of treatment effects may expose patients to risk due to wasted time and resources 411

spent pursuing ineffective treatments. Whether the practice effects are constant or differ across 412

clinical trial arms is generally unknown. Therefore, the best strategy is to minimize the potential 413

for practice effects in clinical studies. 414
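
The arithmetic behind this argument can be sketched under a deliberately simple assumption: that the observed score change in each arm is the sum of the true treatment effect (treatment arm only) and a practice effect. The additive model and all numbers below are hypothetical and serve only to illustrate the reasoning.

```python
def observed_difference(true_effect, practice_treatment, practice_control):
    """Between-arm difference in score change under a simple additive model."""
    treatment_change = true_effect + practice_treatment
    control_change = practice_control
    return treatment_change - control_change


# Hypothetical true treatment effect of 4.0 points.
constant = observed_difference(4.0, practice_treatment=2.0, practice_control=2.0)
inflated = observed_difference(4.0, practice_treatment=3.0, practice_control=1.0)
deflated = observed_difference(4.0, practice_treatment=1.0, practice_control=3.0)
```

When the practice effect is the same in both arms it cancels and the difference equals the true effect (4.0). When it differs by arm, the observed difference is inflated (6.0) or deflated (2.0).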

415

Currently, approaches exist for attenuating, but not eliminating, practice effects (Jones, 2015). In 416

addition, no consensus on best practices for attenuating practice effects has yet been reached 417

(Jones, 2015). Some general strategies for mitigating practice effects are summarized below. 418

These strategies may be used in isolation but may be more effective when used in combination. 419

420

• Consider available evidence on practice effects when identifying an instrument: 421

Some instruments may be more robust to practice effects than others. When selecting an 422

instrument, one may wish to consider available evidence of the candidate instruments’ 423

robustness (or vulnerability) to practice effects. Such evidence may be obtained through, 424

for example, a thorough review of the literature. 425

• Increase length of time (spacing) between assessments: In general—and all else being 426

equal—the magnitude of practice effects is expected to decrease as time between 427

assessments increases (Shadish, Cook, & Campbell, 2002). Decisions regarding the 428

length of time (spacing) to place between assessments should take into consideration both 429

how rapidly (or slowly) change in the underlying construct is expected to occur and the 430

recall period utilized by the instrument. Refer to Section II.C.3 of this Discussion 431

Document for more detailed considerations regarding timing of assessments. 432

• Increase the length of the run-in period: In general, the magnitude of practice effects is 433

largest at the beginning of a study and gradually levels off or decreases as the number of 434

assessments increases. A long run-in period allows the large early practice effects to occur 435

during the first few assessments, until their magnitude no longer increases significantly, so that 436

the baseline and postbaseline scores are minimally affected by practice effects. 437

• Use alternate forms (sometimes also referred to as parallel forms or equivalent 438

forms): Alternate forms are different versions of an instrument “that are considered 439

interchangeable, in that they measure the same constructs in the same ways, are built to 440

the same content and statistical specifications, and are administered under the same 441

conditions using the same directions” (Test Design and Development, 2014). 442

Administering different forms composed of distinct sets of items may make practice 443

effects less likely to occur. 444

For the use of alternate forms to attenuate practice effects without introducing additional 445

bias: (1) alternate forms must be truly psychometrically equivalent;¹⁰ and (2) alternate 446

forms must be administered in a random order that differs by study arm (i.e., a 447

counterbalanced, randomized order) (Jones, 2015; Goldberg, Harvey, Wesnes, Snyder, & 448

Schneider, 2015). 449

450

4. Participant Burden 451

The possibility of participant burden compromising the validity of the endpoint should be 452

assessed. Burden may lead to missing data or inaccurate data (e.g., selecting the first response option for 453

every item). When an endpoint is derived from multiple administrations of a COA, attention 454

should be paid to whether study subject fatigue or patient burden might diminish the validity of 455

COA scale scores. This, in turn, could compromise the validity of the endpoint itself, leading to 456

biased estimates of treatment effects and inaccurate hypothesis tests. Study subject fatigue is less 457

likely to occur if an endpoint is based on a small number of widely spaced administrations, and 458

more likely to occur if an endpoint is based on a larger number of administrations over a limited 459

period of time. The effort required for the subject to complete the COA also influences the 460

probability that subject fatigue will compromise scale and endpoint validity. 461

462

For the sake of illustration, suppose subjects are expected to complete a 25-item PRO for seven 463

consecutive days, with the endpoint being the average of the seven daily scores. Some study 464

subjects may grow fatigued at needing to complete the PRO for seven consecutive days, and 465

such fatigue could manifest itself in a variety of ways: 466

467

• Subjects stop completing the PRO at some point after the initial administration and/or 468

choose not to respond to some items at a given administration. 469

• Subjects recall item responses they made the previous day and repeat prior item 470

responses rather than carefully considering how to respond to each item. 471

• Subjects tend to give the same rote response to each item rather than carefully 472

considering how to respond to each item. 473
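
The three fatigue patterns listed above can be screened for in item-level data. The sketch below is a hypothetical illustration: the function name and flag labels are assumptions, and a 5-item instrument is used in place of the 25-item PRO to keep the example short. Missing responses are coded as None.

```python
def flag_fatigue(daily_responses):
    """Flag possible fatigue patterns in a list of daily item-response lists."""
    flags = []
    previous = None
    for day, items in enumerate(daily_responses, start=1):
        answered = [r for r in items if r is not None]
        if len(answered) < len(items):
            flags.append((day, "missing items"))
        if len(answered) > 1 and len(set(answered)) == 1:
            flags.append((day, "same response to every item"))
        if previous is not None and None not in items and items == previous:
            flags.append((day, "identical to previous day"))
        previous = items
    return flags


# Hypothetical responses from one subject over four days.
days = [
    [1, 2, 0, 3, 1],
    [1, 2, 0, 3, 1],        # repeats the previous day's responses
    [2, 2, 2, 2, 2],        # same rote response to each item
    [2, None, 1, None, 0],  # skipped items
]
flags = flag_fatigue(days)
```

A flag is only a prompt for review. A subject with stable symptoms may legitimately give identical responses on consecutive days, so these patterns do not by themselves establish that a response is invalid.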

474

The first type of fatigue response will increase endpoint missingness. While this is not, strictly 475

speaking, an issue of reliability or validity, it clearly compromises the use of the endpoint for 476

assessing its construct. The second and third types of fatigue responses compromise the validity 477

of the endpoint, as the validity of at least some of the PRO administrations per fatigued subject 478

is compromised. 479

¹⁰ For two different instruments to be considered parallel, they must have matching content (i.e., each instrument

must measure the same symptom, function, or impact); estimated item parameters and corresponding standard errors

must not significantly differ; estimated score reliability and corresponding standard errors must not significantly

differ; and score means and standard deviations (surrogates for the distributions of the two sets of scores) in the

target population must not significantly differ (Test Design and Development, 2014).

480

5. Mode of Administration 481

Changes or disruptions to standardized instrument administration procedures should be 482

documented and may need to be included in the data analyses. Depending on the construct being 483

measured, the assessment environment should provide the reporter with reasonable comfort and 484

minimal distractions to avoid introducing construct-irrelevant variance into the resulting COA 485

scores (American Educational Research Association; American Psychological Association; 486

National Council on Measurement in Education, 2014). 487

488

COA data collection modes can include paper-based and/or electronic-based approaches. Types 489

of COA administration can include self-administration, interviewer-administration (e.g., face-to-face, 490

via telephone or electronic means), clinician-administration, and/or trained administrator-administration 491

(FDA PFDD G3 Discussion Document). To help ensure the instrument’s 492

established psychometric measurement properties hold in the study at hand, the COA must be 493

administered in accordance with the standardized administration procedures and scoring algorithm specified by 494

the instrument developer (such as in the instrument’s user manual or website). For modes of data 495

collection that do not include a date and time stamp (e.g., paper diaries), it is difficult to ensure 496

that patients enter data at the protocol-specified time. 497

498

6. Missing Data and Event-Driven COA Reporting 499

Programming errors can result in significant amounts of missing data, which impedes 500

interpretation of analysis results. For example, a COA may be designed to give patients the 501

option to report additional events and event-related symptoms not reported during the day; 502

however, a potential programming error could cause the additional questions to not be 503

administered at the end of the day. Large amounts of missing data would be generated, resulting 504

in underreporting of the event and the study endpoint itself being unreliable and uninterpretable. 505

506

7. Missing Scale-Level Data 507

Missing data should be distinguished from data that do not exist or data that are not considered 508

meaningful due to an intercurrent event. The protocol and the SAP should address plans for how 509

the statistical analyses will handle missing COA data when evaluating clinical benefit and when 510

considering patient success or patient response. 511

512

In cases where patient-level COA data are missing for the entire domain(s) or the entire 513

measurement(s), sponsors should clearly define missing data and propose statistical methods that 514

properly account for such data with respect to a particular estimand. Methods to handle the 515

missing data for a COA-based endpoint and any related supportive endpoints should be 516

addressed in the protocol and the SAP. In addition, the supplementary and sensitivity analyses of 517

the COA-based endpoints should be prospectively proposed in the protocol and the SAP. These 518

analyses investigate assumptions used in the statistical model for the main analytic approach, 519

with the objective of verifying that inferences based on an estimand are robust to limitations in 520

the data and deviations from the assumptions. 521

522

E. Population-Level Summary: What Is the Final Way All Data Are Summarized and 523

Analyzed? 524

The population-level summary serves as the basis for comparison between treatment arms, 525

treatment conditions, other groups, or otherwise summarizes information. Examples include a) 526

mean physical function score at baseline for everyone in an observational research study and b) 527

difference compared to control of a new medical product’s median time to pain resolution. 528

529

The statistical analysis considerations for COA-based endpoints are similar to the statistical 530

considerations for any other endpoint used in medical product development. This section briefly 531

discusses several considerations that commonly arise when analyzing COA-based endpoints. 532

533

1. Landmark Analysis 534

Sponsors should justify the use of a landmark analysis (an analysis at a fixed time point, 535

e.g., 12 weeks) and the time point at which it is to be performed. If a COA-based endpoint is collected repeatedly, 536

information may be lost in conducting a landmark analysis. However, even when conducting a 537

landmark analysis at a fixed time point, data from intermediate time points (i.e., measurements 538

taken prior to the fixed time point) can still be included in the model. Interpretation of an 539

analysis of overall COA score over time may be difficult in the presence of missing data. The 540

interpretation of potential analyses when COA data collection is truncated due to death or other 541

events should be carefully discussed within the research team. 542

543

2. Analyzing Ordinal Data 544

When an ordinal endpoint has a limited number (e.g., 3 to 7) of categories, you should describe, 545

analyze, and interpret the study result on this endpoint using methods appropriate for ordinal 546

variables, e.g., ordinal regression. For descriptive statistics, mean and standard deviation should 547

not be used on an ordinal endpoint. Percentiles and bar graphs can be informative. 548
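
As a minimal sketch of descriptive statistics suited to an ordinal endpoint, the example below reports category proportions and a median category instead of a mean and standard deviation. The category labels and responses are hypothetical, and the lower median is used so that the result is always an observed category.

```python
from collections import Counter


def ordinal_summary(responses, categories):
    """Return category proportions and the (lower) median category."""
    counts = Counter(responses)
    n = len(responses)
    proportions = {c: counts.get(c, 0) / n for c in categories}
    ordered = sorted(responses, key=categories.index)
    median_category = ordered[(n - 1) // 2]
    return proportions, median_category


# Hypothetical 4-category severity item, ordered from best to worst.
categories = ["none", "mild", "moderate", "severe"]
responses = ["mild", "none", "moderate", "mild", "severe", "mild", "moderate", "none"]
proportions, median_category = ordinal_summary(responses, categories)
```

The proportions (0.25, 0.375, 0.25, 0.125) are the values a bar graph of this endpoint would display, and the median category is "mild".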

549

3. Time-to-Event Analysis 550

Defining and identifying an event is an issue for time-to-event analysis of COA-based endpoints 551

and responder analyses of ordinal or continuous COA data. A clinically relevant threshold for 552

deterioration, maintenance, or improvement must be predefined and justified. Relevant 553

information on intercurrent events, censoring rules, and defining an event should be prespecified 554

in the protocol. It is important to explicitly state how to handle intercurrent events. For example, 555

estimates may differ if death is considered a deterioration event versus censored. Censoring rules 556

in the presence of missing COA data should be prespecified in the SAP. Furthermore, analyses to 557

evaluate assumptions of the primary time-to-event analysis should be performed under differing 558

censoring rules. 559
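
To illustrate why the handling of death must be stated explicitly, the sketch below computes a Kaplan-Meier estimate of remaining free of deterioration under two rules: death counted as a deterioration event, and death treated as censoring. The six patient records are hypothetical, and the small estimator is written out only for transparency; it is not a substitute for validated statistical software.

```python
def kaplan_meier(times, events):
    """Return [(time, estimated event-free probability)] at each event time."""
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        tied = [e for (tt, e) in data if tt == t]
        d = sum(tied)  # number of events at time t
        if d > 0:
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= len(tied)
        i += len(tied)
    return curve


def event_flags(outcomes, death_is_event):
    """Code each outcome as an event (True) or a censored observation (False)."""
    return [o == "deterioration" or (death_is_event and o == "death") for o in outcomes]


# Hypothetical data: weeks on study and reason follow-up ended.
weeks = [4, 6, 8, 10, 12, 12]
outcomes = ["deterioration", "death", "deterioration", "death", "completed", "completed"]

death_as_event = kaplan_meier(weeks, event_flags(outcomes, death_is_event=True))
death_censored = kaplan_meier(weeks, event_flags(outcomes, death_is_event=False))
```

With these data, the final estimate is about 0.33 when death is counted as an event and 0.625 when death is censored, so the two rules lead to materially different results from the same records.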

560

4. Responder Analyses and Percent Change From Baseline 561

As previously mentioned, COA data often are ordinal or continuous in nature. Sponsors should 562

consider analyzing COA-based endpoints as continuous or ordinal variables rather than as a 563

responder (i.e., dichotomized from either ordinal or continuous COA data) to avoid 564

misclassification errors and potential loss of statistical power. There tends to be more precision 565

in the evaluation of medical product effects on continuous variables (i.e., based on a comparison 566

of means), especially when sample size is of concern. Alternative approaches for analysis (e.g., 567

analyses based on ranks) should be included, if appropriate, in the SAP to account for occurrence 568

of extreme outliers. 569

570

If a responder endpoint is deemed appropriate for a trial and the endpoint is proposed based on 571

dichotomization from either ordinal or continuous data, it is prudent for the sponsor to prespecify 572

a single responder threshold and provide evidence to justify that the proposed responder 573

threshold constitutes a clinically meaningful within-patient change prior to the initiation of the 574

trial. Proposed responder threshold(s) should be discussed with FDA prior to the initiation of the 575

trial as it is crucial for sample size planning and to appropriately power the study. 576

577

In general, for COA-based endpoints FDA does not recommend a responder analysis endpoint or 578

a percent change from baseline endpoint unless the targeted response is complete resolution of 579

signs and symptoms. While percent change from baseline is popular in other contexts, the 580

statistical measurement properties are poor. Strange occurrences can arise. For example, in 581

randomized withdrawal studies, we have seen subjects who had to reach a percent change from 582

baseline threshold end up needing a significantly higher symptom burden to go back on 583

treatment than the symptom level needed to enter the trial based on inclusion criteria. 584

Extreme caution should be exercised, and all potential endpoint situations explored especially 585

near the floor and ceiling of the COA or COA-based endpoint’s values, before using percent 586

change from baseline as the population-level summary. 587
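
A short numerical sketch shows how percent change from baseline behaves near the floor of a scale. The scores below are hypothetical and assume a scale whose minimum is 0.

```python
def percent_change(baseline, follow_up):
    """Percent change from baseline; undefined (None) when baseline is 0."""
    if baseline == 0:
        return None
    return 100.0 * (follow_up - baseline) / baseline


near_floor = percent_change(1, 2)  # 1-point worsening from a low baseline
mid_scale = percent_change(8, 9)   # the same 1-point worsening from a higher baseline
at_floor = percent_change(0, 1)    # baseline at the floor of the scale
```

The same 1-point change is a 100% change near the floor but a 12.5% change mid-scale, and the measure cannot be computed at all when the baseline score is 0.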

588

The Appendix contains a case study that illustrates several of these concepts and guides us to 589

using the estimand framework to better develop the SAP and ultimately more transparently 590

communicate study results. 591

592

593

594

III. MEANINGFUL WITHIN-PATIENT CHANGE 595

Section Summary

To aid in the interpretation of study results, FDA is interested in what constitutes a meaningful

within-patient change (i.e., improvement and deterioration from the patients’ perspective) in the concepts

assessed by COAs. Statistical significance can be achieved for small differences between comparator

groups, but this finding does not indicate whether individual patients have experienced meaningful

clinical benefit.

596

Technical Summary: Key Messages in This Section

• What constitutes, from a patient perspective, a meaningful within-patient change in the concepts

evaluated by COAs.

• FDA recommends the use of anchor-based methods to establish meaningful within-patient changes,

although there are other methods that can be used.

• Anchors selected for the trial should be plainly understood in context, easier to interpret than the

clinical outcome itself, and sufficiently associated with the target COA and/or endpoint.

• Anchor-based methods should be supplemented by the use of empirical cumulative distribution

function (eCDF) curves and probability density function (PDF) curves.

597

Interpretation of Within-Patient Meaningful Change 598

599

To holistically determine what is a meaningful change, both benefit and risk, improvement and 600

deterioration, may need to be accounted for. This document is not directly addressing this 601

integration of benefit and risk, but the methods described can be used to help interpret benefit or 602

risk. As such, special consideration should be given by the sponsor to assess how meaningful the 603

observed differences are likely to be. To aid in the interpretation of the COA-based endpoint 604

results, sponsors should propose an appropriate threshold(s) (e.g., a range of score change) that 605

would constitute a clinically meaningful within-patient change in scores in the target patient 606

population for FDA review. 607

608

In addition, if the selected threshold(s) are based on transformed scores (e.g., linear 609

transformation of a 0-4 raw score scale to a 0-100 score scale), it is important to consider score 610

interpretability of the meaningful change threshold(s) for both transformed scores and raw 611

scores. Depending on the proposed score transformation, selected threshold(s) based on 612

transformed scores may reflect less than one category change on the raw score scale, which is 613

not useful for the evaluation and interpretation of clinically meaningful change. 614
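
The point about transformed scores can be checked by converting a proposed threshold back to raw score units. The sketch below assumes a single item scored 0-4 that is linearly transformed to a 0-100 scale; the function name and the 10-point threshold are hypothetical.

```python
def to_raw_units(threshold_transformed, raw_min, raw_max, new_min=0.0, new_max=100.0):
    """Convert a change threshold on the transformed scale to raw score units."""
    scale = (new_max - new_min) / (raw_max - raw_min)  # transformed points per raw point
    return threshold_transformed / scale


raw_equivalent = to_raw_units(10, raw_min=0, raw_max=4)
one_category = to_raw_units(25, raw_min=0, raw_max=4)
```

On this scale one raw category corresponds to 25 transformed points, so a 10-point threshold amounts to only 0.4 of a raw category, a change that no individual patient's response can actually show.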

615

Meaningful Within-Patient Change Versus Between-Group Difference 616

617

It is important to recognize that individual within-patient change is different from 618

between-group difference. From a regulatory standpoint, FDA is more interested in what constitutes a 619

meaningful within-patient change in scores from the patient perspective (i.e., individual 620

patient level). The between-group difference is the difference in the score endpoint between 621

two trial arms that is commonly used to evaluate treatment difference. Between-group 622

differences do not address the individual within-patient change that is used to evaluate 623

whether a meaningful score change is observed. A treatment effect is different from a 624

meaningful within-patient change. The terms minimally clinically important difference 625

(MCID) and minimum important difference (MID) do not define meaningful within-patient 626

change if derived from group-level data and therefore should be avoided. Additionally, the 627

minimum change may not be sufficient to serve as a basis for regulatory decisions. 628
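The distinction can be illustrated with a small sketch. The data, the 4-point threshold, and the convention that a negative change means improvement are all hypothetical assumptions made for illustration; they are not taken from this document.

```python
from statistics import mean


def between_group_difference(changes_a, changes_b):
    """Group-level summary: difference in mean change from baseline between two arms."""
    return mean(changes_a) - mean(changes_b)


def proportion_meeting_threshold(changes, threshold):
    """Patient-level summary: share of patients whose own change reaches the threshold.

    Assumes lower scores are better, so improvement is a negative change.
    """
    return sum(c <= -threshold for c in changes) / len(changes)


# Hypothetical change-from-baseline scores for six patients per arm.
treatment = [-6, -5, -1, 0, -4, -2]
placebo = [-3, -2, -3, -2, -3, -2]

print(between_group_difference(treatment, placebo))   # difference in mean change
print(proportion_meeting_threshold(treatment, 4))     # within-patient view, treatment arm
print(proportion_meeting_threshold(placebo, 4))       # within-patient view, placebo arm
```

In this made-up data the arms differ by only half a point on average, yet half of the treated patients and none of the placebo patients reach the assumed within-patient threshold. The two summaries answer different questions.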

A. Anchor-Based Methods to Establish Meaningful Within-Patient Change

Anchor-based methods use the association between the concept of interest assessed by the target COA and the concept measured by one or more separate measures, referred to as anchor measures, which are often other COAs. FDA recommends the use of anchor-based methods supplemented with both empirical cumulative distribution function (eCDF) and probability density function (PDF) curves to establish a threshold, or a range of thresholds, that would constitute a meaningful within-patient change in the score of the target COA or the derived endpoint for the target patient population. The anchor measure(s) are used as external criteria to define patients who have or have not experienced a meaningful change in their condition, and the change in COA score is then evaluated within each of these sets of patients. Sponsors should provide evidence for what constitutes a meaningful change on the anchor scale by specifying and justifying the anchor response category that represents a clinically meaningful change to patients (e.g., a 2-category decrease on a 5-category patient global impression of severity scale).
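As a rough sketch of the grouping step described above, the following summarizes COA change scores within each anchor category. The record layout, the use of the median as the summary statistic, and the data are hypothetical assumptions; the document does not prescribe a particular summary.

```python
from collections import defaultdict
from statistics import median


def coa_change_by_anchor(records):
    """Group COA change scores by anchor (PGIS) change category.

    records: iterable of (pgis_change, coa_change) pairs, one per patient.
    Returns {pgis_change: (number of patients, median COA change)}.
    """
    groups = defaultdict(list)
    for pgis_change, coa_change in records:
        groups[pgis_change].append(coa_change)
    return {cat: (len(vals), median(vals)) for cat, vals in sorted(groups.items())}


# Hypothetical data: PGIS change in categories, COA change in score points.
records = [
    (-2, -12), (-2, -9), (-2, -15),
    (-1, -5), (-1, -7),
    (0, 0), (0, -2), (0, 1),
    (1, 4),
]

for category, (n, med) in coa_change_by_anchor(records).items():
    print(f"PGIS change {category:+d}: n={n}, median COA change={med}")
```

If a 2-category PGIS decrease were the justified anchor response, the COA change distribution in that group (here summarized only by its median) would inform the proposed threshold or range.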

Considerations for Anchor Measures

• Selected anchors should be plainly understood in context, easier to interpret than the COA itself, and sufficiently associated with the target COA or COA endpoint.

• Multiple anchors should be explored to provide an accumulation of evidence to help interpret a clinically meaningful within-patient score change (which can also be a range) in the clinical outcome endpoint score.

• Selected anchors should be assessed at time points comparable to those of the target COA but completed after the target COA.

• The following anchors are sometimes recommended to generate appropriate threshold(s) that represent a meaningful within-patient change in the target patient population:

– Static, current-state global impression of severity scale (e.g., PGIS)

– Global impression of change scale (e.g., patient global impression of change or PGIC)

– Well-established clinical outcomes (if relevant)

• A static, current-state global impression of severity scale is recommended at minimum, when appropriate, since these scales are less likely to be subject to recall error than global impression of change scales; they also can be used to assess change from baseline.

B. Using Empirical Cumulative Distribution Function and Probability Density Function Curves to Supplement Anchor-Based Methods

The eCDF and PDF curves can be used to supplement anchor-based methods. The eCDF curves display a continuous view of the change (both positive and negative) in the COA-based endpoint score from baseline to the proposed time point on the horizontal axis, with the vertical axis representing the cumulative proportion of patients experiencing up to that level of score change. An eCDF curve should be plotted for each distinct anchor category as defined and identified by the anchor measure(s) (e.g., much worse, worse, no change, improved, much improved).
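The construction described above can be sketched in a few lines. This is an illustrative outline under assumed inputs: the category labels, the record layout, and the data are hypothetical, and the plotting step is left out.

```python
def ecdf(values):
    """Return [(x, p)] where p is the proportion of patients with change <= x."""
    ordered = sorted(values)
    n = len(ordered)
    points = []
    for i, x in enumerate(ordered, start=1):
        if points and points[-1][0] == x:
            points[-1] = (x, i / n)   # tied values share one step of the curve
        else:
            points.append((x, i / n))
    return points


def ecdf_by_anchor(records):
    """Build one eCDF per anchor category from (category, coa_change) pairs."""
    groups = {}
    for category, change in records:
        groups.setdefault(category, []).append(change)
    return {category: ecdf(changes) for category, changes in groups.items()}


# Hypothetical change-from-baseline scores labelled by anchor category.
records = [
    ("improved", -8), ("improved", -5), ("improved", -5), ("improved", -1),
    ("no change", -2), ("no change", 0), ("no change", 1), ("no change", 3),
]

for category, points in ecdf_by_anchor(records).items():
    print(category, points)
```

Each list of points gives the horizontal (score change) and vertical (cumulative proportion) coordinates of a step curve for one anchor category.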

As a reference, Figure 2 provides an example of eCDF curves. Note that the median change is indicated by the red line in this example. Each curve in the example is defined by the number of PGIS categories by which patients increased or decreased. In some instances, changes of the same number of categories (two, one, or zero) are not equivalent. This should be considered when choosing an anchor summary and when interpreting these figures and data.

Figure 2: Example of Empirical Cumulative Distribution Function Curves of Change in COA Score from Baseline to Primary Time Point by Change in PGIS Score

Abbreviations: PGIS = patient global impression of severity; COA = clinical outcome assessment

The PDF curves are useful in aiding the interpretation of eCDF curves. Compared with eCDF curves, PDF curves may provide a more intuitive overview of the shape, dispersion, and skewness of the distribution of the change from baseline in the endpoint of interest across various anchor categories. Figure 3 provides an example of PDF curves.
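One common way to draw such a curve from observed change scores is a kernel density estimate. The sketch below uses a Gaussian kernel with a hand-picked bandwidth; both choices, and the data, are assumptions for illustration, since this document does not specify how the density curves are estimated.

```python
import math


def gaussian_kde(values, bandwidth):
    """Return a function estimating the density of `values` with a Gaussian kernel."""
    n = len(values)
    norm = n * bandwidth * math.sqrt(2 * math.pi)

    def density(x):
        return sum(math.exp(-0.5 * ((x - v) / bandwidth) ** 2) for v in values) / norm

    return density


# Hypothetical change scores for patients in one anchor category.
improved = [-8, -5, -5, -1]
density = gaussian_kde(improved, bandwidth=2.0)  # bandwidth chosen arbitrarily

step = 0.5
grid = [i * step for i in range(-40, 21)]        # score changes from -20 to 10
curve = [(x, density(x)) for x in grid]
area = sum(d for _, d in curve) * step           # crude check that the curve integrates to ~1

print(f"Density at -5: {density(-5):.3f}, area under curve: {area:.3f}")
```

Repeating this per anchor category and overlaying the curves gives a view of shape, spread, and skewness that complements the eCDF. The bandwidth strongly affects how smooth the curve looks and would need justification in practice.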

Figure 3: Example of Density Function Curves of Change in COA Score from Baseline to Primary Time Point by Change in PGIS Score

Abbreviations: PGIS = patient global impression of severity; COA = clinical outcome assessment

C. Other Methods

1. Potentially Useful Emerging Methods

Other methods may be explored to complement anchor-based methods or when anchor-based methods are not feasible (i.e., when no adequate anchor measure is available). For example, mixed methods may be used to triangulate and interpret COA-based endpoint results. The qualitative research methods described in the PFDD Guidance 1 and Guidance 2 documents, including cognitive interviews, exit interviews, and surveys, are frequently used to help inform the improvement threshold. In addition, patient preference studies, typically surveys or interviews, may be used to help interpret and support clinical trial results.

Several methods are emerging in the health sector as potential ways to derive and interpret clinically meaningful change (Duke Margolis meeting summary, 2017), including scale-judgement and bookmarking/standard-setting. These methods are relatively new in the regulatory setting.

2. Distribution-Based Methods

Distribution-based methods (e.g., effect sizes, certain proportions of the standard deviation and/or standard error of measurement) do not directly take into account the patient voice and as such cannot be the primary evidence for within-patient clinical meaningfulness. Distribution-based methods can, however, provide information about measurement variability.
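For concreteness, two commonly cited distribution-based quantities are sketched below: half of the baseline standard deviation, and the standard error of measurement computed as SD × sqrt(1 − reliability). The choice of these two quantities, the reliability value of 0.84, and the data are illustrative assumptions rather than recommendations from this document.

```python
import math
from statistics import stdev


def half_sd(baseline_scores):
    """Half of the sample standard deviation of baseline scores."""
    return 0.5 * stdev(baseline_scores)


def standard_error_of_measurement(baseline_scores, reliability):
    """SEM = SD * sqrt(1 - reliability), with reliability between 0 and 1."""
    return stdev(baseline_scores) * math.sqrt(1 - reliability)


# Hypothetical baseline scores and an assumed reliability coefficient.
baseline = [40, 50, 60, 45, 55]

print(f"0.5 SD: {half_sd(baseline):.2f}")
print(f"SEM:    {standard_error_of_measurement(baseline, 0.84):.2f}")
```

Both numbers depend only on the spread of scores and the instrument's reliability. Neither uses any patient judgment about what change matters, which is why such values describe measurement variability rather than meaningfulness.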

3. Receiver Operator Characteristic Curve Analysis

Unless there is significant knowledge about how a COA performs in a specific context of use, FDA does not recommend using receiver operator characteristic (ROC) curve analysis as a primary method to determine the thresholds for within-patient meaningful change scores. The ROC curve method is a model-based approach, such that different models may yield different threshold values. Additionally, the ROC curve method is partially a distribution-based approach, such that the distribution of the change scores of the two groups will determine the location of the threshold. The most sensitive threshold identified by ROC analysis may not actually be the most clinically meaningful threshold to patients.

The ROC curve method is appropriate for evaluating the performance (e.g., sensitivity and specificity) of the proposed responder thresholds derived from the anchor-based methods.
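A minimal sketch of that supporting role follows: it takes a threshold proposed elsewhere (for example, from an anchor-based analysis) and reports how well it classifies patients against the anchor. The binary anchor classification, the 8-point threshold, the convention that negative change means improvement, and the data are hypothetical.

```python
def sensitivity_specificity(records, threshold):
    """Evaluate a proposed responder threshold against an anchor classification.

    records: (anchor_improved, coa_change) pairs, one per patient.
    A patient counts as a responder if coa_change <= -threshold.
    Returns (sensitivity, specificity).
    """
    tp = fn = tn = fp = 0
    for anchor_improved, change in records:
        responder = change <= -threshold
        if anchor_improved and responder:
            tp += 1
        elif anchor_improved:
            fn += 1
        elif responder:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical data: anchor-defined improvement and COA change from baseline.
records = [
    (True, -12), (True, -9), (True, -4), (True, -10),
    (False, -2), (False, 0), (False, -9), (False, 3),
]

sens, spec = sensitivity_specificity(records, threshold=8)
print(f"sensitivity={sens}, specificity={spec}")
```

Here the threshold is an input being checked, not an output chosen to optimize the classification, which keeps the ROC-style quantities in an evaluative role.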

D. Applying Within-Patient Change to Clinical Trial Data

Clinical trials compare groups. To help evaluate what constitutes a meaningful within-patient change (i.e., improvement and deterioration from the patients’ perspective), you should examine whether the treatment arms show separation across the range of clinically meaningful within-patient change thresholds, where those thresholds are established using the methodologies described in other parts of this document.

When analyzing a COA-based endpoint as either a continuous or an ordinal variable, it is important to evaluate and justify the clinical relevance of any observed treatment effect. Sponsors should plan to evaluate the meaningfulness of within-patient changes to aid in the interpretation of the COA-based endpoint results by submitting a supportive graph (i.e., an eCDF) of within-patient changes in scores from baseline, with separate curves for each treatment arm. The graph will be used to assess whether the treatment effect occurs in the range that patients consider to be clinically meaningful.
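The comparison that such a graph supports can be sketched numerically by reading both arms' curves at several candidate thresholds. The arm data, the threshold range of 6 to 10 points, and the convention that negative change means improvement are hypothetical assumptions.

```python
def proportion_improved_by(changes, threshold):
    """Share of patients whose change from baseline is at least `threshold` points of improvement."""
    return sum(c <= -threshold for c in changes) / len(changes)


def separation_over_range(active, control, thresholds):
    """For each threshold, return (threshold, active proportion, control proportion, difference)."""
    rows = []
    for t in thresholds:
        p_active = proportion_improved_by(active, t)
        p_control = proportion_improved_by(control, t)
        rows.append((t, p_active, p_control, p_active - p_control))
    return rows


# Hypothetical within-patient changes from baseline in each arm.
active = [-12, -10, -9, -7, -6, -3, -1, 0]
control = [-9, -6, -4, -3, -2, -1, 0, 2]

for t, p_a, p_c, diff in separation_over_range(active, control, thresholds=[6, 8, 10]):
    print(f"threshold {t}: active={p_a:.3f}, control={p_c:.3f}, difference={diff:.3f}")
```

In this made-up example the arms separate at every threshold in the assumed range. The output is descriptive only: it carries no estimate of uncertainty and is not a statistical test.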

Figure 4 provides an example of an eCDF curve by treatment arm in which there is consistent separation between the treatment arms. In this example, the treatment effect occurs in the range patients consider to be clinically meaningful.

Figure 4: An eCDF Curve by Treatment Arm Showing Consistent Separation Between Two Treatment Arms

Abbreviation: eCDF = empirical cumulative distribution function

Figure 5 provides an example of an eCDF curve where the treatment effect does not occur in the range patients consider to be clinically meaningful. Of note, the eCDF does not take into account estimation uncertainty and is not a statistical test.

Figure 5: An eCDF Curve Where Treatment Effect Is Not in Range Considered Clinically Meaningful by Patients

Abbreviations: eCDF = empirical cumulative distribution function; COA = clinical outcome assessment

IV. ADDITIONAL CONSIDERATIONS

Key Messages in This Section

• Appropriately positioned COAs intended to support approval and/or labeling claims are in the

endpoint testing hierarchy.

• A trial’s protocol and SAP should state each COA-based endpoint as a specific clinical trial

objective.

• Address multiplicity concerns and plans for handling missing data at both the instrument and

patient level.

• Short list of formatting and submission considerations applicable to COA data.

When planning a study, confirm the following:

1. Each COA-based endpoint is stated as part of a specific clinical trial objective

2. COAs intended to support meaningful outcomes to patients (i.e., labeling claims or other communications) are fit-for-purpose and sufficiently sensitive to detect clinically meaningful changes

3. Clinical trial duration is adequate to support COA objectives

4. Frequency and timing of COA administration are appropriate given patient population, clinical trial design and objectives, and demonstrated COA measurement properties