December 2019

United States Government Accountability Office

Science, Technology Assessment, and Analytics

Report to Congressional Requesters

TECHNOLOGY ASSESSMENT

Artificial Intelligence

in Health Care

Benefits and Challenges of Machine Learning in

Drug Development

With field background content from the National Academy

of Medicine

GAO-20-215SP

Jointly published with

The cover image displays a stylized representation of data inputs from a variety of sources—including patient data from

health records or clinical trials, lab research data, textual data from scientific and medical literature, and data on

compounds and their properties—to a computer representing machine learning algorithms. Those algorithms then

assist with various aspects of the drug development process, eventually resulting in a marketed drug.

This report is being jointly published by the Government Accountability Office (GAO) and the National Academy of

Medicine (NAM). Part One of this joint publication presents material excerpted and adapted by NAM from its 2020

NAM Special Publication Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. Part Two is the

full presentation of GAO’s Technology Assessment Artificial Intelligence in Health Care: Benefits and Challenges of

Machine Learning in Drug Development. Although GAO and NAM staff consulted with and assisted each other

throughout this work, reviews were conducted by NAM and GAO separately and independently, and authorship of the

text of Part One and Part Two of the report lies solely with NAM and GAO, respectively.

With the exception of Part One of this joint publication, this is a work of the U.S. government and is not subject to

copyright protection in the United States. All but Part One may be reproduced and distributed in its entirety without

further permission from GAO. However, because the joint publication may contain copyrighted images or other

material, permission from the copyright holder may be necessary if you wish to reproduce this material separately.

The National Academy of Medicine is the author of Part One and waives its copyright rights for that material.

GAO-20-215SP i

Foreword

The U.S. health care system is at an important crossroads as it faces major demographic shifts,

burgeoning costs, and transformative technologies. Although the growth in health care costs has

moderated recently, total annual health care spending in the United States is projected to reach nearly

$6 trillion by 2027. Federal spending for health care programs—which accounts for more than a quarter

of all health care spending—has grown faster than the overall economy in recent years, a trend

projected to continue. Every day more than 10,000 Americans turn age 65, becoming eligible for

Medicare. These demographic realities help illustrate the critical need to better address the

effectiveness and efficiency of our nation’s health care delivery systems.

Artificial intelligence and machine learning (AI/ML) is a set of technologies that includes automated

systems able to perform tasks that normally require human intelligence, such as visual perception,

speech recognition, and decision-making. AI/ML has promising applications in health care, including

drug development. For example, it may have the potential to help identify new treatments, reduce

failure rates in clinical trials, and generally result in a more efficient and effective drug development

process. However, applying AI/ML technologies within the health care system also raises ethical, legal,

economic, and social questions.

The Government Accountability Office (GAO) and the National Academy of Medicine (NAM), individually

and in collaboration, have taken up the charge to explore AI/ML in health care, assess its implications,

and identify key options available for optimizing its use. In recognition of mutual interests and

obligations, and to reinforce and complement each other’s work, NAM and GAO have cooperated on the

development of two publications. The first is NAM’s Special Publication: Artificial Intelligence in Health

Care: The Hope, the Hype, the Promise, the Peril, adapted excerpts of which are presented as Part One of

this joint publication. Any recommendations in Part One are those of NAM alone. The second is GAO’s

Technology Assessment: Artificial Intelligence in Health Care: Benefits and Challenges of Machine

Learning in Drug Development, presented as Part Two.

This cooperative effort included two expert meetings, bringing diverse, interdisciplinary, and cross-

sectoral perspectives to the discussions. We are grateful to the exceptionally talented staff of NAM and

GAO as well as the experts, all of whom worked hard with enthusiasm, great skill, flexibility, clarity, and

drive to make this joint publication possible.

Sincerely,

Timothy M. Persons, PhD

Chief Scientist and Managing Director,

Science, Technology Assessment, and Analytics

U.S. Government Accountability Office

J. Michael McGinnis, MD, MA, MPP

Leonard D. Schaeffer Executive Officer, and

Executive Director, NAM Leadership Consortium

GAO-20-215SP ii

Executive Summary

This report is being jointly published by the Government Accountability Office (GAO) and the National

Academy of Medicine (NAM). Part One of this joint publication presents material excerpted and adapted

by NAM from its 2020 Special Publication Artificial Intelligence in Health Care: The Hope, the Hype, the

Promise, the Peril. Part Two is the full presentation of GAO’s Technology Assessment Artificial

Intelligence in Health Care: Benefits and Challenges of Machine Learning in Drug Development. Although

GAO and NAM staff consulted with and assisted each other throughout this work, reviews were

conducted by NAM and GAO separately and independently, and authorship of the text of Part One and

Part Two of this Executive Summary and the following report lies solely with NAM and GAO,

respectively.

OVERVIEW OF PART ONE – NAM Special Publication: Artificial Intelligence in Health

Care: The Hope, the Hype, the Promise, the Peril

The National Academy of Medicine’s Special Publication: Artificial Intelligence in Health Care: The Hope,

the Hype, the Promise, the Peril surveys current knowledge to present an accessible guide for relevant

health care stakeholders such as artificial intelligence, machine learning (AI/ML) model developers,

clinical implementers, clinicians, patients, and regulation and policy makers.

1

In this publication, an NAM

expert working group comprised of leaders from various disciplines—public health, informatics,

biomedical ethics, and implementation science—provides a sampling of present-day AI applications with

a look to near-term possibilities, highlights the associated challenges and limitations, and outlines

fundamental ethical, legal, regulatory, and societal considerations for the successful development and

implementation of health care AI.

A key component shaping the publication was a January 2019 NAM convening of more than 60 experts

from a range of stakeholder communities to consider how the draft could best ensure coverage of the

most significant issues facing the development, deployment, or use of AI/ML in health care; that the

1

Matheny, M., S. Thadaney, M. Ahmed, and D. Whicher, editors. Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril.

Washington, DC: National Academy of Medicine. Part One of this Joint Publication presents material excerpted and adapted by NAM from its

2020 Special Publication Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. Although GAO staff and leadership

were consulted throughout the development process, authorship for the text lies solely with the National Academy of Medicine, the editors,

and the authors (identified in the relevant sections and at the end of Part One).

GAO-20-215SP iii

solutions and approaches described and reviewed provided fair and balanced guidance for those

interested in developing and deploying AI/ML models in health care settings; and the ways the content

of the publication could most facilitate progress in the field. As an active participant, the GAO provided

critical feedback on the content of the publication focusing on these dimensions.

Drawing on those discussions, and supplemented with written comments from external experts, the

NAM publication identified several cross-cutting themes.

Potential Importance of AI/ML to Progress in Health and Health Care

With much of health and health care moving onto digital platforms, there has been a stunning growth in

the volume of information generated through routine health-related processes and from products of

health, health care, and biomedical science research. Especially as insights continue to emerge from

exploration of underlying genetic predispositions to health and disease, the ability to use of AI and ML

tools will soon be essential to assist with the growing field of precision medicine.

Furthermore, the ability to glean insights from the enormous body of data points generated daily from

mobile apps (m-Health) and sensors will require the capacity for simultaneous data processing from

multiple sources. The increasing availability of environmental and geospatial sensors developed on

digital platforms contribute yet additional data universes requiring AI/ML before incorporation into

predictive modeling tools.

AI and Transparency

As AI applications grow in their ability to lend perspective to health and health care decision-making,

there is a compelling need for transparency in algorithms and data sources with the recognition that the

need for algorithmic transparency is context-dependent, based on risk and intended use. For example, a

high impact AI tool with immediate clinical implications warrants more stringent explanation

requirements than a tool with a proven record of accuracy that is low risk and clearly conveys its

recommendations to the end user. “Therefore, AI developers, implementers, users, and regulators

should collaboratively define guidelines for clarifying the level of transparency needed across a

spectrum.”

2

2

Matheny, Thadaney, Ahmed, and Whicher. Artificial Intelligence in Health Care.

GAO-20-215SP iv

As the field advances rapidly, regulators and legislators are required to remain nimble as they balance

the complex interplay among AI innovation, safety, and trust. To avoid stymying AI development while

ensuring proper oversight, regulators must engage myriad stakeholders and experts in the evaluation of

clinical AI based on real-world data. As a harbinger of things to come, U.S. Food and Drug Administration

(FDA) recently issued a framework for evaluating health care AI based on the level of patient risk, AI

autonomy, and the dynamism of the tool.

3

Yet, to the extent that machine-learning models evolve with

new data, issues of liability will continue to unfold with increasing involvement by the courts, regulators,

and insurers.

Mitigating the hype

As the communication on the potential wonders of AI pervades social consciousness, it is easy for

misguided fears and optimism to obscure its legitimate near-term possibilities. Although AI is certainly

limited in its capacity to match the problem solving capacity of humans, AI-enabled automation is poised

for disruptive workplace innovations. Given the necessary reliance on information technology (IT) and

ML to help health professionals keep pace with the rapidly growing knowledge base, medical education

will need a substantial overhaul. This needs to happen with an added focus on the use of AI as a routine

decision-assistance tool. Training programs across multiple professions will require a focus on data

science and the appropriate use of AI products and services. The bridging function to patient and

consumer comfort levels with these emerging technologies will also need to be established to secure

the bond of confidence between clinicians and their patients. Ultimately, the goal is to build

competency in AI and data science to the point that health care AI provides an assistive benefit to

humans rather than replacing them. For this reason, the near-term focus might be better termed

“augmented intelligence.”

Prioritizing Equity and Inclusivity

Among the many considerations in the NAM publication, especially strong emphasis was placed on the

“the appropriate and equitable development and implementation of health care AI.”

1

Prioritizing equity

and inclusion begins with algorithms that have been developed from rich, population-representative

datasets. Despite an abundance of health data, the lack of system interoperability and suboptimal data

3

Food and Drug Administration. 2019. Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML) –

Based Software as a Medical Device (SaMD). Available from: https://www.fda.gov/media/122535/download.

GAO-20-215SP v

standardization techniques prevent the effective integration of health data from disparate systems.

Without a robust base of data, AI algorithms fail to achieve successful levels of generalizability and

utility.

Ensuring that equity, and inclusivity remain at the forefront in the deployment of AI requires the active

engagement of system leaders, AI implementers, and regulators as they work to determine whether an

AI tool is suitable for a particular environment and question whether its introduction could exacerbate

existing biases and inequities. To address patient and community needs, health delivery organizations

are in the process of developing (IT) governance strategies that expand linkages to social determinants

and psychosocial data. National-scale efforts are needed to lower the barrier for adoption of these

technologies and minimize the possible creation of a digital divide in underserved communities where IT

capacities are less developed.

OVERVIEW OF PART TWO – GAO Technology Assessment: Artificial Intelligence in Health Care:

Benefits and Challenges of Machine Learning in Drug Development

The GAO report Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning in

Drug Development is the first in a planned series of technology assessments on the use of AI

technologies in health care that GAO is conducting at the request of Congress.

4

This report discusses

three topics: (1) current and emerging AI technologies available for drug development and their

potential benefits, (2) challenges to the development and adoption of these technologies, and (3) policy

options to address challenges to the use of machine learning in drug development. As one component of

this review, NAM facilitated consultation with colleagues from the National Academies, to work closely

with GAO in organizing a July 2019 meeting of 19 experts to explore these topics. NAM staff provided

expertise, based on their work on the NAM Special Publication Artificial Intelligence in Health Care: The

Hope, the Hype, the Promise, the Peril, to GAO in the identification of experts from federal agencies,

academia, biopharmaceutical companies, machine learning-focused companies, and legal scholars. The

meeting was intended to enhance GAO’s understanding of ML in health care and drug development.

4

Part Two of this Joint Publication presents the GAO Technology Assessment: Artificial Intelligence in Health Care: Benefits and Challenges of

Machine Learning in Drug Development. Although NAM staff and leadership provided assistance and advice in the identification of issues and

experts consulted during the development process (identified in app. II), responsibility for the text, findings, and options lies solely with GAO.

GAO-20-215SP vi

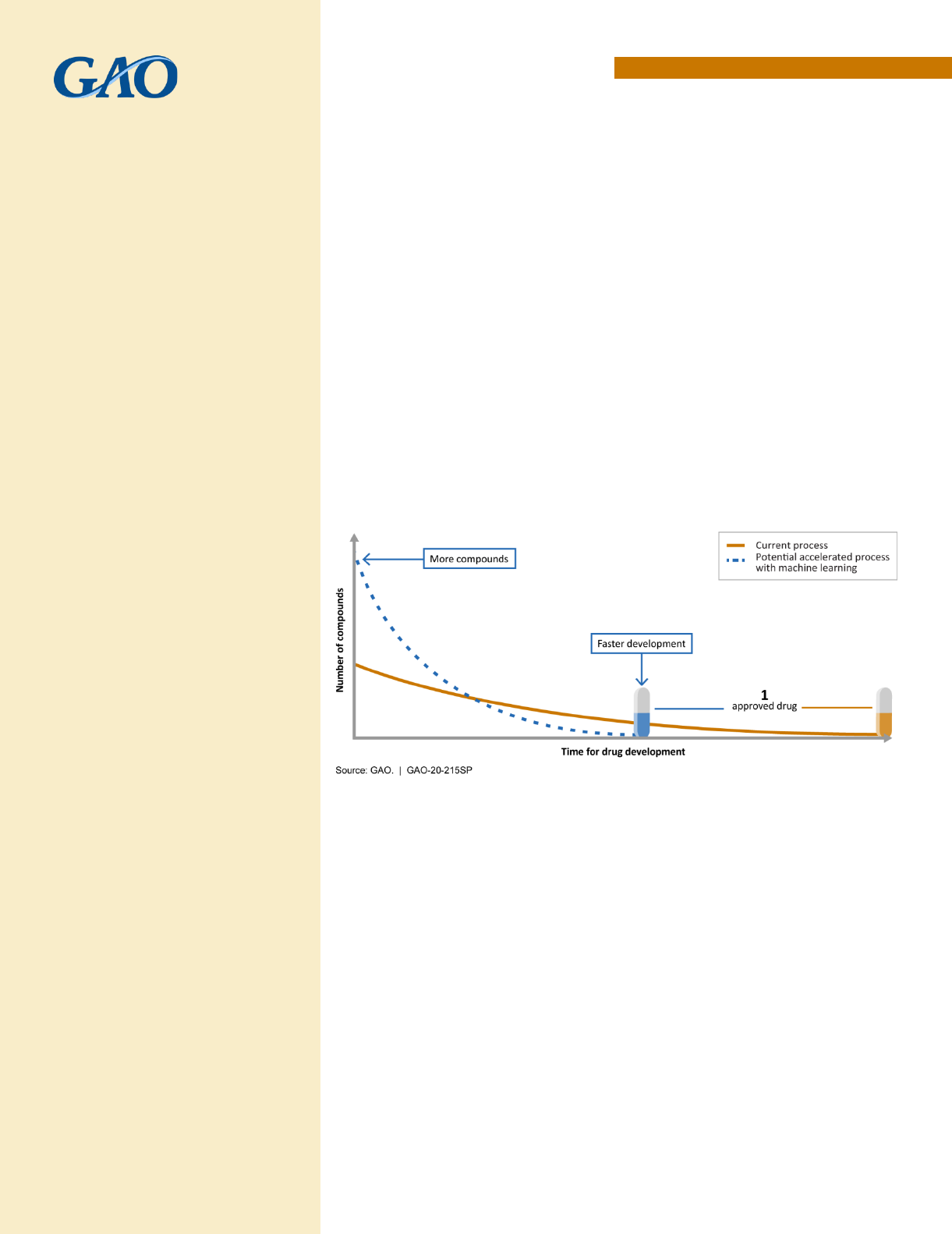

One of the report’s high-level findings is that machine learning holds tremendous potential in drug

development, according to stakeholders from government, industry, and academia. The current drug

development process is lengthy and expensive, and can take 10 to 15 years to develop a new drug and

bring it to market. ML techniques are already used throughout the drug development process and have

the potential to expedite the discovery, design, and testing of drug candidates, decreasing the time and

cost required. These improvements could save lives and reduce suffering by getting drugs to patients in

need more quickly.

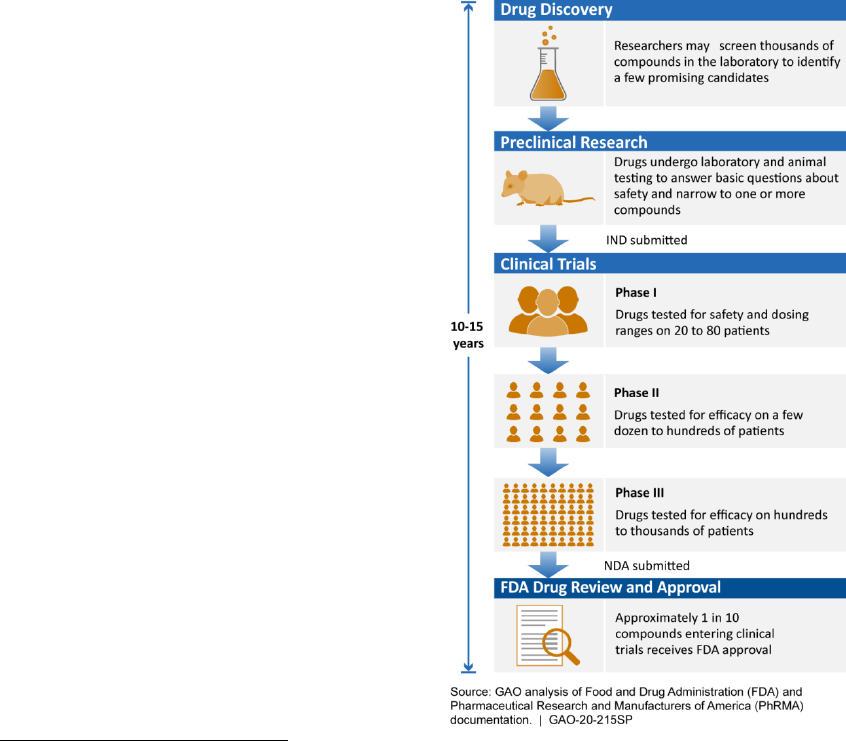

The technology assessment demonstrates the breadth of machine learning research and applications

with examples from the first three steps of the drug development process—drug discovery, preclinical

research, and clinical trials. In drug discovery, researchers are using ML to identify new drug targets,

screen known compounds for new therapeutic applications, and design new drug candidates, among

other applications. In preclinical research, ML can augment preclinical testing of drug candidates and

predict toxicity before human testing. Researchers are also beginning to use ML to improve clinical trial

design, a point where many drug candidates fail. These efforts include applying ML to patient selection

and recruitment, and to identify patient populations who may react better to certain drugs, thus

advancing towards the promise of precision medicine.

The technology assessment also identifies challenges that hinder the adoption and impact of machine

learning in drug development, according to stakeholders, experts, and the literature. Gaps in research in

biology, chemistry, and ML limit the understanding of and impact in this area. A shortage of high-quality

data, which are required for ML to be effective, is another challenge. It is also difficult to access and

share these data because of costs, legal issues, and a lack of incentives for sharing. Furthermore, a low

supply of skilled and interdisciplinary workers creates hiring and retention challenges for drug

companies. Lastly, uncertainty about regulation of machine learning used in drug development may limit

investment in this field. Some of these challenges are similar to those identified in the NAM special

publication, such as the lack of high-quality, structured data, and others are unique to drug

development.

GAO describes options for policymakers—which GAO defines broadly to include federal agencies, state

and local governments, academic and research institutions, and industry, among others—to use in

addressing these challenges. In addition to the status quo, GAO identifies five policy options centered

around research, data access, standardization, human capital, and regulatory certainty.

GAO-20-215SP vii

Table of Contents

Part One - Artificial Intelligence in Health Care: Field Background (National Academy of

Medicine) ............................................................................................................................. 1

Introduction ...................................................................................................................................... 2

1 Definitions of Key AI Terms ........................................................................................................... 2

2 A Historical Perspective and Overview of Current AI .................................................................... 4

3 How Artificial Intelligence Is Changing Health and Health Care .................................................... 6

4 Potential Tradeoffs and Unintended Consequences of AI .......................................................... 11

5 Best Practices for Machine-Learning Model Development and Validation ................................ 15

6 Deploying AI in Clinical Settings .................................................................................................. 18

7 Conclusion ................................................................................................................................... 22

Bibliography .................................................................................................................................... 24

Authors of NAM Special Publication .............................................................................................. 29

Part Two - Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning

in Drug Development (U.S. Government Accountability Office) ............................................ 30

Introduction .................................................................................................................................... 34

1 Background .................................................................................................................................. 37

1.1 The drug discovery, development, and approval process ............................................... 37

1.2 Machine learning in AI innovation ................................................................................... 39

1.3 Data generated and used in health care ......................................................................... 41

1.4 Economic considerations of drug development .............................................................. 42

2 Status and Potential Benefits of Machine Learning in Drug Development ................................. 44

2.1 Drug discovery ................................................................................................................. 45

2.2 Preclinical research .......................................................................................................... 48

2.3 Clinical trials ..................................................................................................................... 49

3 Challenges Hindering the Use of Machine Learning in Drug Development ................................ 52

3.1 Gaps in research .............................................................................................................. 53

3.2 Data quality ...................................................................................................................... 55

3.3 Data access and sharing................................................................................................... 56

3.4 Low supply of skilled and interdisciplinary workers ........................................................ 57

3.5 Regulatory challenges and federal commitment ............................................................ 57

GAO-20-215SP viii

4 Policy Options to Address Challenges to the Use of Machine Learning in Drug Development .. 59

5 Agency and expert comments ..................................................................................................... 66

Appendix I: Objectives, scope, and methodology .......................................................................... 68

Appendix II: Expert participation. ................................................................................................... 72

Appendix III: GAO contact and staff acknowledgments ................................................................. 74

National Academy of Medicine GAO-20-215SP 1

PART ONE

Artificial Intelligence in

Health Care:

Field Background

National Academy of Medicine (NAM)

Part One of this Joint Publication presents material excerpted and adapted by NAM from

its 2020 Special Publication: Artificial Intelligence in Health Care: The Hope, the Hype, the

Promise, the Peril. Although GAO staff and leadership were consulted throughout the

development process, authorship of the text lies solely with the National Academy of

Medicine, the editors, and the authors (identified in the relevant sections and at the end

of Part One).

National Academy of Medicine GAO-20-215SP 2

PART I: NAM FIELD OVERVIEW

Introduction: The emergence of artificial intelligence (AI) as a tool for better health care offers

unprecedented opportunities to improve patient and clinical team outcomes, reduce costs, and

impact population health. Many are already in use in health care. Nonetheless, the authors of

the National Academy of Medicine’s Special Publication titled Artificial Intelligence in Health

Care: The Hope, the Hype, the Promise, the Peril not only underscore the promise, but also call

out the issues for care and caution.

The material presented here has been adapted from the NAM’s Special Publication and serves to

provide a broad overview of current and near-term AI solutions; the challenges, limitations, and

best practices for AI model development, adoption, and maintenance; the current legal and

regulatory landscape for AI tools in health care; and prioritizes the need for equity, inclusion,

and a human rights lens as we proceed together into a more technological future.

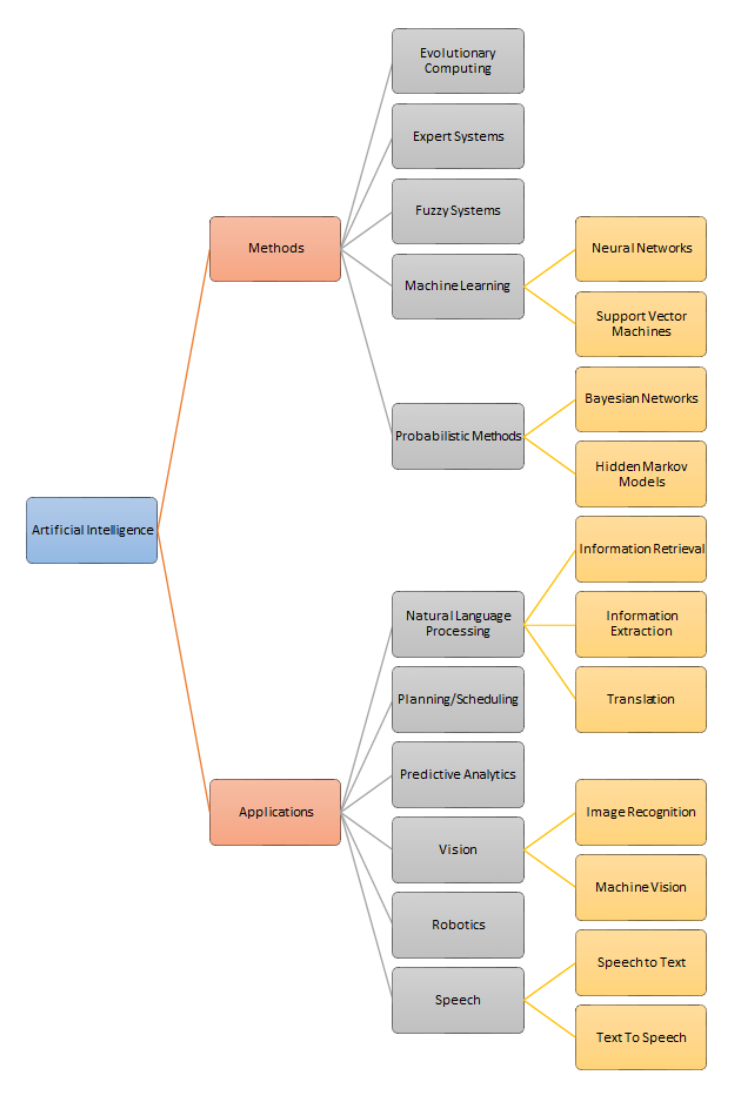

1. Definitions of Key AI Terms: The term artificial intelligence (AI), colloquially and in the

scientific literature, takes on a range of meanings, from specific forms of AI, such as machine

learning, to a hypothetical AI could be considered conscious or sentient. A formal definition of

AI starts with the Oxford English Dictionary: “The capacity of computers or other machines to

exhibit or simulate intelligent behavior; the field of study concerned with this.” More nuanced

definitions of AI might also consider what goal the AI is attempting to achieve and how it is

pursuing that goal. In general, AI systems range from those that attempt to accurately model

human reasoning to solve a problem, to those that ignore human reasoning and exclusively use

large volumes of data to generate a framework to answer the question(s) of interest, to those

that attempt to incorporate elements of human reasoning but do not require accurate modeling

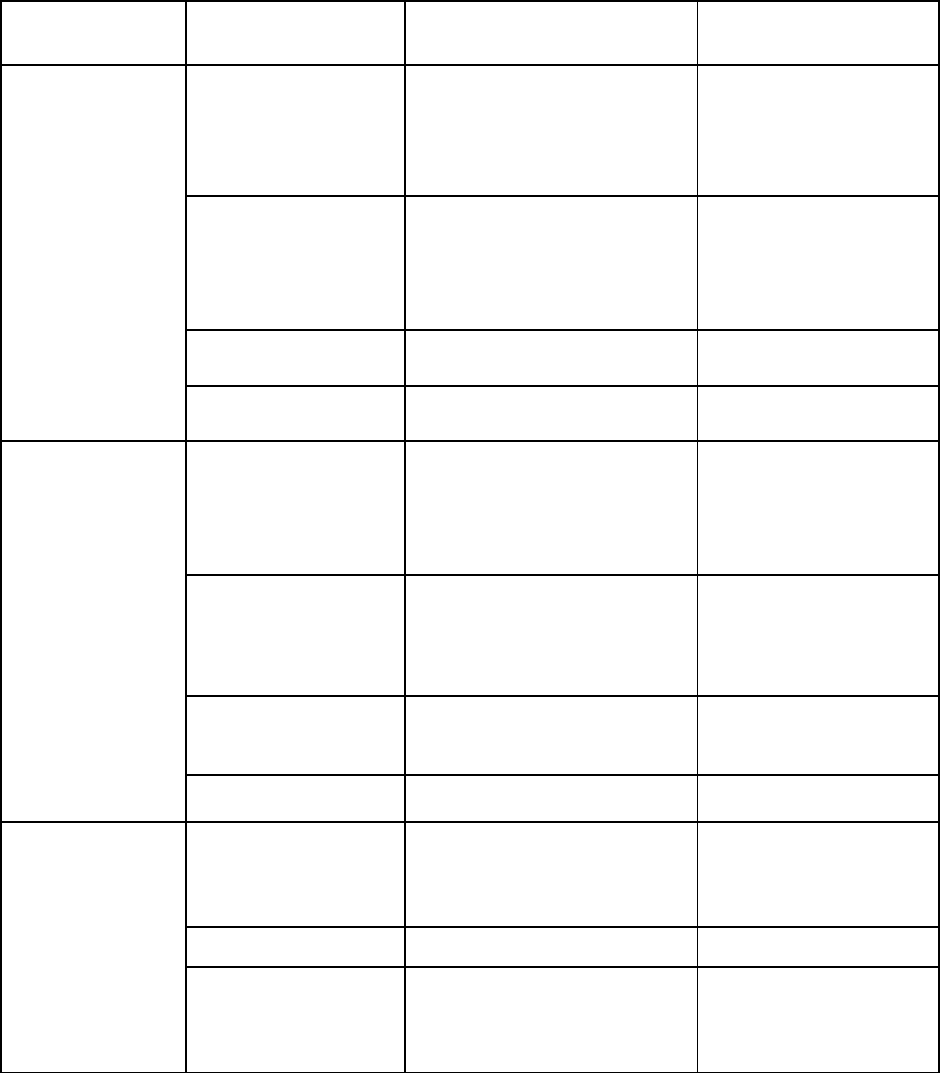

of human processes. The graphic below summarizes the domains of artificial intelligence

(Figure 1).

National Academy of Medicine GAO-20-215SP 3

Figure 1 | A summary of the domains of artificial intelligence

SOURCE: Adapted with permission from a figure in Mills, M. 2015. Artificial Intelligence in Law—

The State of Play in 2015? Legal IT Insider. https://www.legaltechnology.com/latest-

news/artificial-intelligence-in-law-the-state-of-play-in-2015.

National Academy of Medicine GAO-20-215SP 4

Machine learning is a family of statistical and mathematical modeling techniques that uses a

variety of approaches to automatically learn and improve the prediction of a target state,

without explicit programming (Witten et al., 2016). Different methods, such as Bayesian

networks, random forests, deep learning, and artificial neural networks, each use different

assumptions and mathematical frameworks for how data is ingested, and learning occurs

within the algorithm. Regression analyses, such as linear and logistic regression, are also

considered machine learning methods, although many users of these algorithms distinguish

them from commonly defined machine learning methods (e.g., random forests, Bayesian

Networks [BNs], etc.).

Natural language processing (NLP) enables computers to understand and organize human

languages (Manning and Schütze, 1999). NLP needs to model human reasoning because it

considers the meaning behind written and spoken language in a computable, interpretable, and

accurate way. NLP incorporates rule-based and data-based learning systems, and many of the

internal components of NLP systems are themselves machine learning algorithms with pre-

defined inputs and outputs, sometimes operating under additional constraints. Examples of

NLP applications include assessment of cancer disease progression and response to therapy

among radiology reports (Kehl et al., 2019), and identification of post-operative complication

from routine EHR documentation (Murff et al., 2011).

Expert systems are a set of computer algorithms that seek to emulate the decision-making

capacity of human experts (Feigenbaum, 1992; Jackson, 1998; Leondes, 2002; Shortliffe and

Buchanan, 1975). These systems rely largely on a complex set of Boolean and deterministic

rules. An expert system is divided into a knowledge base, which encodes the domain logic, and

an inference engine, which applies the knowledge base to data presented to the system to

provide recommendations or deduce new facts.

Authors: Michael Matheny, MD, MS, MPH, Sonoo Thadaney Israni, MBA, Mahnoor Ahmed,

MEng, and Danielle Whicher, PhD, MHS

2. A Historical Perspective and Overview of Current AI: If the term “artificial intelligence”

might be given a birth date, it could be August 31, 1955, when John McCarthy, Marvin L. Minsky,

Nathaniel Rochester, and Claude E. Shannon submitted “A Proposal for the Dartmouth Summer

Research Project on Artificial Intelligence”. The proposal and the resulting conference—the

National Academy of Medicine GAO-20-215SP 5

1956 Dartmouth Summer Research Project on Artificial Intelligence—were the culmination of

decades of thought by many others (Buchanan, 2005; Kline, 2011; Turing, 1950; Weiner, 1948).

Although the conference produced neither formal collaborations nor tangible outputs, it helped

galvanize the field (Moor, 2006).

Thought leaders in this era saw the future clearly, although optimism was substantially

premature. In 1960, J. C. R. Licklider wrote, “The hope is that, in not too many years, human

brains and computing machines will be coupled together very tightly, and that the resulting

partnership will think as no human brain has ever thought and process data in a way not

approached by the information-handling machines we know today” (Licklider, 1960).

By the 1970s, excitement gave way to disappointment because early successes that worked in

well-structured, narrow problems failed to both generalize to broader problem solving and

deliver operationally useful systems. The disillusionment, summarized in the ALPAC (Automatic

Language Processing Advisory Committee)

and Lighthill reports, resulted in an “AI Winter” with

shuttered projects, evaporation of research funding, and general skepticism on the potential for

AI systems (McCarthy, 1974; National Research Council, 1996).

Yet in health care, work continued. Iconic expert systems such as MYCIN (Shortliff, 1974) and

others including Iliad, Quick Medical Reference, and Internist-1, were developed to assist with

clinical diagnosis. AI flowered commercially in the 1980s, becoming a multibillion-dollar

industry advising military and commercial interests (Miller, 1982; Sumner, 1993). However, all

of these prospects ultimately failed to fulfill the hype and lofty promises, resulting in a second

AI Winter from the late 1980s until the late 2000s

During this second AI Winter, the schools of computer science, probability, mathematics, and AI

collaborated to overcome the initial failures of AI. In particular, techniques from probability and

signal processing, such as Hidden Markov Models, Bayesian networks, and Stochastic search

and optimization were incorporated into AI thinking, resulting in the field known as machine

learning.

Around 2010, AI again regained prominence due to the success of machine learning and data

science techniques, as well as significant increases in computational storage and power. These

advances fueled the growth of technology titans such as Google and Amazon. Various ideas have

laid the groundwork for artificial neural networks which have come to dominate the field of

National Academy of Medicine GAO-20-215SP 6

machine learning. (Halevy et al., 2009; Krizhevsky, 2012). The resulting systems are called

“deep learning systems” and show significant performance improvements over prior

generations of algorithms for some use cases.

Modern AI has evolved from an interest in machines that think to ones that sense, think, and act.

It is important to distinguish narrow from general AI. The popular conception of AI is of a

computer, hypercapable in all domains, such as was seen even decades ago in science fiction

with HAL 9000 in 2001: A Space Odyssey (Stanley Kubrick, 1968) or aboard the USS Enterprise

in the Star Trek franchise (Gene Roddenberry, 1966). These are examples of general AIs and, for

now, are fictional. There is an active but niche general AI research community represented by

Deepmind, Cyc, and OpenAI, among others. Narrow AI, in contrast, is an AI specialized at a

single task, such as playing chess, driving a car, or operating a surgical robot.

Still, history has shown that AI has gone through multiple cycles of emphasis and

disillusionment in use. It is critical that all stakeholders are aware and actively seek to educate

and address public expectations and understanding of AI (and associated technologies) in order

to manage hype and establish reasonable expectations, which will enable AI to be applied in

effective ways that have reasonable opportunities for sustained success.

Authors: Jim Fackler, MD and Edmund Jackson, PhD

3. How Artificial Intelligence Is Changing Health and Health Care: The health care industry

has been investing for years in technology solutions with the potential to transform health and

health care There are promising examples, but there are gaps in the evaluation of these tools

including AI, so it can be difficult to assess their impact. The NAM Special Publication reviews

the potential of AI solutions for patients and families; the clinical care team; public health and

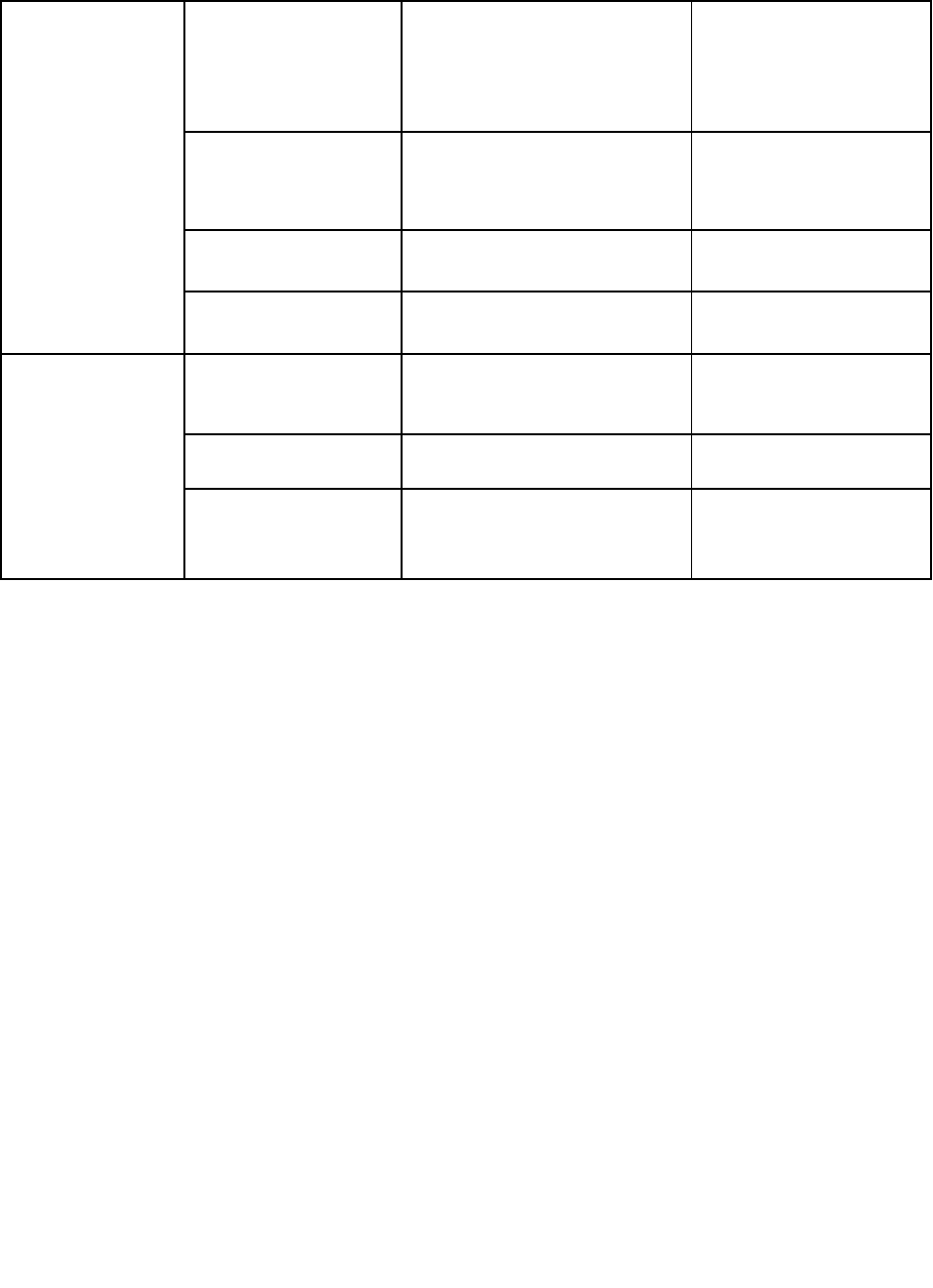

population health program managers; business administrators; and researchers (Figure 2).

Here we provide a sample of the potential solutions for patients and families, the clinical care

team, and public health and population health program managers are detailed below.

National Academy of Medicine GAO-20-215SP 7

Figure 2 | Examples of AI applications for stakeholder groups

Use Case/User

Group

Category

Illustrative Examples of

Applications

Technology

Patients and

Families

• Health

monitoring

• Benefit/risk

assessment

• Devices and wearables

• Smartphone and tablet

apps, websites

Machine learning,

natural language

processing (NLP),

speech recognition,

chatbots

• Disease

prevention and

management

• Obesity reduction

• Diabetes prevention and

management

• Emotional and mental

health support

Conversational AI, NLP,

speech recognition,

chatbots

• Medication

management

• Medication adherence

Robotic home telehealth

• Rehabilitation

• Stroke rehabilitation

using apps and robots

Robotics

Clinical Care

Teams

• Early detection,

prediction, and

diagnostics tools

• Imaging for cardiac

arrhythmia detection,

retinopathy

• Early cancer detection

(e.g., melanoma)

Machine Learning

• Surgical

Procedures

• Remote-controlled

robotic surgery

• AI-supported surgical

roadmaps

Robotics, machine

learning

• Precision

Medicine

• Personalized

chemotherapy treatment

Supervised machine

learning, reinforcement

learning

• Patient Safety

• Early detection of sepsis

Machine learning

Public Health

Program

Managers

• Identification

of individuals at

risk

• Suicide risk identification

using social media

Deep learning

(convolutional and

recurrent neural

networks)

• Population health

• Eldercare monitoring

Ambient AI sensors

• Population health

• Air pollution

epidemiology

• Water microbe detection

Deep learning,

geospatial pattern

mining, machine

learning

National Academy of Medicine GAO-20-215SP 8

Business

Administrators

• International

Classification

of Diseases, 10th

Rev. (ICD-10)

coding

• Automatic coding of

medical records for

reimbursement

Machine learning, NLP

• Fraud detection

• Health care billing fraud

• Detection of unlicensed

providers

Supervised,

unsupervised, and

hybrid machine learning

• Cybersecurity

• Protection of personal

health information

Machine learning, NLP

• Physician

management

• Assessment of physician

competence

Machine learning, NLP

Researchers

• Genomics

• Analysis of tumor

genomics

Integrated cognitive

computing

• Disease

prediction

• Prediction of ovarian

cancer

Neural networks

• Discovery

• Drug discovery and

design

Machine learning,

computer-assisted

synthesis

AI for Patients and Family: AI could soon play an important role in assisting patients and their

families in the self-management of chronic diseases such as cardiovascular diseases, diabetes,

and depression by assisting patients with taking medications, modifying diet, getting more

physically active, assisting with care management, wound care, device management, and the

delivery of injectables. Conversational agents, which can engage in two-way dialogue with the

user via speech recognition, offer one example of how self-management of these diseases could

be supplemented by AI solutions. Well known examples include Apple’s Siri, Amazon’s Alexa, or

Microsoft’s Cortana. Powered by NLP and natural language understanding, these interfaces may

include text-based dialogue or present a human image (e.g., the image of nurse or coach) or a

non-human image (e.g., a robot or animal) to provide a richer interactive experience.

Conversational agents actually already exist to address depression, smoking cessation, asthma,

and diabetes, although formal evaluation of these agents has been limited (Fitzpatrick et al.,

2017).

In a more passive application for patients and families, AI can use raw data from

accelerometers, gyroscopes, microphones, cameras, and smartphones for health monitoring

and risk prediction. By using machine-learning algorithms to recognize patterns from the raw

National Academy of Medicine GAO-20-215SP 9

data inputs and then categorize these patterns as indicators of an individual’s behavior and

health status, these systems can allow patients to understand and manage their own health and

symptoms, as well as share data with medical providers. Consumer interest is high (~50%) in

using data generated by apps, wearables, and Internet-of-Things devices to predict health risks

(Accenture, 2018). Since 2013, AI start-up companies with a focus on health care and wearables

have raised $4.3 billion to develop smart clothing, for example, bras designed for breast cancer

risk prediction and other clothes for cardiac, lung, and movement sensing (Wiggers, 2018).

AI Solutions for the Clinical Care Team: There are two main areas of opportunity for AI in clinical

care: (1) enhancing and optimizing care delivery and (2) improving information management,

user experience, and cognitive support in EHRs. Prediction, early detection, and risk assessment

for individuals is one of the most fruitful areas of AI applications (Sennaar, 2018). For example,

diagnostic image recognition, which can be supported by AI applications, can differentiate

between benign and malignant melanomas, diagnose retinopathy, identify cartilage lesions

within the knee joint (Liu et al., 2018), detect lesion-specific ischemia, and predict node status

after positive biopsy for breast cancer. Image recognition techniques can differentiate among

competing diagnoses, assist in screening patients, and guide clinicians in radiotherapy and

surgery planning (Matheson, 2018). AI platforms can, relatedly, provide roadmaps to assist

surgical teams in the operating room, reducing risk and making surgery safer (Newmarker,

2018).

Clinicians are testing whether AI will permit them to personalize chemotherapy dosing and

map patient response to a treatment to plan future dosing (Poon et al., 2018), a variation of

precision medicine enabled by AI. AI-driven NLP has been used to identify polyp descriptions in

pathology reports that trigger guideline-based clinical decision support to help clinicians

determine the best surveillance intervals for colonoscopy exams (Imler et al., 2014). Other AI

tools have helped clinicians select the best treatment options for complex diseases like cancer

(Zauderer et al., 2014). Using retrospective data from other patients, AI techniques can predict

treatment responses to different therapy combinations for an individual patient (Brown, 2018).

These types of tools may serve to help select a treatment immediately, and may also provide

new knowledge for future practice guidelines.

As genome-phenome integration is realized, the use of genetic data in AI systems for diagnosis,

clinical care, and treatment planning will probably increase. To truly impact routine care,

National Academy of Medicine GAO-20-215SP 10

though, genetic datasets will need to better represent the diversity of patient populations

(Hindorff et al., 2018).

AI also has the potential to improve the way in which clinicians store and retrieve clinical

documentation in EHRs. AI also has the potential to not only improve existing clinical decision

support modalities, but to support improved cognitive support functions like smarter CDS

alerts and reminders, as well as better access to peer-reviewed literature.

Population and Public Health Management: A spectrum of market-ready AI approaches to

support population health programs already exists. They are used in areas of automated retinal

screening, clinical decision support, predictive population risk stratification, and patient self-

management tools (Contreras and Vehi, 2018; Dankwa-Mullan et al., 2018). Several solutions

have received regulatory approval; for example, the U.S. Food and Drug Administration

approved Medtronic’s Guardian Connect, the first AI-powered continuous glucose monitoring

system. Crowd-sourced, real-world data on inhaler use, combined with environmental data, led

to a policy recommendation model that can be replicated to address many public health

challenges by simultaneously guiding individual, clinical, and policy decisions. (Barrett et al.,

2018) Other areas of potential overlap are standard risk prediction models that apply AI tools

to facilitate recognition of clinically important but unanticipated predictor variables; and how

AI can be used to not only predict risk, but also the presence or absence of a disease in an

individual.

For public health professionals, the focus is on solutions for more efficient and effective

administration of programs, policies, and services; disease outbreak detection and surveillance;

as well as research. The range of AI solutions that can improve disease surveillance is

considerable. For a number of years, researchers have tracked and refined the options for

tracking disease outbreaks using search engine query data. Some of these approaches rely on

the search terms that users type into internet search engines (e.g., Google Flu Trends, etc.).

At the same time, caution is warranted with these approaches. Relying on data not collected for

scientific purposes (e.g., Internet search terms) to predict flu outbreaks has been fraught with

error (Lazer et al., 2014). Non-transparent search algorithms that change constantly cannot be

easily replicated and studied. These changes may occur due to business needs (rather than the

needs of a flu outbreak detection application) or due to changes in the search behavior of

National Academy of Medicine GAO-20-215SP 11

consumers. Finally, relying on such methods exclusively misses the opportunity to combine

them and co-develop them in conjunction with more traditional methods. As Lazer et al. details,

combining traditional and innovative methods (e.g., Google Flu Trends) performs better than

either method alone.

AI and machine learning have also been used to develop a dashboard to provide live insight into

opioid usage trends in Indiana (Bostic, 2018). This tool enabled prediction of drug positivity for

small geographic areas (i.e., hot spots), allowing for interventions by public health officials, law

enforcement, and program managers in targeted ways. A similar dashboarding approach

supported by AI solutions has been used in Colorado to monitor HIV surveillance and outreach

interventions and their impact after implementation (Snyder et al., 2016). This tool integrated

data on regional resources with near real-time visualization of complex information to support

program planning, patient management, and resource allocation.

Authors: Joachim Roski, PhD, MPH, Wendy Chapman, PhD, Jaimee Heffner, PhD, Ranak Trivedi,

PhD, Guilherme Del Fiol, MD, PhD, Rita Kukafka, PhD, Paul Bleicher, MD, PhD, Hossein Estiri,

PhD, Jeffrey Klann, PhD, and Joni Pierce, MBA, MS

4. Potential Tradeoffs and Unintended Consequences of AI: While we optimistically look to a

future where AI-driven solutions can systematically improve health and medicine, AI systems

could also have far-reaching unintended consequences and implications for patient populations,

health systems, and the workforce. To mitigate the effect of these potential consequences, care

must be given to the consideration of how tradeoffs between efficiency and equity impact

populations in delivering against the unmet and unlimited demands of health care.

The Future of Employment and Displacement: While anxiety over job losses due to AI and

automation are likely exaggerated, advancing technology will almost certainly change roles as

certain tasks are automated as seen in other industries. A conceivable future could see AI

eliminating a clinician’s need to perform manual tasks like checking patient vital signs

(especially with self-monitoring devices), collecting laboratory specimens, preparing

medications for pickup, transcribing clinical documentation, completing prior authorization

forms, scheduling appointments, collecting standard history elements, and making routine

diagnoses. However, most clinical jobs and patient needs require much more cognitive

National Academy of Medicine GAO-20-215SP 12

adaptability, problem solving, and communication skills than a computer can muster. Despite

the fear of AI eliminating jobs, industrialization and technology typically yield net productivity

gains to society. For example, many assumed that automated teller machines (ATMs) would

eliminate the need for bank tellers. Instead, the efficiencies gained through the use of ATMs

enabled the expansion of bank branches and resulted in an even greater demand for tellers that

could focus on higher cognitive tasks, such as interacting with customers, rather than simply

counting money (Pethokoukis, 2016).

Need for Education and Workforce Development: A graceful transition into the AI era of health

care that minimizes the unintended consequences of displacement will require deliberate

redesigning of training programs. This ranges from support for a core basis of primary

education in science, technology, engineering, and math literacy in the broader population to

continuing professional education in the face of a changing environment. Health care workers in

the AI future will need to learn how to use and interact with information systems, with

foundational education in information retrieval and synthesis, statistics and evidence-based

medicine appraisal, and interpretation of predictive models in terms of diagnostic performance

measures. Institutional organizations (e.g., National Institutes of Health, health care systems,

professional organizations, universities, and medical schools) should shift focus from skills that

are easily replaced by AI automation to specific education and workforce development

programs for work in the AI future, with emphasis in STEM, data science skills, and human skills

that are hard to replace with technology.

AI System Augmentation of Human Tasks: While much of the popular discussion of AI focuses on

how AI tools will replace human workers, realistically, in the foreseeable future, AI will function

in an augmenting role, adding to the capabilities of the technology’s human partners. As the

volume of data and information available to inform patient care grows exponentially, AI tools

will naturally become part of the clinical care team in much the same way a doctor is supported

by a team of intelligent agents including specialists, nurses, physician assistants, pharmacists,

social workers, and other health professionals (Meskó, Hetényi, and Győrffy, 2018). The

technologies will be able to provide task-specific expertise in the data and information space,

augmenting the capabilities of the physician and the entire team, making their jobs easier and

more effective, and ultimately improving patient care (Herasevich, Pickering, and Gajic, 2018;

Wu, 2019).

National Academy of Medicine GAO-20-215SP 13

Hype versus Hope: One of the greatest near-term risks in the current development of AI tools in

health care is not that it will cause serious unintended harm, but that it simply cannot meet the

expectations stoked by excessive hype. Over the last decade, several factors have led to

increasing interest and escalating hype of AI. Explicit advertising hyperbole may be one of the

most direct triggers for unintended consequences of hype. While such promotion is important

to drive interest and motivate progress, it can become counterproductive in excess. A

combination of technical and subject domain expertise is needed to recognize the credible

potential of AI systems and avoid the backlash that will come from overselling them.

Risks associated with model development and implementation: Since AI systems that will be

deployed in the health care setting are constrained to learn from available observational health

data, high fidelity and reliably measured outcomes are not always achievable. Although data

from EHRs and other health information systems provide a rich longitudinal, multi-dimensional

set of details about an individual’s health, these data are often both noisy and biased as they are

produced for different purposes in the process of documenting care. Poorly constructed or

interpreted models from observational data can harm patients. Health care data scientists must

be careful to apply the right types of modeling approaches based on the characteristics and

limitations of the underlying data.

Although correlation can be sufficient for diagnosing problems and predicting outcomes in

certain cases, methods that primarily learn associations between inputs and outputs can be

unreliable, if not overtly dangerous when used to drive medical decisions. (Schulam and Saria,

2017) There are three common reasons why this is the case. First, performance of association-

based models tend to be susceptible to even minor deviations between the development and

implementation datasets. The learned associations may memorize dataset-specific patterns that

do not generalize as the tool is moved to new environments where these patterns no longer

hold. (Subbaswamy, Schulam, and Saria, 2019) A common example of this phenomenon is shifts

in provider practice with the introduction of new medical evidence, technology, and

epidemiology. If a tool heavily relies on a practice pattern to be predictive, as practice changes,

the tool is no longer valid. (Schulam and Saria, 2017) Second, such algorithms cannot correct for

biases due to feedback loops that are introduced when learning continuously over time.

(Schulam and Saria, 2017) In particular, if the implementation of an AI system changes patient

exposures, interventions, and outcomes (often as intended), it can cause data shifts that

National Academy of Medicine GAO-20-215SP 14

degrade performance. Finally, the proposed predictors may be tempting to treat as factors one

can manipulate to change outcomes but these are often misleading.

One approach is to update models over time so that they continuously adapt to local and recent

data. Such adaptive algorithms offer constant vigilance and monitoring for changing behavior.

However, this may exacerbate disparities when only well-resourced institutions can deploy the

expertise to do so in an environment.

Training reliable models depends on training datasets being representative of the population

where the model will be applied. Learning from real world data---where insights can be drawn

from patients similar to a given index patient---has the benefit of leading to inferences that are

more relevant, but it is important to characterize populations where there is inadequate data to

support robust conclusions. For example, a tool may show acceptable performance on average

across individuals captured within a data set, but may perform poorly for specific

subpopulations because the algorithm has not had enough data to learn from. In genetic testing,

minority groups can be disproportionately adversely affected when recommendations are

made based on data that does not adequately represent them. (Manrai et al., 2016) Test-time

auditing tools that can identify individuals for whom the model predictions are likely to be

unreliable can reduce the likelihood of incorrect decision-making due to model bias. (Schulam

and Saria, 2017)

Machine learning that relies on observational data could also generally have an amplifying

effect on existing behavior, regardless of whether that behavior is beneficial or exacerbates

existing societal biases. For instance, a study found that machine translation systems were

biased against women due to the way in which women were described in the data used to train

the system. (Prates, Avelar, and Lamb, 2018) While some of these algorithms were revised or

discontinued, the underlying issues will continue to be significant problems, requiring constant

vigilance, as well as algorithm surveillance and maintenance to detect and address.

AI Systems Transparency: Transparency is a key theme that underlies deeper issues related to

privacy and consent or notification for patient data use, and to potential concerns on the part of

patients and clinicians around being subject to algorithmically-driven decisions. Consistent

progress in the development and adoption of AI in health care will only be feasible if health care

consumers and health care systems are mutually recognized as trusted data partners.

National Academy of Medicine GAO-20-215SP 15

Tensions exist among the desire for robust data aggregation to facilitate the development and

validation of novel AI models, the need to protect consumer privacy, and the need to

demonstrate respect for consumer preferences through informed consent or notification

procedures. However, lack of transparency about data use and privacy practices could create a

situation in which patients do not clearly consent to their data being used in ways they do not

understand, realize, or accept. Current consent practices for the use of EHR and claims data are

generally based on models focused on HIPAA privacy rules, and some argue that HIPAA needs

updating (Mello and Cohen, 2018). The progressive integration of other sources of patient-

related data (e.g., genetic information, social determinants of health), and the facilitated access

to highly granular and multi-dimensional data are changing the protections provided by

traditional mechanisms, such as HIPAA. For instance, with more data available, re-identification

becomes easier to perform (Cohen and Mello, 2019). Regulations need to be updated and

consent processes will need to be more informative of those added risks.

Authors: Jonathan Chen, MD, PhD, Andrew Beam, PhD, Suchi Saria, PhD, and Eneida Mendonca,

MD, PhD

5. Best Practices for Machine-Learning Model Development and Validation: Machine learning

models should be thoughtfully developed and validated. First, all stakeholders must understand

the needs of clinical practice, so that proposed AI systems address the practicalities of health

care delivery. Second, it is necessary that such models be developed and validated through a

team effort, involving AI experts and health care providers. Throughout the design and

validation process, it is important to be mindful of the fact that the datasets used to train AI are

heterogeneous, complex, and nuanced in ways that are often subtle and institution-specific.

This impacts how AI tools are monitored for safety and reliability, and how they are adapted for

different locations and over time. Third, before deployment at the point of care, AI systems

should be rigorously evaluated to ensure their competency and safety, in a similar process to

that done for drugs, medical devices, and other medical interventions.

Establishing Utility: When considering the use of AI in health care, it is necessary to know how a

member of the care team would act, given a model’s output. While model evaluation typically

focuses on metrics, such as positive predictive value, sensitivity (or recall), specificity, and

National Academy of Medicine GAO-20-215SP 16

calibration, constraints on the action triggered by the model’s output (e.g. continuous rhythm

monitoring might be constrained by availability of Holter monitors) often can have a much

larger influence in determining model utility (Moons et al., 2012). Completing model selection,

then doing a net-benefit analysis, and later factoring work constraints is suboptimal (Shah et al.,

2019). Realizing the benefit of implementation of AI into the work flow requires defining

potential utility upfront. Only by including the characteristics of actions taken on the basis of

the model’s predictions, and factoring in their implications, can a model’s potential usefulness

in improving care be properly assessed.

Learning a Model: After the potential utility of the model has been established, model

developers and model users need to interact closely when learning a model because many

modeling choices are dependent on the model’s context of use (Wiens et al., 2019). For example,

the need for external validity depends on what one wishes to do with the model, the degree of

agency ascribed to the model, and the nature of the action triggered by the model.

It is well known that biased data will result in biased models. Thus, the data that is selected to

learn from matters far more than the choice of the specific mathematical formulation of the

model. Model builders need to pay close attention to the data they train on and to think beyond

the technical evaluation of models. Even in technical evaluation, it is necessary to look beyond

the ROC curves, and examine multiple dimensions of performance. For decision making in the

clinic, additional metrics such as calibration, net reclassification, and a utility assessment are

necessary. Given the nonobvious relationship between a model’s positive predictive value,

recall, and specificity to its utility, it is important to examine simple and obvious parallel

baselines, such as a penalized regression model applied on the same data that are supplied to

more sophisticated models such as deep learning.

The topic of interpretability deserves special discussion because of ongoing debates around

interpretability, or the lack of it (Licitra, Trama, and Hosni, 2017; Lipton, 2016; Voosen, 2017).

To the model builder, interpretability often means the ability to explain which variables and

their combinations, in what manner, led to the output produced by the model (Friedler et al.,

2019). To the clinical user, interpretability could mean one of two things: a sufficient enough

understanding of what is going on, so that they can trust the output and/or be able to get

liability insurance for its recommendations; or enough causality in the model structure to

provide hints as to what mitigating action to take. To avoid wasted effort, it is important to

National Academy of Medicine GAO-20-215SP 17

understand what kind of interpretability is needed in a particular application. A black box

model may suffice if the output is trusted, and trust can be obtained by prospective assessment

of how often the model’s predictions are correct and calibrated.

Data Quality: Bad data quality adversely impacts patient care and outcomes (Jamal, McKenzie,

and Clark, 2009). A recent systematic review shows that the AI models could dramatically

improve if four particular adjustments were made: the use of multicenter datasets,

incorporation of time varying data, assessment of missing data as well as informative censoring,

and development of metrics of clinical utility (Goldstein et al., 2017). As a reasonable starting

point for minimizing data quality issues, the authors of the NAM Special Publication recommend

that data should adhere to the FAIR (findability, accessibility, interoperability, and reusability)

principles in order to maximize the value of data (Wilkinson et al., 2016). An often-overlooked

detail is when and where certain data become available and whether the mechanics of data

availability and access are compatible with the model being constructed.

Stakeholder education and managing expectations: The use of AI solutions presents a wide range

of challenges to law and ethics, most of which are still being worked out. For example, when a

physician makes decisions assisted by AI, it is not always clear where to place blame in the case

of failure. This subtlety is not new to recent technological advancements, and in fact was

brought up decades ago (American Journal of Bioethics, 2010). However, most of the legal and

ethical issues were never fully addressed in the history of computer-assisted decision support,

and a new wave of more powerful AI-driven methods only adds to the complexity of ethical

questions (e.g., the frequently condemned black box model) (Char et al., 2018).

Model builders need to better understand the datasets they choose to learn from. Decision

makers need to look beyond technical evaluations and ask for utility assessments. Media needs

to do a better job in articulating both immense potential and the risks of adopting the use of AI

in health care. Therefore, it is important to promote a measured approach to adopting AI

technology, which would further AI’s role as augmenting rather than replacing human actors.

This framework could allow the AI community to make progress while managing evaluation

challenges (e.g., when and how to employ interpretable models versus black-box models) as

well as ethical challenges that are bound to arise as the technology gets widely adopted.

National Academy of Medicine GAO-20-215SP 18

Authors: Hongfang Liu, PhD, Hossein Estiri, PhD, Jenna Wiens, PhD, Anna Goldenberg, PhD,

Suchi Saria, PhD, and Nigam Shah, MBBS, PhD

6. Deploying AI in Clinical Settings: For AI deployment in health care practice to be successful, it

is critical that the lifecycle of AI use be overseen through effective governance. IT governance

is the set of processes that ensure the effective and efficient use of IT in enabling an

organization to achieve its goals by overseeing the evaluation, selection, prioritization, and

funding, implementation, and tracking of IT projects. Another facet of IT governance that is

relevant to AI is data governance, which institutes methodical process that an organization

adopts to manage its data and ensure the data meet specific standards and business rules

before entering them into a data management system. A health care enterprise that seeks to

leverage AI should consider, characterize, and adequately resolve a number of key

considerations prior to moving forward with the decision to develop and implement an AI

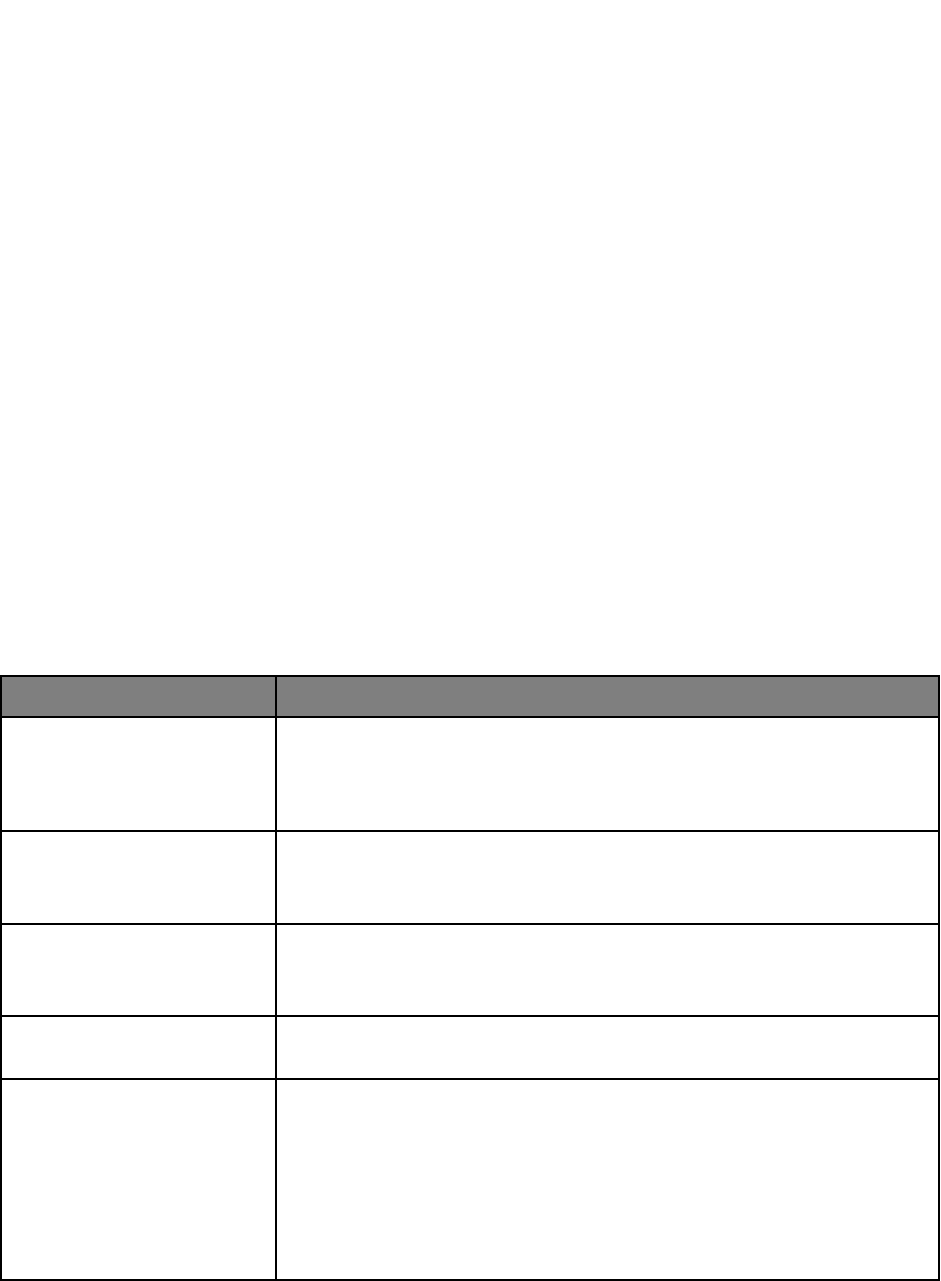

solution (see Figure 3).

Figure 3 | Key Considerations for Instructional Infrastructure and Governance

Consideration

Relevant Governance Questions

Organizational Capabilities

Does the organization possess the necessary technologic (e.g., IT

infrastructure, IT personnel) and organizational (knowledgeable

and engaged workforce, educational and training capabilities) to

adopt, assess and maintain AI driven tools?

Data Environment

What data are available for AI development? Do current systems

possess the adequate capacity for storage, retrieval, and

transmission to support AI tools?

Interoperability

Does the organization support and maintain data at rest and in

motion per national and local standards for interoperability (e.g.,

SMART on FHIR)?

Personnel Capacity

What expertise exists in the health care system to develop and

maintain the AI algorithms?

Cost, Revenue, and Value

What will be the initial and ongoing costs to purchase, install, and

train users, to maintain underlying data models, and to monitor for

variance in model performance?

Is there an anticipated return on investment from the AI

deployment?

What is the perceived value for the institution related to AI

deployment?

National Academy of Medicine GAO-20-215SP 19

Safety and Efficacy

Surveillance

Are there governance and processes in place to provide regular

assessments of the safety and efficacy of AI tools?

Patient/Family/Consumer

Engagement

Does the institution have in place formal mechanisms for

patient/family/consumer such a council or advisory board that can

engage and voice concerns on relevant issues related to

implementation, evaluation etc.?

Cybersecurity and Privacy

Does the digital infrastructure for health care data in the

enterprise have sufficient protections in place to minimize the risk

of breaches of privacy if AI is deployed?

Ethics and Fairness

Is there an infrastructure in place at the institution to provide

oversight and review of AI tools to ensure that the known issues

related to ethics and fairness are addressed and that vigilance for

unknown issues is in place?

Regulatory Issues

Are there specific regulatory issues that must be addressed and if

so, what type of monitoring and compliance programs will be

necessary?

Organizational Approach to Implementation: AI development and implementation should follow

established best practice frameworks in implementation science and software development.

Frameworks for conceptualizing, designing and evaluating this process are discussed in more

detail in the NAM Special Publication, but all implicitly incorporate the most fundamental basic

health care improvement model, often referred to as a plan-do-study-act (PDSA) cycle first

introduced by W.E. Deming more than two decades ago (Deming, 2000). The PDSA cycle relies

on the intimate participation of employees involved in the work, detailed understanding of

workflows, and careful ongoing assessment of implementation that informs iterative

adjustments. Newer methods of quality improvement introduced since Deming represent

variations or elaborations of this approach. All too often, however, quality improvement efforts

frequently fail because they are focused narrowly on a given task or set of tasks using

inadequate metrics without due consideration of the larger environment in which change is

expected to occur (Muller, 2018).

Such concerns are certainly relevant to AI implementation. New technology promises to

substantially alter how medical professionals currently deliver health care at a time when

morale in the workforce is generally poor (Shanafelt et al., 2012). One of the challenges of the

use of AI in health care is that integrating it within the EHR and improving existing decision and

workflow support tools may be viewed as an extension of an already unpopular technology

National Academy of Medicine GAO-20-215SP 20

(Sinsky et al., 2016). Moreover, there are a host of concerns that are unique to AI, some well and

others poorly founded, which might add to the difficulty of implementing AI applications.

In recognition that basic quality improvement approaches are generally inadequate to produce

large-scale change, the field of implementation science has arisen to characterize how

organizations can undertake change in a systematic fashion that acknowledges their

complexity. Some frameworks are specifically designed for evaluating the effectiveness of

implementation, such as the Consolidated Framework for Implementation Research (CFIR) or

the Promoting Action on Research Implementation in Health Services (PARiHS). In general,

these governance and implementation frameworks emphasize sound change management and

methods derived from implementation science that undoubtedly apply to implementation of AI

tools (Damschroder et al., 2009; Rycroft-Malone, 2004).

Clinical Outcome Monitoring: The complexity and extent of local evaluation and monitoring may

necessarily vary depending on the way AI tools are deployed into the clinical workflow, the

clinical situation, and the type of CDS being delivered, as these will in turn define the clinical

risk attributable to the AI tool.

For higher risk AI tools, a focus on clinical safety and effectiveness—from either a non-

inferiority or superiority perspective—is of paramount importance even as other metrics (e.g.,

API data calls, user experience information) are considered. High-risk tools will likely require

evidence from rigorous studies for regulatory purposes and will certainly require substantial

monitoring at the time of and following implementation. For low-risk clinical AI tools used at

point of care, or those that focus on administrative tasks, evaluation may rightly focus on

process of care measures and metrics related to the AI’s usage in practice to define its positive

and negative effects. The authors of the NAM Special Publication strongly endorse

implementing all AI tools using experimental methods (e.g., randomized controlled trials or A/B

testing) where possible. Large-scale pragmatic trials at multiple sites will be critical for the field

to grow but may be less necessary for local monitoring and for management of an AI formulary.

In some instances, due to feasibility, costs, time constraints or other limitations, a randomized

trial may not be practical or feasible. In these circumstances quasi-experimental approaches

such as stepped-wedge designs or even carefully adjusted retrospective cohort studies, may

provide valuable insights.

National Academy of Medicine GAO-20-215SP 21

Monitoring outcomes after implementation will permit careful assessment, in the same manner

that systems regularly examine drug usage or order sets and may be able to utilize data that are

innately collected by the AI tool itself to provide a monitoring platform. Recent work has

revealed that naive evaluation of AI system performance may be overly optimistic, providing a

need for more thorough evaluation and validation.

Clinical AI performance can also deteriorate within a site when practices, patterns, or

demographics change over time. As an example, consider the policy by which physicians order

blood lactate measurements. Historically, it may have been the case that, at a particular

hospital, lactate measurements were only ordered to confirm suspicion of sepsis. A clinical AI

tool for predicting sepsis that was trained using historical data from this hospital would be

vulnerable to learning that the act of ordering a lactate measurement is associated with sepsis

rather than the elevated value of the lactate. However, if hospital policies change and lactate

measurements are more commonly ordered, then the association that had been learned by the

clinical AI would no longer be accurate. Alternatively, if the patient population shifts, for

example to include more drug users, then elevated lactate might become more common and the

value of lactate being measured would again be diminished. In both the case of changing policy

or patient population, performance of the clinical AI application is likely to deteriorate,

resulting in an increase of false positive sepsis alerts.

More broadly, such examples illustrate the importance of careful validation in evaluating the

reliability of clinical AI. A key means for measuring reliability is through validation on multiple

datasets. Classical algorithms that are applied natively or used for training AI are prone to

learning artifacts specific to the site that produced the training data or specific to the training

dataset itself. There are many subtle ways that site-specific or dataset-specific bias can occur in

real world datasets. Validation using external datasets will show reduced performance for

models that have learned patterns that do not generalize across sites (Schulam and Saria,

2017).

In addition to monitoring overall measures of performance, evaluating performance on key

patient subgroups can further expose areas of model vulnerability: High average performance

overall is not indicative of high performance across every relevant subpopulation. Careful

examination of stratified performance can help expose subpopulations where the clinical AI

model performs poorly and therefore poses higher risk. Further, tools that detect individual

National Academy of Medicine GAO-20-215SP 22

points where the clinical AI is likely to be uncertain or unreliable can flag anomalous cases. By

introducing a manual audit for these individual points, one can improve reliability during use

(e.g., Soleimani, Hensman, and Saria, 2018 and Schulam and Saria, 2019). Traditionally,

uncertainty assessment was limited to the use of specific classes of algorithms for model

development. However, recent approaches have led to wrapper tools that can audit some black

box models (Schulam and Saria, 2019). Logging cases flagged as anomalous or unreliable and

performing a review of such cases from time to time may be another way to bolster post

marketing surveillance, and FDA requirements for such surveillance could require such

techniques.

Authors: Stephan D. Fihn, MD, MPH, Suchi Saria, PhD, Eneida Mendonca, MD, PhD, Seth Hain,

MS, Michael Matheny, MD, MS, MPH, Nigam Shah, MBBS, PhD, Hongfang Liu, PhD, and Andrew

Auerbach, MD

7. Conclusion: AI in health care is poised to make transformative and disruptive advances in

health care. It is prudent to balance the need for thoughtful, inclusive health care AI that plans

for and actively manages and reduces potential unintended consequences, while not yielding to

marketing hype and profit motives. The straightforward path for AI is to start with real

problems in health care, explore the best solutions by engaging relevant stakeholders, frontline

users, patients and their families—including AI and non-AI options—and implement and scale

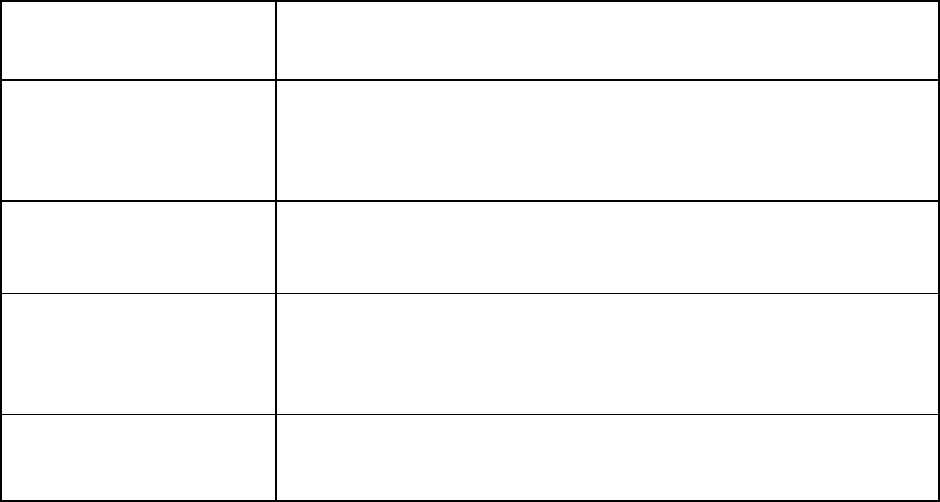

the ones that meet a new Quintuple Aim of equity and inclusion (See Figure 4).

National Academy of Medicine GAO-20-215SP 23

Figure 4 | Advancing the Quintuple Aim

SOURCE: Matheny, M., S. Thadaney, M. Ahmed, and D. Whicher, editors. Artificial Intelligence

and Health Care: The Hope, the Hype, the Promise, and the Perils. Washington, DC: National

Academy of Medicine.

In 21 Lessons for the 21st Century, Yuval Noah Harari writes, “Humans were always far better at

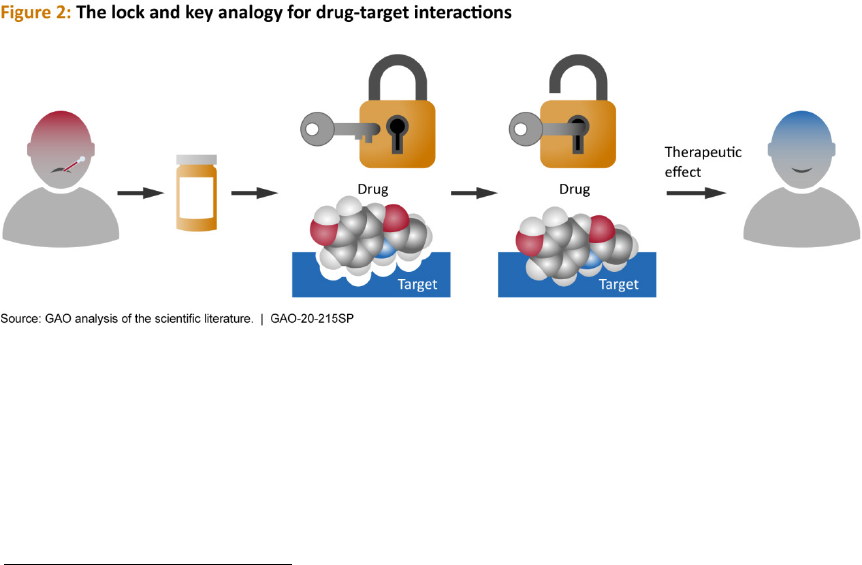

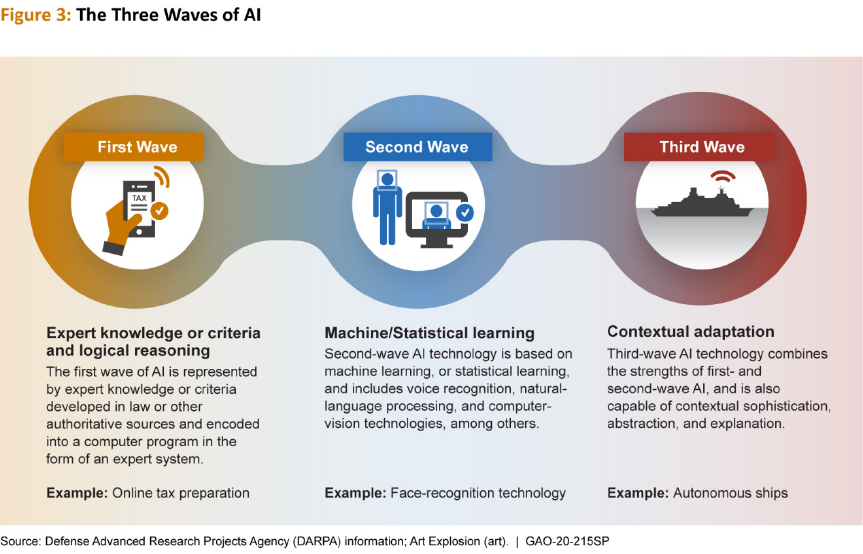

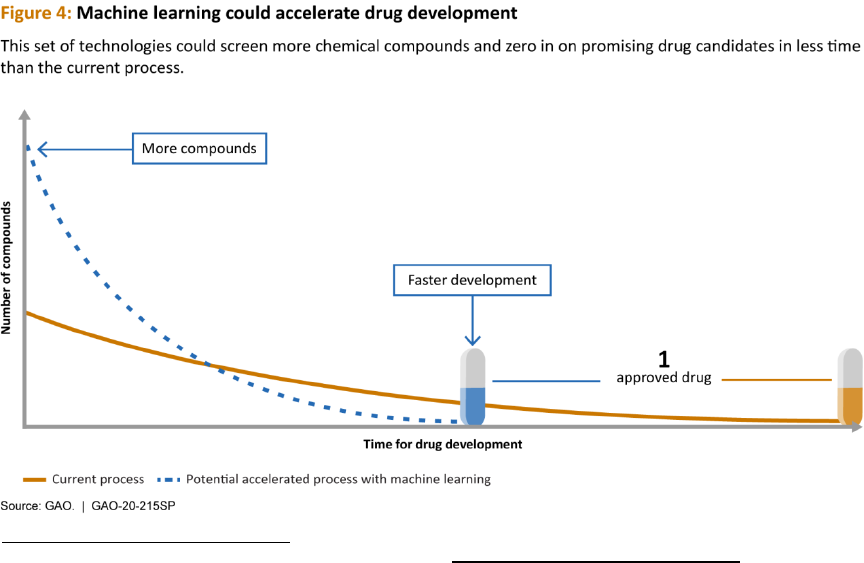

inventing tools than using them wisely” (Harari, 2018, p. 7). It is up to us, the stakeholders,