NYSERDA Solar Photovoltaic Program Impact Evaluation for May 1, 2016 through March 31, 2018 Page

NY-Sun Solar Photovoltaic Program Impact Evaluation for May 1, 2016 through March 31, 2018

Final Report

Prepared for:

New York State Energy Research and Development Authority

Albany, NY

Dana Nilsson

Project Manager, NYSERDA

Prepared by:

DNV GL

Arlington, VA

Maura Nippert

Senior Engineer, Policy Advisory and Research

NYSERDA Contract [104536] June 2020


NYSERDA RECORD OF REVISION

Document Title: NY-Sun Solar Photovoltaic Program Impact Evaluation for May 1, 2016 through March 31, 2018 (June 2020)

| Revision Date | Description of Changes | Revision on Page(s) |
|---|---|---|
| June 12, 2020 | Original Issue | Original Issue |
| June 16, 2020 | Modified graphics per NYSERDA review | 9, 16 |
| July 8, 2020 | Text revisions per program manager review | 9, 10, 14, 18 |
| July 31, 2020 | Modified text per program manager review | 7, 8 |


Notice

This report was prepared by DNV GL in the course of performing work contracted for and sponsored by the New York State Energy Research and Development Authority (hereafter “NYSERDA”). The opinions expressed in this report do not necessarily reflect those of NYSERDA or the State of New York, and reference to any specific product, service, process, or method does not constitute an implied or expressed recommendation or endorsement of it. Further, NYSERDA, the State of New York, and the contractor make no warranties or representations, expressed or implied, as to the fitness for particular purpose or merchantability of any product, apparatus, or service, or the usefulness, completeness, or accuracy of any processes, methods, or other information contained, described, disclosed, or referred to in this report. NYSERDA, the State of New York, and the contractor make no representation that the use of any product, apparatus, process, method, or other information will not infringe privately owned rights and will assume no liability for any loss, injury, or damage resulting from, or occurring in connection with, the use of information contained, described, disclosed, or referred to in this report.

NYSERDA makes every effort to provide accurate information about copyright owners and related matters in the reports we publish. Contractors are responsible for determining and satisfying copyright or other use restrictions regarding the content of reports that they write, in compliance with NYSERDA’s policies and federal law. If you are the copyright owner and believe a NYSERDA report has not properly attributed your work to you or has used it without permission, please email print@nyserda.ny.gov.

Information contained in this document, such as web page addresses, is current at the time of publication.


TABLE OF CONTENTS

NYSERDA Record of Revision

1 Introduction

1.1 Program Description

1.2 Summary of Evaluation Objectives and Methods

2 Results, Findings, and Recommendations

2.1 Data Collection Results

2.1.1 Data Collection Challenges

2.1.2 Persistence Data Collection Results

2.2 Analysis Results

2.2.1 File Review Results

2.2.2 Production Analysis Results

2.2.3 Detailed Findings

2.3 Key Findings and Recommendations

3 Methods

3.1 Data Collection Approach

3.1.1 Large C&I Data Collection

3.1.2 Residential and Small Business

3.2 Analysis Approach

3.2.1 Production Data Analysis

3.2.2 File Reviews

3.2.3 Weather Normalization

3.2.4 Expansion of Production Results to Sample Frame

LIST OF FIGURES

Figure 1: Realization Rate Interpretation

Figure 2: Plot of Evaluated Production vs. Application-specific Production

LIST OF TABLES

Table 1: Evaluation Objectives, Purpose, and Methods

Table 2: NY-Sun and NYGB Evaluation Data Collection Results

Table 3: Resolutions from File Reviews of Sites with Capacity Factor Discrepancies

Table 4: Production Analysis Results by System Size

Table 5: Production Analysis Results by Customer Sector

Table 6: Production Analysis Results by Region

Table 7: Production Analysis Results by Purchase Type

Table 8: Total Production by Region, Size, and Purchase Type

Table 9: Findings and Recommendations


1 Introduction

This report presents the impact evaluation of solar photovoltaic (PV) projects installed under NYSERDA’s NY-Sun program from May 1, 2016 through March 31, 2018. A subset of solar PV installations under the NY-Sun program benefited from support by NY Green Bank (NYGB), a division of NYSERDA. Previous installations under the NY-Sun and predecessor programs were evaluated in the NYSERDA Solar Photovoltaic Program Impact Evaluation for 2008 and 2011-2016.

1.1 Program Description

The NYSERDA NY-Sun PV Incentive Program¹, open from August 12, 2010 through December 29, 2025, provides cash incentives and/or financing according to a megawatt (MW) block structure. “Blocks,” or specific MW targets per defined sector and geographic region of New York, are active on a rolling basis until fulfilled. The original program goal of installing 3 gigawatts (GW DC) of PV capacity by 2023 was expanded to 6 GW DC by 2030, and NYSERDA’s 2019 petition to extend the NY-Sun program and increase funding was approved in 2020. The Long Island region incentive block closed to new residential customer applications in April 2016.²

Under the NY-Sun Program, NYSERDA provides cash incentives and/or financing for the contractor installation of new grid-connected PV systems of 25 kW or less at residential sites and 200 kW or less at non-residential sites (NY-Sun Small Residential/Commercial³), as well as incentives for the installation of new PV systems greater than 200 kW (NY-Sun Commercial/Industrial⁴). These incentives apply to systems that are leased, owned, or governed by power purchase agreements (PPAs).

A subset of sites installed with incentives from the NY-Sun program also benefited from NYGB support. Launched in 2014, NYGB is a state-sponsored specialized financial entity whose mission is to accelerate clean energy deployment in New York State by working with the private sector to transform financing markets.

¹ NYSERDA PON 2112

² https://www.nyserda.ny.gov/-/media/Files/Programs/NYSun/2018-Performance-Report.pdf

³ NYSERDA NY-Sun MW Res/Comm Block Incentive Dashboard, 2016

⁴ NYSERDA NY-Sun MW C/I Block Incentive Dashboard, 2016


1.2 Summary of Evaluation Objectives and Methods

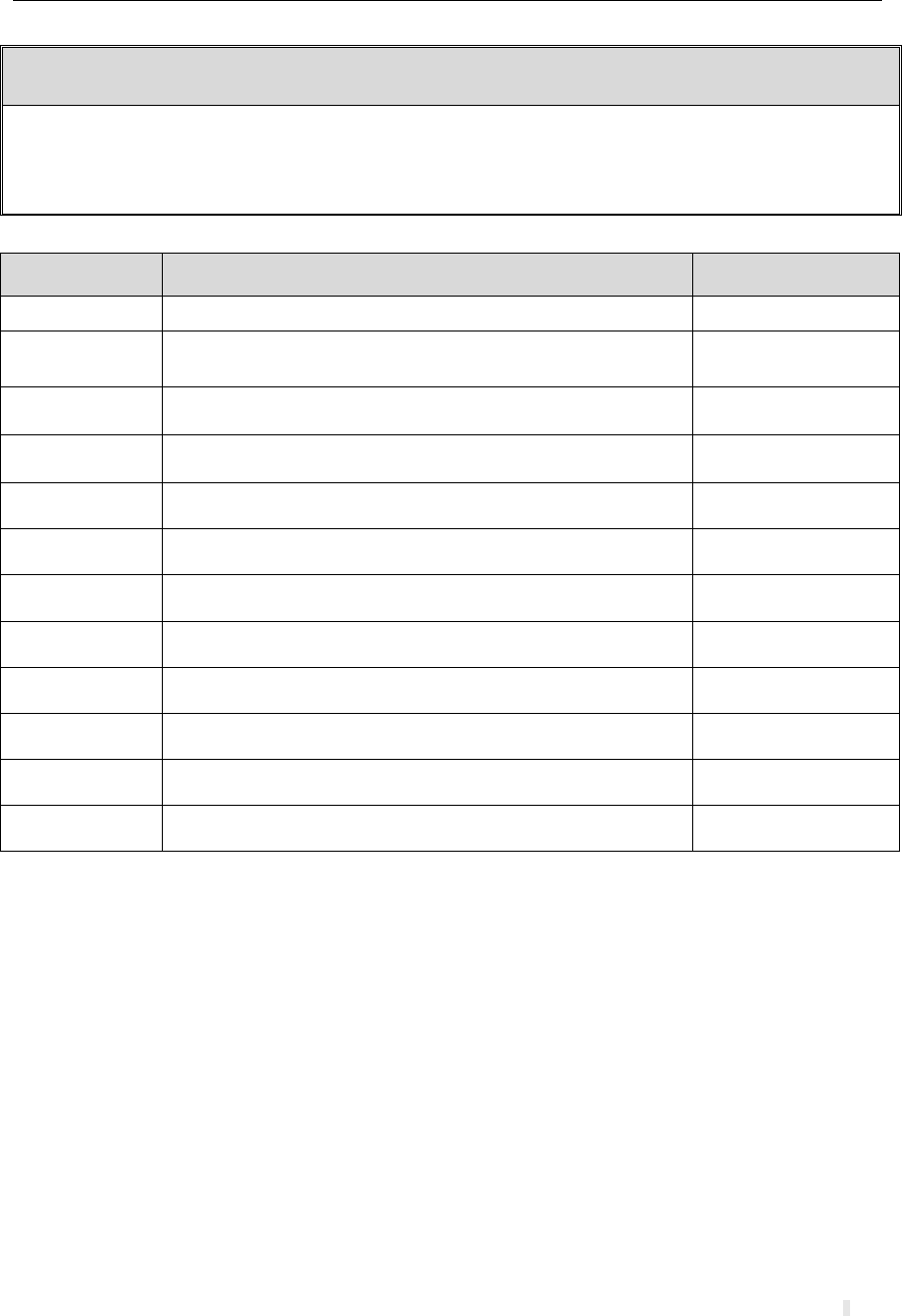

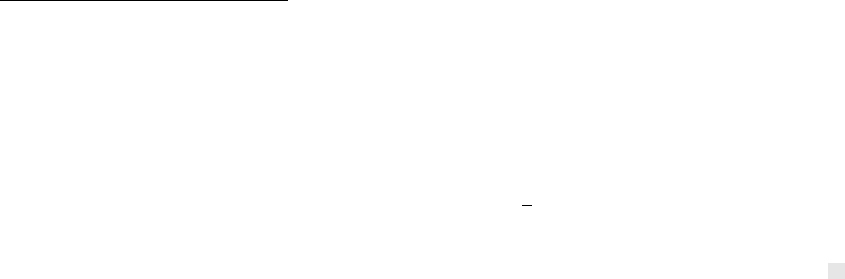

Table 1 summarizes the impact evaluation objectives, purposes for the research, and methods employed to satisfy the objectives.

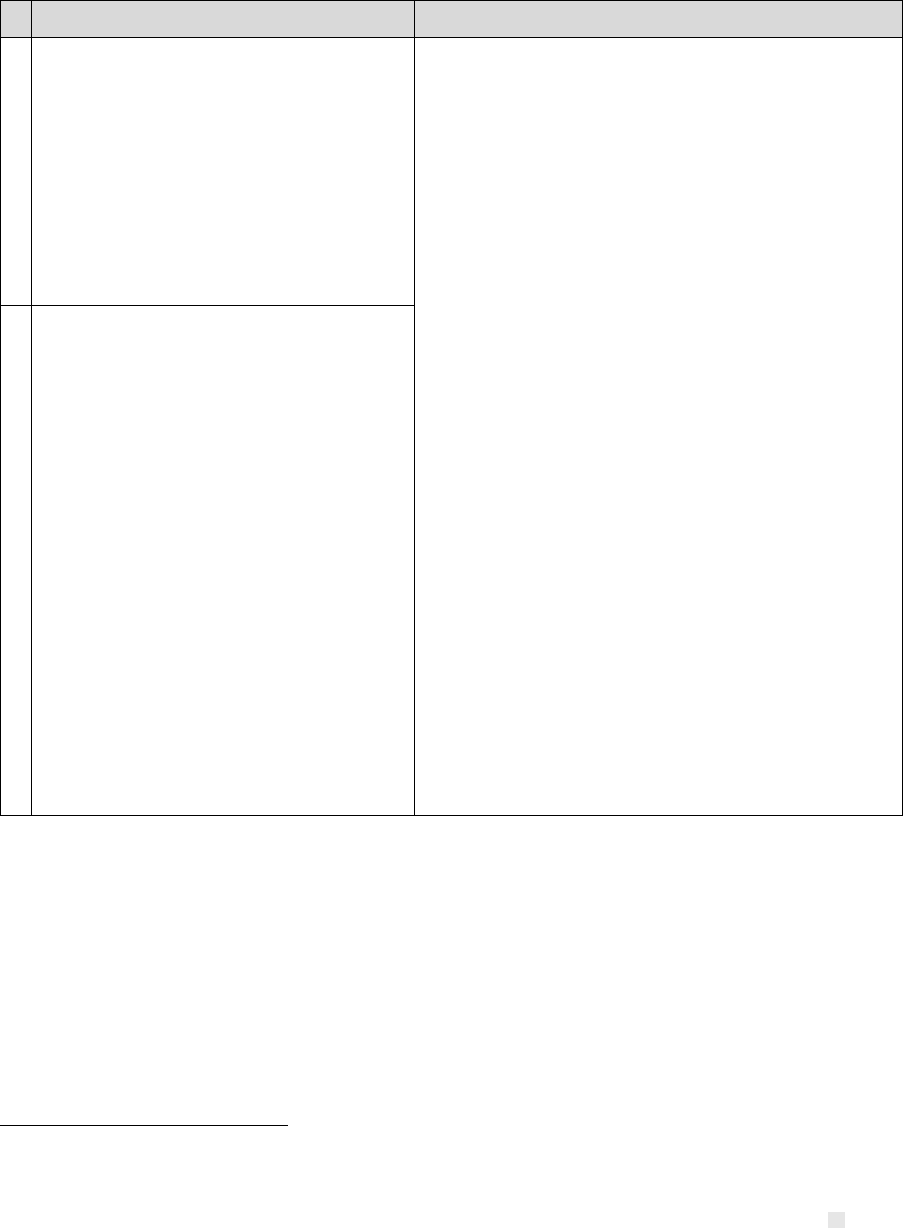

Table 1: Evaluation Objectives, Purpose, and Methods

| Objective | Purpose | Methods |
|---|---|---|
| Precision | Design samples to meet but not exceed a target of 10% precision for program gross energy production at 90% confidence. | Collect population tracking data from the NY-Sun database in Salesforce; design and select a representative sample according to stratification and precision criteria and the expected response rate. |
| Supplied Power (Nameplate kW DC) | Provide power supplied per site and region for comparison to MW Block Dashboard goals. | Collect nameplate DC capacity (kW) for sites from tracking data; review reported capacity values for sites with unexpectedly high or low first-year production. |
| Energy Impact (kWh Annual Production, Reporting Realization Rate (%), Application-specific Realization Rate (%)) | Provide verified, weather-normalized gross impacts for the program overall and for specified segments, including annualized first-year verified gross energy production (kWh), the verified gross reporting realization rate, and the verified gross application-specific realization rate. | Collect first-year (first 13 months after interconnection) production data and conduct contractor interviews for sampled sites; normalize results for weather differences across years of installation; flag sites with unexpectedly high or low performance for file review; review NY-Sun project files, production models, and QA/QC files (where available) for sites with unexpectedly high or low capacity factors; expand sampled site impacts to the program population and calculate realization rates. |
| Capacity Factor (%) | Determine the ratio of actual output over a period of time (including variations due to weather) to the potential output if the system could operate at full nameplate capacity continuously over the same period. | Calculate site-level capacity factors based on available nameplate and weather-normalized first-year production data; expand site-level results to the population. |
| Performance Persistence | Enable collection and cleaning of data for future evaluations of long-term persistence; determine long-term persistence of evaluated energy production of solar PV at the customer site; determine the factors contributing to system persistence or underperformance. | Review response and attrition rates from this and the prior evaluation to establish a long-term persistence data collection and analysis plan (future evaluations will assess persistence and degradation using longitudinal data collected over 10 years of sampled site system operation); collect ongoing production data and conduct contractor interviews for the persistence sample of sites. |
| Performance Model Data | Collect data inputs required for performance modeling in the National Renewable Energy Laboratory (NREL) System Advisor Model (SAM).⁵ | Conduct contractor interviews. |

⁵ National Renewable Energy Laboratory System Advisor Model Version 2017.9.5, available at https://sam.nrel.gov/
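The capacity factor definition in Table 1, combined with this evaluation's first-year convention (13 months collected, first possibly partial month dropped), can be expressed as a short calculation. The Python sketch below is illustrative only: the helper names, the 8,760-hour year, and the example system are assumptions rather than the evaluation's analysis code, and weather normalization is not shown.

```python
"""Illustrative first-year capacity factor calculation (hypothetical helper names)."""

HOURS_PER_YEAR = 8760  # assumption: a 365-day, non-leap year


def first_year_kwh(monthly_kwh: list[float]) -> float:
    """Sum months 2-13 of a 13-month series.

    The first month after interconnection may be partial, so it is dropped,
    leaving 12 complete, consecutive months.
    """
    if len(monthly_kwh) < 13:
        raise ValueError("need at least 13 months of production data")
    return sum(monthly_kwh[1:13])


def capacity_factor(annual_kwh: float, nameplate_kw_dc: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Actual output divided by output at full nameplate capacity for `hours`."""
    if nameplate_kw_dc <= 0:
        raise ValueError("nameplate capacity must be positive")
    return annual_kwh / (nameplate_kw_dc * hours)


if __name__ == "__main__":
    # Hypothetical 7.5 kW DC system: partial first month, then 12 full months.
    monthly = [150.0] + [690.0] * 12
    kwh = first_year_kwh(monthly)               # 8280.0 kWh
    print(round(capacity_factor(kwh, 7.5), 4))  # 0.126
```

The example numbers are chosen only for illustration and do not describe any evaluated site.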


2 Results, Findings, and Recommendations

2.1 Data Collection Results

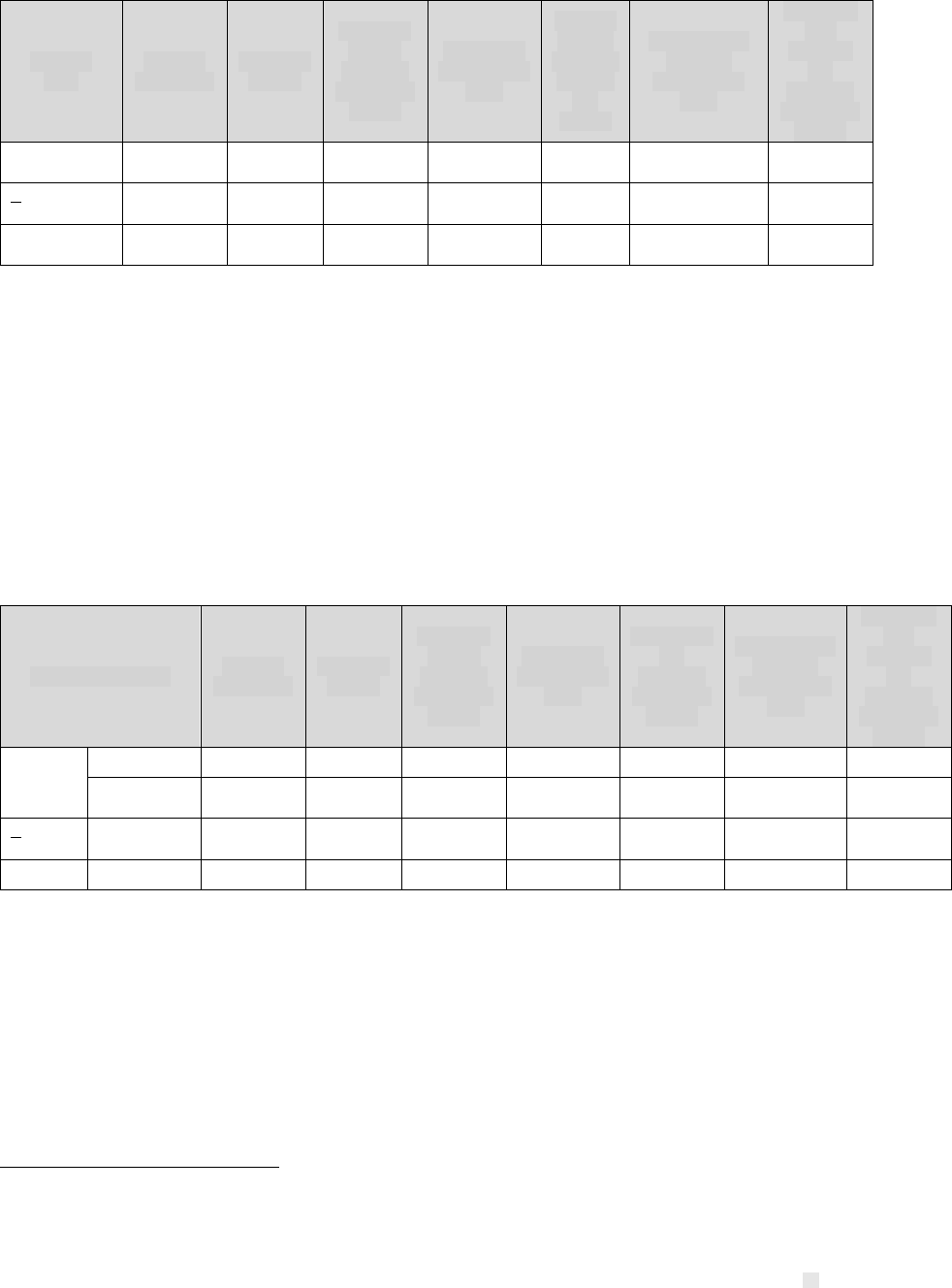

NYSERDA’s goal for this evaluation was to estimate production capacity factors with ±10% relative precision at 90% confidence (90/10 precision) for four segmentations of program data: region (Con Ed, Long Island, and Upstate), purchase type (lease, PPA, and purchase), size (above and below 200 kW), and customer sector (residential and non-residential). The NY-Sun and NYGB program populations and the achieved sample for first-year production data collection⁶ are shown in Table 2. To achieve the target precision for each segmentation, the sample design was stratified by a combination of customer sector (residential or non-residential; not shown in Table 2), region, system size (kW), and purchase type. Selecting from 29,000 unique records in the sample frame, the resulting sample design has 70 strata and a total target sample of 240 sites, where a site is a single installed solar PV system enrolled through a NYSERDA program. Individual premises may host multiple program sites, such as when multiple solar PV systems are installed at a single address.
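For context on the 90/10 target, a common planning approximation for the completed sample needed in a single stratum is n0 = (z × CV / RP)², adjusted with a finite population correction and then inflated for expected non-response. The sketch below assumes a coefficient of variation (CV) of 0.5, a frequent default in evaluation planning, and z = 1.645 for 90% confidence; the function names and the 50% response rate in the example are hypothetical. It is not the evaluation's actual design calculation, which allocated the sample across 70 strata so that each segmentation could meet the target.

```python
import math

Z_90 = 1.645  # two-sided z-value for 90% confidence


def required_sample(population: int, cv: float = 0.5,
                    rel_precision: float = 0.10, z: float = Z_90) -> int:
    """Completed sample needed for a target relative precision in one stratum.

    n0 = (z * cv / rel_precision) ** 2, then a finite population correction.
    """
    n0 = (z * cv / rel_precision) ** 2
    n = n0 / (1 + n0 / population)
    return math.ceil(n)


def with_response_rate(n_complete: int, response_rate: float) -> int:
    """Number of sites to attempt so that about n_complete respond."""
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in (0, 1]")
    return math.ceil(n_complete / response_rate)


if __name__ == "__main__":
    n = required_sample(29_000)           # one 90/10 estimate from the full frame
    print(n, with_response_rate(n, 0.5))  # 68 136 (0.5 is a hypothetical rate)
```

Under these assumptions, a single unstratified 90/10 estimate from the 29,000-record frame needs only about 68 completes; requiring the target separately within every region, purchase type, size, and sector segment helps explain why the sample in Table 2 is much larger.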

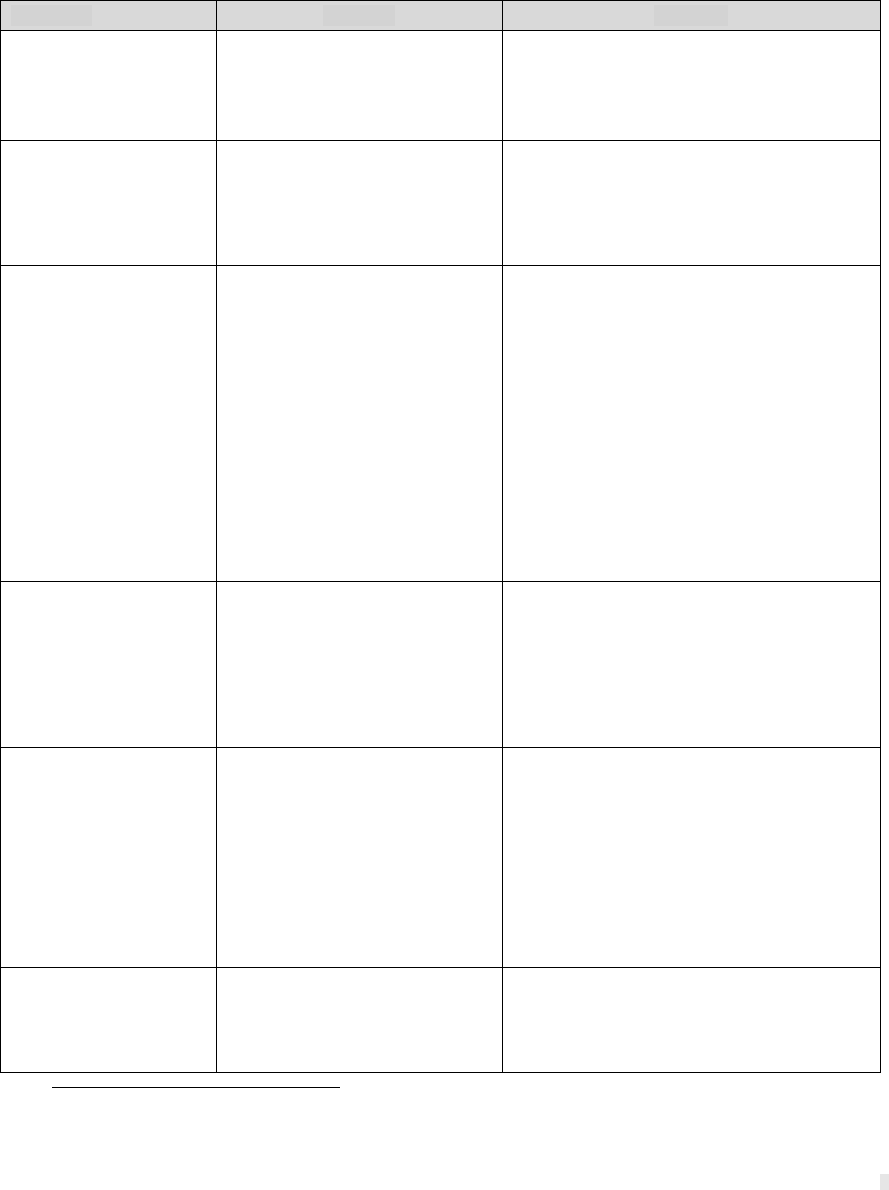

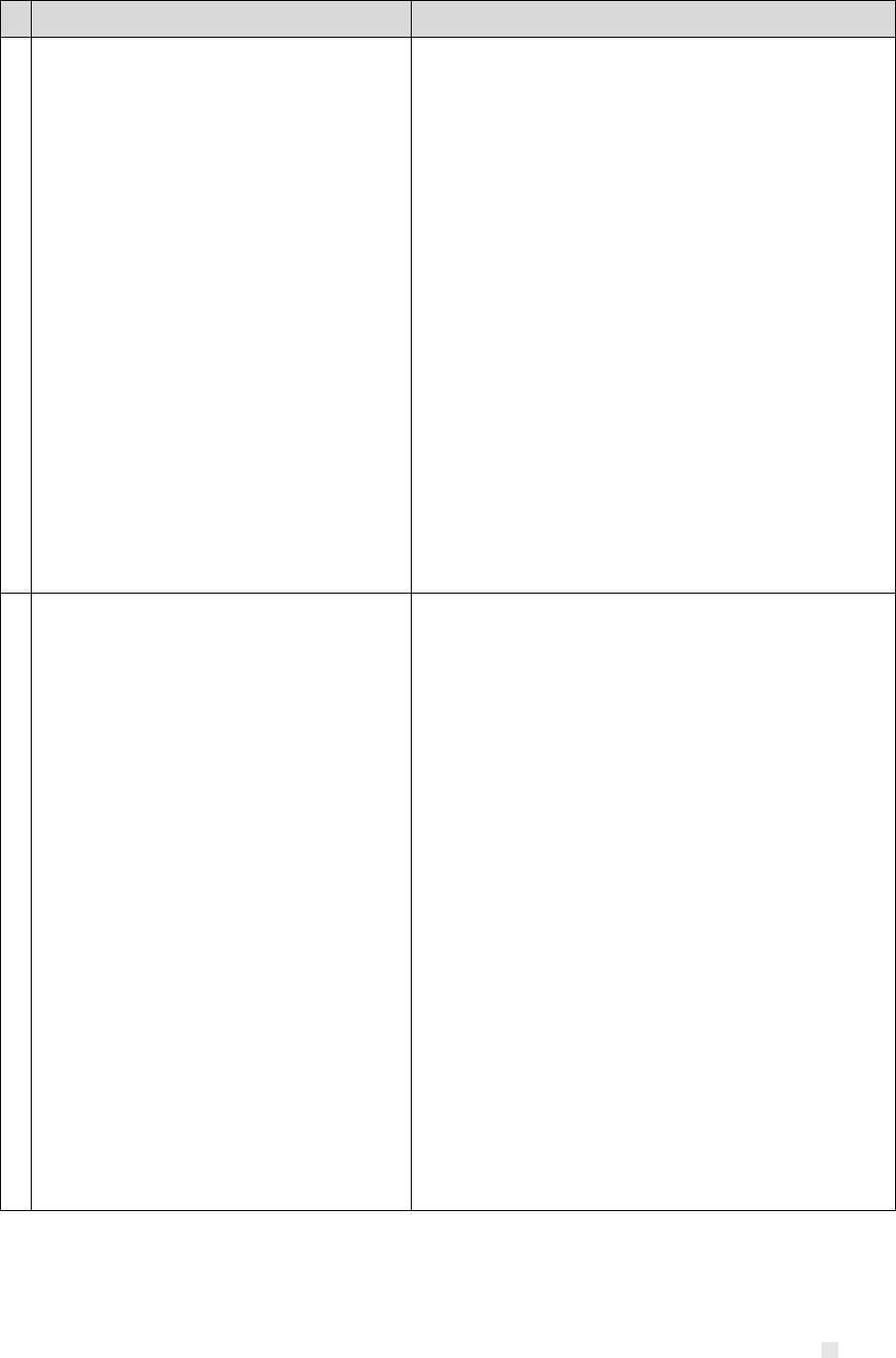

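Table 2: NY-Sun and NYGB Evaluation Data Collection Results

| Region | System Size (kW) | Purchase Type | NY-Sun Population Size (N)ᵃ | NYGB Overlap Population Size (N_NYGB) | Target Sample | Evaluated Sample (n) | NYGB Overlap Evaluated Sample (n_NYGB) |
|---|---|---|---|---|---|---|---|
| Con Ed | Below 200 kW | Lease | 2751 | 1200 | 33 | 22 | 17 |
| Con Ed | Below 200 kW | PPA | 723 | 577 | 22 | 21 | 19 |
| Con Ed | Below 200 kW | Purchase | 1851 | 420 | 37 | 39 | 26 |
| Con Ed | Above 200 kW | All | 12 | 0 | 12 | 10 | 0 |
| Upstate | Below 200 kW | Lease | 3914 | 2653 | 21 | 18 | 12 |
| Upstate | Below 200 kW | PPA | 1977 | 1654 | 33 | 34 | 19 |
| Upstate | Below 200 kW | Purchase | 6546 | 482 | 35 | 41 | 21 |
| Upstate | Above 200 kW | All | 103 | 0 | 103 | 60 | 0 |
| Long Island | Below 200 kW | Lease | 4817 | 2864 | 28 | 23 | 18 |
| Long Island | Below 200 kW | PPA | 1991 | 1607 | 23 | 22 | 19 |
| Long Island | Below 200 kW | Purchase | 4279 | 233 | 36 | 46 | 16 |
| Long Island | Above 200 kW | All | 36 | 0 | 36 | 23 | 0 |
| Overall | | | 29,000 | 11,690 | 419 | 359 | 167 |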

⁶ First-year production data collection covered the first 13 months of production after system interconnection (the inception of system production). The first, potentially incomplete, month of production data is dropped from the analysis so that the first 12 months of complete, consecutive production data are used.
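Section 3.2.4 describes how sampled results are expanded to the sample frame. As a simplified illustration only, the sketch below treats the 12 region, size, and purchase-type cells of Table 2 as if they were the strata (the actual design used 70 strata, including customer sector) and computes a basic expansion weight, N/n, for each cell, so that each evaluated site stands in for N/n population sites. It also checks that the cell counts reproduce the Table 2 totals. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """One region / size / purchase-type cell from Table 2 (illustrative)."""
    region: str
    size: str
    purchase: str
    population: int  # NY-Sun population size, N
    evaluated: int   # evaluated sample, n


CELLS = [
    Cell("Con Ed", "Below 200 kW", "Lease", 2751, 22),
    Cell("Con Ed", "Below 200 kW", "PPA", 723, 21),
    Cell("Con Ed", "Below 200 kW", "Purchase", 1851, 39),
    Cell("Con Ed", "Above 200 kW", "All", 12, 10),
    Cell("Upstate", "Below 200 kW", "Lease", 3914, 18),
    Cell("Upstate", "Below 200 kW", "PPA", 1977, 34),
    Cell("Upstate", "Below 200 kW", "Purchase", 6546, 41),
    Cell("Upstate", "Above 200 kW", "All", 103, 60),
    Cell("Long Island", "Below 200 kW", "Lease", 4817, 23),
    Cell("Long Island", "Below 200 kW", "PPA", 1991, 22),
    Cell("Long Island", "Below 200 kW", "Purchase", 4279, 46),
    Cell("Long Island", "Above 200 kW", "All", 36, 23),
]


def expansion_weights(cells: list[Cell]) -> dict[tuple[str, str, str], float]:
    """Basic expansion weight N / n for each cell."""
    return {(c.region, c.size, c.purchase): c.population / c.evaluated
            for c in cells}


if __name__ == "__main__":
    weights = expansion_weights(CELLS)
    total_pop = sum(c.population for c in CELLS)
    total_n = sum(c.evaluated for c in CELLS)
    # Weighted sample counts should reproduce the population total.
    expanded = sum(weights[(c.region, c.size, c.purchase)] * c.evaluated
                   for c in CELLS)
    print(total_pop, total_n, round(expanded))  # 29000 359 29000
```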


All projects in the population received NY-Sun funding, and a subset also involved NYGB, as shown in Table 2. The population size (N), target sample, and evaluated sample each include projects that received NYGB support, as later confirmed by the impact evaluation team. The NY-Sun and NYGB program overlap sites specified in the table are a subset of the NY-Sun population and evaluated sample. For example, within the Con Ed <200 kW leased group, 22 sites were in the evaluated sample, and of those, 17 sites were identified as having received support from NYGB.

2.1.1 Data Collection Challenges

A total of 58 contractors, representing 359 projects across all sizes and sectors (as shown in Table 2), provided viable data for use in the evaluation. Of the 32 companies from which the evaluation team was unable to collect data, 10 were closed businesses or had outdated contact information for which new site ownership was not found, one declined to participate in the study, and 21 either did not respond or were otherwise unable to provide production data for the requested site(s).

In the over 200 kW group, the evaluation team encountered limitations in the availability of site data planned for collection from the Distributed Generation (DG) Integrated Database.⁷ While these data limitations did not affect the precision of the current evaluation, they do affect long-term data collection for the persistence study. In particular, the collection of persistence data from sites more than three years old was limited because the requirement to report to the DG Integrated Database had expired for those sites. Additionally, the impact evaluation team found that a number of sites over 200 kW opted to apply to the Small Commercial Program rather than the Commercial/Industrial Program.⁸ These sites received incentives capped at 200 kW but avoided the requirement to connect to the DG Integrated Database. Late in this study’s evaluation period, the Commercial and Industrial program was preparing to shift the program requirements (and the associated DG Integrated Database reporting requirement) to sites over 750 kW, rather than 200 kW. The new program requirement was formally adopted after the evaluation period but will apply to future evaluations. Beyond this data collection issue, the transition to new program requirements did not have statistically significant impacts on the program.

⁷ http://dg.nyserda.ny.gov/home/index.cfm?

⁸ As of October 25, 2018 (after the current evaluation period), the size requirement for the Commercial and Industrial program shifted from greater than 200 kW to greater than 750 kW.


2.1.2 Persistence Data Collection Results

In addition to the primary production data collection effort for this NY-Sun 2016-2018 evaluation, the impact evaluation team collected production data for persistence sample sites. This data collection brings the persistence study to year six of a ten-year data collection effort. The persistence study analysis will be completed after year 10 (April 2026). A more detailed description of the persistence study and future analysis plan is included in Appendix C.

Persistence sample sites are those for which multiple years of data (from installation through October 2017) were successfully collected through the prior (2008 and 2011-2016) NY-Sun impact evaluation. The present study attempted to collect follow-on years of data for 264 sites. Of these, production data for one or more additional years (from 2017 through 2019) were collected from 213 sites, an attrition rate of about 19% (51 of 264 sites) and a response rate of about 81%.

Of the 213 sites for which data were collected, data for up to 75 sites were incomplete:

• One contractor provided the most recent 13 months of data rather than the period requested for persistence, and the evaluation team was unable to collect data for the missing months, resulting in a gap of less than one year in the time series data for 50 sites.

• The study plan anticipated that all large (>200 kW) sites would have additional years of data available through the DG Integrated Database, as was the case for the prior study. Of the 114 large sites for which the evaluators attempted to download data, 89 have current data, but the owners of the remaining 25 systems stopped providing data to the DG Integrated Database after their required three-year reporting period ended. The associated cost and staff time are a sufficient burden to deter some contractors from continuing to post data to the DG Integrated Database.
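Gaps like the one described for the 50 sites above can be detected by comparing the months present in a site's production series against the full span from its first to its last reported month. A minimal sketch, assuming each monthly reading is keyed by the first day of its month; the function names and the example site are hypothetical.

```python
from datetime import date


def month_index(d: date) -> int:
    """Map a date to a running month count so consecutive months differ by 1."""
    return d.year * 12 + (d.month - 1)


def missing_months(observed: list[date]) -> list[date]:
    """Return first-of-month dates absent between the first and last observed months."""
    if not observed:
        return []
    present = {month_index(d) for d in observed}
    first, last = min(present), max(present)
    return [date(i // 12, i % 12 + 1, 1)
            for i in range(first, last + 1) if i not in present]


if __name__ == "__main__":
    # Hypothetical site: data through Oct 2017, then readings resuming in Sep 2018.
    readings = [date(2017, m, 1) for m in range(1, 11)]
    readings += [date(2018, 9, 1), date(2018, 10, 1)]
    print(len(missing_months(readings)))  # 10 (Nov 2017 through Aug 2018)
```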

To support future persistence analysis and overcome these data limitations, the impact evaluation team may conduct additional production data collection for systems installed in earlier years of the NY-Sun program.

2.2 Analysis Results

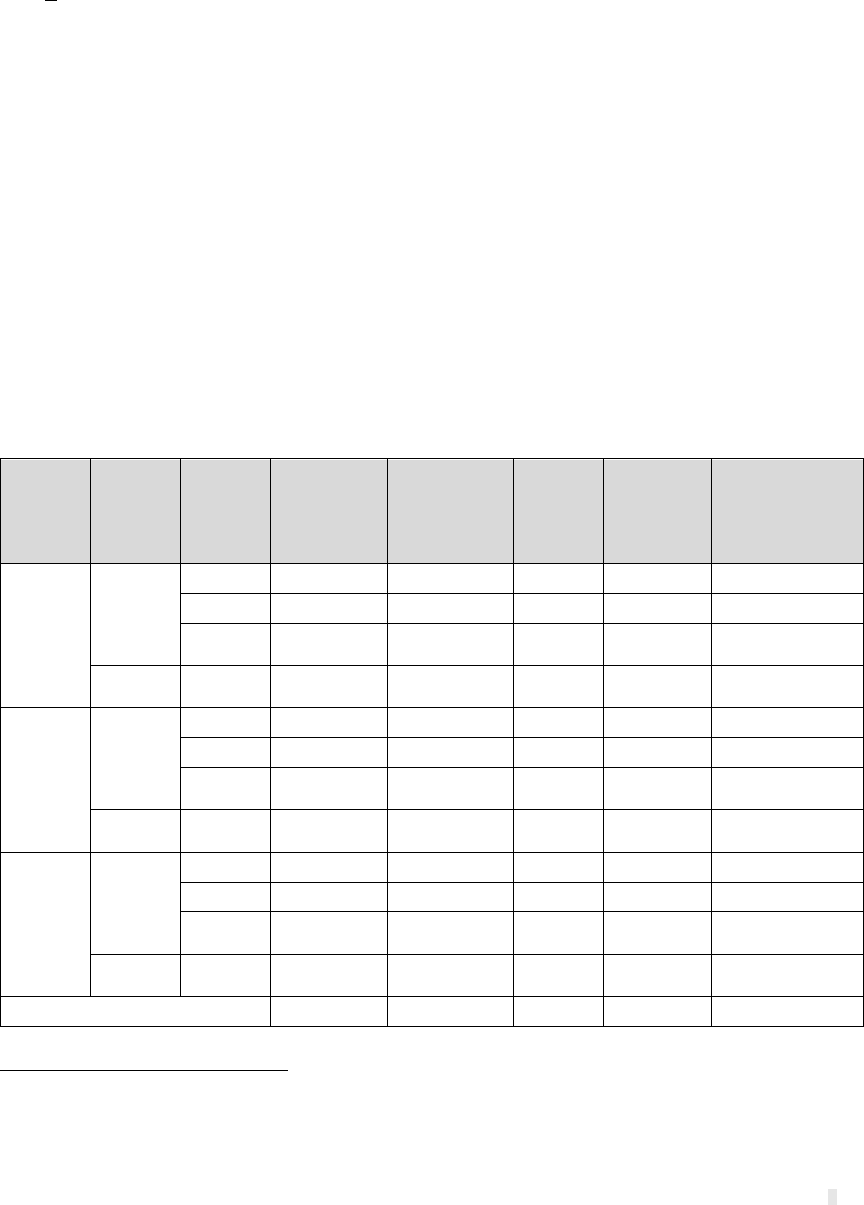

2.2.1 File Review Results

The impact evaluation team collected production data for a total of 359 of the 419 sampled projects. Of these, 140 (39%) were flagged for additional file review because their capacity factors fell outside the expected range.⁹ Table 3 shows the mutually exclusive resolutions from file reviews of sites with capacity factor discrepancies; each project was assigned a single discrepancy category.

Table 3: Resolutions from File Reviews of Sites with Capacity Factor Discrepancies

After detailed review, 26 of the 140 flagged files were determined to show normal production (within ±4% of modeled output). These projects performed in accordance with their design models but fell below the 9% capacity factor threshold used to flag files for review. This type of discrepancy was more frequent for some participating contractors.

File review dispositions in which lower-than-expected production was confirmed and retained in the verified production results include:

• Low production anomalies (separated into groups of three months or less, four to eleven months, and persistent) verified to be accurate

• System faults (broken equipment or connectivity issues verified through customer interviews)

• Shading (persistent or winter-only) identified through reviews of project shading analysis files (submitted by contractors with predictive production models), project site plans, and satellite map images of site locations

⁹ Sites with capacity factors above 14% or below 9% were flagged for file review.
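The file review screening rule (flag capacity factors below 9% or above 14%) and the "normal production" disposition (within ±4% of modeled output) can be written as two simple checks. This sketch assumes the ±4% tolerance is applied to the ratio of verified to modeled production; the report does not specify the exact comparison, so the second function is an interpretation, and the names are hypothetical.

```python
CF_LOW = 0.09           # flag threshold: capacity factor below 9%
CF_HIGH = 0.14          # flag threshold: capacity factor above 14%
MODEL_TOLERANCE = 0.04  # "normal" if within +/-4% of modeled output (interpretation)


def needs_file_review(capacity_factor: float) -> bool:
    """True if a site's first-year capacity factor is outside the 9% to 14% band."""
    return capacity_factor < CF_LOW or capacity_factor > CF_HIGH


def matches_model(verified_kwh: float, modeled_kwh: float,
                  tolerance: float = MODEL_TOLERANCE) -> bool:
    """True if verified production is within +/-tolerance of the modeled estimate."""
    if modeled_kwh <= 0:
        raise ValueError("modeled production must be positive")
    return abs(verified_kwh / modeled_kwh - 1.0) <= tolerance


if __name__ == "__main__":
    # Hypothetical low-producing site that nonetheless matches its design model.
    print(needs_file_review(0.085), matches_model(5600.0, 5700.0))  # True True
```

A site like the one in the example is flagged by the capacity factor screen but would be resolved as normal production, which is the situation described for the 26 files above.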

A total of 8 sites exhibited higher-than-expected production, verified with the following dispositions:

• Larger system size than reported in tracking data and indicated by documentation, causing apparent over-production, later verified by review of plans, satellite imagery, and, in some cases, information provided by the end user

• High production anomalies (separated into groups of three months or less and persistent) for which the precise cause could not be determined

A total of five sites were dropped from the analysis based on the results of the file review: three sites due to missing or largely incomplete data (Unknown category in Table 3); one site due to incorrect or erroneous reporting (persistent repetition of identical production values; Unknown category); and one site due to consistently excessive production, possibly caused by a second system installed on site that could not be verified (Installed system larger than reported category).

2.2.2 Production Analysis Results

This section provides the weather-normalized verified gross impact results of the program: first-year¹⁰ capacity factors, reporting realization rates, and application-specific realization rates. The realization rates are ratios of verified normal-weather gross system production to two estimates: reporting production, or the production of a system of the same capacity operating at a 13.4% capacity factor;¹¹ and application-specific production, or contractor estimates of system production calculated from the system models submitted with program applications for each site.¹² The impact evaluation team reviews the accuracy of these estimates for different categories relative to the 90/10 precision target.¹³ In each table, the categories shown (other than “Overall”) are independent of one another. Each table of results uses the same weights, based on a single stratification, sample, and sample frame. See Section 3.2 for an explanation of the analysis approach and weighting factor calculation.

¹⁰ All verified gross impact results are based on the first 13 months of production data, starting with the month of installation and dropping that first, potentially partial-production month. All results are weather-normalized to account for differences in production caused by weather (solar insolation and precipitation) across years of installation.

¹¹ The reporting realization rate is the ratio of verified normal-weather gross system production to NYSERDA’s estimate of system production (referred to as reporting production) for purposes of program-level progress and benefits reporting to the PSC. The reporting realization rate assesses the difference between reporting production and actual evaluated system production. For the current evaluation, the reporting realization rate is based on a CF of 13.4%.

¹² The application-specific realization rate is the ratio of verified normal-weather gross system production to contractor estimates of system production (referred to as application-specific production) calculated from the system models submitted with program applications for each site.

¹³ 90/10 precision means that the result has a 90% probability of being within ±10% of the complete population result.
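The two realization rate definitions above reduce to simple ratios at the site level, and a weighted ratio estimator is one standard way to combine them across a sample. The sketch assumes an 8,760-hour year, and its sites and weights are hypothetical; the evaluation's actual weighting is described in Section 3.2. Because reporting production is nameplate × 8,760 h × 13.4%, a single site's reporting realization rate is simply its capacity factor divided by 0.134; applying the same arithmetic to the overall 12.6% capacity factor gives roughly 94%, in line with the 93.9% overall reporting realization rate.

```python
HOURS_PER_YEAR = 8760  # assumption: a non-leap year
REPORTING_CF = 0.134   # capacity factor behind reporting production in this evaluation


def reporting_kwh(nameplate_kw_dc: float, cf: float = REPORTING_CF,
                  hours: float = HOURS_PER_YEAR) -> float:
    """Reporting production: a same-capacity system at the reporting capacity factor."""
    return nameplate_kw_dc * hours * cf


def reporting_rr(verified_kwh: float, nameplate_kw_dc: float) -> float:
    """Verified normal-weather production divided by reporting production."""
    return verified_kwh / reporting_kwh(nameplate_kw_dc)


def application_rr(verified_kwh: float, application_kwh: float) -> float:
    """Verified normal-weather production divided by the contractor's modeled estimate."""
    return verified_kwh / application_kwh


def weighted_ratio(weights: list[float], verified: list[float],
                   expected: list[float]) -> float:
    """Ratio estimator: sum(w * verified) / sum(w * expected)."""
    if not (len(weights) == len(verified) == len(expected)):
        raise ValueError("inputs must have the same length")
    numerator = sum(w * v for w, v in zip(weights, verified))
    denominator = sum(w * e for w, e in zip(weights, expected))
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical sites: (kW DC, verified kWh, application kWh, weight).
    sites = [(7.5, 8280.0, 8400.0, 120.0), (10.0, 11000.0, 10800.0, 95.0),
             (250.0, 276000.0, 290000.0, 1.1)]
    w = [s[3] for s in sites]
    verified = [s[1] for s in sites]
    rep = [reporting_kwh(s[0]) for s in sites]
    app = [s[2] for s in sites]
    print("reporting RR:", round(weighted_ratio(w, verified, rep), 3))
    print("application-specific RR:", round(weighted_ratio(w, verified, app), 3))
```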

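Figure 1 illustrates how realization rates indicate overestimation or underestimation of actual production for a given set of data. Because each realization rate is verified production divided by estimated production, a rate below 100% means the estimate overstated actual production, and a rate above 100% means it understated actual production.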

Figure 1: Realization Rate Interpretation

Table 4 shows production analysis results for the two system size categories and overall. The 90/10 precision target was achieved for each segment. The NY-Sun program overall capacity factor was found to be 12.6%, slightly higher than the 12.4% overall program capacity factor evaluated for 2011-2016. There is no statistically significant difference in capacity factor between large (above 200 kW) and small (below 200 kW) sites. The capacity factor for small sites (12.5%) is slightly higher than the prior evaluation (2011-2016) result of 12.1%, while the capacity factor for large sites has decreased from 13.2% to 12.7%. Small sites are expected to be installed more frequently on existing buildings, and large sites at more ideal locations and orientations pre-verified by rigorous planning models; the lack of a statistically significant difference suggests that small sites are nonetheless well oriented on average.

The application-specific realization rate for small systems is very close to 100%, with a confidence interval of 98% to 103%, demonstrating that program planning impacts based on application-specific production (site-specific models predicting production) are very accurate. By comparison, the reporting realization rate for small systems has a confidence interval of 91% to 97%. The difference in application-specific realization rates between large and small systems is statistically significant; the confidence interval for large sites is 94% to 96%.
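The confidence intervals quoted above follow from applying each relative precision to its point estimate: the interval runs from estimate × (1 − relative precision) to estimate × (1 + relative precision). A brief sketch, assuming symmetric 90% intervals; the function name is hypothetical.

```python
def confidence_interval(estimate: float, rel_precision: float) -> tuple[float, float]:
    """Symmetric interval: estimate * (1 - rp) to estimate * (1 + rp)."""
    half_width = estimate * rel_precision
    return estimate - half_width, estimate + half_width


if __name__ == "__main__":
    # Application-specific realization rates (%) and relative precisions, Table 4.
    for label, rr, rp in [("Below 200 kW", 100.6, 0.027), ("> 200 kW", 94.7, 0.010)]:
        low, high = confidence_interval(rr, rp)
        print(f"{label}: {low:.1f}% to {high:.1f}%")
```

Rounded to whole percentages, the two intervals are 98% to 103% and 94% to 96%, matching the figures cited above.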


Table 4: Production Analysis Results by System Size

| System Size | Sample Complete | Capacity Factor | Capacity Factor Relative Precision @90% | Reporting Realization Rate | Reporting RR Relative Precision @90% | Application-specific Realization Rate | Application-specific RR Relative Precision @90% |
|---|---|---|---|---|---|---|---|
| Below 200 kW | 266 | 12.5% | 3.0% | 93.6% | 3.0% | 100.6% | 2.7% |
| > 200 kW | 93 | 12.7% | 0.9% | 95.0% | 0.9% | 94.7% | 1.0% |
| Overall | 359 | 12.6% | 2.3% | 93.9% | 2.3% | 99.2% | 2.1% |

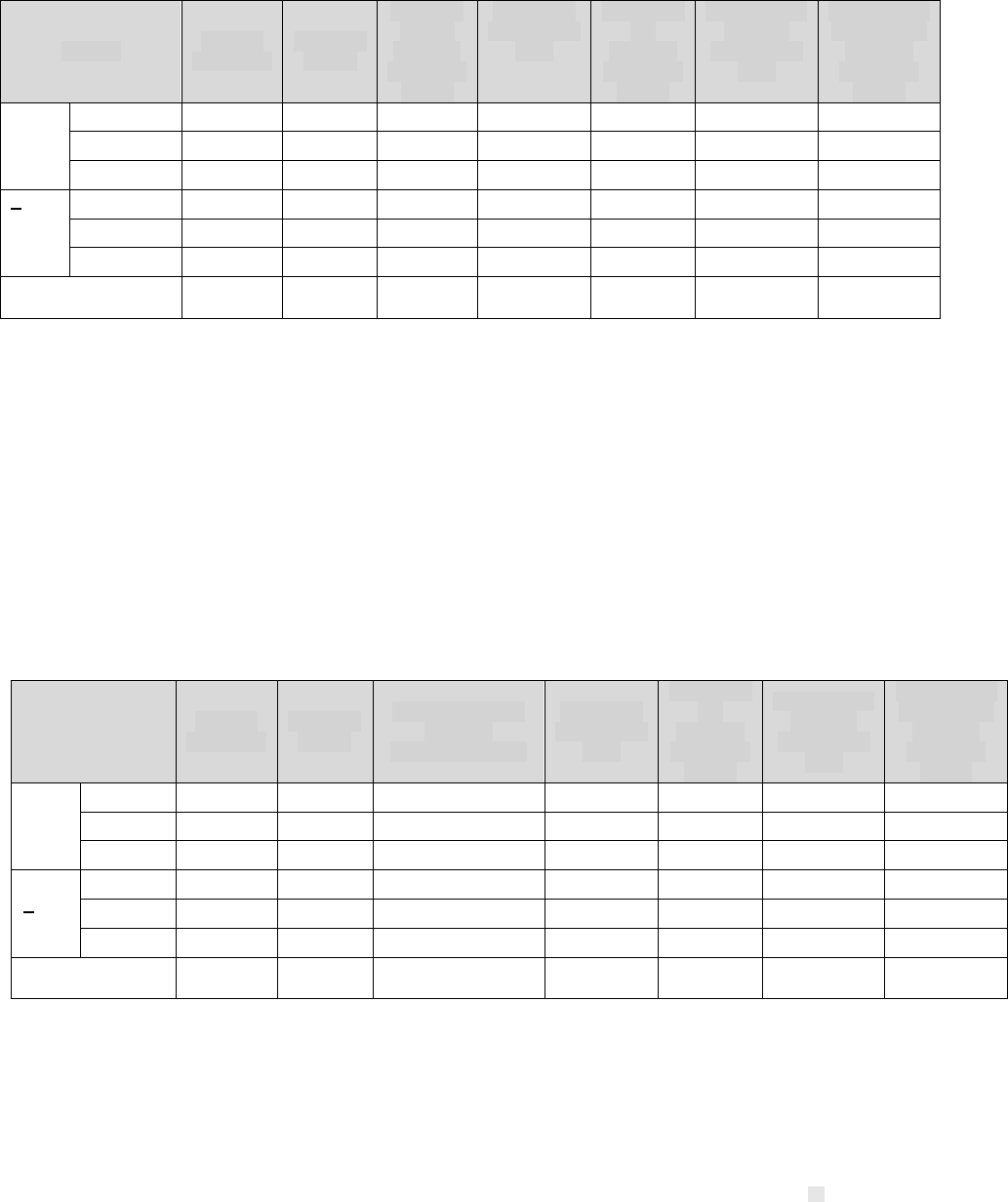

Table 5 shows production analysis results by customer sector. The 90/10 precision target was achieved for each segment. The small non-residential capacity factor (13.1%) exceeds those of the other sectors, and the difference is statistically significant. The confidence interval for the residential application-specific realization rate is 98% to 105%. The impact evaluation team would generally anticipate that smaller systems experience more shading and less optimal orientation than large systems; however, these considerations appear to have been effectively captured in the application-specific production estimates for residential systems.

Table 5: Production Analysis Results by Customer Sector¹⁴

| System Size | Customer Sector | Sample Complete | Capacity Factor | Capacity Factor Relative Precision @90% | Reporting Realization Rate | Reporting RR Relative Precision @90% | Application-specific Realization Rate | Application-specific RR Relative Precision @90% |
|---|---|---|---|---|---|---|---|---|
| Below 200 kW | Residential | 211 | 12.4% | 3.4% | 92.6% | 3.4% | 101.6% | 3.3% |
| Below 200 kW | Non-Residential | 55 | 13.1% | 1.5% | 97.5% | 1.5% | 95.7% | 2.1% |
| > 200 kW | Non-Residential | 93 | 12.7% | 0.9% | 94.8% | 0.9% | 94.6% | 1.1% |
| Overall | | 359 | 12.6% | 2.2% | 93.9% | 2.2% | 98.8% | 1.9% |

Table 6 provides production analysis results by region. The 90/10 capacity factor precision target was achieved for all segments. Slightly higher capacity factors were expected on Long Island (as observed in the prior evaluation) because average solar insolation there is higher than in the Upstate region and there are fewer physical obstructions than in the Con Ed region (especially for smaller sites built on existing buildings with less ideal orientations). The impact evaluation did observe slightly higher capacity factors on Long Island; however, the difference is not statistically significant, meaning that the observed difference could be due to the random samples of this study rather than the expected physical causes.

¹⁴ Calculations based on sample weighted results rather than the applied ratio results from the population.
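Statements about statistical significance in this section can be approximated from the published point estimates and relative precisions alone. The sketch below assumes that relative precision = 1.645 × standard error / estimate, that sampling distributions are approximately normal, and that the two segment estimates are independent (estimates from one sample sharing common weights are not strictly independent, so any covariance is ignored). It is an approximation, not the evaluation team's stated test, and the function names are hypothetical. Applied to figures in Tables 5 and 6, it agrees with the conclusions in the text: the Long Island and Upstate small-system capacity factors (12.7% ±6.1% vs. 12.5% ±4.1%) give |z| ≈ 0.35, not significant, while small non-residential vs. residential (13.1% ±1.5% vs. 12.4% ±3.4%) gives z ≈ 2.5, significant at 90% confidence.

```python
import math

Z_90 = 1.645  # two-sided critical value for 90% confidence


def standard_error(estimate: float, rel_precision: float, z: float = Z_90) -> float:
    """Back out a standard error from a relative precision at confidence z."""
    return estimate * rel_precision / z


def difference_z(est_a: float, rp_a: float, est_b: float, rp_b: float,
                 z: float = Z_90) -> float:
    """z statistic for the difference of two estimates, assuming independence."""
    se_a = standard_error(est_a, rp_a, z)
    se_b = standard_error(est_b, rp_b, z)
    return (est_a - est_b) / math.hypot(se_a, se_b)


def significant(z_stat: float, critical: float = Z_90) -> bool:
    """Two-sided test at the confidence level implied by `critical`."""
    return abs(z_stat) > critical


if __name__ == "__main__":
    # Capacity factors (%) and relative precisions from Tables 5 and 6.
    pairs = {
        "LI vs Upstate, below 200 kW": (12.7, 0.061, 12.5, 0.041),
        "Non-res vs residential, below 200 kW": (13.1, 0.015, 12.4, 0.034),
    }
    for label, args in pairs.items():
        z_stat = difference_z(*args)
        print(f"{label}: z = {z_stat:.2f}, significant = {significant(z_stat)}")
```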

Table 6: Production Analysis Results by Region

| System Size | Region | Sample Complete | Capacity Factor | Capacity Factor Relative Precision @90% | Reporting Realization Rate | Reporting RR Relative Precision @90% | Application-specific Realization Rate | Application-specific RR Relative Precision @90% |
|---|---|---|---|---|---|---|---|---|
| Below 200 kW | Con Ed | 82 | 12.4% | 3.4% | 92.4% | 3.4% | 101.8% | 3.6% |
| Below 200 kW | Upstate | 93 | 12.5% | 4.1% | 93.0% | 4.1% | 99.1% | 4.2% |
| Below 200 kW | Long Island | 91 | 12.7% | 6.1% | 95.1% | 6.1% | 102.3% | 4.8% |
| > 200 kW | Con Ed | 10 | 12.7% | 3.4% | 94.9% | 3.4% | 94.9% | 3.4% |
| > 200 kW | Upstate | 60 | 12.7% | 1.0% | 94.5% | 1.0% | 94.1% | 1.2% |
| > 200 kW | Long Island | 23 | 13.0% | 2.0% | 97.0% | 2.0% | 96.8% | 1.8% |
| Overall | | 359 | 12.6% | 2.3% | 93.9% | 2.3% | 99.2% | 2.1% |

Table 7 shows the production analysis results for different system purchase types. The 90/10 precision target was achieved for each segment. Ordered from low to high capacity factor, the small PPA group has the lowest (11.6%, driven by particularly low production at several Long Island residential PPA sites), but it is not statistically different from the small leased or large PPA segments. The large leased segment has the highest capacity factor (13.5%) and a realization rate of about 100%, but this segment includes only a single site. Purchased systems perform better than PPA systems in both the large and small size groups.

Table 7: Production Analysis Results by Purchase Type

| System Size | Purchase Type | Sample Complete | Capacity Factor | Capacity Factor Relative Precision @90% | Reporting Realization Rate | Reporting RR Relative Precision @90% | Application-specific Realization Rate | Application-specific RR Relative Precision @90% |
|---|---|---|---|---|---|---|---|---|
| Below 200 kW | Lease | 63 | 12.3% | 6.0% | 91.5% | 6.0% | 103.9% | 4.3% |
| | PPA | 77 | 11.6% | 8.9% | 86.5% | 9.0% | 90.8% | 7.4% |
| | Purchase | 126 | 13.1% | 3.0% | 98.0% | 3.0% | 102.5% | 3.8% |
| > 200 kW | Lease | 1 | 13.5% | 0.0% | 100.6% | 0.0% | 100.6% | 0.0% |
| | PPA | 37 | 12.6% | 1.3% | 94.3% | 1.3% | 93.8% | 1.5% |
| | Purchase | 55 | 12.8% | 1.2% | 95.8% | 1.2% | 95.7% | 1.1% |
| Overall | | 359 | 12.6% | 2.3% | 93.9% | 2.3% | 99.2% | 2.1% |
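The 90/10 criterion means that an estimate's relative precision at 90% confidence is no worse than ±10%. A minimal check of the Table 7 precisions against that threshold, treating all three precision columns as quantities the criterion applies to (an assumption), might look like:

```python
TARGET = 0.10  # 90/10: relative precision no worse than ±10% at 90% confidence

# Relative precision @90% from Table 7:
# (capacity factor, reporting RR, application-specific RR)
PRECISION = {
    ("Below 200 kW", "Lease"): (0.060, 0.060, 0.043),
    ("Below 200 kW", "PPA"): (0.089, 0.090, 0.074),
    ("Below 200 kW", "Purchase"): (0.030, 0.030, 0.038),
    ("> 200 kW", "Lease"): (0.000, 0.000, 0.000),
    ("> 200 kW", "PPA"): (0.013, 0.013, 0.015),
    ("> 200 kW", "Purchase"): (0.012, 0.012, 0.011),
    ("Overall", "All"): (0.023, 0.023, 0.021),
}


def meets_90_10(precisions, target=TARGET):
    """True when every relative precision reported for a segment is within the target."""
    return all(rp <= target for rp in precisions)


failing = [segment for segment, rps in PRECISION.items() if not meets_90_10(rps)]
closest = max(PRECISION, key=lambda segment: max(PRECISION[segment]))
print("Segments missing the 90/10 target:", failing or "none")
print("Closest to the limit:", closest, max(PRECISION[closest]))
```

The small PPA segment comes closest to the threshold, with a reporting realization rate precision of 9.0%.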


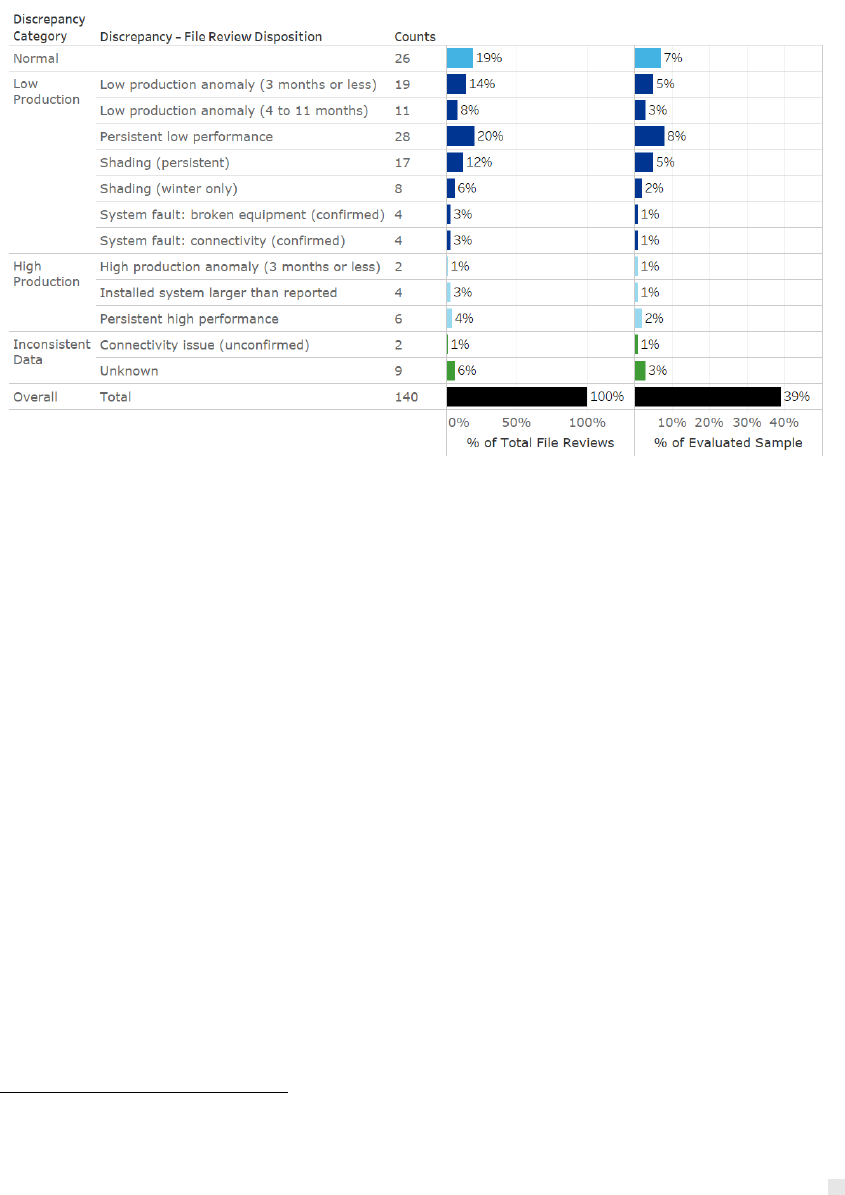

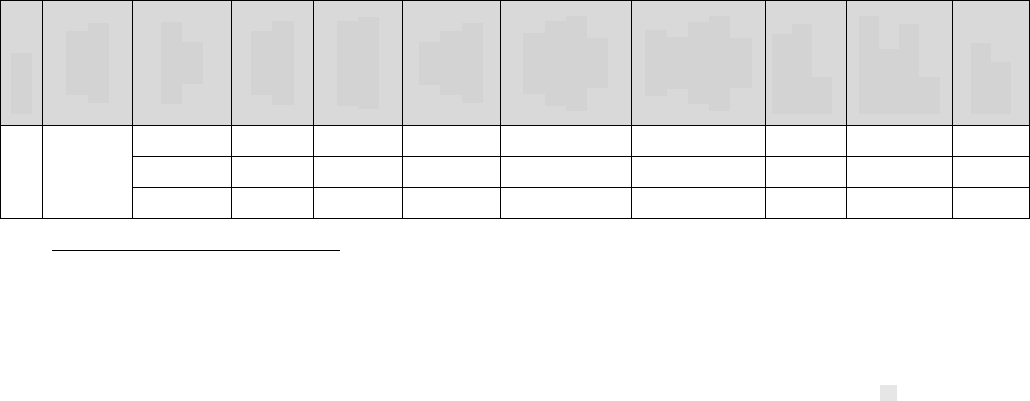

2.2.3 Detailed Findings

Estimated production, realization rates, and capacity factors from the impact evaluation of NYSERDA Solar PV program installations completed from May 2016 through March 2018 are shown in Table 8, by region, size, and purchase type, and overall.

Application-specific realization rates are 99% for the program overall, for small systems overall,

and for most segments within the small group (excluding PPA and non-residential sites). All

other segments (excluding PPA) show application-specific realization rates in the mid-90s. These

results are consistent with the prior (2011-2016) evaluation result, demonstrating high

application-specific realization rates for nearly seven (7) years of the NY-Sun program.

Production realization rates for the program overall (94% for reporting and 99% for application-specific) show that both methods provide reasonably accurate estimates of generation for the program population, although the application-specific production estimate is the more accurate approach. The program realized an overall capacity factor of 12.6% during the evaluation period, slightly higher than the 12.4% found in the 2008/2011-2016 evaluation. Capacity factors for all groups except Long Island small purchase sites are below the program's planned capacity factor of 13.4%.

Among small units, the evaluation found high capacity factors for purchased systems and low capacity factors for PPA systems. When these results are broken down by region, purchased systems have consistently higher capacity factors than the other ownership models in every region, while the low overall capacity factor for PPA systems is driven predominantly by extremely low values on Long Island. Low-producing sites were reviewed closely; Long Island included three sites with especially low capacity factors (below 5%) due to inverter failure, a low-production anomaly, and extensive shading.¹⁵ Small purchased and leased sites and large sites on Long Island perform more in line with expectations.

Table 8: Total Production by Region, Size, and Purchase Type

| Region | System Size | Purchase Type | Sample Frame (N) | Evaluated Sample (n) | Total System Size (kW) | Annual Reporting Production (kWh) | Verified Gross First-year Production (kWh) | Reporting Realization Rate | Application-Specific Realization Rate | Capacity Factor |
|---|---|---|---|---|---|---|---|---|---|---|
| Con Ed | Below 200 kW | Lease | 2,751 | 22 | 19,518 | 22,910,482 | 20,779,807 | 90.7% | 100.3% | 12.2% |
| | | PPA | 723 | 21 | 5,056 | 5,935,246 | 5,454,491 | 91.9% | 101.3% | 12.3% |
| | | Purchase | 1,851 | 39 | 16,099 | 18,897,258 | 17,857,909 | 94.5% | 103.8% | 12.7% |
| | > 200 kW | All | 12 | 10 | 8,190 | 9,613,535 | 9,123,245 | 94.9% | 94.9% | 12.7% |
| Upstate | Below 200 kW | Lease | 3,914 | 18 | 32,205 | 37,803,721 | 33,796,527 | 89.4% | 104.6% | 12.0% |
| | | PPA | 1,977 | 34 | 33,544 | 39,375,720 | 35,123,142 | 89.2% | 91.0% | 12.0% |
| | | Purchase | 6,546 | 41 | 67,035 | 78,688,482 | 76,091,762 | 96.7% | 100.5% | 13.0% |
| | > 200 kW | All | 103 | 60 | 56,107 | 65,860,183 | 62,266,439 | 94.5% | 94.1% | 12.7% |
| Long Island | Below 200 kW | Lease | 4,817 | 23 | 31,459 | 36,928,262 | 34,749,495 | 94.1% | 105.4% | 12.6% |
| | | PPA | 1,991 | 22 | 13,776 | 16,170,292 | 12,612,827 | 78.0% | 87.0% | 10.5% |
| | | Purchase | 4,279 | 46 | 43,861 | 51,485,290 | 52,103,114 | 101.2% | 105.0% | 13.6% |
| | > 200 kW | All | 36 | 23 | 13,567 | 15,925,188 | 15,442,562 | 97.0% | 96.8% | 13.0% |
| Overall | | | 29,000 | 359 | 340,416 | 399,593,659 | 375,401,320 | 93.9% | 99.2% | 12.6% |

¹⁵ The owner of one of the sites verified that the inverter was down multiple times, totaling 8 months of lost production. Owners of the other sites could not be contacted but, based on available data and information, one site had only three months of production followed by zero production due to an unknown cause. The third appeared to be functioning normally with no anomalies but was subject to extensive shading.
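The capacity factor and reporting realization rate columns in Table 8 follow directly from the other columns: capacity factor is verified production divided by total system size times 8,760 hours, and the reporting realization rate is verified production divided by reporting production. The sketch below recomputes both for a few rows and checks them against the printed, rounded values; the row selection and the 0.05-percentage-point tolerance are choices made for illustration.

```python
HOURS_PER_YEAR = 8760
TOLERANCE = 0.0005  # Table 8 rounds percentages to 0.1 percentage point

# Columns copied from Table 8: (segment, total kW, reporting kWh, verified kWh,
# printed reporting RR, printed capacity factor)
ROWS = [
    ("Con Ed, below 200 kW, Lease", 19_518, 22_910_482, 20_779_807, 0.907, 0.122),
    ("Upstate, below 200 kW, Lease", 32_205, 37_803_721, 33_796_527, 0.894, 0.120),
    ("Long Island, below 200 kW, PPA", 13_776, 16_170_292, 12_612_827, 0.780, 0.105),
    ("Long Island, below 200 kW, Purchase", 43_861, 51_485_290, 52_103_114, 1.012, 0.136),
    ("Overall", 340_416, 399_593_659, 375_401_320, 0.939, 0.126),
]


def capacity_factor(verified_kwh, total_kw):
    """Verified annual kWh over what the rated capacity would produce in 8,760 hours."""
    return verified_kwh / (total_kw * HOURS_PER_YEAR)


def reporting_rr(verified_kwh, reporting_kwh):
    """Verified production as a fraction of the reporting (13.4% CF) estimate."""
    return verified_kwh / reporting_kwh


for name, kw, rep, ver, printed_rr, printed_cf in ROWS:
    cf, rr = capacity_factor(ver, kw), reporting_rr(ver, rep)
    ok = abs(cf - printed_cf) <= TOLERANCE and abs(rr - printed_rr) <= TOLERANCE
    print(f"{name}: CF {cf:.1%}, reporting RR {rr:.1%}, matches table: {ok}")
```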

Figure 2 plots evaluated production against application-specific production for each sampled site, showing how well installers estimated production. Note that the two plots have different scales: 0 to 300,000 kWh for smaller sites and 0 to 3,000,000 kWh for larger sites. The line in each plot corresponds to a realization rate of 100%, and the vertical distance from a point to the line is the estimation error for that site. Sites above the line have realization rates above 100%, and sites below the line have realization rates below 100%.

Figure 2. Plot of Evaluated Production vs. Application-specific Production
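The per-site quantities that Figure 2 visualizes can be sketched as follows, assuming evaluated production is on the vertical axis and application-specific production on the horizontal axis (so that points above the 100% line have realization rates above 100%). The three sites are hypothetical values for illustration, not study data.

```python
def site_rr(evaluated_kwh, app_specific_kwh):
    """Site realization rate: evaluated production over the application-specific estimate."""
    return evaluated_kwh / app_specific_kwh


def site_error(evaluated_kwh, app_specific_kwh):
    """Signed vertical distance from the 100% line; positive means the site beat its estimate."""
    return evaluated_kwh - app_specific_kwh


# Hypothetical sites: (evaluated kWh, application-specific kWh); illustrative values only
sites = {"site_a": (9_800, 9_000), "site_b": (7_200, 8_000), "site_c": (12_000, 12_000)}

for name, (evaluated, estimated) in sites.items():
    rr = site_rr(evaluated, estimated)
    position = "above" if rr > 1 else "below" if rr < 1 else "on"
    print(f"{name}: RR = {rr:.1%}, error = {site_error(evaluated, estimated):+,} kWh, {position} the line")
```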


2.3 Key Findings and Recommendations

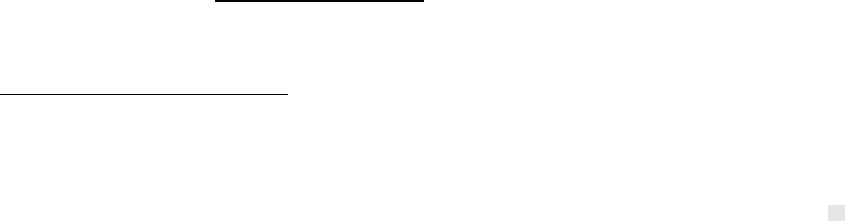

Key findings and recommendations from the impact evaluation are summarized in Table 9.

Table 9: Findings and Recommendations

| # | Key Findings | Recommendations |
|---|---|---|
| 1 | Capacity factor results for all groups except Long Island small purchase sites are somewhat lower than the planning value of 13.4%. The residential capacity factor of 12.4% is higher than the 2011-2016 NY-Sun residential impact of 12.1%. Small non-residential sites show an increased capacity factor of 13.1% (previously 12.1%), while the overall non-residential group performance has decreased from 13.2% to 12.7%. | NY-Sun plans to begin using application-specific production estimates in the NYSERDA reporting system for gross impact estimation in future years. This change, which carries over from a prior evaluation recommendation, should improve the accuracy of gross impact estimates and effectively increase realization rates for the program.¹⁶ Further accuracy could be achieved by applying the application-specific realization rates from this evaluation. |
| 2 | For most result categories, especially small systems (less than 200 kW), application-specific production estimates are more accurate than reporting estimates. This finding is consistent with prior evaluation results. The application-specific realization rates for small systems (excluding PPA sites) are near 100%, reflecting that the slightly lower verified capacity factor for this segment (due to less optimal physical characteristics, such as orientation and shading) is effectively captured in residential system planning models. The reporting realization rates for both large and small systems are below 100%, indicating that NYSERDA reporting production overestimates system production (due to finding #1, capacity factors below the reporting assumption of 13.4%). | |
| 3 | Data collection through the DG Integrated Database was lower than expected: • 25 of 114 sites in the persistence sample discontinued reporting production to the DG Integrated Database after the required 3-year post-installation period expired. • Many sites expected to be enrolled in the Large C&I program (due to capacity size) were enrolled in the Small Commercial program instead, which did not require DG Integrated Database data reporting. NY-Sun contractors were largely unable or unwilling to respond to phone surveys that sought system-specific installation and production information, preventing the collection of some information that would help interpret unexpectedly high or low production. | Establish expectations among NY-Sun participants for periodic data collection, especially among the persistence sample. The evaluation team will provide an updated persistence sampling plan for future evaluations to account for greater attrition than originally expected. |
| 4 | NYSERDA program tracking data has improved considerably in quality and completeness since the prior evaluation, which bridged a shift and update of tracking systems and protocols. Some additional improvements would benefit tracking and evaluability of the program and related programs. Related findings include: • Under the current reporting production estimation scheme and program design, the planned capacity factor differs by array type for large commercial systems, but array type information was unavailable for most sites in this evaluation period. • Contractor contact information was sometimes incomplete or outdated. • Several sites with unexpected capacity factors (which required additional detailed review) were co-located. These sites sometimes reported inaccurate capacity per system, although in some cases the total system size was accurate. | • Confirm that array type is consistently available in tracking data for future evaluations (as expected per program manager information). • Per program manager information, bifacial module installation has begun, so these modules will be included in future program evaluation populations, but they are not tracked in program tracking data. Consider adding a field to the application (if not currently available) and to Salesforce to track this information. • Updating contractor information when contractor companies or contacts are known to have changed would improve evaluation success. Similarly, standardizing contractor company names would improve evaluability and reduce the burden on contacts listed under multiple non-standardized company names. • Consider adding identifiers for co-located sites. • Consider tracking site participation in other NYSERDA and New York State programs, such as NYGB and Clean Energy Communities. This would strengthen tracking records and improve the evaluability of other solar PV programs. |

¹⁶ This recommendation carries over from the prior NY-Sun solar PV impact evaluation (2008 and 2011-2016).


3 Methods

This section summarizes the methods employed to collect production data for sampled sites and

analyze program performance.

3.1 Data Collection Approach

NYSERDA’s NY-Sun tracking database in Salesforce provided site-level account information,

including installed capacity (kW), application-specific (modeled) production estimations (kWh),

Total Solar Resource Fraction (TSRF), array type, system completion date, customer name and

contact information, purchase type, installation contractor, and region. The evaluation sample

frame was built from project information in this database.

The production data collection effort for this study sought to coordinate outreach efficiently among contractors and, as a secondary source that was ultimately deemed unnecessary, participants. The objectives of the data collection effort were two-fold:

• Collect data in kWh: first-year monthly production (13 months) and, for net-metered sites, pre-installation annual energy use.

• As a lower priority, complete a short survey with installation contractors to obtain any additional information required to understand the system and production data.

Data collection surveys and communication were conducted by experienced program evaluators

with expertise in solar photovoltaic systems.

3.1.1 Large C&I Data Collection

Large Commercial and Industrial (>200 kW non-residential) sites with publicly incentivized

generation systems provide internet-connected monitoring data to NYSERDA and the public

through the DG Integrated Database. Some large C&I projects’ production data was not available

through this resource, in which case the site was added to the list for contractor data collection

described under the small business sector data collection plan.

3.1.2 Residential and Small Business

Residential and small business participating sites’ production data was collected through outreach to installation contractors. Follow-up outreach to residential homeowners (for non-responding sites) was deemed unnecessary because the data collected from contractors was sufficient to meet precision requirements.

The impact evaluation team developed advance letters (provided in Appendix E) for initial

communication to contractors to state the purpose of the study, formalize the data request, and

provide instructions for obtaining the data from PV system(s).

NYSERDA provided a solar PV program evaluation website¹⁷ to encourage participation in the study and provide a medium for email communication.

Finally, the impact evaluation team developed a recruitment script, survey script, and data

collection instrument to inventory and track collected data and to standardize the communication

from multiple evaluators. The survey script is provided in Appendix D.

3.2 Analysis Approach

All verified gross impact results for this evaluation are based on the first 13 months of production data, starting with the month of installation; that first, potentially partial-production month is dropped, leaving 12 full months of production. All results are weather-normalized to account for differences in production caused by weather (solar insolation and precipitation) across years of installation.
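The 13-month window described above can be sketched as a small helper. It assumes monthly production is stored as a list whose first element is the installation month; the function name and the example series are hypothetical.

```python
def first_year_production(monthly_kwh):
    """First-year production from a series whose first entry is the installation month.

    The installation month may be only partially producing, so it is dropped and
    the next 12 months (months 2 through 13) are summed.
    """
    if len(monthly_kwh) < 13:
        raise ValueError("need 13 months of data starting with the installation month")
    return sum(monthly_kwh[1:13])


# Hypothetical 13-month series (kWh): a partial installation month, then 12 full months
series = [120, 310, 420, 560, 640, 700, 720, 690, 580, 450, 330, 260, 240]
print(first_year_production(series))  # → 5900
```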

The analysis of program data included cleaning and annualization of production data, calculation

of case weights for expansion of site data to the program population, and ratio estimation to

generate capacity factors with appropriate standard errors. Once the data collection and initial

analysis were complete, the impact evaluation team conducted a file review for sites with

particularly high or low capacity factors (above 14% or below 9%).
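The report does not spell out its standard-error formula, so the following is a sketch under an assumption: a combined ratio estimator for stratified simple random sampling, with the textbook Taylor-linearization variance and a finite population correction. For a capacity factor, y_j is evaluated first-year kWh and x_j is rated kW times 8,760 hours; for a realization rate, x_j would be the program production estimate. The relative precision at 90% confidence is then 1.645 times the standard error divided by the ratio. The function name and example data are hypothetical.

```python
import math
from statistics import variance

Z_90 = 1.645  # standard normal critical value for a two-sided 90% interval


def stratified_ratio(strata):
    """Combined ratio estimate, standard error, and relative precision at 90%.

    strata: list of (N_h, sample) where sample is a list of (y_j, x_j) pairs drawn
    by simple random sampling from stratum h.
    """
    num = den = 0.0
    for N_h, sample in strata:
        w_h = N_h / len(sample)  # expansion weight N_h / n_h
        num += w_h * sum(y for y, _ in sample)
        den += w_h * sum(x for _, x in sample)
    r_hat = num / den

    # Taylor-linearized variance built from residuals e_j = y_j - r_hat * x_j
    var_total = 0.0
    for N_h, sample in strata:
        n_h = len(sample)
        if n_h < 2:
            continue  # one completed site gives no within-stratum variance estimate
        residuals = [y - r_hat * x for y, x in sample]
        fpc = 1 - n_h / N_h  # finite population correction
        var_total += N_h**2 * fpc * variance(residuals) / n_h
    se = math.sqrt(var_total) / den
    return r_hat, se, Z_90 * se / r_hat


# Hypothetical two-stratum example: (N_h, [(evaluated kWh, rated kW * 8,760 h), ...])
example = [
    (500, [(7_300.0, 6.5 * 8760), (9_100.0, 8.0 * 8760), (5_400.0, 5.2 * 8760)]),
    (40, [(1_150_000.0, 1_000 * 8760), (560_000.0, 480 * 8760)]),
]
cf, se, rp = stratified_ratio(example)
print(f"CF = {cf:.1%}, SE = {se:.4f}, relative precision @90% = {rp:.1%}")
```

Skipping single-site strata understates variance for those strata; a production implementation would need an explicit rule for them (for example, collapsing strata).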

3.2.1 Production Data Analysis

The analysis calculated two key values from the production data for each evaluated site: capacity

factor and realization rate. Capacity factor provides a measure of system performance relative to

rated capacity. Many factors can influence capacity factor, such as installation direction and

angle, shading, temperature, and insolation. Capacity factor (CF) is calculated as:

$$
CF = \frac{\sum_{j \in V} \mathrm{kWh\_eval}_j \times W_j}{\sum_{j \in V} \mathrm{CAP}_j \times 8{,}760\ \mathrm{hrs} \times W_j}
$$

Where:

kWh_eval_j = First-year evaluated production for system j (kWh)

CAP_j = Rated DC capacity of system j (kW)

W_j = Weighting factor for system j

V = Evaluation sample

¹⁷ https://www.nyserda.ny.gov/About/Publications/Program-Planning-Status-and-Evaluation-Reports/Solar-Photovoltaic-Impact-Evaluation
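The capacity factor formula is a weighted ratio of sums, not an average of site-level capacity factors. A direct translation is sketched below; each site is given as (kWh_eval_j, CAP_j in kW, W_j), and the function name and inputs are hypothetical.

```python
HOURS_PER_YEAR = 8760


def weighted_capacity_factor(sites):
    """CF = sum(W_j * kWh_eval_j) / sum(W_j * CAP_j * 8,760) over the evaluation sample V.

    sites: iterable of (kwh_eval, cap_kw, weight) tuples.
    """
    sites = list(sites)
    numerator = sum(w * kwh for kwh, _, w in sites)
    denominator = sum(w * cap * HOURS_PER_YEAR for _, cap, w in sites)
    return numerator / denominator


# Hypothetical sample: two 10 kW systems at 12% and 15% site-level CF, weights 1 and 3
sample = [(10_512, 10, 1), (13_140, 10, 3)]
print(f"{weighted_capacity_factor(sample):.2%}")  # → 14.25%
```

An unweighted mean of the two site-level capacity factors would give 13.5%; the weights pull the result toward the site that represents more of the sample frame.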

Realization rates (RR) provide a measure of the degree to which program estimates of production

predict first year generation.

$$
RR = \frac{\sum_{j \in V} \mathrm{kWh\_eval}_j \times W_j}{\sum_{j \in V} \mathrm{kWh\_rep}_j \times W_j}
$$

Where:

kWh_eval_j = Evaluated first-year production for system j (kWh)

kWh_rep_j = Program production for system j (kWh)

In the application-specific realization rate, kWh_rep_j is based on the individual system estimates provided by contractors. Application-specific production planning estimates are generated through system models submitted by contractors as part of the program application process. In the reporting realization rate, kWh_rep_j is based on NYSERDA-estimated solar PV system production for purposes of external, program-level progress and benefits reporting to the PSC. Reporting production estimates are based on a 13.4% capacity factor applied to the planned system size, which was used by NYSERDA to estimate and report system production.
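Because both realization rates share the same numerator, they differ only in which estimate sits in the denominator. The sketch below evaluates the RR formula against both bases for two hypothetical sites, building the reporting estimate as 13.4% × planned kW × 8,760 hours. When the reporting estimate uses the same kW as the capacity factor, the reporting realization rate reduces to the weighted capacity factor divided by 13.4%, which is why reporting realization rates fall below 100% whenever capacity factors fall below 13.4%.

```python
HOURS_PER_YEAR = 8760
REPORTING_CF = 0.134  # planning capacity factor behind NYSERDA reporting production


def reporting_estimate(planned_kw):
    """Reporting production: 13.4% capacity factor applied to planned system size."""
    return REPORTING_CF * planned_kw * HOURS_PER_YEAR


def weighted_rr(kwh_eval, kwh_rep, weights):
    """RR = sum(W_j * kWh_eval_j) / sum(W_j * kWh_rep_j)."""
    numerator = sum(w * e for e, w in zip(kwh_eval, weights))
    denominator = sum(w * r for r, w in zip(kwh_rep, weights))
    return numerator / denominator


# Hypothetical sites: planned kW, evaluated kWh (both at a 12.5% CF),
# contractor (application-specific) estimates, and sample weights
planned_kw = [6, 8]
evaluated = [6_570, 8_760]
app_specific = [6_500, 8_900]
weights = [100, 50]

rr_app = weighted_rr(evaluated, app_specific, weights)
rr_rep = weighted_rr(evaluated, [reporting_estimate(kw) for kw in planned_kw], weights)
print(f"application-specific RR = {rr_app:.1%}, reporting RR = {rr_rep:.1%}")
```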

The method for calculating the sample weights, W_j, for each stratum is described below. In lay terms, the weight is simply the number of units in the sample frame (N) divided by the number of completed units in the sample (n). The interpretation of the weight is that each completed sample unit represents N/n units in the sample frame; every system j in stratum x therefore carries that stratum's weight, W_j = W_x.

The weight W_x is calculated as

$$
W_x = \frac{N_x}{n_x}
$$

Where:

N_x = Number of units of analysis in stratum x

n_x = Number of completed sample units of analysis in stratum x
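As an illustration, the weights can be computed from the sample frame (N) and evaluated sample (n) counts in Table 8, under the assumption that those region, size, and purchase-type rows are the sampling strata (the actual stratification may be finer). Multiplying each stratum's weight by its completed sites reproduces the full sample frame.

```python
# (sample frame N_x, completed sample n_x) by stratum, copied from Table 8.
# Assumption: Table 8's rows are the sampling strata.
STRATA = {
    ("Con Ed", "Below 200 kW", "Lease"): (2751, 22),
    ("Con Ed", "Below 200 kW", "PPA"): (723, 21),
    ("Con Ed", "Below 200 kW", "Purchase"): (1851, 39),
    ("Con Ed", "> 200 kW", "All"): (12, 10),
    ("Upstate", "Below 200 kW", "Lease"): (3914, 18),
    ("Upstate", "Below 200 kW", "PPA"): (1977, 34),
    ("Upstate", "Below 200 kW", "Purchase"): (6546, 41),
    ("Upstate", "> 200 kW", "All"): (103, 60),
    ("Long Island", "Below 200 kW", "Lease"): (4817, 23),
    ("Long Island", "Below 200 kW", "PPA"): (1991, 22),
    ("Long Island", "Below 200 kW", "Purchase"): (4279, 46),
    ("Long Island", "> 200 kW", "All"): (36, 23),
}

# W_x = N_x / n_x: how many frame units each completed site stands in for
weights = {stratum: N / n for stratum, (N, n) in STRATA.items()}

# Summing the weights over all completed sites recovers the sample frame size
represented = sum(weights[s] * n for s, (_, n) in STRATA.items())
completes = sum(n for _, n in STRATA.values())
print(f"{represented:,.0f} frame units represented by {completes} completed sites")
```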

3.2.2 File Reviews

The evaluation team conducted a file and QC data review to determine the reasons for capacity factors and realization rates outside of the expected range (capacity factors above 14% or below 9%), and subsequently cleaned the production data. NYSERDA provided production model files, applications, site documentation, and QC data for these systems, for comparison to the collected production data and the system details collected through customer surveys. The team compared the shading analysis and production estimation files from the system design with both the program-reported generation and the actual generation collected for this study, to determine whether inaccurate modeled generation or inaccurate metered data¹⁸ caused the unreasonably high or low capacity factors or realization rates. The team also reviewed QA/QC documentation, where available, to determine whether differences between the designed and built systems were the source of unreasonably high or low realization rates.

Where file reviews did not illuminate the cause or reasonability of site performance outside of the

expected range, the team conducted follow-up phone calls with program participants.
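The screening rule that triggered a file review (capacity factor above 14% or below 9%) can be sketched as below; the function name and the example sites are hypothetical.

```python
HOURS_PER_YEAR = 8760
CF_LOW, CF_HIGH = 0.09, 0.14  # expected range; sites outside it went to file review


def needs_file_review(first_year_kwh, rated_kw):
    """Flag a site whose first-year capacity factor falls below 9% or above 14%."""
    cf = first_year_kwh / (rated_kw * HOURS_PER_YEAR)
    return cf < CF_LOW or cf > CF_HIGH


# Hypothetical 7 kW sites: (label, first-year kWh)
for label, kwh in [("low", 4_000), ("typical", 7_500), ("high", 9_000)]:
    print(label, needs_file_review(kwh, 7))
```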

3.2.3 Weather Normalization

The evaluation team normalized production and capacity factors for weather differences (solar

insolation, temperature, snow, etc.) across installation years. The weather-normalized values

represent performance under typical weather conditions and provide a more meaningful basis for

comparison against the reported/expected production that was based on modeling.

The normalization approach modeled a set of representative solar PV sites (residential, small commercial, and large commercial) in the System Advisor Model (SAM) production estimation software, using common characteristics and weather data, including solar insolation, temperature, and snow accumulation. For each month of each year in the first-year production period for evaluated projects (spanning March 2016 through May 2019), the model results were used to calculate the ratio of estimated production for the typical meteorological year (TMY) month to the estimated production using actual weather.¹⁹ The observed production quantity for each site and month was then adjusted to TMY conditions by multiplying each observed monthly quantity by the normalization ratio for that region and month. Weather-normalization factors are provided in Appendix B.
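The adjustment described above amounts to one multiplication per site-month. The sketch keys ratios by (region, year, month), which is an assumption about how the factors in Appendix B are organized; the function names and the modeled and observed values are hypothetical.

```python
def normalization_ratio(tmy_model_kwh, actual_model_kwh):
    """Modeled production under TMY weather divided by modeled production under actual weather."""
    return tmy_model_kwh / actual_model_kwh


def normalize(observed, ratios):
    """Scale each observed monthly kWh by the ratio for its (region, year, month) key."""
    return {key: kwh * ratios[key] for key, kwh in observed.items()}


# Hypothetical values: the model produced 800 kWh under actual (cloudier than typical)
# March 2017 weather and 1,000 kWh under TMY weather for the same representative site.
ratios = {("Upstate", 2017, 3): normalization_ratio(1_000.0, 800.0)}
observed = {("Upstate", 2017, 3): 640.0}
print(normalize(observed, ratios))  # → {('Upstate', 2017, 3): 800.0}
```

A ratio above 1 scales up production observed in a worse-than-typical month; a ratio below 1 scales down production from an unusually sunny month.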

3.2.4 Expansion of Production Results to Sample Frame

The final weather-normalized production results were expanded to the sample frame through a set of sample weights based on the sample design stratification. Each weight is specific to an individual stratum and is calculated as the number of units in the sample frame (N) for the stratum divided by the number of completed units in the sample (n) for the stratum. The interpretation of the weight is that each completed sample unit represents N/n units in the sample frame.

¹⁸ Inaccurate metered data could be caused by metering of multiple projects on a single meter, net-metered data, or poorly captured data or meter failure.

¹⁹ Hourly TMY and actual weather data came from a combination of the Physical Solar Model (PSM) v3 provided by the National Solar Radiation Database (NSRDB) and SolarAnywhere®. Monthly snow accumulation and frequency of snow events came from NOAA weather data.