
Decrease of Entropy and Chemical Reactions

Yi-Fang Chang

Department of Physics, Yunnan University, Kunming, 650091, China

(e-mail: )

Abstract

Chemical reactions are very complex and include oscillation, condensation, catalysis, self-organization, etc. In these cases the entropy may either increase or decrease. The second law of thermodynamics is based on an isolated system and on statistical independence. If fluctuations magnified by internal interactions exist in the system, the entropy may decrease. Chemical reactions involve various internal interactions, so some ordering processes with a decrease of entropy are possible in an isolated system. As examples, a simplified Fokker-Planck equation is solved, and hysteresis in the form of limit cycles is discussed.

Key words: entropy, chemical reaction, internal interaction, oscillation, catalyst.

PACS: 05.70.-a, 82.60.-s, 05.20.-y.

1. Introduction

Thermodynamic entropy is used as a measure of disorder. According to the second law of thermodynamics, the entropy of the universe seems to be constantly increasing. Mixing two pure substances, or dissolving one substance in another, usually increases the entropy. In a chemical reaction, when the temperature of any substance increases, molecular motion increases and so does the entropy. Conversely, if the temperature of a substance is lowered, molecular motion decreases and the entropy should decrease. In nature, the general tendency is toward disorder.
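The two textbook statements above (mixing and heating raise the entropy, cooling lowers it) can be made concrete with a minimal sketch. It assumes the standard ideal-case formulas, the ideal entropy of mixing ΔS = −R Σ n_i ln x_i and the constant-heat-capacity result ΔS = n C_p ln(T2/T1); neither formula is taken from this paper, and the heat capacity 29.1 J/(K mol) is only an illustrative value.

```python
import math

R = 8.314462618  # molar gas constant, J/(K mol)

def mixing_entropy(moles):
    """Ideal entropy of mixing, dS = -R * sum(n_i ln x_i); never negative."""
    n_total = sum(moles)
    return -R * sum(n * math.log(n / n_total) for n in moles if n > 0)

def heating_entropy(n, cp, t1, t2):
    """dS = n * cp * ln(t2/t1) for heating at constant pressure with constant cp."""
    return n * cp * math.log(t2 / t1)

print(f"mix 1 mol + 1 mol: {mixing_entropy([1.0, 1.0]):.2f} J/K")  # equals 2 R ln 2
print(f"heat 300 K -> 400 K: {heating_entropy(1.0, 29.1, 300.0, 400.0):+.2f} J/K")
print(f"cool 400 K -> 300 K: {heating_entropy(1.0, 29.1, 400.0, 300.0):+.2f} J/K")
```

The sign of the heating term follows directly from ln(T2/T1), which is why cooling gives a negative entropy change.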

The usual development of the second law of thermodynamics has been based on open systems, for example, the theory of dissipative structures. Nettleton discussed an extended thermodynamics which introduces the dissipative fluxes of classical nonequilibrium thermodynamics and modifies the original rational thermodynamics with a nonclassical entropy flux [1]. The Gibbs equation derived from maximum entropy provides a statistical basis for differing forms of extended thermodynamics.

Recently, Gaveau et al. [2] discussed the variational nonequilibrium thermodynamics of reaction-diffusion systems. Cangialosi et al. [3] provided a new approach to describing the component segmental dynamics of miscible polymer blends by combining the configurational entropy with the self-concentration; their predictions agree excellently with the experimental data. Stier [4] calculated the entropy production as a function of time in a closed system during reversible polymerization in nonideal systems and found that the nature of the activity coefficient strongly affects the curvature of the entropy production.

In particular, Cybulski et al. [5] analyzed a system of two different types of Brownian particles confined in a cubic box with periodic boundary conditions. Particles of different types annihilate when they come into close contact. The annihilation rate is matched by the birth rate, so the total number of particles of each kind is conserved. The system evolves toward the stationary particle distributions that minimize the Rényi entropy production, which decreases monotonically during the evolution even though the topology and geometry of the interface exhibit abrupt and violent changes.

Kinoshita et al. [6] developed an efficient method to evaluate, in an analytical manner, the translational and orientational contributions to the solute-water pair-correlation entropy, which is a major component of the hydration entropy. They studied the effects of the solute-water attractive interaction, with a water molecule modeled as a hard sphere. As the hard-sphere diameter becomes larger, the percentage of the orientational contribution first increases, reaches a maximum at a value that depends on the strength of the solute-water attractive interaction, and then decreases toward a limiting value. The percentage of the orientational contribution decreases progressively as the solute-water attractive interaction becomes stronger. They discussed the physical origin of the maximum orientational restriction.

In fact, the second law of thermodynamics holds only for an isolated system, and it is a probabilistic law: it states that the transition probability from the chaotic molecular motion to the regular motion of a macroscopic body is very small. Thermodynamics is based on statistics, in which a basic principle is statistical independence: the state of one subsystem does not affect the probabilities of the various states of the other subsystems, because different subsystems may be regarded as weakly interacting [7]. This implies that the various interactions among these subsystems are not considered. However, if various complex internal mechanisms and interactions cannot be neglected, a state with smaller entropy (for example, a self-organized structure) may be able to appear. In these cases the statistics and the second law of thermodynamics should be different [8-10]. For instance, the entropy of an isolated fluid whose evolution depends on viscous pressure and internal friction does not increase monotonically [11].
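Statistical independence is exactly what makes entropy additive. A minimal sketch, assuming two hypothetical two-state subsystems A and B described by a joint probability table and the Gibbs/Shannon entropy in units of k: the quantity S_A + S_B − S_AB vanishes for independent subsystems and is positive once they are correlated, so the joint entropy falls below the additive sum. This illustrates only the standard subadditivity property; it is not the paper's interacting term dS_i, and the function name `entropy_deficit` is our own.

```python
import math

def shannon(probs):
    """Gibbs/Shannon entropy -sum p ln p, in units of k."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def entropy_deficit(joint):
    """S_A + S_B - S_AB for a joint table joint[a][b]; zero iff A and B are independent."""
    p_a = [sum(row) for row in joint]          # marginal of subsystem A
    p_b = [sum(col) for col in zip(*joint)]    # marginal of subsystem B
    s_ab = shannon([p for row in joint for p in row])
    return shannon(p_a) + shannon(p_b) - s_ab

independent = [[0.25, 0.25], [0.25, 0.25]]
correlated = [[0.45, 0.05], [0.05, 0.45]]  # hypothetical "interacting" subsystems
print(f"independent: {entropy_deficit(independent):.3g}")  # about zero
print(f"correlated:  {entropy_deficit(correlated):.3f}")   # positive
```

Both tables have the same uniform marginals, so the entire difference comes from the correlation between A and B.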

According to the Boltzmann and Einstein fluctuation theories, all possible microscopic states of a system are equally probable in thermodynamic equilibrium, and the entropy finally tends to a maximum value. In statistical mechanics fluctuations may occur, and fluctuations away from equilibrium always decrease the entropy [7,12-14]. Under certain conditions fluctuations can be magnified [12-14].
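That equilibrium fluctuations lower the entropy can be seen in a small system. The sketch below uses the Ehrenfest urn model, a standard toy model chosen here for illustration rather than taken from the paper: N balls sit in two urns, at each step one ball chosen at random switches urns, and the Boltzmann entropy is S/k = ln C(N, n_left). After relaxing toward the maximum at n_left = N/2, the entropy keeps fluctuating downward on many steps. N = 20, the step count and the random seed are arbitrary choices.

```python
import math
import random

def ehrenfest_entropy(n_balls, steps, seed=0):
    """Ehrenfest urn: at each step one ball, chosen uniformly, switches urns.
    Returns S/k = ln C(N, n_left) after every step, starting with all balls on the left."""
    rng = random.Random(seed)
    n_left = n_balls  # fully ordered initial state, S = 0
    history = []
    for _ in range(steps):
        if rng.randrange(n_balls) < n_left:  # the chosen ball is in the left urn
            n_left -= 1
        else:
            n_left += 1
        history.append(math.log(math.comb(n_balls, n_left)))
    return history

N = 20
S = ehrenfest_entropy(N, steps=2000)
S_max = math.log(math.comb(N, N // 2))
drops = sum(1 for a, b in zip(S, S[1:]) if b < a)
print(f"S_max = {S_max:.3f}, final S = {S[-1]:.3f}, steps with dS < 0: {drops} of {len(S) - 1}")
```

For small N these downward fluctuations are frequent; their relative size shrinks as N grows, which is why they are negligible for macroscopic bodies unless something magnifies them.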

Chichigina [15] proposed a new method that describes selective excitation as the addition of information to a thermodynamically isolated system of atoms, which decreases the entropy of the system. This information approach is used to calculate the light-induced drift velocity, and the computational results agree well with experimental data.

2. Fokker-Planck Equation, Oscillation and Change of Entropy

There are various internal interactions in chemical reactions, and noise and internal fluctuations often cannot be neglected. Nonlinear chemical reactions belong to nonequilibrium phase transformations, and magnified fluctuations may give rise to far-from-equilibrium states.

It is known that the entropy may either increase or decrease in chemical reactions [16,17]. If heterogeneous chemical reactions, multistability and hysteresis loops are described by an entropy function that is not monotonically increasing, these phenomena may reveal new properties of entropy.

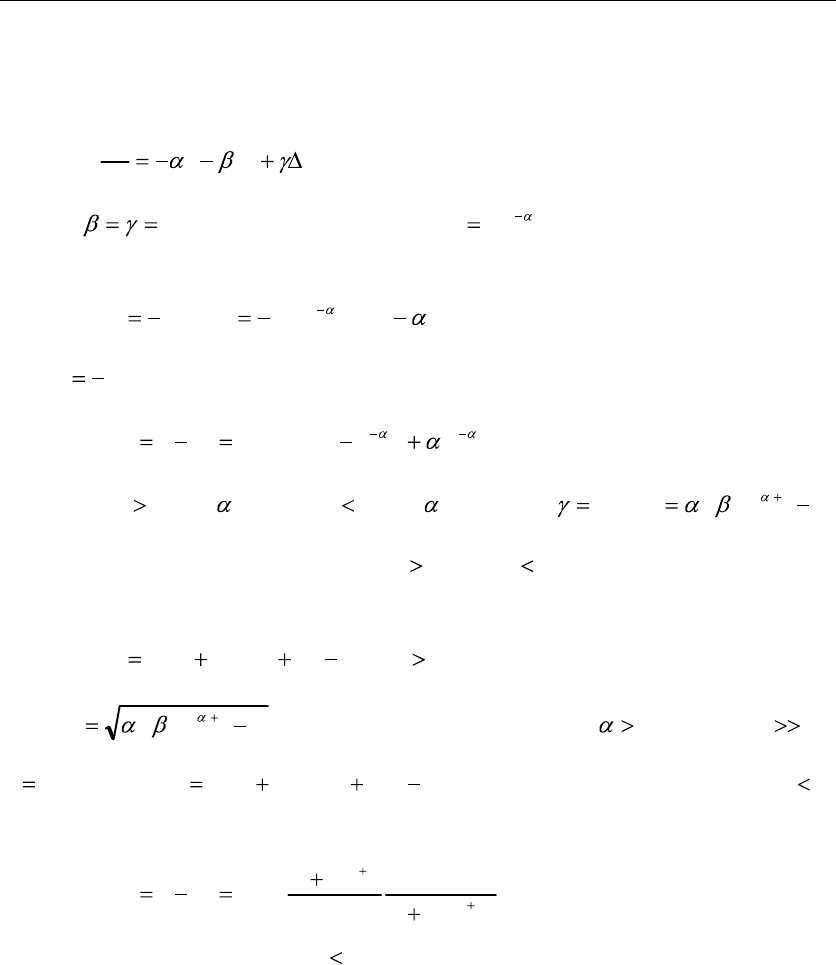

Gaveau et al. introduced a standard master equation [2,18]:

$$\frac{\partial P(\{n_i\},t)}{\partial t}=\sum_{r}\left[W_r(\{n_i-r_i\})\,P(\{n_i-r_i\},t)-W_r(\{n_i\})\,P(\{n_i\},t)\right], \qquad (1)$$

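and an approximate Fokker-Planck equation:

$$\frac{\partial p(x,t)}{\partial t}=-\sum_{i=1}^{s}\frac{\partial}{\partial x_i}\left[A_i(x)\,p(x,t)\right]+\frac{1}{2V}\sum_{i,j=1}^{s}\frac{\partial^{2}}{\partial x_i\,\partial x_j}\left[D_{ij}(x)\,p(x,t)\right], \qquad (2)$$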

where p(x,t) is the probability density function, A_i(x) are the drift coefficients, D_ij(x) are the diffusion coefficients and V is the system volume. This is a kinetic equation for the distribution function [12,19].

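We solve a simplified Fokker-Planck equation:

$$\frac{dp}{dt}=\lambda p-\beta p^{3}. \qquad (3)$$

1. When $\beta=0$, the distribution function is $p=Ce^{\lambda t}$. It corresponds to the Maxwell-Boltzmann distribution, and its entropy is

$$s=-Kp\ln p=-KCe^{\lambda t}(\ln C+\lambda t). \qquad (4)$$

Let $s_0=-KC\ln C$, the value at $t=0$, so

$$ds=s-s_0=-KC\left[(e^{\lambda t}-1)\ln C+\lambda t\,e^{\lambda t}\right]. \qquad (5)$$

Therefore, once the term $\lambda t\,e^{\lambda t}$ dominates (for example, for $C$ close to 1), $ds<0$ for $\lambda>0$ and $ds>0$ for $\lambda<0$.

2. When $\beta\neq 0$, the substitution $u=p^{-2}$ turns Eq. (3) into the linear equation $du/dt=-2\lambda u+2\beta$. For $\beta>0$ and $\lambda<0$ its solution is

$$p^{2}=\frac{-\lambda}{\beta\left(e^{c-2\lambda t}-1\right)},$$

where $c>0$ is an integration constant. In this case the equation has two solutions, $p>0$ and $p<0$, but the latter is meaningless. It corresponds to the Bose-Einstein distribution [13,20], and its entropy is

$$s=K\left[(1+p)\ln(1+p)-p\ln p\right]>0. \qquad (6)$$

Here $p$ decreases with increasing $t$ for $\lambda<0$. When $p\to 0$, $s\approx -Kp\ln p$. Let $s_0=K[(1+p_0)\ln(1+p_0)-p_0\ln p_0]$, where $p_0$ corresponds to $t=t_0$, so

$$ds=s-s_0=K\ln\frac{(1+p)^{1+p}\,p_0^{\,p_0}}{(1+p_0)^{1+p_0}\,p^{\,p}}. \qquad (7)$$

Since $\partial s/\partial p=K\ln[(1+p)/p]>0$ while $p$ decreases with time, this is a decreasing function of $t$, i.e., $ds<0$ for $t>t_0$. This is consistent with condensation in Bose-Einstein statistics. Further, more general equations with nonlinear terms, which often correspond to various interactions, may exhibit different phase transformations.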
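The two cases of Eq. (3) can be checked numerically. The sketch assumes Eq. (3) in the form dp/dt = λp − βp³ (λ and β are our labels for its two coefficients) and hypothetical values λ = −1, β = 1, c = 0.5, K = 1. It integrates the equation with classical fourth-order Runge-Kutta, compares the result with the closed form p² = −λ/[β(e^{c−2λt} − 1)], confirms that the Bose-Einstein entropy s = K[(1+p)ln(1+p) − p ln p] decreases along the trajectory, and checks Eq. (5) of case 1 against s − s0 computed directly from p = Ce^{λt}.

```python
import math

def rhs(p, lam, beta):
    """Eq. (3) in the assumed form dp/dt = lam*p - beta*p**3."""
    return lam * p - beta * p ** 3

def rk4_trajectory(p0, lam, beta, t_end, steps):
    """Classical fourth-order Runge-Kutta; returns a list of (t, p) samples."""
    h = t_end / steps
    p = p0
    samples = [(0.0, p)]
    for i in range(1, steps + 1):
        k1 = rhs(p, lam, beta)
        k2 = rhs(p + 0.5 * h * k1, lam, beta)
        k3 = rhs(p + 0.5 * h * k2, lam, beta)
        k4 = rhs(p + h * k3, lam, beta)
        p += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        samples.append((i * h, p))
    return samples

def case2_closed_form(t, lam, beta, c):
    """p(t) for beta > 0, lam < 0, c > 0: p^2 = -lam / (beta * (exp(c - 2*lam*t) - 1))."""
    return math.sqrt(-lam / (beta * (math.exp(c - 2 * lam * t) - 1)))

def be_entropy(p, K=1.0):
    """Eq. (6): s = K[(1 + p) ln(1 + p) - p ln p]."""
    return K * ((1 + p) * math.log(1 + p) - p * math.log(p))

def case1_ds(t, lam, C, K=1.0):
    """Eq. (5): ds = -K C [(e^{lam t} - 1) ln C + lam t e^{lam t}]."""
    return -K * C * ((math.exp(lam * t) - 1) * math.log(C) + lam * t * math.exp(lam * t))

# Case 2 with hypothetical parameters: integrate numerically and compare with the closed form.
lam, beta, c = -1.0, 1.0, 0.5
traj = rk4_trajectory(case2_closed_form(0.0, lam, beta, c), lam, beta, t_end=3.0, steps=3000)
max_err = max(abs(p - case2_closed_form(t, lam, beta, c)) for t, p in traj)
entropies = [be_entropy(p) for _, p in traj]
print(f"max |RK4 - closed form| = {max_err:.1e}")
print(f"s(t=0) = {entropies[0]:.4f}, s(t=3) = {entropies[-1]:.4f}")

# Case 1: Eq. (5) agrees with s - s0 computed directly from p = C e^{lam t} (K = 1).
C, lam1, t1 = 0.8, 0.5, 2.0
p1 = C * math.exp(lam1 * t1)
direct = -p1 * math.log(p1) + C * math.log(C)
print(f"Eq. (5): {case1_ds(t1, lam1, C):.6f}, direct: {direct:.6f}")
```

A numerical integrator is used alongside the closed form so that the substitution u = p⁻² can be checked independently rather than taken on trust.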

Even more intriguing among chemical reactions is the Belousov-Zhabotinskii (BZ) class of oscillating reactions, some of which can continue for hours [21-24]. Field and Dutt et al. discussed the limit cycles and the discontinuous changes of entropy production in the reversible Oregonator [25,26], which may describe the oscillations in the BZ reaction.

Chemical oscillations, as one type of limit cycle, imply at least that the entropy cannot increase monotonically.

The Fokker-Planck equation may take the form of a reaction-diffusion equation, and it can be extended to a set of several equations. Two such equations may describe the Brusselator in chemical reactions, and three may describe the Oregonator and the BZ reaction.
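To make the two-equation case concrete, the Brusselator can be written in its standard textbook form dx/dt = a − (b+1)x + x²y, dy/dt = bx − x²y. These equations are an assumption here, since the paper does not give them. The steady state (a, b/a) loses stability at the Hopf point b = 1 + a², beyond which a limit cycle appears. The sketch integrates the system with RK4 for hypothetical values a = 1 with b = 1.5 (below the Hopf point) and b = 3 (above it) and reports the late-time oscillation amplitude of x.

```python
def brusselator_tail(a, b, x0, y0, t_end=200.0, h=0.01, tail=20.0):
    """Integrate dx/dt = a - (b + 1) x + x^2 y, dy/dt = b x - x^2 y with RK4.
    Returns the x values over the final `tail` time units."""
    def f(x, y):
        return a - (b + 1) * x + x * x * y, b * x - x * x * y

    x, y = x0, y0
    steps = int(round(t_end / h))
    keep_from = steps - int(round(tail / h))
    xs = []
    for i in range(steps):
        k1x, k1y = f(x, y)
        k2x, k2y = f(x + 0.5 * h * k1x, y + 0.5 * h * k1y)
        k3x, k3y = f(x + 0.5 * h * k2x, y + 0.5 * h * k2y)
        k4x, k4y = f(x + h * k3x, y + h * k3y)
        x += h * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        y += h * (k1y + 2 * k2y + 2 * k3y + k4y) / 6
        if i >= keep_from:
            xs.append(x)
    return xs

a = 1.0
for b in (1.5, 3.0):  # Hopf point at b = 1 + a**2 = 2
    xs = brusselator_tail(a, b, x0=1.2, y0=1.0)
    print(f"b = {b}: late-time amplitude of x = {max(xs) - min(xs):.3g}")
```

Below the Hopf point the oscillation dies out and the system settles on its steady state; above it a sustained oscillation (limit cycle) remains, which is the kind of behavior that rules out a monotonic approach to a final state.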

Dutt et al. reported that the entropy production changes discontinuously at Hopf bifurcation points leading to limit cycle oscillations out of an unstable steady state [27]. In some cases the actual changes of systems controlled by parameter values can be achieved through the existing dynamical processes inside the systems, in which a hysteresis loop may form spontaneously. The character of the hysteresis loop can make the states of the system change periodically and spontaneously with time.

Steady states in Rayleigh-Bénard convection have exhibited hysteresis in entropy production [28]. Dutt calculated the entropy production at different steady states [29], as well as the average entropy production for the unstable steady states that generate limit cycle oscillations. The hysteresis in the entropy production as a function of the rate constant k of the steps in the Oregonator (k decreasing or increasing) as time evolves showed the stable and unstable (limit cycle) phases. In this case the Floquet exponent is positive or negative for different critical points of the Hopf bifurcations, i.e., the entropy production has two values for the same chemical reaction. The entropy production is calculated in the model in a subcritical Hopf bifurcation region. Dutt et al. calculated the entropy production for steady and oscillatory states in another region [27,30,31].

Dutt discussed the hysteresis in entropy production in a model exhibiting oscillations in the BZ reaction, and a bistability in the entropy production between a steady state and an oscillatory state in the neighborhood of a subcritical Hopf bifurcation. He had previously identified this region by a perturbation method. The numerical results present a thermodynamic formulation of multistable structures in a nonlinear dissipative system [29].

3. Decrease of Entropy and Condensed Process

We have discussed fluctuations magnified by internal interactions, etc., for which equal probability of the microstates no longer holds. In this case the entropy as a function of time would be defined as [10]

$$S(t)=-k\sum_r P_r(t)\ln P_r(t). \qquad (8)$$

From this, a possible decrease of entropy can be calculated for an internal condensation process. Internal interactions, which make statistical independence inapplicable, may cause a decrease of entropy in an isolated system. This possibility has been studied for attractive processes, internal energy, system entropy, nonlinear interactions, etc. An isolated system may form a self-organized structure [8-10].
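Eq. (8) can be evaluated on a concrete master equation. The sketch assumes a hypothetical one-variable birth-death chain, i.e., Eq. (1) with jumps r = ±1 and constant rates (`up` and `down` are names of our own choosing), integrated with the explicit Euler method. It starts from the equal-probability distribution, where Eq. (8) is largest; because the stationary distribution of this chain is not uniform, S(t) falls as the system relaxes. The sketch illustrates only that Eq. (8) can decrease once equal probability fails; it does not model a specific chemical system.

```python
import math

def gibbs_entropy(p, k=1.0):
    """Eq. (8): S = -k * sum_r P_r ln P_r."""
    return -k * sum(x * math.log(x) for x in p if x > 0)

def evolve_master(n_states, up, down, t_end, dt=1e-3):
    """Explicit-Euler integration of a birth-death master equation with jumps n -> n +/- 1.
    Starts from the equal-probability distribution; returns (final P, entropy history)."""
    p = [1.0 / n_states] * n_states
    history = [gibbs_entropy(p)]
    for _ in range(int(round(t_end / dt))):
        dp = [0.0] * n_states
        for n in range(n_states):
            if n + 1 < n_states:  # probability flow for the jump n -> n+1
                flow = up * p[n]
                dp[n] -= flow
                dp[n + 1] += flow
            if n > 0:  # probability flow for the jump n -> n-1
                flow = down * p[n]
                dp[n] -= flow
                dp[n - 1] += flow
        p = [x + dt * d for x, d in zip(p, dp)]
        history.append(gibbs_entropy(p))
    return p, history

p_final, S_hist = evolve_master(n_states=10, up=1.0, down=3.0, t_end=20.0)
print(f"S(0) = {S_hist[0]:.4f} (= ln 10), S(end) = {S_hist[-1]:.4f}")
```

With down = 3 × up the stationary distribution is geometric, P_n proportional to (1/3)^n, so the final entropy is well below ln 10 while total probability is conserved at every step.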

For systems with internal interactions, we have proposed that the total entropy change should be extended to [10]

$$dS=dS_a+dS_i, \qquad (9)$$

where $dS_a$ is the additive part of the entropy and $dS_i$ is the interacting part. Eq. (9) is similar to the well-known formula

$$dS=d_eS+d_iS \qquad (10)$$

in Prigogine's theory of dissipative structures. The two formulae apply to internal and external interactions, respectively.

The entropy of nonideal gases is (in units of Boltzmann's constant)

$$S=S_{id}+N\ln\left(1-\frac{Nb}{V}\right). \qquad (11)$$

This is smaller than the entropy of an ideal gas, since $b$, which is four times the volume of an atom, is positive ($b>0$). This corresponds to the existence of interactions between the gas molecules, and the average force between the molecules is attractive [13].
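Per particle, Eq. (11) reads (S − S_id)/(Nk) = ln(1 − nb) with number density n = N/V. A minimal sketch, assuming b = 4 × (4/3)πr³ as stated in the text and a hypothetical atomic radius r = 1.9 × 10⁻¹⁰ m, evaluates this correction at the ideal-gas density for 1 atm and 300 K and at 10 and 100 times that density.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def vdw_entropy_correction_per_particle(number_density, atom_radius):
    """(S - S_id)/(N k) = ln(1 - n b), with b taken as four times the atomic volume."""
    b = 4.0 * (4.0 / 3.0) * math.pi * atom_radius ** 3
    return math.log(1.0 - number_density * b)

r = 1.9e-10  # hypothetical atomic radius in metres
n_gas = 101325.0 / (K_B * 300.0)  # ideal-gas number density at 1 atm and 300 K
for factor in (1, 10, 100):
    dS = vdw_entropy_correction_per_particle(factor * n_gas, r)
    print(f"density x{factor:>3}: (S - S_id)/(N k) = {dS:.4f}")
```

The correction is always negative and grows in magnitude with density, vanishing only in the dilute limit.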

The entropy of a solid at low temperatures is

$$S=\frac{2\pi^{2}VT^{3}}{15(\hbar u)^{3}}, \qquad (12)$$

where $u$ is an average velocity of sound,

so $dS<0$ for $dT<0$. The free energy of a plasma, including its correlation part, is [13]:

$$F=F_{id}-\frac{2e^{3}}{3}\left(\frac{\pi}{VT}\right)^{1/2}\left(\sum_a N_a z_a^{2}\right)^{3/2}. \qquad (13)$$

Correspondingly, the entropy is

$$dS=S-S_{id}=-\frac{e^{3}}{3}\left(\frac{\pi}{V}\right)^{1/2}T^{-3/2}\left(\sum_a N_a z_a^{2}\right)^{3/2}<0. \qquad (14)$$

In this case there are attractive electric forces between the oppositely charged particles of the plasma.
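The step from the plasma free energy to its entropy is S = −∂F/∂T applied to the correlation term. A minimal numerical check, in the same units as the free energy above (temperature in energy units, k = 1), compares the closed-form correlation entropy −(e³/3)(π/V)^{1/2} T^{−3/2} Q^{3/2} with a central finite difference of the correlation free energy −(2e³/3)(π/(VT))^{1/2} Q^{3/2}, where Q = Σ_a N_a z_a². The values of e, V, T and Q are arbitrary placeholders, not physical data.

```python
import math

def f_corr(T, V, e, Q):
    """Correlation free energy: -(2 e^3/3) (pi/(V T))^{1/2} Q^{3/2}, Q = sum_a N_a z_a^2."""
    return -(2.0 * e ** 3 / 3.0) * math.sqrt(math.pi / (V * T)) * Q ** 1.5

def s_corr(T, V, e, Q):
    """Closed form: S - S_id = -(e^3/3) (pi/V)^{1/2} T^{-3/2} Q^{3/2}."""
    return -(e ** 3 / 3.0) * math.sqrt(math.pi / V) * T ** -1.5 * Q ** 1.5

def s_corr_numeric(T, V, e, Q, h=1e-6):
    """S - S_id = -d(F_corr)/dT, evaluated by a central finite difference."""
    return -(f_corr(T + h, V, e, Q) - f_corr(T - h, V, e, Q)) / (2 * h)

T, V, e, Q = 2.0, 1.5, 1.0, 4.0  # placeholder values, not physical data
print(f"closed form: {s_corr(T, V, e, Q):.8f}, finite difference: {s_corr_numeric(T, V, e, Q):.8f}")
```

Since F_corr is proportional to T^{−1/2}, its temperature derivative brings down a factor −1/2, which is where the 2/3 in the free energy becomes the 1/3 in the entropy.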

According to

$$S=k\ln\Omega, \qquad (15)$$

if an isolated system contains n particles, each in a different energy state, then $\Omega_1=n!$. Assume that an internal attractive interaction exists in the system and the n particles cluster into m particles. If these are still in different energy states, $\Omega_2=m!$. Therefore, in this process

$$dS=S_2-S_1=k\ln\frac{\Omega_2}{\Omega_1}=k\ln\frac{m!}{n!}. \qquad (16)$$

As long as $m<n$ for the condensation process, the entropy decreases, $dS<0$. Conversely, for a dispersion process with $m>n$, the entropy increases, $dS>0$. In these cases it does not matter whether each cluster contains more or less energy. In an isolated system, the smaller the number of clusters, the smaller the degree of irregularity and the entropy. This is consistent with the decrease of entropy from the gaseous state to the liquid and solid states.
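For realistic particle numbers the factorials in dS = k ln(m!/n!) overflow quickly, so a sketch of this formula is easiest with the log-gamma function, using ln x! = lgamma(x + 1). The particle and cluster counts below are arbitrary illustrative values, and entropy is given in units of k.

```python
import math

def cluster_entropy_change(n, m, k=1.0):
    """dS = k ln(m!/n!) when n units in distinct states become m clusters.
    lgamma(x + 1) = ln x! avoids overflowing factorials."""
    return k * (math.lgamma(m + 1) - math.lgamma(n + 1))

print(f"condensation 1000 -> 100: dS/k = {cluster_entropy_change(1000, 100):.1f}")
print(f"dispersion   100 -> 1000: dS/k = {cluster_entropy_change(100, 1000):.1f}")
```

The two cases are exact negatives of each other, matching the symmetry between condensation and dispersion in the formula.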

In chemical reactions the entropy changes may be calculated from the standard molar entropies [32]:

$$\Delta S^{0}=\sum\nu_p S^{0}(\text{products})-\sum\nu_r S^{0}(\text{reactants}), \qquad (17)$$

for example, for the conversion $2\mathrm{NO(g)}+\mathrm{O_2(g)}\rightarrow 2\mathrm{NO_2(g)}$, $\Delta S^{0}=-146.5\ \mathrm{J\,K^{-1}}$. Further, Petrucci et al. proposed that some qualitative reasoning can be applied: because three moles of gaseous reactants produce only two moles of gaseous products, one can expect the entropy to decrease; that is, $\Delta S^{0}$ should be negative [17].
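The quoted value for the NO oxidation follows from the sum over standard molar entropies weighted by stoichiometric coefficients. A minimal sketch, assuming the commonly tabulated textbook values at 298 K of 210.8, 205.1 and 240.1 J/(K mol) for NO, O₂ and NO₂ (inputs chosen here, not given in the paper), reproduces ΔS° and the change in the number of moles of gas that underlies the qualitative argument.

```python
# Standard molar entropies at 298 K in J/(K mol). These are commonly tabulated
# textbook values, used here as assumed inputs.
S0 = {"NO": 210.8, "O2": 205.1, "NO2": 240.1}

def reaction_entropy(products, reactants, table):
    """Sum of nu * S0 over products minus the same sum over reactants."""
    gain = sum(nu * table[s] for s, nu in products.items())
    loss = sum(nu * table[s] for s, nu in reactants.items())
    return gain - loss

products, reactants = {"NO2": 2}, {"NO": 2, "O2": 1}
dS = reaction_entropy(products, reactants, S0)
dn_gas = sum(products.values()) - sum(reactants.values())  # all species are gases here
print(f"dS0 = {dS:.1f} J/K, change in moles of gas = {dn_gas}")
```

The negative Δn_gas and the negative ΔS° agree, which is the content of Petrucci's qualitative rule.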

When gaseous ions pass into aqueous solution in hydration reactions, their standard molar entropy decreases markedly. The smaller the ions or the larger their charges, the more the entropy decreases on dissolution. This is consistent with our conclusion [8-10]. A dissolution process may be a spontaneous one.

Based on the thermodynamic relationships

$$\Delta G^{0}=-RT\ln K \qquad (18)$$

and the entropy change

$$\Delta S^{0}=\frac{\Delta H^{0}-\Delta G^{0}}{T}, \qquad (19)$$

one finds $T\Delta S^{0}=-19.91\ \mathrm{kJ\,mol^{-1}}$ at 25 °C for the complex $[\mathrm{Cd(NH_2Me)_4}]^{2+}$ [33]. For several substances, such as CH$_4$, H$_2$O and O$_3$, $\Delta H^{0}<\Delta G^{0}$, i.e., $\Delta S^{0}<0$ [17]. These examples show that the entropy change is negative, namely, the entropy decreases in these cases.
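Eqs. (18) and (19) turn an equilibrium constant and an enthalpy into an entropy change. A minimal sketch: the first line converts the quoted TΔS° = −19.91 kJ/mol at 25 °C (298.15 K) into ΔS°; the second part exercises both relations with hypothetical inputs K = 10⁶ and ΔH° = −60 kJ/mol, which are not data from the paper.

```python
import math

R = 8.314462618  # molar gas constant, J/(K mol)

def delta_g_from_k(K, T):
    """dG0 = -R T ln K, in J/mol."""
    return -R * T * math.log(K)

def delta_s(dH, dG, T):
    """dS0 = (dH0 - dG0) / T, in J/(K mol)."""
    return (dH - dG) / T

T = 298.15  # 25 C in kelvin
# Value quoted in the text: T*dS0 = -19.91 kJ/mol for [Cd(NH2Me)4]2+
print(f"dS0 = {-19.91e3 / T:.1f} J/(K mol)")

# Hypothetical inputs to exercise both relations: K = 1e6, dH0 = -60 kJ/mol
dG = delta_g_from_k(1.0e6, T)
print(f"dG0 = {dG / 1e3:.1f} kJ/mol, dS0 = {delta_s(-60.0e3, dG, T):.1f} J/(K mol)")
```

Whenever ΔH° is more negative than ΔG°, the function returns a negative ΔS°, which is the criterion used in the text.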

Moreover, the standard molar entropy of some metal ions in aqueous solution is negative [17,33].

The entropy is expressed in terms of the partition function Z as

$$S=k\ln Z+kT\left(\frac{\partial\ln Z}{\partial T}\right). \qquad (20)$$
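Eq. (20) can be illustrated on the simplest ordered/disordered system. The sketch assumes a two-level system with energies 0 and ε (a standard example, not from the paper) in units with k = 1, so Z = 1 + e^{−ε/T}. It evaluates Eq. (20) both with the derivative worked out by hand and with a finite-difference derivative; the entropy tends to zero (a single ordered state) at low temperature and to ln 2 at high temperature.

```python
import math

def ln_z(T, eps=1.0):
    """ln Z for a two-level system with levels 0 and eps (units with k = 1)."""
    return math.log(1.0 + math.exp(-eps / T))

def entropy_analytic(T, eps=1.0):
    """S = ln Z + T d(ln Z)/dT with the derivative done by hand: ln Z + x e^{-x}/Z, x = eps/T."""
    x = eps / T
    z = 1.0 + math.exp(-x)
    return math.log(z) + x * math.exp(-x) / z

def entropy_numeric(T, eps=1.0, h=1e-5):
    """Same formula with d(ln Z)/dT taken by a central finite difference."""
    dlnz_dT = (ln_z(T + h, eps) - ln_z(T - h, eps)) / (2 * h)
    return ln_z(T, eps) + T * dlnz_dT

for T in (0.1, 0.5, 1.0, 5.0, 100.0):
    print(f"T = {T:6.1f}: S/k = {entropy_analytic(T):.5f} (numeric {entropy_numeric(T):.5f})")
print(f"ln 2 = {math.log(2):.5f}")
```

Cooling this system monotonically lowers its entropy toward zero, the statistical counterpart of the ordering processes discussed in this section.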

For the order-disorder change in fusion, the increase of entropy is

$$\Delta S_{fu}=Nk=nR, \qquad (21)$$

which is called the communal entropy [17]. Conversely, the entropy change in condensation should be

$$\Delta S_{con}=-Nk=-nR, \qquad (22)$$

which is a decrease. In general, fusion and condensation take place in open systems. However, a few condensations driven by internal interactions may occur in isolated systems. Similarly, general phase transformations take place in open systems, but spontaneous magnetization may occur in an isolated system. This is an ordering process in which the entropy should decrease.

4. Catalyst and Discussion

Catalysts may substantially lower the activation energy and increase the rates of some chemical reactions without themselves being consumed, and they may control the direction of reactions. The mass of the catalyst remains constant [34,35].
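How strongly a lower activation energy speeds up a reaction can be estimated with the Arrhenius form k = A e^{−Ea/RT}. A minimal sketch, assuming (as a simplification not stated in the paper) that the catalyst leaves the prefactor A unchanged, so the rate ratio is exp(ΔEa/RT); the reductions of 10, 30 and 50 kJ/mol are illustrative values.

```python
import math

R = 8.314462618  # molar gas constant, J/(K mol)

def rate_enhancement(delta_ea, T):
    """k_cat / k_uncat when the activation energy is lowered by delta_ea (J/mol),
    assuming k = A exp(-Ea / (R T)) with the same prefactor A in both cases."""
    return math.exp(delta_ea / (R * T))

for d in (10e3, 30e3, 50e3):
    print(f"lowering Ea by {d / 1e3:.0f} kJ/mol at 298 K: rate x {rate_enhancement(d, 298.15):.3g}")
```

Because the dependence is exponential, even a modest reduction of the barrier changes the rate by orders of magnitude.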

In fact, a catalyst in chemical reactions has some character of an auto-control mechanism in an isolated system. If it needs no input energy, at least within a given time interval, a self-catalyst resembles auto-control of the Maxwell-demon type, which is just a type of internal interaction. The demon may be a permeable membrane. In an isolated system, it is possible for the catalyst and other substances to mix and produce a new, ordered substance with smaller entropy. Ordering is the formation of structure through self-organization from a disordered state.

Dutt suggested that the result is of interest for biophysical processes such as glycolytic oscillations and may be useful for improving the efficiency of biochemical engines [27]; that is, a better structure implies a smaller entropy for the same process.

In chemical and biological self-organizing processes, some isolated systems may tend spontaneously toward ordered states. Ashby pointed out [36]: ammonia and hydrogen chloride are two gases, but they mix to form a solid. There are about 20 types of amino acids in a germ; they gather together and acquire a new reproductive property. Generally a solid is more ordered than a gas, and the entropy of the solid is correspondingly smaller than that of the gas. A germ should be still more ordered than its amino acids.

Prigogine and Stengers [14] discussed a case: when the environment of Dictyostelium discoideum lacks nutrients, the solitary cells unite spontaneously to form a large cluster. In this case the cells and the nutrient liquid may be regarded as an isolated system.

Jantsch [37] pointed out that when cells of different types of sponge are mixed in water into a uniform suspension, after a few hours they separate by type automatically. An even more interesting ordering process occurs when a small hydra is dissociated into single cells: these cells evolve spontaneously, first forming cell clusters, then malformed structures, and finally a normal hydra.

The decrease of entropy is possibly related to the thermodynamics of enzyme-catalyzed reactions. An enzyme is a complex protein molecule that can act as a biological catalyst. In biology there are also self-organization and self-assembly [38], etc.

These phenomena show that nature does not always tend toward disorder; they are ordering processes with a decrease of entropy.

Our conclusion is as follows. Chemical reactions are complex processes. When there are various internal interactions and fluctuations in an isolated system: 1. It should be examined whether every intermediate stage of the process, from beginning to end, always involves an increase of entropy. 2. The principle of entropy increase may not always hold in these processes. 3. The world as a whole does not always tend toward disorder.

Further, a negative temperature on the Kelvin scale corresponds to the condition dU > 0 and dS < 0. Conversely, there is also a negative temperature for dU < 0 and dS > 0. We find that a negative temperature necessarily involves a decrease of entropy [39]. Moreover, the negative temperature is questionable, and it contradicts the usual meaning of temperature and some basic concepts of physics and mathematics. It is a notable question in nonequilibrium thermodynamics.
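The sign condition above follows from 1/T = ∂S/∂U. A minimal sketch, assuming a standard model not taken from this paper: N two-level units with n of them excited, U = nε and S = k ln C(N, n) (units with k = 1, ε = 1). The inverse temperature is estimated by a central difference in n; for n > N/2, adding energy lowers the entropy, so T < 0.

```python
import math

def log_multiplicity(N, n):
    """S/k = ln C(N, n) for N two-level units with n excited, via lgamma."""
    return math.lgamma(N + 1) - math.lgamma(n + 1) - math.lgamma(N - n + 1)

def inverse_temperature(N, n, eps=1.0):
    """1/(kT) = dS/dU estimated by a central difference, with U = n * eps."""
    return (log_multiplicity(N, n + 1) - log_multiplicity(N, n - 1)) / (2 * eps)

N = 100
for n in (20, 40, 60, 80):
    beta = inverse_temperature(N, n)
    print(f"n = {n}: 1/kT = {beta:+.3f}, T = {1 / beta:+.3f}")
```

The populations with n > N/2 are the inverted states for which dU > 0 goes together with dS < 0.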

In this paper we have mainly addressed thermodynamic results and the change of entropy in chemical reactions.

References:

1. R. E. Nettleton, J. Chem. Phys. 93, 8247 (1990); 97, 8815 (1992).
2. B. Gaveau, M. Moreau and J. Toth, J. Chem. Phys. 111, 7736, 7748 (1999); 115, 680 (2001).
3. D. Cangialosi, G. A. Schwartz, A. Alegría and J. Colmenero, J. Chem. Phys. 123, 144908 (2005).
4. U. Stier, J. Chem. Phys. 124, 024901 (2006).
5. O. Cybulski, D. Matysiak and V. Babin, J. Chem. Phys. 122, 174105 (2005).
6. M. Kinoshita, N. Matubayasi, Y. Harano and M. Nakahara, J. Chem. Phys. 124, 024512 (2006).
7. L. D. Landau and E. M. Lifshitz, Statistical Physics (Pergamon Press, 1980).
8. Yi-Fang Chang, in Entropy, Information and Intersecting Science (C. Z. Yu et al., Ed., Yunnan Univ. Press, 1994), pp. 53-60.
9. Yi-Fang Chang, Apeiron 4, 97 (1997).
10. Yi-Fang Chang, Entropy 7, 190 (2005).
11. J. Fort and J. E. Llebot, Phys. Lett. A 205, 281 (1995).
12. H. Haken, Synergetics: An Introduction (Springer-Verlag, 1977).
13. L. E. Reichl, A Modern Course in Statistical Physics (Univ. of Texas Press, 1980).
14. I. Prigogine and I. Stengers, Order Out of Chaos (New York: Bantam Books Inc., 1984).
15. O. A. Chichigina, J. Exp. Theor. Phys. 89, 30 (1999).
16. D. A. Johnson, Some Thermodynamic Aspects of Inorganic Chemistry, 2nd Ed. (Cambridge Univ. Press, 1982).
17. R. H. Petrucci, W. S. Harwood and F. G. Herring, General Chemistry: Principles and Modern Applications, 8th Ed. (Pearson Education, Inc., 2003).
18. I. Prigogine, Nonequilibrium Statistical Mechanics (New York: Wiley, 1962).
19. H. Risken, The Fokker-Planck Equation (Springer-Verlag, 1984).
20. J. F. Lee, F. W. Sears and D. L. Turcotte, Statistical Thermodynamics (Addison-Wesley Publishing Company, Inc., 1963), pp. 288-293.
21. R. J. Field, E. Koros and R. M. Noyes, J. Am. Chem. Soc. 94, 8649 (1972).
22. P. De Kepper and J. Boissonade, J. Chem. Phys. 75, 189 (1972).
23. A. T. Winfree, Spiral waves of chemical activity, Science 175, 634 (1972).
24. C. Vidal, J. C. Roux and A. Rossi, J. Am. Chem. Soc. 102, 1241 (1980).
25. R. J. Field, J. Chem. Phys. 63, 2289 (1975).
26. R. J. Field and H. D. Forsterling, J. Chem. Phys. 90, 5400 (1986).
27. A. K. Dutt, D. K. Bhattacharyay and B. C. Eu, J. Chem. Phys. 93, 7929 (1990).
28. M. G. Velarde, X. L. Chu and J. Ross, Phys. Fluids 6, 550 (1994).
29. A. K. Dutt, J. Chem. Phys. 110, 1061 (1999).
30. A. K. Dutt, J. Chem. Phys. 86, 3959 (1987).
31. B. R. Irvin and J. Ross, J. Chem. Phys. 89, 1064 (1988).
32. N. N. Greenwood and A. Earnshaw, Chemistry of the Elements (Pergamon Press, 1984).
33. J. P. Hunt, Metal Ions in Aqueous Solution (New York: W. A. Benjamin, 1963), pp. 38-41.
34. R. A. Burns, Fundamentals of Chemistry, 4th Ed. (Pearson Education, Inc., 2003).
35. A. T. Bell et al., Science (Catalysis) 299, 1688-1706 (2003).
36. W. R. Ashby, An Introduction to Cybernetics (Chapman & Hall Ltd., 1956).
37. E. Jantsch, The Self-Organizing Universe (Pergamon, 1979).
38. G. M. Whitesides, J. P. Mathias and S. T. Seto, Science 5036, 1182 (1991).
39. Yi-Fang Chang, arXiv:0704.1997.