title: Strategies and Games : Theory and Practice

author: Dutta, Prajit K.

publisher: MIT Press

isbn10 | asin: 0262041693

print isbn13: 9780262041690

ebook isbn13: 9780585070223

language: English

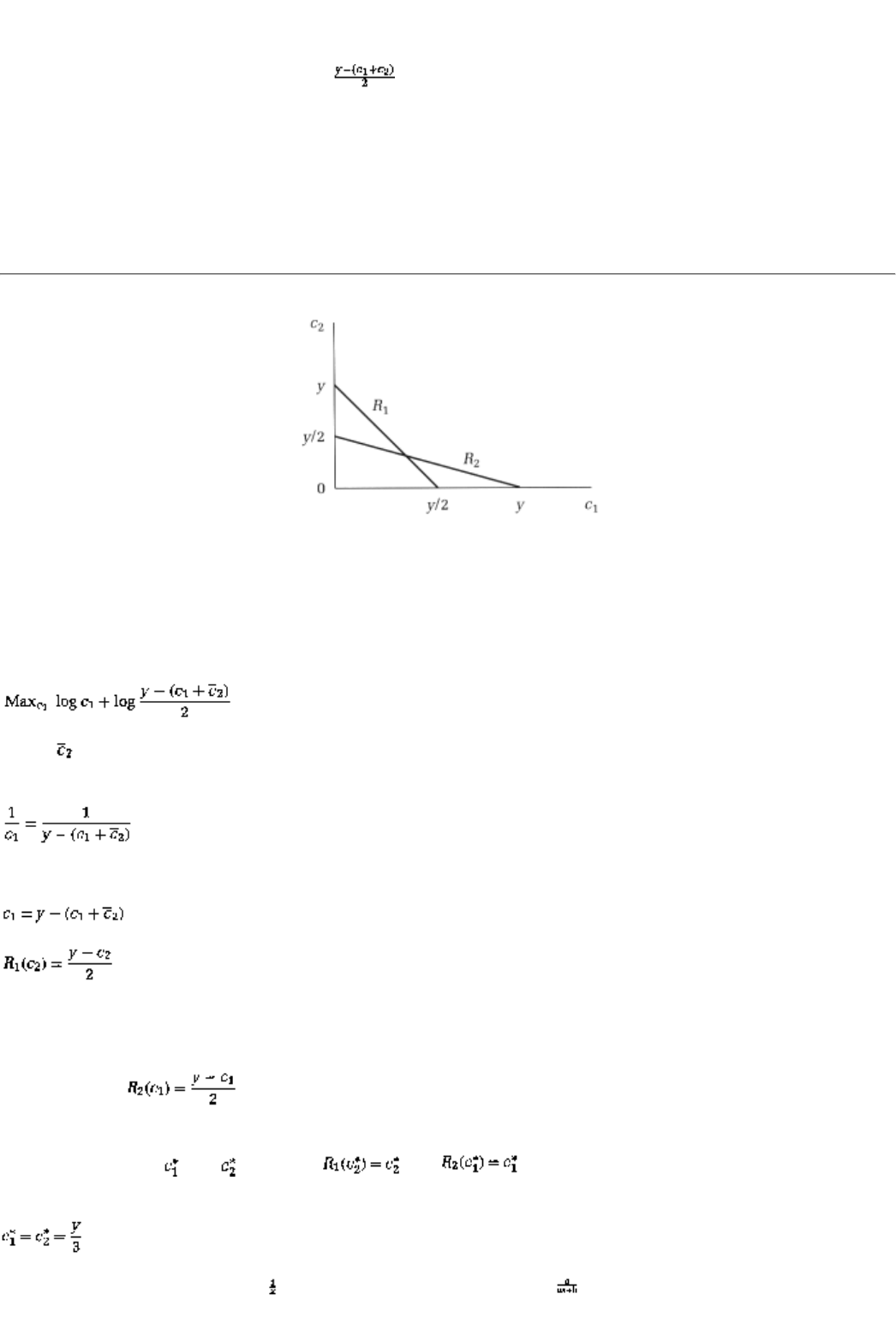

subject: Game theory, Equilibrium (Economics)

publication date: 1999

lcc: HB144.D88 1999eb

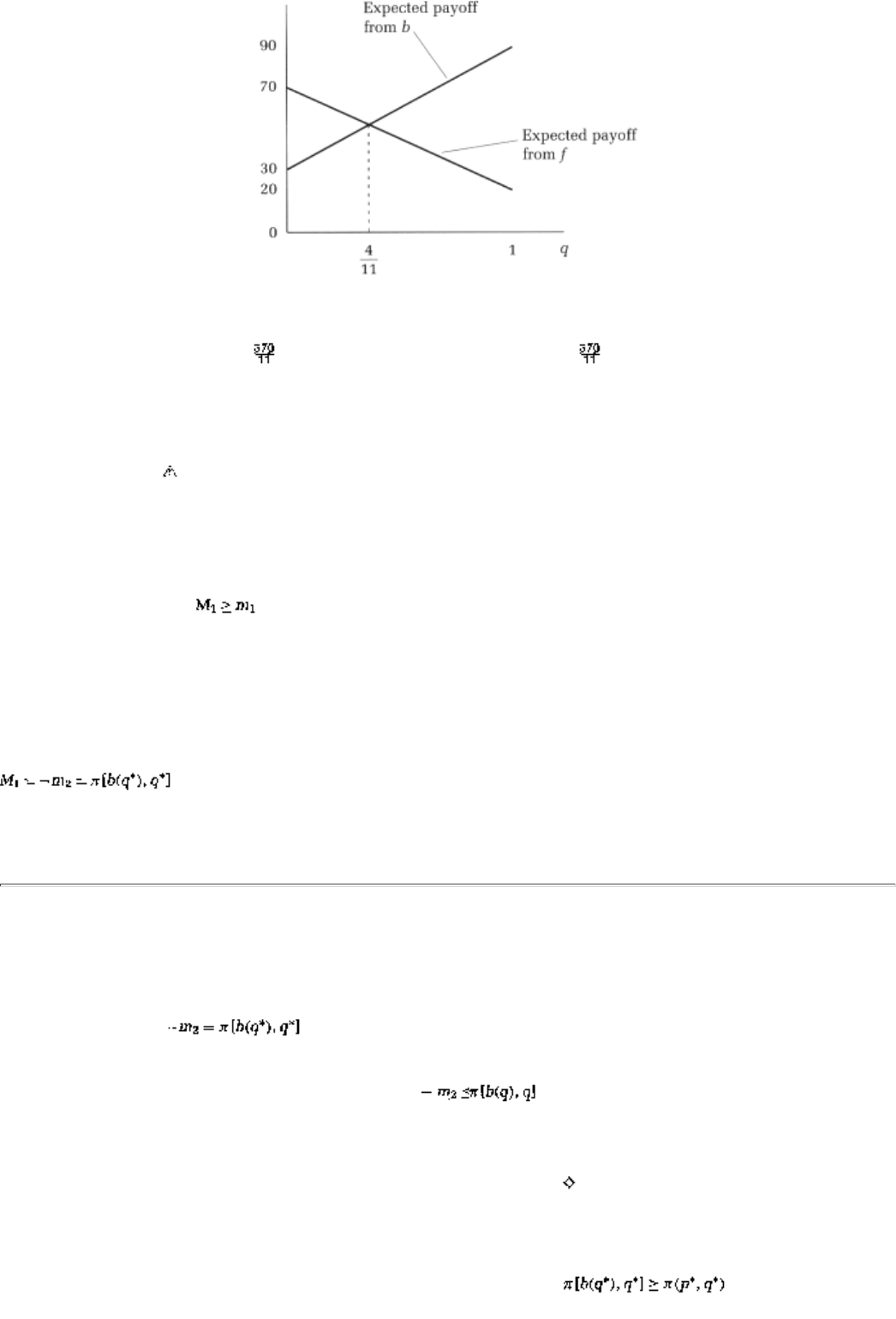

ddc: 330/.01/5193


cover

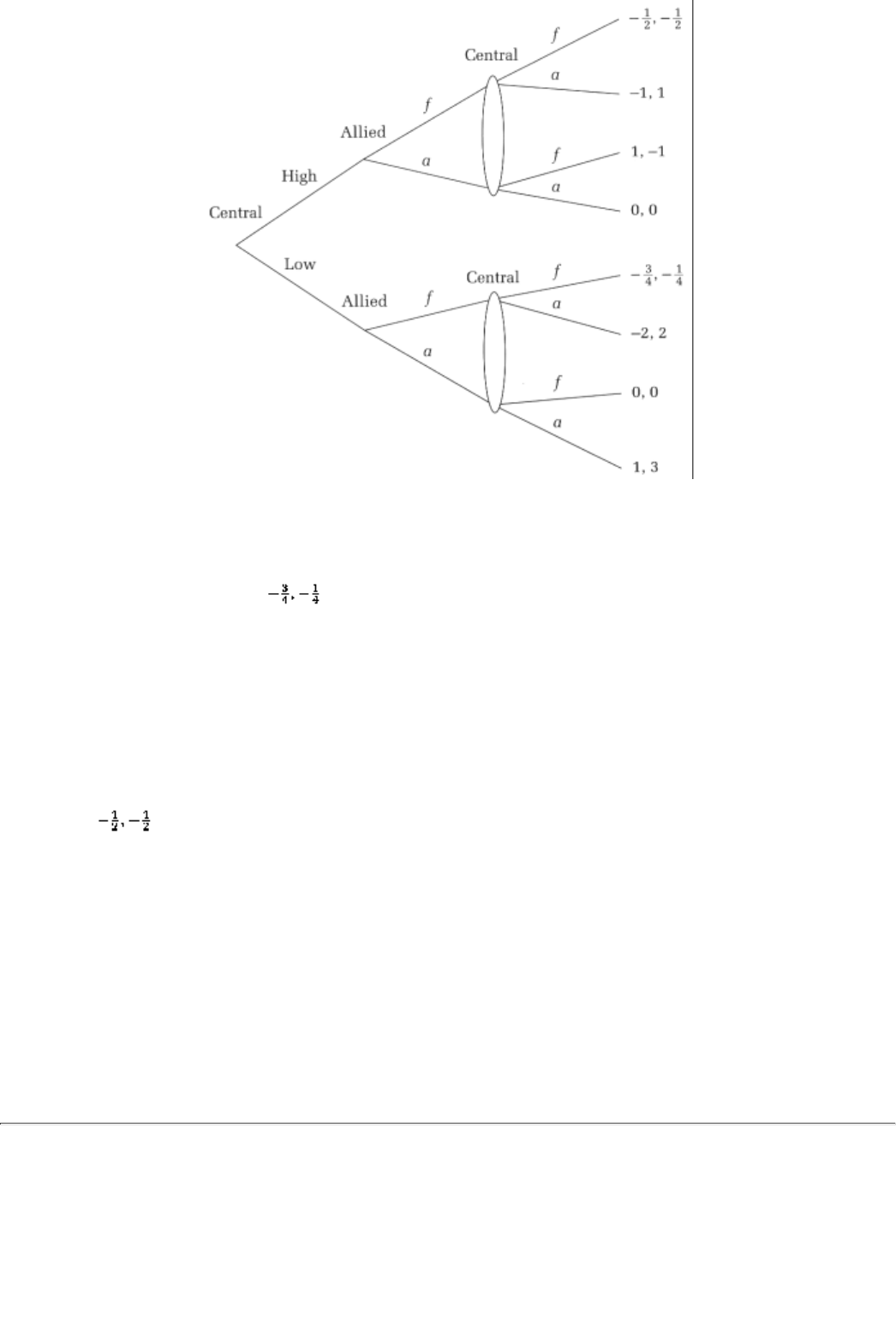

Page III

Strategies and Games

Theory and Practice

Prajit K. Dutta

THE MIT PRESS CAMBRIDGE, MASSACHUSETTS LONDON, ENGLAND


© 1999 Massachusetts Institute of Technology

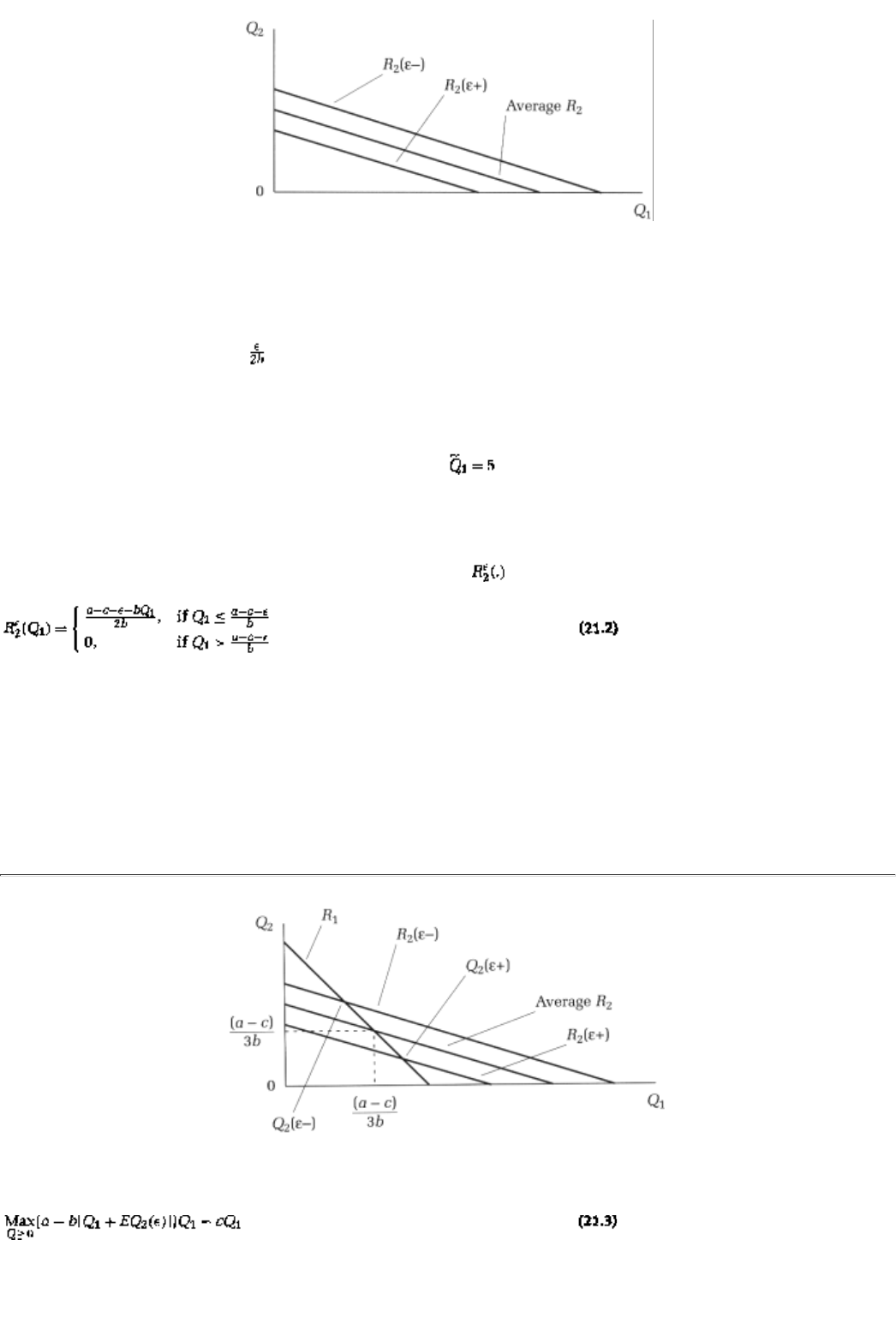

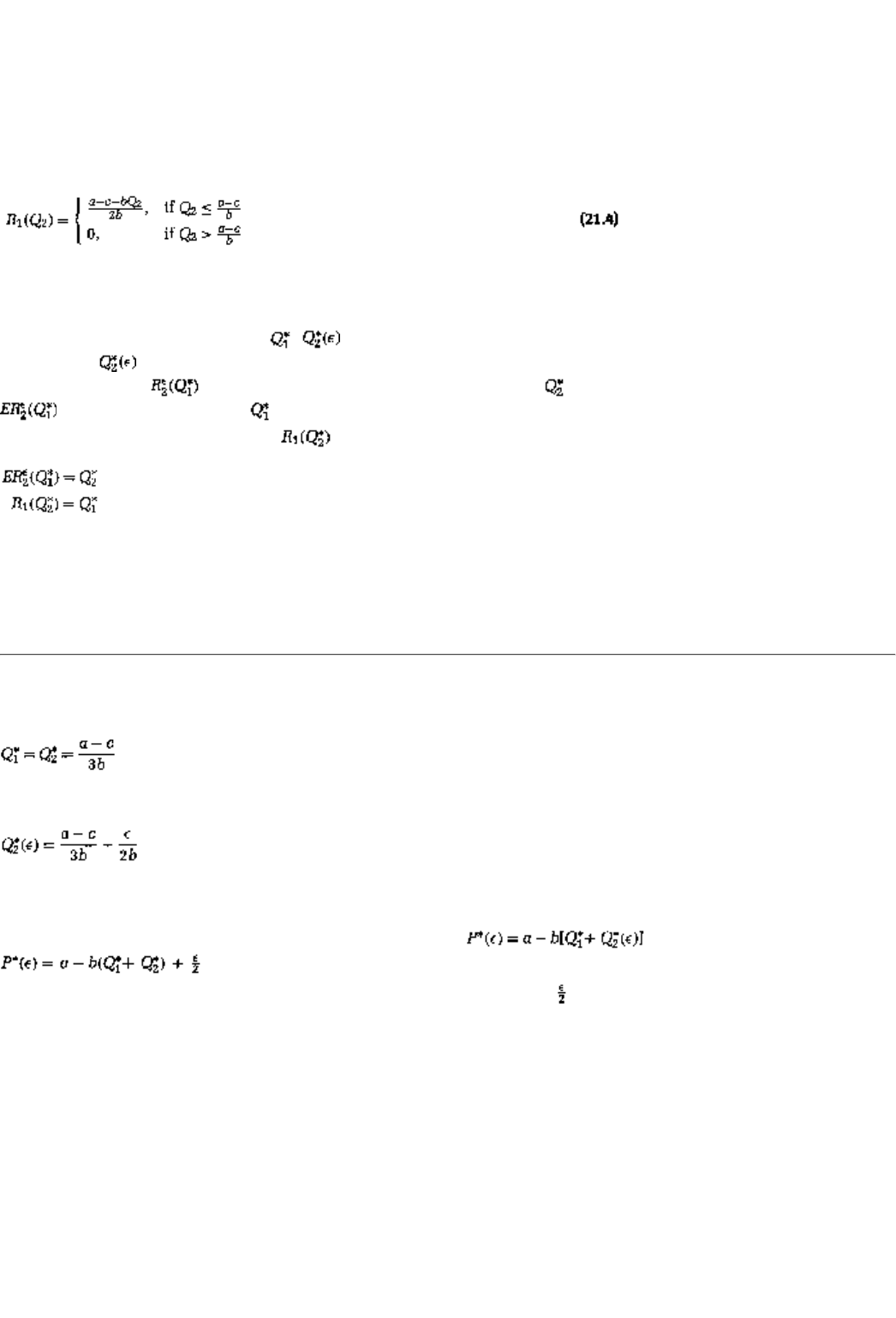

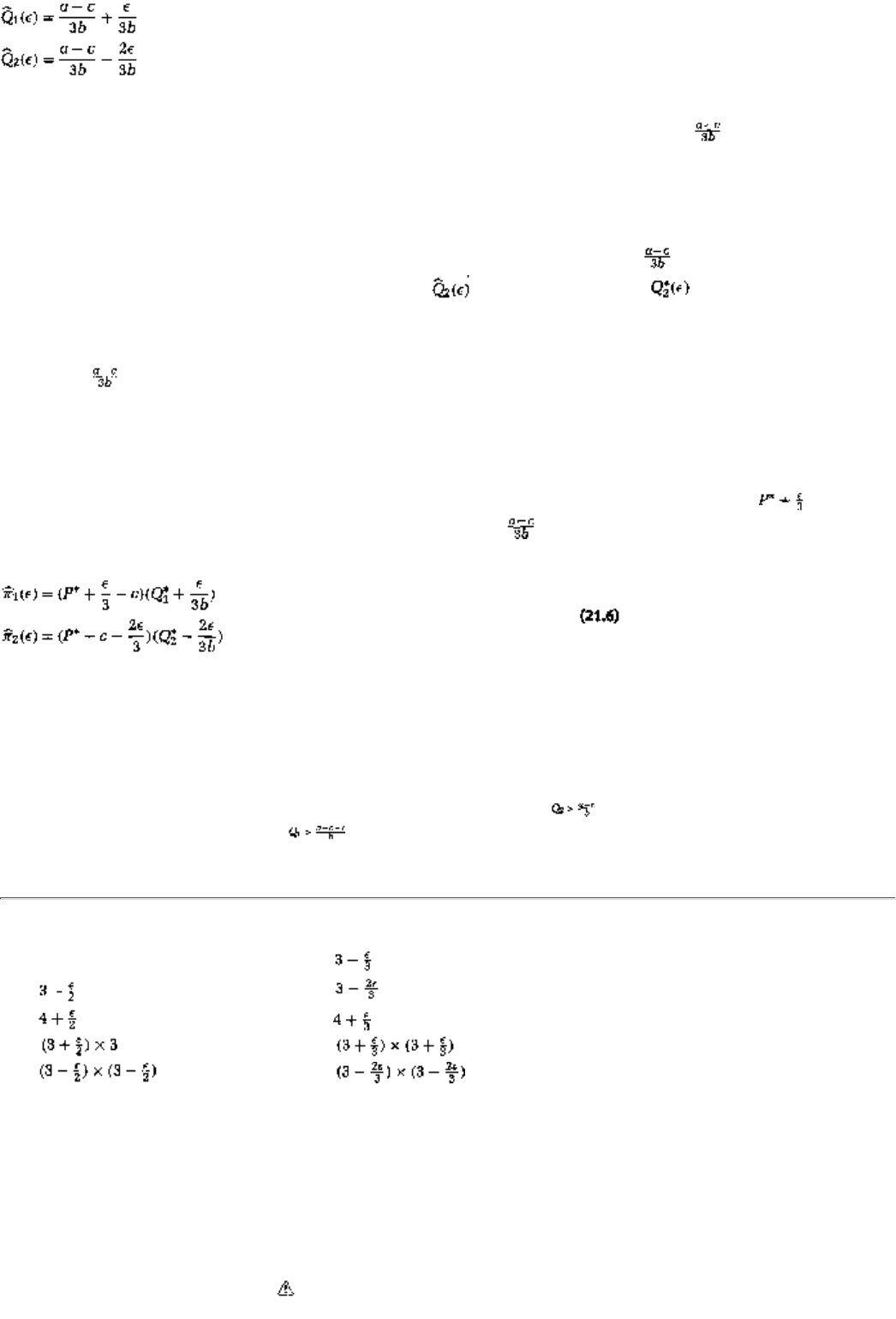

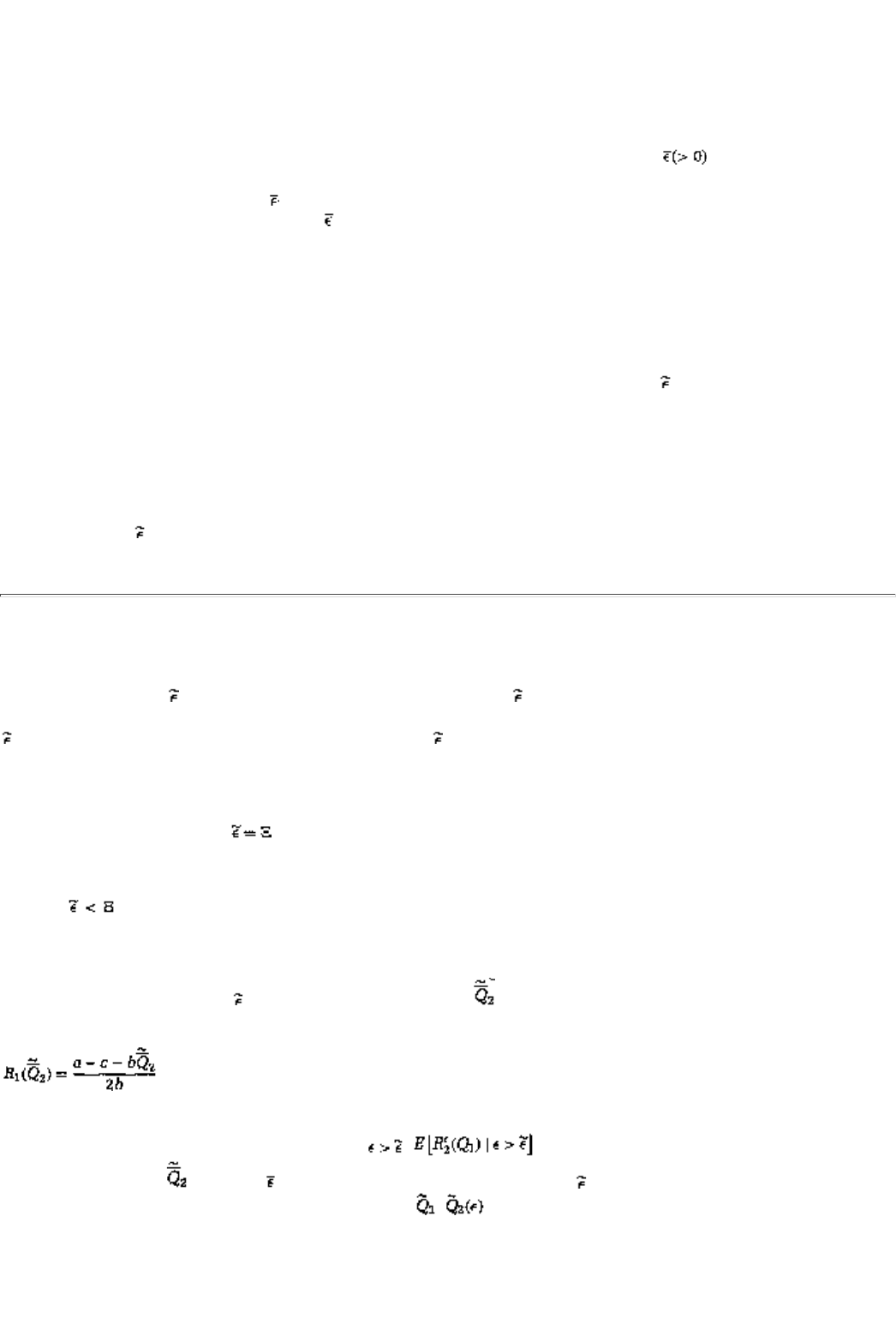

All rights reserved. No part of this book may be reproduced in any form by any electronic or mechanical

means (including photocopying, recording, or information storage and retrieval) without permission in

writing from the publisher.

This book was set in Melior and MetaPlus by Windfall Software using ZzTEX and was printed and bound in

the United States of America.

Library of Congress Cataloging-in-Publication Data

Dutta, Prajit K.

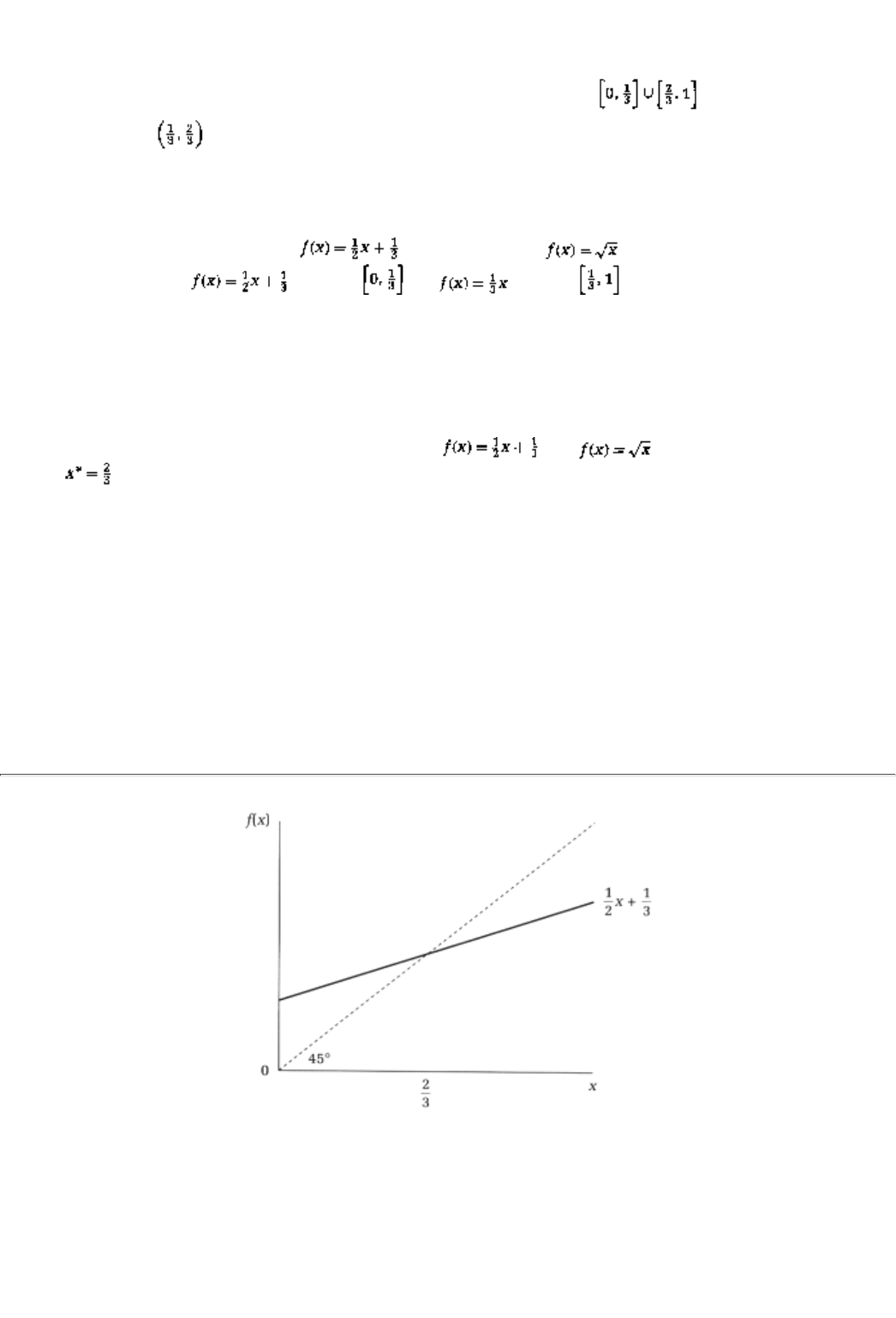

Strategies and games: theory and practice / Prajit K. Dutta.

p. cm.

Includes bibliographical references and index.

ISBN 0-262-04169-3

1. Game theory. 2. Equilibrium (Economics). I. Title.

HB144.D88 1999

330'.01'5193 dc21 98-42937

CIP


MA AAR BABA KE (To Ma and Baba)


BRIEF CONTENTS

Preface XXI

A Reader's Guide XXIX

Part One Introduction 1

Chapter 1 A First Look at the Applications 3

2 A First Look at the Theory 17

Part Two Strategic Form Games: Theory and Practice 33

3 Strategic Form Games and Dominant Strategies 35

4 Dominance Solvability 49

5 Nash Equilibrium 63

6 An Application: Cournot Duopoly 75

7 An Application: The Commons Problem 91

8 Mixed Strategies 103

9 Two Applications: Natural Monopoly and Bankruptcy Law 121

10 Zero-Sum Games 139

Part Three Extensive Form Games: Theory and Applications 155

11 Extensive Form Games and Backward Induction 157

12 An Application: Research and Development 179

13 Subgame Perfect Equilibrium 193

14 Finitely Repeated Games 209

15 Infinitely Repeated Games 227

16 An Application: Competition and Collusion in the NASDAQ Stock Market 243

17 An Application: OPEC 257

18 Dynamic Games with an Application to the Commons Problem 275

Part Four Asymmetric Information Games: Theory and Applications 291

19 Moral Hazard and Incentives Theory 293

20 Games with Incomplete Information 309


21 An Application: Incomplete Information in a Cournot Duopoly 331

22 Mechanism Design, the Revelation Principle, and Sales to an Unknown Buyer 349

23 An Application: Auctions 367

24 Signaling Games and the Lemons Problem 383

Part Five Foundations 401

25 Calculus and Optimization 403

26 Probability and Expectation 421

27 Utility and Expected Utility 433

28 Existence of Nash Equilibria 451

Index 465


CONTENTS

Preface XXI

A Reader's Guide XXIX

Part One Introduction 1

Chapter 1 A First Look at the Applications 3

1.1 Games That We Play 3

1.2 Background 7

1.3 Examples 8

Summary 12

Exercises 12

Chapter 2 A First Look at the Theory 17

2.1 Rules of the Game: Background 17

2.2 Who, What, When: The Extensive Form 18

2.2.1 Information Sets and Strategies 20

2.3 Who, What, When: The Normal (or Strategic) Form 21

2.4 How Much: Von Neumann-Morgenstern Utility Function 23

2.5 Representation of the Examples 25

Summary 27

Exercises 28

Part Two Strategic Form Games: Theory and Practice 33

Chapter 3 Strategic Form Games and Dominant Strategies 35

3.1 Strategic Form Games 35

3.1.1 Examples 36

3.1.2 Equivalence with the Extensive Form 39

3.2 Case Study: The Strategic Form of Art Auctions 40

3.2.1 Art Auctions: A Description 40

3.2.2 Art Auctions: The Strategic Form 40

3.3 Dominant Strategy Solution 41


3.4 Case Study Again: A Dominant Strategy at the Auction 43

Summary 44

Exercises 45

Chapter 4 Dominance Solvability 49

4.1 The Idea 49

4.1.1 Dominated and Undominated Strategies 49

4.1.2 Iterated Elimination of Dominated Strategies 51

4.1.3 More Examples 51

4.2 Case Study: Electing the United Nations Secretary General 54

4.3 A More Formal Definition 55

4.4 A Discussion 57

Summary 59

Exercises 59

Chapter 5 Nash Equilibrium 63

5.1 The Concept 63

5.1.1 Intuition and Definition 63

5.1.2 Nash Parables 64

5.2 Examples 66

5.3 Case Study: Nash Equilibrium in the Animal Kingdom 68

5.4 Relation Between the Solution Concepts 69

Summary 71

Exercises 71

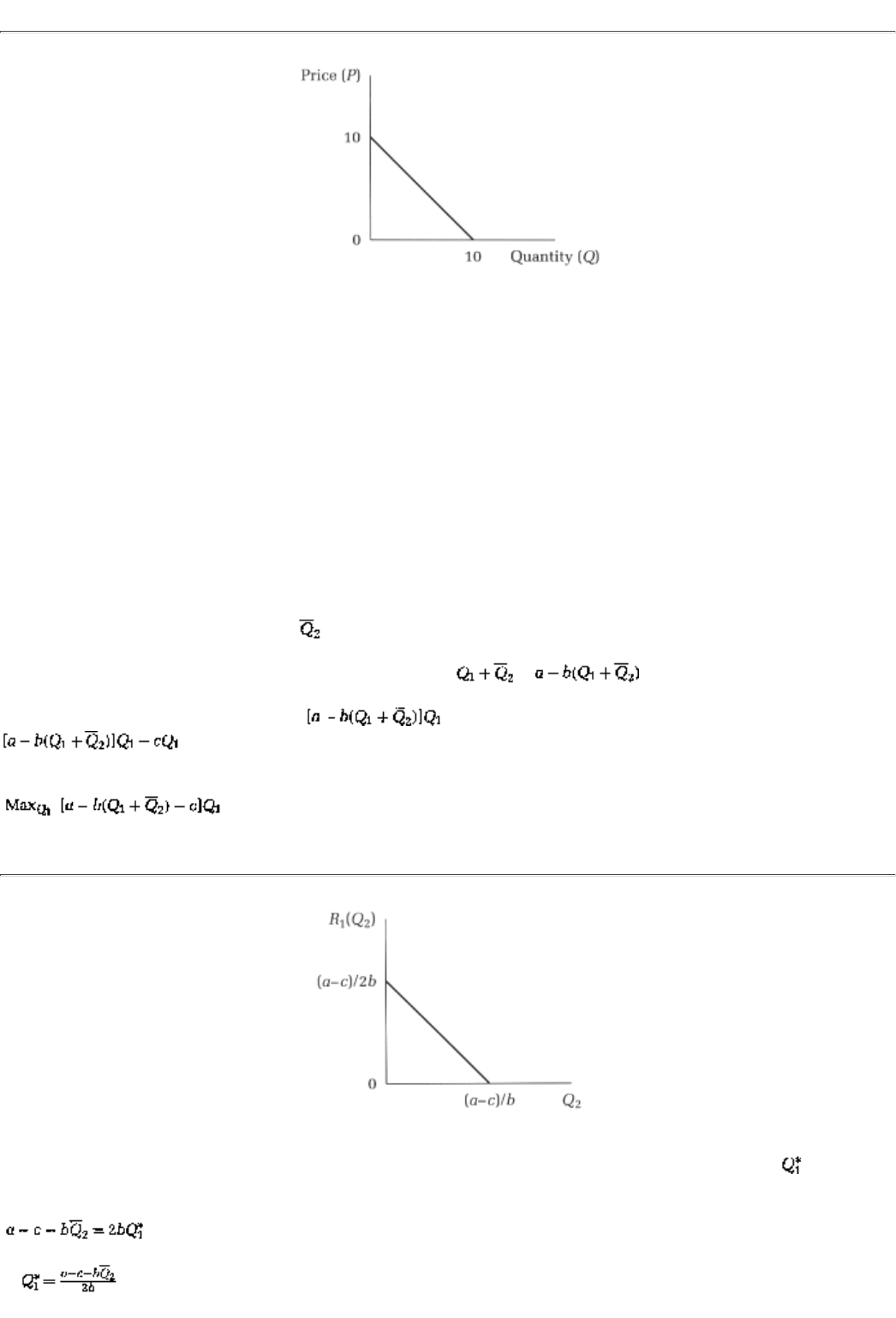

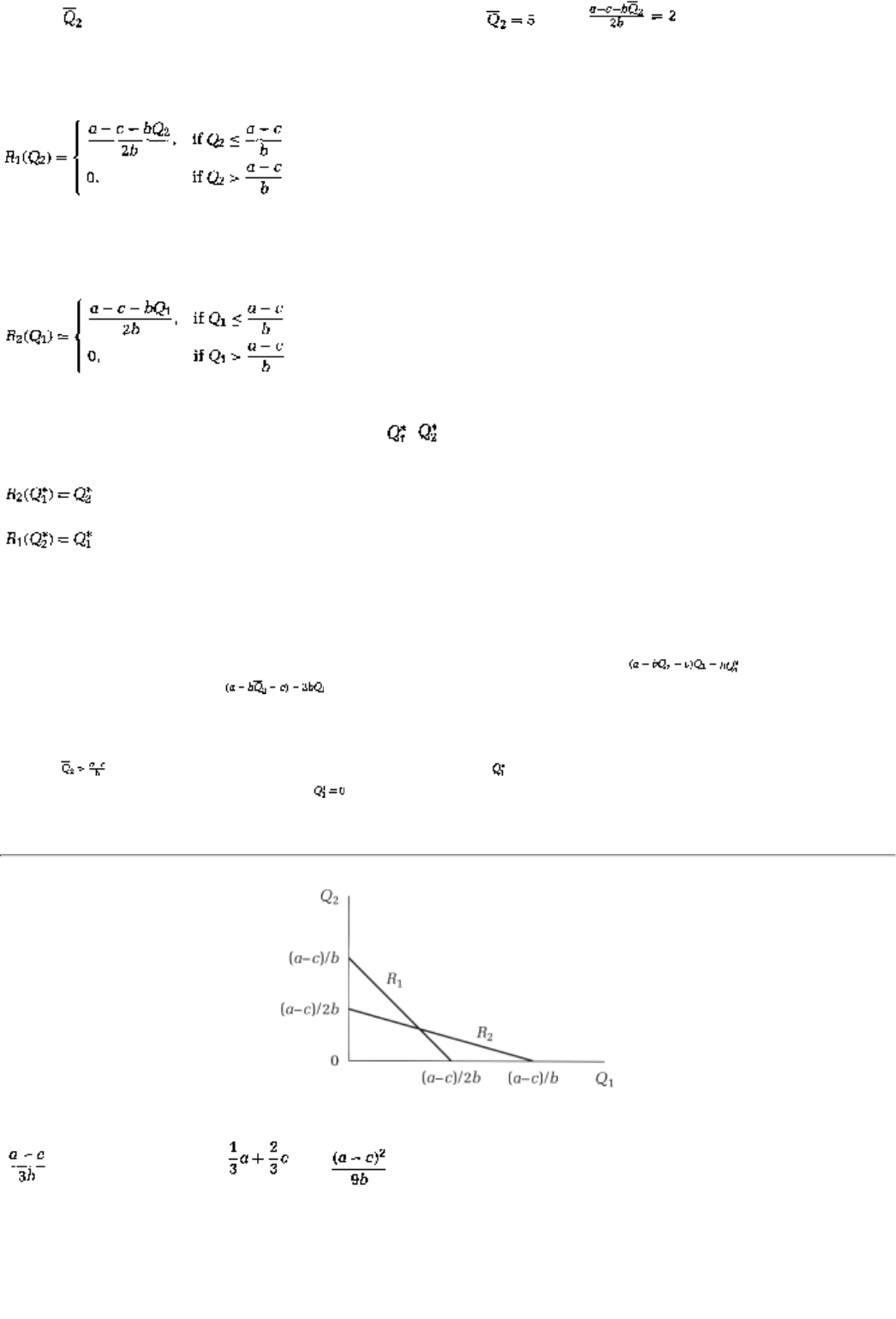

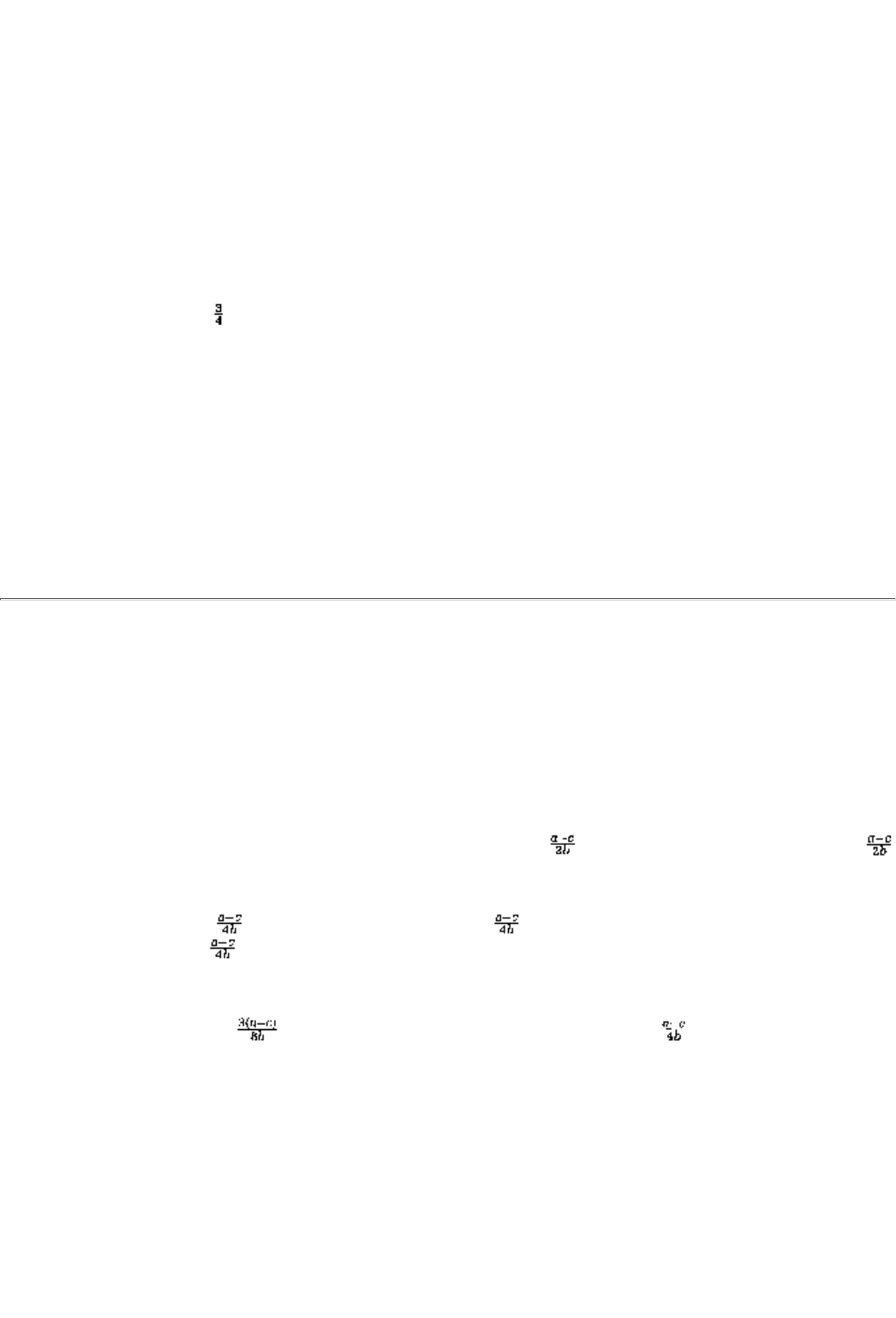

Chapter 6 An Application: Cournot Duopoly 75

6.1 Background 75

6.2 The Basic Model 76

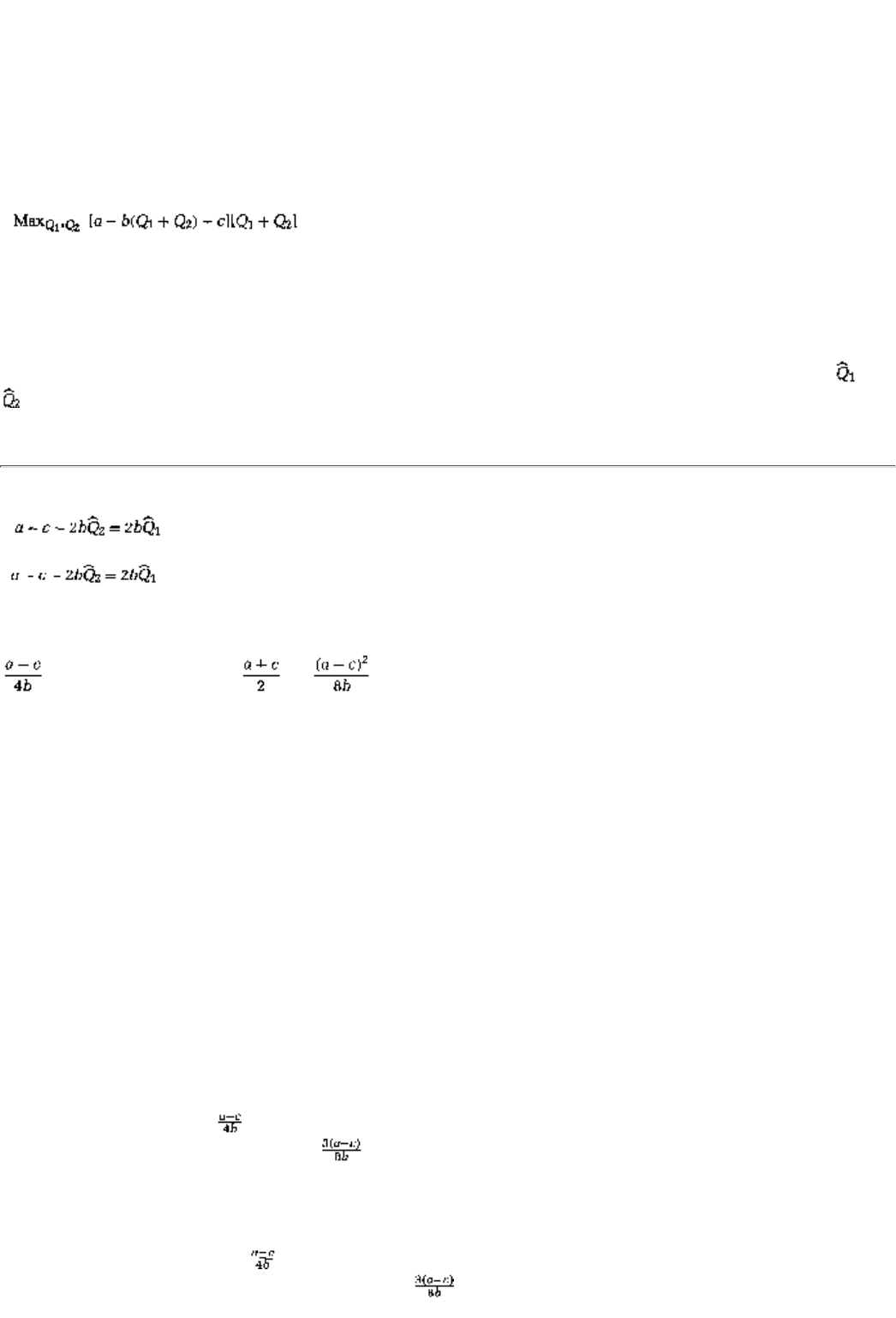

6.3 Cournot Nash Equilibrium 77

6.4 Cartel Solution 79

6.5 Case Study: Today's OPEC 81

page_x

Page XI

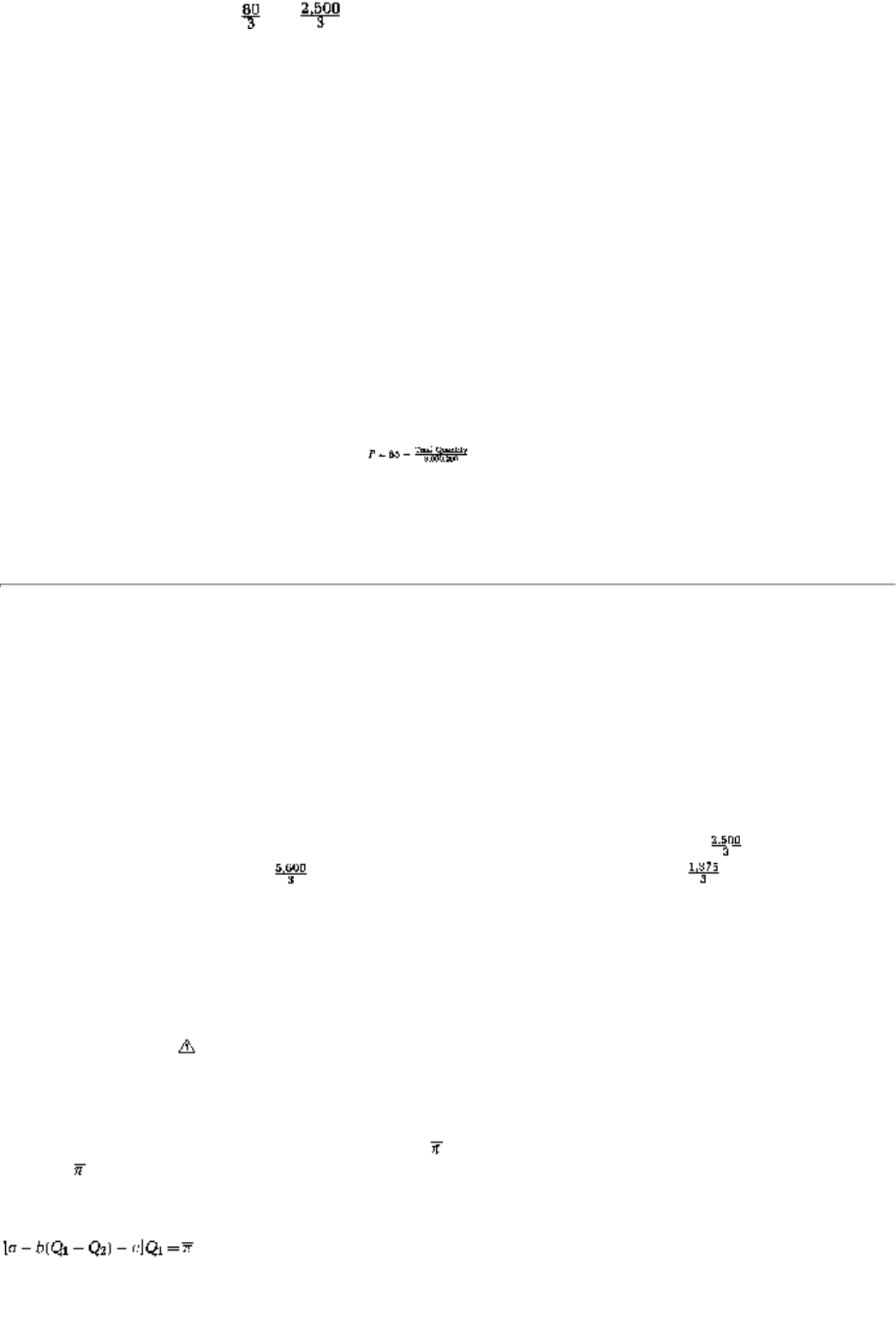

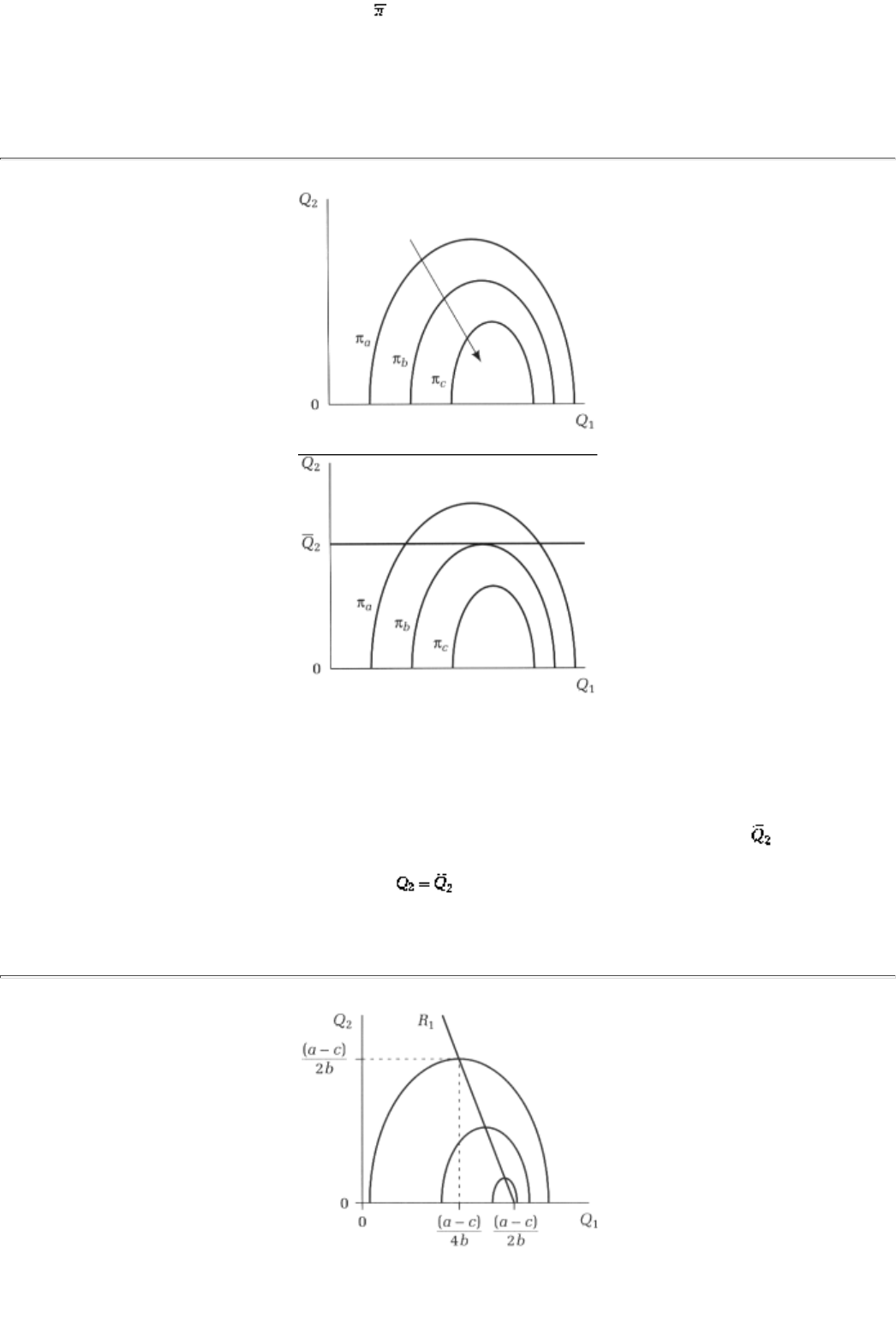

6.6 Variants on the Main Theme I: A Graphical Analysis 82

6.6.1 The IEDS Solution to the Cournot Model 84

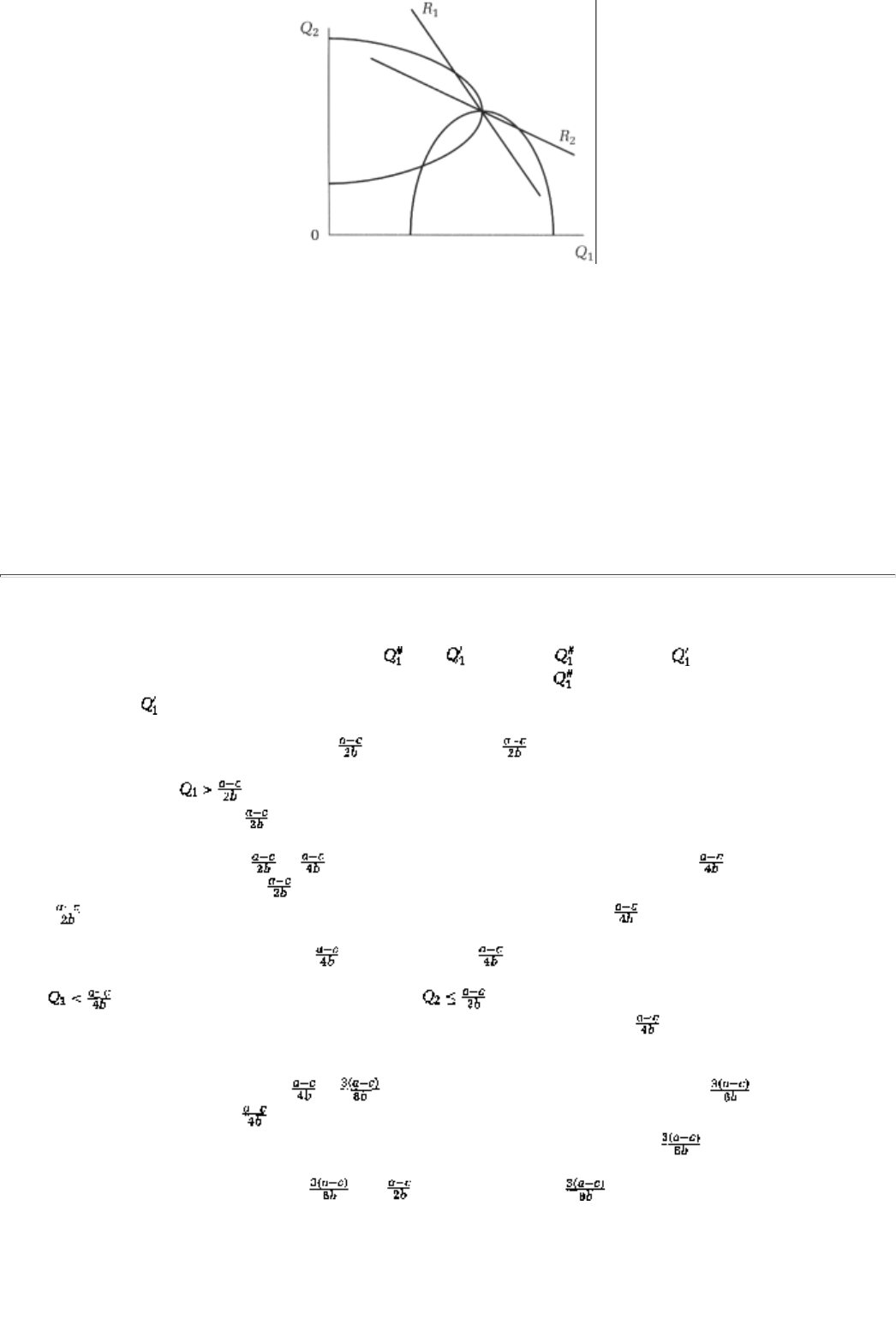

6.7 Variants on the Main Theme II: Stackelberg Model 85

6.8 Variants on the Main Theme III: Generalization 86

Summary 87

Exercises 88

Chapter 7 An Application: The Commons Problem 91

7.1 Background: What is the Commons? 91

7.2 A Simple Model 93

7.3 Social Optimality 95

7.4 The Problem Worsens in a Large Population 96

7.5 Case Studies: Buffalo, Global Warming, and the Internet 97

7.6 Averting a Tragedy 98

Summary 99

Exercises 100

Chapter 8 Mixed Strategies 103

8.1 Definition and Examples 103

8.1.1 What Is a Mixed Strategy? 103

8.1.2 Yet More Examples 106

8.2 An Implication 107

8.3 Mixed Strategies Can Dominate Some Pure Strategies 108

8.3.1 Implications for Dominant Strategy Solution and IEDS 109

8.4 Mixed Strategies are Good for Bluffing 110

8.5 Mixed Strategies and Nash Equilibrium 111

8.5.1 Mixed-Strategy Nash Equilibria in an Example 113

8.6 Case Study: Random Drug Testing 114

Summary 115

Exercises 116

page_xi

Page XII

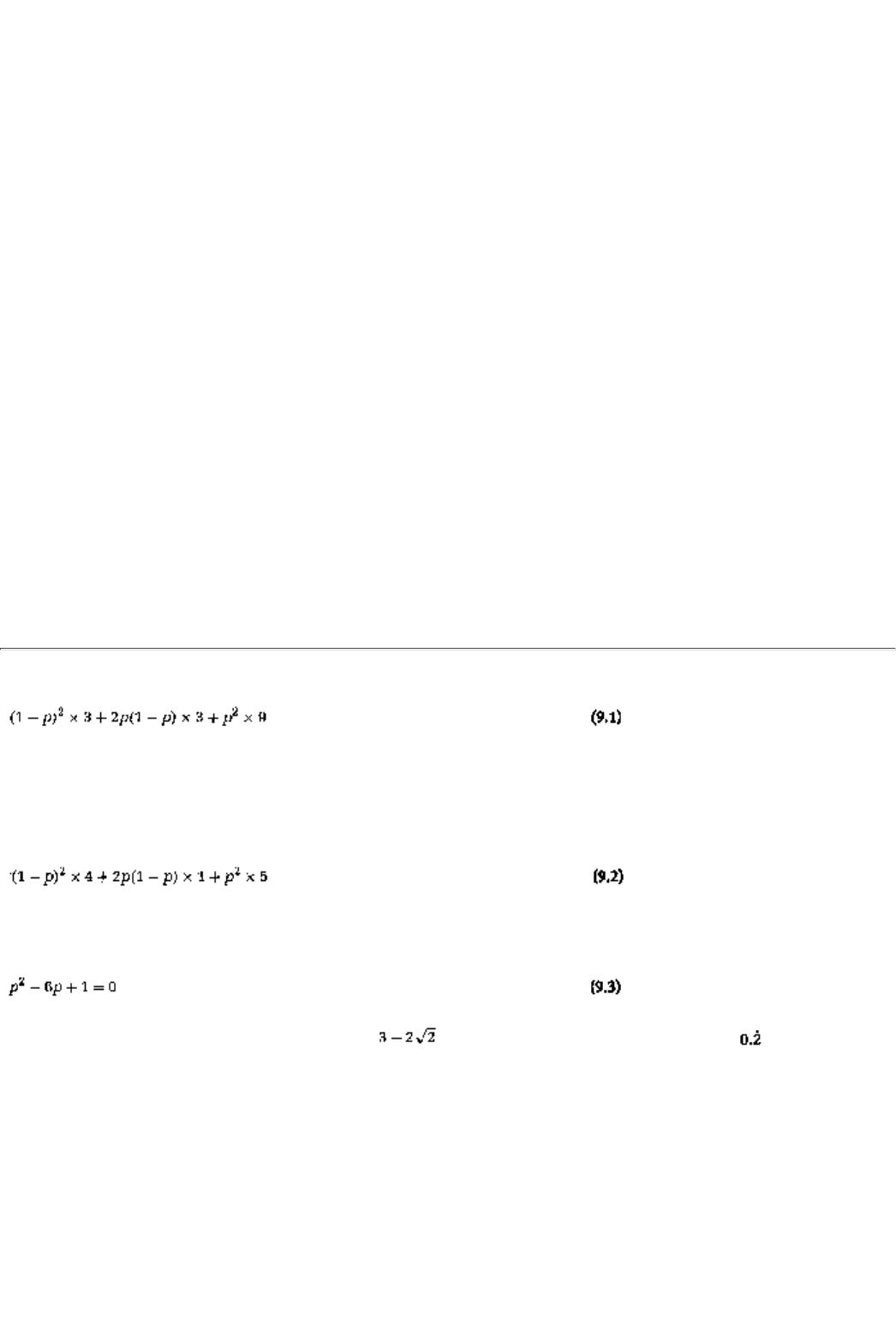

Chapter 9 Two Applications: Natural Monopoly and Bankruptcy Law 121

9.1 Chicken, Symmetric Games, and Symmetric Equilibria 121

9.1.1 Chicken 121

9.1.2 Symmetric Games and Symmetric Equilibria 122

9.2 Natural Monopoly 123

9.2.1 The Economic Background 123

9.2.2 A Simple Example 124

9.2.3 War of Attrition and a General Analysis 125

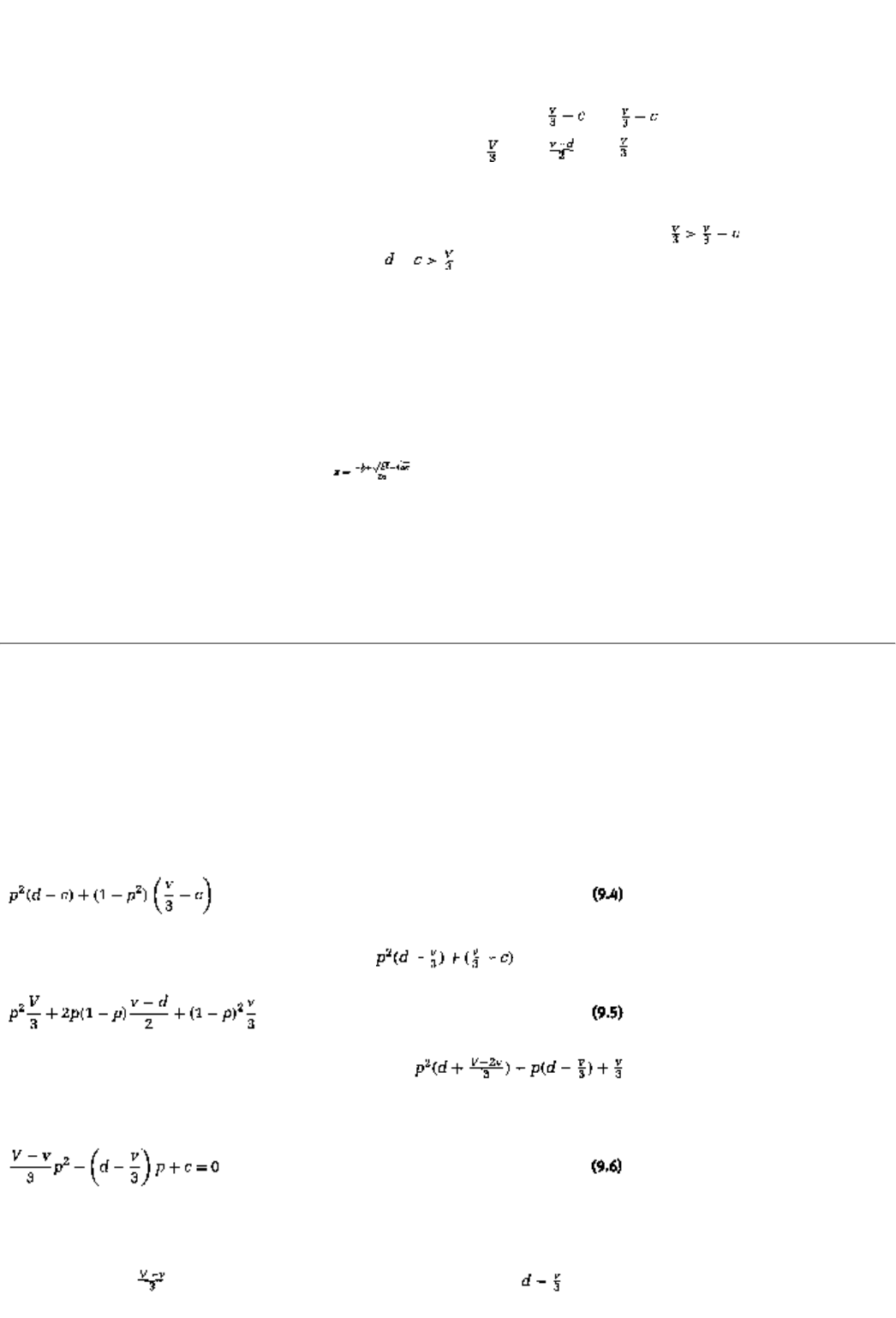

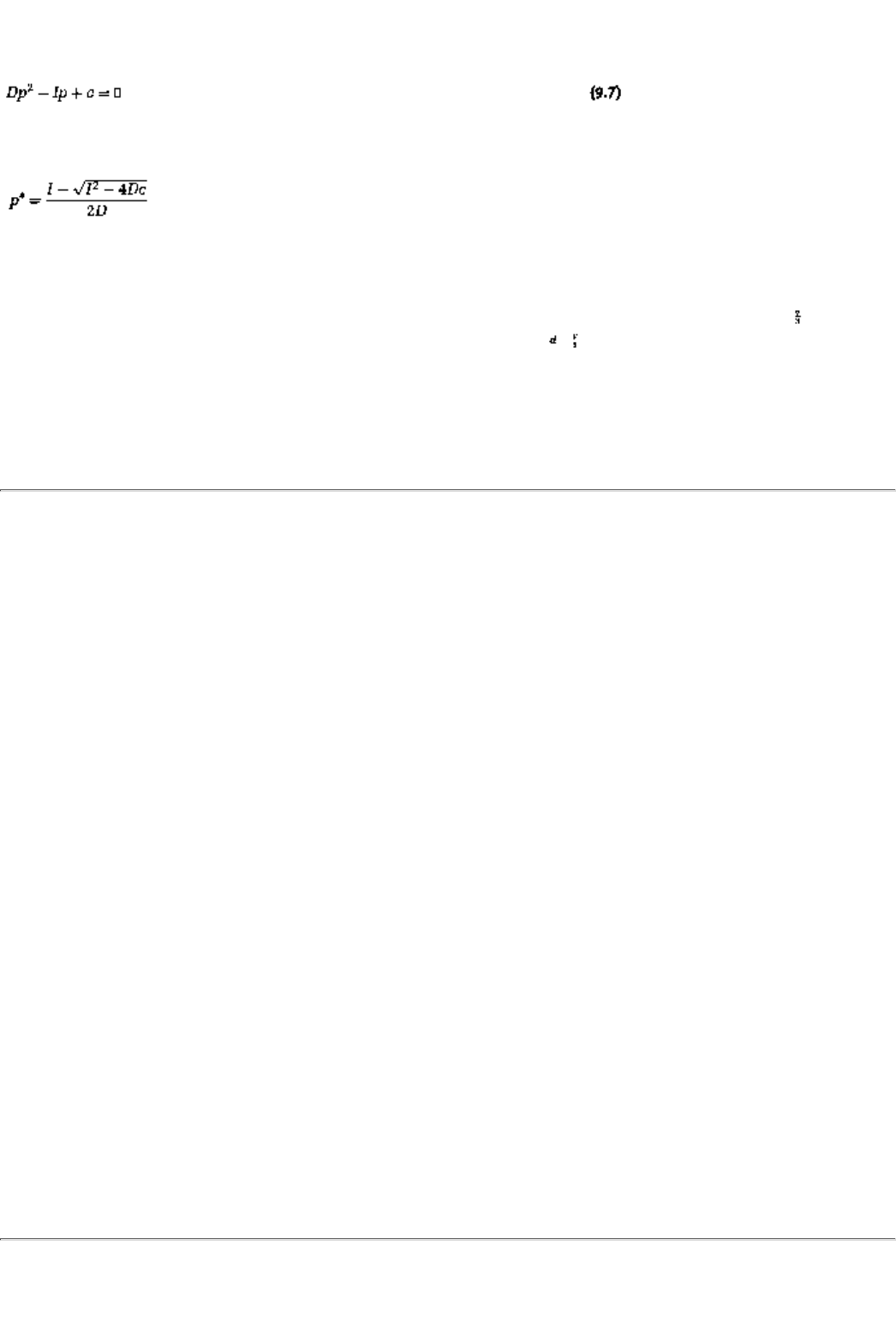

9.3 Bankruptcy Law 128

9.3.1 The Legal Background 128

9.3.2 A Numerical Example 128

9.3.3 A General Analysis 130

Summary 132

Exercises 133

Chapter 10 Zero-Sum Games 139

10.1 Definition and Examples 139

10.2 Playing Safe: Maxmin 141

10.2.1 The Concept 141

10.2.2 Examples 142

10.3 Playing Sound: Minmax 144

10.3.1 The Concept and Examples 144

10.3.2 Two Results 146

10.4 Playing Nash: Playing Both Safe and Sound 147

Summary 149

Exercises 149

Part Three Extensive Form Games: Theory and Applications 155

Chapter 11 Extensive Form Games and Backward Induction 157

11.1 The Extensive Form 157

11.1.1 A More Formal Treatment 158


11.1.2 Strategies, Mixed Strategies, and Chance Nodes 160

11.2 Perfect Information Games: Definition and Examples 162

11.3 Backward Induction: Examples 165

11.3.1 The Power of Commitment 167

11.4 Backward Induction: A General Result 168

11.5 Connection With IEDS in the Strategic Form 170

11.6 Case Study: Poison Pills and Other Takeover Deterrents 172

Summary 174

Exercises 175

Chapter 12 An Application: Research and Development 179

12.1 Background: R&D, Patents, and Oligopolies 179

12.1.1 A Patent Race in Progress: High-Definition Television 180

12.2 A Model of R&D 181

12.3 Backward Induction: Analysis of the Model 183

12.4 Some Remarks 188

Summary 189

Exercises 190

Chapter 13 Subgame Perfect Equilibrium 193

13.1 A Motivating Example 193

13.2 Subgames and Strategies Within Subgames 196

13.3 Subgame Perfect Equilibrium 197

13.4 Two More Examples 199

13.5 Some Remarks 202

13.6 Case Study: Peace in the World War I Trenches 203

Summary 205

Exercises 205

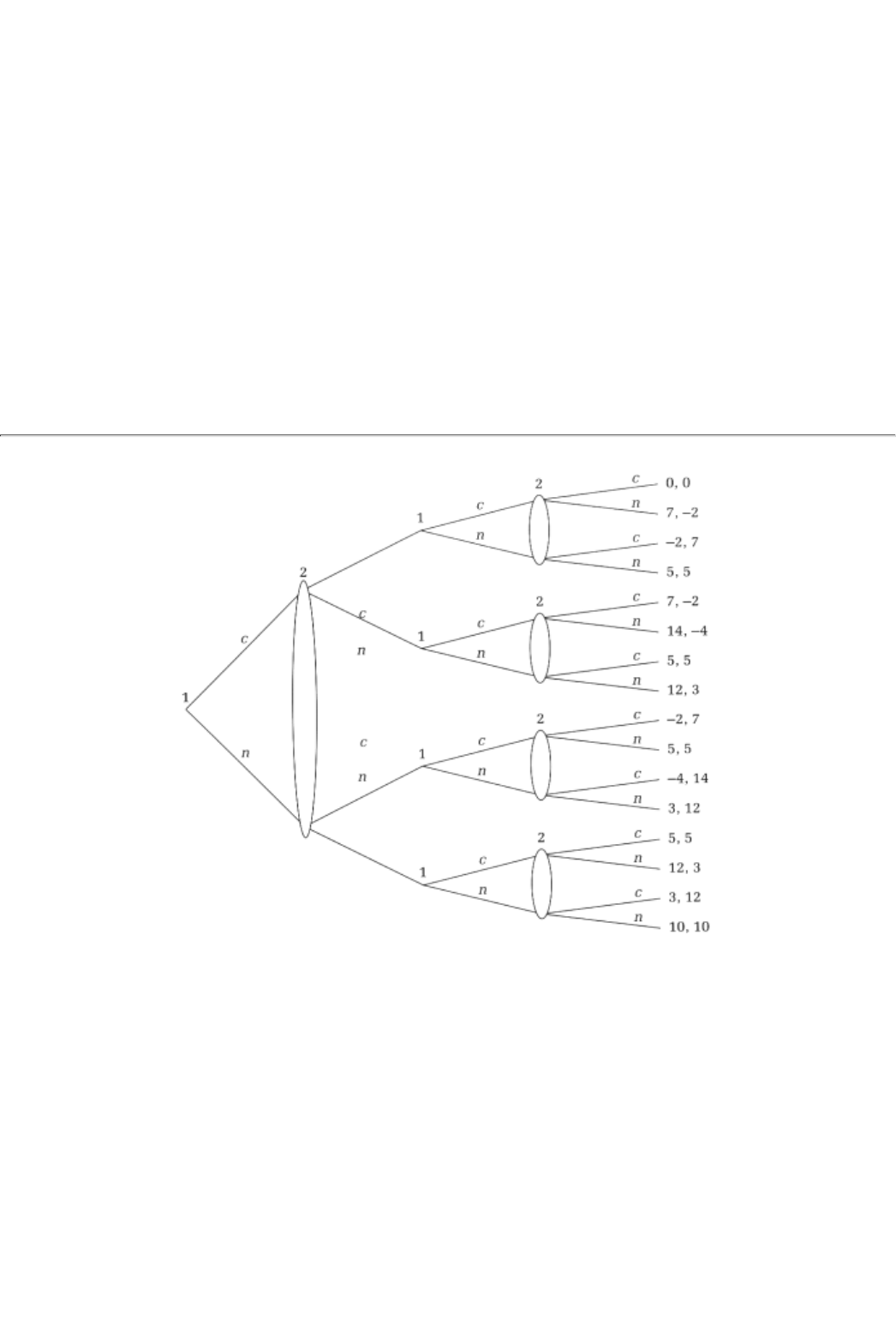

Chapter 14 Finitely Repeated Games 209

14.1 Examples and Economic Applications 209


14.1.1 Three Repeated Games and a Definition 209

14.1.2 Four Economic Applications 212

14.2 Finitely Repeated Games 214

14.2.1 Some General Conclusions 218

14.3 Case Study: Treasury Bill Auctions 219

Summary 222

Exercises 222

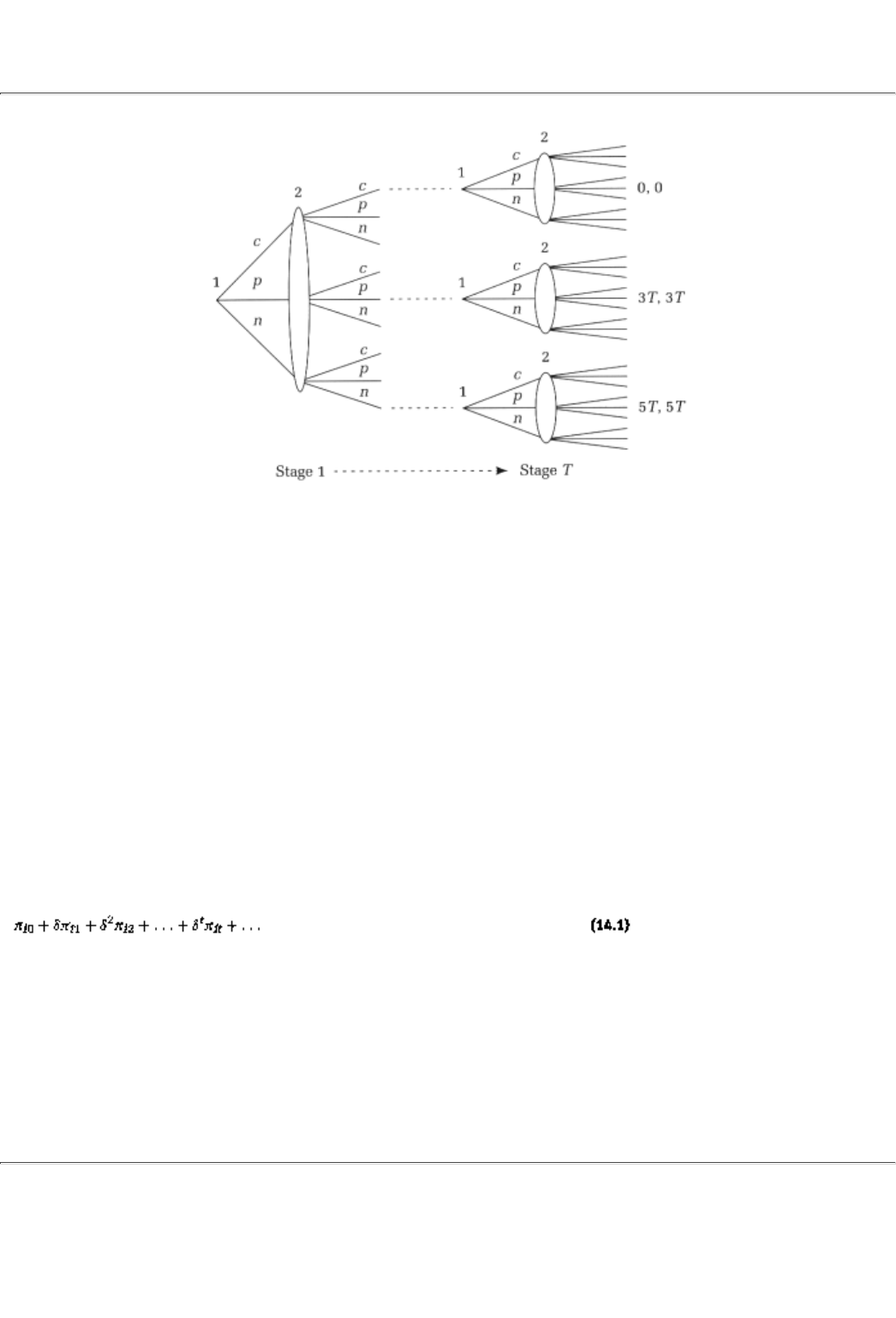

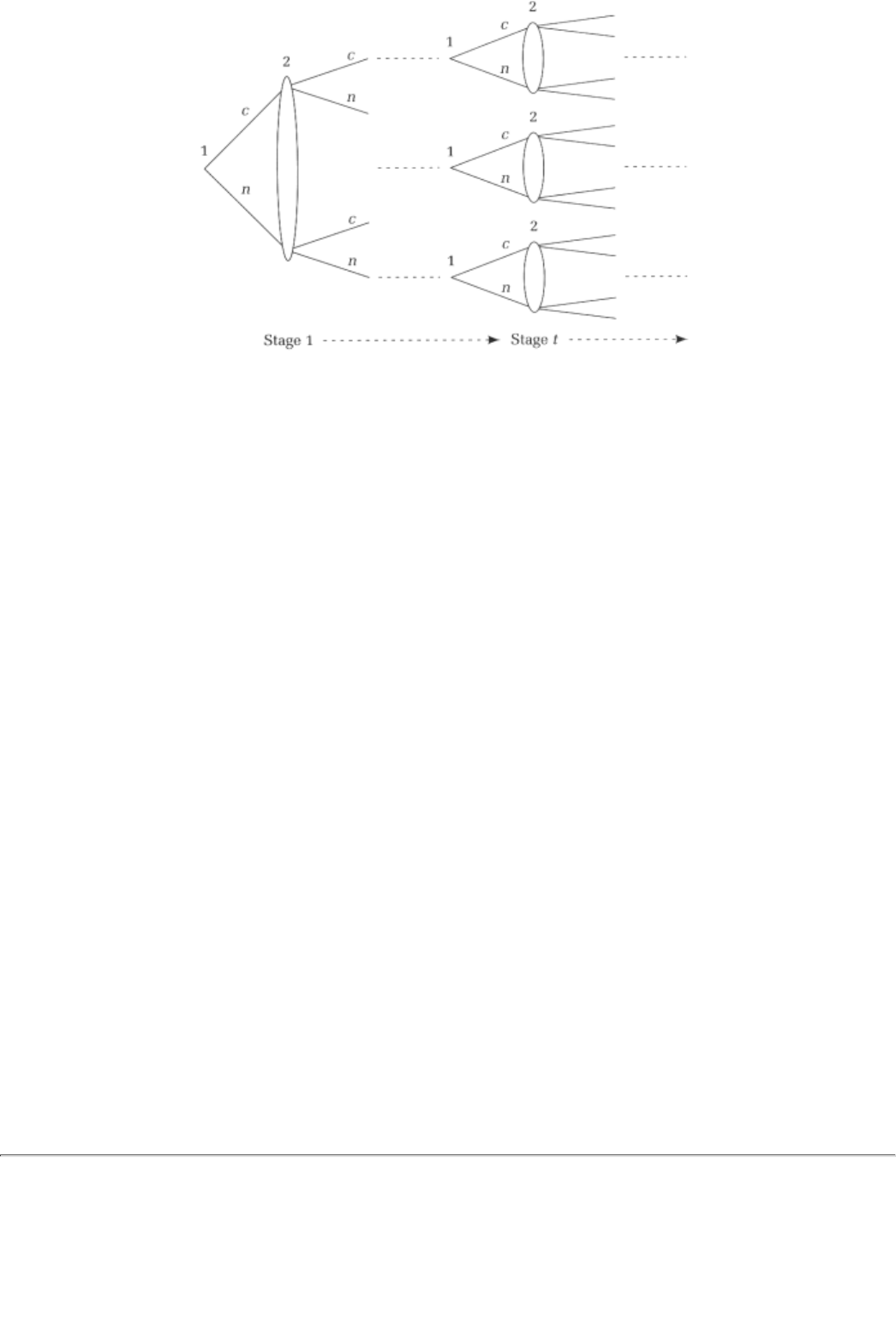

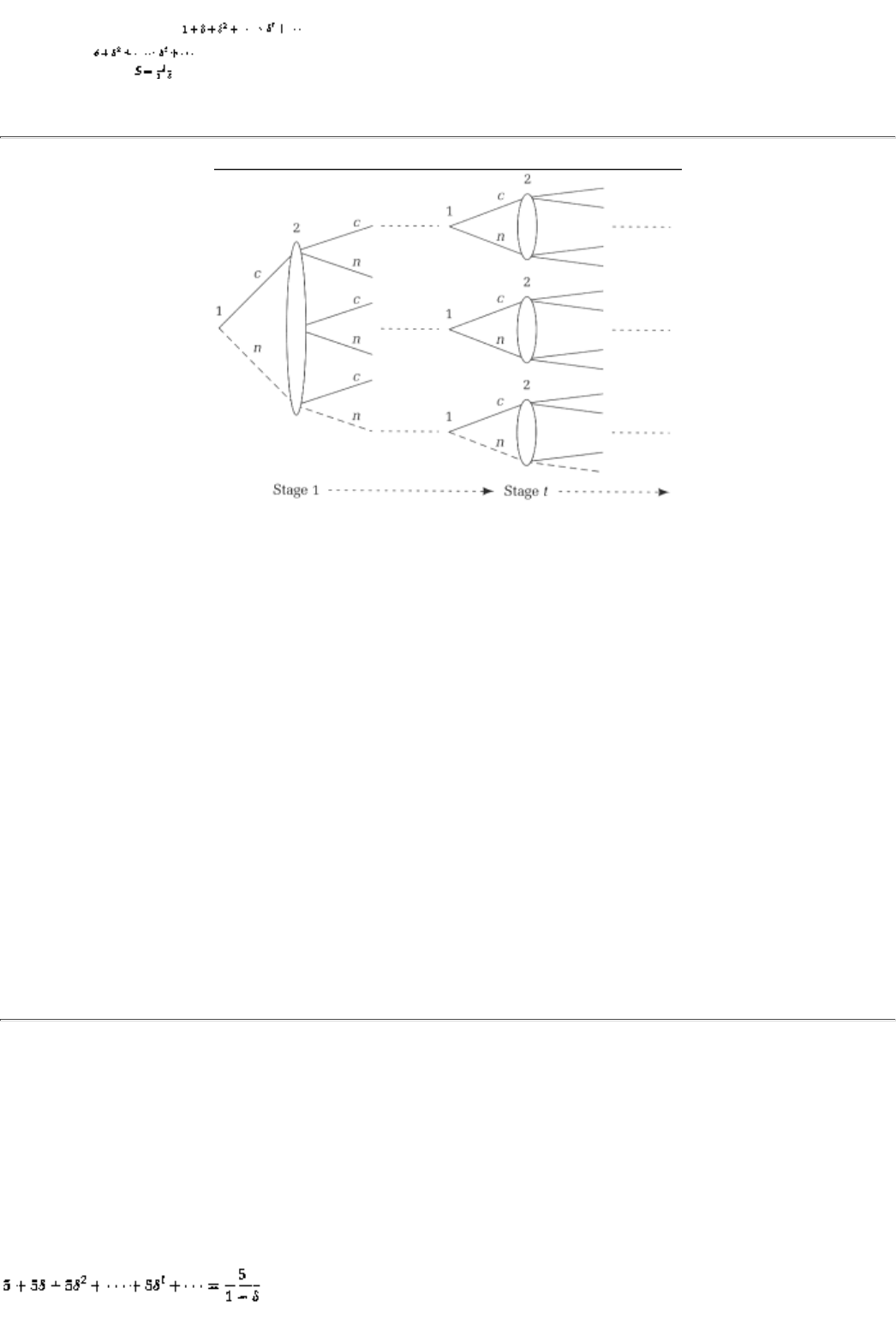

Chapter 15 Infinitely Repeated Games 227

15.1 Detour Through Discounting 227

15.2 Analysis of Example 3: Trigger Strategies and Good Behavior 229

15.3 The Folk Theorem 232

15.4 Repeated Games With Imperfect Detection 234

Summary 237

Exercises 238

Chapter 16 An Application: Competition and Collusion in the NASDAQ Stock Market 243

16.1 The Background 243

16.2 The Analysis 245

16.2.1 A Model of the NASDAQ Market 245

16.2.2 Collusion 246

16.2.3 More on Collusion 248

16.3 The Broker-Dealer Relationship 249

16.3.1 Order Preferencing 249

16.3.2 Dealers Big and Small 250

16.4 The Epilogue 251

Summary 252

Exercises 252

Chapter 17 An Application: OPEC 257

17.1 Oil: A Historical Review 257


17.1.1 Production and Price History 258

17.2 A Simple Model of the Oil Market 259

17.3 Oil Prices and the Role of OPEC 260

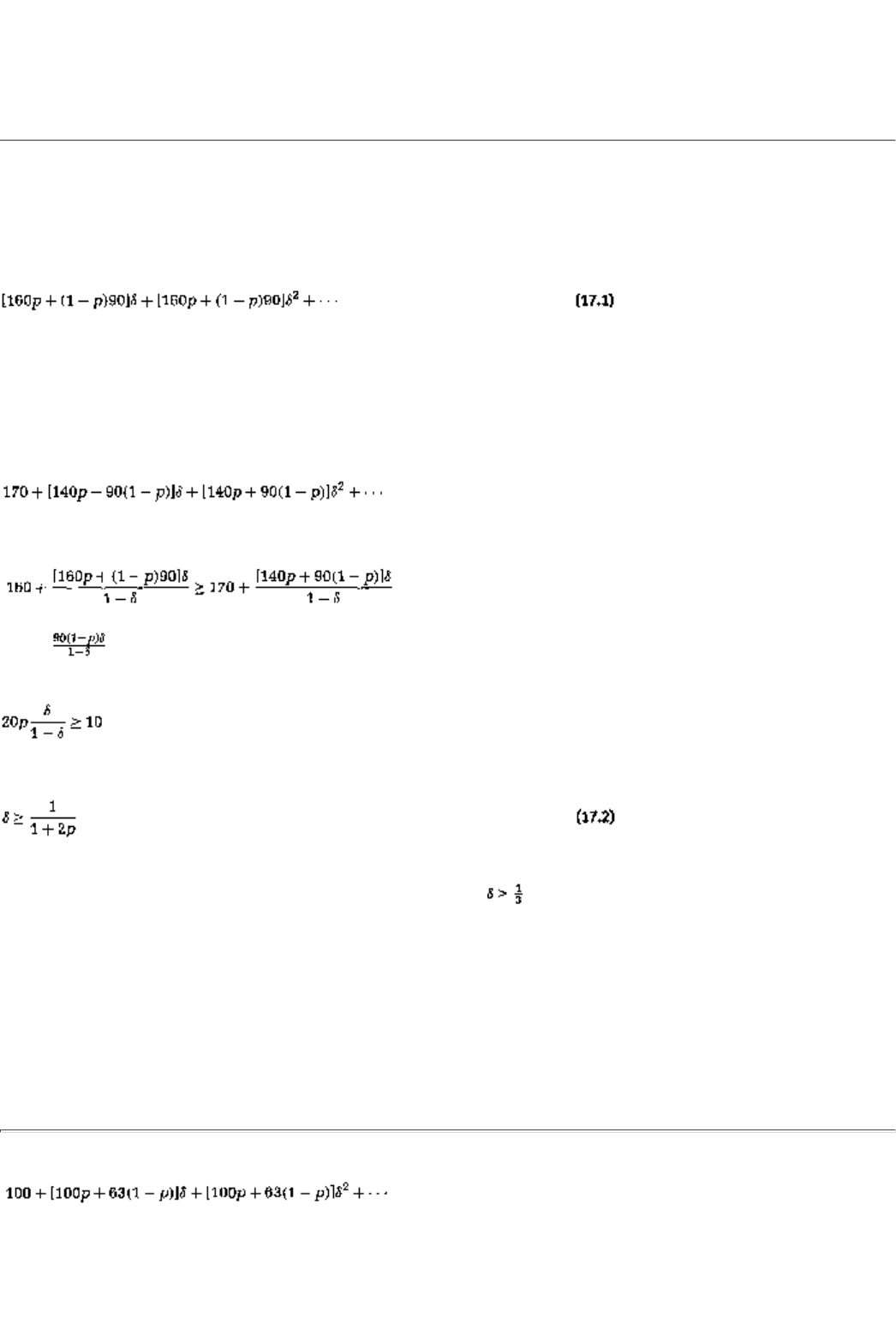

17.4 Repeated Games With Demand Uncertainty 262

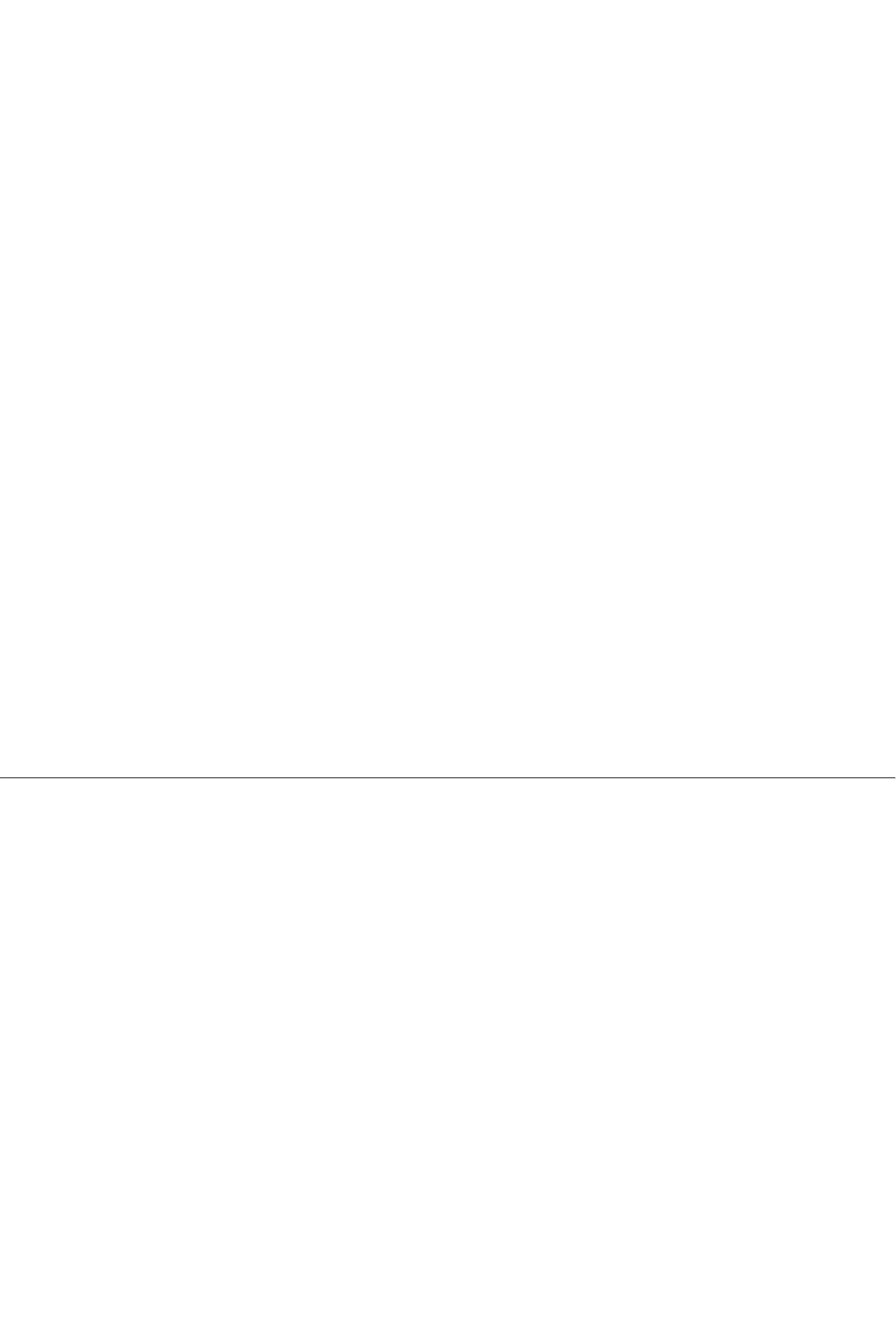

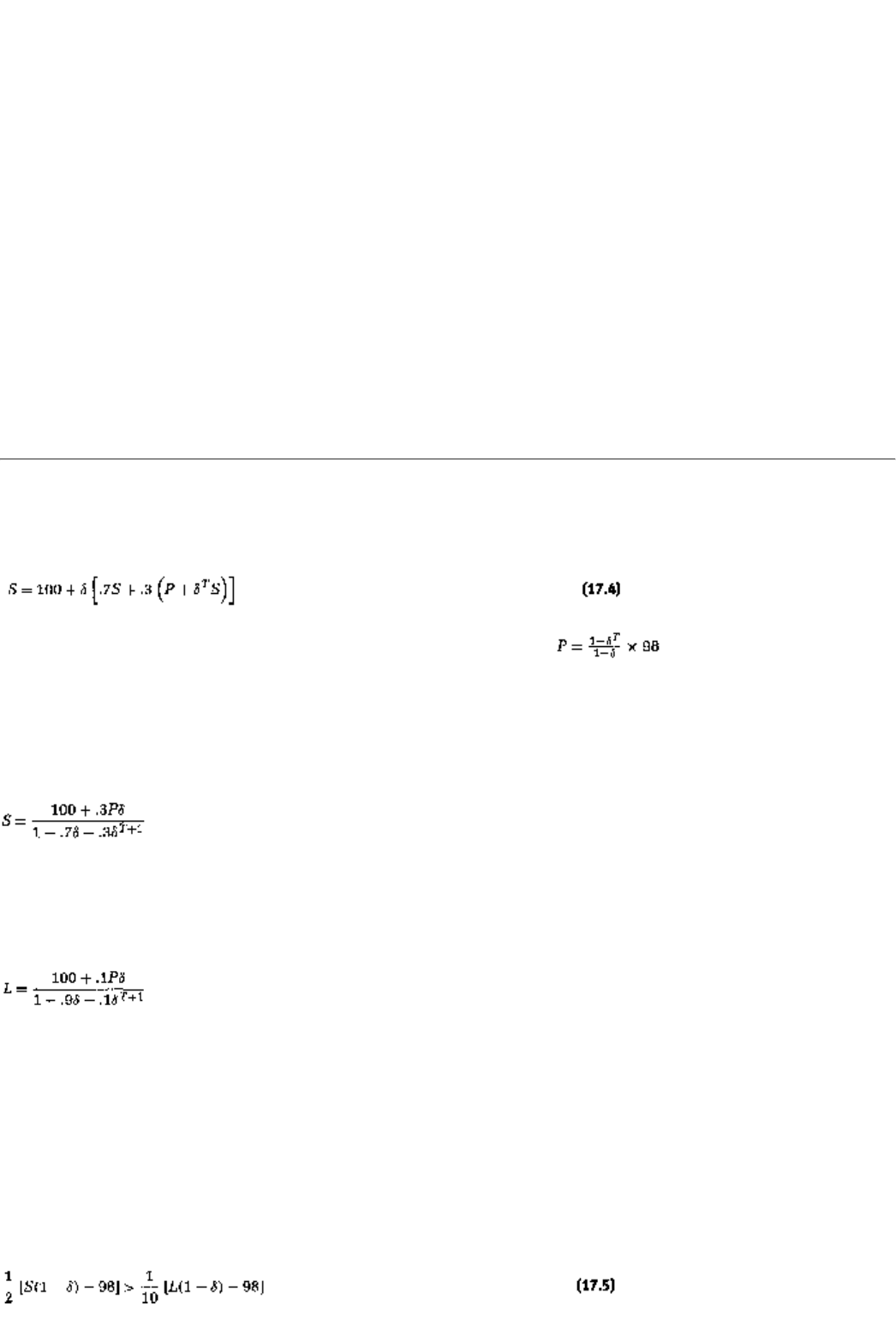

17.5 Unobserved Quota Violations 266

17.6 Some Further Comments 269

Summary 270

Exercises 271

Chapter 18 Dynamic Games With An Application to the Commons Problem 275

18.1 Dynamic Games: A Prologue 275

18.2 The Commons Problem: A Model 276

18.3 Sustainable Development and Social Optimum 278

18.3.1 A Computation of the Social Optimum 278

18.3.2 An Explanation of the Social Optimum 281

18.4 Achievable Development and Game Equilibrium 282

18.4.1 A Computation of the Game Equilibrium 282

18.4.2 An Explanation of the Equilibrium 284

18.4.3 A Comparison of the Socially Optimal and the Equilibrium Outcomes 285

18.5 Dynamic Games: An Epilogue 286

Summary 287

Exercises 288

Part Four Asymmetric Information Games: Theory and Applications 291

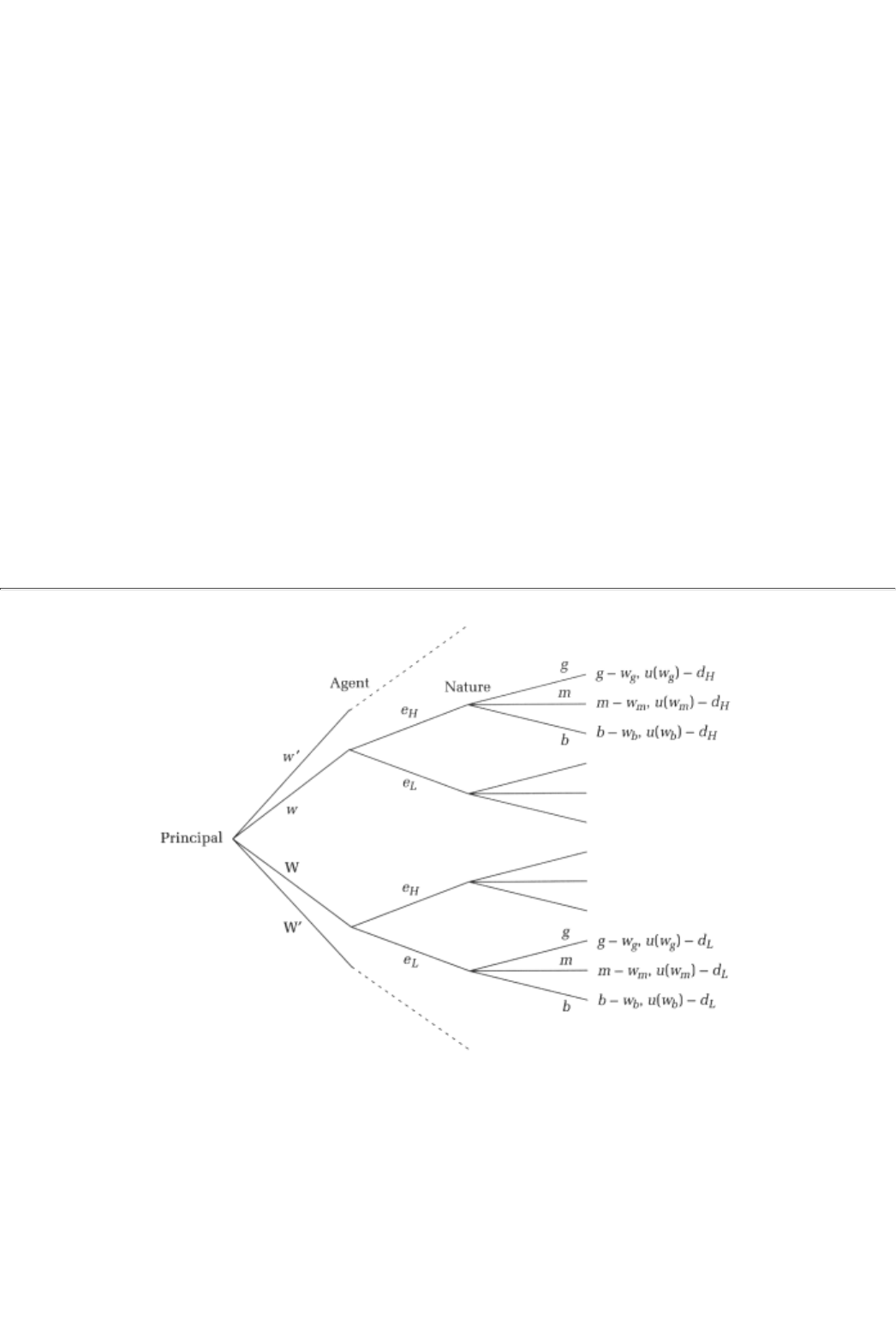

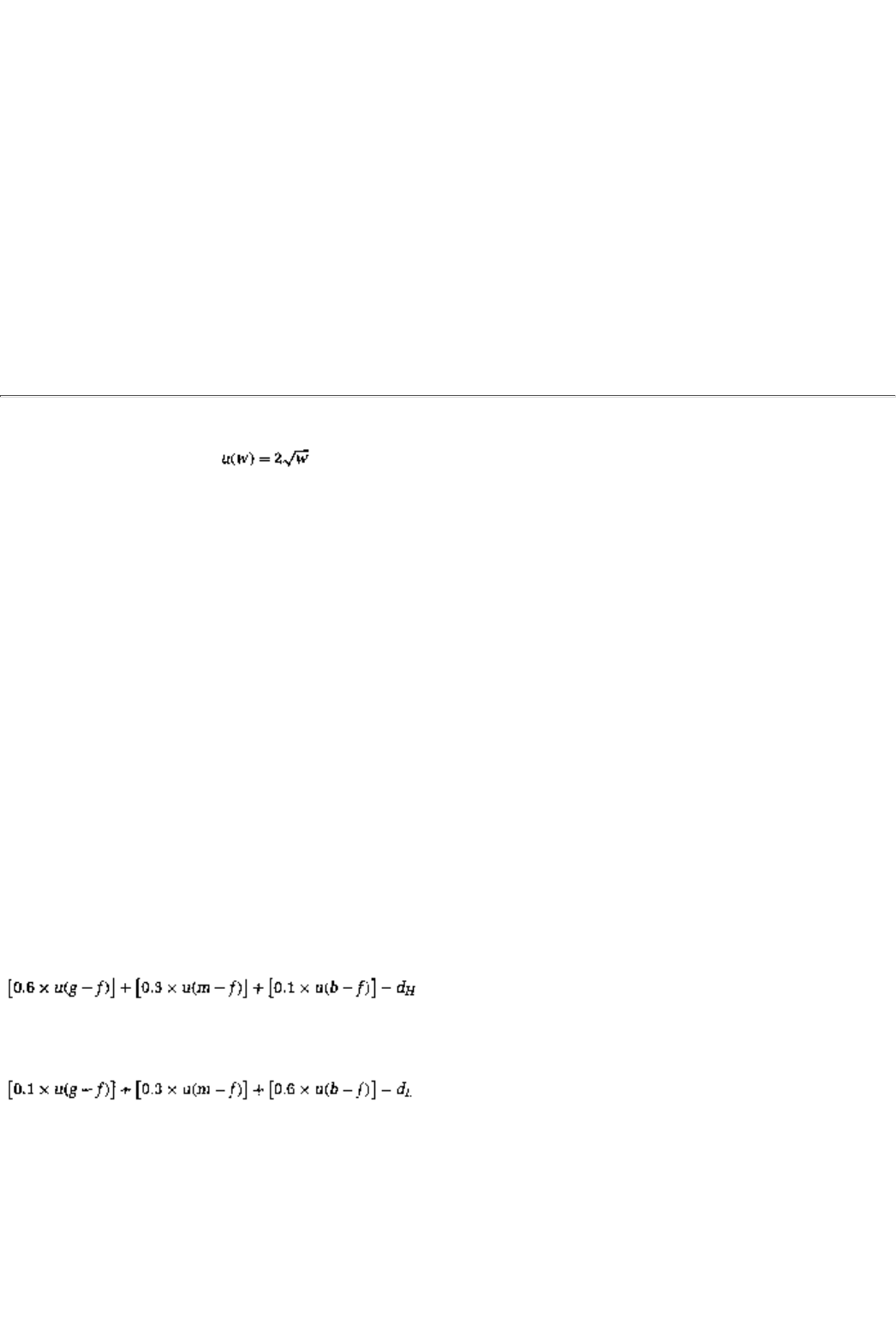

Chapter 19 Moral Hazard and Incentives Theory 293

19.1 Moral Hazard: Examples and a Definition 293

19.2 A Principal-Agent Model 295

19.2.1 Some Examples of Incentive Schemes 297

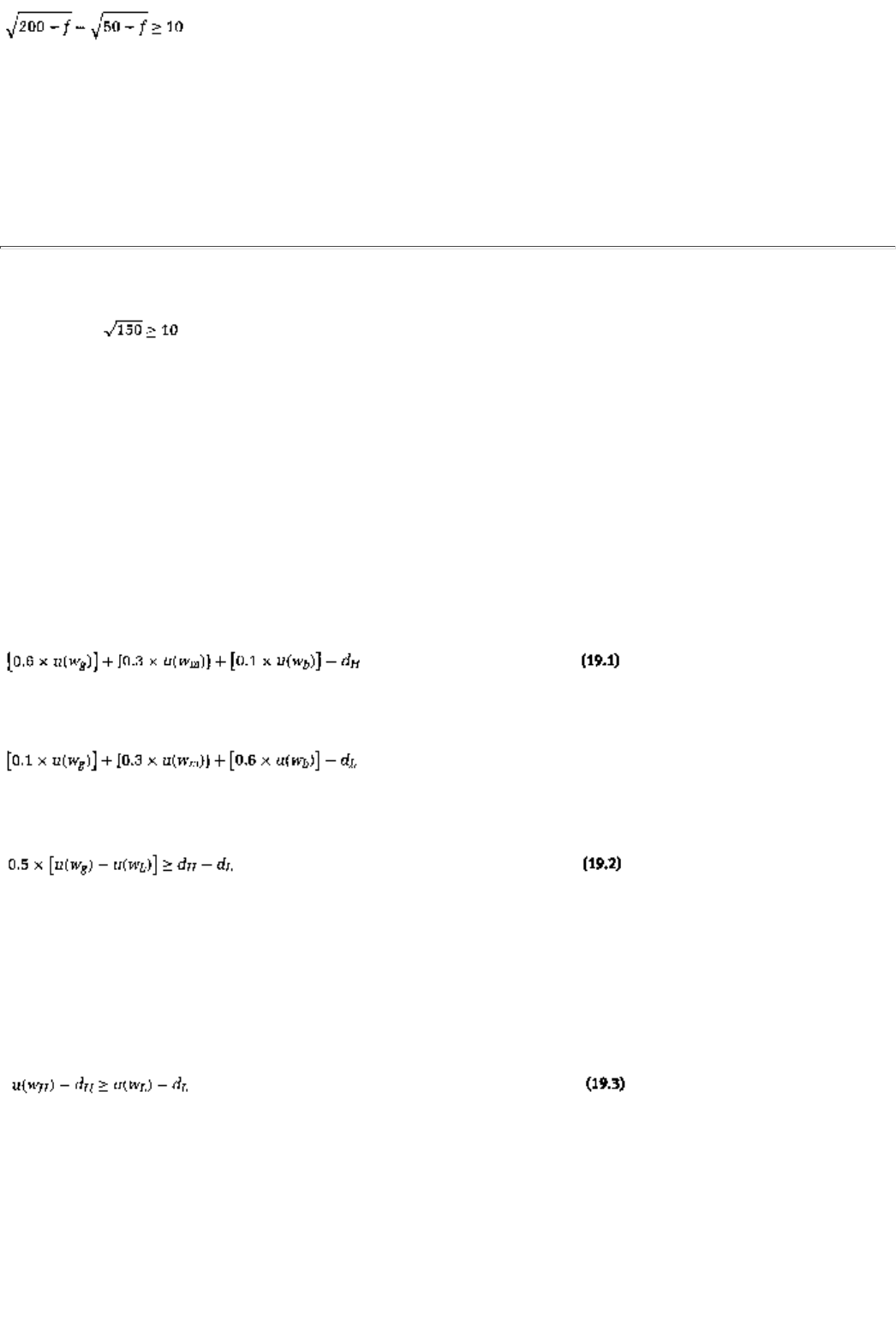

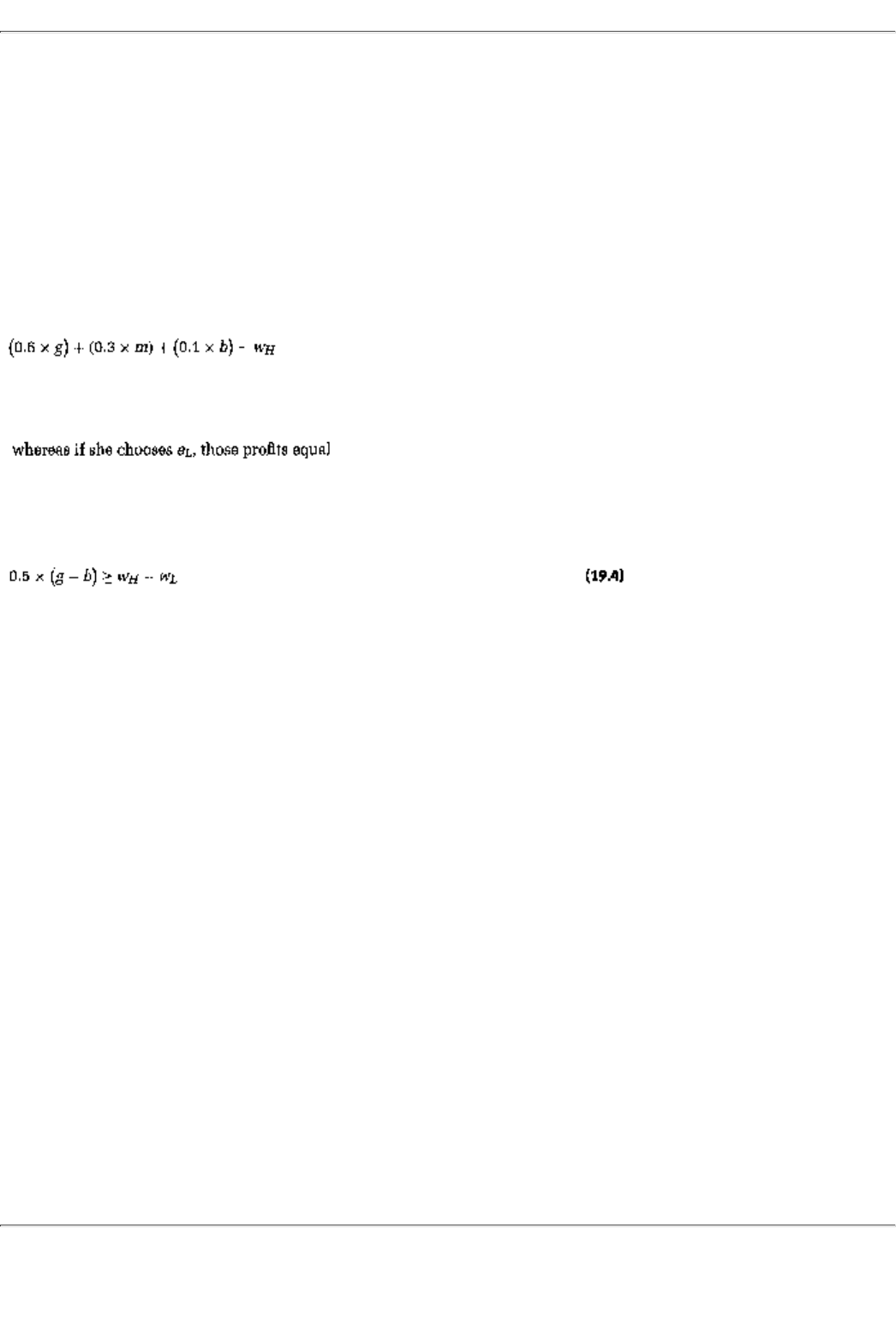

19.3 The Optimal Incentive Scheme 299

19.3.1 No Moral Hazard 299


19.3.2 Moral Hazard 299

19.4 Some General Conclusions 301

19.4.1 Extensions and Generalizations 303

19.5 Case Study: Compensating Primary Care Physicians in an HMO 304

Summary 305

Exercises 306

Chapter 20 Games with Incomplete Information 309

20.1 Some Examples 309

20.1.1 Some Analysis of the Examples 312

20.2 A Complete Analysis of Example 4 313

20.2.1 Bayes-Nash Equilibrium 313

20.2.2 Pure-Strategy Bayes-Nash Equilibria 315

20.2.3 Mixed-Strategy Bayes-Nash Equilibria 316

20.3 More General Considerations 318

20.3.1 A Modified Example 318

20.3.2 A General Framework 320

20.4 Dominance-Based Solution Concepts 321

20.5 Case Study: Final Jeopardy 323

Summary 326

Exercises 326

Chapter 21 An Application: Incomplete Information in a Cournot Duopoly 331

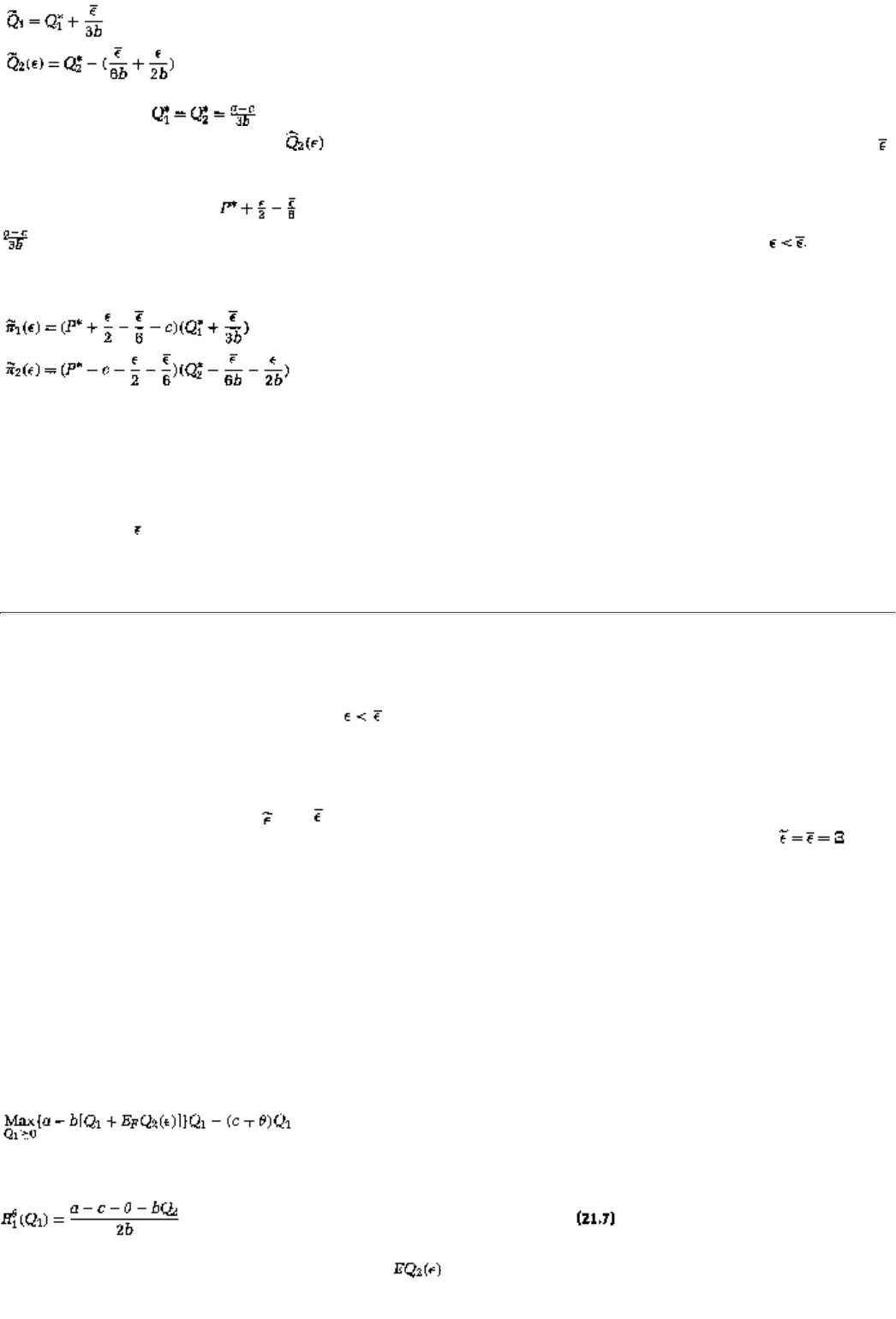

21.1 A Model and its Equilibrium 331

21.1.1 The Basic Model 331

21.1.2 Bayes-Nash Equilibrium 332

21.2 The Complete Information Solution 336

21.3 Revealing Costs to a Rival 338

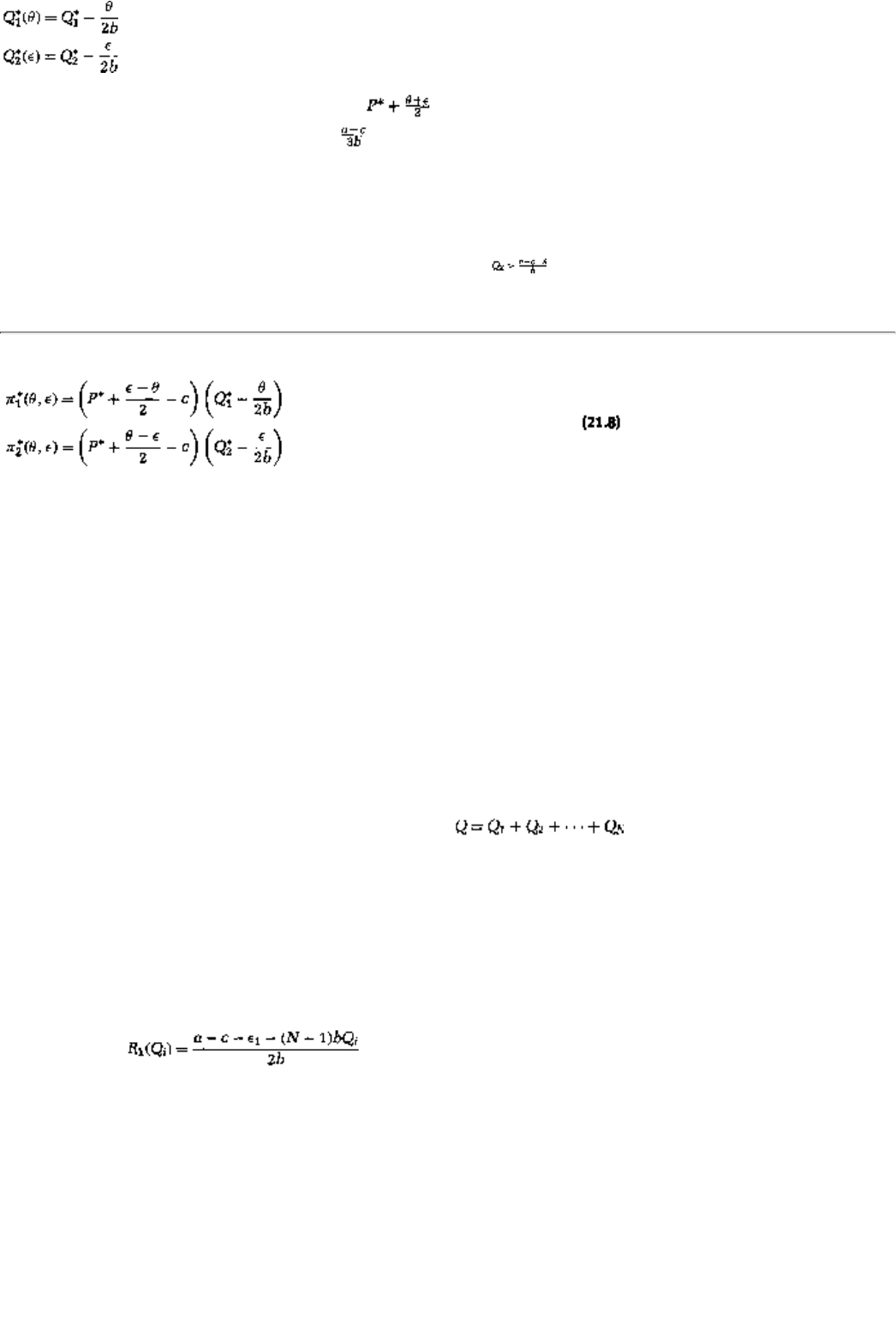

21.4 Two-Sided Incompleteness of Information 340

21.5 Generalizations and Extensions 341

21.5.1 Oligopoly 341

page_xvi

Page XVII

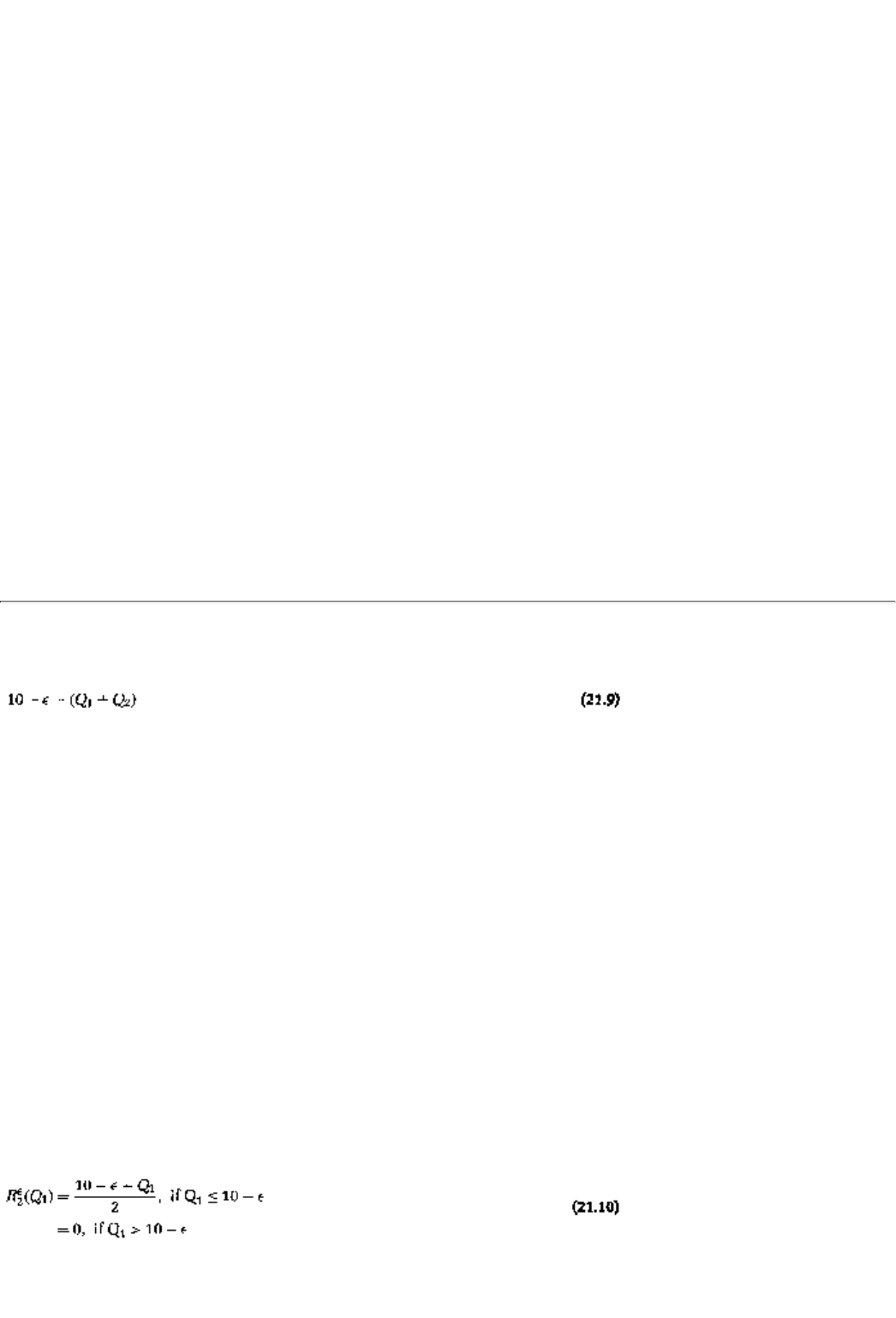

21.5.2 Demand Uncertainty 342

Summary 343

Exercises 343

Chapter 22 Mechanism Design, the Revelation Principle, and Sales to an Unknown Buyer 349

22.1 Mechanism Design: The Economic Context 349

22.2 A Simple Example: Selling to a Buyer With an Unknown Valuation 351

22.2.1 Known Passion 351

22.2.2 Unknown Passion 352

22.3 Mechanism Design and the Revelation Principle 356

22.3.1 Single Player 356

22.3.2 Many Players 357

22.4 A More General Example: Selling Variable Amounts 358

22.4.1 Known Type 359

22.4.2 Unknown Type 359

Summary 362

Exercises 362

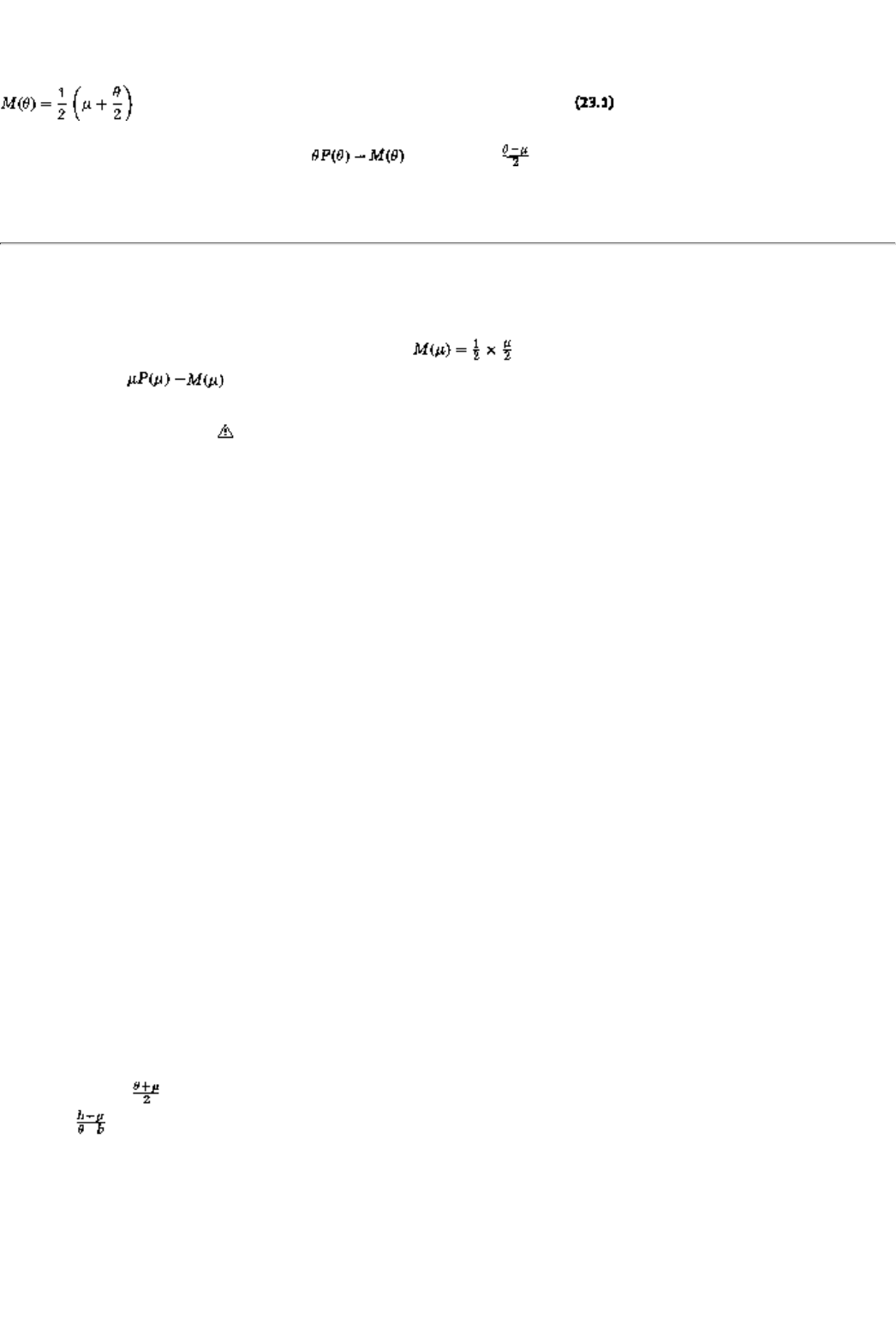

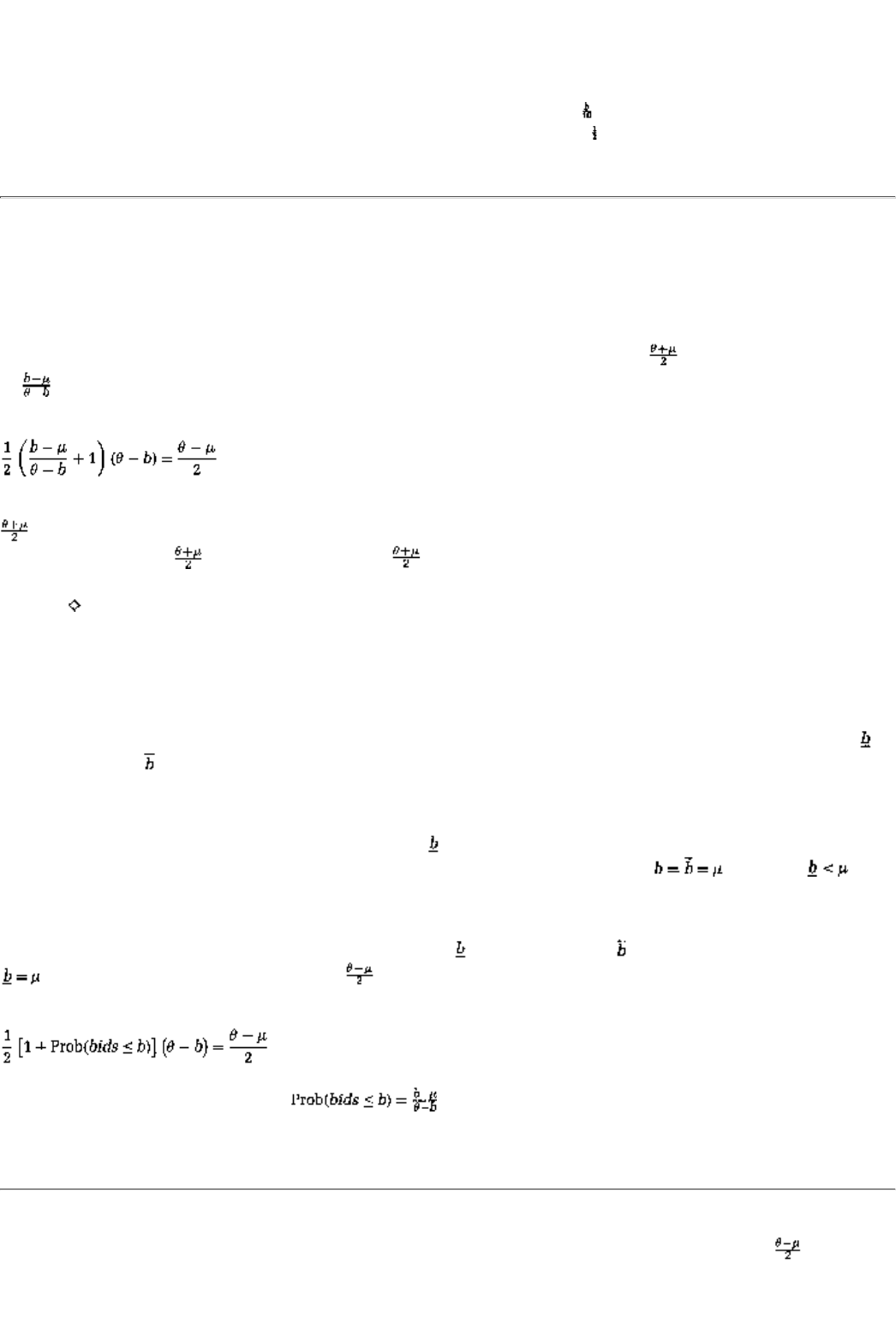

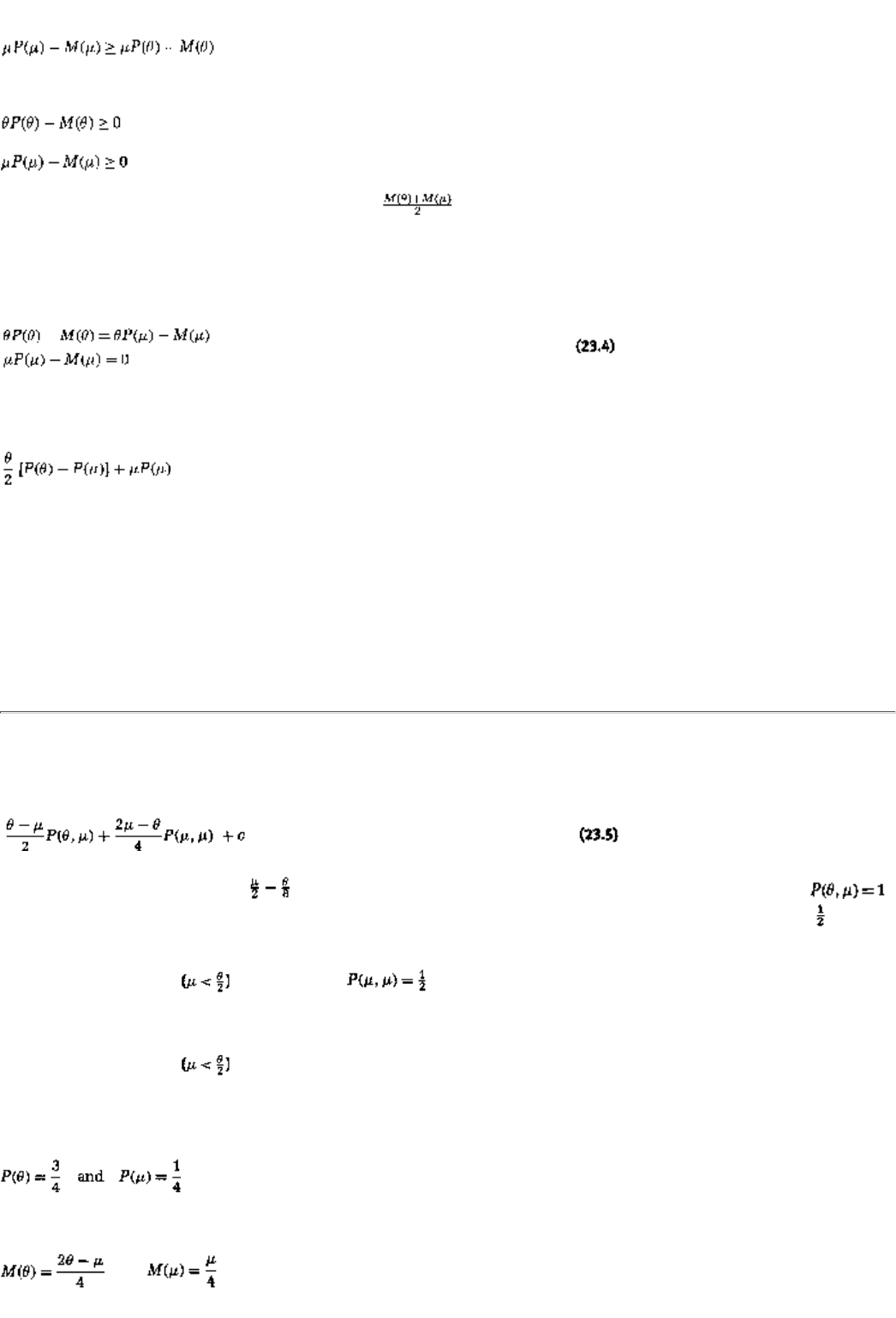

Chapter 23 An Application: Auctions 367

23.1 Background and Examples 367

23.1.1 Basic Model 369

23.2 Second-Price Auctions 369

23.3 First-Price Auctions 371

23.4 Optimal Auctions 373

23.4.1 How Well Do the First- and Second-Price Auctions Do? 375

23.5 Final Remarks 376

Summary 377

Exercises 378

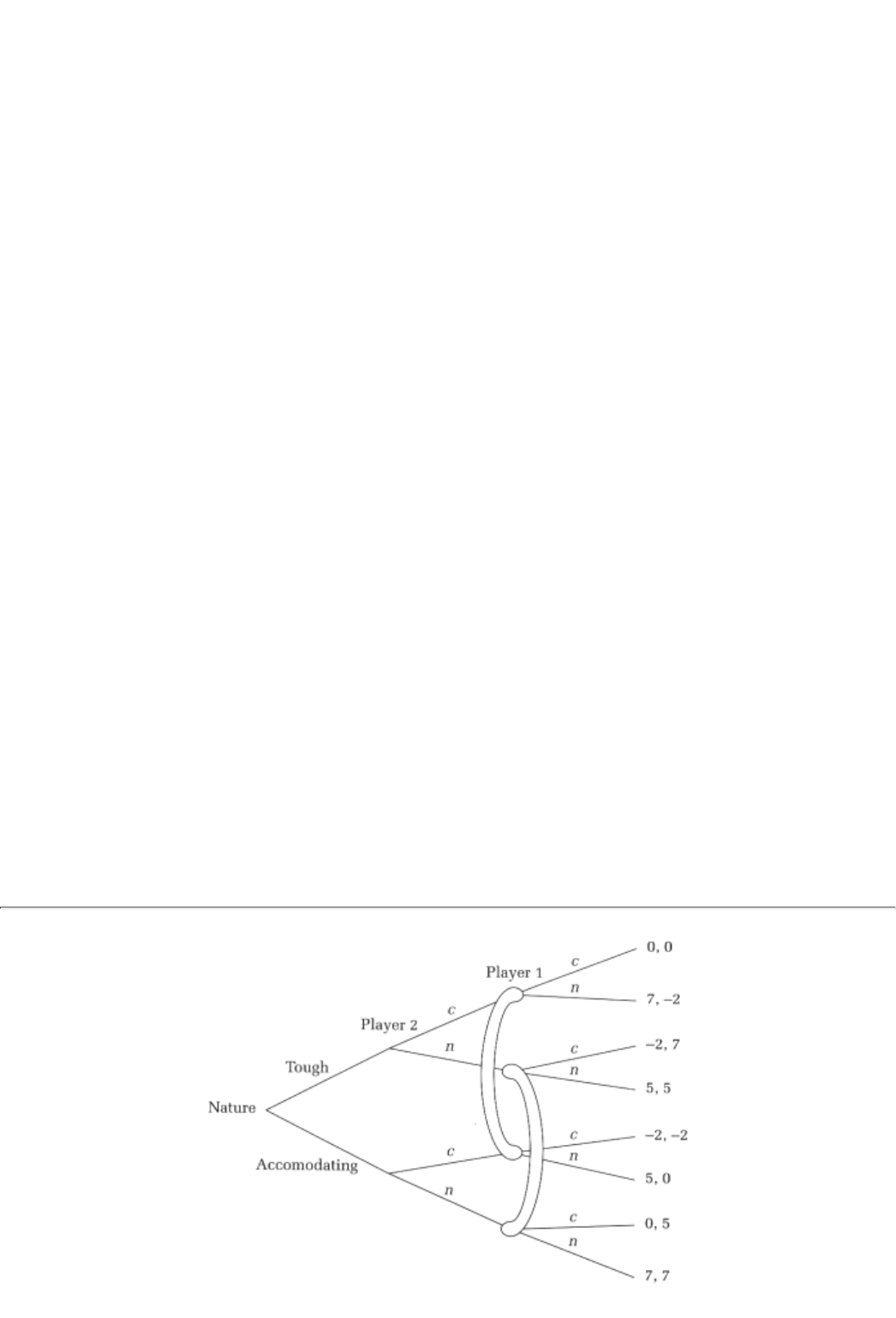

Chapter 24 Signaling Games and the Lemons Problem 383

24.1 Motivation and Two Examples 383

24.1.1 A First Analysis of the Examples 385

page_xvii

Page XVIII

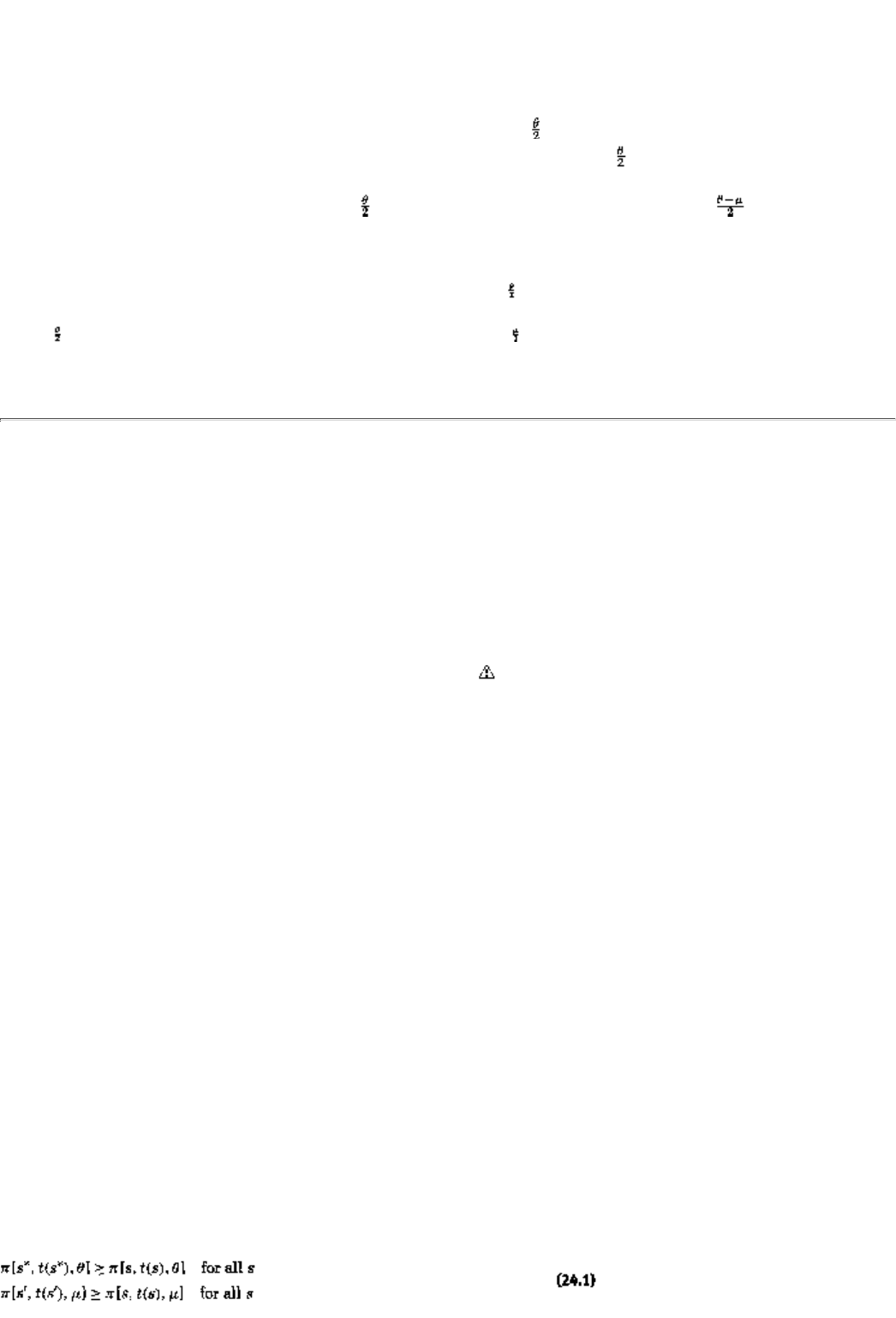

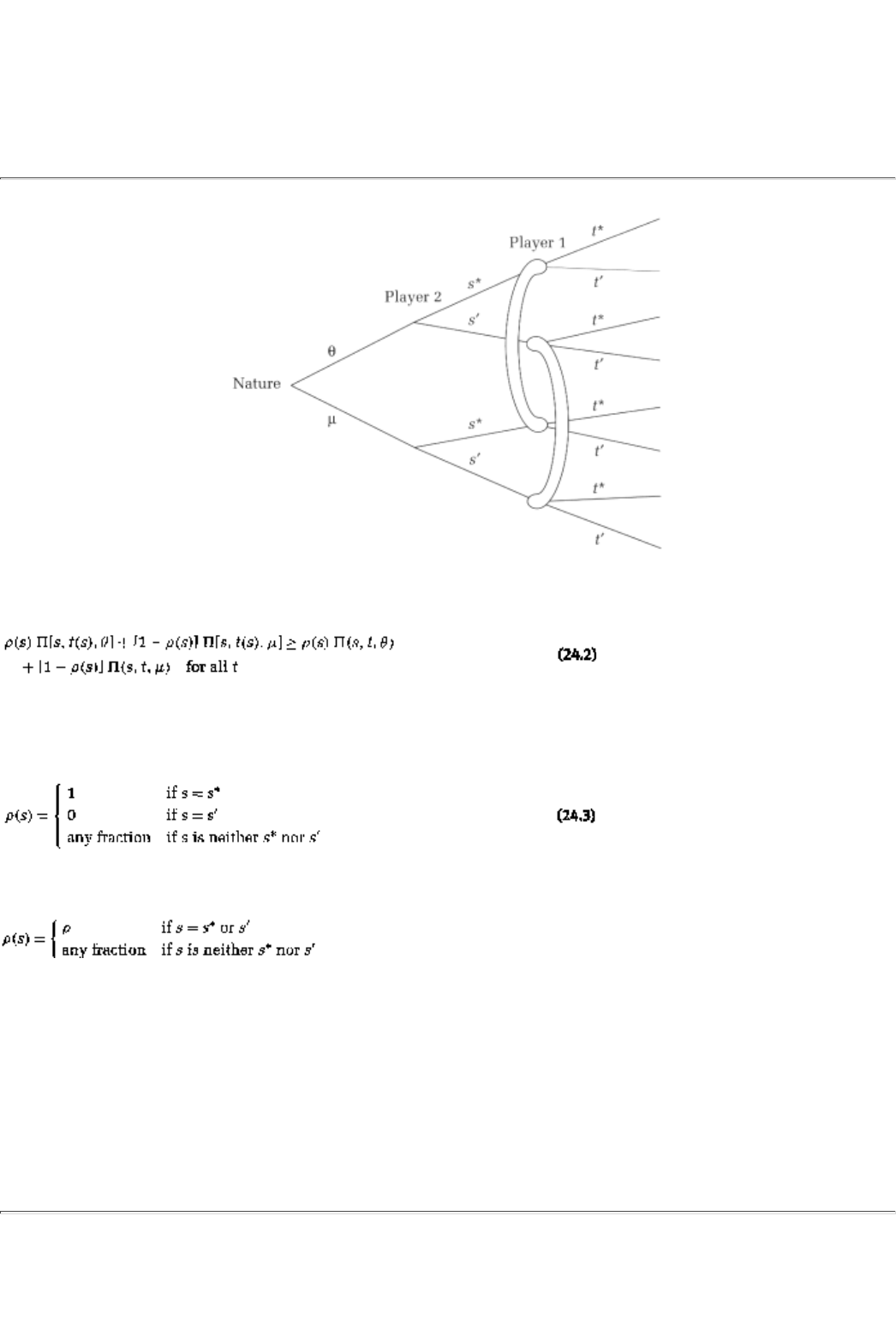

24.2 A Definition, an Equilibrium Concept, and Examples 387

24.2.1 Definition 387

24.2.2 Perfect Bayesian Equilibrium 387

24.2.3 A Further Analysis of the Examples 389

24.3 Signaling Product Quality 391

24.3.1 The Bad Can Drive Out the Good 391

24.3.2 Good Can Signal Quality? 392

24.4 Case Study: Used Cars, A Market for Lemons? 394

24.5 Concluding Remarks 395

Summary 396

Exercises 396

Part Five Foundations 401

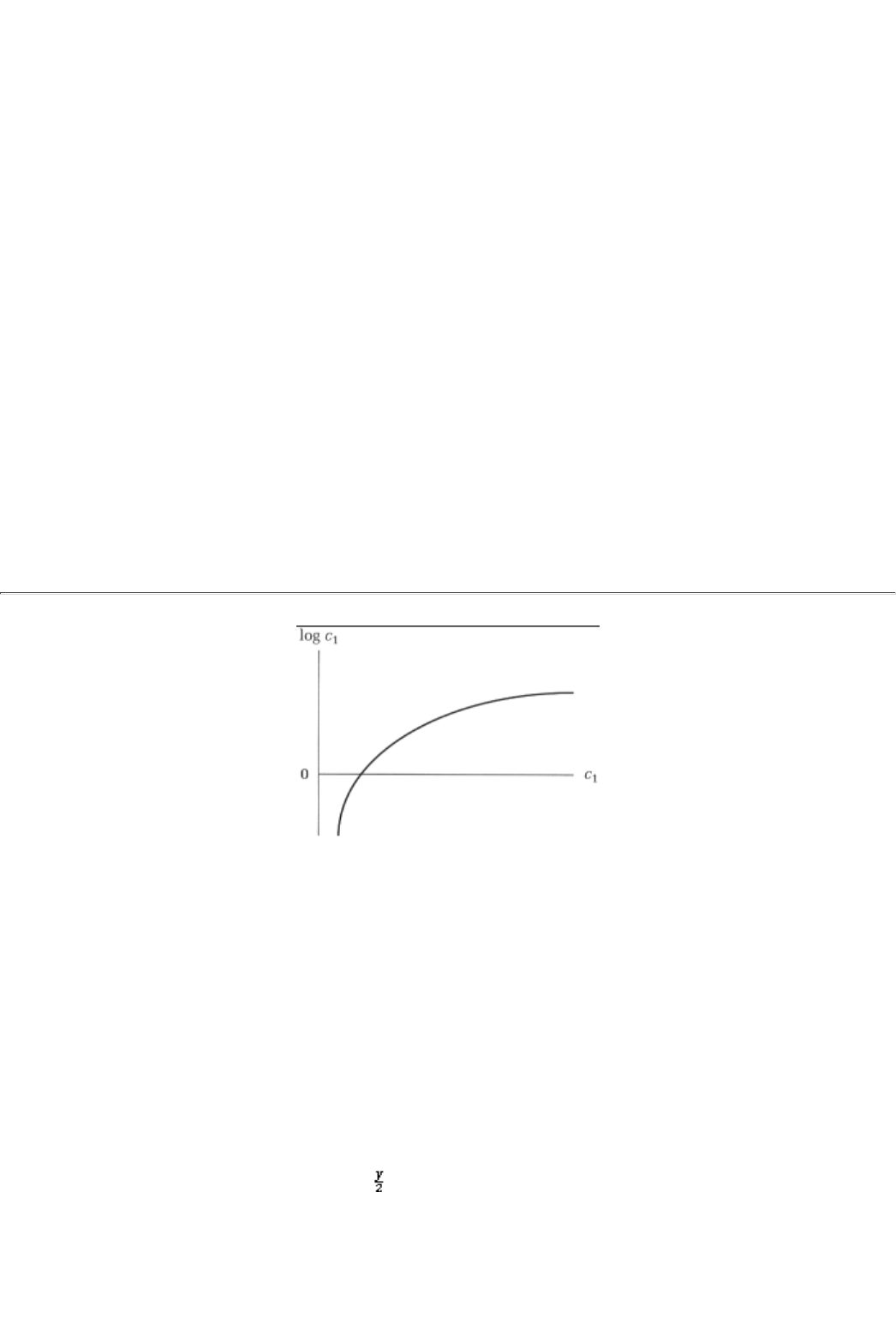

Chapter 25 Calculus and Optimization 403

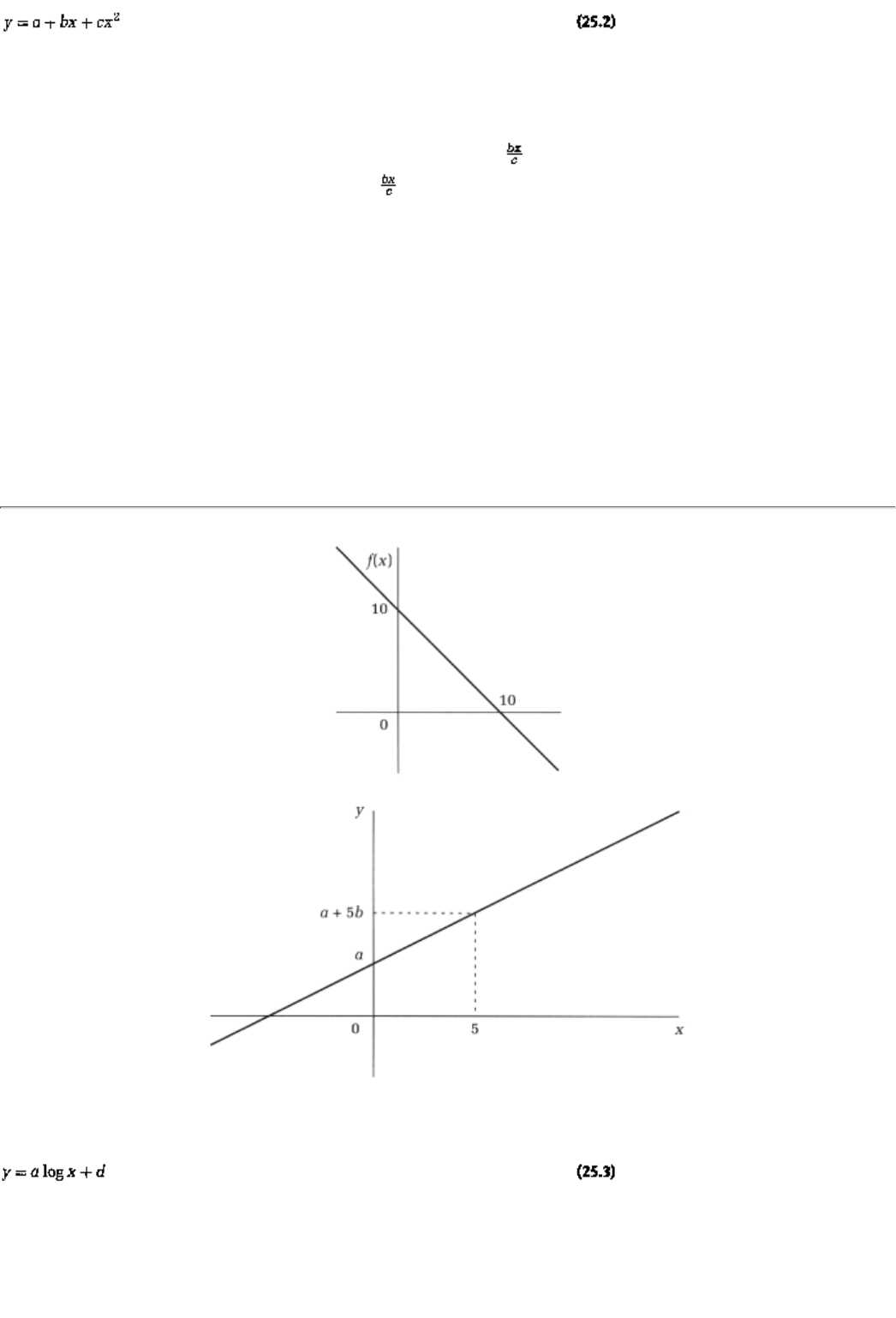

25.1 A Calculus Primer 403

25.1.1 Functions 404

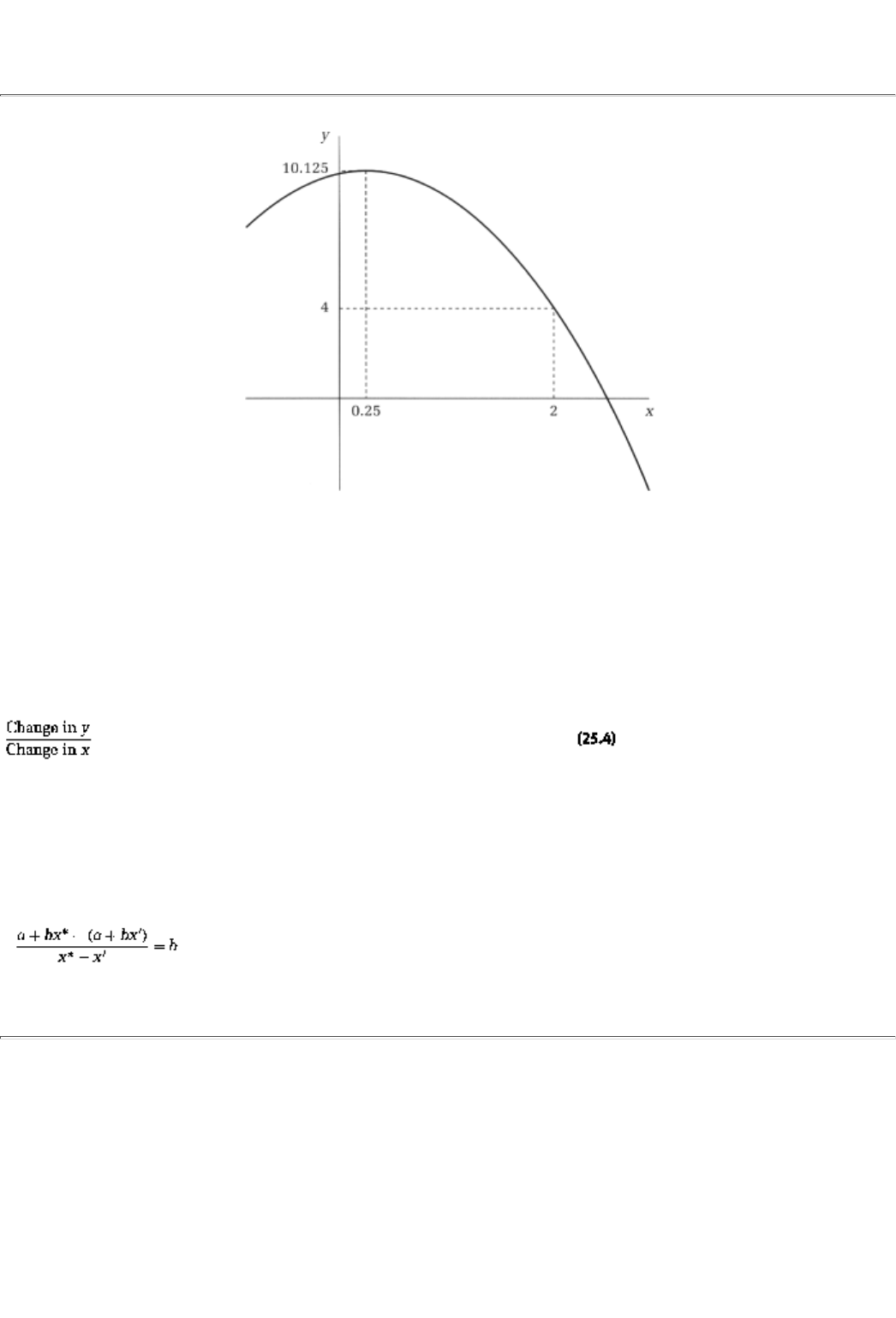

25.1.2 Slopes 405

25.1.3 Some Formulas 407

25.1.4 Concave Functions 408

25.2 An Optimization Theory Primer 409

25.2.1 Necessary Conditions 409

25.2.2 Sufficient Conditions 410

25.2.3 Feasibility Constraints 411

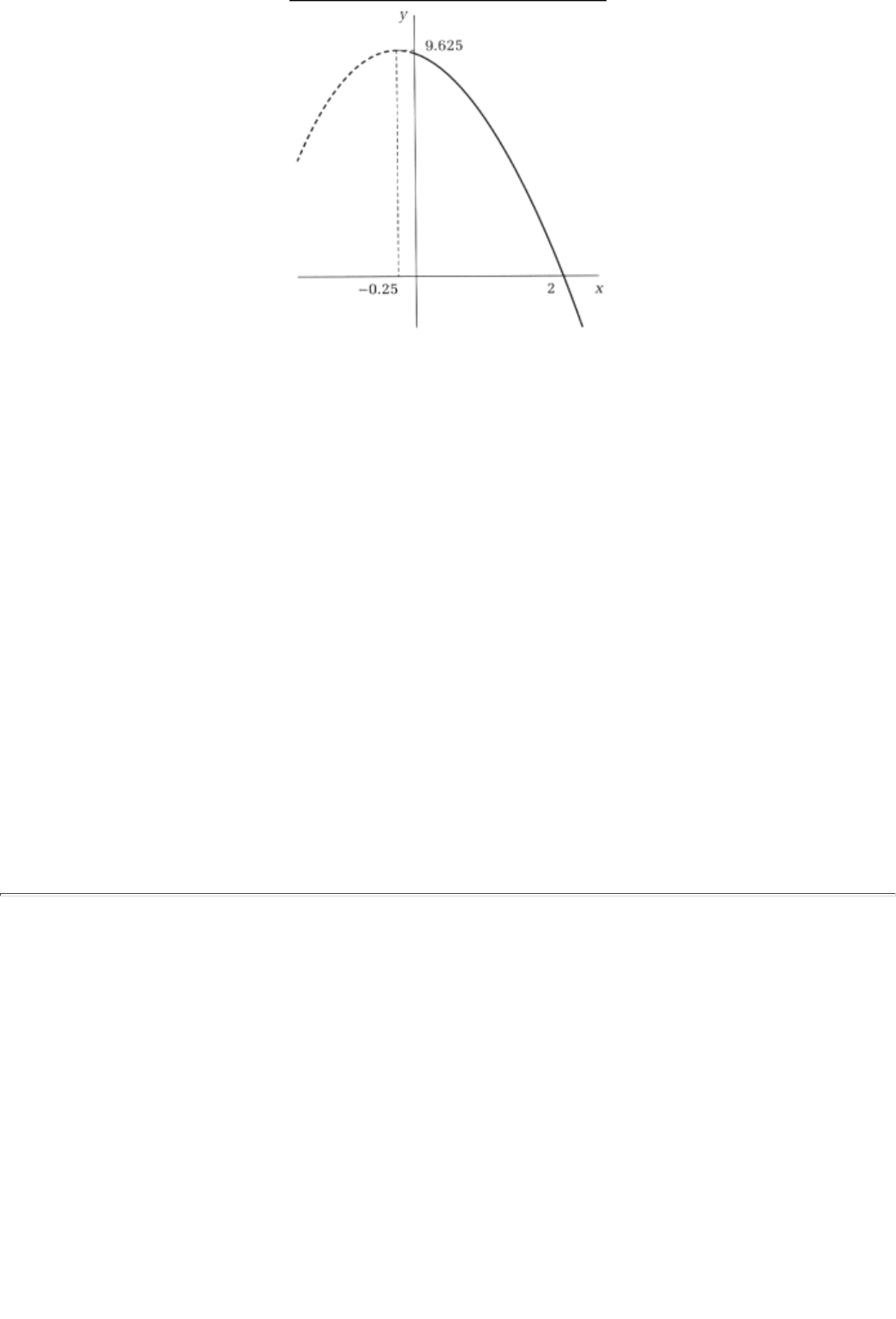

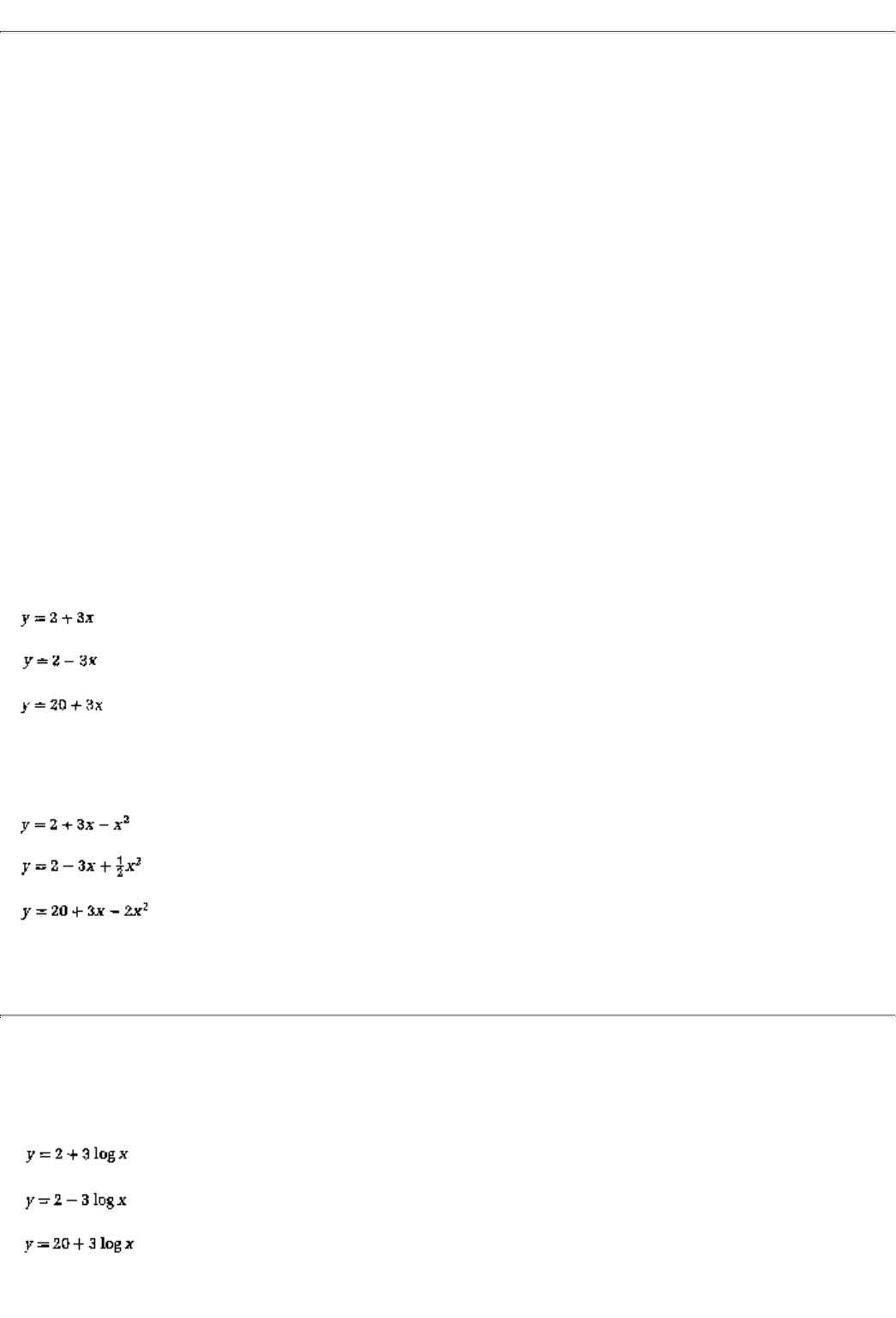

25.2.4 Quadratic and Log Functions 413

Summary 414

Exercises 415

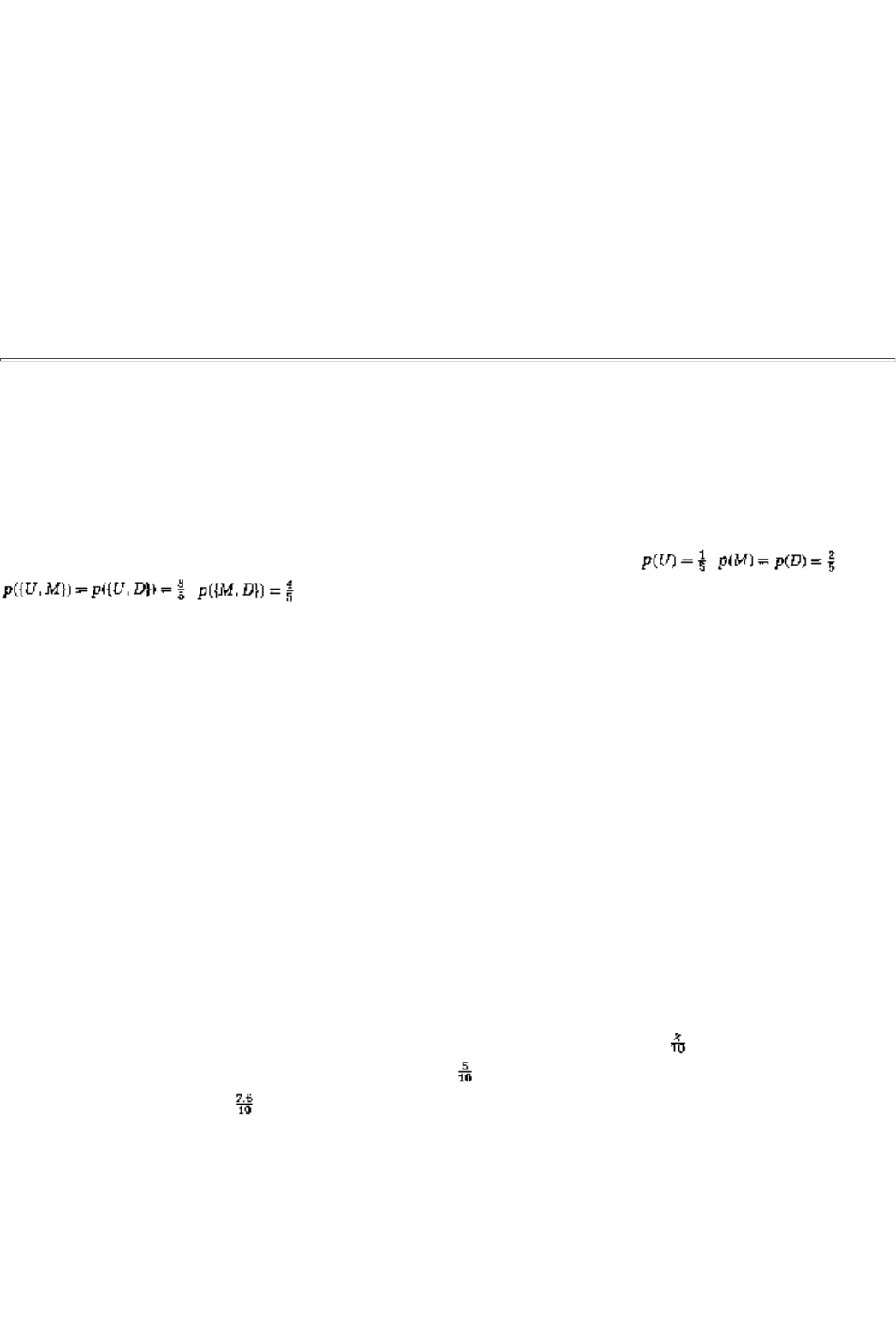

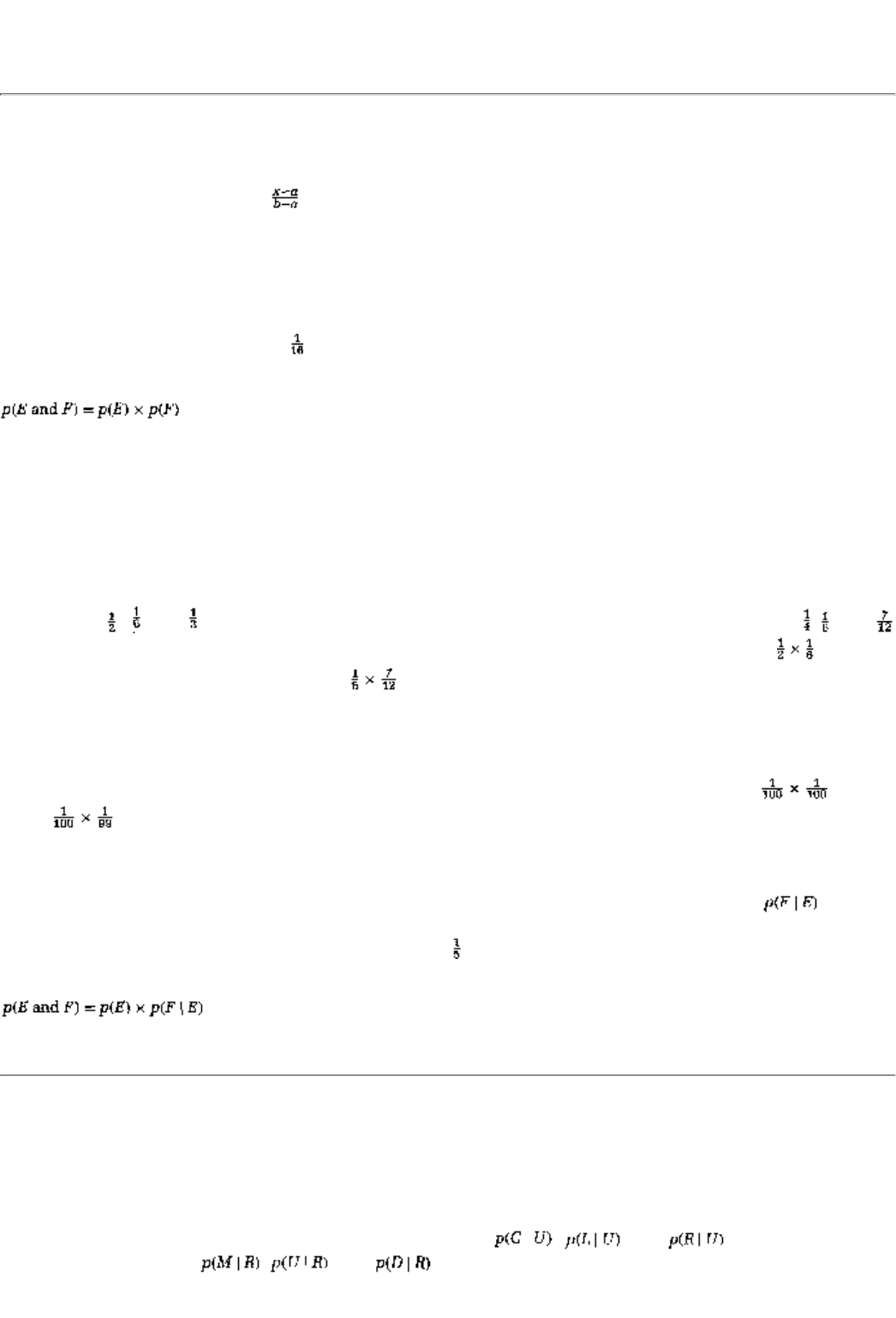

Chapter 26 Probability and Expectation 421

26.1 Probability 421

26.1.1 Independence and Conditional Probability 425

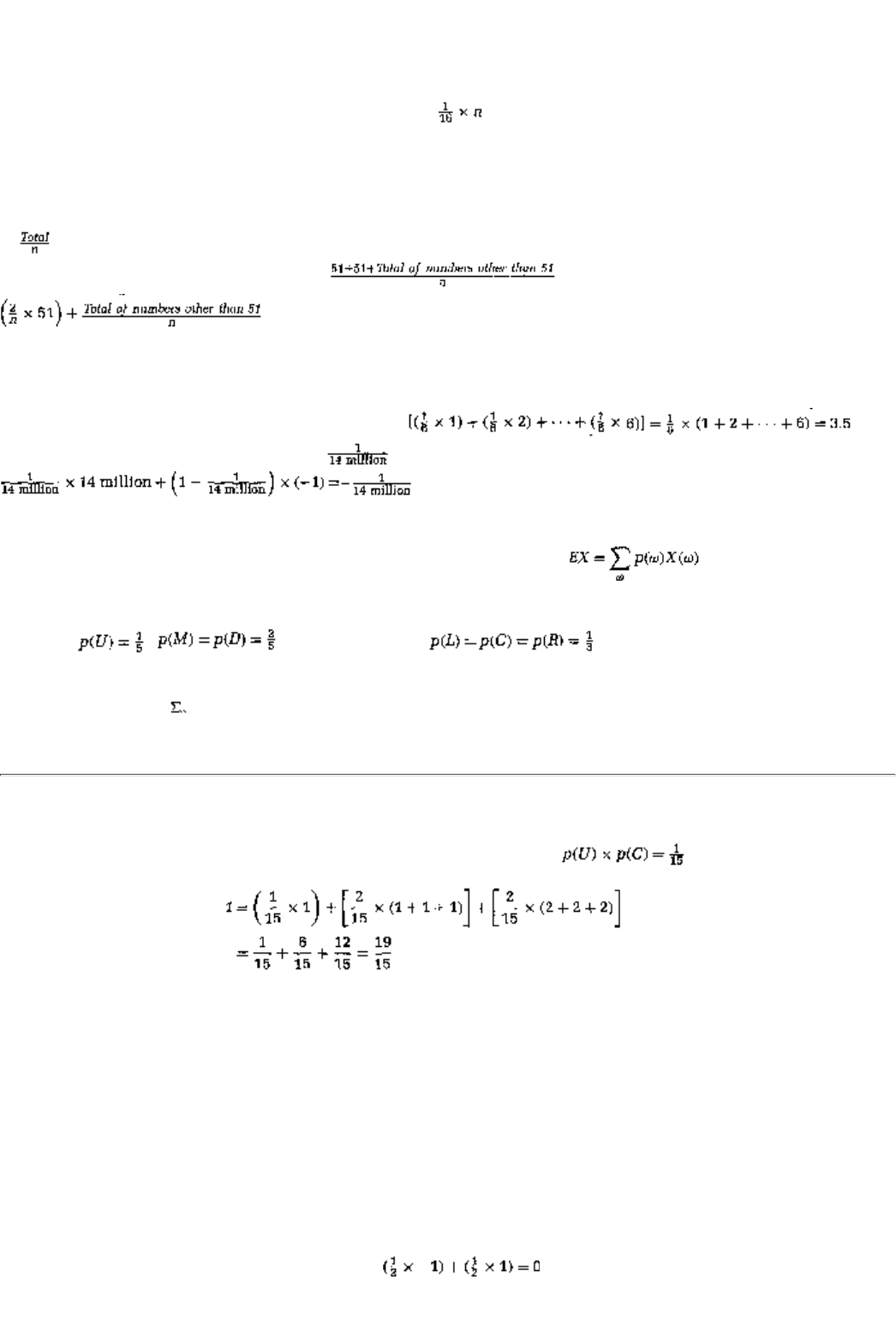

26.2 Random Variables and Expectation 426

26.2.1 Conditional Expectation 427


Summary 428

Exercises 428

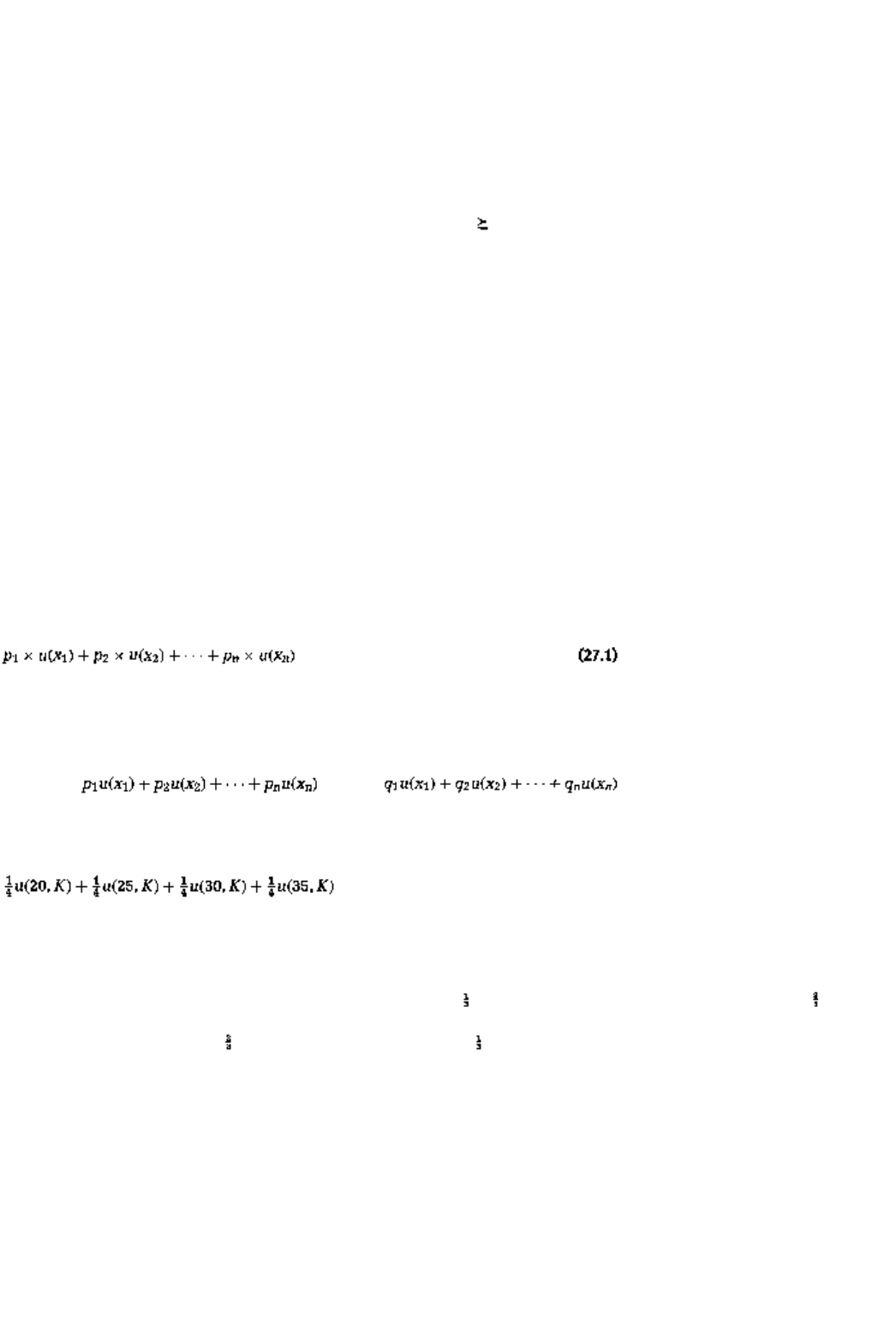

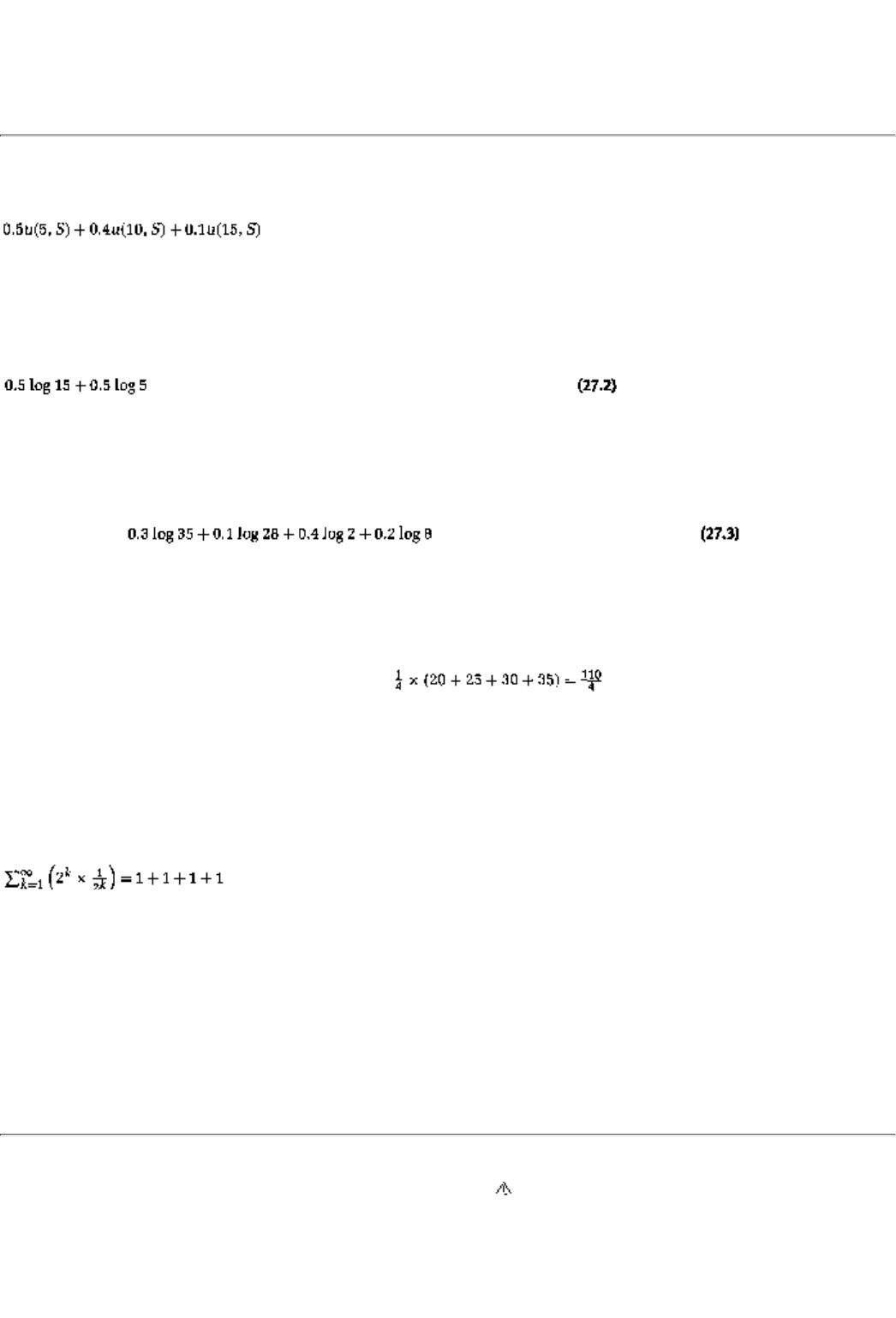

Chapter 27 Utility and Expected Utility 433

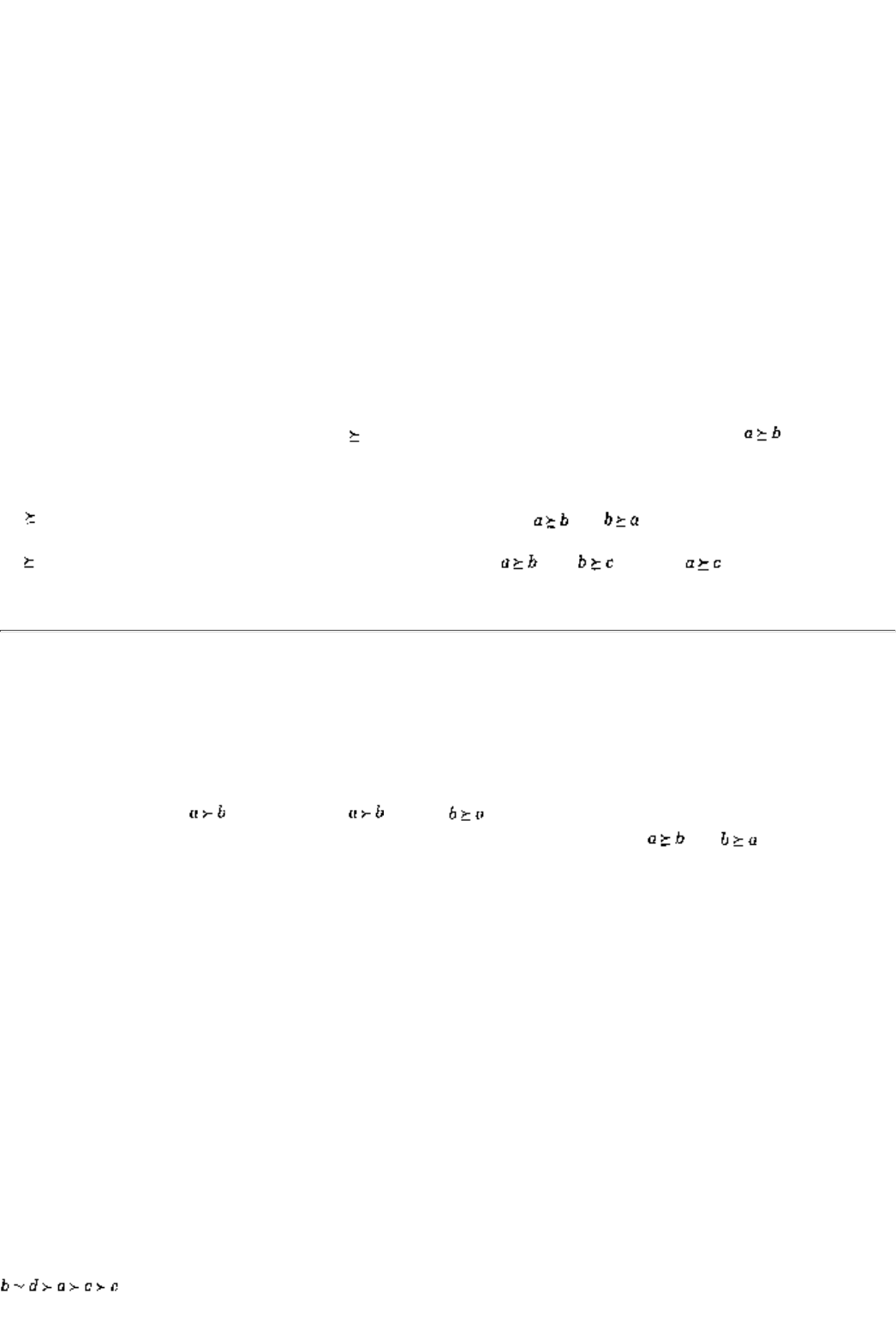

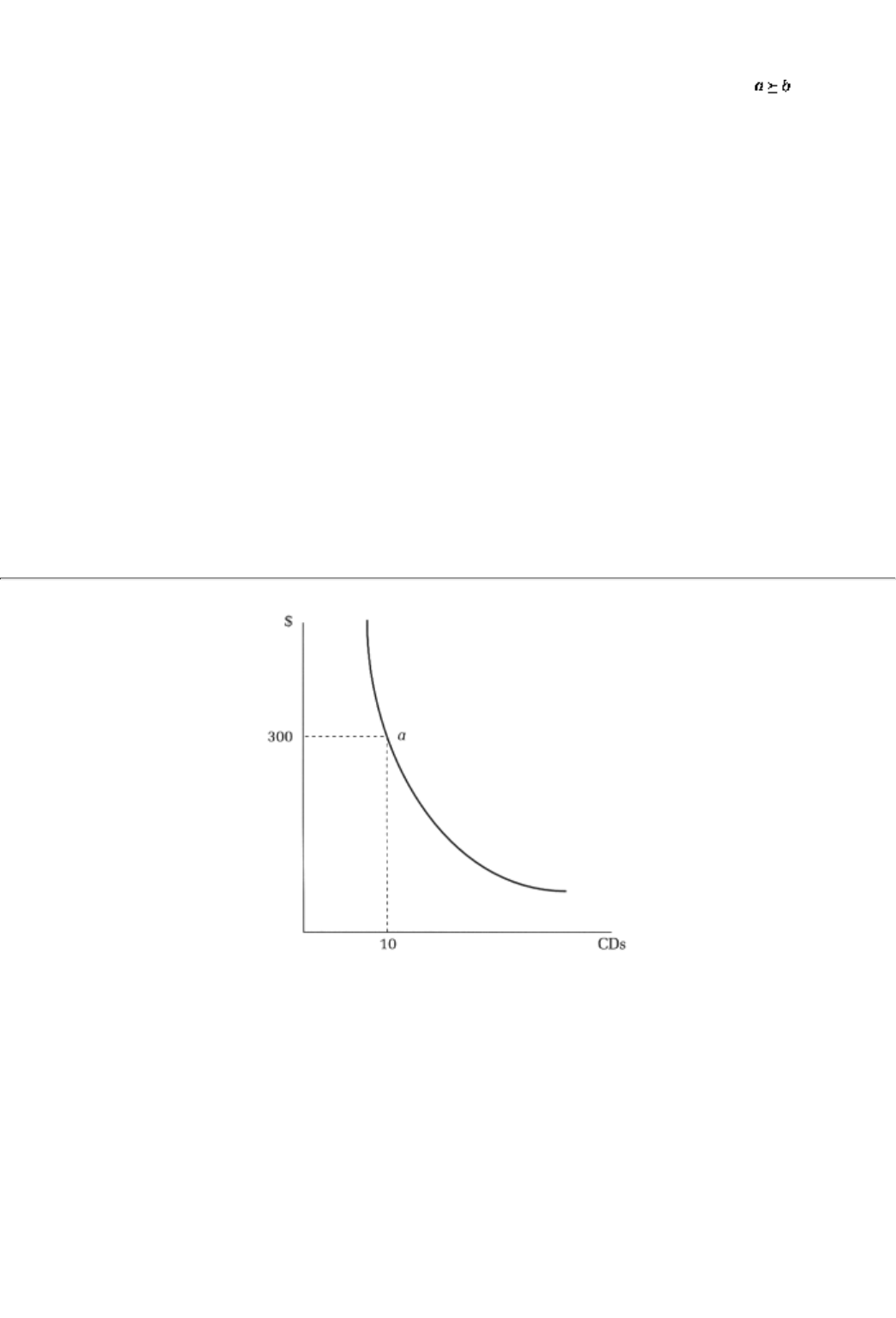

27.1 Decision Making Under Certainty 433

27.2 Decision Making Under Uncertainty 436

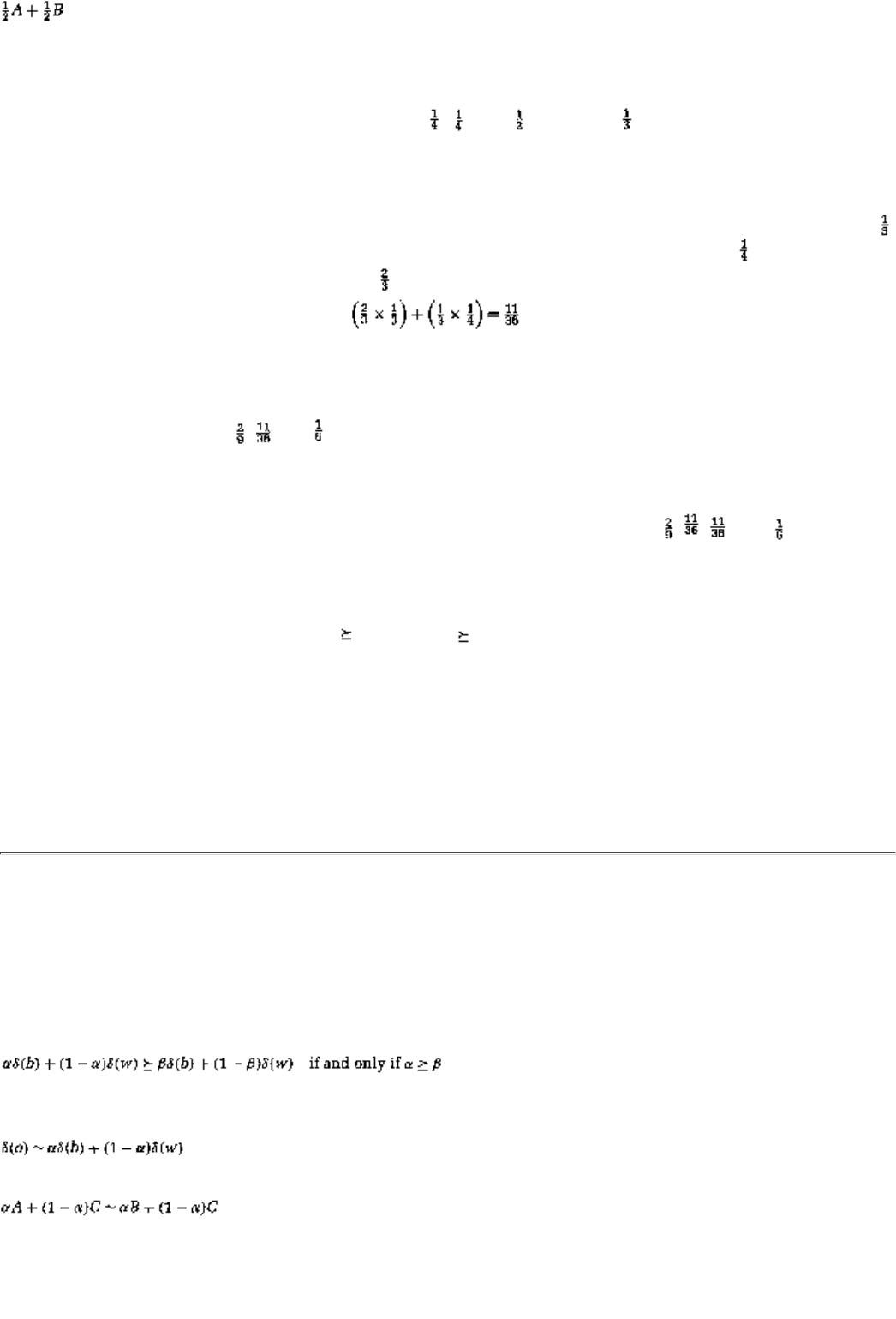

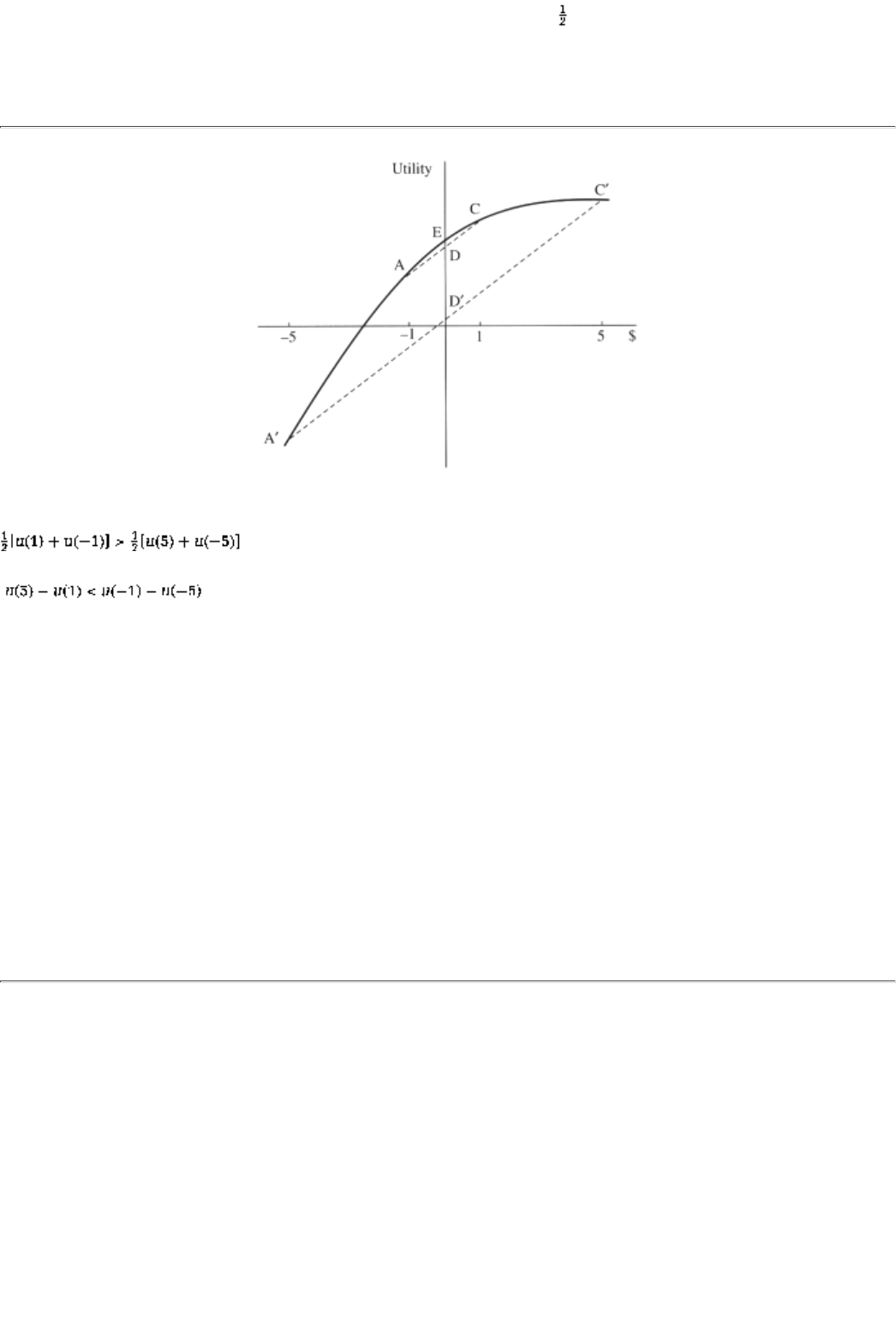

27.2.1 The Expected Utility Theorem and the Expected Return Puzzle 437

27.2.2 Details on the Von Neumann-Morgenstern Theorem 439

27.2.3 Payoffs in a Game 441

27.3 Risk Aversion 441

Summary 444

Exercises 444

Chapter 28 Existence of Nash Equilibria 451

28.1 Definition and Examples 451

28.2 Mathematical Background: Fixed Points 453

28.3 Existence of Nash Equilibria: Results and Intuition 458

Summary 460

Exercises 461

Index 465


PREFACE

This book evolved out of lecture notes for an undergraduate course in game theory that I have taught at

Columbia University for the past six years. On the first two occasions I took the straight road, teaching out

of available texts. But the road turned out to be somewhat bumpy; for a variety of reasons I was not satisfied

with the many texts that I considered. So the third time around I built myself a small bypass; I wrote a set of

sketchy lecture notes from which I taught while I assigned a more complete text to the students. Although

this compromise involved minimal costs to me, it turned out to be even worse for my students, since we were

now traveling on different roads. And then I (foolishly) decided to build my own highway; buoyed by a

number of favorable referee reports, I decided to turn my notes into a book. I say foolishly because I had no

idea how much hard work is involved in building a road. I only hope I built a smooth one.

The Book's Purpose And Its Intended Audience

The objective of this book is to provide a rigorous yet accessible introduction to game theory and its

applications, primarily in economics and business, but also in political science, the law, and everyday life.

The material is intended principally for two audiences: first, an undergraduate audience that would take this

course as an elective for an economics major. (My experience has been, however, that my classes are also

heavily attended by undergraduate majors in engineering and the sciences who take this course to fulfill their

economics requirement.) The many applications and case studies in the book should make it attractive to its

second audience, MBA students in business schools. In addition, I have tried to make the material useful to

graduate students in economics and related disciplines (Ph.D. students in political science, Ph.D. students in

economics not specializing in economic theory, etc.) who would like to have a source from which they can get

a self-contained, albeit basic, treatment of game theory.

Pedagogically I have had one overriding objective: to write a textbook that would take the middle road

between the anecdotal and the theorem-driven treatments of the subject. On the one hand is the approach

that teaches purely by examples and anecdotes. In my experience that leaves the students, especially the

brighter ones, hungering for more. On the other hand, there is the more advanced approach emphasizing a

rigorous treatment, but again, in my experience, if there are too few examples and applications it is difficult

to keep even the brighter students interested.

I have tried to combine the best elements of both approaches. Every result is precisely stated (albeit with

minimal notation), all assumptions are detailed, and at least a sketch of a proof is provided. The text also

contains nine chapter-length applications and twelve fairly detailed case studies.


Distinctive Features Of The Book

I believe this book improves on available undergraduate texts in the following ways.

Content: a full description of utility theory and a detailed analysis of dynamic game theory

The book provides a thorough discussion of the single-agent decision theory that forms the underpinning of

game theory. (That exercise takes up three chapters in Part Five.) More importantly perhaps, this is the first

text that provides a detailed analysis of dynamic strategic interaction (in Part Three). The theory of repeated

games is studied over two and a half chapters, including discussions of finitely and infinitely repeated games

as well as games with varying stage payoffs. I follow the theory with two chapter-length applications:

market-making on the NASDAQ financial market and the price history of OPEC. A discussion of dynamic

games (in which the game environment evolves according to players' previous choices) follows along with an

application to the dynamic commons problem. I believe many of the interesting applications of game theory

are dynamic (student interest always seems to heighten when I get to this part of the course), and I have found

that every other text pays only cursory attention to many dynamic issues.

Style: emphasis on a parallel development of theory and examples

Almost every chapter that introduces a new concept opens with numerical examples, some of which are well

known and many of which are not. Sometimes I have a leading example and at other times a set of (small)

examples. After explaining the examples, I go to the concept and discuss it with reasonable rigor. At this

point I return to the examples and analyze the just-introduced concept within the context of the examples. At

the end of a section (a set of chapters on related ideas), I devote a whole chapter, and sometimes two, to

economic applications of those ideas.

Length and Organization: bite-sized chapters and a static-to-dynamic progression

I decided to organize the material within each chapter in such a fashion that the essential elements of a

whole chapter can be taught in one class (or a class and a half, depending on level). In my experience it has

been a lot easier to keep the students engaged with this structure than with texts that have individual

chapters that are, for example, over fifty pages long. The topics evolve in a natural sequence: static complete

information to dynamic complete information to static incomplete information. I decided to skip much of

dynamic incomplete information (other than signaling) because the questions in this part of the subject are a

lot easier than the answers (and my students seemed to have little stomach for equilibrium refinements, for

example). There are a few advanced topics as well; different instructors will have the freedom to decide

which subset of the advanced topics they would like to teach in their course. Sections that are more difficult

are marked with the symbol . Depending on level, some instructors will want to skip these sections at first

presentation, while others may wish to take extra time in discussing the material.

Exercises

At the end of each chapter there are about twenty-five to thirty problems (in the Exercises section). In

addition, within the text itself, each chapter has a number of questions (or concept checks) in which the

student is asked to complete a part of an argument, to compute a remaining case in an example, to check the

computation for an assertion, and so on. The point of these questions is to make sure that the reader is really

following the chapter's argument; I strongly encourage my students to answer these questions and often

include some of them in the problem sets.

Case Studies and Applications

At the end of virtually every theoretical chapter there is a case study drawn from real life to illustrate the

concept just discussed. For example, after the chapter on Nash equilibrium, there is a discussion of its usage

in understanding animal conflicts. After a chapter on backward induction (and the power of commitment),

there is a discussion of poison pills and other takeover deterrents. Similarly, at the end of each cluster of

similar topics there is a whole chapter-length application. These range from the tragedy of the commons to

bankruptcy law to incomplete information Cournot competition.

An Overview And Two Possible Syllabi

The book is divided into five parts. The two chapters of Part One constitute an Introduction. Part Two

(Chapters 3 through 10) covers Strategic Form Games: Theory and Practice, while Part Three (Chapters 11

through 18) concentrates on Extensive Form Games: Theory and Practice. In Part Four (Chapters 19

through 24) I discuss Asymmetric Information Games: Theory and Practice. Finally, Part Five (Chapters 25

through 28) consists of chapters on Foundations.

I can suggest two possible syllabi for a one-semester course in game theory and applications. The first

stresses the applications end while the second covers all the theoretical topics. In terms of mathematical

requirements, the second is, naturally, more demanding and presumes that the students are at a higher level.

I have consequently included twenty chapters in the second syllabus and only eighteen in the first. (Note

that the numbers are chapter numbers.)

Syllabus 1 (Applications Emphasis)

1. A First Look at the Applications

3. Strategic Form Games and Dominant Strategies


4. Dominance Solvability

5. Nash Equilibrium

6. An Application: Cournot Duopoly

8. Mixed Strategies

9. Two Applications: Natural Monopoly and Bankruptcy Law

11. Extensive Form Games and Backward Induction

12. An Application: Research and Development

13. Subgame Perfect Equilibrium

15. Infinitely Repeated Games

16. An Application: Competition and Collusion in the NASDAQ Stock Market

17. An Application: OPEC

19. Moral Hazard and Incentives Theory

20. Games with Incomplete Information

22. Mechanism Design, the Revelation Principle, and Sales to an Unknown Buyer

23. An Application: Auctions

24. Signaling Games and the Lemons Problem

Syllabus 2 (Theory Emphasis)

2. A First Look at the Theory

27. Utility and Expected Utility

3. Strategic Form Games and Dominant Strategies

4. Dominance Solvability

5. Nash Equilibrium


6. An Application: Cournot Duopoly

7. An Application: The Commons Problem

8. Mixed Strategies

10. Zero-Sum Games

28. Existence of Nash Equilibria

11. Extensive Form Games and Backward Induction

13. Subgame Perfect Equilibrium

14. Finitely Repeated Games

15. Infinitely Repeated Games

17. An Application: OPEC

18. Dynamic Games with an Application to the Commons Problem

20. Games with Incomplete Information

21. An Application: Incomplete Information in a Cournot Duopoly

22. Mechanism Design, the Revelation Principle, and Sales to an Unknown Buyer

23. An Application: Auctions

Prerequisites

I have tried to write the book in a manner such that very little is presumed of a reader's mathematics or

economics background. This is not to say that one semester each of calculus and statistics and a semester of

intermediate microeconomics will not help. However, students who do not already have this background but

are willing to put in extra work should be able to educate themselves sufficiently.

Toward that end, I have included a chapter on calculus and optimization, and one on probability and

expectation. Readers can afford not to read the two chapters if they already have the following knowledge.

In calculus, I presume knowledge of the slope of a function and a familiarity with slopes of the linear,

quadratic, log, and the square-root functions. In optimization theory, I use the first-order characterization of

an interior optimum, that the slope of a maximand is zero at a maximum. As for probability, it helps to know how to

take an expectation. As for economic knowledge, I have attempted to explain all relevant terms and have

not presumed, for example, any knowledge of Pareto optimality, perfect competition, or monopoly.

Acknowledgments

This book has benefited from the comments and criticisms of many colleagues and friends. Tom Gresik at

Penn State, Giorgidi Giorgio at La Sapienza in Rome, Sanjeev Goyal at Erasmus, Matt Kahn at Columbia,

Amanda Bayer at Swarthmore, Rob Porter at Northwestern, and Charles Wilson at NYU were foolhardy

enough to have taught from preliminary versions of the text, and I thank them for their courage and

comments. In addition, the following reviewers provided very helpful comments:

Amanda Bayer, Swarthmore College

James Dearden, Lehigh University

Tom Gresik, Penn State

Ehud Kalai, Northwestern University

David Levine, UCLA

Michael Meurer, SUNY Buffalo

Yaw Nyarko, NYU

Robert Rosenthal, Boston University

Roberto Serrano, Brown University

Rangarajan Sundaram, NYU

A second group of ten referees provided extremely useful, but anonymous, comments.

My graduate students Satyajit Bose, Tack-Seung Jun, and Tsz-Cheong Lai very carefully read the entire

manuscript. Without their hawk-eyed intervention, the book would have many more errors. They are also

responsible for the Solutions Manual, which accompanies this text. My colleagues in the community, Venky

Bala, Terri Devine, Ananth Madhavan, Mukul Majumdar, Alon Orlitsky, Roy Radner, John Rust, Paulo Siconolfi, and Raghu Sundaram,

provided support, sometimes simply by questioning my sanity in undertaking this project. My brother, Prajjal

Dutta, often provided a noneconomist's reality check. Finally, I cannot sufficiently thank my wife, Susan

Sobelewski, who provided critical intellectual and emotional support during the writing of this book.


A READER'S GUIDE

Game theory studies strategic situations. Suppose that you are a contestant on the quiz show "Jeopardy!" At

the end of the half-hour contest (during Final Jeopardy) you have to make a wager on being able to answer

correctly a final question (that you have not yet been asked). If you answer correctly, your wager will be

added to your winnings up to that point; otherwise, the wager will be subtracted from your total. The two

other contestants also make wagers and their final totals are computed in an identical fashion. The catch is

that there will be only one winner: the contestant with the maximum amount at the very end will take home

his or her winnings while the other two will get (essentially) nothing.

Question: How much should you wager? The easy part of the answer is that the more confident you are in

your knowledge, the more you should bet. The difficult part is, how much is enough to beat out your rivals?

That clearly depends on how much they wager, that is, what their strategies are. It also depends on how

knowledgeable you think they are (after all, like you, they will bet more if they are more knowledgeable, and

they are also more likely to add to their total in that case). The right wager may also depend on how much

money you have already won, and how much they have won.

For instance, suppose you currently have $10,000 and they have $7,500 each. Then a $5,001 wager, together

with a correct answer, guarantees you victory. But that same wager also guarantees you a loss, if you answer

incorrectly, against an opponent who wagers only $2,500. You could have bet nothing and guaranteed victory

against the $2,500 opponent (since the rules of "Jeopardy!" allow all contestants to keep their winnings in

the event of a tie). Of course, the zero bet would have been out of luck against an opponent who bet

everything and answered correctly. And then there is a third possibility for you: betting everything . . .
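The arithmetic behind these scenarios can be checked mechanically. The sketch below is a minimal illustration, not a solution to the wagering problem. It assumes only the simplified rules stated above: a correct answer adds the wager, an incorrect one subtracts it, and a tie for the lead does not count as a loss. The function names are hypothetical, chosen for this example.

```python
# A minimal check of the Final Jeopardy arithmetic in the example.
# Assumed rules (as described in the text): a correct answer adds the wager,
# an incorrect answer subtracts it, and a tie for the lead is not a loss.


def final_total(bank: int, wager: int, correct: bool) -> int:
    """Contestant's total after the final question."""
    return bank + wager if correct else bank - wager


def not_beaten(my_bank, my_wager, my_correct, opp_bank, opp_wager, opp_correct) -> bool:
    """True if my final total is at least the opponent's (a tie counts as not losing)."""
    mine = final_total(my_bank, my_wager, my_correct)
    theirs = final_total(opp_bank, opp_wager, opp_correct)
    return mine >= theirs


def safe_against_all_wagers(my_bank, my_wager, my_correct, opp_bank) -> bool:
    """True if I am not beaten whatever the opponent wagers (0..opp_bank) and however they answer."""
    return all(
        not_beaten(my_bank, my_wager, my_correct, opp_bank, w, c)
        for w in range(opp_bank + 1)
        for c in (True, False)
    )


if __name__ == "__main__":
    me, them = 10_000, 7_500
    # $5,001 plus a correct answer gives 15,001, above any opponent's best case of 15,000.
    print(safe_against_all_wagers(me, 5_001, True, them))  # True
    # $5,001 and a wrong answer leaves 4,999, below the 5,000 floor of a $2,500 bettor.
    print(any(not_beaten(me, 5_001, False, them, 2_500, c) for c in (True, False)))  # False
    # A zero bet keeps 10,000, which at worst ties a $2,500 bettor who answers correctly.
    print(all(not_beaten(me, 0, r, them, 2_500, c) for r in (True, False) for c in (True, False)))  # True
    # A zero bet loses to an opponent who bets everything and answers correctly.
    print(not_beaten(me, 0, False, them, 7_500, True))  # False
```

Each check covers one claim in the paragraph. Note that the sketch treats each opponent separately and ignores the contestants' beliefs about answering correctly, which is exactly the part that requires the strategic analysis developed later in the book.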

As you can see the problem appears to be quite complicated. (And keep in mind that I did not even mention

additional relevant factors: estimates that you have about answering correctly or about the other contestants

answering correctly, that the others may have less than $5,000, that you may have more than $15,000, and

so forth.) However, game theory has the answer to this seemingly complicated problem! (And you will read

about it in Chapter 20.) The theory provides us with a systematic way to analyze questions such as: What are

the options available for each contestant? What are the consequences of various choices? How can we

model a contestant's estimate of the others' knowledge? What is a rational wager for a contestant?

In Chapter 1 you will encounter a variety of other examples (from real life, from economics, from politics,

from law, and from business) where game theory gives us the tools and the techniques to analyze the strategic

issues.

In terms of prerequisites for this book, I have attempted to write a self-contained text. If you have taken one

semester each of calculus, statistics, and intermediate microeconomics, you will find life easier. If you do not

have the mathematics background, it is essential that you acquire it. You should start with the two chapters in Part Five, one on calculus and

optimization, the other on probability and expectation. Read them carefully and do as many of the exercises

as possible. If the chapter on utility theory, also in Part Five, is not going to be covered in class, you should

read that carefully as well. As for economic knowledge, if you have not taken an intermediate

microeconomics class, it would help for you to pick up one of the many textbooks for that course and read

the chapters on perfect competition and monopoly.

I have tried to write each chapter (and each part of the book) in a way that the level of difficulty rises as you

read through it. This approach facilitates jumping from topic to topic. If you are reading this book on your

own, and not as part of a class, then a good way to proceed is to read the foundational chapters (25 through 27)

first and then to read sequentially through each part. At a first reading you may wish to skip the last two

chapters within each part, which present more difficult material. Likewise you may wish to skip the last

conceptual section or so within each chapter (but don't skip the case studies!). Sections that are more

difficult are marked with the symbol ; you may wish to skip those sections as well at first reading (or to

read them at a more deliberate pace).


PART ONE

INTRODUCTION


Chapter 1

A First Look at the Applications

This chapter is organized in three sections. Section 1.1 introduces you to some applications of game theory, Section 1.2 provides background on its history and principal subject matter, and Section 1.3 discusses three specific games in detail.

1.1 Games That We Play

If game theory were a company, its corporate slogan would be "No man is an island." This is because the focus of game theory is interdependence, situations in which an entire group of people is affected by the choices made by every individual within that group. In such an interlinked situation, the interesting questions include:

What will each individual guess about the others' choices?

What action will each person take? (This question is especially intriguing when the best action depends on what the others do.)

What is the outcome of these actions? Is this outcome good for the group as a whole? Does it make any difference if the group interacts more than once?

How do the answers change if each individual is unsure about the characteristics of others in the group?


The content of game theory is a study of these and related questions. A more formal definition of game

theory follows; but consider first some examples of interdependence drawn from economics, politics,

finance, law, and even our daily lives.

Art auctions (such as the ones at Christie's or Sotheby's, where works of art from Braque to Veronese are sold) and Treasury auctions (at which the United States Treasury Department sells U.S. government bonds to finance federal budget expenditures): Chapters 3, 14, and 23

Voting at the United Nations (for instance, to select a new Secretary General for the organization): Chapter 4

Animal conflicts (over a prized breeding ground, scarce fertile females of the species, etc.): Chapter 5

Sustainable use of natural resources (the pattern of extraction of an exhaustible resource such as oil or a renewable resource such as a forest): Chapters 7 and 18

Random drug testing at sports meets and the workplace (the practice of selecting a few athletes or workers to take a test that identifies the use of banned substances): Chapter 8

Bankruptcy law (which specifies when and how much creditors can collect from a company that has gone bankrupt): Chapter 9

Poison pill provisions (that give management certain latitude in fending off unwelcome suitors looking to take over or merge with their company): Chapter 11

R&D expenditures (for example, by pharmaceutical firms): Chapter 12

Trench warfare in World War I (when armies faced each other for months on end, dug into rival trench-lines on the borders between Germany and France): Chapter 13

OPEC (the oil cartel that controls half of the world's oil production and, hence, has an important say in determining the price that you pay at the pump): Chapter 17

A group project (such as preparing a case study for your game theory class)

Game theory: A formal way to analyze interaction among a group of rational agents who behave strategically.

Game theory is a formal way to consider each of the following items:

group: In any game there is more than one decision-maker; each decision-maker is referred to as a "player."

interaction: What any one individual player does directly affects at least one other player in the group.

strategic: An individual player accounts for this interdependence in deciding what action to take.

rational: While accounting for this interdependence, each player chooses her best action.

Let me now illustrate these four concepts (group, interaction, strategic, and rational) by discussing in detail some of the examples given above.

Examples from Everyday Life

Working on a group project (a case study for the game theory class): The group comprises the students jointly working on the case. Their interaction arises from the fact that a certain amount of work needs to get done in order to write a paper; hence, if one student slacks off, somebody else has to put in extra hours the night before the paper is due. Strategic play involves estimating the likelihood of freeloaders in the group, and rational play requires a careful comparison of the benefits of a better grade against the costs of the extra work.

Random drug testing (at the Olympics): The group is made up of competitive athletes and the International Olympic Committee (IOC). The interaction is both among the athletes, who make decisions on training regimens as well as on whether or not to use drugs, and with the IOC, which needs to preserve the reputation of the sport. Rational strategic play requires the athletes to make decisions based on their chances of winning and, if they dope, their chances of getting caught. Similarly, it requires the IOC to determine drug testing procedures and punishments on the basis of testing costs and the value of a clean-as-a-whistle reputation.

Examples from Economics and Finance

R&D efforts by pharmaceutical companies: Some estimates suggest that research and development (R&D) expenditures constitute as much as 20% of annual sales of U.S. pharmaceutical companies and that, on average, the development cost of a new drug is about $350 million. Companies are naturally concerned about issues such as which product lines to invest research dollars in, how high to price a new drug, how to reduce the risk associated with a new drug's development, and the like. In this example, the group is the set of drug companies. The interaction arises because the first developer of a drug makes the most profits (thanks to the associated patent). R&D expenditures are strategic and rational if they are chosen to maximize the profits from developing a new drug, given inferences about the competition's commitment to this line of drugs.


Treasury auctions: On a regular basis, the United States Treasury auctions off U.S. government securities.[1] The principal bidders are investment banks such as Lehman Brothers or Merrill Lynch (which in turn sell the securities to their clients). The group is therefore the set of investment banks. (The bidders, in fact, rarely change from auction to auction.) They interact because the other bids determine whether a bidder is allocated any securities and possibly also the price that the bidder pays. Bidding is rational and strategic if bids are based on the likely competition and achieve the right balance between paying too much and the risk of not getting any securities.

Examples from Biology and Law

Animal behavior: One of the more fascinating applications of game theory in the last twenty-five years has been to biology and, in particular, to the analysis of animal conflicts and competition. Animals in the wild typically have to compete for scarce resources (such as fertile females or the carcasses of dead animals); it pays, therefore, to discover such a resource, or to snatch it away from the discoverer. The problem is that doing so can lead to a costly fight. Here the group of "players" is all the animals that have an eye on the same prize(s). They interact because resources are limited. Their choices are strategic if they account for the behavior of competitors, and rational if they satisfy short-term goals such as satisfying hunger or long-term goals such as the perpetuation of the species.

Bankruptcy law: In the United States, once a company declares bankruptcy its assets can no longer be attached by individual creditors; instead, they are held in safekeeping until the company and its creditors reach some understanding. However, creditors can go to court to collect payments before the bankruptcy declaration (although by doing so a creditor may force the company into bankruptcy). Here the interaction among the group of creditors arises from the fact that any money that an individual creditor can successfully seize is money that becomes unavailable to everyone else. Strategic play requires an estimation of how patient other creditors are going to be, and a rational choice involves a trade-off between collecting early and forcing an unnecessary bankruptcy.

At this point, you may well ask: what, then, is not a game? A situation can fail to be a game in either of two cases, the one case or the infinity case. By the one case, I mean contexts where your decisions affect no one but yourself. Examples include your choice about whether or not to go jogging, how many movies to see this week, and where to eat dinner. By the infinity case, I mean situations where your decisions do affect others, but there are so many people involved that it is neither feasible nor sensible to keep track of what each one does. For example, if you buy some stock in AT&T, it is best to imagine that your purchase has left the large body of shareholders in AT&T entirely unaffected. Likewise, if you are the owner of Columbia Bagels in New York City, your decision on the price of onion bagels is unlikely to affect the citywide onion bagel price, not to speak of the nationwide one.

[1] These securities are Treasury Bonds and Bills, financial instruments that are held by the public (or its representatives, such as mutual funds or pension funds). These securities promise to pay a sum of money after a fixed period of time, say three months, a year, or five years. Additionally, they may promise to pay a fixed sum of money periodically over the lifetime of the security.

Although many situations can be formalized as a game, this book will not provide you with a menu of answers. It will introduce you to the methodology of games and illustrate that methodology with a variety of examples. When faced with a particular strategic setting, however, you will have to incorporate its unique (informational and other) features in order to come up with the right answer. What this book will teach you is a systematic way to incorporate those features, and it will give you a coherent way to analyze the consequent game. Every one of us acts strategically, whether we know it or not. This book is designed to help you become a better strategist.

1.2 Background

The earliest predecessors of game theory are economic analyses of imperfectly competitive markets. The pioneering analyses were those of the French economist Augustin Cournot (in 1838)[2] and the English economist Francis Edgeworth (1881)[3] (with subsequent advances due to Bertrand and Stackelberg). Cournot analyzed an oligopoly problem, which now goes by the name of the Cournot model, and employed a method of analysis which is a special case of the most widely used solution concept found in modern game theory. We will study the Cournot model in some detail in Chapter 6.

An early breakthrough in more modern times was the study of the game of chess by E. Zermelo in 1913. Zermelo showed that the game of chess always has a solution, in the sense that from any position on the board one of the two players has a winning strategy.[4] More importantly, he pioneered a technique for solving a certain class of games that is today called backwards induction. We will study this procedure in detail in Chapters 11 and 12.

The seminal works in modern times are a paper by John von Neumann, published in 1928, and, more importantly, the subsequent book by him and Oskar Morgenstern titled Theory of Games and Economic Behavior (1944). Von Neumann was a multifaceted man who made fundamental contributions to a number of subjects including computer science, statistics, abstract topology, and linear programming. His 1928 paper resolved a long-standing puzzle in game theory.[5] Von Neumann got interested in economic problems in part because of the economist Oskar Morgenstern. Their collaboration dates to 1938, when Morgenstern came to Princeton University, where von Neumann had been a professor at the Institute for Advanced Study since 1933. Von Neumann and Morgenstern started by working on a paper about the connection between economics and game theory and ended with the crown jewel, the Theory of Games and Economic Behavior.

In their book von Neumann and Morgenstern made three major contributions, in addition to formalizing the concept of a game. First, they gave an axiom-based foundation to utility theory, a theory that explains just what it is that players get from playing a game. (We will discuss this work in Chapter 27.) Second, they thoroughly characterized the optimal solutions to what are called zero-sum games, two-player games in which one player wins if and only if the other loses. Third, they introduced a version of game theory called cooperative games. Although neither of these last two constructions is used very much in modern game theory, both played an important role in the development of game theory that followed the publication of their book.[6]

[2] See Cournot's Researches Into the Mathematical Principles of the Theory of Wealth (especially Chapter 7).

[3] See Mathematical Psychics: An Essay on the Application of Mathematics to the Moral Sciences.

[4] That, of course, is not the same thing as saying that the player can easily figure out what this winning strategy is! (It is also possible that neither player has a winning strategy but rather that the game will end in a stalemate.)

[5] The puzzle was whether or not a class of games called zero-sum games (which are defined in the next paragraph) always have a solution. A famous French mathematician, Emile Borel, had conjectured in 1913 that they need not; von Neumann proved that they must always have a solution.

The next great advance is due to John Nash who, in 1950, introduced the equilibrium (or solution) concept that is the most widely used in modern game theory. This solution concept (called, of course, Nash equilibrium) has been extremely influential; in this book we will meet it for the first time in Chapter 5. Nash's approach advanced game theory from zero-sum to nonzero-sum games (i.e., situations in which both players could win or lose). As mentioned above, Nash's solution concept built on the earlier work of Cournot on oligopolistic markets.[7] For all this he was awarded the Nobel Prize in Economics in 1994.

Which brings us to John Harsanyi and Reinhard Selten, who shared the Nobel Prize with John Nash. In two papers dating back to 1965 and 1975, Reinhard Selten generalized the idea of Nash equilibrium to dynamic games, settings where play unfolds sequentially through time.[8] In such contexts it is extremely important to consider the future consequences of one's present actions. Of course there can be many possible future consequences, and Selten offered a methodology to select among them a "reasonable" forecast for future play. We will study Selten's fundamental idea in Chapter 13 and its applications in Chapters 14 through 18.[9]

In 1967-1968, Harsanyi generalized Nash's ideas to settings in which players have incomplete information about each other's choices or preferences. Since many economic problems are in fact characterized by such incompleteness of information, Harsanyi's generalization was an important step to take. Incomplete information games will be discussed in Chapter 20, and their applications can be found in Chapters 21 through 24.[10]

At this point you might be wondering why this subject, which promises to study such weighty matters as the arms race, oligopoly markets, and natural resource usage, goes by the name of something quite as fun-loving as game theory. Part of the reason for this is historical: Game theory is called game theory because parlor games (poker, bridge, chess, backgammon, and so on) were a convenient starting point to think about the deeper conceptual issues regarding interaction, strategy, and rationality, which form the core of the subject. While the terminology is not meant to suggest that the issues addressed are light or trivial in any way, it is also hoped that the terminology will turn out to be somewhat appropriate and that you will have fun learning the subject.[11]

[6] In this book we will study zero-sum games in some detail in Chapter 10. We will not, however, look at cooperative game theory.

[7] John Nash wrote four papers on game theory, two on Nash equilibrium and two more on bargaining theory (and he co-authored three others). Each of the four papers has greatly influenced the further development of the discipline. (If you wish, perhaps at a later point in the course, to read the paper on Nash equilibrium, look for "Equilibrium Points in N-Person Games," Proceedings of the National Academy of Sciences, 1950.) Unfortunately, health problems cut short what would have been a longer and even more spectacular research career.

[8] The Selten papers are "Spieltheoretische Behandlung eines Oligopolmodells mit Nachfrageträgheit" (1965), Zeitschrift für die gesamte Staatswissenschaft, and "Reexamination of the Perfectness Concept for Equilibrium Points in Extensive Games" (1975), International Journal of Game Theory.

[9] Many interesting applications of game theory have a sequential, or dynamic, character to them. Put differently, there are few game situations where you are sure that you are never going to encounter any of the other players ever again; as the good game theorist James Bond would say, "Never say never again." We will discuss, in Chapters 15 and 16, games where you think (there is some chance) that you will encounter the same players again, and in an identical context. In Chapters 17 and 18, we will discuss games where you think you will encounter the same players again but possibly in a different context.

1.3 Examples

To fix ideas, let us now work through three games in some detail.

1. Nim and Marienbad.

These are two parlor games that work as follows. There are two piles of matches and two players. The game starts with player 1, and thereafter the players take turns. When it is a player's turn, he can remove any number of matches from either pile. Each player is required to remove some number of matches if either pile has matches remaining, and he can only remove matches from one pile at a time.

In Nim, whichever player removes the last match wins the game. In Marienbad, the player who removes the

last match loses the game.

The interesting question for either of these games is whether or not there is a winning strategy, that is, a strategy which, if you use it whenever it is your turn to move, guarantees that you will win regardless of how play unfolds from that point on.

Analysis of Nim.

Call the two piles balanced if there is an equal number of matches in each pile; and call them unbalanced

otherwise. It turns out that if the piles are balanced, player 2 has a winning strategy. Conversely, if the piles

are unbalanced, player 1 has a winning strategy.

Let us consider the case where there is exactly one match in each pile; denote this (1,1). It is easy to see that player 2 wins this game. Nor is it difficult to see that player 2 also wins if we start with (2,2). For example, if player 1 removes two matches from the first pile, thus moving the game to (0,2), then all player 2 has to do is remove the remaining two matches. On the other hand, if player 1 removes only one match and moves the game, say, to (1,2), then player 2 can counter that by removing a match from the other pile. At that point the game will be at (1,1), and now we know player 2 is going to win.

More generally, suppose that we start with n matches in each pile, n > 2. Notice that player 1 will never want to remove the last match from either pile; that is, he would want to make sure that both piles have matches in them.[12] However, in that case, player 2 can ensure that after every one of his plays, there is an equal number of matches in each pile. (How?)[13] This means that sooner or later there will be exactly one match in each pile, a position that we already know player 2 wins.
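The balancing (mirroring) reply described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the author's method: the function names `mirror_move`, `random_move`, and `play_nim` are hypothetical, and player 1 is modeled as choosing uniformly random legal moves only so that many different lines of play get exercised.

```python
import random


def mirror_move(piles):
    """Player 2's reply: take from the larger pile until the two piles are equal.

    Assumes the piles are unbalanced, as they always are right after player 1
    moves from a balanced position. Returns (pile_index, matches_to_remove).
    """
    a, b = piles
    if a == b:
        raise ValueError("piles are already balanced; there is nothing to mirror")
    larger = 0 if a > b else 1
    return larger, abs(a - b)


def random_move(piles, rng):
    """Any legal move: choose a non-empty pile and remove 1 to all of its matches."""
    pile = rng.choice([i for i, p in enumerate(piles) if p > 0])
    return pile, rng.randint(1, piles[pile])


def play_nim(n, rng):
    """Play Nim from (n, n): player 1 moves at random, player 2 mirrors.

    Returns the winner, i.e., the player who removes the last match.
    """
    piles = [n, n]
    player = 1
    while True:
        if player == 1:
            pile, count = random_move(piles, rng)
        else:
            pile, count = mirror_move(piles)
        piles[pile] -= count
        if piles == [0, 0]:
            return player
        player = 3 - player  # switch between players 1 and 2


rng = random.Random(0)
results = [play_nim(n, rng) for n in range(1, 8) for _ in range(200)]
print(all(winner == 2 for winner in results))  # True
```

Taking the difference from the larger pile is the same as copying player 1's last move on the other pile, because the piles were equal just before player 1 moved.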

If we start with unbalanced piles, player 1 can balance the piles on his first play. Hence, by the above logic, he has a winning strategy. The reason is clear: once the piles are balanced, it is as if we are starting afresh with balanced piles but with player 2 going first, and we know that the first to play loses when the piles are balanced.
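The balanced/unbalanced claim can also be checked mechanically with backward induction, the technique credited to Zermelo earlier in the chapter. In this sketch the function name `mover_wins` is hypothetical, and the bound of 12 matches per pile is an arbitrary choice; a position counts as winning for the player about to move if some legal move leads to a position that is losing for the opponent.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def mover_wins(a, b):
    """True if the player about to move from piles (a, b) can force a win in Nim.

    Backward induction: a position is winning for the mover if at least one legal
    move leads to a position that is losing for the opponent. At (0, 0) there are
    no moves; the previous player took the last match and won, so any([]) is False.
    """
    moves = [(a - k, b) for k in range(1, a + 1)] + [(a, b - k) for k in range(1, b + 1)]
    return any(not mover_wins(x, y) for x, y in moves)


N = 12  # arbitrary bound on pile sizes for the check
# Claim from the text: player 1 (the first mover) wins exactly when the piles are unbalanced.
claim_holds = all(
    mover_wins(a, b) == (a != b) for a in range(N + 1) for b in range(N + 1)
)
print(claim_holds)  # True
```

A finite check like this is no substitute for the argument in the text, but it is a quick way to catch a mistaken claim.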

CONCEPT CHECK

Are there any other winning strategies in this game? What do you think might

happen if there are more than two piles? Do all such games, in which players

take turns making plays, have winning strategies? (Think of tic-tac-toe.)[14]

Similar logic can be applied to the analysis of Nim's cousin, Marienbad. In working through the claims below, though, remember that in Marienbad the last player to remove matches loses the game.

[10] The original 1967-1968 Harsanyi papers are "Games with Incomplete Information Played by Bayesian Players," Management Science. Do not (as David Letterman would say after a Stupid Human Tricks segment) try them at home, just yet!

[11] There are several books that I hope you will graduate to once you are finished reading this one. Two that I have found very useful for their theoretical treatments are Game Theory by Drew Fudenberg and Jean Tirole (MIT Press) and A Course in Game Theory by Martin Osborne and Ariel Rubinstein (MIT Press). If you want a more advanced treatment of any topic in this book, you could do worse than pick up either of these two texts. A book that is more applications oriented is Thinking Strategically by Avinash Dixit and Barry Nalebuff (W. W. Norton).

[12] Otherwise, player 2 can win immediately by removing all the matches from the pile that still has matches remaining.

[13] Think of what happens if player 2 simply mimics everything that player 1 does, except with the other pile.

[14] These three questions have been broken down into further bite-sized pieces in the Exercises section.

CONCEPT CHECK

ANALYSIS OF MARIENBAD

We claim that: If the two piles are balanced with one match in each pile, player 1 has a winning strategy. On the other hand, if the two piles are balanced, with at least two matches in each pile, player 2 has a winning strategy. Finally, if the two piles are unbalanced, player 1 has a winning strategy. Try proving these claims.[15]

Note, incidentally, that in both of these games the first player to move (referred to in my discussion as player 1) has an advantage if the piles are unbalanced, but not otherwise, apart from the one-match-per-pile configuration (1,1) in Marienbad.
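To check your proofs of the Marienbad claims, you can run the same backward-induction idea with the losing rule reversed. In this sketch the function names are hypothetical. The search flags only the degenerate starting positions (0, 1) and (1, 0), where one pile is already empty and player 1 is forced to take the last match, so the claim about unbalanced piles is best read as assuming the game does not start there.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def mover_wins_misere(a, b):
    """True if the player to move from (a, b) can force a win in Marienbad,
    where whoever removes the last match loses.

    At (0, 0) the previous player removed the last match and lost, so the mover has won.
    """
    if a == 0 and b == 0:
        return True
    moves = [(a - k, b) for k in range(1, a + 1)] + [(a, b - k) for k in range(1, b + 1)]
    return any(not mover_wins_misere(x, y) for x, y in moves)


def claimed_winner(a, b):
    """Winner predicted by the claims in the text (player 1 moves first)."""
    if a == b == 1:
        return 1
    if a == b and a >= 2:
        return 2
    return 1  # unbalanced piles


N = 12  # arbitrary bound on pile sizes for the check
exceptions = [
    (a, b)
    for a in range(N + 1)
    for b in range(N + 1)
    if (a, b) != (0, 0)
    and (1 if mover_wins_misere(a, b) else 2) != claimed_winner(a, b)
]
print(exceptions)  # [(0, 1), (1, 0)]
```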

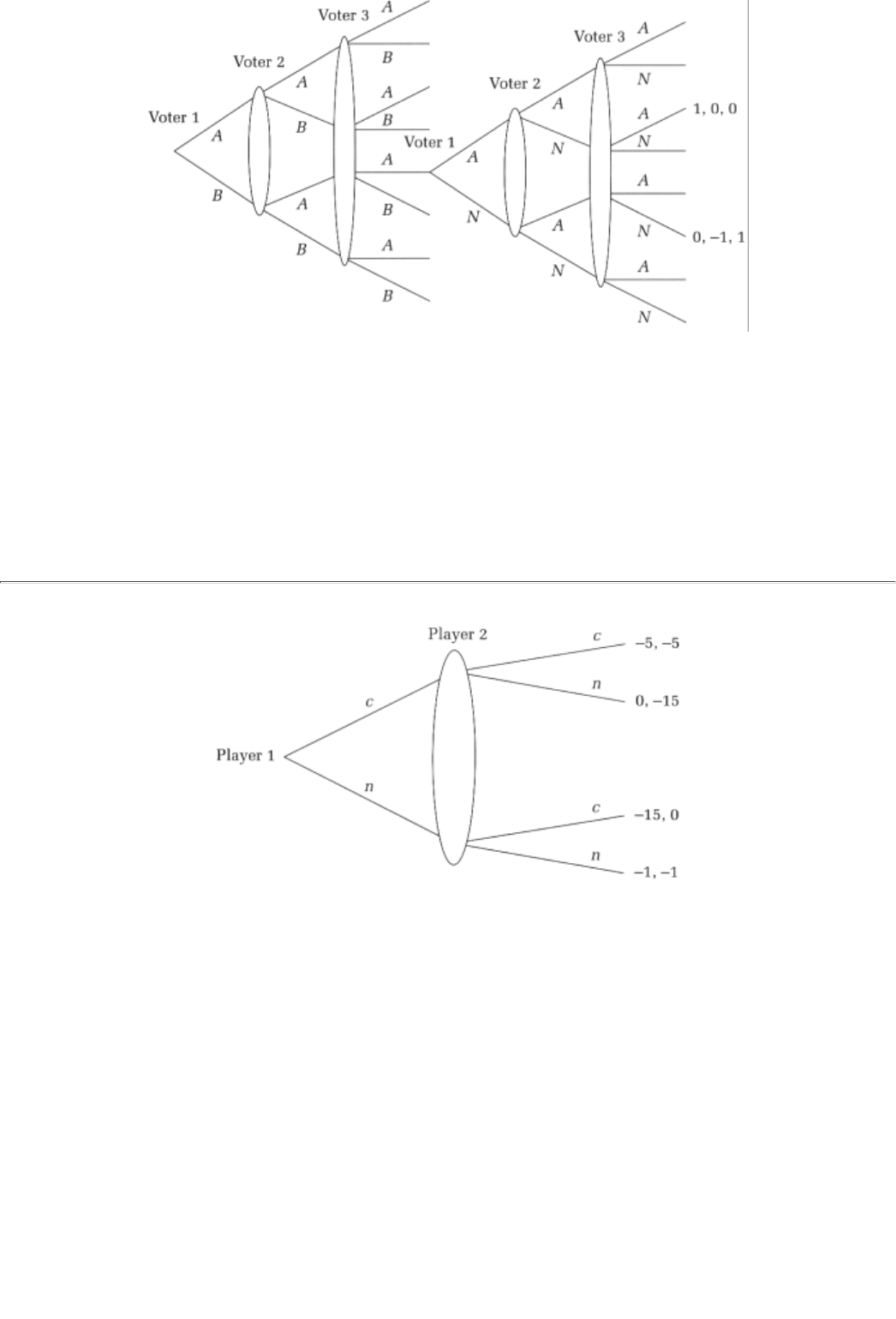

2. Voting.

This example is an idealized version of committee voting. It is meant to illustrate the advantages of strategic voting, that is, a manner of voting in which a voter thinks through what the other voters are likely to do rather than voting simply according to his preferences.[16]

Suppose that there are two competing bills, designated here as A and B, and three legislators, voters 1, 2 and

3, who vote on the passage of these bills. Either of two outcomes are possible: either A or B gets passed, or

the legislators choose to pass neither bill (and stay with the status quo law instead). The voting proceeds as

follows: first, bill A is pitted against bill B; the winner of that contest is then pitted against the status quo

which, for simplicity, we will call "neither"(or N). In each of the two rounds of voting, the bill that the

majority of voters cast their vote for, wins. The three legislators have the following preferences among the

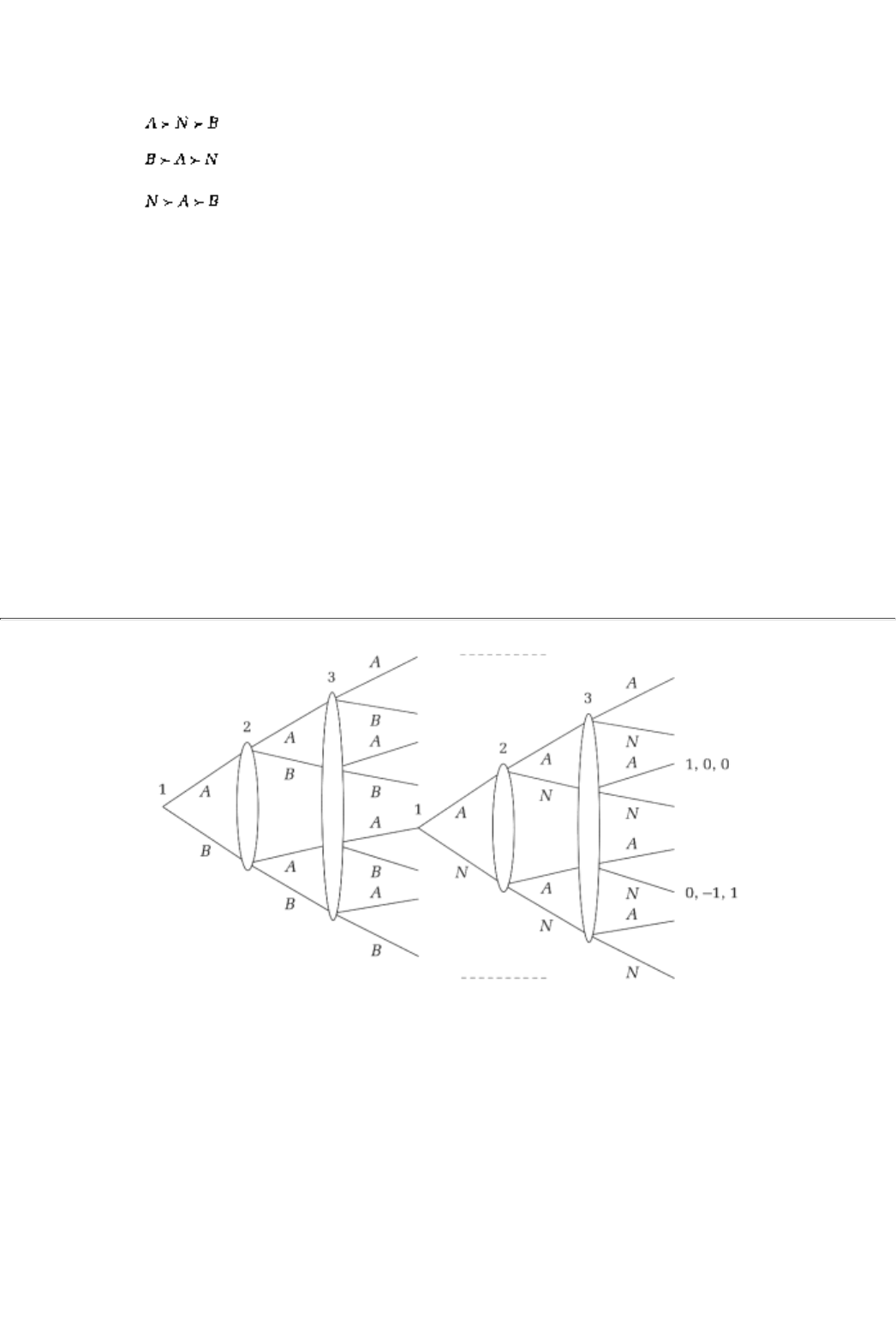

available options.

voter 1:

voter 2:

voter 3:

(where should be read as, "Bill A is preferred to bill B.")

Analysis.

Note that if the voters voted according to their preferences (i.e., truthfully), then A would win against B and then, in round two, would also win against N. However, voter 3 would be very unhappy with this state of affairs; she most prefers N and can in fact enforce that outcome by simply switching her first-round vote to B, which would then lose to N. Is that the outcome, then? Not necessarily: anticipating this possibility, voter 2 can also switch her vote and get A passed (which this voter prefers to N).

There is a way to proceed more systematically with the strategic analysis. To begin with, notice that in the second round each voter might as well vote truthfully. This is because by voting for a less preferred option, a legislator might get that option passed, which would clearly be worse than blocking its passage. Therefore, if A wins in the first round, the eventual outcome will be A, whereas if B wins, the eventual outcome will be N. Every rational legislator realizes this. So, in voting between A and B in the first round, they are actually voting between A and N. Hence, voters 1 and 2 will vote for A in the first round, and A will be passed.

[15] Again you may prefer to work step by step through these questions in the Exercises section.

[16] This example may also be found in Fun and Games by Ken Binmore (D.C. Heath).
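The backward-induction argument for the voting game can be sketched in Python. The preference orderings used here (voter 1: A, N, B; voter 2: B, A, N; voter 3: N, A, B, most preferred first) are an assumption: they are the orderings consistent with both the truthful and the strategic analyses in the text. The helper names `prefers`, `majority`, and `second_round` are hypothetical.

```python
# Assumed preference orderings, most preferred first (inferred from the analysis).
PREFS = {
    1: ["A", "N", "B"],
    2: ["B", "A", "N"],
    3: ["N", "A", "B"],
}


def prefers(voter, x, y):
    """True if the voter ranks option x above option y."""
    ranking = PREFS[voter]
    return ranking.index(x) < ranking.index(y)


def majority(x, y, ballots):
    """Winner of a pairwise vote among three voters; ballots maps voter -> x or y."""
    return x if sum(vote == x for vote in ballots.values()) >= 2 else y


def second_round(bill):
    """Round two: the first-round winner against N, with everyone voting truthfully."""
    ballots = {v: bill if prefers(v, bill, "N") else "N" for v in PREFS}
    return majority(bill, "N", ballots)


# Truthful voting: in round one each legislator votes for the bill she prefers.
truthful_first = {v: "A" if prefers(v, "A", "B") else "B" for v in PREFS}
print(second_round(majority("A", "B", truthful_first)))  # A

# Voter 3 deviates alone by switching her first-round vote to B.
deviation = {**truthful_first, 3: "B"}
print(second_round(majority("A", "B", deviation)))  # N

# Strategic voting: a first-round vote for a bill is really a vote for the
# eventual outcome that follows it in round two.
outcome_if = {bill: second_round(bill) for bill in ("A", "B")}
strategic_first = {
    v: "A" if prefers(v, outcome_if["A"], outcome_if["B"]) else "B" for v in PREFS
}
print(outcome_if)  # {'A': 'A', 'B': 'N'}
print(strategic_first)  # {1: 'A', 2: 'A', 3: 'B'}
print(second_round(majority("A", "B", strategic_first)))  # A
```

Solving round two first and then substituting its outcome into round one is exactly the "voting between A and N" step in the text.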

CONCEPT CHECK

TRUTHFUL VOTING

In what way is the analysis of strategic voting different from that of truthful

voting? Is the conclusion different? Are the votes different?

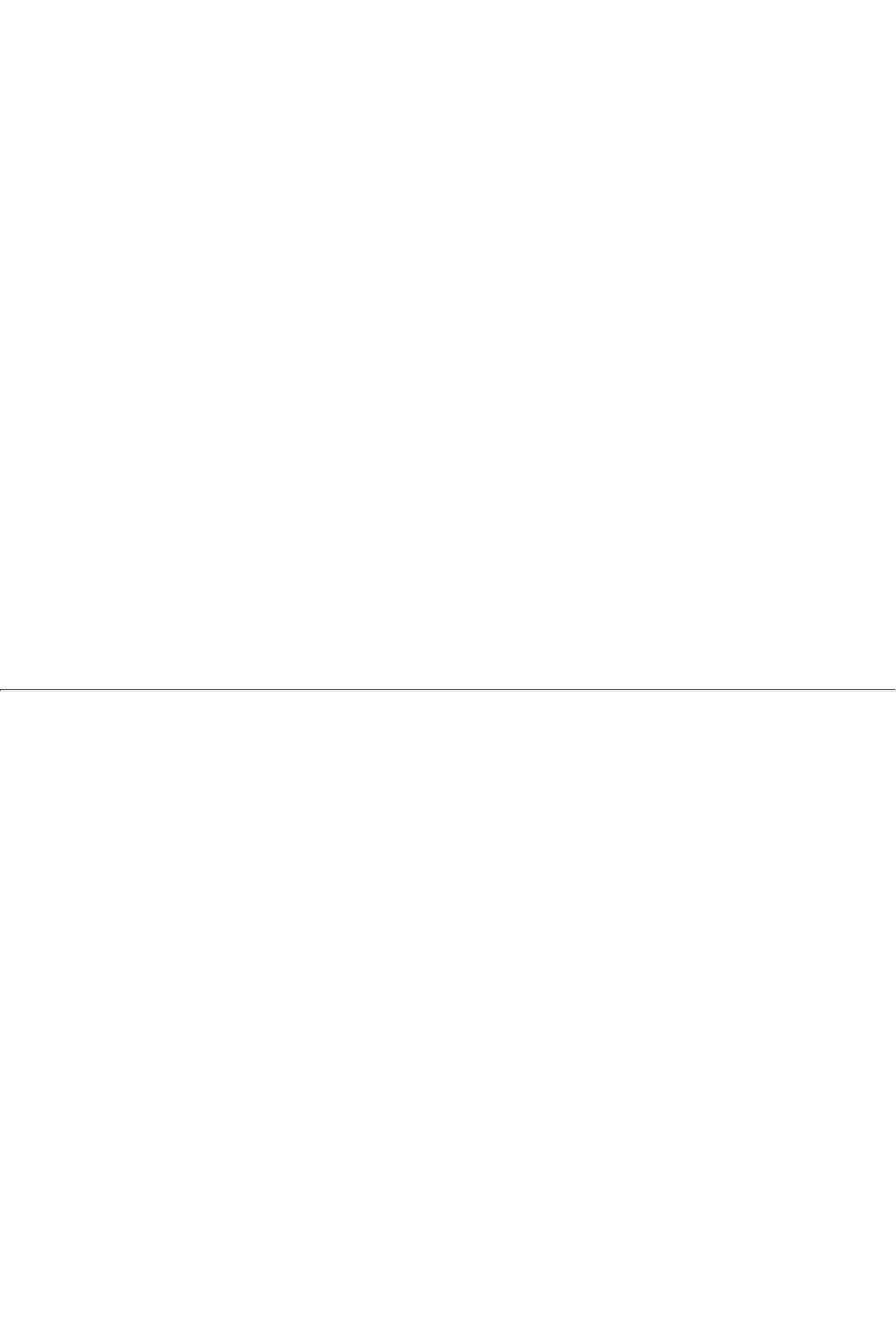

3. Prisoners' Dilemma.

This is the granddaddy of simple games. It was first analyzed in 1953 at the Rand Corporation (a fertile ground for much of the early work in game theory) by Melvin Dresher and Al Tucker.

The story underlying the Prisoners' Dilemma goes as follows. Two prisoners, Calvin and Klein, are hauled in for a suspected crime. The DA speaks to each prisoner separately and tells them that she more or less has the evidence to convict them, but they could make her work a little easier (and help themselves) if they confess to the crime. She offers each of them the following deal: "Confess to the crime, turn witness for the State, and implicate the other guy, and you will do no time. Of course, your confession will be worth a lot less if the other guy confesses as well. In that case, you both go in for five years. If you do not confess, however, be aware that we will nail you with the other guy's confession, and then you will do fifteen years. In the event that I cannot get a confession from either of you, I have enough evidence to put you both away for a year."

Here is a representation of this situation:

| Calvin \ Klein | Confess | Not Confess |
|---|---|---|
| Confess | 5, 5 | 0, 15 |
| Not Confess | 15, 0 | 1, 1 |

Notice that the entries in the above table are prison terms. Thus, the entry that corresponds to (Confess, Not Confess), the entry in the first row and second column, is the length of sentence to Calvin (0) and Klein (15), respectively, when Calvin confesses but Klein does not. Since these are prison terms, a smaller number (of years) is preferred to a bigger number.

Analysis.

From the pair's point of view, the best outcome is (Not Confess, Not Confess). The problem is that if Calvin thinks that Klein is not going to confess, he can walk free by ratting on Klein. Indeed, even if he thinks that Klein is going to confess (the rat!), Calvin had better confess to save his skin. Surely the same logic runs through Klein's mind. Consequently, they both end up confessing.
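The reasoning above amounts to finding each prisoner's best reply to each possible choice by the other. Here is a minimal sketch that uses the prison terms from the table (fewer years are better). The names `YEARS`, `calvin_best_reply`, and `klein_best_reply` are illustrative, not from the text.

```python
C, NC = "Confess", "Not Confess"
ACTIONS = (C, NC)

# YEARS[(calvin_action, klein_action)] = (calvin_years, klein_years), from the table.
YEARS = {
    (C, C): (5, 5),
    (C, NC): (0, 15),
    (NC, C): (15, 0),
    (NC, NC): (1, 1),
}


def calvin_best_reply(klein_action):
    """Calvin's action that minimizes his own prison term, given Klein's action."""
    return min(ACTIONS, key=lambda a: YEARS[(a, klein_action)][0])


def klein_best_reply(calvin_action):
    """Klein's action that minimizes his own prison term, given Calvin's action."""
    return min(ACTIONS, key=lambda a: YEARS[(calvin_action, a)][1])


for other in ACTIONS:
    print(f"if the other prisoner plays {other}: Calvin -> {calvin_best_reply(other)}, "
          f"Klein -> {klein_best_reply(other)}")
# Confess is the best reply in every case, so both confess and each serves 5 years,
# even though (Not Confess, Not Confess) would give each of them only 1 year.
```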

Two remarks on the Prisoners' Dilemma are worth making. First, this game is not zero-sum. There are outcomes in which both players can gain, such as (Not Confess, Not Confess). Second, this game has been used in many applications. Here are two: (a) Two countries are in an arms race. They would both rather spend little money on arms buildup (and more on education), but realize that if they outspend the other country they will have a tactical superiority. If they spend the same (large) amount, though, they will be deadlocked, in much the same way that they would be deadlocked if they both spent the same, but smaller, amount. (b) Two parties to a dispute (a divorce, labor settlement, etc.) each have the option of either bringing in a lawyer or not. If they settle (50-50) without lawyers, none of their money goes to lawyers. If, however, only one party hires a lawyer, then that party gets better counsel and can get more than 50% of the joint property (sufficiently more to compensate for the lawyer's fees). If they both hire lawyers, they are back to equal shares, but now equal shares of a smaller estate.

Summary

1. Game theory is a study of interdependence. It studies interaction among a group of players who make

rational choices based on a strategic analysis of what others in the group might do.

2. Game theory can be used to study problems as widely varying as the use of natural resources, the election

of a United Nations Secretary General, animal behavior, and production strategies of OPEC.

3. The foundations of game theory go back 150 years. The main development of the subject is more recent,

however, spanning approximately the last fifty years, making game theory one of the youngest disciplines

within economics and mathematics.

4. Strategic analysis of games such as Nim and the Prisoners' Dilemma can expose the outcomes that will be

reached by rational players. These outcomes are not always desirable for the whole group of players.

Exercises

Section 1.1

1.1

Give three examples of game-like situations from your everyday life. Be sure in each case to identify the players, the nature of the interaction, the strategies available, and the objectives that each player is trying to achieve.

1.2

Give three examples of economic problems that are not games. Explain why they are not.

1.3

Now give three examples of economic problems that are games. Explain why these situations qualify as games.

1.4

Consider the purchase of a house. By carefully examining each of the four components of a game situation (group, interaction, rationality, and strategy), discuss whether this qualifies as a game.

1.5

Repeat the last question for a trial by jury. Be sure to outline carefully what each player's objectives might

be.

Consider the following scenario: the market for bagels in the Morningside Heights neighborhood of New York City. In this example, the dramatis personae are the two bagel stores in the Columbia University neighborhood, Columbia Bagels (CB) and University Food Market (UFM), and the interaction between them arises from the fact that Columbia Bagels' sales depend on the price posted by University Food Market.

1.6

By considering a few sample prices, say, 40, 45, and 50 cents, and likely bagel sales at these prices, can you quantify how CB's sales revenue might depend on UFM's price? And vice versa?

1.7

For your numbers what would be a rational strategic price for CB if, say, UFM's bagels were priced at 45

cents? What if UFM raised its price to 50 cents?

Consider yet another scenario: Presidential primaries. The principal group of players are the candidates

themselves. Only one of them is going to win his party's nomination; hence, the interaction among them.

1.8

What are the strategic choices available to a candidate? (Hint: Think of political issues that a candidate can

highlight, how much time he can spend in any given state, etc.)

1.9

What is the objective against which we can measure the rationality of a candidate's choice? Should the

objective only be the likelihood of winning?17

¹⁷ Bear in mind the hope once articulated by a young politician from Massachusetts, John F. Kennedy, that his margin of victory would be narrow; Kennedy explained that his father "hated to overspend!"


Section 1.3

1.10

Show in detail that player 2 has a winning strategy in Nim if the two piles of matches are balanced. [Your answer should follow the formalism introduced in the text; in particular, every configuration of matches should be written as (m, n) and removing matches should be represented as a reduction in either m or n.]

1.11

Show that player 2 has exactly one winning strategy. In other words, show that if the winning strategy of question 1.10 is not followed, then player 1 can at some point in the game turn the tables on player 2.
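An exhaustive search can make the claims of 1.10 and 1.11 concrete on small piles. The sketch below assumes the two-pile rules from the text (a move removes one or more matches from a single pile, and whoever removes the last match wins) and labels each configuration (m, n) as winning or losing for the player about to move. The helper names `mover_wins` and `winning_reply` are hypothetical, and checking small cases illustrates the result rather than proving it.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def mover_wins(m: int, n: int) -> bool:
    """True if the player about to move from (m, n) can force a win.

    At (0, 0) the opponent has just taken the last match, so the
    player to move has lost; that case falls out of the empty loops.
    """
    for k in range(m):  # reduce m to k
        if not mover_wins(k, n):
            return True
    for k in range(n):  # reduce n to k
        if not mover_wins(m, k):
            return True
    return False


def winning_reply(m: int, n: int):
    """A move (m', n') that leaves the opponent losing, or None if none exists."""
    for k in range(m):
        if not mover_wins(k, n):
            return (k, n)
    for k in range(n):
        if not mover_wins(m, k):
            return (m, k)
    return None


# Balanced piles lose for the mover (so player 2 wins); unbalanced piles win.
for m in range(8):
    for n in range(8):
        assert mover_wins(m, n) == (m != n)

print(winning_reply(5, 3))  # (3, 3): rebalance by cutting the larger pile
```

The reply found from an unbalanced position is always the rebalancing move, which is the strategy player 2 uses against every move player 1 makes from a balanced start.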

1.12

Verbally analyze the game of tic-tac-toe. Show that there is not a winning strategy in this game.
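Exercise 1.12 asks for a verbal argument, but a mechanical cross-check is possible: search every tic-tac-toe position backward from the end and score it +1 if X can force a win, -1 if O can, and 0 otherwise. The sketch assumes X moves first; the names `value` and `winner` are illustrative. A value of 0 for the empty board means neither player has a winning strategy.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board, cells indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if that mark fills a line, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board: str, to_move: str) -> int:
    """Value under best play: +1 if X can force a win, -1 if O can, 0 for a draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0  # full board with no completed line: a draw
    nxt = "O" if to_move == "X" else "X"
    results = [value(board[:i] + to_move + board[i + 1:], nxt)
               for i, cell in enumerate(board) if cell == "."]
    # X picks the move with the highest value, O the lowest.
    return max(results) if to_move == "X" else min(results)


print(value("." * 9, "X"))  # 0: neither player can force a win
```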

The next four questions have to do with a three-pile version of Nim. The rules of the game are identical to the case when there are two piles. In particular, each player can only choose from a single pile at a time and can remove any number of the matches remaining in that pile. The last player to remove matches wins.

1.13

Show that if the piles have an equal number of matches, then player 1 has a winning strategy. [You may wish to try out the configurations (1, 1, 1) and (2, 2, 2) to get a feeling for this argument.]

1.14

Show that the same result is true if two of the piles have an equal number of matches; that is, show that player 1 has a winning strategy in this case. [This time you might first try out the configurations (1, 1, p) and (2, 2, p), where p is a number different from 1 and 2, respectively.]

1.15

Show that if the initial configuration of matches is (3, 2, 1), or any permutation of that configuration, then player 2 has a winning strategy. As in the previous questions, carefully demonstrate what this winning strategy is.

1.16

Use your answers to the previous questions to show that if the initial configuration is (3, 2, p), (3, 1, p), or (1, 2, p), where p is any number greater than 3, then player 1 has a winning strategy.
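The same exhaustive search extends to three piles. The sketch below recomputes winning and losing configurations under the rules just stated and checks the claims of 1.13 through 1.16 on small piles (third pile up to 8 matches). It verifies instances, not the general statements, and `mover_wins` is an illustrative name.

```python
from functools import lru_cache
from itertools import permutations


@lru_cache(maxsize=None)
def mover_wins(piles: tuple) -> bool:
    """True if the player about to move from `piles` can force a win.

    A move shrinks exactly one pile by one or more matches; whoever
    removes the last match wins, so an empty table is a loss for the mover.
    """
    for i, size in enumerate(piles):
        for k in range(size):  # leave k matches in pile i
            child = tuple(sorted(piles[:i] + (k,) + piles[i + 1:]))
            if not mover_wins(child):
                return True  # this move leaves the opponent in a losing position
    return False


# 1.13: three equal piles are a win for player 1 (the first mover).
assert all(mover_wins((n, n, n)) for n in range(1, 7))
# 1.14: two equal piles plus a different third pile p also favor player 1.
assert all(mover_wins((a, a, p)) for a in range(1, 5) for p in range(1, 8) if p != a)
# 1.15: (3, 2, 1) in any order loses for the mover, so player 2 wins.
assert not any(mover_wins(perm) for perm in permutations((3, 2, 1)))
# 1.16: (3, 2, p), (3, 1, p), (1, 2, p) with p > 3 favor player 1.
assert all(mover_wins((x, y, p)) for x, y in [(3, 2), (3, 1), (1, 2)] for p in range(4, 9))
print("checks for 1.13-1.16 passed")
```

As a further cross-check, the classical nim-sum rule (the mover loses exactly when the bitwise XOR of the pile sizes is zero) agrees with this search on every small case.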

The next three questions have to do with the game of Marienbad played by two players.


1.17

Show that if the configuration is (1, 1) then player 1 has a winning strategy.

1.18

On the other hand, if the two piles are balanced, with at least two matches in each pile, player 2 has a winning strategy. Prove in detail that this must be the case.

1.19

Finally, show that if the two piles are unbalanced, player 1 has a winning strategy.

1.20

Consider the voting model of the second example of Section 1.3 (p. 10). Prove that in the second round, each voter can do no better than vote truthfully according to her preferences.

1.21

Suppose voter 3's preferences were (instead of as in the text). What would be the outcome of truthful voting in this case? What about strategic voting?

1.22

Write down a payoff matrix that corresponds to the legal scenario discussed at the end of the chapter (p. 12). Give two alternative specifications of payoffs, the first in which this does correspond to a Prisoners' Dilemma and the second in which it does not.

Suppose the Prisoners' Dilemma were modified by allowing a third choice for each player: Partly Confess. Suppose further that the prison sentences (in years) in this modified game are as follows.

| Calvin \ Klein | Confess | Not | Partly |
|---|---|---|---|
| Confess | 2, 2 | 0, 5 | 1, 3 |
| Not | 5, 0 | , | 4, |
| Partly | 3, 1 | , 4 | 1, 1 |

(As always, keep in mind that shorter prison terms are preferred by each player to longer prison terms.)

1.23

Is it true that Calvin is better off confessing to the crime no matter what Klein does? Explain.

1.24

Is there any other outcome in this game, other than both players confessing, which is sensible? Your answer should informally explain why you find any other outcome sensible (if you do).


Chapter 2

A First Look At The Theory

This chapter will provide an introduction to game theory's toolkit: the formal structures within which we can study strategic interdependence. Section 2.1 gives some necessary background. Sections 2.2 and 2.3 detail the two principal ways in which a game can be written, the Extensive Form and the Strategic Form of a game. Section 2.4 contains a discussion of utility (or payoff) functions, and Section 2.5 concludes by revisiting some of the examples discussed in the previous chapter.

2.1 Rules Of The Game: Background

Every game is played by a set of rules which have to specify four things.

1. who is playing: the group of players that strategically interacts
2. what they are playing with: the alternative actions or choices, the strategies, that each player has available
3. when each player gets to play (in what order)
4. how much they stand to gain (or lose) from the choices made in the game

In each of the examples discussed in Chapter 1, these four components were described verbally. A verbal description can be very imprecise and tedious, so it is desirable to find a more compact description of the rules. The two principal representations of (the rules of) a game are called, respectively, the normal (or strategic) form of a game and the extensive form; these terms will be discussed later in this chapter.


Common knowledge about the rules

Every player knows the rules of a game and that fact is commonly known.

There is, however, a preliminary question to ask before we get to the rules: what is the rule about knowing the rules? Put differently, how much are the players in a game supposed to know about the rules? In game theory it is standard to assume common knowledge about the rules.

That everybody has knowledge about the rules means that if you asked any two players in the game a question about who, what, when, or how much, they would give you the same answer. This does not mean that all players are equally well informed or equally influential; it simply means that they know the same rules. To understand this better, think of the rules of the game as being like a constitution (of a country or a club or, for that matter, a country club). The constitution spells out the rules for admitting new members, electing a President, acquiring new property, and so forth. Every member of this club is supposed to have a copy of the constitution; in that sense they all have knowledge of the rules. This does not mean that they all get to make the same choices or that they all have the same information when they make their choices. For instance, perhaps it is only the Executive Committee members who decide whether the club should build a new tennis court. In making this decision, they may furthermore have access to reports about the financial health of the club that are not made available to all members. The point is that both of these rules (the Executive Committee's decision-making power and its access to confidential reports) are in the club's constitution and hence are known to everyone.

This established, the next question is: does everyone know that everyone knows? Common knowledge of the rules goes even a few steps further: first, it says yes, everybody knows that the constitution is available to all. Second, it says that everybody knows that everybody knows that the constitution is widely available. And third, that everybody knows that everybody knows that everybody knows, ad infinitum.¹ In a two-player game, common knowledge of the rules says not only that player 1 knows the rules, but that she also knows that player 2 knows the rules, knows that 2 knows that 1 knows the rules, knows that 2 knows that 1 knows that 2 knows the rules, and so on.
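The nested statements in the two-player case follow a simple alternating pattern, which the small sketch below spells out from player 1's point of view (the function name `knowledge_claim` is illustrative). Each additional level adds one more "knows that" link with the players alternating; common knowledge asserts every level at once, which is why no finite list of such sentences exhausts it.

```python
def knowledge_claim(depth: int, first: int = 1, second: int = 2) -> str:
    """Spell out one level of knowledge about the rules, starting from `first`.

    depth 0 gives "1 knows the rules"; each extra level inserts one more
    "knows that", with the two players alternating.
    """
    knowers = [first if i % 2 == 0 else second for i in range(depth + 1)]
    return " knows that ".join(str(p) for p in knowers) + " knows the rules"


for d in range(4):
    print(knowledge_claim(d))
```

Calling `knowledge_claim(d, first=2, second=1)` gives the mirror-image hierarchy from player 2's point of view; common knowledge requires both hierarchies at every depth.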

In the next two sections we will discuss the two alternative representations of the three rules who, what, and when. The final rule, how much, will be discussed in Section 2.4.

2.2 Who, What, When: The Extensive Form

Extensive form

A pictorial representation of the rules. The main pictorial form is called the game tree, which is made up of a root and branches arranged in order.

The extensive form is a pictorial representation of the rules. Its main pictorial form is called the game tree. Much like an ordinary tree, a game tree starts from a root; at this starting point, or root, one of the players has to make a choice. The various choices available to this player are represented as branches emanating from the root. For example, in the game tree given by Figure 2.1, below, the root is denoted a; there are three branches emerging from the root, which correspond to the three choices b(us), c(ab), and s(ubway).²

At the end of each one of the branches that emerge from the root, either of two things can happen. The tree

might itself end with that branch; this signifies an end to the

1It may seem completely mysterious to you why we cannot simply stop with the assertion

"everybody knows the rules." The reason is that, knowing the rules, there might be certain

behaviors that a player will normally not undertake. However, if a player is unsure about whether

or not the others know that he knows the rules, he will consequently be unsure about whether the

others realize that he will not undertake those behaviors. This sort of doubt in players' minds can

have a dramaticand unreasonableimpact on what they end up doing, hence the need to assume

every level of knowledge.

2This tree could represent, for example, transportation choices in New York City; a player can either

take the bus, a cab, or the subway to his destination. Note that driving one's own car is not one of the

optionsthese are choices in New York City after all!

page_18

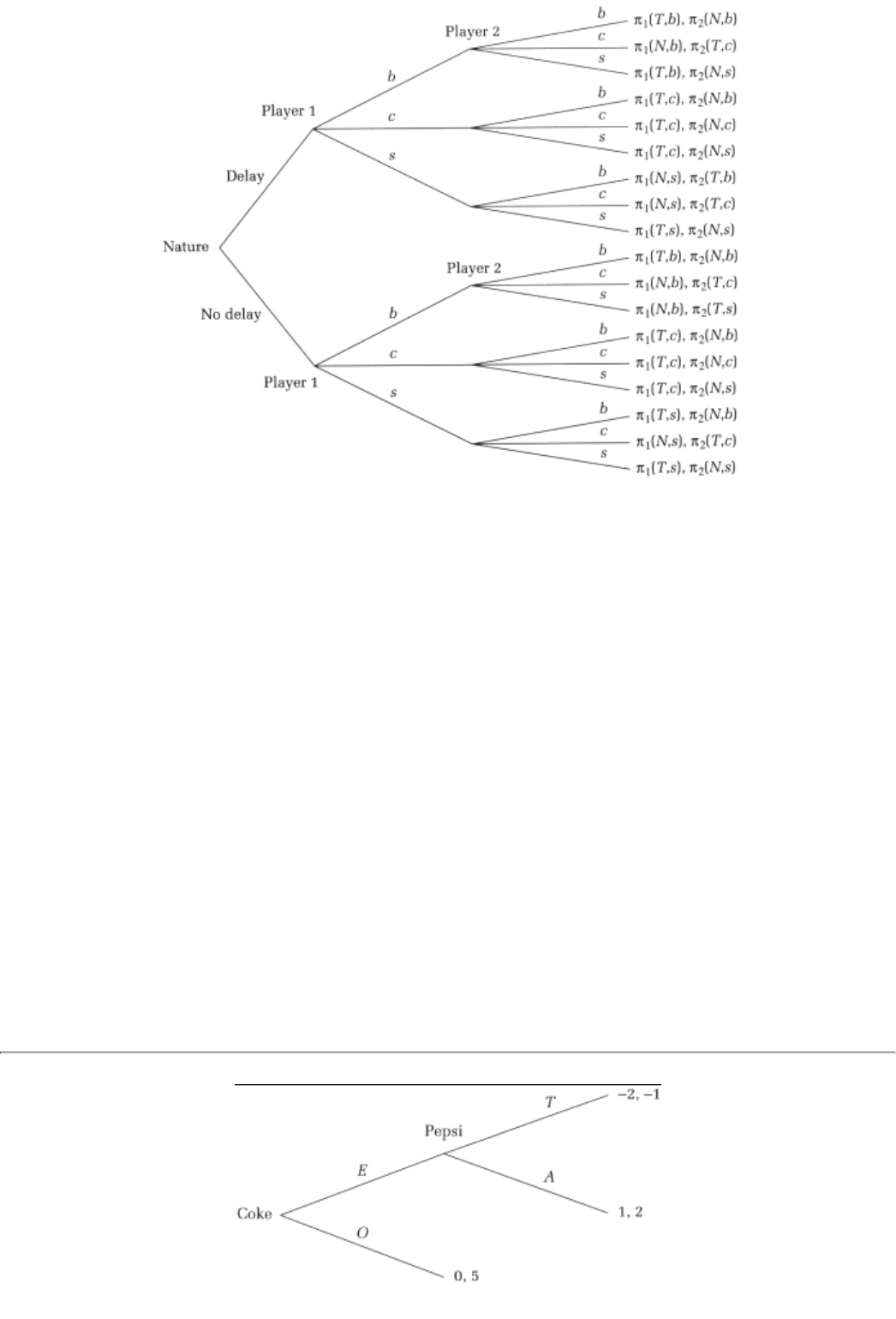

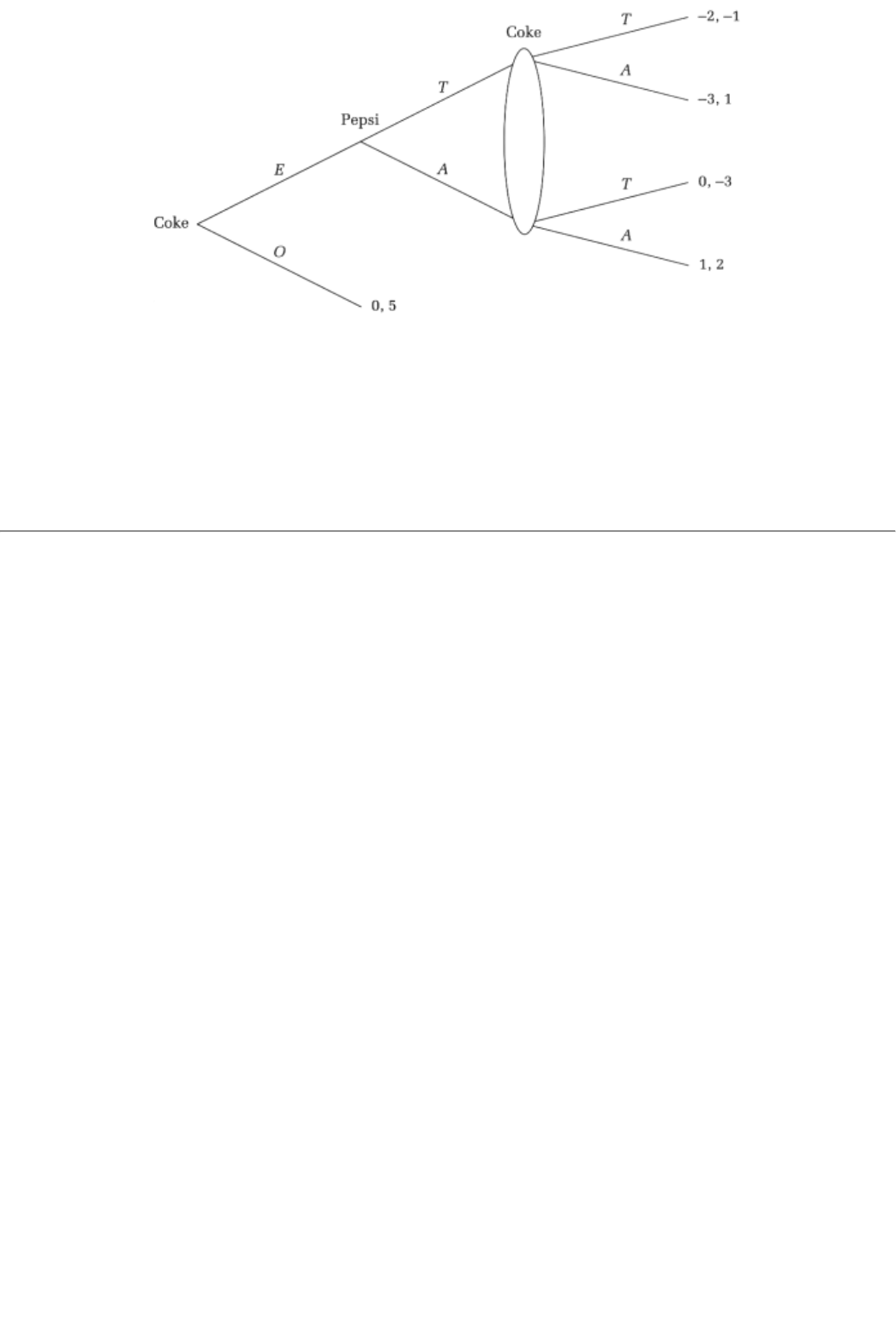

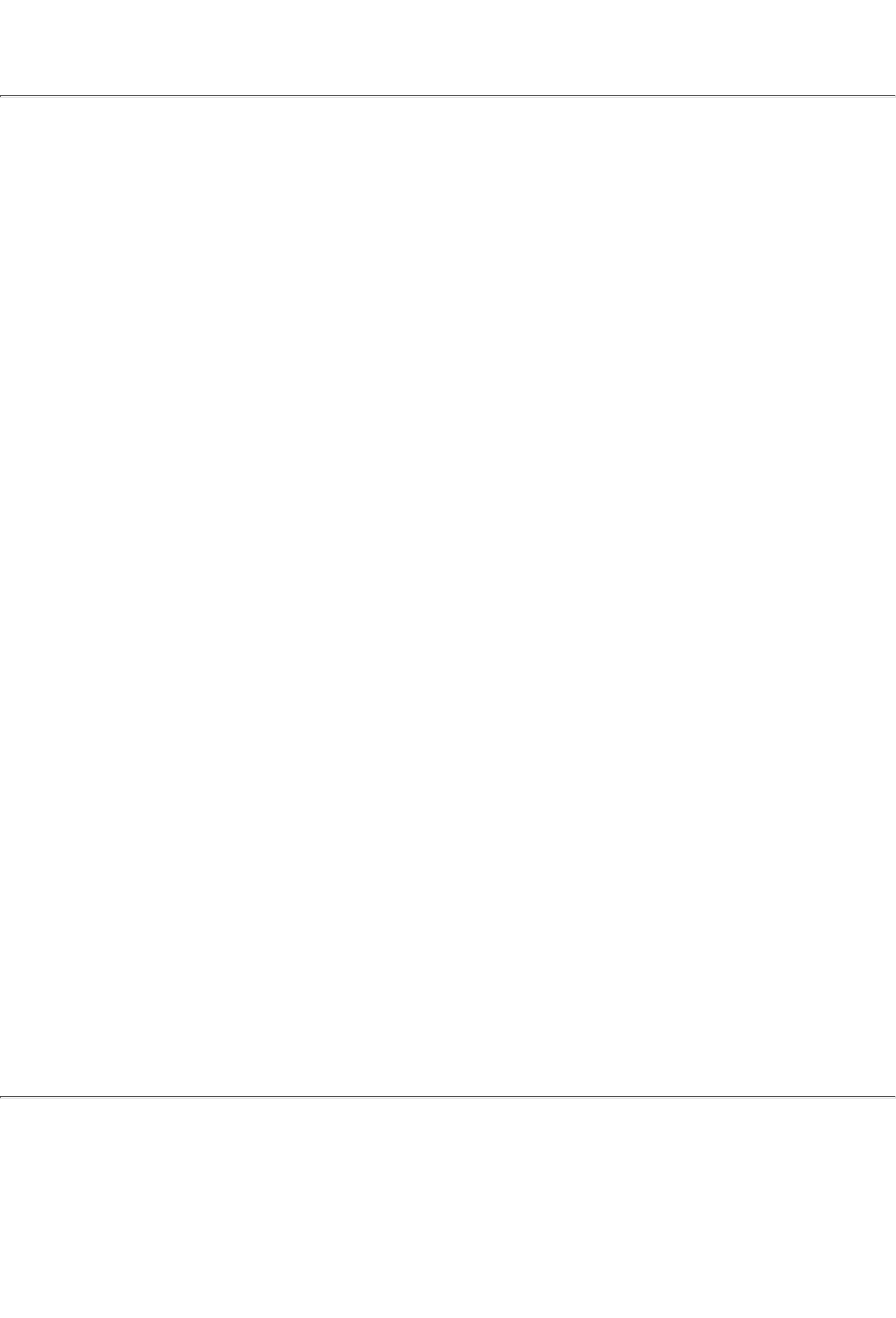

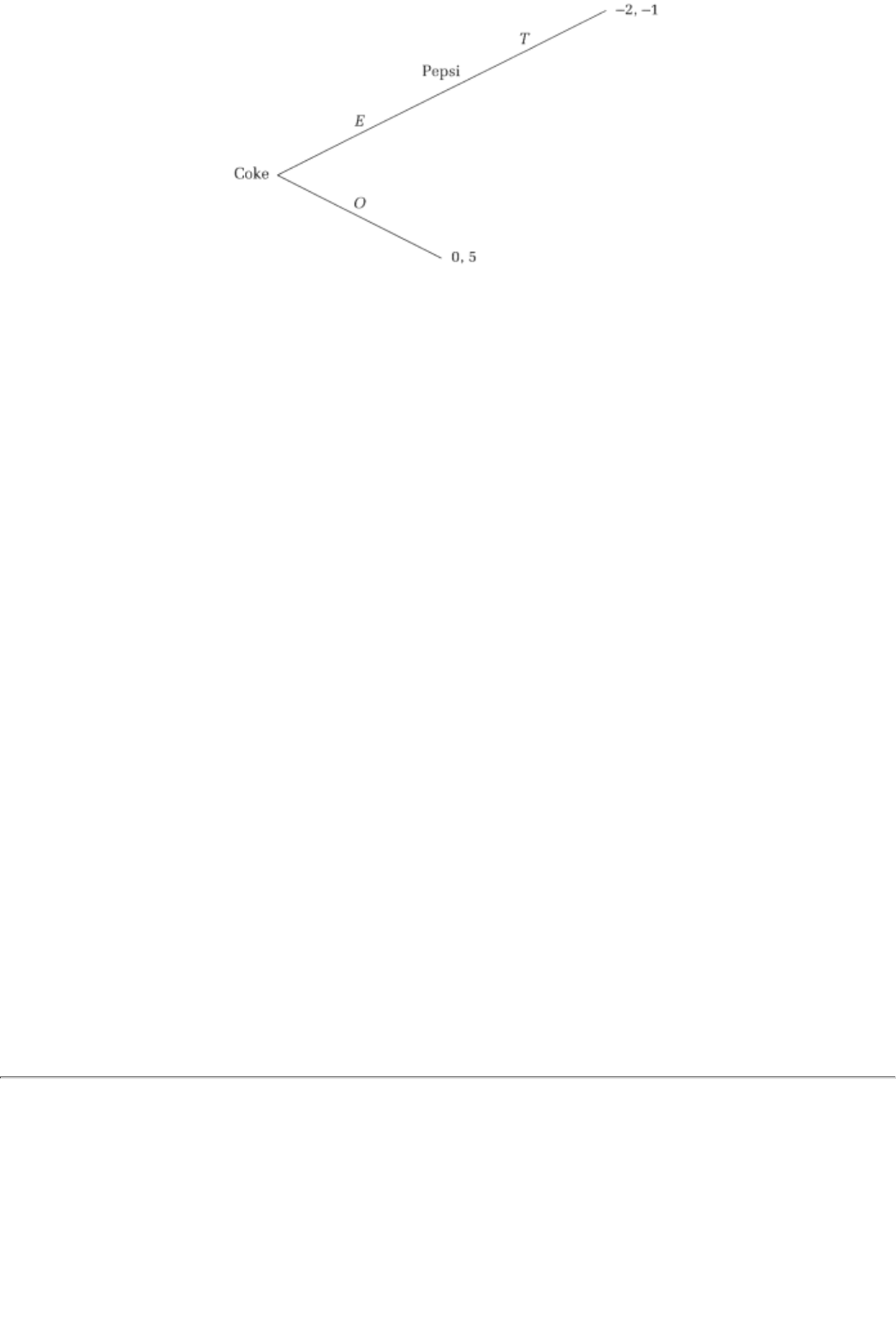

Page 19

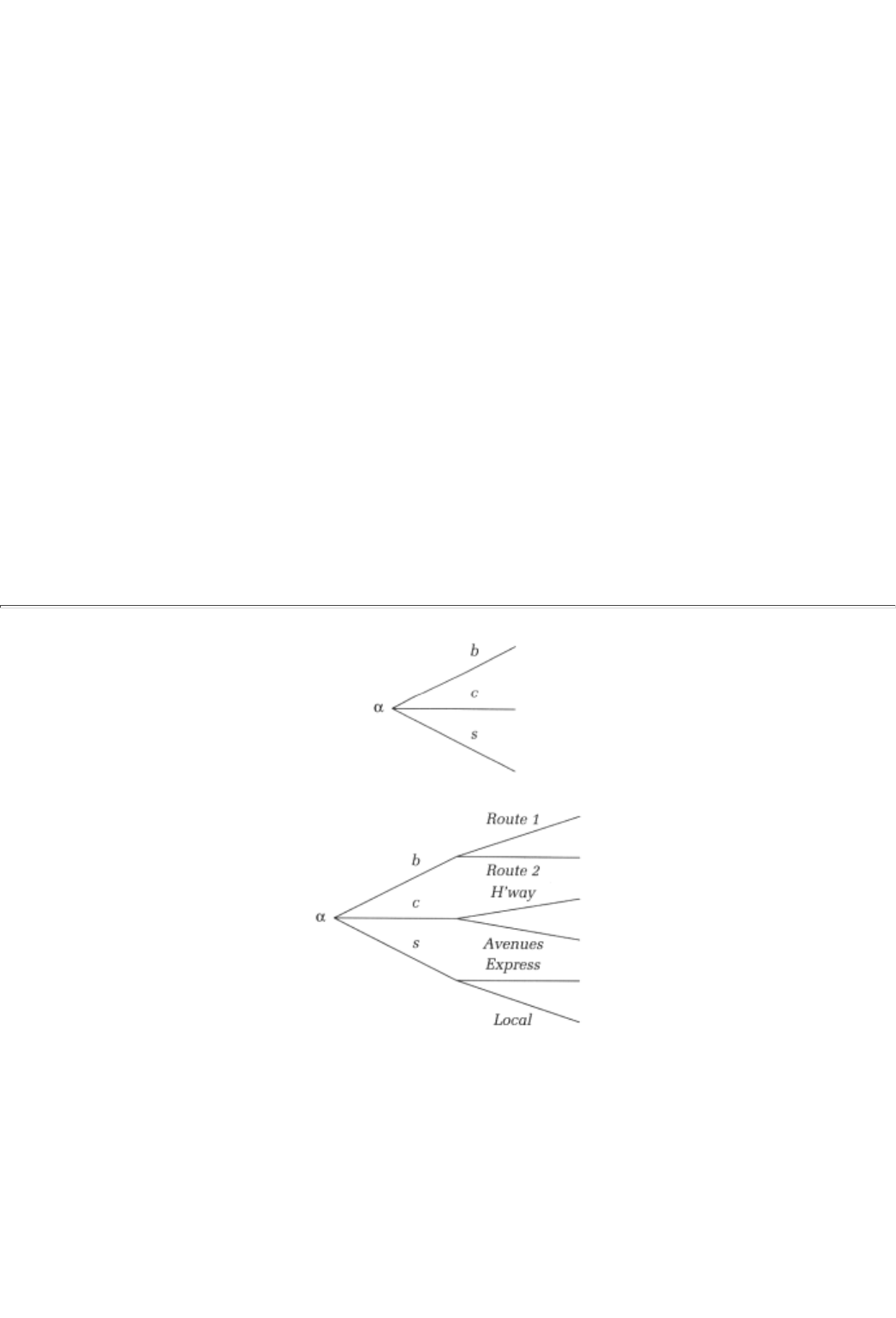

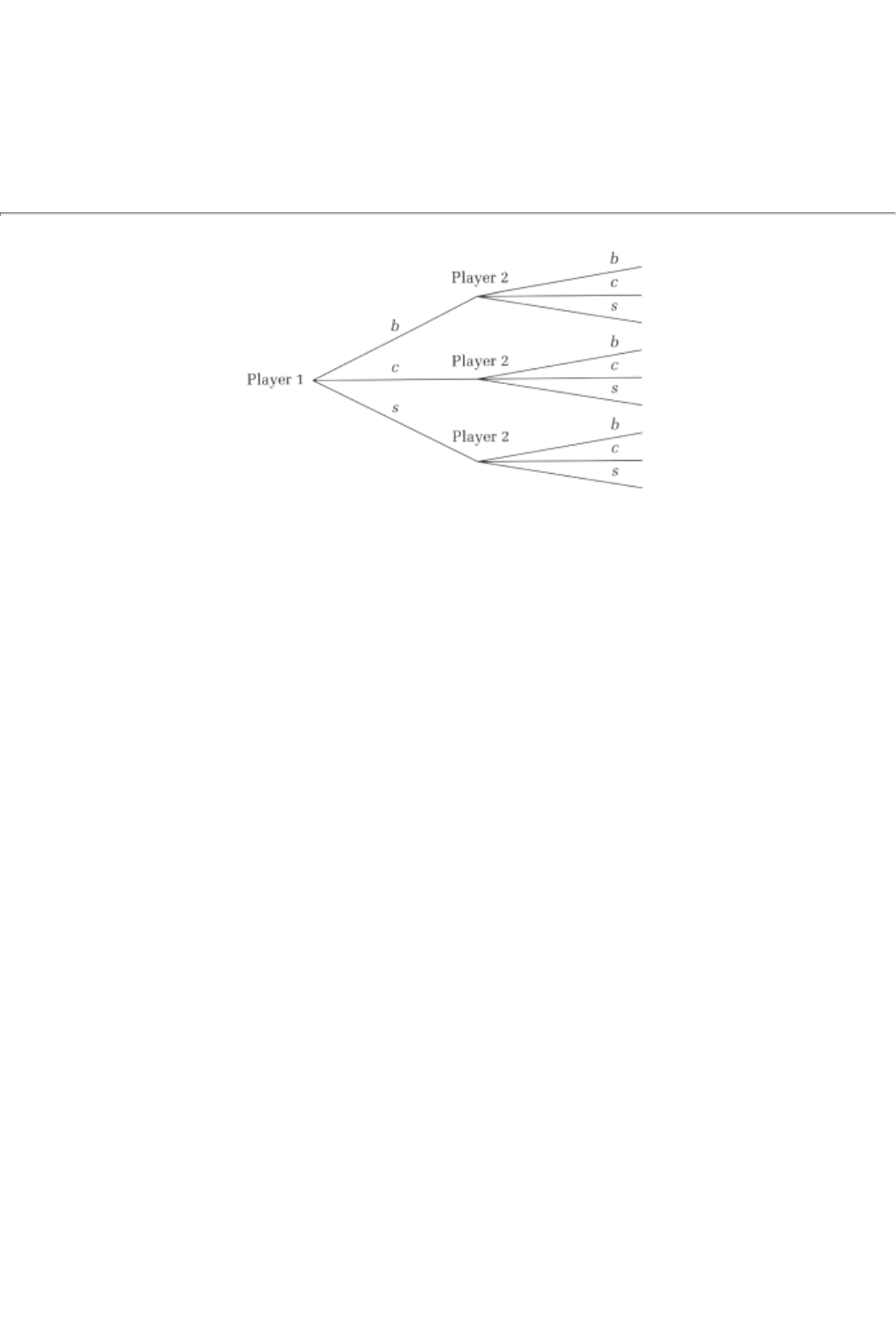

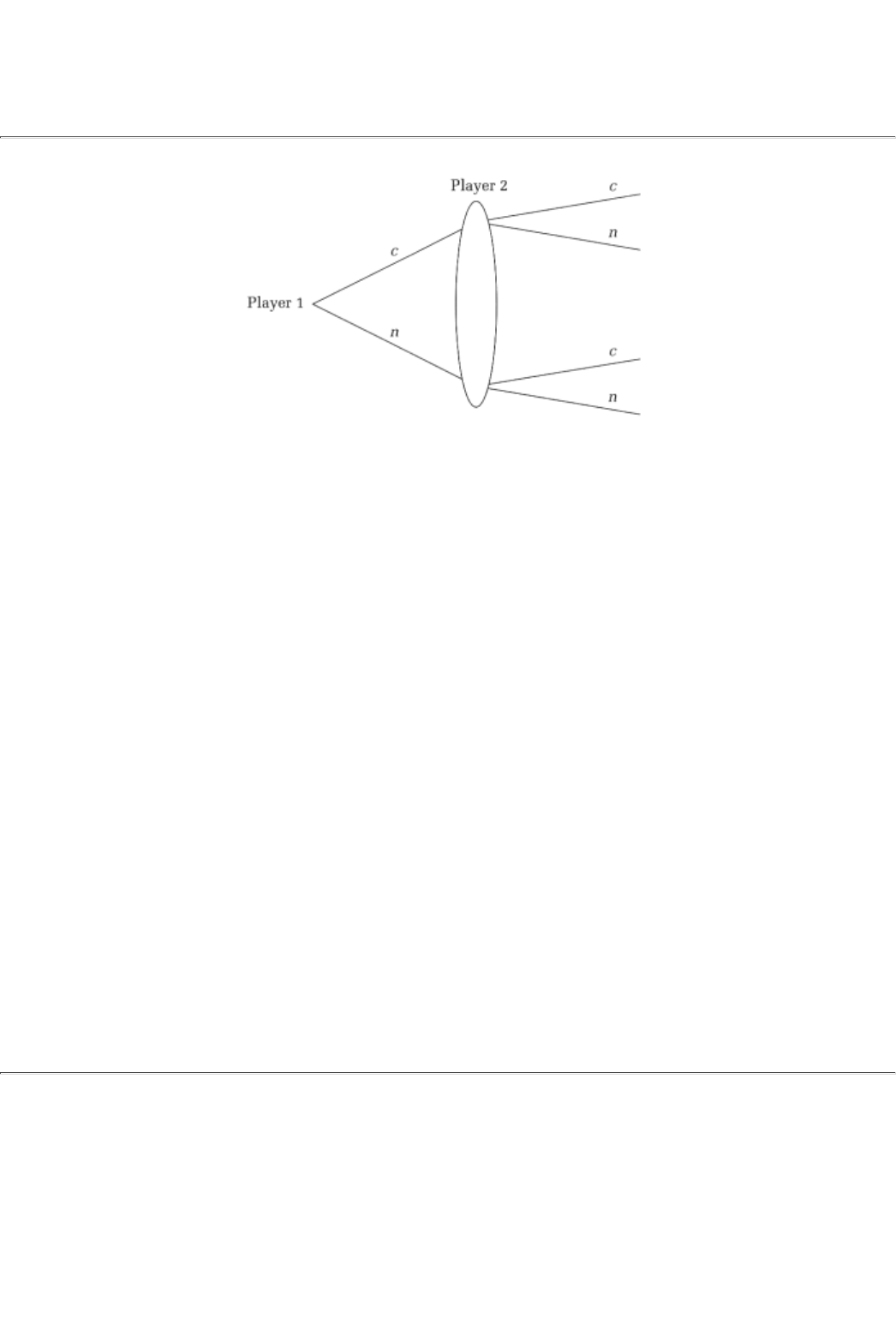

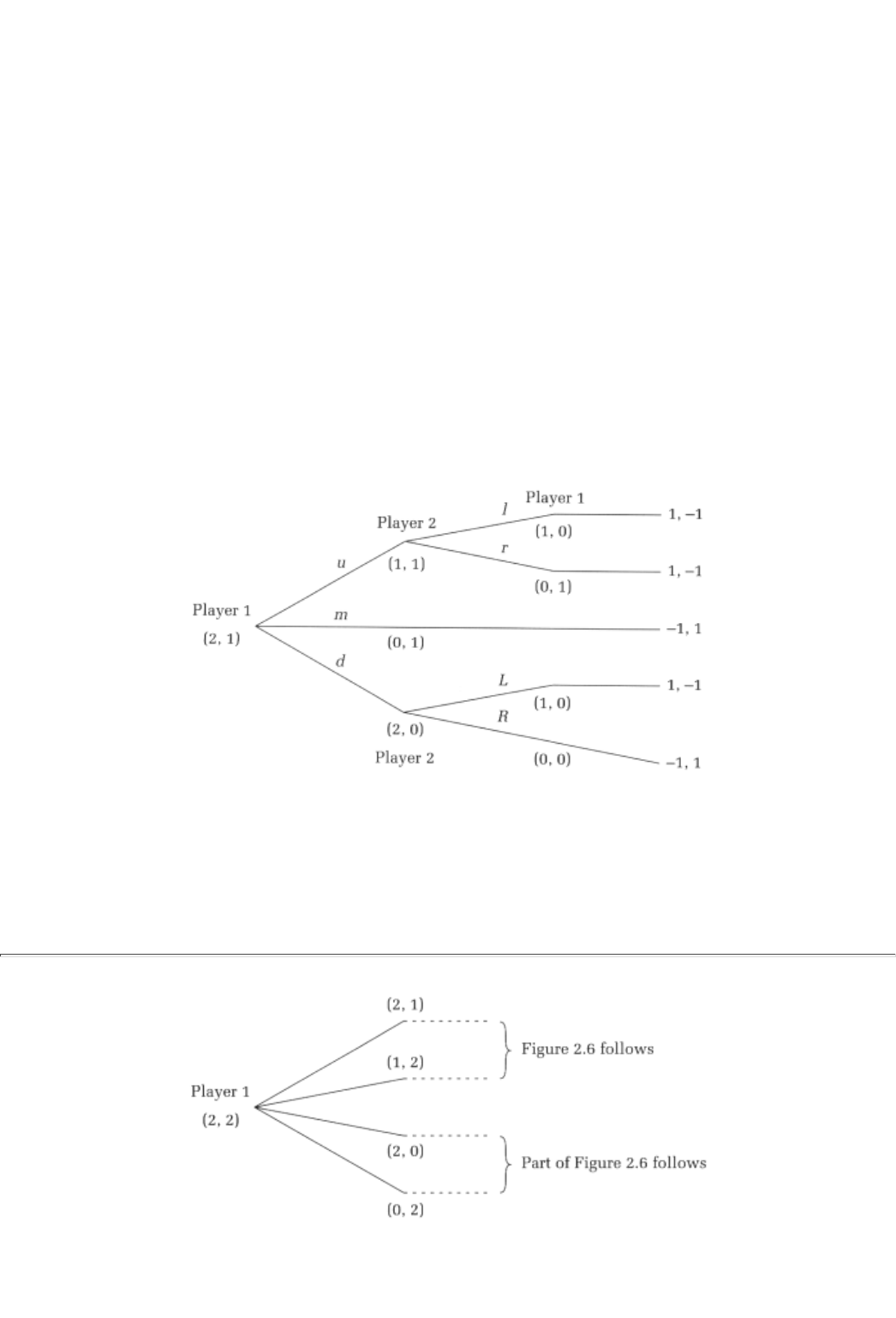

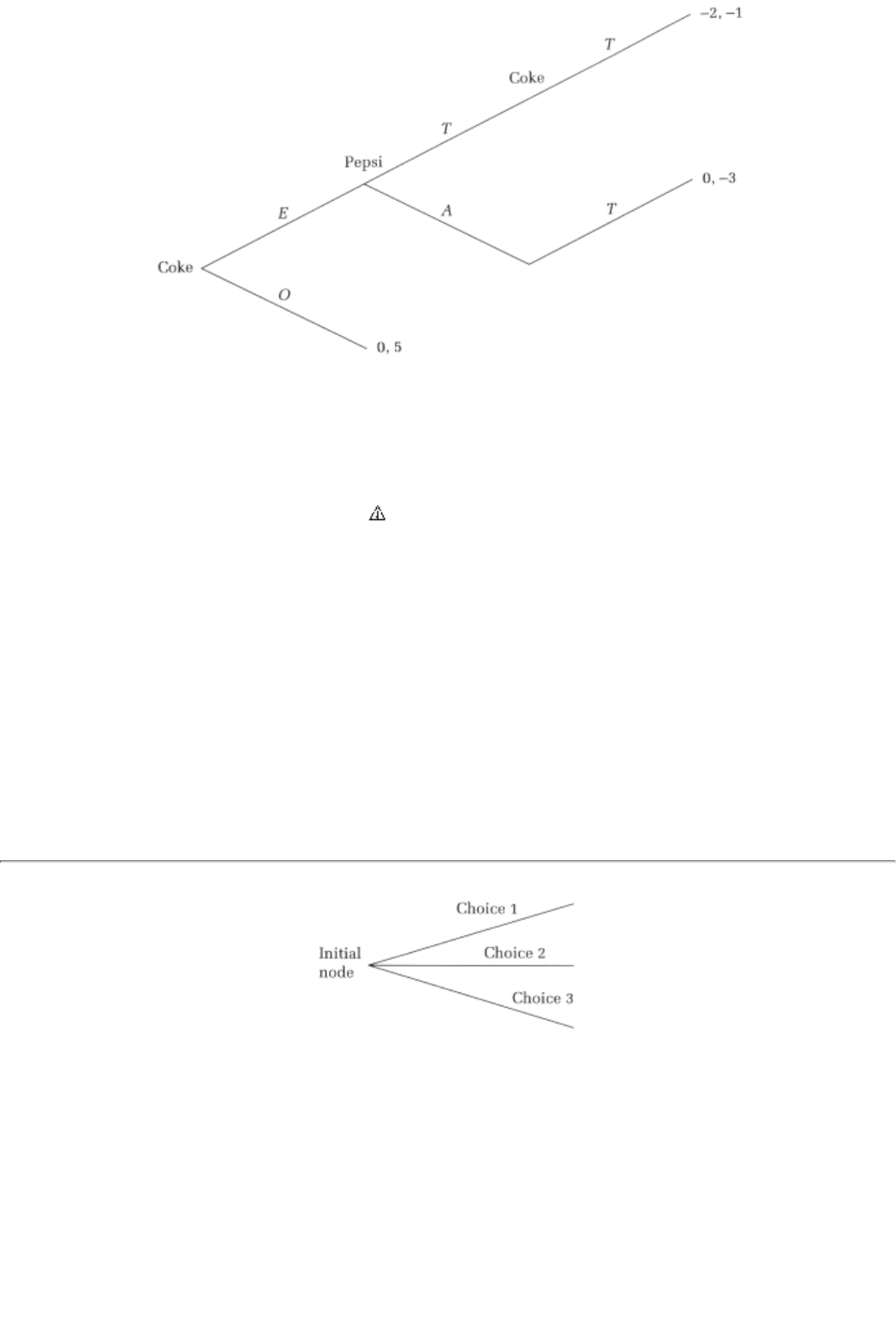

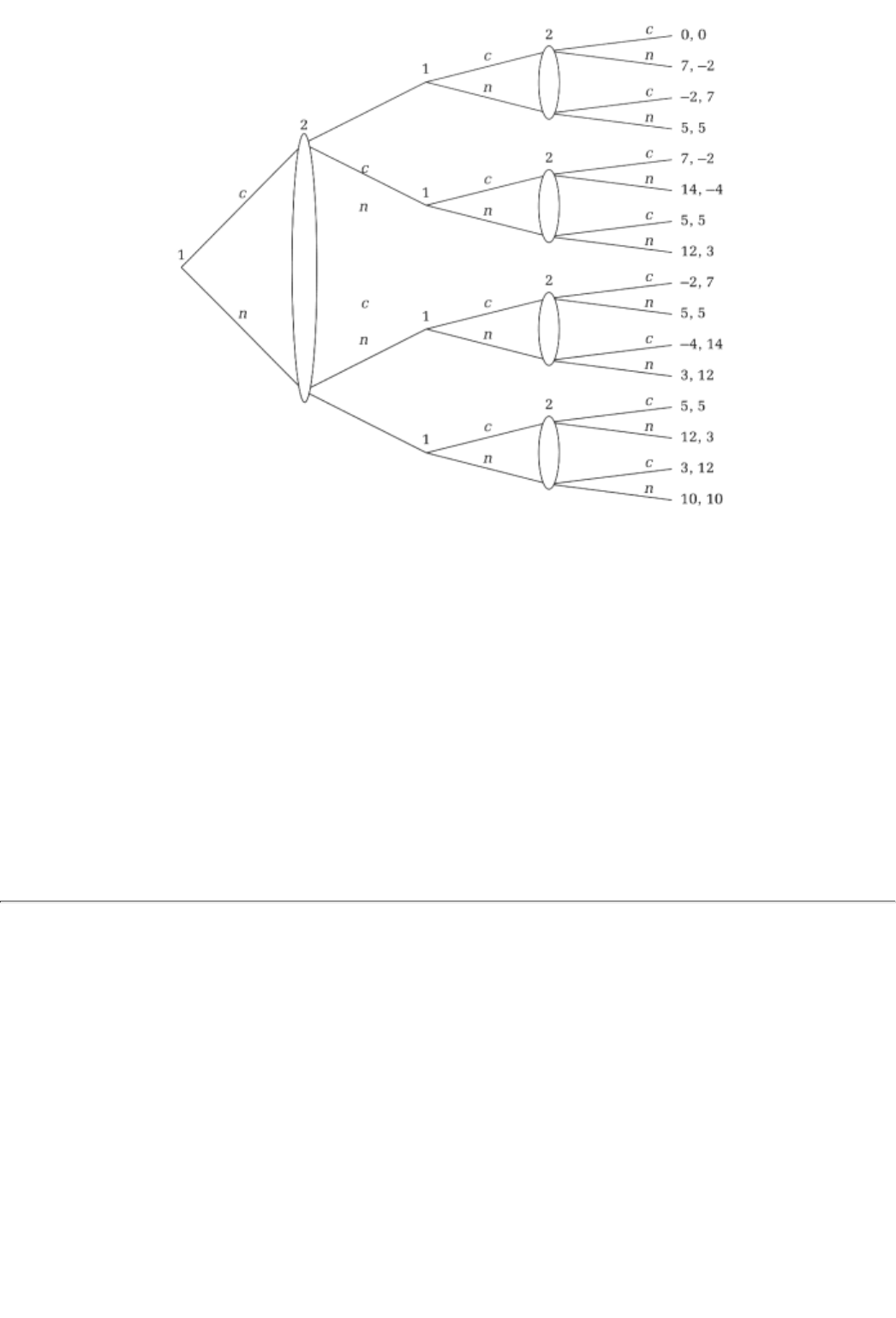

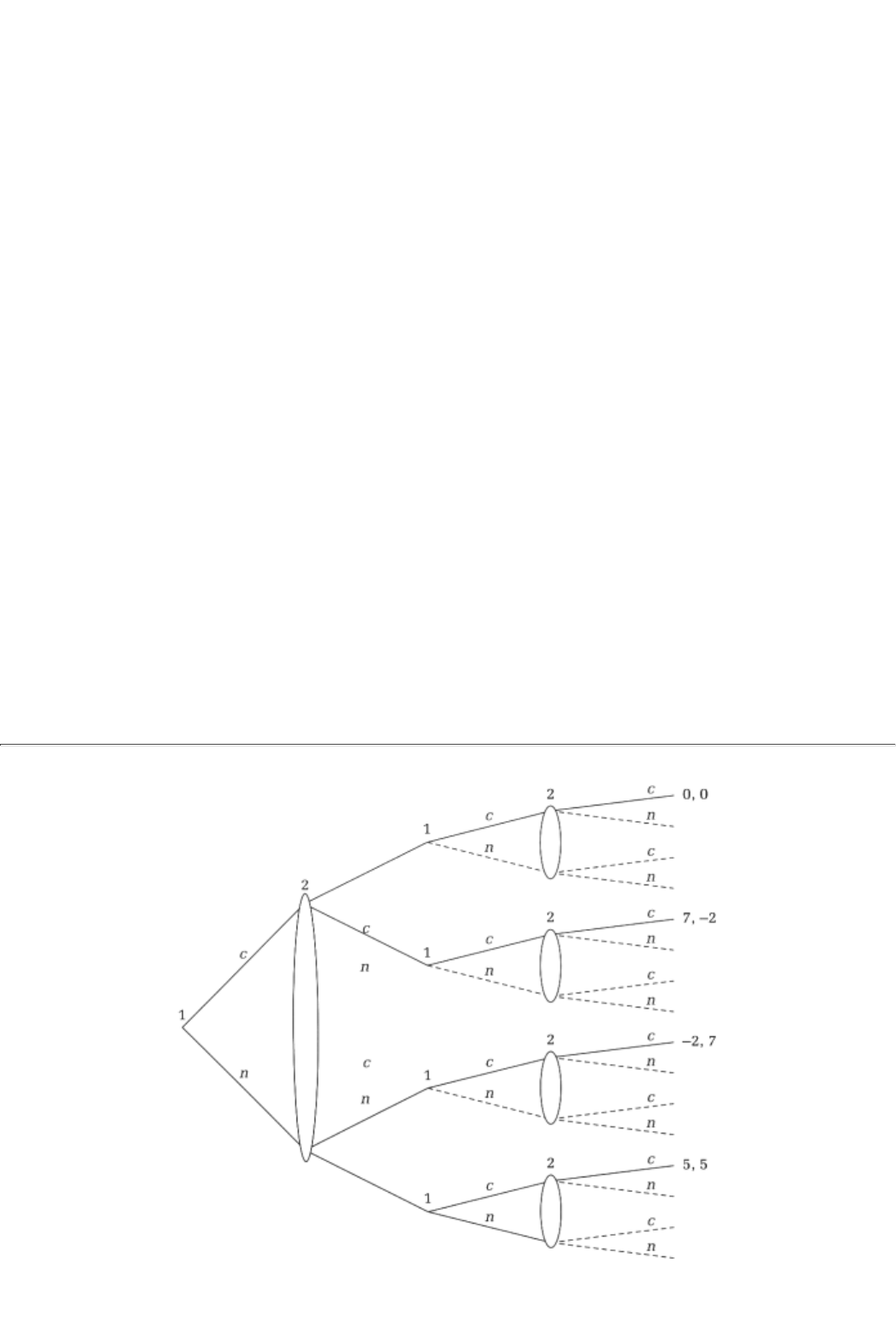

FIGURE 2.1

FIGURE 2.2

game. Alternatively, it might split into further branches. In Figure 2.1, for instance, the tree ends after each

of the three branches b, c, and s. On the other hand, in Figure 2.2 each branch further divides in two. The

branch splits into E(xpress) and L(ocal); the implication is that after the initial choice s is made, the player

gets to choose again between the two options E and L (whether to stay on the Local train or to switch to an

Express

). The end of branch s, where the subsequent decision between E and L is made, is called a decision

node of the tree. Figure 2.2 is therefore a two-stage decision problem with a single player.
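A game tree maps naturally onto a recursive data structure. In the sketch below (the class `Node` and function `plays` are illustrative names, not from the text), each node records who chooses there and the branches leaving it; a node with no branches is terminal, meaning the game ends there. It rebuilds Figure 2.1 and, modeling only the split after s that the text spells out, the second decision node where the player picks E or L.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    """A game-tree node: `player` chooses here among `branches`.

    A node with no branches is terminal.
    """
    player: Optional[str] = None
    branches: Dict[str, "Node"] = field(default_factory=dict)

    def is_terminal(self) -> bool:
        return not self.branches


def plays(node: Node, history: tuple = ()) -> List[tuple]:
    """Every path from `node` down to a terminal node, as a tuple of choices."""
    if node.is_terminal():
        return [history]
    paths = []
    for choice, child in node.branches.items():
        paths.extend(plays(child, history + (choice,)))
    return paths


# Figure 2.1: at root a the player picks b(us), c(ab), or s(ubway); each ends the game.
fig_2_1 = Node("player", {"b": Node(), "c": Node(), "s": Node()})

# The s branch leads to a second decision node: E(xpress) or L(ocal).
subway_split = Node("player", {
    "b": Node(),
    "c": Node(),
    "s": Node("player", {"E": Node(), "L": Node()}),
})

print(plays(fig_2_1))       # [('b',), ('c',), ('s',)]
print(plays(subway_split))  # [('b',), ('c',), ('s', 'E'), ('s', 'L')]
```

Because a single player owns every decision node, each path is simply a sequence of that player's choices; the two-stage structure shows up as the longer paths through s.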

Of greater interest is a situation where a different player gets to make the second choice. For instance, suppose that two players are on their way to see a Broadway musical that is in great demand, such as Rent. The demand is so great that there is exactly one ticket left; whoever arrives first will get that ticket. Hence, we have a game. The first player (player 1) leaves home a little earlier than player 2; in that sense he makes his choice at the root of the game tree, and subsequently the other player makes her transportation choice.

The extensive form of this game is represented in Figure 2.3.
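Figure 2.3 is not reproduced in this text, so the sketch below rests on two assumptions: each player chooses among the three options of Figure 2.1, and player 2 chooses at whichever node player 1's choice leads to. It illustrates the structural point: the root belongs to player 1, while player 2 owns one decision node at the end of each of player 1's branches. All names are hypothetical.

```python
from itertools import product

# Assumed choice set for both players: b(us), c(ab), s(ubway), as in Figure 2.1.
CHOICES = ("b", "c", "s")


def build_tree() -> dict:
    """Player 1 chooses at the root; player 2 then chooses at the node reached.

    Decision nodes are dicts with "player" and "branches"; terminal nodes
    store the pair of choices that led to them under "outcome".
    """
    return {
        "player": 1,
        "branches": {
            c1: {
                "player": 2,
                "branches": {c2: {"outcome": (c1, c2)} for c2 in CHOICES},
            }
            for c1 in CHOICES
        },
    }


def count_decision_nodes(node: dict, owner: int) -> int:
    """Number of decision nodes at which `owner` makes a choice."""
    if "branches" not in node:
        return 0
    here = 1 if node["player"] == owner else 0
    return here + sum(count_decision_nodes(child, owner)
                      for child in node["branches"].values())


def terminal_outcomes(node: dict) -> list:
    """Outcomes at the terminal nodes, listed left to right."""
    if "branches" not in node:
        return [node["outcome"]]
    return [o for child in node["branches"].values() for o in terminal_outcomes(child)]


tree = build_tree()
print(count_decision_nodes(tree, 1), count_decision_nodes(tree, 2))  # 1 3
assert terminal_outcomes(tree) == list(product(CHOICES, repeat=2))  # nine endings
```

Payoffs are deliberately left out: the text has not yet said who arrives first under each pair of choices, and the "how much" rule is taken up in Section 2.4.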

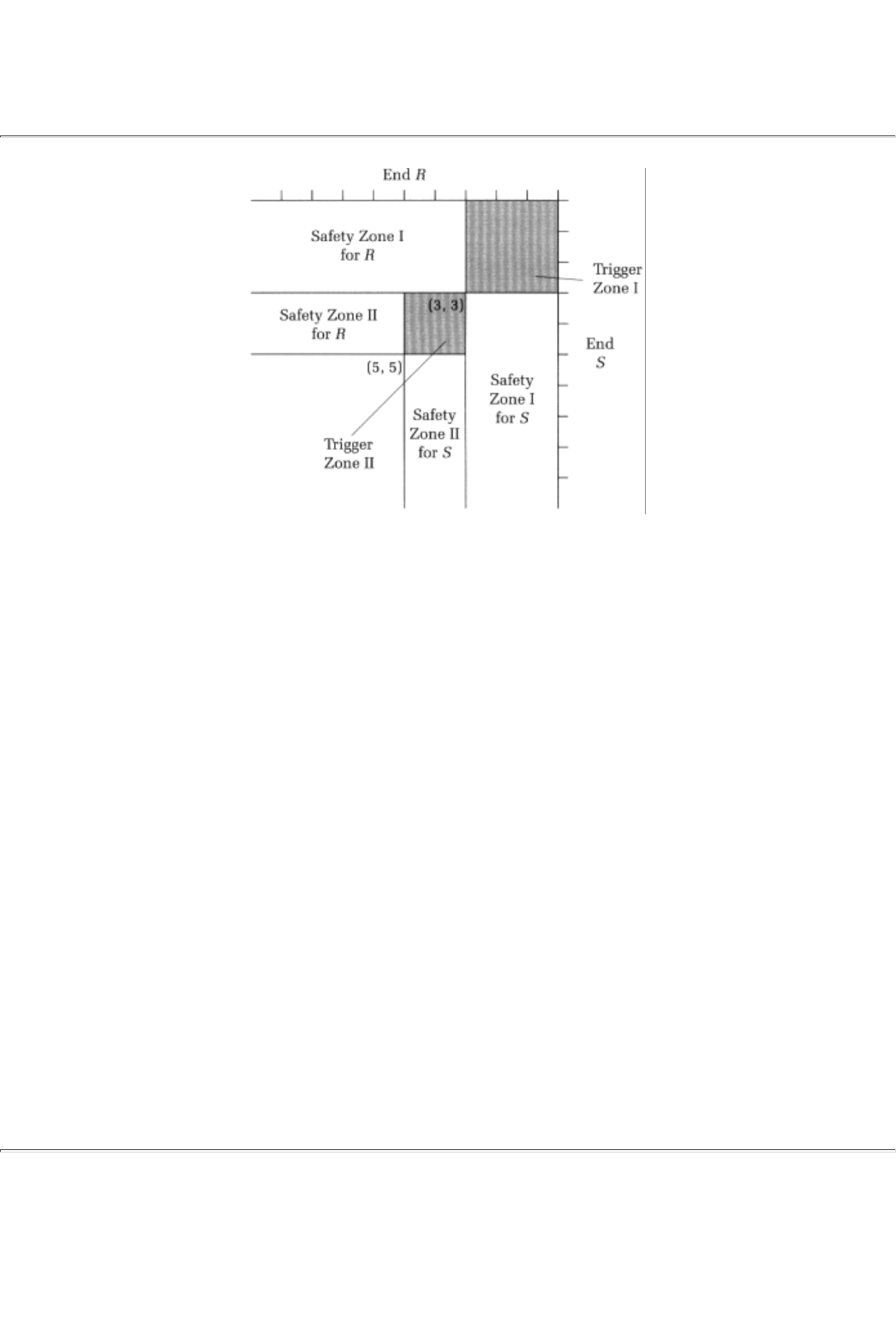

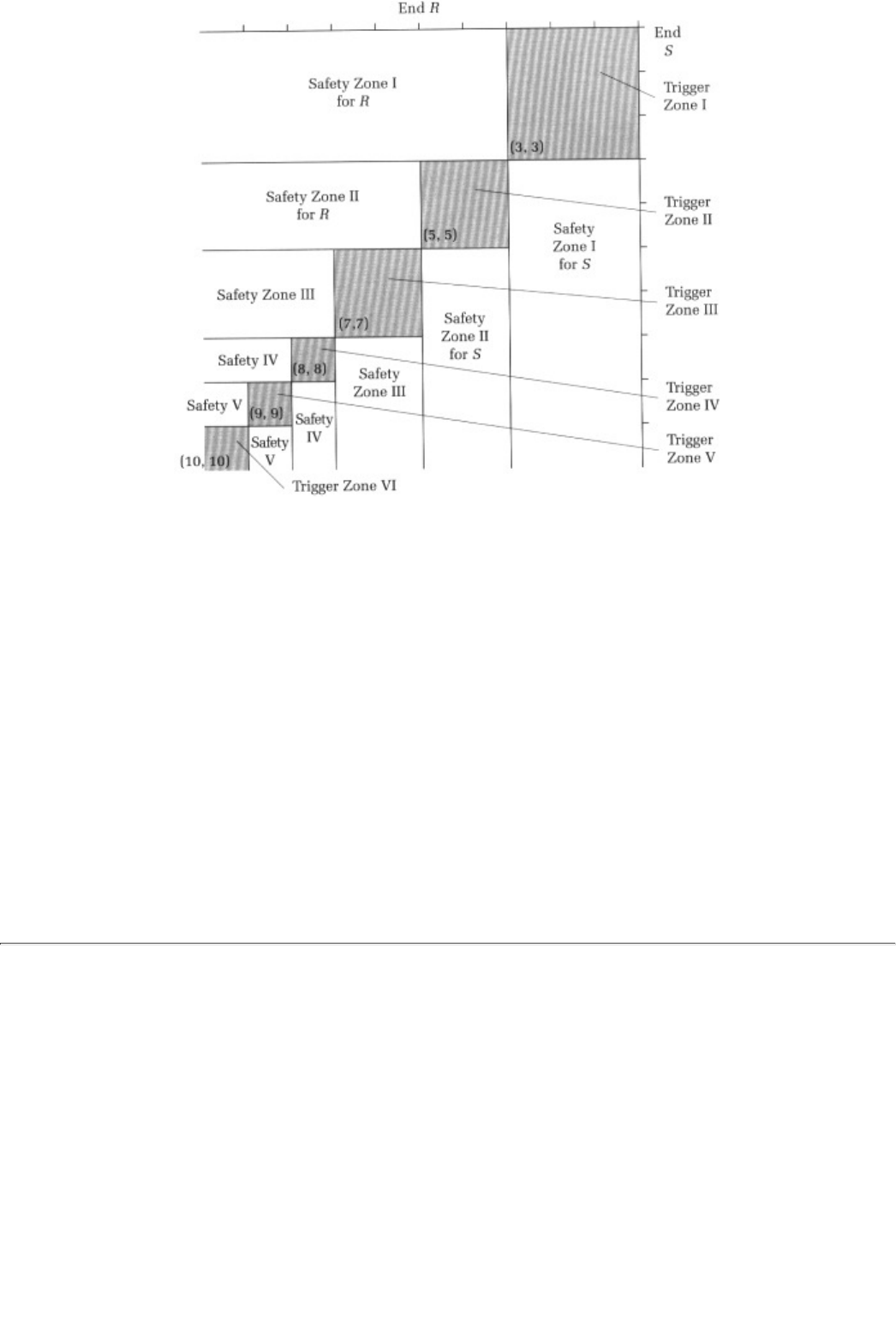

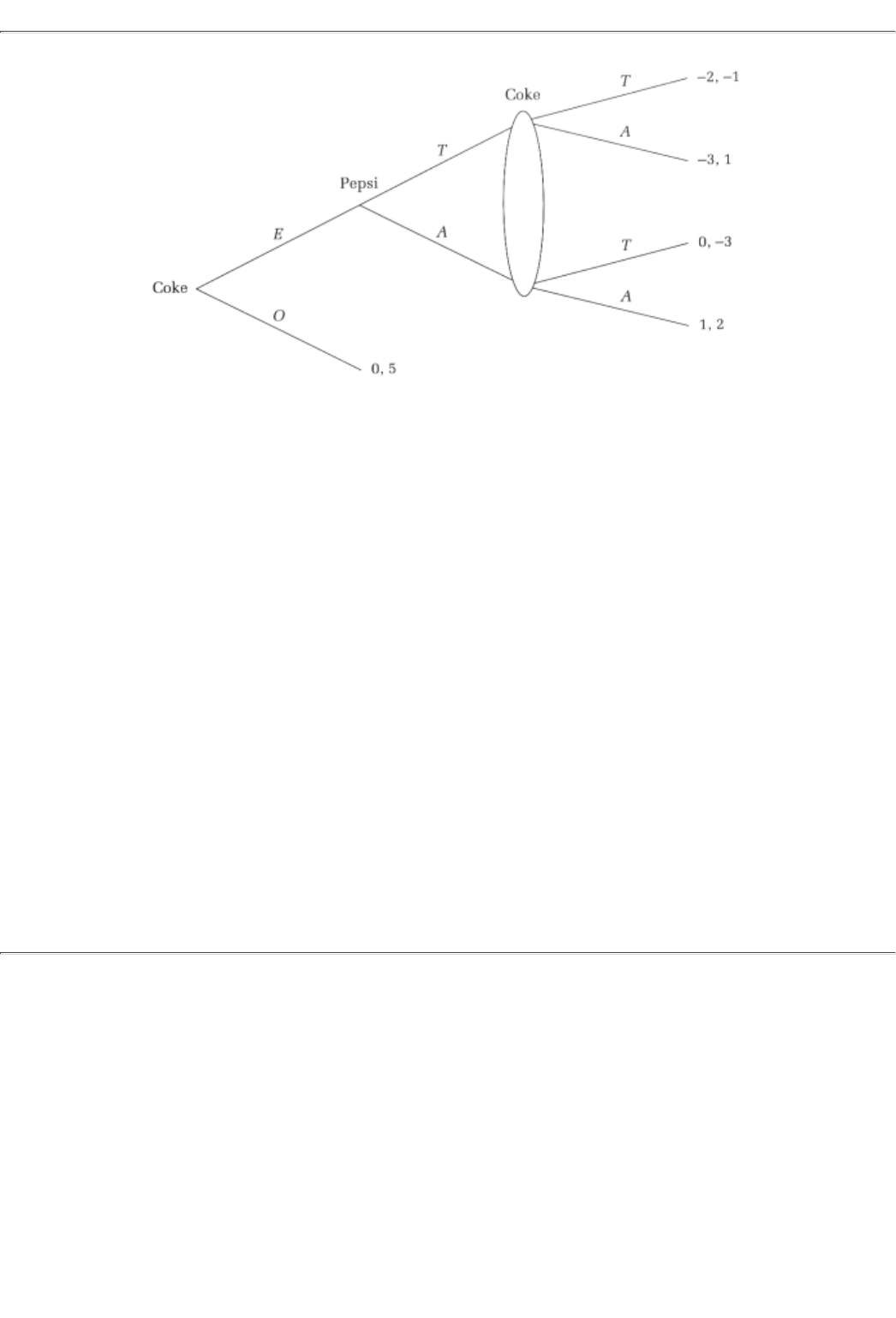

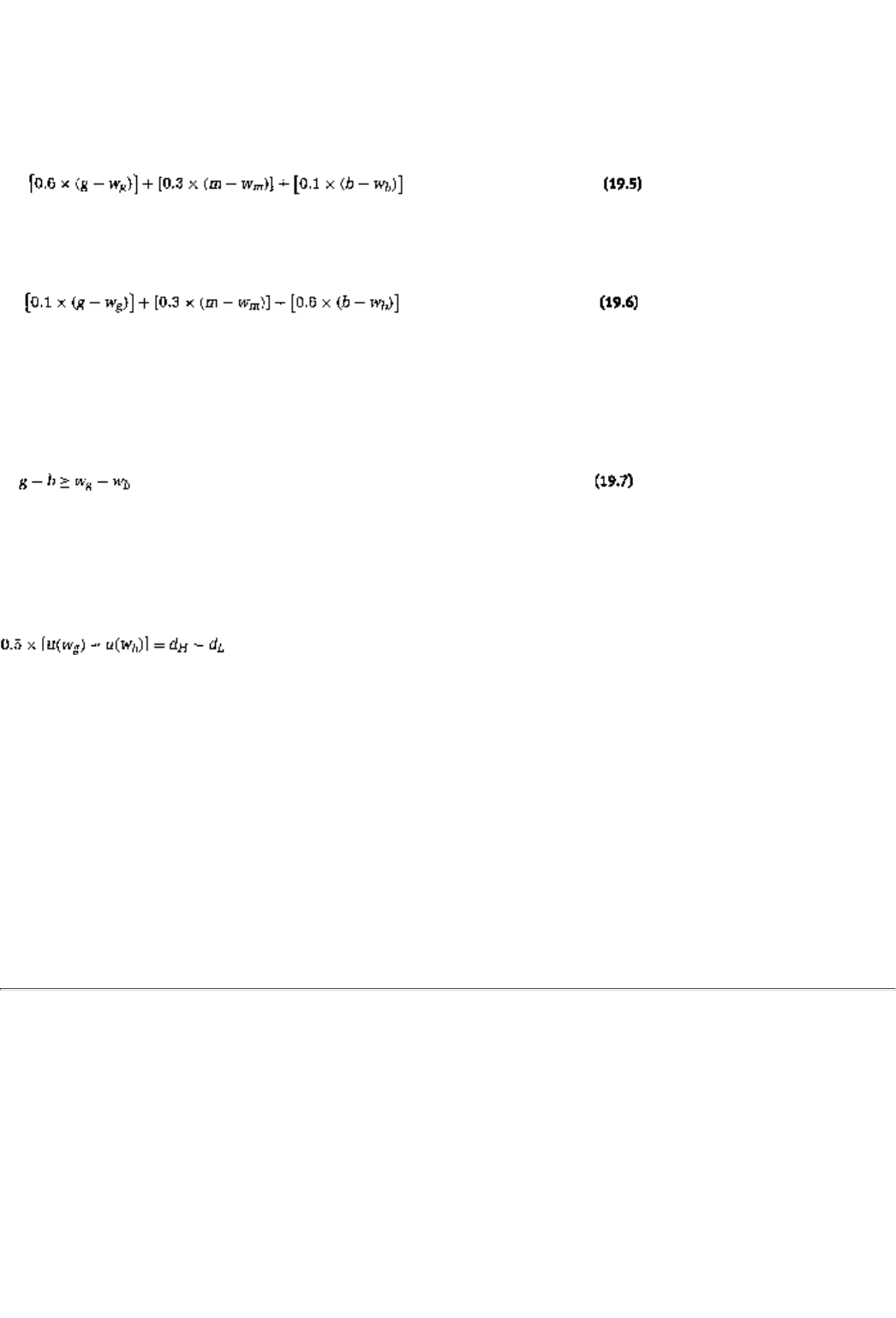

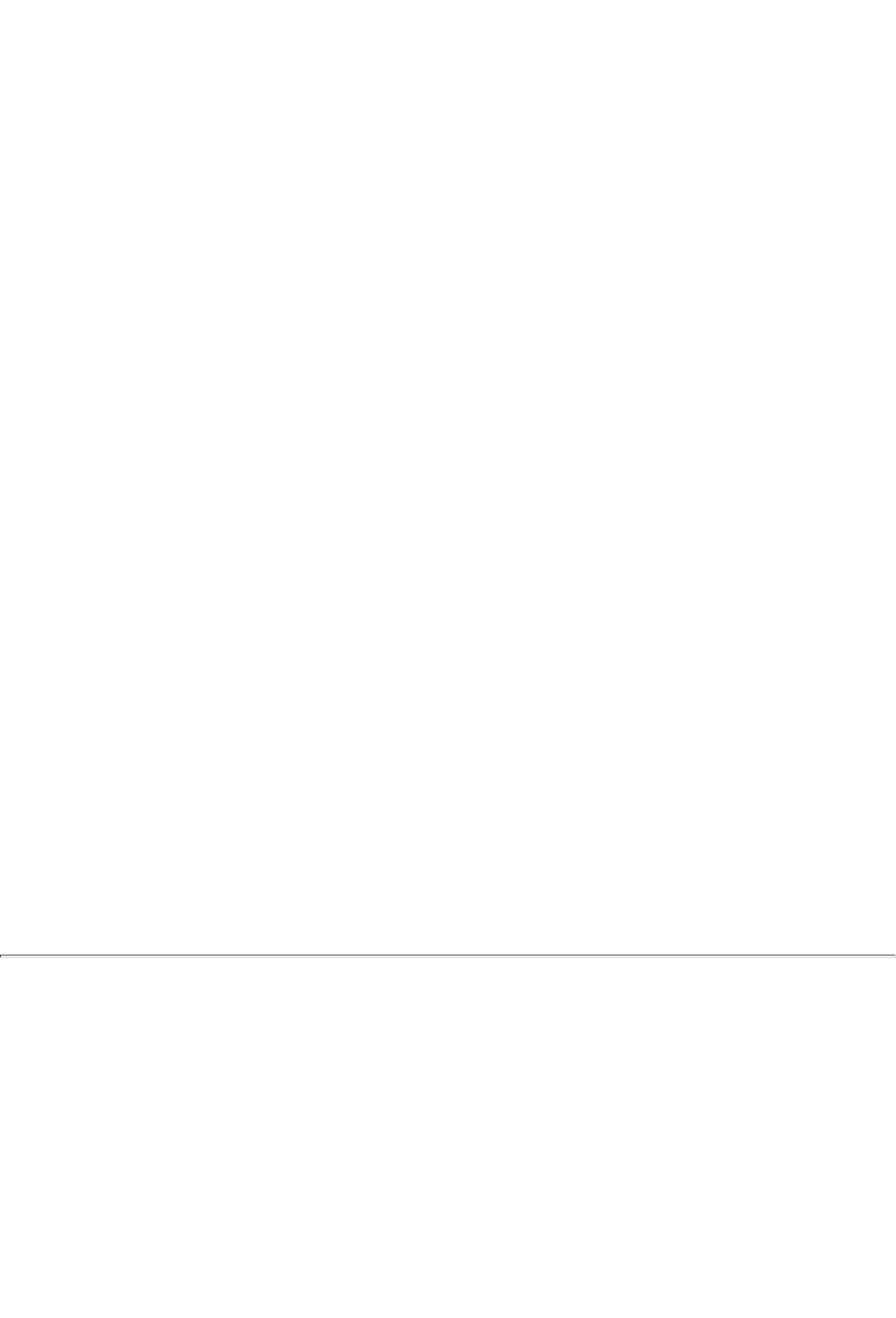

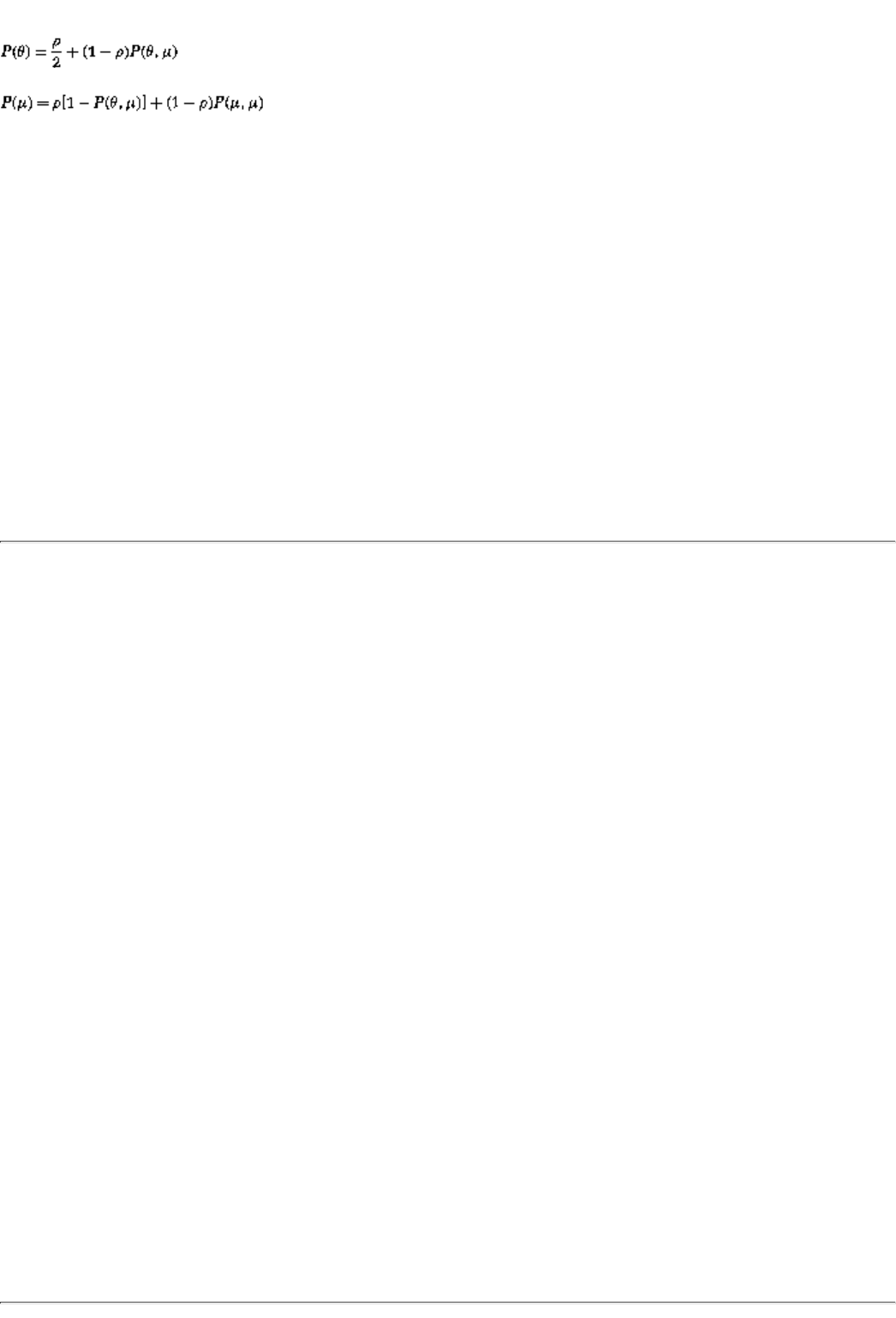

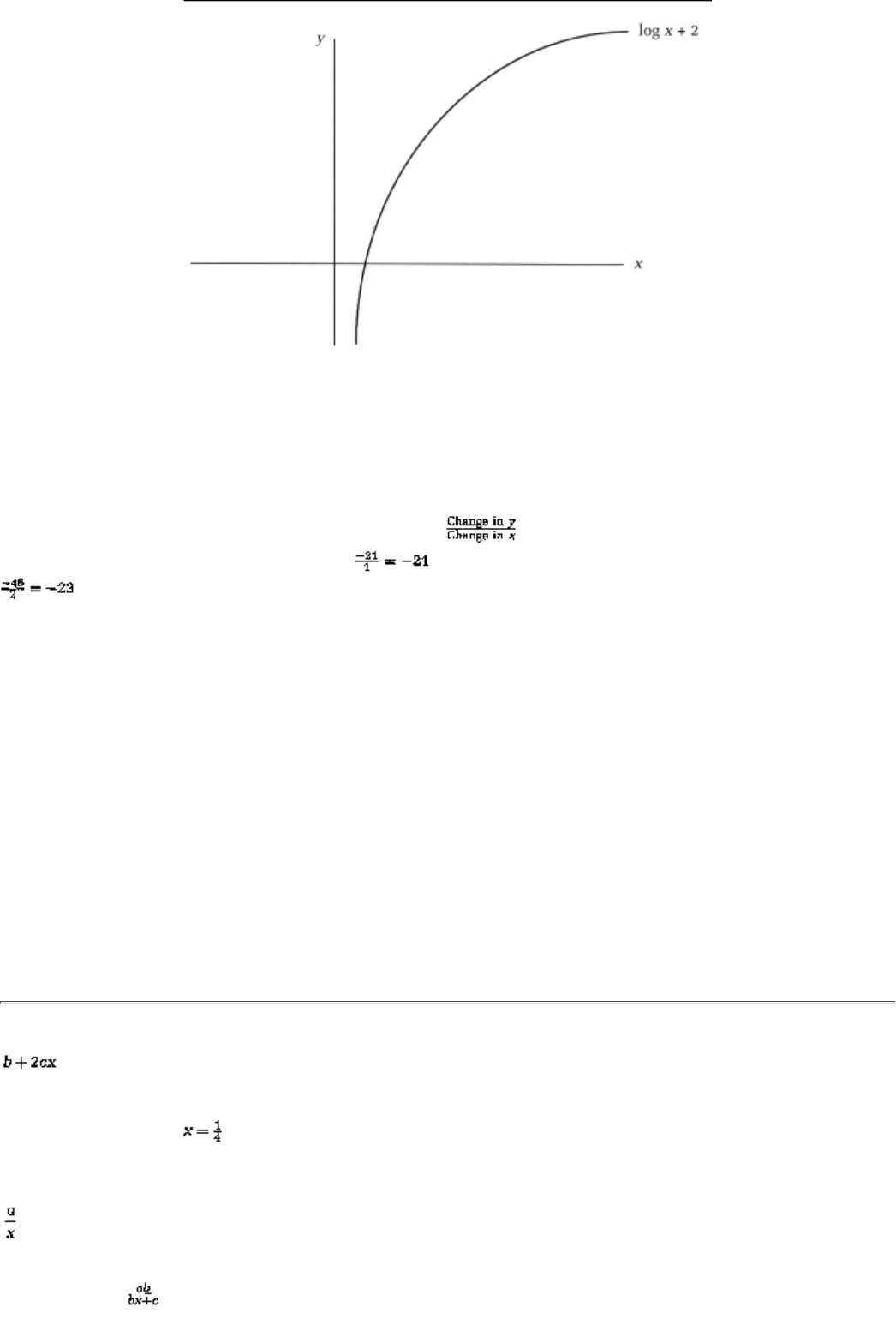

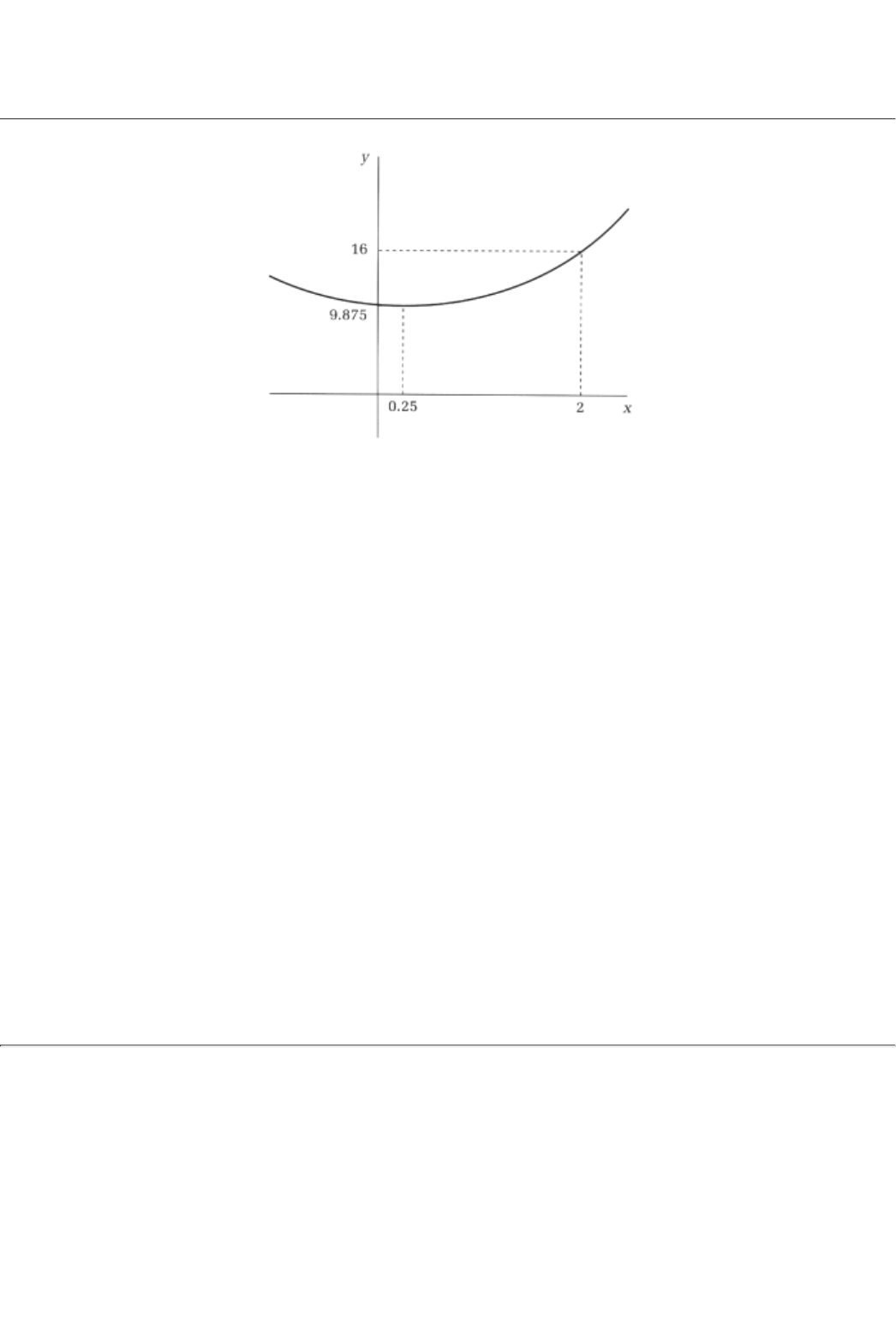

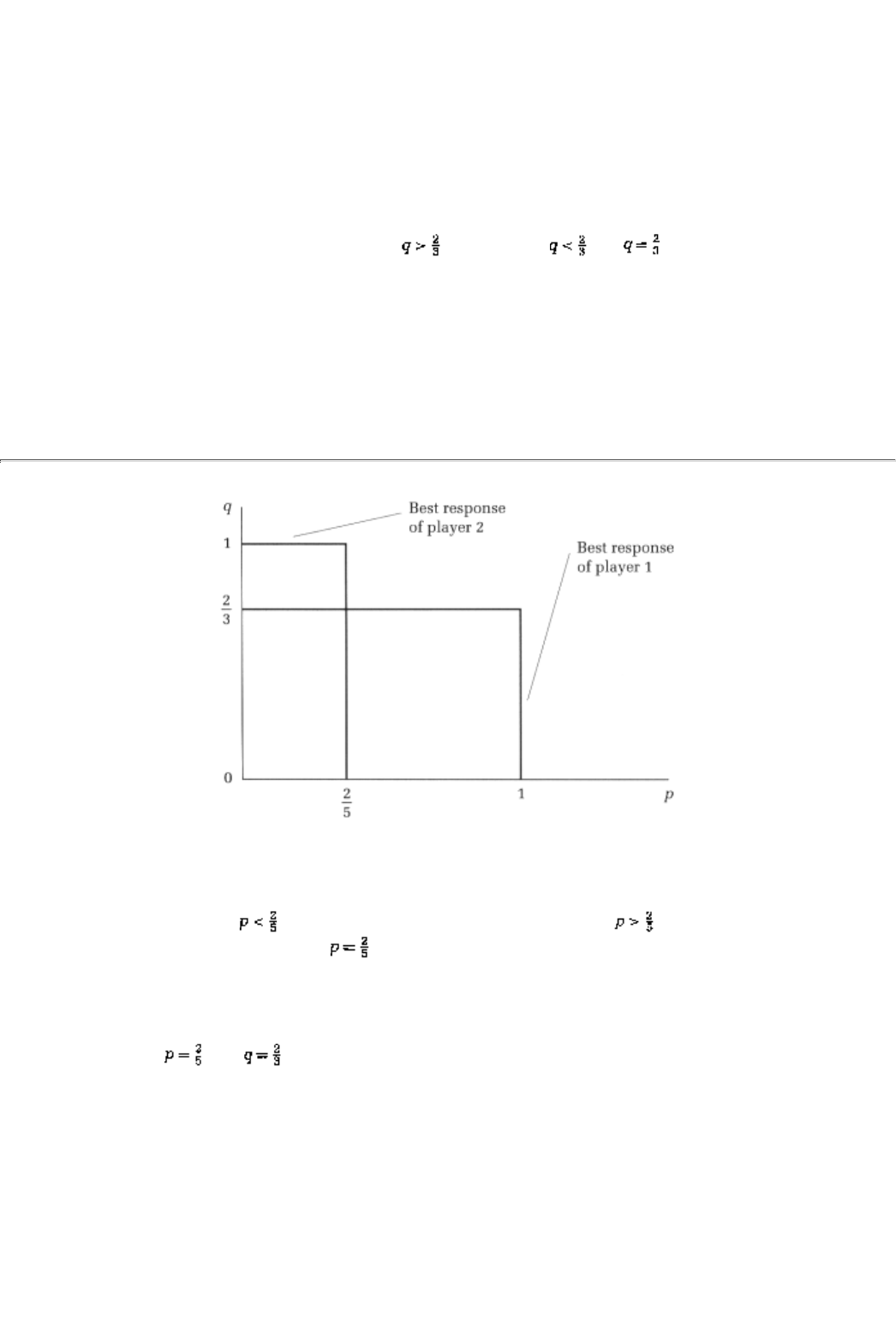

From these building blocks we can draw more complicated game trees, trees that allow more than two