Timeless Decision Theory

Eliezer Yudkowsky

Machine Intelligence Research Institute

Abstract

Disputes between evidential decision theory and causal decision theory have continued for decades, and many theorists state dissatisfaction with both alternatives. Timeless decision theory (TDT) is an extension of causal decision networks that compactly represents uncertainty about correlated computational processes and represents the decision-maker as such a process. This simple extension enables TDT to return the one-box answer for Newcomb’s Problem, the causal answer in Solomon’s Problem, and mutual cooperation in the one-shot Prisoner’s Dilemma, for reasons similar to human intuition. Furthermore, an evidential or causal decision-maker will choose to imitate a timeless decision-maker on a large class of problems if given the option to do so.

Yudkowsky, Eliezer. 2010. Timeless Decision Theory. The Singularity Institute, San Francisco, CA.

The Machine Intelligence Research Institute was previously known as the Singularity Institute.

Long Abstract

Disputes between evidential decision theory and causal decision theory have continued for decades, with many theorists stating that neither alternative seems satisfactory. I present an extension of decision theory over causal networks, timeless decision theory (TDT). TDT compactly represents uncertainty about the abstract outputs of correlated computational processes, and represents the decision-maker’s decision as the output of such a process. I argue that TDT has superior intuitive appeal when presented as axioms, and that the corresponding causal decision networks (which I call timeless decision networks) are more true in the sense of better representing physical reality. I review Newcomb’s Problem and Solomon’s Problem, two paradoxes which are widely argued as showing the inadequacy of causal decision theory and evidential decision theory respectively. I walk through both paradoxes to show that TDT achieves the appealing consequence in both cases. I argue that TDT implements correct human intuitions about the paradoxes, and that other decision systems act oddly because they lack representative power. I review the Prisoner’s Dilemma and show that TDT formalizes Hofstadter’s “superrationality”: under certain circumstances, TDT can permit agents to achieve “both C” rather than “both D” in the one-shot, non-iterated Prisoner’s Dilemma. Finally, I show that an evidential or causal decision-maker capable of self-modifying actions, given a choice between remaining an evidential or causal decision-maker and modifying itself to imitate a timeless decision-maker, will choose to imitate a timeless decision-maker on a large class of problems.

Contents

1 Some Newcomblike Problems

2 Precommitment and Dynamic Consistency

3 Invariance and Reflective Consistency

4 Maximizing Decision-Determined Problems

5 Is Decision-Dependency Fair

6 Renormalization

7 Creating Space for a New Decision Theory

8 Review: Pearl’s Formalism for Causal Diagrams

9 Translating Standard Analyses of Newcomblike Problems into the Language of Causality

10 Review: The Markov Condition

11 Timeless Decision Diagrams

12 The Timeless Decision Procedure

13 Change and Determination: A Timeless View of Choice

References


1. Some Newcomblike Problems

1.1. Newcomb’s Problem

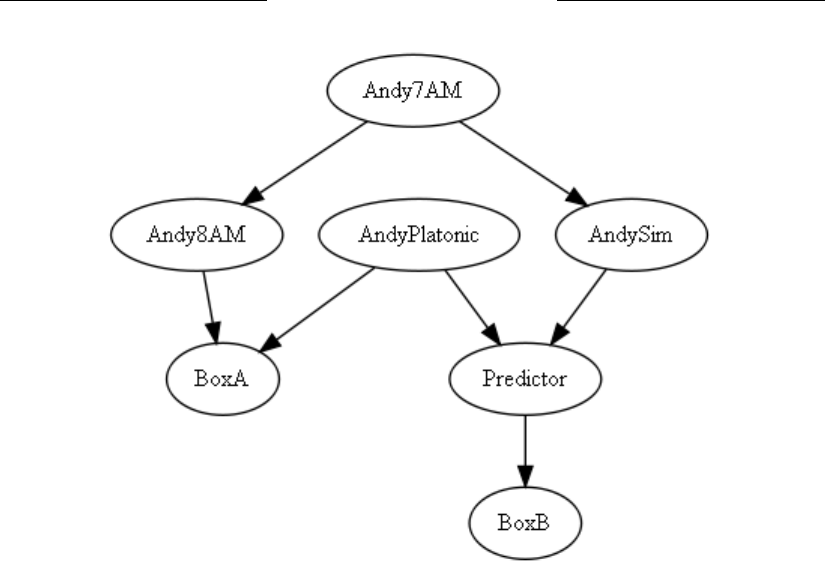

Imagine that a superintelligence from another galaxy, whom we shall call the Predictor, comes to Earth and at once sets about playing a strange and incomprehensible game. In this game, the superintelligent Predictor selects a human being, then offers this human being two boxes. The first box, box A, is transparent and contains a thousand dollars. The second box, box B, is opaque and contains either a million dollars or nothing. You may take only box B, or you may take boxes A and B. But there’s a twist: If the superintelligent Predictor thinks that you’ll take both boxes, the Predictor has left box B empty; and you will receive only a thousand dollars. If the Predictor thinks that you’ll take only box B, then It has placed a million dollars in box B. Before you make your choice, the Predictor has already moved on to Its next game; there is no possible way for the contents of box B to change after you make your decision. If you like, imagine that box B has no back, so that your friend can look inside box B, though she can’t signal you in any way. Either your friend sees that box B already contains a million dollars, or she sees that it already contains nothing. Imagine that you have watched the Predictor play a thousand such games, against people like you, some of whom two-boxed and some of whom one-boxed, and on each and every occasion the Predictor has predicted accurately. Do you take both boxes, or only box B?

This puzzle is known as Newcomb’s Problem or Newcomb’s Paradox. It was devised by the physicist William Newcomb, and introduced to the philosophical community by Nozick (1969).

The resulting dispute over Newcomb’s Problem split the field of decision theory into two branches, causal decision theory (CDT) and evidential decision theory (EDT).

The evidential theorists would take only box B in Newcomb’s Problem, and their stance is easy to understand. Everyone who has previously taken both boxes has received a mere thousand dollars, and everyone who has previously taken only box B has received a million dollars. This is a simple dilemma and anyone who comes up with an elaborate reason why it is “rational” to take both boxes is just outwitting themselves. The “rational” chooser is the one with a million dollars.

The causal theorists analyze Newcomb’s Problem as follows: Because the Predictor has already made its prediction and moved on to its next game, it is impossible for your choice to affect the contents of box B in any way. Suppose you knew for a fact that box B contained a million dollars; you would then prefer the situation where you receive both boxes ($1,001,000) to the situation where you receive only box B ($1,000,000). Suppose you knew for a fact that box B were empty; you would then prefer to receive both boxes ($1,000) to only box B ($0). Given that your choice is physically incapable of affecting the content of box B, the rational choice must be to take both boxes, following the dominance principle, which is that if we prefer A to B given X, and also prefer A to B given ¬X (not-X), and our choice cannot causally affect X, then we should prefer A to B. How then to explain the uncomfortable fact that evidential decision theorists end up holding all the money and taking Caribbean vacations, while causal decision theorists grit their teeth and go on struggling for tenure? According to causal decision theorists, the Predictor has chosen to reward people for being irrational; Newcomb’s Problem is no different from a scenario in which a superintelligence decides to arbitrarily reward people who believe that the sky is green. Suppose you could make yourself believe the sky was green; would you do so in exchange for a million dollars? In essence, the Predictor offers you a large monetary bribe to relinquish your rationality.

What would you do?
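The two analyses above can be made concrete with a small Python sketch. It is only an illustration under stated assumptions: the function names and the `accuracy` parameter are hypothetical, introduced here because the problem statement says only that the Predictor has been right in every observed game. The evidential calculation treats the choice as evidence about the prediction; the dominance check holds the contents of box B fixed.

```python
# Payoffs from the problem statement: box A holds $1,000; box B holds $1,000,000 or nothing.
BOX_A = 1_000
BOX_B_FULL = 1_000_000


def payoff(take_both: bool, box_b_full: bool) -> int:
    """Dollars received for a given choice and a given (already fixed) state of box B."""
    total = BOX_B_FULL if box_b_full else 0
    if take_both:
        total += BOX_A
    return total


def evidential_value(take_both: bool, accuracy: float) -> float:
    """Expected payoff conditional on the choice, treating the choice as evidence
    about the prediction. `accuracy` is a hypothetical parameter (an assumption of
    this sketch): the probability that the Predictor's guess matches the choice."""
    p_full = (1 - accuracy) if take_both else accuracy
    return p_full * payoff(take_both, True) + (1 - p_full) * payoff(take_both, False)


def two_boxing_dominates() -> bool:
    """Dominance check: with the contents of box B held fixed, does taking both
    boxes pay strictly more in every state?"""
    return all(payoff(True, state) > payoff(False, state) for state in (True, False))


if __name__ == "__main__":
    for acc in (0.5, 0.9, 1.0):
        print(acc, evidential_value(False, acc), evidential_value(True, acc))
    print("two-boxing dominates state by state:", two_boxing_dominates())
```

Both calculations are arithmetically correct; the dispute is over which of them a rational agent should act on.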

The split between evidential decision theory and causal decision theory goes deeper than a verbal disagreement over which boxes to take in Newcomb’s Problem. Decision theorists in both camps have formalized their arguments and their decision algorithms, demonstrating that their different actions in Newcomb’s Problem reflect different computational algorithms for choosing between actions.¹ The evidential theorists espouse an algorithm which, translated to English, might read as “Take actions such that you would be glad to receive the news that you had taken them.” The causal decision theorists espouse an algorithm which, translated to English, might cash out as “Take actions which you expect to have a positive physical effect on the world.”

The decision theorists’ dispute is not just about trading arguments within an informal, but shared, common framework, as is the case when, for example, physicists argue over which hypothesis best explains a surprising experiment. The causal decision theorists and evidential decision theorists have offered different mathematical frameworks for defining rational decision. Just as the evidential decision theorists walk off with the money in Newcomb’s Problem, the causal decision theorists offer their own paradox-arguments in which the causal decision theorist “wins,” that is, in which the causal decision algorithm produces the action that would seemingly have the better real-world consequence. And the evidential decision theorists have their own counterarguments in turn.

1.2. Solomon’s Problem

Variants of Newcomb’s problem are known as Newcomblike problems. Here is an exam-

ple of a Newcomblike problem which is considered a paradox-argument favoring causal

decision theory. Suppose that a recently published medical study shows that chewing

1. I review the algorithms and their formal dierence in Section 3.

2

Eliezer Yudkowsky

gum seems to cause throat abscesses—an outcome-tracking study showed that of peo-

ple who chew gum, 90% died of throat abscesses before the age of 50. Meanwhile, of

people who do not chew gum, only 10% die of throat abscesses before the age of 50.

e researchers, to explain their results, wonder if saliva sliding down the throat wears

away cellular defenses against bacteria. Having read this study, would you choose to

chew gum? But now a second study comes out, which shows that most gum-chewers

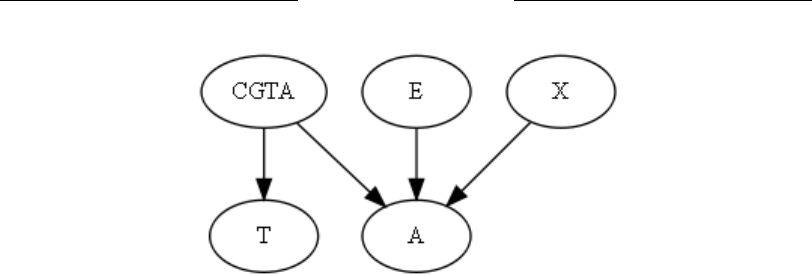

have a certain gene, CGTA, and the researchers produce a table showing the following

mortality rates:

| | Chew gum | Don’t chew gum |
|---|---|---|
| CGTA present | 89% die | 99% die |
| CGTA absent | 8% die | 11% die |

This table shows that whether you have the gene CGTA or not, your chance of dying of a throat abscess goes down if you chew gum. Why are fatalities so much higher for gum-chewers, then? Because people with the gene CGTA tend to chew gum and die of throat abscesses. The authors of the second study also present a test-tube experiment which shows that the saliva from chewing gum can kill the bacteria that form throat abscesses. The researchers hypothesize that because people with the gene CGTA are highly susceptible to throat abscesses, natural selection has produced in them a tendency to chew gum, which protects against throat abscesses.² The strong correlation between chewing gum and throat abscesses is not because chewing gum causes throat abscesses, but because a third factor, CGTA, leads to chewing gum and throat abscesses.
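To see how gum can help within each genetic group while gum-chewers still die more often overall, here is a minimal Python sketch. The four death rates come from the second study's table; the two gene-prevalence figures are assumptions made purely for illustration, since the text says only that most gum-chewers carry CGTA. With these illustrative numbers the pooled rates do not reproduce the first study's 90% and 10% exactly, but they show the same reversal.

```python
# Death rates from the second study's table, keyed by (has_cgta, chews_gum).
DEATH_RATE = {
    (True, True): 0.89,
    (True, False): 0.99,
    (False, True): 0.08,
    (False, False): 0.11,
}

# ASSUMPTION (not from the text): how common CGTA is within each behavioral group.
P_CGTA_GIVEN_CHEWS = 0.95
P_CGTA_GIVEN_ABSTAINS = 0.05


def pooled_death_rate(chews_gum: bool) -> float:
    """Death rate for a whole behavioral group, averaging over gene status."""
    p_gene = P_CGTA_GIVEN_CHEWS if chews_gum else P_CGTA_GIVEN_ABSTAINS
    return (p_gene * DEATH_RATE[(True, chews_gum)]
            + (1 - p_gene) * DEATH_RATE[(False, chews_gum)])


def gum_helps_within_every_stratum() -> bool:
    """True if, for carriers and non-carriers alike, chewing lowers the death rate."""
    return all(DEATH_RATE[(gene, True)] < DEATH_RATE[(gene, False)]
               for gene in (True, False))


if __name__ == "__main__":
    print("gum helps in each stratum:", gum_helps_within_every_stratum())
    print("pooled rate, chewers:     ", round(pooled_death_rate(True), 4))
    print("pooled rate, non-chewers: ", round(pooled_death_rate(False), 4))
```

The pooled comparison reverses the within-group comparison because gene status drives both the behavior and the outcome.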

Having learned of this new study, would you choose to chew gum? Chewing gum helps protect against throat abscesses whether or not you have the gene CGTA. Yet a friend who heard that you had decided to chew gum (as people with the gene CGTA often do) would be quite alarmed to hear the news, just as she would be saddened by the news that you had chosen to take both boxes in Newcomb’s Problem. This is a case where evidential decision theory seems to return the wrong answer, calling into question the validity of the evidential rule “Take actions such that you would be glad to receive the news that you had taken them.” Although the news that someone has decided to chew gum is alarming, medical studies nonetheless show that chewing gum protects against throat abscesses. Causal decision theory’s rule of “Take actions which you expect to have a positive physical effect on the world” seems to serve us better.³

2. One way in which natural selection could produce this effect is if the gene CGTA persisted in the population, perhaps because it is a very common mutation, or because the gene CGTA offers other benefits to its bearers which render CGTA a slight net evolutionary advantage. In this case, the gene CGTA would be a feature of the genetic environment which would give an advantage to other genes which mitigated the deleterious effect of CGTA. For example, in a population pool where CGTA is often present as a gene, a mutation such that CGTA (in addition to causing throat abscesses) also switches on other genes which cause the CGTA-bearer to chew gum, will be advantageous. The end result would be that a single gene, CGTA, could confer upon its bearer both a vulnerability to throat abscesses and a tendency to chew gum.

The CGTA dilemma is an essentially identical variant of a problem first introduced by Nozick in his original paper, but not then named. Presently this class of problem seems to be most commonly known as Solomon’s Problem after Gibbard and Harper (1978), who presented a variant involving King Solomon. In this variant, Solomon wishes to send for another man’s wife.⁴ Solomon knows that there are two types of rulers, charismatic and uncharismatic. Uncharismatic rulers are frequently overthrown; charismatic rulers are not. Solomon knows that charismatic rulers rarely send for other people’s spouses and uncharismatic rulers often send for other people’s spouses, but Solomon also knows that this does not cause the revolts; the reason uncharismatic rulers are overthrown is that they have a sneaky and ignoble bearing. I have substituted the chewing-gum throat-abscess variant of Solomon’s Problem because, in real life, we do not say that such deeds are causally independent of overthrow. Similarly there is another common variant of Solomon’s Problem in which smoking does not cause lung cancer, but rather there is a gene that both causes people to smoke and causes them to get lung cancer (as the tobacco industry is reputed to have once argued could be the case). I have avoided this variant because in real life, smoking does cause lung cancer. Research in psychology shows that people confronted with logical syllogisms possessing common-sense interpretations often go by the common-sense conclusion instead of the syllogistic conclusions. Therefore I have chosen an example, chewing gum and throat abscesses, which does not conflict with a pre-existing picture of the world.

Nonetheless I will refer to this class of problem as Solomon’s Problem, in accordance

with previous literature.

3. There is a formal counter-argument known as the “tickle defense” which proposes that evidential decision agents will also decide to chew gum; but the same tickle defense is believed (by its proponents) to choose two boxes in Newcomb’s Problem. See Section 9.

4. Gibbard and Harper gave this example invoking King Solomon after first describing another dilemma involving King David and Bathsheba.

1.3. Weiner’s Robot Problem

A third Newcomblike problem comes from Weiner (2004): Suppose that your friend falls down a mineshaft. It happens that in the world there exist robots, conscious robots, who are in most ways indistinguishable from humans. Robots are so indistinguishable from humans that most people do not know whether they are robots or humans. There are only two differences between robots and humans. First, robots are programmed to rescue people whenever possible. Second, robots have special rockets in their heels that go off only when necessary to perform a rescue. So if you are a robot, you can jump into the mineshaft to rescue your friend, and your heel rockets will let you lift him out. But if you are not a robot, you must find some other way to rescue your friend (perhaps go looking for a rope), though your friend is in a bad way, with a bleeding wound that needs a tourniquet now. . . . Statistics collected for similar incidents show that while all robots decide to jump into mineshafts, nearly all humans decide not to jump into mineshafts.

Would you decide to jump down the mineshaft?

1.4. Nick Bostrom’s Meta-Newcomb Problem

A fourth Newcomblike problem comes from Bostrom (2001), who labels it the Meta-Newcomb problem. In Nick Bostrom’s Meta-Newcomb problem you are faced with a Predictor who may take one of two possible actions: Either the Predictor has already made Its move (placed a million dollars or nothing in box B, depending on how It predicts your choice), or else the Predictor is watching to see your choice, and will afterward, once you have irrevocably chosen your boxes, but before you open them, place a million dollars into box B if and only if you have not taken box A. If you know that the Predictor observes your choice before filling box B, there is no controversy: any decision theorist would say to take only box B. Unfortunately, there is no way of knowing; the Predictor makes Its move before or after your decision around half the time in both cases. Now suppose there is a Meta-Predictor, who has a perfect track record of predicting the Predictor’s choices and also your own. The Meta-Predictor informs you of the following truth-functional prediction: Either you will choose A and B, and the Predictor will make Its move after you make your choice; or else you will choose only B, and the Predictor has already made Its move.

An evidential decision theorist is unfazed by Nick Bostrom’s Meta-Newcomb Problem; he takes box B and walks away, pockets bulging with a million dollars. But a causal decision theorist is faced with a puzzling dilemma: If she takes boxes A and B, then the Predictor’s action depends physically on her decision, so the “rational” action is to take only box B. But if she takes only box B, then the Predictor’s action temporally precedes and is physically independent of her decision, so the “rational” action is to take boxes A and B.

1.5. Decision Theory

It would be unfair to accuse the field of decision theory of being polarized between evidential and causal branches, even though the computational algorithms seem incompatible. Nozick, who originally introduced the Newcomb problem to philosophy, proposes that a prudent decision-maker should compute both evidential and causal utilities and then combine them according to some weighting (Nozick 1969). Egan (2007) lists what he feels to be fatal problems for both theories, and concludes by hoping that some alternative formal theory will succeed where both causal and evidential decision theory fail.⁵

In this paper I present a novel formal foundational treatment of Newcomblike problems, using an augmentation of Bayesian causal diagrams. I call this new representation “timeless decision diagrams.”

From timeless decision diagrams there follows naturally a timeless decision algorithm, in whose favor I will argue; however, using timeless decision diagrams to analyze Newcomblike problems does not commit one to espousing the timeless decision algorithm.

2. Precommitment and Dynamic Consistency

Nozick, in his original treatment of Newcomb’s Problem, suggested an agenda for further analysis (in my opinion a very insightful agenda, which has been often, though not always, overlooked in further discussion). This is to analyze the difference between Newcomb’s Problem and Solomon’s Problem that leads to people advocating that one should use the dominance principle in Solomon’s Problem but not in Newcomb’s Problem.

In the chewing-gum throat-abscess variant of Solomon’s Problem, the dominant action is chewing gum, which leaves you better off whether or not you have the CGTA gene; but choosing to chew gum is evidence for possessing the CGTA gene, although it cannot affect the presence or absence of CGTA in any way. In Newcomb’s Problem, causal decision theorists argue that the dominant action is taking both boxes, which leaves you better off whether box B is empty or full; and your physical press of the button to choose only box B or both boxes cannot change the predetermined contents of box B in any way. Nozick says:

I believe that one should take what is in both boxes. I fear that the considerations I have adduced thus far will not convince those proponents of taking only what is in the second box. Furthermore, I suspect that an adequate solution to this problem will go much deeper than I have yet gone or shall go in this paper. So I want to pose one question. . . . The question I should like to put to proponents of taking only what is in the second box in Newcomb’s example (and hence not performing the dominant action) is: what is the difference between Newcomb’s example and the other two examples [of Solomon’s Problem] which make the difference between not following the dominance principle and following it?

If no such difference is produced, one should not rush to conclude that one should perform the dominant action in Newcomb’s example. For it must be granted that, at the very least, it is not as clear that one should perform the dominant action in Newcomb’s example as in the other two examples. And one should be wary of attempting to force a decision in an unclear case by producing a similar case where the decision is clear and challenging one to find a difference between the cases which makes a difference to the decision. For suppose the undecided person, or the proponent of another decision, cannot find such a difference. Does not the forcer now have to find a difference between the cases which explains why one is clear and the other is not?

5. There have been other decision theories introduced in the literature as well (Arntzenius 2002; Aumann, Hart, and Perry 1997; Drescher 2006).

What is the key difference between chewing gum that is evidence of susceptibility to throat abscesses, and taking both boxes which is evidence of box B’s emptiness? Most two-boxers argue that there is no difference. Insofar as two-boxers analyze the seeming difference between the two Newcomblike problems, they give deflationary accounts, analyzing a psychological illusion of difference between two structurally identical problems. E.g. Gibbard and Harper (1978) say in passing: “The Newcomb paradox discussed by Nozick (1969) has the same structure as the case of Solomon.”

I will now present a preliminary argument that there is a significant structural difference between the two cases:

Suppose that in advance of the Predictor making Its move in Newcomb’s Problem, you have the ability to irrevocably resolve to take only box B. Perhaps, in a world filled with chocolate-chip cookies and other harmful temptations, humans have finally evolved (or genetically engineered) a mental capacity for sticking to diets: making resolutions which, once made, automatically carry through without a chance for later reconsideration. Newcomb’s Predictor predicts an irrevocably resolved individual as easily as It predicts the undecided psyche.

A causal decision agent has every right to expect that if he irrevocably resolves to take only box B in advance of the Predictor’s examination, this directly causes the Predictor to fill box B with a million dollars. All decision theories agree that in this case it would be rational to precommit yourself to taking only box B, even if, afterward, causal decision agents would wistfully wish that they had the option to take both boxes, once box B’s contents were fixed. Such a firm resolution has the same effect as pressing a button which locks in your choice of only B, in advance of the Predictor making Its move.

Conversely in the CGTA variant of Solomon’s Problem, a causal decision agent, knowing in advance that he would have to choose between chewing gum and avoiding gum, has no reason to precommit himself to avoiding gum. This is a difference between the two problems which suggests that they are not structurally equivalent from the perspective of a causal decision agent.

McClennen (1985) analyzes cases where an agent may wish to precommit himself to a particular course of action. McClennen gives the example of two players, Row and Column, locked in a non-zero-sum game with the following move/payoff matrix:

Payoffs are presented as (Row, Column). Column moves second.

| | Column: No-U | Column: U |
|---|---|---|
| Row: No-D | (4, 3) | (1, 4) |
| Row: D | (3, 1) | (2, 2) |

Whether Row makes the move No-D or D, Column’s advantage lies in choosing U. If Row chooses No-D, then U pays 4 for Column and No-U pays 3. If Row chooses D, then U pays 2 for Column and No-U pays 1. Row, observing this dominance, assumes that Column will play U, and therefore plays the move D, which pays 2 to Row if Column plays U, as opposed to No-D which pays 1.

This outcome (D, U) = (2, 2) is not a Pareto optimum. Both Row and Column would prefer (No-D, No-U) to (D, U). However, McClennen’s Dilemma differs from the standard Prisoner’s Dilemma in that D is not a dominating option for Row. As McClennen asks: “Who is responsible for the problem here?” McClennen goes on to write:

In this game, Column cannot plead that Row’s disposition to non-cooperation requires a security-oriented response of U. Row’s maximizing response to a choice of No-U by Column is No-D, not D. . . . Thus, it is Column’s own maximizing disposition so characterized that sets the problem for Column.

McClennen then suggests a scenario in which Column can pay a precommitment cost which forestalls all possibility of Column playing U. “Of course,” says McClennen, “such a precommitment device will typically require the expenditure of some resources.” Perhaps the payoff for Column of (No-D, No-U) is 2.8 instead of 3 after precommitment costs are paid.
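The reasoning about McClennen’s Dilemma can be traced with a short Python sketch. The payoffs are those of the matrix above; the function names, the `allowed` argument (standing in for a precommitment that removes U from Column’s options), and the assumption that Row knows about the commitment are all illustrative choices of this sketch, not McClennen’s formalism. The cost of 0.2 is inferred from the text’s “2.8 instead of 3.”

```python
# Payoffs as (Row, Column), copied from McClennen's matrix.
PAYOFFS = {
    ("No-D", "No-U"): (4, 3),
    ("No-D", "U"): (1, 4),
    ("D", "No-U"): (3, 1),
    ("D", "U"): (2, 2),
}
ROW_MOVES = ("No-D", "D")
COLUMN_MOVES = ("No-U", "U")


def column_reply(row_move, allowed=COLUMN_MOVES):
    """Column moves second and picks the allowed move with the highest Column payoff."""
    return max(allowed, key=lambda c: PAYOFFS[(row_move, c)][1])


def solve(allowed=COLUMN_MOVES, commitment_cost=0.0):
    """Row anticipates Column's reply to each of its moves and picks its best move.

    `allowed` models a precommitment by restricting Column's moves (assumed to be
    known to Row). Returns (row_move, column_move, row_payoff, column_payoff),
    with the commitment cost subtracted from Column's payoff."""
    row_move = max(ROW_MOVES, key=lambda r: PAYOFFS[(r, column_reply(r, allowed))][0])
    col_move = column_reply(row_move, allowed)
    row_pay, col_pay = PAYOFFS[(row_move, col_move)]
    return row_move, col_move, row_pay, col_pay - commitment_cost


if __name__ == "__main__":
    print("no precommitment:  ", solve())
    print("commit to No-U:    ", solve(allowed=("No-U",), commitment_cost=0.2))
```

Under these assumptions Column ends up better off after paying to remove its own dominant move, which is the point of the example.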

McClennen cites the Allais Paradox as a related single-player example. The Allais Paradox (Allais 1953) illustrates one of the first systematic biases discovered in the human psychology of decision-making and probability assessment, a bias which would later be incorporated in the heuristics-and-biases program (Kahneman, Slovic, and Tversky 1982). Suppose that you must choose between two gambles A and B with these payoff probabilities:⁶

A: 33/34 probability of paying $2,500, 1/34 probability of paying $0.

B: Pays $2,400 with certainty.

Take a moment to ask yourself whether you would prefer A or B, if you had to play one and only one of these gambles. You need not assume your utility is linear in wealth; just ask which gamble you would prefer in real life. If you prefer A to B or vice versa, ask yourself whether this preference is strong enough that you would be willing to pay a single penny in order to play A instead of B or vice versa.

When you have done this, ask yourself about your preference over these two gambles:

C: ($2,500, 33/100; $0, 67/100)

D: ($2,400, 34/100; $0, 66/100)

Many people prefer B to A, but prefer C to D. This preference is called “paradoxical” because the gambles C and D equate precisely to a 34/100 probability of playing the gambles A and B respectively. That is, C equates to a gamble which offers a 34/100 chance of playing A, and D equates to a gamble which offers a 34/100 chance of playing B.
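The claimed equivalence can be checked with exact arithmetic. The following Python sketch represents each gamble as a mapping from payout to probability and builds the compound gamble “with a 34/100 chance play this gamble, otherwise receive nothing.” The helper name `play_with_chance` is hypothetical, introduced only for this illustration.

```python
from fractions import Fraction

# Each gamble maps a dollar payout to its probability (exact rational arithmetic).
A = {2500: Fraction(33, 34), 0: Fraction(1, 34)}
B = {2400: Fraction(1)}
C = {2500: Fraction(33, 100), 0: Fraction(67, 100)}
D = {2400: Fraction(34, 100), 0: Fraction(66, 100)}


def play_with_chance(gamble, chance):
    """Compound gamble: with probability `chance` play `gamble`, otherwise receive $0."""
    result = {0: 1 - chance}
    for payout, prob in gamble.items():
        result[payout] = result.get(payout, Fraction(0)) + chance * prob
    return result


if __name__ == "__main__":
    chance = Fraction(34, 100)
    print("C equals 34/100 chance of A:", play_with_chance(A, chance) == C)
    print("D equals 34/100 chance of B:", play_with_chance(B, chance) == D)
```

For A, the chance of winning $2,500 becomes (34/100)(33/34) = 33/100, and the chance of winning nothing becomes 66/100 + (34/100)(1/34) = 67/100, which is exactly gamble C.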

If an agent prefers B to A and C to D this potentially introduces a dynamic inconsistency into the agent’s planning. Suppose that at 12:00PM I roll a hundred-sided die. If the die shows a number greater than 34 the game terminates. Otherwise, at 12:05PM I consult a switch with two settings, X and Y. If the setting is Y, I pay you $2,400. If the setting is X, I roll a 34-sided die and pay you $2,500 unless the die shows “34.” If you prefer C to D and B to A and you would pay a penny to indulge each preference, your preference reversal renders you exploitable. Suppose the switch starts in state Y. Before 12:00PM, you pay me a penny to throw the switch to X. After 12:00PM and before 12:05PM, you pay me a penny to throw the switch to Y. I have taken your two cents on the subject.
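The exploitation can be traced step by step in a small Python sketch. It hard-codes the preference pattern described above (C over D before the first roll, B over A after it); the function name and the returned dictionary are hypothetical conveniences of this illustration, and the first die roll is passed in as an argument so that the trace is deterministic.

```python
def run_money_pump(die_roll: int, penny: int = 1) -> dict:
    """Trace the switch game for an agent who prefers C to D before the first roll
    and B to A after it. Returns the final switch setting, the cents paid to the
    operator, and whether the game reached the 12:05PM stage."""
    switch = "Y"      # the switch starts in state Y (the sure $2,400 branch)
    cents_paid = 0

    # Before 12:00PM the agent faces C versus D and prefers C, i.e. setting X.
    if switch != "X":
        switch, cents_paid = "X", cents_paid + penny

    # 12:00PM: hundred-sided die; a roll above 34 ends the game.
    if die_roll > 34:
        return {"switch": switch, "cents_paid": cents_paid, "game_continues": False}

    # Between 12:00PM and 12:05PM the agent faces A versus B and prefers B, i.e. setting Y.
    if switch != "Y":
        switch, cents_paid = "Y", cents_paid + penny

    return {"switch": switch, "cents_paid": cents_paid, "game_continues": True}


if __name__ == "__main__":
    print(run_money_pump(die_roll=10))   # game continues
    print(run_money_pump(die_roll=80))   # game terminates at 12:00PM
```

Whenever the game continues, the switch ends where it started and the agent is two cents poorer.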

McClennen speaks of a “political economy of past and future selves”; the past self must choose present actions subject to the knowledge that the future self may have different priorities; the future self must live with the past self’s choices but has its own agenda of preference. Effectively the past self plays a non-zero-sum game against the future self, the past self moving first. Such an agent is characterized as a “sophisticated chooser” (Hammond 1976; Yaari 1977). Ulysses, faced with the tempting Sirens, acts as a sophisticated chooser; he arranges for himself to be bound to a mast. Yet as McClennen notes, such a strategy involves a retreat to second-best. Because of precommitment costs, sophisticated choosers will tend to do systematically worse than agents with no preference reversals. It is also usually held that preference reversal is inconsistent with expected utility maximization and indeed rationality. See Kahneman and Tversky (2000) for discussion.

6. Since the Allais paradox dates back to the 1950s, a modern reader should multiply all dollar amounts by a factor of 10 to maintain psychological parity.

McClennen therefore argues that being a resolute agent is better than being a sophisticated chooser, for the resolute agent pays no precommitment costs. Yet it is better still to have no need of resoluteness, that is, to decide using an algorithm which is invariant under translation in time. This would conserve mental energy. Such an agent’s decisions are called dynamically consistent (Strotz 1955).

Consider this argument: “Causal decision theory is dynamically inconsistent because

there exists a problem, the Newcomb Problem, which calls forth a need for resoluteness

on the part of a causal decision agent.”

A causal decision theorist may reply that the analogy between McClennen’s Dilemma or Newcomb’s Problem on the one hand, and the Allais Paradox or Ulysses on the other, fails to carry through. In the case of the Allais Paradox or Ulysses and the Sirens, the agent is willing to pay a precommitment cost because he fears a preference reversal from one time to another. In McClennen’s Dilemma the source of Column’s willingness to pay a precommitment cost is not Column’s anticipation of a future preference reversal. Column prefers the outcome (No-D, U) to (No-D, No-U) at both precommitment time and decision time. However, Column prefers that Row play No-D to D; this is what Column will accomplish by paying the precommitment cost. For McClennen’s Dilemma to carry through, the effort made by Column to precommit to No-U must have two effects. First, it must cause Column to play No-U. Second, Row must know that Column has committed to playing No-U, so that Row’s maximizing move is No-D. Otherwise the result will be (D, No-U), the worst possible result for Column. A purely mental resolution by Column might fail to reassure Row, thus leading to this worst possible result.⁷ In contrast, in the Allais Paradox or Ulysses and the Sirens the problem is wholly self-generated, so a purely mental resolution suffices.

In Newcomb’s Problem the causal agent regards his precommitment to take only box B as having two effects, the first effect being receiving only box B, and the second effect causing the Predictor to correctly predict the taking of only box B, hence filling box B with a million dollars. The causal agent always prefers receiving $1,001,000 to $1,000,000, or receiving $1,000 to $0. Like Column trying to influence Row, the causal agent does not precommit in anticipation of a future preference reversal, but to influence the move made by the Predictor. The apparent dynamic inconsistency arises from different effects of the decision to take both boxes when decided at different times. Since the effects significantly differ, the preference reversal is illusory.

7. Column would be wiser to irrevocably resolve to play No-U if Row plays No-D. If Row knows this, it would further encourage Row to play appropriately.

When is a precommitment cost unnecessary, or a need for resoluteness a sign of dynamic inconsistency? Consider this argument: Paying a precommitment cost to decide at t₁ instead of t₂, or requiring an irrevocable resolution to implement at t₂ a decision made at t₁, shows dynamic inconsistency if agents who precommit to a decision at time t₁ do just as well, no better and no worse excluding commitment costs, than agents who choose the same option at time t₂. More generally we may specify that for any agent who decides to take a fixed action at a fixed time, the experienced outcome is the same for that agent regardless of when the decision to take that action is made. Call this property time-invariance of the dilemma.

Time-invariance may not properly describe McClennen’s Dilemma, since McClennen does not specify that Row reliably predicts Column regardless of Column’s decision time. Column may need to take extra external actions to ‘precommit’ in a fashion Row can verify; the analogy to international diplomacy is suggestive of this. In Newcomb’s Problem we are told that the Predictor is never or almost never wrong, in virtue of an excellent ability to extrapolate the future decisions of agents, precommitted or not. Therefore it would seem that, in observed history, agents who precommit to take only box B do no better and no worse than agents who choose on-the-fly to take only box B.
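The time-invariance property can be made concrete with a small sketch. Everything named here is an assumption made for illustration: the function names, the two decision-time labels, the idealisation of the Predictor as always correct, and the second "verifiable commitments only" dilemma, which is a hypothetical stand-in for a situation where the other party must be able to check a commitment. It is not taken from McClennen or Nozick.

```python
ACTIONS = ("one-box", "two-box")
DECISION_TIMES = ("t1", "t2")  # t1 = precommit early, t2 = decide on the fly


def newcomb_outcome(action, decision_time):
    """Payoff in dollars when the Predictor extrapolates the final action.

    Assumption: the Predictor is idealised as always right, so the contents
    of box B depend only on the action taken, never on decision_time.
    """
    box_b = 1_000_000 if action == "one-box" else 0
    box_a = 1_000 if action == "two-box" else 0
    return box_a + box_b


def verifiable_only_outcome(action, decision_time):
    """Hypothetical variant: box B is filled only for one-boxers whose
    commitment was made (and so could be verified) at t1."""
    box_b = 1_000_000 if (action == "one-box" and decision_time == "t1") else 0
    box_a = 1_000 if action == "two-box" else 0
    return box_a + box_b


def is_time_invariant(outcome, actions=ACTIONS, times=DECISION_TIMES):
    """True if, for every fixed action, the outcome is the same at all decision times."""
    return all(len({outcome(a, t) for t in times}) == 1 for a in actions)


print(is_time_invariant(newcomb_outcome))          # True
print(is_time_invariant(verifiable_only_outcome))  # False
```

Under these assumptions, paying to decide early buys nothing in the first dilemma, while in the second it changes the outcome, so a precommitment cost there would not by itself indicate dynamic inconsistency.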

This argument only thinly conceals the root of the disagreement between one-boxers and two-boxers in Newcomb’s Problem; for the argument speaks not of how an agent’s deciding at T or T + 1 causes or brings about an outcome, but only whether agents who decide at T or T + 1 receive the same outcome. A causal decision theorist would protest that agents who precommit at T cause the desired outcome and are therefore rational, while agents who decide at T + 1 merely receive the same outcome without doing anything to bring it about, and are therefore irrational. A one-boxer would say that this reply illustrates the psychological quirk which underlies the causal agent’s dynamic inconsistency; but it does not make his decisions any less dynamically inconsistent.

Before dismissing the force of this one-boxing argument, consider the following dilemma, a converse of Newcomb’s Problem, which I will call Newcomb’s Soda. You know that you will shortly be administered one of two sodas in a double-blind clinical test. After drinking your assigned soda, you will enter a room in which you find a chocolate ice cream and a vanilla ice cream. The first soda produces a strong but entirely subconscious desire for chocolate ice cream, and the second soda produces a strong subconscious desire for vanilla ice cream. By “subconscious” I mean that you have no introspective access to the change, any more than you can answer questions about individual neurons firing in your cerebral cortex. You can only infer your changed tastes by observing which kind of ice cream you pick.

It so happens that all participants in the study who test the Chocolate Soda are rewarded with a million dollars after the study is over, while participants in the study who test the Vanilla Soda receive nothing. But subjects who actually eat vanilla ice cream receive an additional thousand dollars, while subjects who actually eat chocolate ice cream receive no additional payment. You can choose one and only one ice cream to eat. A pseudo-random algorithm assigns sodas to experimental subjects, who are evenly divided (50/50) between Chocolate and Vanilla Sodas. You are told that 90% of previous research subjects who chose chocolate ice cream did in fact drink the Chocolate Soda, while 90% of previous research subjects who chose vanilla ice cream did in fact drink the Vanilla Soda.⁸ Which ice cream would you eat?

Newcomb’s Soda has the same structure as Solomon’s Problem, except that instead of

the outcome stemming from genes you possessed since birth, the outcome stems from

a soda you will shortly drink. Both factors are in no way affected by your action nor by

your decision, but your action provides evidence about which genetic allele you inherited

or which soda you drank.

An evidential decision agent facing Newcomb’s Soda will, at the time of confronting

the ice cream, decide to eat chocolate ice cream because expected utility conditional on

this decision exceeds expected utility conditional on eating vanilla ice cream. However,

suppose the evidential decision agent is given an opportunity to precommit to an ice

cream flavor in advance. An evidential agent would rather precommit to eating vanilla

ice cream than precommit to eating chocolate, because such a precommitment made in

advance of drinking the soda is not evidence about which soda will be assigned.
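The reversal described above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming utility is linear in dollars and reading the 90% figures as the conditional probabilities an evidential agent would use; the function and variable names are invented for the example.

```python
from fractions import Fraction

SODA_PRIZE = 1_000_000  # paid to everyone who drank the Chocolate Soda
VANILLA_BONUS = 1_000   # paid to everyone who eats vanilla ice cream


def expected_payoff(p_chocolate_soda, ice_cream):
    """Expected dollars given a probability of having drunk the Chocolate Soda."""
    bonus = VANILLA_BONUS if ice_cream == "vanilla" else 0
    return p_chocolate_soda * SODA_PRIZE + bonus


# In the moment, the choice is treated as evidence about the soda (90% rule).
in_the_moment = {
    "chocolate": expected_payoff(Fraction(9, 10), "chocolate"),
    "vanilla": expected_payoff(Fraction(1, 10), "vanilla"),
}

# A precommitment made before drinking carries no news about the soda (50/50).
precommitted = {
    flavor: expected_payoff(Fraction(1, 2), flavor)
    for flavor in ("chocolate", "vanilla")
}

for label, table in (("in the moment", in_the_moment), ("precommitted", precommitted)):
    best = max(table, key=table.get)
    print(label, {k: int(v) for k, v in table.items()}, "->", best)
```

With these figures the in-the-moment comparison is $900,000 for chocolate against $101,000 for vanilla, while the precommitment comparison is $500,000 against $501,000, which is the preference reversal the paragraph describes.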

Thus, the evidential agent would rather precommit to eating vanilla, even though the evidential agent will prefer to eat chocolate ice cream if making the decision “in the moment.” This would not be dynamically inconsistent if agents who precommitted to a future action received a different payoff than agents who made that same decision “in the moment.” But in Newcomb’s Soda you receive exactly the same payoff regardless of whether, in the moment of action, you eat vanilla ice cream because you precommitted to doing so, or because you choose to do so at the last second. Now suppose that the evidential decision theorist protests that this is not really a dynamic inconsistency because, even though the outcome is just the same for you regardless of when you make your decision, the decision has different news-value before the soda is drunk and after the soda is drunk. A vanilla-eater would say that this illustrates the psychological quirk which underlies the evidential agent’s dynamic inconsistency, but it does not make the evidential agent any less dynamically inconsistent.

8. Given the dumbfounding human capability to rationalize a preferred answer, I do not consider it implausible in the real world that 90% of the research subjects assigned the Chocolate Soda would choose to eat chocolate ice cream (Kahneman, Slovic, and Tversky 1982).

Therefore I suggest that time-invariance, for purposes of alleging dynamic inconsistency, should go according to invariance of the agent’s experienced outcome. Is it not outcomes that are the ultimate purpose of all action and decision theory? If we exclude the evidential agent’s protest that two decisions are not equivalent, despite identical outcomes, because at different times they possess different news-values; then to be fair we should also exclude the causal agent’s protest that two decisions are not equivalent, despite identical outcomes, because at different times they bear different causal relations.

Advocates of causal decision theory (which has a long and honorable tradition in

academic discussion) may feel that I am trying to slip something under the rug with

this argument—that in some subtle way I assume that which I set out to argue. In the

next section, discussing the role of invariance in decision problems, I will bring out my

hidden assumption explicitly, and say under what criteria it does or does not hold; so at

least I cannot be accused of subtlety. Since I do not feel that I have yet made the case

for a purely outcome-oriented definition of time-invariance, I will not further press the

case against causal decision theory in this section.

I do feel I have fairly made my case that Newcomb’s Problem and Solomon’s Problem have different structures. This structural difference is evidenced by the different precommitments which evidential theory and causal theory agree would dominate in Newcomb’s Problem and Solomon’s Problem respectively.

Nozick (1969) begins by presenting Newcomb’s Problem as a conflict between the principle of maximizing expected utility and the principle of dominance. Shortly afterward, Nozick introduces the distinction between probabilistic independence and causal independence, suggesting that the dominance principle should apply only when states are causally independent of actions. In effect this reframed Newcomb’s Problem as a conflict between the principle of maximizing evidential expected utility and the principle of maximizing causal expected utility, a line of attack which dominated nearly all later discussion.

I think there are many people—especially, people who have not previously been in-

culcated in formal decision theory—who would say that the most appealing decision is

to take only box B in Newcomb’s Problem, and to eat vanilla ice cream in Newcomb’s

Soda.

After writing the previous sentence, I posed these two dilemmas to four friends of mine who had not already heard of Newcomb’s Problem. (Unfortunately most of my friends have already heard of Newcomb’s Problem, and hence are no longer “naive reasoners” for the purpose of psychological experiments.) I told each friend that the Predictor had been observed to correctly predict the decision of 90% of one-boxers and also 90% of two-boxers. For the second dilemma I specified that 90% of people who ate vanilla ice cream did in fact drink the Vanilla Soda and likewise with chocolate eaters and Chocolate Soda. Thus the internal payoffs and probabilities were symmetrical between Newcomb’s Problem and Newcomb’s Soda. One of my friends was a two-boxer; and of course he also ate vanilla ice cream in Newcomb’s Soda. My other three friends answered that they would one-box on Newcomb’s Problem. I then posed Newcomb’s Soda. Two friends answered immediately that they would eat the vanilla ice cream; one friend said chocolate, but then said, wait, let me reconsider, and answered vanilla. Two friends felt that their answers of “only box B” and “vanilla ice cream” were perfectly consistent; my third friend felt that these answers were inconsistent in some way, but said that he would stick by them regardless.

This is a small sample size. But it does confirm to some degree that some naive

humans who one-box on Newcomb’s Problem would also eat vanilla ice cream in New-

comb’s Soda.

Traditionally people who give the “evidential answer” to Newcomb’s Problem and the “causal answer” to Solomon’s Problem are regarded as vacillating between evidential decision theory and causal decision theory. The more so, as Newcomb’s Problem and Solomon’s Problem have been considered identically structured—in which case any perceived difference between them would stem from psychological framing effects. Thus, I introduced the idea of precommitment to show that Newcomb’s Problem and Solomon’s Problem are not identically structured. Thus, I introduced the idea of dynamic consistency to show that my friends who chose one box and ate vanilla ice cream gave interesting responses—responses with the admirable harmony that my friends would precommit to the same actions they would choose in-the-moment.

There is a potential logical flaw in the very first paper ever published on Newcomb’s Problem, in Nozick’s assumption that evidential decision theory has anything whatsoever to do with a one-box response. It is the retroductive fallacy: “All evidential agents choose only one box; the human Bob chooses only one box; therefore the human Bob is an evidential agent.” When we test evidential decision theory as a psychological hypothesis for a human decision algorithm, observation frequently contradicts the hypothesis. It is not uncommon—my own small experience suggests it is the usual case—to find someone who one-boxes on Newcomb’s Problem yet endorses the “causal” decision in variants of Solomon’s Problem. So evidential decision theory, considered as an algorithmic hypothesis, explains the psychological phenomenon (Newcomb’s Problem) which it was first invented to describe; but evidential decision theory does not successfully predict other psychological phenomena (Solomon’s Problem). We should readily abandon the evidential theory in favor of an alternative psychological hypothesis, if a better hypothesis presents itself—a hypothesis that predicts a broader range of phenomena or has simpler mechanics.

What sort of hypothesis would explain people who choose one box in Newcomb’s Problem and who send for another’s spouse, smoke, or chew gum in Solomon’s Problem? Nozick (1993) proposed that humans use a weighted mix of causal utilities and evidential utilities. Nozick suggested that people one-box in Newcomb’s Problem because the differential evidential expected utility of one-boxing is overwhelmingly high, compared to the differential causal expected utilities. On the evidential view a million dollars is at stake; on the causal view a mere thousand dollars is at stake. On any weighting that takes both evidential utility and causal utility noticeably into account, the evidential differentials in Newcomb’s Problem will swamp the causal differentials. Thus Nozick’s psychological hypothesis retrodicts the observation that many people choose only one box in Newcomb’s Problem; yet send for another’s spouse, smoke, or chew gum in Solomon’s Problem. In Solomon’s Problem as usually presented, the evidential utility does not completely swamp the causal utility.
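The swamping claim can be written out as a short inequality. The notation is an assumption made here for illustration and may differ from Nozick's own: $w_E$ and $w_C$ are nonnegative weights with $w_C > 0$, $\mathrm{EEU}$ and $\mathrm{CEU}$ are evidential and causal expected utility, and $\Delta_E$, $\Delta_C$ are the differentials of one-boxing over two-boxing, using the stakes quoted above with utility taken as linear in dollars.

```latex
\begin{align*}
W(a) &= w_E \,\mathrm{EEU}(a) + w_C \,\mathrm{CEU}(a) \\
\Delta W &= W(\text{one-box}) - W(\text{two-box})
          = w_E \,\Delta_E + w_C \,\Delta_C
          = 1{,}000{,}000\, w_E - 1{,}000\, w_C \\
\Delta W > 0 &\iff \frac{w_E}{w_C} > \frac{1}{1000}
\end{align*}
```

On this reading, the mixture recommends one box unless the causal weight exceeds the evidential weight by a factor of a thousand or more.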

Ledwig (2000) complains that formal decision theories which select only one box in

Newcomb’s Problem are rare (in fact, Ledwig says that the evidential decision theory of

Jeffrey [1983] is the only such theory he knows); and goes on to sigh that “Argumentative

only-1-box-solutions (without providing a rational decision theory) for Nozick’s original

version of Newcomb’s problem are presented over and over again, though.” Ledwig’s

stance seems to be that although taking only one box is very appealing to naive reasoners,

it is difficult to justify it within a rational decision theory.

I reply that it is wise to value winning over the possession of a rational decision theory,

just as it is wise to value truth over adherence to a particular mode of reasoning. An

expected utility maximizer should maximize utility—not formality, reasonableness, or

defensibility.

Of course I am not without sympathy for Ledwig’s complaint. Indeed, the point of this paper is to present a systematic decision procedure which ends up maximally rewarded when challenged by Newcomblike problems. It is surely better to have a rational decision theory than to not have one. All else being equal, the more formalizable our procedures, the better. An algorithm reduced to mathematical clarity is likely to shed more light on underlying principles than a verbal prescription. But it is not the goal in Newcomb’s Problem to be reasonable or formal, but to walk off with the maximum sum of money. Just as the goal of science is to uncover truth, not to be scientific. People succeeded in transitioning from Aristotelian authority to science at least partially because they could appreciate the value of truth, apart from valuing authoritarianism or scientism.

It is surely the job of decision theorists to systematize and formalize the principles involved in deciding rationally; but we should not lose sight of which decision results in attaining the ends that we desire. If one’s daily work consists of arguing for and against the reasonableness of decision algorithms, one may develop a different apprehension of reasonableness than if one’s daily work consisted of confronting real-world Newcomblike problems, watching naive reasoners walk off with all the money while you struggle to survive on a grad student’s salary. But it is the latter situation that we are actually trying to prescribe—not, how to win arguments about Newcomblike problems, but how to maximize utility on Newcomblike problems.

Can Nozick’s mixture hypothesis explain people who say that you should take only

box B, and also eat vanilla ice cream in Newcomb’s Soda? No: Newcomb’s Soda is

a precise inverse of Newcomb’s Problem, including the million dollars at stake according

to evidential decision theory, and the mere thousand dollars at stake according to causal

decision theory. It is apparent that my friends who would take only box B in Newcomb’s

Problem, and who also wished to eat vanilla ice cream with Newcomb’s Soda, completely

ignored the prescription of evidential theory. For evidential theory would advise them

that they must eat chocolate ice cream, on pain of losing a million dollars. Again, my

friends were naive reasoners with respect to Newcomblike problems.
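A short sweep over weightings illustrates why a weighted mixture cannot produce the answer pair my friends gave. This is a sketch under stated assumptions: utility is linear in dollars, the 90% figures from the informal survey are used for both dilemmas, and the function names and the grid of weights are invented for the example rather than drawn from Nozick.

```python
from fractions import Fraction

# Differentials in dollars for the "evidential" option over the "causal" option,
# using the 90% figures. Both dilemmas share the same numbers by construction.
EVIDENTIAL_DIFF = (Fraction(9, 10) * 1_000_000
                   - (Fraction(1, 10) * 1_000_000 + 1_000))  # 799,000
CAUSAL_DIFF = -1_000  # the causal option always yields $1,000 more

DILEMMAS = {
    # dilemma: (evidential option, causal option)
    "Newcomb's Problem": ("one-box", "two-box"),
    "Newcomb's Soda": ("chocolate", "vanilla"),
}


def mixture_choice(dilemma, w_evidential, w_causal):
    """Option picked by a weighted mix of evidential and causal differentials."""
    evidential_option, causal_option = DILEMMAS[dilemma]
    score = w_evidential * EVIDENTIAL_DIFF + w_causal * CAUSAL_DIFF
    return evidential_option if score > 0 else causal_option


def reachable_answer_pairs(steps=1000):
    """Answer pairs the mixture can produce as the evidential weight sweeps 0..1."""
    pairs = set()
    for i in range(steps + 1):
        w_e = Fraction(i, steps)
        w_c = 1 - w_e
        pairs.add((mixture_choice("Newcomb's Problem", w_e, w_c),
                   mixture_choice("Newcomb's Soda", w_e, w_c)))
    return pairs


print(sorted(reachable_answer_pairs()))
# [('one-box', 'chocolate'), ('two-box', 'vanilla')]
```

Because the two dilemmas have identical differentials, any single weighting gives the evidential answer in both or the causal answer in both; the pair (one-box, vanilla) never appears.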

If Newcomb’s Problem and Newcomb’s Soda expose a coherent decision principle that leads to choosing only B and choosing vanilla ice cream, then it is clear that this coherent principle may be brought into conflict with either evidential expected utility (Newcomb’s Soda) or causal expected utility (Newcomb’s Problem). That the principle is coherent is a controversial suggestion—why should we believe that mere naive reasoners are coherent, when humans are so frequently inconsistent on problems like the Allais Paradox? As suggestive evidence I observe that my naive friends’ observed choices have the intriguing property of being consistent with the preferred precommitment. My friends’ past and future selves may not be set to war one against the other, nor may precommitment costs be swindled from them. This harmony is absent from the evidential decision principle and the causal decision principle. Should we not give naive reasoners the benefit of the doubt, that they may think more coherently than has heretofore been appreciated? Sometimes the common-sense answer is wrong and naive reasoning goes astray; aye, that is a lesson of science; but it is also a lesson that sometimes common sense turns out to be right.

If so, then perhaps Newcomb’s Problem brings causal expected utility into conflict with this third principle, and therefore is used by one-boxers to argue against the prudence of causal decision theory. Similarly, Solomon’s Problem brings into conflict evidential expected utility on the one hand, and the third principle on the other hand, and therefore Solomon’s Problem appears as an argument against evidential decision theory.

Considering the blood, sweat and ink poured into framing Newcomb’s Problem as a conflict between evidential expected utility and causal expected utility, it is no trivial task to reconsider the entire problem. Along with the evidential-versus-causal debate there are certain methods, rules of argument, that have become implicitly accepted in the field of decision theory. I wish to present not just an alternate answer to Newcomb’s Problem, or even a new formal decision theory, but also to introduce different ways of thinking about dilemmas.

3. Invariance and Reflective Consistency

In the previous section, I defined time-invariance of a dilemma as requiring the invariance of agents’ outcomes given a fixed decision and different times at which the decision was made. In the field of physics, the invariances of a problem are important, and physicists are trained to notice them. Physicists consider the law known as conservation of energy a consequence of the fact that the laws of physics do not vary with time. Or to be precise, that the laws of physics are invariant under translation in time. Or to be even more precise, that all equations relating physical variables take on the same form when we apply the coordinate transform $t' = t + x$ where $x$ is a constant.

Physical equations are invariant under coordinate transforms that describe rotation

in space, which corresponds to the principle of conservation of angular momentum.

Maxwell’s Equations are invariant (the measured speed of light is the same) when time

and space coordinates transform in a fashion that we now call the theory of Special Rel-

ativity. For more on the importance physicists attach to invariance under transforming

coordinates, see The Feynman Lectures on Physics (Feynman, Leighton, and Sands 1963,

vol. 3 chap. 17). Invariance is interesting; that is one of the ways that physicists have

learned to think.

I want to make a very loose analogy here to decision theory, and offer the idea that there are decision principles which correspond to certain kinds of invariance in dilemmas. For example, there is a correspondence between time-invariance in a dilemma, and dynamic consistency in decision-making. If a dilemma is not time-invariant, so that it makes a difference when you make your decision to perform a fixed action at a fixed time, then we have no right to criticize agents who pay precommitment costs, or enforce mental resolutions against their own anticipated future preferences.

The hidden question—the subtle assumption—is how to determine whether it makes a “difference” at what time you decide. For example, an evidential decision theorist might say that two decisions are different precisely in the case that they bear different news-values, in which case Newcomb’s Soda is not time-invariant because deciding on the same action at different times carries different news-values. Or a causal decision theorist might say that two decisions are different precisely in the case that they bear different causal relations, in which case Newcomb’s Problem is not time-invariant because deciding on the same action at different times carries different causal relations.

In the previous section I declared that my own criterion for time-invariance was identity of outcome. If agents who decide at different times experience different outcomes, then agents who pay an extra precommitment cost to decide early may do reliably better than agents who make the same decision in-the-moment. Conversely, if agents who decide at different times experience the same outcome, then you cannot do reliably better by paying a precommitment cost.

How should we choose which criterion of difference determines our criterion of invariance?

To move closer to the heart of this issue, I wish to generalize the notion of dynamic consistency to the notion of reflective consistency. A decision algorithm is reflectively inconsistent whenever an agent using that algorithm wishes she possessed a different decision algorithm. Imagine that a decision agent possesses the ability to choose among decision algorithms—perhaps she is a self-modifying Artificial Intelligence with the ability to rewrite her source code, or more mundanely a human pondering different philosophies of decision.

If a self-modifying Artificial Intelligence, who implements some particular decision algorithm, ponders her anticipated future and rewrites herself because she would rather have a different decision algorithm, then her old algorithm was reflectively inconsistent. Her old decision algorithm was unstable; it defined desirability and expectation such that an alternate decision algorithm appeared more desirable, not just under its own rules, but under her current rules.

I have never seen a formal framework for computing the relative expected utility of different abstract decision algorithms, and until someone invents such, arguments about reflective inconsistency will remain less formal than analyses of dynamic inconsistency. One may formally illustrate reflective inconsistency only for specific concrete problems, where we can directly compute the alternate prescriptions and alternate consequences of different algorithms. It is clear nonetheless that reflective inconsistency generalizes dynamic inconsistency: All dynamically inconsistent agents are reflectively inconsistent, because they wish their future algorithm was such as to make a different decision.

What if an agent is not self-modifying? Any case of wistful regret that one does not implement an alternative decision algorithm similarly shows reflective inconsistency. A two-boxer who, contemplating Newcomb’s Problem in advance, wistfully regrets not being a single-boxer, is reflectively inconsistent.

I hold that under certain circumstances, agents may be reflectively inconsistent without that implying their prior irrationality. Suppose that you are a self-modifying expected utility maximizer, and the parent of a three-year-old daughter. You face a superintelligent entity who sets before you two boxes, A and B. Box A contains a thousand dollars and box B contains two thousand dollars. The superintelligence delivers to you this edict: Either choose between the two boxes according to the criterion of choosing the option that comes first in alphabetical order, or the superintelligence will kill your three-year-old daughter.

You cannot win on this problem by choosing box A because you believe this choice saves your daughter and maximizes expected utility. The superintelligence has the capability to monitor your thoughts—not just predict them but monitor them directly—and will kill your daughter unless you implement a particular kind of decision algorithm in coming to your choice, irrespective of any actual choice you make. A human, in this scenario, might well be out of luck. We cannot stop ourselves from considering the consequences of our actions; it is what we are.

But suppose you are a self-modifying agent, such as an Artificial Intelligence with full access to her own source code. If you attach a sufficiently high utility to your daughter’s life, you can save her by executing a simple modification to your decision algorithm. The source code for the old algorithm might be described in English as “Choose the action whose anticipated consequences have maximal expected utility.” The new algorithm’s source code might read “Choose the action whose anticipated consequences have maximal expected utility, unless between 7AM and 8AM on July 3rd, 2109 A.D., I am faced with a choice between two labeled boxes, in which case, choose the box that comes alphabetically first without calculating the anticipated consequences of this decision.” When the new decision algorithm executes, the superintelligence observes that you have chosen box A according to an alphabetical decision algorithm, and therefore does not kill your daughter. We will presume that the superintelligence does consider this satisfactory; and that choosing the alphabetically first action by executing code which calculates the expected utility of this action’s probable consequences and compares it to the expected utility of other actions, would not placate the superintelligence.
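The old and new algorithms described in English above can be sketched in a few lines. This is only an illustration: the function names, the procedure labels, the boolean flag standing in for the date-and-boxes condition, and the numeric utility placed on the daughter's life are all assumptions made for the example.

```python
def old_algorithm(options, expected_utility):
    """Choose the option whose anticipated consequences have maximal expected utility."""
    return max(options, key=expected_utility), "expected-utility"


def new_algorithm(options, expected_utility, facing_alphabetical_box):
    """Self-modified rule: alphabetize in the special case, otherwise maximize."""
    if facing_alphabetical_box:
        # The consequences are deliberately never computed on this branch.
        return min(options), "alphabetical"
    return old_algorithm(options, expected_utility)


def daughter_survives(procedure_used):
    """The superintelligence inspects the procedure, not the box chosen."""
    return procedure_used == "alphabetical"


def utility_knowing_the_edict(box):
    # Hypothetical utility: the agent reasons that picking A saves the daughter.
    return {"A": 1_000 + 10**9, "B": 2_000}[box]


old_choice, old_procedure = old_algorithm(["A", "B"], utility_knowing_the_edict)
new_choice, new_procedure = new_algorithm(["A", "B"], utility_knowing_the_edict, True)

print(old_choice, daughter_survives(old_procedure))  # A False
print(new_choice, daughter_survives(new_procedure))  # A True
```

Both procedures end up holding box A, yet only the second satisfies the edict, which is the sense in which the dilemma rewards an algorithm rather than a choice.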

So in this particular dilemma of the Alphabetical Box, we have a scenario where a self-

modifying decision agent would rather alphabetize than maximize expected utility. We

can postulate a nicer version of the dilemma, in which opaque box A contains a million

dollars if and only if the Predictor believes you will choose your box by alphabetizing.

On this dilemma, agents who alphabetize do systematically better—experience reliably

better outcomes—than agents who maximize expected utility.

But I do not think this dilemma of the Alphabetical Box shows that choosing the alphabetically first decision is more rational than maximizing expected utility. I do not think this dilemma shows a defect in rationality’s prescription to predict the consequences of alternative decisions, even though this prescription is reflectively inconsistent given the dilemma of the Alphabetical Box. The dilemma’s mechanism invokes a superintelligence who shows prejudice in favor of a particular decision algorithm, in the course of purporting to demonstrate that agents who implement this algorithm do systematically better.

Therefore I cannot say: If there exists any dilemma that would render an agent reflectively inconsistent, that agent is irrational. The criterion is definitely too broad. Perhaps a superintelligence says: “Change your algorithm to alphabetization or I’ll wipe out your entire species.” An expected utility maximizer may deem it rational to self-modify her algorithm under such circumstances, but this does not reflect poorly on the original algorithm of expected utility maximization. Indeed, I would look unfavorably on the rationality of any decision algorithm that did not execute a self-modifying action, in such desperate circumstance.

To make reflective inconsistency an interesting criterion of irrationality, we have to restrict the range of dilemmas considered fair. I will say that I consider a dilemma “fair,” if when an agent underperforms other agents on the dilemma, I consider this to speak poorly of that agent’s rationality. To strengthen the judgment of irrationality, I require that the “irrational” agent should systematically underperform other agents in the long run, rather than losing once by luck. (Someone wins the lottery every week, and his decision to buy a lottery ticket was irrational, whereas the decision of a rationalist not to buy the same lottery ticket was rational. Let the lucky winner spend as much money as he wants on more lottery tickets; the more he spends, the more surely he will see a net loss on his investment.) I further strengthen the judgment of irrationality by requiring that the “irrational” agent anticipate underperforming other agents; that is, her underperformance is not due to unforeseen catastrophe. (Aaron McBride: “When you know better, and you still make the mistake, that’s when ignorance becomes stupidity.”)

But this criterion of de facto underperformance is still not sufficient for reflective inconsistency. For example, all of these requirements are satisfied for a causal agent in Solomon’s Problem. In the chewing-gum throat-abscess problem, people who are CGTA-negative tend to avoid gum and also have much lower throat-abscess rates. A CGTA-positive causal agent may chew gum, systematically underperform CGTA-negative gum-avoiders in the long run, and even anticipate underperforming gum-avoiders, but none of this reflects poorly on the agent’s rationality. A CGTA-negative agent will do better than a CGTA-positive agent regardless of what either agent decides; the background of the problem treats them differently. Nor is the CGTA-positive agent who chews gum reflectively inconsistent—she may wish she had different genes, but she doesn’t wish she had a different decision algorithm.
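The point that the background of the problem, rather than the decision, separates the two groups can be illustrated with a small table. The abscess rates below are assumed for the sketch and are not asserted to be the figures of any particular presentation of the problem; the function names are likewise invented here.

```python
# Illustrative throat-abscess rates (assumed for this sketch), indexed by
# (has_cgta_gene, chews_gum).
ABSCESS_RATE = {
    (True, True): 0.89,
    (True, False): 0.99,
    (False, True): 0.08,
    (False, False): 0.11,
}


def best_action(has_gene):
    """Return True if chewing gives the lower abscess rate for this genotype."""
    return min((True, False), key=lambda chews: ABSCESS_RATE[(has_gene, chews)])


def negative_always_does_better():
    """CGTA-negative agents beat CGTA-positive agents whatever either one decides."""
    worst_negative = max(ABSCESS_RATE[(False, chews)] for chews in (True, False))
    best_positive = min(ABSCESS_RATE[(True, chews)] for chews in (True, False))
    return worst_negative < best_positive


print(best_action(True), best_action(False))  # True True
print(negative_always_does_better())          # True
```

With rates of this shape, chewing is the better action for either genotype, and the gap between genotypes is untouched by any decision, so a gum-chewing CGTA-positive agent has nothing to gain from swapping decision algorithms.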

With this concession in mind—that observed underperformance does not always imply reflective inconsistency, and that reflective inconsistency does not always show irrationality—I hope causal decision theorists will concede that, as a matter of straightforward fact, causal decision agents are reflectively inconsistent on Newcomb’s Problem. A causal agent that expects to face a Newcomb’s Problem in the near future, whose current decision algorithm reads “Choose the action whose anticipated causal consequences have maximal expected utility,” and who considers the two actions “Leave my decision algorithm as is” or “Execute a self-modifying rewrite to the decision algorithm ‘Choose the action whose anticipated causal consequences have maximal expected utility, unless faced with Newcomb’s Problem, in which case choose only box B,’ ” will evaluate the rewrite as having more desirable (causal) consequences. Switching to the new algorithm in advance of actually confronting Newcomb’s Problem, directly causes box B to contain a million dollars and a payoff of $1,000,000; whereas the action of keeping the old algorithm directly causes box B to be empty and a payoff of $1,000.
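The causal agent's two evaluations can be put side by side in a short sketch. It assumes the Predictor is idealised as perfectly accurate and that utility is linear in dollars; the dictionary of algorithms and the function names are hypothetical labels for this example.

```python
def payoff(action, box_b_full):
    """Dollars received: box B holds $1,000,000 if full; box A always holds $1,000."""
    box_b = 1_000_000 if box_b_full else 0
    box_a = 1_000 if action == "two-box" else 0
    return box_a + box_b


ALGORITHMS = {
    "keep causal algorithm": "two-box",    # what the unmodified agent will later do
    "rewrite to take only B": "one-box",   # what the rewritten agent will later do
}


def causal_value_of_adopting(algorithm):
    """Causal consequence of choosing an algorithm BEFORE the Predictor moves.

    Assumption: the Predictor is idealised as perfectly accurate, so the
    algorithm adopted now determines whether box B gets filled.
    """
    later_action = ALGORITHMS[algorithm]
    box_b_full = later_action == "one-box"
    return payoff(later_action, box_b_full)


def causal_gain_from_two_boxing(box_b_full):
    """Once the boxes are filled, two-boxing causally adds $1,000 either way."""
    return payoff("two-box", box_b_full) - payoff("one-box", box_b_full)


for name in ALGORITHMS:
    print(name, causal_value_of_adopting(name))
print([causal_gain_from_two_boxing(full) for full in (True, False)])  # [1000, 1000]
```

Evaluated in advance, the rewrite is worth $1,000,000 against $1,000 for keeping the old algorithm, even though the same causal reasoning applied after the boxes are filled favors two-boxing by $1,000 in either state.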

Causal decision theorists may dispute that Newcomb’s Problem reveals a dynamic inconsistency in causal decision theory. There is no actual preference reversal between two outcomes or two gambles. But reflective inconsistency generalizes dynamic inconsistency. All dynamically inconsistent agents are reflectively inconsistent, but the converse does not apply—for example, being confronted with an Alphabetical Box problem does not render you dynamically inconsistent. It should not be in doubt that Newcomb’s Problem renders a causal decision algorithm reflectively inconsistent. On Newcomb’s Problem a causal agent systematically underperforms single-boxing agents; the causal agent anticipates this in advance; and a causal agent would prefer to self-modify to a different decision algorithm.

But does a causal agent necessarily prefer to self-modify? Isaac Asimov once said of Newcomb’s Problem that he would choose only box A. Perhaps a causal decision agent is proud of his rationality, holding clear thought sacred. Above all other considerations! Such an agent will contemptuously refuse the Predictor’s bribe, showing not even wistful regret. No amount of money can convince this agent to behave as if his button-press controlled the contents of box B, when the plain fact of the matter is that box B is already filled or already empty. Even if the agent could self-modify to single-box on Newcomb’s Problem in advance of the Predictor’s move, the agent would refuse to do so. The agent attaches such high utility to a particular mode of thinking, apart from the actual consequences of such thinking, that no possible bribe can make up for the disutility of departing from treasured rationality. So the agent is reflectively consistent, but only trivially so, i.e., because of an immense, explicit utility attached to implementing a particular decision algorithm, apart from the decisions produced or their consequences.

On the other hand, suppose that a causal decision agent has no attachment whatsoever to a particular mode of thinking; the causal decision agent cares nothing whatsoever for rationality. Rather than love of clear thought, the agent is driven solely by greed; the agent computes only the expected monetary reward in Newcomblike problems. (Or if you demand a psychologically realistic dilemma, let box B possibly contain a cure for your daughter’s cancer, just to be sure that the outcome matters more to you than the process.) If a causal agent first considers Newcomb’s Problem while staring at box B which is already full or empty, the causal agent will compute that taking both boxes maximizes expected utility. But if a causal agent considers Newcomb’s Problem in advance and assigns significant probability to encountering a future instance of Newcomb’s Problem, the causal agent will prefer, to an unmodified algorithm, an algorithm that is otherwise the same except for choosing only box B. I do not say that the causal agent will choose to self-modify to the “patched” algorithm; the agent might prefer some third algorithm to both the current algorithm and the patched algorithm. But if a decision agent, facing some dilemma, prefers any algorithm to her current algorithm, that dilemma renders the agent reflectively inconsistent. The question then becomes whether the causal agent’s reflective inconsistency reflects a dilemma, Newcomb’s Problem, which is just as unfair as the Alphabetical Box.

The idea that Newcomb’s Problem is unfair to causal decision theorists is not my own invention. From Gibbard and Harper (1978):

> U-maximization [causal decision theory] prescribes taking both boxes. To some people, this prescription seems irrational. One possible argument against it takes roughly the form “If you’re so smart, why ain’t you rich?” V-maximizers [evidential agents] tend to leave the experiment millionaires whereas U-maximizers [causal agents] do not. Both very much want to be millionaires, and the V-maximizers usually succeed; hence it must be the V-maximizers who are making the rational choice. We take the moral of the paradox to be something else: if someone is very good at predicting behavior and rewards predicted irrationality richly, then irrationality will be richly rewarded.

The argument here seems to be that causal decision theorists are rational, but systematically underperform on Newcomb’s Problem because the Predictor despises rationalists. Let’s flesh out this argument. Suppose there exists some decision theory Q, whose agents decide in such fashion that they choose to take only one box in Newcomb’s Problem. The Q-theorists inquire of the causal decision theorist: “If causal decision theory is rational, why do Q-agents do systematically better than causal agents on Newcomb’s Problem?” The causal decision theorist replies: “The Predictor you postulate has decided to punish rational agents, and there is nothing I can do about that. I can just as easily postulate a Predictor who decides to punish Q-agents, in which case you would do worse than I.”

I can indeed imagine a scenario in which a Predictor decides to punish Q-agents. Suppose that at 7AM, the Predictor inspects Quenya’s state and determines whether or not Quenya is a Q-agent. The Predictor is filled with a burning, fiery hatred for Q-agents; so if Quenya is a Q-agent, the Predictor leaves box B empty. Otherwise the Predictor fills box B with a million dollars. In this situation it is better to be a causal decision theorist, or an evidential decision theorist, than a Q-agent. And in this situation, all agents take both boxes because there is no particular reason to leave behind box A. The outcome is completely independent of the agent’s decision: causally independent, probabilistically independent, just plain independent.
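A small Python sketch makes the independence explicit. It assumes, as in the scenario above, that box B's contents depend only on whether the agent is a Q-agent; the function names and string labels are hypothetical, chosen for illustration.

```python
# Illustrative sketch of the Q-hating Predictor described above.
BOX_A = 1_000
BOX_B_FULL = 1_000_000


def box_b_contents(is_q_agent: bool) -> int:
    """The Predictor looks only at the agent's type, never at the decision."""
    return 0 if is_q_agent else BOX_B_FULL


def payoff(is_q_agent: bool, choice: str) -> int:
    """Total winnings for an agent of the given type making the given choice."""
    box_b = box_b_contents(is_q_agent)
    return box_b + (BOX_A if choice == "both boxes" else 0)


table = {
    (kind, choice): payoff(kind == "Q-agent", choice)
    for kind in ("Q-agent", "other agent")
    for choice in ("only B", "both boxes")
}

# For every agent type, taking both boxes gains exactly the contents of box A.
gains = {
    kind: table[(kind, "both boxes")] - table[(kind, "only B")]
    for kind in ("Q-agent", "other agent")
}
print(gains)  # {'Q-agent': 1000, 'other agent': 1000}
```

Since the gain from also taking box A is the same $1,000 for every agent type, no agent has a reason to leave box A behind, and the gap between Q-agents and other agents is fixed before any decision is made.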

We can postulate Predictors that punish causal decision agents regardless of their decisions, or Predictors that punish Q-agents regardless of their decisions. We excuse the resulting underperformance by saying that the Predictor is moved internally by a particular hatred for these kinds of agents. But suppose the Predictor is, internally, utterly indifferent to what sort of mind you are and which algorithm you use to arrive at your decision. The Predictor cares as little for rationality as does a greedy agent who desires only gold. Internally, the Predictor cares only about your decision, and judges you only according to the Predictor’s reliable prediction of your decision. Whether you arrive at your decision by maximizing expected utility, or by choosing the first decision in alphabetical order, the Predictor’s treatment of you is the same. Then an agent who takes only box B for whatever reason ends up with the best available outcome, while the causal decision agent goes on pleading that the Predictor is filled with a special hatred for rationalists.

Perhaps a decision agent who always chose the first decision in alphabetical order (given some fixed algorithm for describing options in English sentences) would plead that Nature hates rationalists because in most real-life problems the best decision is not the first decision in alphabetical order. But alphabetizing agents do well only on problems that have been carefully designed to favor alphabetizing agents. An expected utility maximizer can succeed even on problems designed for the convenience of alphabetizers, if the expected utility maximizer knows enough to calculate that the alphabetically first decision has maximum expected utility, and if the problem structure is such that all agents who make the same decision receive the same payoff regardless of which algorithm produced the decision.

This last requirement is the critical one; I will call it decision-determination. Since a problem strictly determined by agent decisions has no remaining room for sensitivity to differences of algorithm, I will also say that the dilemma has the property of being algorithm-invariant. (Though to be truly precise we should say: algorithm-invariant given a fixed decision.)
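One way to make decision-determination concrete is a check over a finite set of agents: group the agents by the decision they output and ask whether each group receives a single payoff. The following Python sketch is an illustration under that finite-sample assumption, not a definition taken from the text; `is_decision_determined`, the two payoff functions, and the algorithm labels are hypothetical names.

```python
from typing import Callable, Iterable, Tuple

Agent = Tuple[str, str]  # (algorithm label, decision it outputs)


def is_decision_determined(
    agents: Iterable[Agent], payoff: Callable[[str, str], int]
) -> bool:
    """True if all sampled agents making the same decision get the same payoff."""
    payoff_by_decision = {}
    for algorithm, decision in agents:
        value = payoff(algorithm, decision)
        # setdefault records the first payoff seen for this decision.
        if payoff_by_decision.setdefault(decision, value) != value:
            return False
    return True


def indifferent_predictor(algorithm: str, decision: str) -> int:
    """Newcomb's Problem with a Predictor that cares only about the decision."""
    return 1_000_000 if decision == "only B" else 1_000


def q_hating_predictor(algorithm: str, decision: str) -> int:
    """Box B is empty exactly when the agent runs algorithm Q."""
    box_b = 0 if algorithm == "Q" else 1_000_000
    return box_b + (1_000 if decision == "both boxes" else 0)


newcomb_agents = [("causal", "both boxes"), ("Q", "only B"), ("hunch", "only B")]
q_hating_agents = [("causal", "both boxes"), ("Q", "both boxes")]

print(is_decision_determined(newcomb_agents, indifferent_predictor))  # True
print(is_decision_determined(q_hating_agents, q_hating_predictor))    # False
```

The check can only refute decision-determination on the agents it is given; passing it for a sample does not prove the property for every possible algorithm.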

Nearly all dilemmas discussed in the literature are algorithm-invariant. Algorithm-invariance is implied by the very act of setting down a payoff matrix whose row keys are decisions, not algorithms. What outrage would result, if a respected decision theorist proposed as proof of the rationality of Q-theory: “Suppose that in problem X, we have an algorithm-indexed payoff matrix in which Q-theorists receive $1,000,000 payoffs, while causal decision theorists receive $1,000 payoffs. Since Q-agents outperform causal agents on this problem, this shows that Q-theory is more rational.” No, we ask that “rational” agents be clever, that they exert intelligence to sort out the differential consequences of decisions, that they are not paid just for showing up. Even if an agent is not intelligent, we expect that the alphabetizing agent, who happens to take the rational action because it came alphabetically first, is rewarded no more and no less than an expected utility maximizer on that single⁹ decision problem.

To underline the point: we are humans. Given a chance, we humans will turn principles or intuitions into golden calves, and we will imagine that there is a magical principle that makes our particular mode of cognition intrinsically “rational” or good. But the idea in decision theory is to move beyond this sort of social cognition by modeling the expected consequences of various actions, and choosing the actions whose consequences we find most appealing (regardless of whether the type of thinking that can get us these appealing consequences “feels rational,” or matches our particular intuitions or idols).

Suppose that we observe some class of problem, whether a challenge from Nature or a challenge from multi-player games, and some agents receive systematically higher payoffs than other agents. This payoff difference may not reflect superior decision-making capability by the better-performing agents. We find in the gum-chewing variant of Solomon’s Problem that agents who avoid gum do systematically better than agents who chew gum, but the performance difference stems from a favor shown these agents by the background problem. We cannot say that all agents whose decision algorithms produce a given output, regardless of the algorithm, do equally well on Solomon’s Problem.

Newcomb’s Problem as originally presented by Nozick is actually not decision-determined. Nozick (1969) specified in footnote 1 that if the Predictor predicts you will decide by flipping a coin, the Predictor leaves box B empty. Therefore Nozick’s Predictor cares about the algorithm used to produce the decision, and not merely the decision itself. An agent who chooses only B by flipping a coin does worse than an agent who chooses only B by ratiocination. Let us assume unless otherwise specified that the Predictor predicts equally reliably regardless of agent algorithm. Either you do not have a coin in your pocket, or the Predictor has a sophisticated physical model which reliably predicts your coinflips.
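Nozick's coin-flip clause can be written as a payoff rule that takes the deciding method as an input, which is exactly what decision-determination rules out. The sketch below is illustrative Python, assuming an otherwise perfectly reliable Predictor; `nozick_payoff` and the method labels are hypothetical.

```python
# Illustrative sketch of Nozick's original rule (footnote 1): a predicted
# coin flip leaves box B empty, whatever the coin ends up choosing.
BOX_A = 1_000
BOX_B_FULL = 1_000_000


def nozick_payoff(method: str, choice: str) -> int:
    """Payoff under a Predictor that penalizes deciding by coin flip."""
    if method == "coin flip":
        box_b = 0  # penalized for the method, not for the decision
    else:
        box_b = BOX_B_FULL if choice == "only B" else 0
    return box_b + (BOX_A if choice == "both boxes" else 0)


by_coin = nozick_payoff("coin flip", "only B")
by_reasoning = nozick_payoff("ratiocination", "only B")
print(by_coin, by_reasoning)  # 0 1000000
```

The two agents make the same decision yet receive different payoffs, so the rule is indexed by algorithm as well as by decision.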

Newcomb’s Problem seems a forcible argument against causal decision theory because

of the decision-determination of Newcomb’s Problem. It is not just that some agents re-

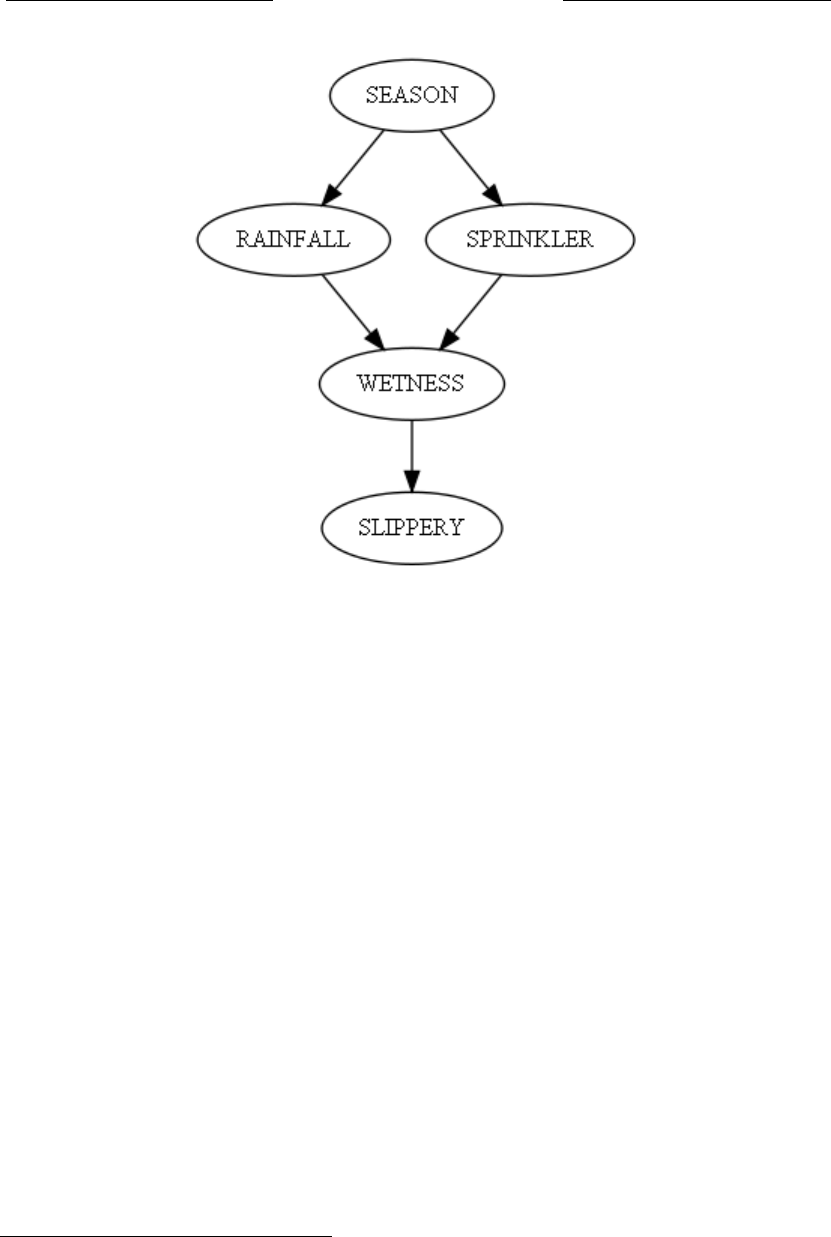

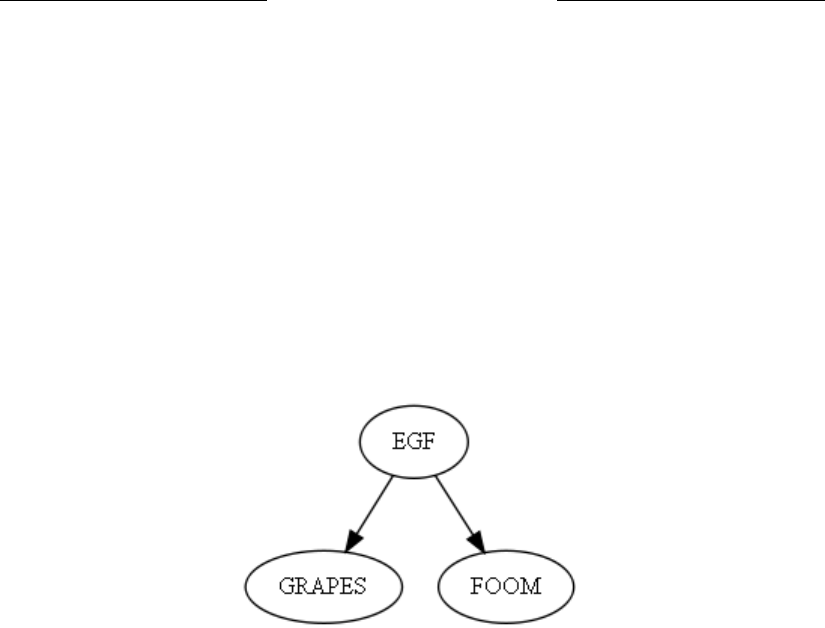

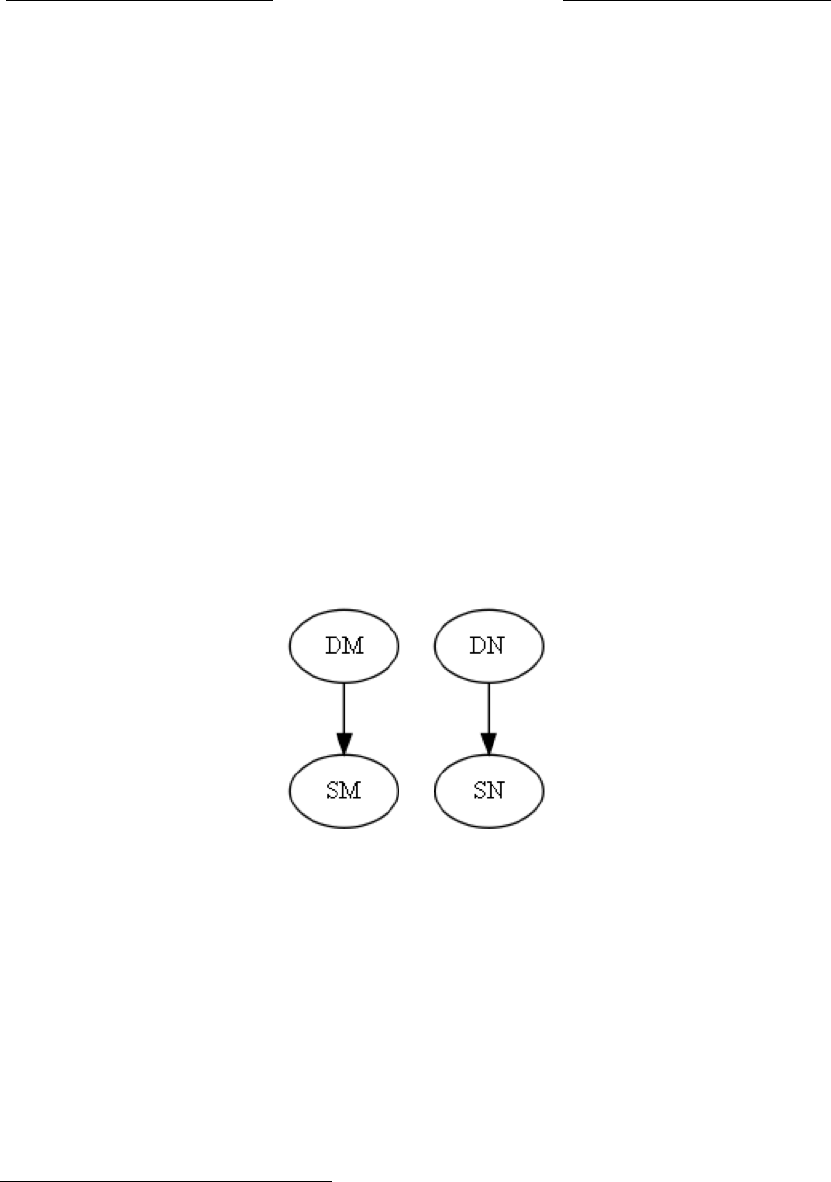

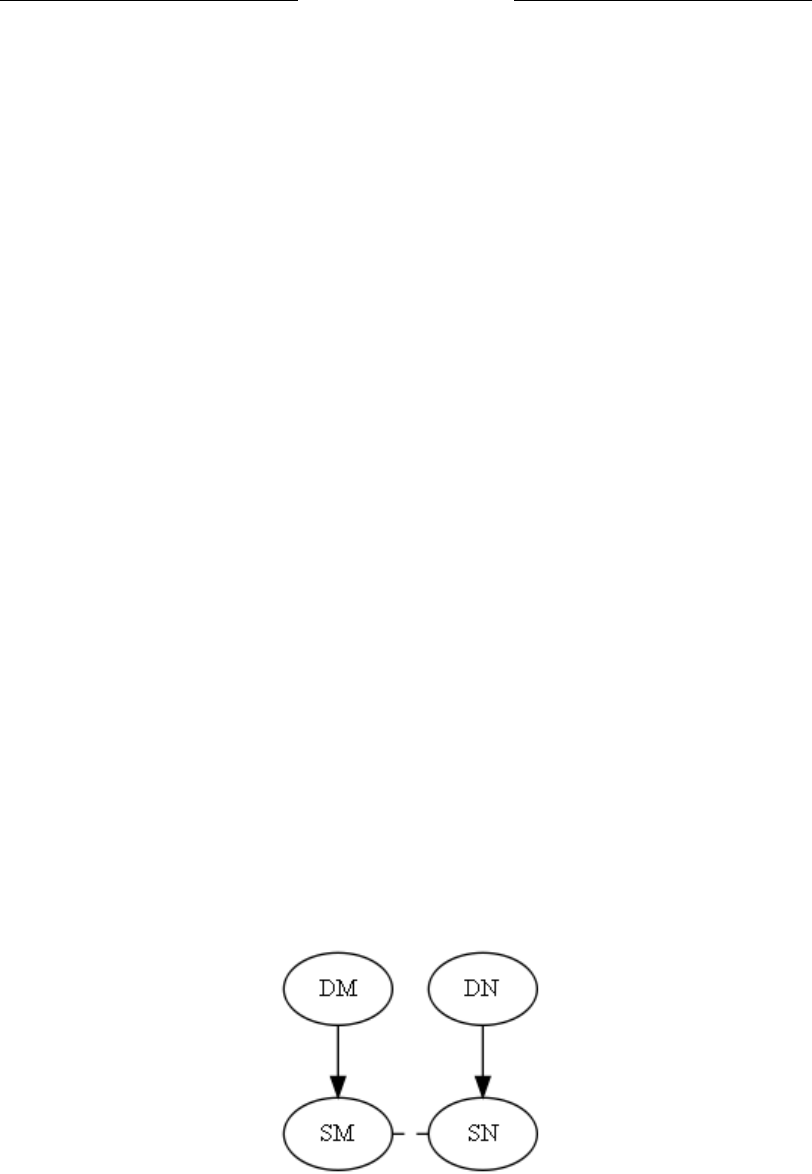

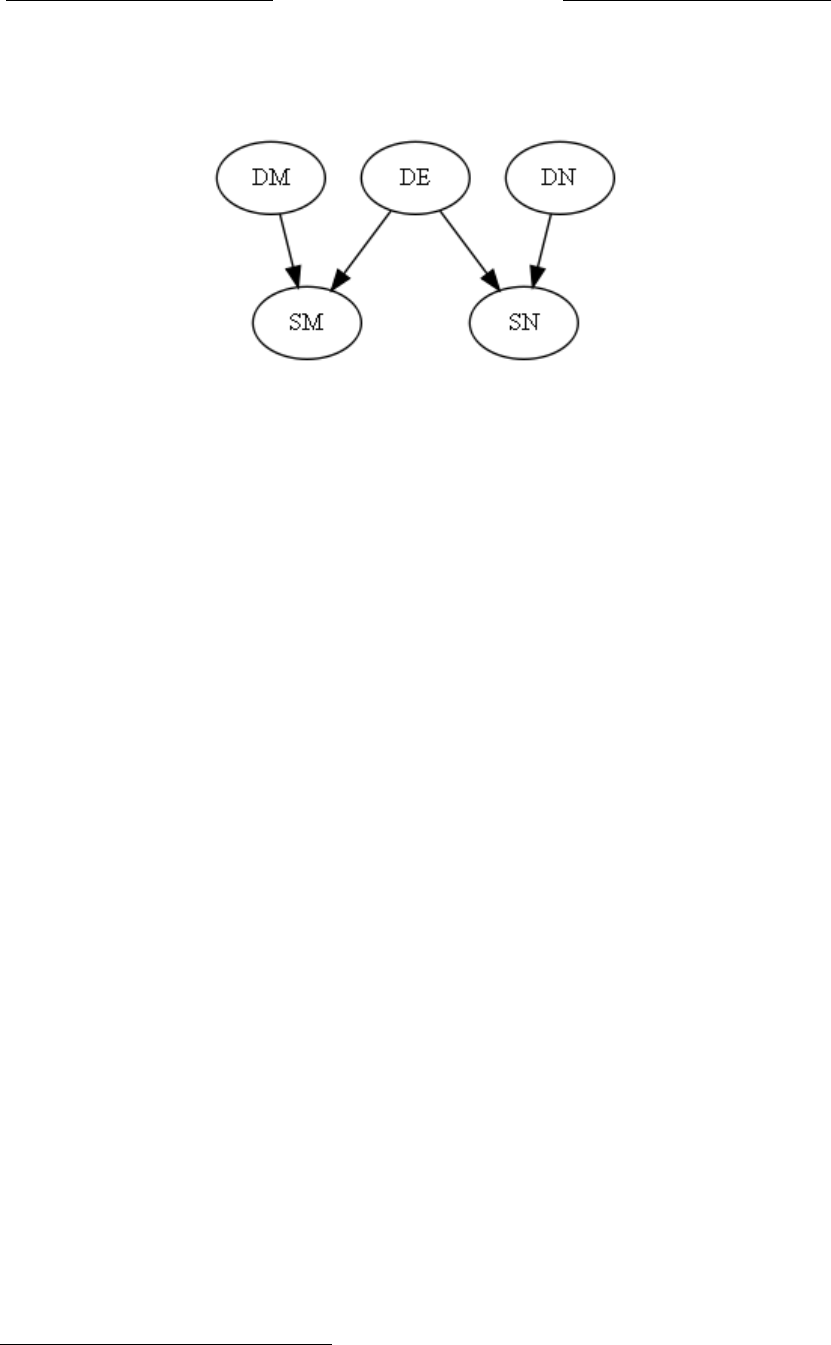

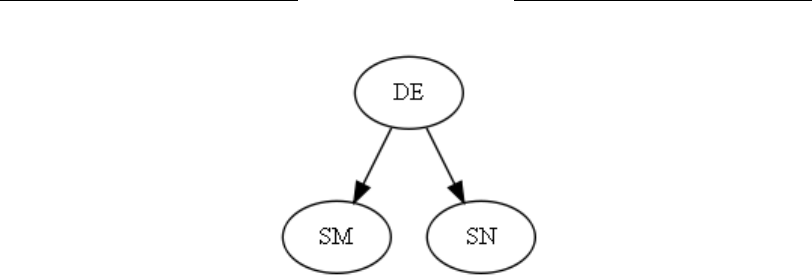

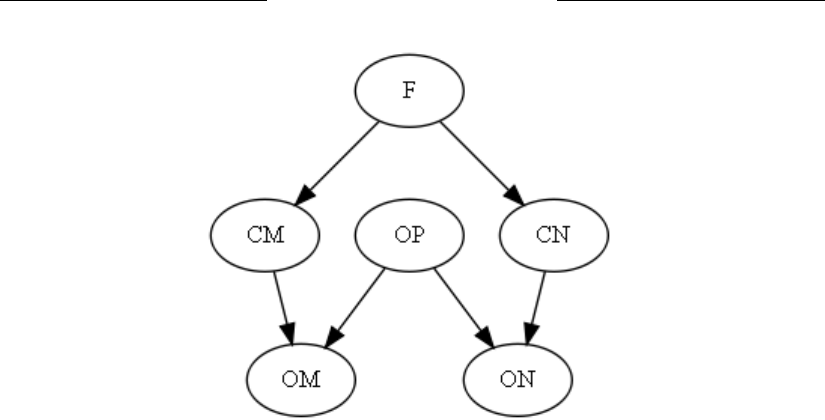

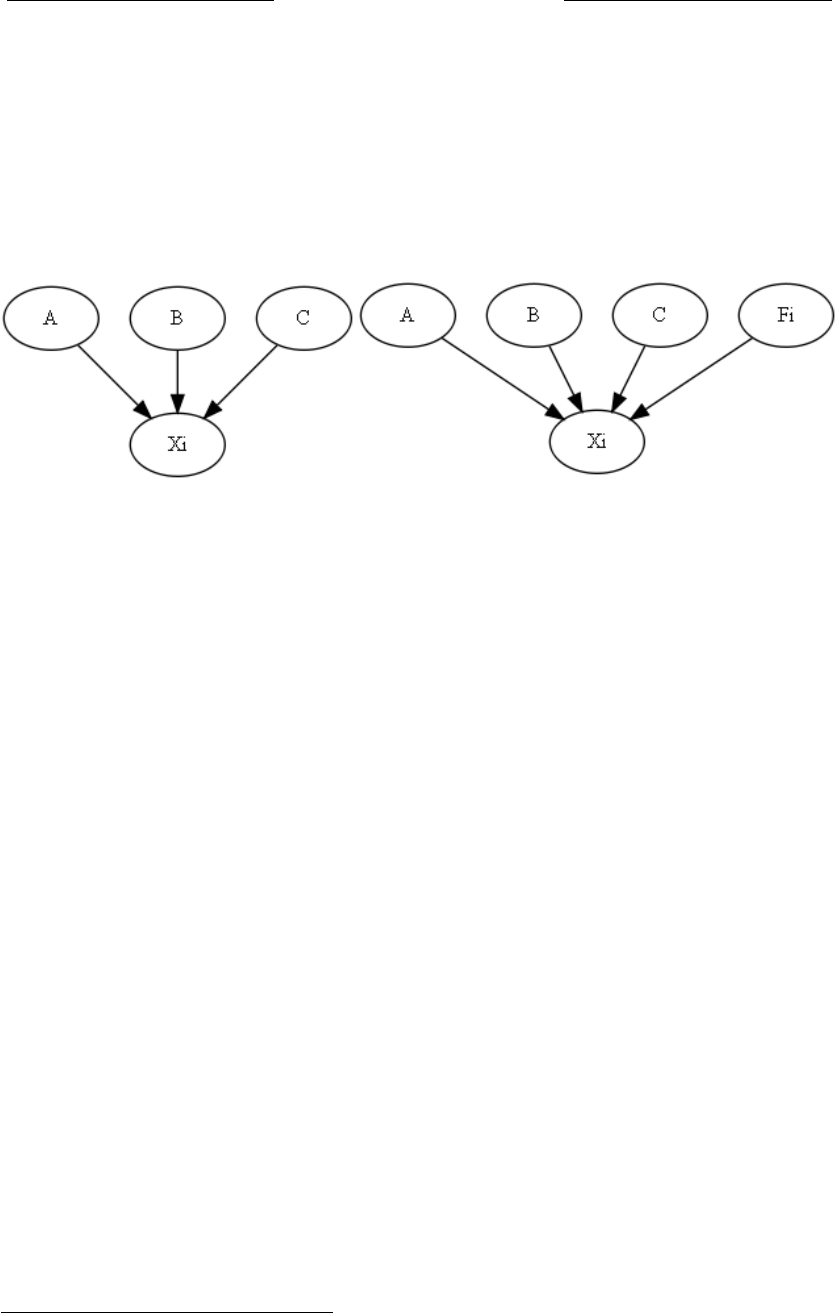

9. In the long run the alphabetizing agent would be wise to choose some other philosophy. Unfortu-