City University of New York (CUNY)

CUNY Academic Works

Publications and Research Kingsborough Community College

2008

An Overview of Conditionals and Biconditionals in Probability

Nataniel Greene

CUNY Kingsborough Community College


More information about this work at: https://academicworks.cuny.edu/kb_pubs/81


This work is made publicly available by the City University of New York (CUNY).

Contact: AcademicWorks@cuny.edu

An Overview of Conditionals and Biconditionals in Probability

Nataniel Greene

Kingsborough Community College, CUNY

Department of Mathematics and Computer Science

2001 Oriental Boulevard Brooklyn, NY 11235

USA

email: [email protected]

Abstract: Conditional and biconditional statements are a standard part of symbolic logic, but they have only recently begun to be explored in probability for applications in artificial intelligence. Here we give a brief overview of the major theorems involved and illustrate them using two standard model problems from conditional probability.

Key–Words: probability of material implication; probability of a conditional; probability of a biconditional

1 Introduction

Elementary treatments of symbolic logic include a discussion of the logical operations of negation, conjunction, disjunction, the conditional, and the biconditional. Elementary treatments of probability, however, discuss probabilities of a negation, conjunction, and disjunction but leave out probabilities of conditionals and biconditionals: statements of the form "if Q then P" and "P if and only if Q." Our treatment here follows the work of Nguyen, Mukaidono, and Kreinovich [6], where the topic is developed for applications in artificial intelligence. The authors there show that an analog of Bayes' theorem involving probabilities of conditionals, called the Logical Bayes' theorem, yields results comparable to those of the standard version of Bayes' theorem.

In this presentation we outline a treatment of this topic suitable for undergraduates. We show how these probabilities are computed and apply them to two standard model problems. Our contention is that once an instructor has developed the laws of probability involving the logical operations "and," "or," and "not," and has covered conditional probability, the student is fully prepared for a discussion of the probability of a conditional, the probability of a biconditional, and related issues such as the corresponding logical analog of Bayes' theorem.

We begin with an experiment which produces a sample space $S$, the set of all possible outcomes of the experiment. Any subset of the sample space is known as an event. Let $P$ and $Q$ be any two events. We recall the definition of conditional probability for a finite sample space of equally likely outcomes as

$$P(P \mid Q) = \frac{\#\text{ outcomes in } (P \cap Q)}{\#\text{ outcomes in } Q},$$

from which it readily follows that

$$P(P \mid Q) = \frac{P(P \cap Q)}{P(Q)}.$$

This may be interpreted as follows: given certain knowledge that $Q$ occurred, our sample space can be restricted from $S$ to $Q$, and as a result the event $P$ is restricted to $P \cap Q$. We call this the probability of $P$ given $Q$, or of $P$ given knowledge that $Q$ occurred.
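To make the counting definition concrete, here is a minimal Python sketch. It assumes a fair six-sided die, so that every outcome is equally likely; the events "the roll is even" and "the roll is greater than 3", and the helper names `prob`, `cond_by_counting`, and `cond_by_ratio`, are illustrative choices, not taken from the text. Both routes give $P(P \mid Q) = 2/3$.

```python
from fractions import Fraction

# Assumption: a fair die, so all six outcomes are equally likely.
S = frozenset(range(1, 7))
P_event = frozenset({2, 4, 6})   # "the roll is even"
Q_event = frozenset({4, 5, 6})   # "the roll is greater than 3"

def prob(event):
    """Classical probability: favourable outcomes divided by all outcomes."""
    return Fraction(len(event & S), len(S))

def cond_by_counting(p, q):
    """P(p | q) = #(p ∩ q) / #q, i.e. restrict the sample space to q."""
    return Fraction(len(p & q), len(q))

def cond_by_ratio(p, q):
    """P(p | q) = P(p ∩ q) / P(q)."""
    return prob(p & q) / prob(q)

print(cond_by_counting(P_event, Q_event))  # 2/3
print(cond_by_ratio(P_event, Q_event))     # 2/3
```

Exact `Fraction` arithmetic is used so that the two formulas can be compared for equality rather than approximately.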

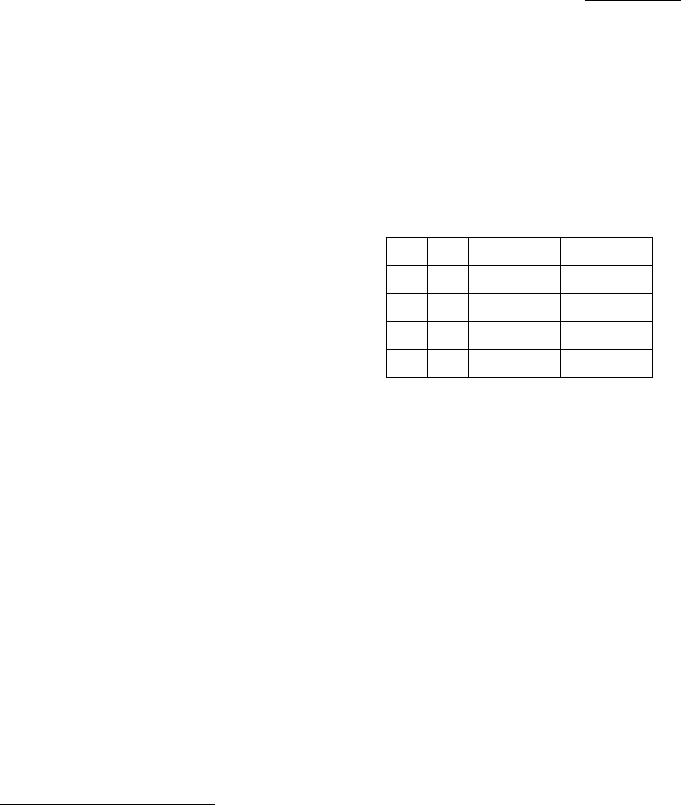

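Next we recall the logical definitions of the conditional ($P$ implies $Q$) and the biconditional ($P$ implies $Q$ and $Q$ implies $P$). We do so using truth tables.

| $P$ | $Q$ | $P \to Q$ | $P \leftrightarrow Q$ |
|---|---|---|---|
| T | T | T | T |
| T | F | F | F |
| F | T | T | F |
| F | F | T | T |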
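As a quick check of the truth table, the sketch below encodes material implication as `(not p) or q` and the biconditional as equality of truth values, then prints the four rows in the same order as the table. The function names are hypothetical helpers, not part of the paper.

```python
from itertools import product

def implies(p, q):
    """Material implication p -> q, equivalent to (not p) or q."""
    return (not p) or q

def iff(p, q):
    """Biconditional p <-> q: true exactly when p and q have the same truth value."""
    return p == q

def truth_table():
    """Rows (P, Q, P -> Q, P <-> Q) in the order TT, TF, FT, FF."""
    return [(p, q, implies(p, q), iff(p, q)) for p, q in product([True, False], repeat=2)]

for row in truth_table():
    print(" ".join("T" if value else "F" for value in row))
```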

The operation $P \to Q$ is known as material implication or the conditional, and it is seen to be logically equivalent to $\bar{P} \cup Q$. It is also logically equivalent to $\overline{(P \cap \bar{Q})}$. It is often read "P implies Q" or "if P then Q." It is pointed out, for example in Copi [2, p. 17], that the symbol for material implication should not be thought of as representing the unique meaning of if-then; rather, it should be thought of as the partial meaning which all uses of the term if-then have in common. One interesting usage of $P \to Q$ is a statement like "If the team wins, then I'm a monkey's uncle." No real connection is being made between the two statements. Since the statement as a whole, $P \to Q$, is asserted by the speaker to be true, and the consequent $Q$ is meant to be absurd, it can only be that the antecedent $P$ is false. This is precisely the speaker's intent in asserting this implication: to assert that $P$ is false.

AMERICAN CONFERENCE ON APPLIED MATHEMATICS (MATH '08), Harvard, Massachusetts, USA, March 24-26, 2008

ISSN: 1790-5117 ISBN: 978-960-6766-47-3 171

2 The Probability of a Conditional

We now wish to consider the probability of a conditional, otherwise known as material implication, and see how this probability relates to the better-known concept of conditional probability.

2.1 Probability of a Conditional

Definition 1. Define the event

$$Q \to P = \bar{Q} \cup P = \overline{(Q \cap \bar{P})}.$$

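The following theorem describes the probability of a conditional in terms of conditional probabilities.

Theorem 2.

$$P(Q \to P) = P(\bar{Q}) + P(P \mid Q)\,P(Q) = P(\bar{Q}) + P(P \cap Q)$$

Proof.

$$\begin{aligned}
P(Q \to P) &= P\left(\overline{(Q \cap \bar{P})}\right) \\
&= 1 - P(Q \cap \bar{P}) \\
&= 1 - P(\bar{P} \mid Q)\,P(Q) \\
&= 1 - \left(1 - P(P \mid Q)\right) P(Q) \\
&= 1 - P(Q) + P(P \mid Q)\,P(Q) \\
&= P(\bar{Q}) + P(P \cap Q)
\end{aligned}$$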

Theorem 2 and the following corollary can be thought of as describing, among other things, a paradox of small sets: from a very small set one can draw any conclusion with a high degree of probability. Indeed, by Theorem 2, $P(Q \to P) \ge P(\bar{Q}) = 1 - P(Q)$, so when $P(Q)$ is close to 0, $P(Q \to P)$ is close to 1 no matter what $P$ is. Or, one may describe this as a paradox of improbable events: from a very unlikely event, one can draw any conclusion with a high degree of probability. Not being aware of this paradox in one's reasoning can lead to misleading conclusions. An example of this paradox might be: if a plane crashed (a very unlikely event), then the fleet is unsafe. Technically this is a highly probable inference. An underlying cause might be that whatever caused the plane to crash could be shared by most of the planes. But we can also make the highly probable inference: if a plane crashed, then the fleet is safe, the underlying reason being that whatever hidden causes affected the one plane do not pertain to the rest of the fleet.

Corollary 3.

$$\begin{aligned}
P(\varnothing \to P) &= 1 \\
P(S \to P) &= P(P) \\
P(Q \to \varnothing) &= P(\bar{Q}) \\
P(Q \to S) &= 1 \\
P(Q \to Q) &= 1 \\
P(Q \to \bar{Q}) &= P(\bar{Q}) \\
P(\bar{Q} \to Q) &= P(Q)
\end{aligned}$$
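Theorem 2 and Corollary 3 can be checked exhaustively on a small example. The sketch below assumes a hypothetical four-outcome sample space with exact, unequal weights (`WEIGHTS`), represents events as Python frozensets, and tests every pair of events with `fractions.Fraction`, so equalities are exact rather than floating-point approximations. The conditional-probability form of Theorem 2 is only tested when $P(Q) > 0$; all names here are illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical four-outcome sample space with exact, unequal weights (they sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)
EMPTY = frozenset()

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def implies(q, p):
    """The event Q -> P, i.e. (not Q) union P (Definition 1)."""
    return neg(q) | p

def all_events():
    """Every subset of S (16 events)."""
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def theorem_2_holds():
    for p in all_events():
        for q in all_events():
            lhs = prob(implies(q, p))
            if lhs != prob(neg(q)) + prob(p & q):
                return False
            # The conditional-probability form needs P(Q) > 0.
            if prob(q) > 0 and lhs != prob(neg(q)) + (prob(p & q) / prob(q)) * prob(q):
                return False
    return True

def corollary_3_holds():
    for e in all_events():
        checks = [
            prob(implies(EMPTY, e)) == 1,
            prob(implies(S, e)) == prob(e),
            prob(implies(e, EMPTY)) == prob(neg(e)),
            prob(implies(e, S)) == 1,
            prob(implies(e, e)) == 1,
            prob(implies(e, neg(e))) == prob(neg(e)),
            prob(implies(neg(e), e)) == prob(e),
        ]
        if not all(checks):
            return False
    return True

print(theorem_2_holds(), corollary_3_holds())  # True True
```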

Now we express standard conditional probability in terms of the probability of a conditional.

Theorem 4.

$$P(P \mid Q) = \frac{P(Q \to P) - P(\bar{Q})}{P(Q)}$$

From here we may now formulate a version of Bayes' theorem. Recall the simplest version of Bayes' theorem, which states

$$P(P \mid Q) = \frac{P(Q \mid P)\,P(P)}{P(Q)}.$$

From this and the previous theorem one can derive the logical analog of Bayes' theorem.

Theorem 5. Logical Analog of Bayes' Theorem (1).

$$P(Q \to P) = P(P \to Q) + P(P) - P(Q)$$

Corollary 6. When $P$ and $Q$ are equiprobable, i.e. $P(P) = P(Q)$, then $P(Q \to P) = P(P \to Q)$.
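The same exhaustive approach can be used for Theorem 4 and the logical analog of Bayes' theorem. As before, the weighted sample space, the event pair chosen to illustrate Corollary 6, and all function names are illustrative assumptions; exact fractions make the identity checks exact.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical weighted sample space (weights sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def implies(q, p):
    """The event Q -> P = (not Q) union P."""
    return neg(q) | p

def cond(p, q):
    """Standard conditional probability P(P|Q); requires P(Q) > 0."""
    return prob(p & q) / prob(q)

def all_events():
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def theorem_4_holds():
    """P(P|Q) = (P(Q -> P) - P(not Q)) / P(Q) whenever P(Q) > 0."""
    return all(
        cond(p, q) == (prob(implies(q, p)) - prob(neg(q))) / prob(q)
        for p in all_events() for q in all_events() if prob(q) > 0
    )

def logical_bayes_holds():
    """Theorem 5: P(Q -> P) = P(P -> Q) + P(P) - P(Q) for every pair of events."""
    return all(
        prob(implies(q, p)) == prob(implies(p, q)) + prob(p) - prob(q)
        for p in all_events() for q in all_events()
    )

print(theorem_4_holds(), logical_bayes_holds())  # True True

# Corollary 6: {"a", "b"} and {"c"} both have probability 3/10.
p, q = frozenset({"a", "b"}), frozenset({"c"})
print(prob(implies(q, p)) == prob(implies(p, q)))  # True
```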

Now we recall the law of total probability and the corresponding, more general version of Bayes' theorem. The law of total probability states that, given a partition of the sample space $S$ into a disjoint union of events $Q_1, \dots, Q_n$ and any event $P$, we may decompose $P(P)$ as

$$P(P) = \sum_{i=1}^{n} P(P \mid Q_i)\,P(Q_i).$$

Substituting for $P(P \mid Q_i)$ using Theorem 4 yields the following reformulation of the law of total probability.

Theorem 7. Reformulation: Law of Total Probability.

$$P(P) = \sum_{i=1}^{n} \left( P(Q_i \to P) - P(\bar{Q}_i) \right)$$


Finally we recall Bayes' theorem in its usual general formulation, namely

$$P(Q_i \mid P) = \frac{P(P \mid Q_i)\,P(Q_i)}{P(P)},$$

and reformulate it in terms of the probability of an implication.

Theorem 8. Logical Analog of Bayes' Theorem (2).

$$P(P \to Q_i) = P(Q_i \to P) + P(Q_i) - P(P)$$

where $P(P) = \sum_{j=1}^{n} \left( P(Q_j \to P) - P(\bar{Q}_j) \right)$ or any other expression equivalent to the law of total probability.
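To illustrate Theorems 7 and 8 together, the sketch below fixes a hypothetical partition $Q_1 = \{a\}$, $Q_2 = \{b, c\}$, $Q_3 = \{d\}$ of a small weighted sample space and a hypothetical event $P = \{a, c\}$. It recovers $P(P)$ from probabilities of conditionals alone, then compares the reverse conditional given by Theorem 8 with a direct computation. The space, partition, and function names are assumptions for illustration.

```python
from fractions import Fraction

# Hypothetical four-outcome sample space with exact, unequal weights (they sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def implies(q, p):
    """The event Q -> P = (not Q) union P."""
    return neg(q) | p

# Hypothetical partition Q_1, Q_2, Q_3 of S and a hypothetical event P.
PARTITION = [frozenset({"a"}), frozenset({"b", "c"}), frozenset({"d"})]
P_EVENT = frozenset({"a", "c"})

def total_probability_via_conditionals(p, partition):
    """Theorem 7: P(P) = sum over i of (P(Q_i -> P) - P(not Q_i))."""
    return sum((prob(implies(qi, p)) - prob(neg(qi)) for qi in partition), Fraction(0))

def reverse_conditional(p, qi, partition):
    """Theorem 8: P(P -> Q_i) = P(Q_i -> P) + P(Q_i) - P(P), with P(P) from Theorem 7."""
    return prob(implies(qi, p)) + prob(qi) - total_probability_via_conditionals(p, partition)

print(total_probability_via_conditionals(P_EVENT, PARTITION))  # 2/5
for qi in PARTITION:
    # Theorem 8 versus a direct computation of P(P -> Q_i); the two values agree.
    print(sorted(qi), reverse_conditional(P_EVENT, qi, PARTITION), prob(implies(P_EVENT, qi)))
```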

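We now conclude this section with an important theorem: conditional probabilities and probabilities of conditionals share the same order relations.

Theorem 9. Let $P$, $Q$, and $R$ be events with $P(Q) > 0$. Then

$$P(P \mid Q) > P(R \mid Q)$$

if and only if

$$P(Q \to P) > P(Q \to R).$$

Proof. By Theorem 2, we have

$$\begin{aligned}
& P(Q \to P) > P(Q \to R) \\
\iff\ & P(\bar{Q}) + P(P \mid Q)\,P(Q) > P(\bar{Q}) + P(R \mid Q)\,P(Q) \\
\iff\ & P(P \mid Q) > P(R \mid Q),
\end{aligned}$$

where the last step subtracts $P(\bar{Q})$ from both sides and divides by $P(Q) > 0$.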
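Theorem 9 can likewise be checked by brute force over all triples of events in a small example space (4,096 triples here). The weighted space and function names are assumptions for illustration; conditioning events with $P(Q) = 0$ are skipped because $P(\cdot \mid Q)$ is then undefined.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical weighted sample space (weights sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def implies(q, p):
    """The event Q -> P = (not Q) union P."""
    return neg(q) | p

def cond(p, q):
    """P(P|Q); callers must ensure P(Q) > 0."""
    return prob(p & q) / prob(q)

def all_events():
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def theorem_9_holds():
    events = all_events()
    for q in events:
        if prob(q) == 0:
            continue  # conditional probability given Q is undefined
        for p in events:
            for r in events:
                by_conditional = cond(p, q) > cond(r, q)
                by_implication = prob(implies(q, p)) > prob(implies(q, r))
                if by_conditional != by_implication:
                    return False
    return True

print(theorem_9_holds())  # True
```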

A practical consequence of this theorem is that probabilities of conditionals and the logical version of Bayes' theorem can be used instead of the standard version of Bayes' theorem, allowing one to draw similar conclusions. We illustrate this in our model problems.

3 Probability of a Biconditional

The probability of the biconditional "$P$ if and only if $Q$," $P(Q \leftrightarrow P)$, may be thought of intuitively as the probability that $P$ and $Q$ are either simultaneously true or simultaneously false. The probability of the negated biconditional, $P(Q \nleftrightarrow P) = P\left(\overline{Q \leftrightarrow P}\right) = P(\bar{Q} \leftrightarrow P)$, is the probability that $P$ and $Q$ are neither simultaneously true nor simultaneously false.

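Definition 10. Define the event

$$Q \leftrightarrow P = (P \cap Q) \cup (\bar{P} \cap \bar{Q}) = (\bar{Q} \cup P) \cap (\bar{P} \cup Q)$$

and the corresponding probability

$$P(Q \leftrightarrow P) = P\left((P \cap Q) \cup (\bar{P} \cap \bar{Q})\right).$$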

The following theorem describes the probability of a biconditional in terms of unions and intersections.

Theorem 11.

$$\begin{aligned}
P(Q \leftrightarrow P) &= P(P \cap Q) + P(\bar{P} \cap \bar{Q}) \\
P(Q \leftrightarrow P) &= 1 - P(P \cup Q) + P(P \cap Q) \\
P(Q \leftrightarrow P) &= 1 - P(P) - P(Q) + 2P(P \cap Q)
\end{aligned}$$

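Corollary 12.

$$\begin{aligned}
P(P \leftrightarrow P) &= 1 \\
P(P \leftrightarrow \bar{P}) &= 0 \\
P(P \leftrightarrow S) &= P(P) \\
P(P \leftrightarrow \varnothing) &= P(\bar{P})
\end{aligned}$$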

The first two statements can be interpreted as saying that $P$ correlates with itself perfectly and never agrees with its negation. The second two statements say in words that the probability of an event $P$ measures the logical correlation of the event with certainty, while the probability of $P$ not occurring measures the logical correlation of the event with impossibility.
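The two set expressions in Definition 10, the three forms of Theorem 11, and Corollary 12 can all be verified exhaustively on a small example. The sketch below again assumes a hypothetical weighted four-outcome sample space; `iff` is an illustrative helper that builds the event $Q \leftrightarrow P$ as a set.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical four-outcome sample space with exact, unequal weights (they sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)
EMPTY = frozenset()

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def iff(q, p):
    """The event Q <-> P = (P ∩ Q) ∪ (not P ∩ not Q), as in Definition 10."""
    return (p & q) | (neg(p) & neg(q))

def all_events():
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def definition_10_and_theorem_11_hold():
    for p in all_events():
        for q in all_events():
            # Both set expressions in Definition 10 describe the same event.
            if iff(q, p) != (neg(q) | p) & (neg(p) | q):
                return False
            b = prob(iff(q, p))
            forms = [
                prob(p & q) + prob(neg(p) & neg(q)),
                1 - prob(p | q) + prob(p & q),
                1 - prob(p) - prob(q) + 2 * prob(p & q),
            ]
            if any(b != f for f in forms):
                return False
    return True

def corollary_12_holds():
    return all(
        prob(iff(e, e)) == 1
        and prob(iff(neg(e), e)) == 0
        and prob(iff(S, e)) == prob(e)
        and prob(iff(EMPTY, e)) == prob(neg(e))
        for e in all_events()
    )

print(definition_10_and_theorem_11_hold(), corollary_12_holds())  # True True
```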

The negated biconditional, denoted by $P \nleftrightarrow Q$, is described in set theory as the symmetric difference, where it is often denoted by $P \mathbin{\triangle} Q$. It is also described in logic as exclusive or (xor), where it is denoted by $P \operatorname{xor} Q$ or by $P \oplus Q$. Different notations may be employed depending on the context. Since the context here views the biconditional as primary, we employ the negated biconditional symbol. We are interested in the probability of the negated biconditional. The following equivalences can be verified:

$$\begin{aligned}
P \nleftrightarrow Q &= \overline{(P \leftrightarrow Q)} \\
&= (P \nrightarrow Q) \cup (Q \nrightarrow P) \\
&= (P \cap \bar{Q}) \cup (Q \cap \bar{P}) \\
&= \bar{Q} \leftrightarrow P \\
&= Q \leftrightarrow \bar{P}
\end{aligned}$$

Theorem 13. The Negated Biconditional or Exclusive Or.

$$P(Q \nleftrightarrow P) = P(P \cup Q) - P(P \cap Q)$$
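In code, the negated biconditional is simply the symmetric difference of two sets; Python's `^` operator on `frozenset` computes it directly. The sketch below uses the same kind of hypothetical weighted sample space (helper names are illustrative) and checks each listed equivalence, together with Theorem 13, for every pair of events.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical four-outcome sample space with exact, unequal weights (they sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def iff(q, p):
    """The event Q <-> P = (P ∩ Q) ∪ (not P ∩ not Q)."""
    return (p & q) | (neg(p) & neg(q))

def xor_event(p, q):
    """The negated biconditional P <-/-> Q, i.e. the symmetric difference of P and Q."""
    return p ^ q

def all_events():
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def negated_biconditional_checks():
    for p in all_events():
        for q in all_events():
            x = xor_event(p, q)
            equivalent_forms = [
                neg(iff(p, q)),      # not (P <-> Q)
                (p - q) | (q - p),   # (P ∩ not Q) ∪ (Q ∩ not P)
                iff(neg(q), p),      # not Q <-> P
                iff(q, neg(p)),      # Q <-> not P
            ]
            if any(x != form for form in equivalent_forms):
                return False
            if prob(x) != prob(p | q) - prob(p & q):  # Theorem 13
                return False
    return True

print(negated_biconditional_checks())                       # True
print(sorted(xor_event(frozenset("ab"), frozenset("bc"))))  # ['a', 'c']
```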


Finally we describe the probability of a biconditional in terms of the probability of a conditional.

Theorem 14.

$$\begin{aligned}
P(Q \leftrightarrow P) &= P(Q \to P) + P(P \to Q) - 1 \\
P(Q \leftrightarrow P) &= 2P(P \to Q) + P(P) - P(Q) - 1 \\
P(Q \leftrightarrow P) &= 2P(Q \to P) + P(Q) - P(P) - 1
\end{aligned}$$

We leave the proof of these statements to the reader.
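One way to fill in the proof: expand both conditionals with Theorem 2 (writing $P(\bar{Q}) = 1 - P(Q)$ and $P(\bar{P}) = 1 - P(P)$) and compare with the last form of Theorem 11; the other two identities then follow by substituting Theorem 5 for one of the two conditionals. This is a sketch of a derivation, not necessarily the intended proof.

```latex
\begin{aligned}
P(Q \to P) + P(P \to Q) - 1
  &= \bigl[1 - P(Q) + P(P \cap Q)\bigr] + \bigl[1 - P(P) + P(P \cap Q)\bigr] - 1 \\
  &= 1 - P(P) - P(Q) + 2P(P \cap Q) = P(Q \leftrightarrow P), \\[4pt]
P(Q \leftrightarrow P)
  &= \bigl[P(P \to Q) + P(P) - P(Q)\bigr] + P(P \to Q) - 1 \\
  &= 2P(P \to Q) + P(P) - P(Q) - 1, \\[4pt]
P(Q \leftrightarrow P)
  &= P(Q \to P) + \bigl[P(Q \to P) + P(Q) - P(P)\bigr] - 1 \\
  &= 2P(Q \to P) + P(Q) - P(P) - 1.
\end{aligned}
```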

4 A Logical Correlation Coefficient

When $P(P \leftrightarrow Q)$ is near 1, we can say that $P$ and $Q$ are nearly always simultaneously true or simultaneously false. We may also say that the events are nearly the same. This corresponds to $P$ and $Q$ exhibiting a strong direct (positive) logical correlation. When $P(P \leftrightarrow Q)$ is near 0, we can say that $P$ and $Q$ are almost never simultaneously true or simultaneously false, which corresponds to $P$ and $Q$ exhibiting a strong inverse (negative) logical correlation. We may also say that the events are nearly complementary. It follows that $P(P \leftrightarrow Q) \approx \frac{1}{2}$ corresponds to $P$ and $Q$ exhibiting little logical correlation. Based on this we may define an effective logical correlation coefficient for two events.

Definition 15. The logical correlation coefficient for the events $P$ and $Q$ is given by

$$\rho(P, Q) = 2P(P \leftrightarrow Q) - 1.$$

It follows that

$$P(P \leftrightarrow Q) = \frac{\rho(P, Q) + 1}{2}$$

and that

$$-1 \le \rho(P, Q) \le 1.$$

The next theorem is quite fundamental in that it gives conceptual justification for the preceding definition. It says that the logical correlation coefficient of two events is the probability that those events are the same minus the probability that those events are different.

Theorem 16.

$$\rho(P, Q) = P(P \leftrightarrow Q) - P(P \nleftrightarrow Q)$$

Proof. By definition,

$$\begin{aligned}
\rho(P, Q) &= 2P(P \leftrightarrow Q) - 1 \\
&= P(P \leftrightarrow Q) + P(P \leftrightarrow Q) - 1 \\
&= P(P \leftrightarrow Q) - \left(1 - P(P \leftrightarrow Q)\right) \\
&= P(P \leftrightarrow Q) - P(P \nleftrightarrow Q)
\end{aligned}$$

Corollary 17.

$$\rho(P, Q) = 1 - 2P(P) - 2P(Q) + 4P(P \cap Q)$$

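The following are some algebraic properties of logical correlation which the reader can verify.

Theorem 18.

$$\begin{aligned}
\rho(P, Q) &= \rho(Q, P) \\
\rho(\bar{P}, Q) &= -\rho(P, Q) \\
\rho(P, P) &= 1 \\
\rho(\bar{P}, P) &= -1 \\
\rho(P, S) &= 2P(P) - 1 \\
\rho(P, \varnothing) &= 1 - 2P(P)
\end{aligned}$$

and $\rho(P, Q) = 0$ if and only if $P(P \leftrightarrow Q) = \frac{1}{2}$.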
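A direct implementation of Definition 15 lets us test Theorem 16, Corollary 17, and the properties in Theorem 18 exhaustively on a small example. The function name `rho` and the weighted sample space are assumptions for illustration; the negated biconditional is computed as the set symmetric difference `p ^ q`.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical four-outcome sample space with exact, unequal weights (they sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)
EMPTY = frozenset()

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def iff(p, q):
    """The event P <-> Q = (P ∩ Q) ∪ (not P ∩ not Q)."""
    return (p & q) | (neg(p) & neg(q))

def all_events():
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def rho(p, q):
    """Logical correlation coefficient from Definition 15: 2 P(P <-> Q) - 1."""
    return 2 * prob(iff(p, q)) - 1

def correlation_properties_hold():
    for p in all_events():
        # Theorem 18: self, negation, certainty, and impossibility cases.
        if rho(p, p) != 1 or rho(neg(p), p) != -1:
            return False
        if rho(p, S) != 2 * prob(p) - 1 or rho(p, EMPTY) != 1 - 2 * prob(p):
            return False
        for q in all_events():
            r = rho(p, q)
            if not -1 <= r <= 1:
                return False
            if r != prob(iff(p, q)) - prob(p ^ q):                     # Theorem 16
                return False
            if r != 1 - 2 * prob(p) - 2 * prob(q) + 4 * prob(p & q):  # Corollary 17
                return False
            if r != rho(q, p) or rho(neg(p), q) != -r:                 # Theorem 18
                return False
            if (r == 0) != (prob(iff(p, q)) == Fraction(1, 2)):        # Theorem 18, last line
                return False
    return True

print(correlation_properties_hold())  # True
```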

5 Logical Independence

We now describe a notion of logical independence which is analogous to, but distinct from, the standard notion of stochastic or statistical independence. Recall that two events $P$ and $Q$ are stochastically independent if $P(P \mid Q) = P(P)$ or, alternatively, if $P(P \cap Q) = P(P)\,P(Q)$.

Definition 19. Logical independence. The events $P$ and $Q$ are called logically independent if

$$P(Q \to P) = P(P).$$

It follows from this definition and the logical version of Bayes' theorem that $P(Q \to P) = P(P)$ if and only if $P(P \to Q) = P(Q)$, so the definition is symmetric in $P$ and $Q$.

Theorem 20. The events $P$ and $Q$ are logically independent if and only if

$$P(P \leftrightarrow Q) = P(P \cap Q),$$

or equivalently

$$P(P \cup Q) = 1,$$

or equivalently

$$P(\bar{P} \cap \bar{Q}) = 0.$$


We see that logically independent events cover the sample space (up to an event of probability zero). Equivalently, logically independent events are simultaneously false with probability zero. Recall that $P$ and $Q$ are statistically independent if $P(P \mid Q) = P(P)$ or, alternatively, if $P(P \cap Q) = P(P)\,P(Q)$. We may ask: in which circumstances do the two notions of logical independence and statistical independence coincide? The next result answers this question.

Corollary 21. The events $P$ and $Q$ are both logically independent and statistically independent if and only if

$$P(P) = 1 \quad \text{or} \quad P(Q) = 1.$$

That is, the only way for two events to be both logically and statistically independent is for one of them to be certain to occur.

Proof. We begin with the formula

$$P(P \cup Q) = P(P) + P(Q) - P(P \cap Q),$$

which, using $P(P \cup Q) = 1$ (Theorem 20) and $P(P \cap Q) = P(P)\,P(Q)$, reduces in our case to

$$\begin{aligned}
1 &= P(P) + P(Q) - P(P)\,P(Q) \\
0 &= \left(1 - P(P)\right)\left(1 - P(Q)\right),
\end{aligned}$$

from which the result follows.

Corollary 22. If the events $P$ and $Q$ are logically independent and statistically independent, then

$$P(P \leftrightarrow Q) = P(P)\,P(Q).$$

Corollary 23. If the events $P$ and $Q$ are logically independent and mutually exclusive, then

$$Q = \bar{P}$$

up to an event of probability zero.
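The results of this section can be spot-checked on a small example. The sketch below assumes a hypothetical four-outcome sample space in which every outcome has positive weight, so "up to an event of probability zero" in Corollary 23 becomes exact set equality. The predicates `logically_independent` and `statistically_independent` are illustrative names for Definition 19 and the usual product rule.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical sample space; every outcome has positive weight and the weights sum to 1.
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def implies(q, p):
    """The event Q -> P = (not Q) union P."""
    return neg(q) | p

def iff(p, q):
    """The event P <-> Q = (P ∩ Q) ∪ (not P ∩ not Q)."""
    return (p & q) | (neg(p) & neg(q))

def all_events():
    outcomes = sorted(S)
    return [frozenset(c) for r in range(len(outcomes) + 1) for c in combinations(outcomes, r)]

def logically_independent(p, q):
    """Definition 19: P(Q -> P) = P(P)."""
    return prob(implies(q, p)) == prob(p)

def statistically_independent(p, q):
    return prob(p & q) == prob(p) * prob(q)

def section_5_checks():
    for p in all_events():
        for q in all_events():
            li = logically_independent(p, q)
            # Theorem 20 plus the symmetry remark after Definition 19.
            equivalents = [
                prob(iff(p, q)) == prob(p & q),
                prob(p | q) == 1,
                prob(neg(p) & neg(q)) == 0,
                prob(implies(p, q)) == prob(q),
            ]
            if any(li != e for e in equivalents):
                return False
            si = statistically_independent(p, q)
            # Corollary 21: both hold exactly when one of the events is certain.
            if (li and si) != (prob(p) == 1 or prob(q) == 1):
                return False
            # Corollary 22.
            if li and si and prob(iff(p, q)) != prob(p) * prob(q):
                return False
            # Corollary 23: logically independent and disjoint forces Q = not P.
            if li and not (p & q) and q != neg(p):
                return False
    return True

print(section_5_checks())  # True
```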

We recall that

$$P(P \leftrightarrow Q) = P(P \cap Q) + P(\bar{P} \cap \bar{Q}).$$

Dividing both sides by $P(P \leftrightarrow Q)$ (assumed positive) yields

$$1 = \frac{P(P \cap Q)}{P(P \leftrightarrow Q)} + \frac{P(\bar{P} \cap \bar{Q})}{P(P \leftrightarrow Q)}.$$

When $P$ and $Q$ are logically independent, the first term is 1 and the second term is 0. Therefore the first term can be thought of as measuring the degree to which $P$ and $Q$ are logically independent, and the second term can be thought of as measuring the degree to which $P$ and $Q$ are logically dependent.

Definition 24. The logical independence of $P$ and $Q$ is measured by

$$I_L(P, Q) = \frac{P(P \cap Q)}{P(P \leftrightarrow Q)} = \frac{P(P \cap Q)}{P(P \cap Q) + P(\bar{P} \cap \bar{Q})}$$

and the logical dependence of $P$ and $Q$ is measured by

$$D_L(P, Q) = \frac{P(\bar{P} \cap \bar{Q})}{P(P \leftrightarrow Q)} = \frac{P(\bar{P} \cap \bar{Q})}{P(P \cap Q) + P(\bar{P} \cap \bar{Q})}.$$

In terms of our notation, we have $I_L(P, Q) + D_L(P, Q) = 1$.
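A minimal sketch of Definition 24, assuming the same kind of hypothetical weighted sample space and requiring $P(P \leftrightarrow Q) > 0$. The function names are illustrative. The second pair of events covers the sample space, so it is logically independent and gets $I_L = 1$, $D_L = 0$.

```python
from fractions import Fraction

# Hypothetical four-outcome sample space with exact, unequal weights (they sum to 1).
WEIGHTS = {"a": Fraction(1, 10), "b": Fraction(2, 10), "c": Fraction(3, 10), "d": Fraction(4, 10)}
S = frozenset(WEIGHTS)

def prob(event):
    return sum((WEIGHTS[w] for w in event), Fraction(0))

def neg(event):
    return S - event

def iff(p, q):
    """The event P <-> Q = (P ∩ Q) ∪ (not P ∩ not Q)."""
    return (p & q) | (neg(p) & neg(q))

def logical_independence(p, q):
    """I_L(P, Q) = P(P ∩ Q) / P(P <-> Q); assumes P(P <-> Q) > 0."""
    return prob(p & q) / prob(iff(p, q))

def logical_dependence(p, q):
    """D_L(P, Q) = P(not P ∩ not Q) / P(P <-> Q); assumes P(P <-> Q) > 0."""
    return prob(neg(p) & neg(q)) / prob(iff(p, q))

p, q = frozenset({"a", "b"}), frozenset({"b", "c"})
print(logical_independence(p, q), logical_dependence(p, q))  # 1/3 2/3

# P ∪ Q' = S, so P and Q' are logically independent.
q_prime = frozenset({"b", "c", "d"})
print(logical_independence(p, q_prime), logical_dependence(p, q_prime))  # 1 0
```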

6 Applications

We apply here probabilities of conditionals, biconditionals, and the logical analog of Bayes' theorem to two standard model problems which illustrate many of the concepts we have described.

We stress again that strong conditional and biconditional relationships (logical correlations) do not necessarily imply that there is cause and effect. Probabilities of conditionals and biconditionals are simply ways of measuring association between two events.

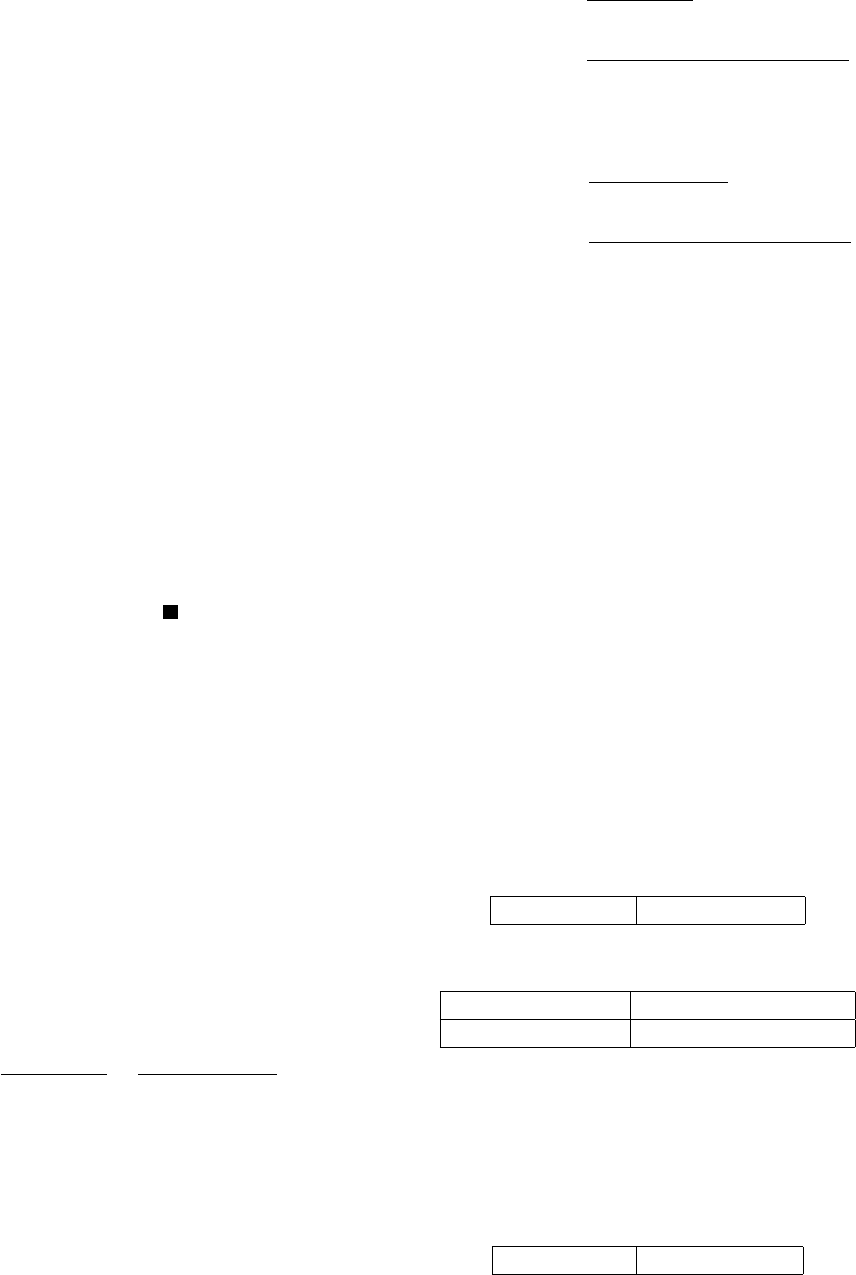

6.1 Application 1: Medical Testing

Example 1. A patient takes a diagnostic test to check for a disease. Let $D$ be the event that the patient has the disease and $P$ the event that the patient tests positive for the disease. Suppose the following information is known:

$$\begin{aligned}
P(D) &= 10\% &\qquad P(\bar{D}) &= 90\% \\
P(P \mid D) &= 95\% &\qquad P(\bar{P} \mid D) &= 5\% \\
P(P \mid \bar{D}) &= 7\% &\qquad P(\bar{P} \mid \bar{D}) &= 93\%
\end{aligned}$$

A standard exercise in total probability and Bayes' theorem would be to use this information to calculate the probability that a patient tests positive and the inverse conditional probabilities. We do so in the next tables. Using the law of total probability,

$$P(P) = 16\% \qquad P(\bar{P}) = 84\%$$


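and we can compute using Bayes' theorem

$$\begin{aligned}
P(D \mid P) &= 60\% &\qquad P(D \mid \bar{P}) &= 1\% \\
P(\bar{D} \mid \bar{P}) &= 99\% &\qquad P(\bar{D} \mid P) &= 40\%
\end{aligned}$$

Now we are interested in comparing and contrasting this standard exercise with the analogous probabilities of material implications and biconditionals. We show the results of the computations in the following table.

$$\begin{aligned}
P(D \to P) &= 100\% &\qquad P(D \to \bar{P}) &= 91\% \\
P(\bar{D} \to P) &= 16\% &\qquad P(\bar{D} \to \bar{P}) &= 94\% \\
P(P \to D) &= 94\% &\qquad P(\bar{P} \to D) &= 16\% \\
P(\bar{P} \to \bar{D}) &= 100\% &\qquad P(P \to \bar{D}) &= 91\%
\end{aligned}$$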

We begin by observing that the probability that a patient has the disease given that the test was positive, $P(D \mid P)$, is only 60%. This is often surprising to those who first encounter it, and it is explained by the fact that the number of those who have the disease and test positive is only moderately larger than the number of those who do not have the disease and test positive ($P(P \cap D) = 9.5\%$ versus $P(P \cap \bar{D}) = 6.3\%$). The surprise is sometimes attributed to people confusing $P(D \mid P)$ with $P(P \mid D)$.

We contrast $P(D \mid P)$ with $P(P \to D) = 94\%$, which would seem to provide the result that people initially expect, except for the fact that $P(P \to \bar{D}) = 91\%$. Apparently, testing positive for the disease implies with about 94% probability that a person has the disease, and it also implies with about 91% probability that a person does not have the disease! One may explain this apparent paradox by recalling that $P(\varnothing \to Q) = 1$ for any $Q$. Since $P$ is a small set ($P(P) \approx 16\%$), we have $P(P \to Q) \ge P(\bar{P}) \approx 84\%$ for every event $Q$, so one is able to infer both a proposition $D$ and its negation $\bar{D}$ with high probability. We note, however, that $P(P \to D) > P(P \to \bar{D})$, just as $P(D \mid P) > P(\bar{D} \mid P)$, as Theorem 9 guarantees.

Arguably, it is the probability of the biconditional, or probable logical correlation, which coincides best with the intuition that people mistakenly apply to $P(D \mid P)$ initially. The biconditional probabilities are as follows:

$$P(P \leftrightarrow D) = 93\% \qquad P(P \leftrightarrow \bar{D}) = 7\%$$

We may compute their logical correlation coefficients as

$$\rho(P, D) = 0.86 \qquad \rho(P, \bar{D}) = -0.86$$

We see that testing positive for the disease and actually having the disease have a strong direct logical correlation, as one would expect. Consequently (Theorem 18), testing positive and not having the disease, or testing negative and having the disease, have a logical correlation with the same magnitude but the opposite sign.
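A minimal sketch, assuming only the three input probabilities of Example 1, reproduces the figures above with exact fractions. Each probability of a conditional is computed with Theorem 2 (for instance $P(\bar{D} \to P) = P(D) + P(P \cap \bar{D})$), the biconditional with Theorem 11, and $\rho$ with Definition 15. The dictionary keys are illustrative labels, not notation from the paper. Unrounded, the script gives $P(P) = 0.158$, $P(P \leftrightarrow D) = 0.932$, $\rho(P, D) = 0.864$, and $P(\bar{D} \to P) = P(\bar{P} \to D) = 0.163$; both of the last two equal $P(P \cup D)$.

```python
from fractions import Fraction

# Inputs from Example 1, as exact fractions.
P_D = Fraction(10, 100)               # P(D)
P_POS_GIVEN_D = Fraction(95, 100)     # P(P | D)
P_POS_GIVEN_NOT_D = Fraction(7, 100)  # P(P | not D)

def medical_example():
    p_not_d = 1 - P_D
    # Joint probabilities of the four cells of the 2x2 table.
    pos_d = P_POS_GIVEN_D * P_D               # P(P ∩ D)
    pos_not_d = P_POS_GIVEN_NOT_D * p_not_d   # P(P ∩ not D)
    neg_d = P_D - pos_d                       # P(not P ∩ D)
    neg_not_d = p_not_d - pos_not_d           # P(not P ∩ not D)
    p_pos = pos_d + pos_not_d                 # law of total probability
    p_neg = 1 - p_pos
    return {
        "P(P)": p_pos,
        "P(D|P)": pos_d / p_pos,              # Bayes' theorem
        "P(D|notP)": neg_d / p_neg,
        # Theorem 2: P(X -> Y) = P(not X) + P(X ∩ Y)
        "P(D->P)": p_not_d + pos_d,
        "P(D->notP)": p_not_d + neg_d,
        "P(notD->P)": P_D + pos_not_d,
        "P(notD->notP)": P_D + neg_not_d,
        "P(P->D)": p_neg + pos_d,
        "P(P->notD)": p_neg + pos_not_d,
        "P(notP->D)": p_pos + neg_d,
        "P(notP->notD)": p_pos + neg_not_d,
        "P(P<->D)": pos_d + neg_not_d,        # Theorem 11, first form
    }

results = medical_example()
results["rho(P,D)"] = 2 * results["P(P<->D)"] - 1  # Definition 15
for name, value in results.items():
    print(f"{name:14s} {float(value):.3f}")
```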

6.2 Application 2: Manufacturing and Quality Control

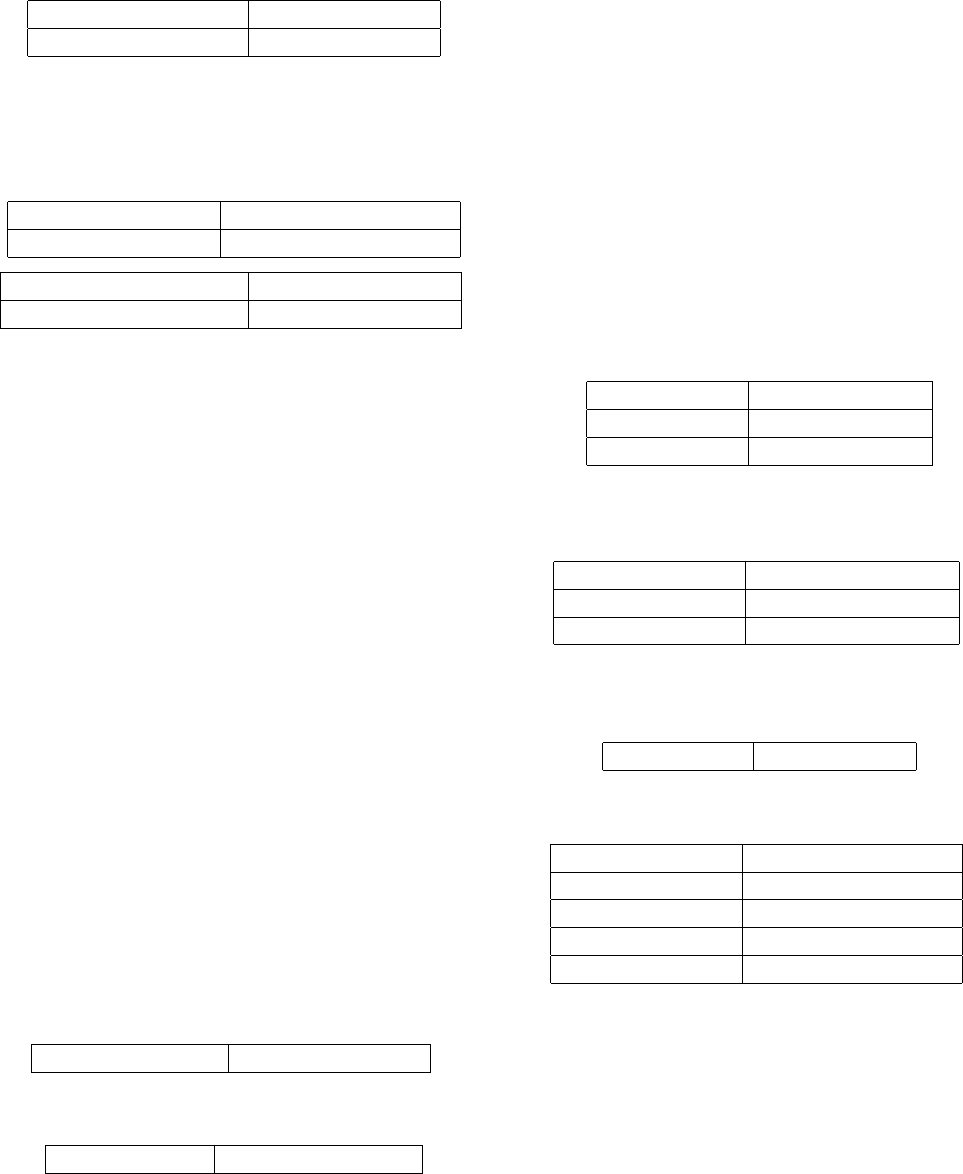

Example 2. A company manufactures items using three machines. 40% of the manufactured items come from Machine 1, 40% from Machine 2, and 20% from Machine 3. Of those items coming from Machine 1, 90% work properly; of those items coming from Machine 2, 95% work properly; and of those items coming from Machine 3, 93% work properly.

Let $M_1$, $M_2$, and $M_3$ denote the events that a manufactured item comes from Machines 1, 2, and 3, respectively. Let $W$ denote the event that a manufactured item works properly. Then we have

$$\begin{aligned}
P(M_1) &= 40\% &\qquad P(\bar{M}_1) &= 60\% \\
P(M_2) &= 40\% &\qquad P(\bar{M}_2) &= 60\% \\
P(M_3) &= 20\% &\qquad P(\bar{M}_3) &= 80\%
\end{aligned}$$

and

$$\begin{aligned}
P(W \mid M_1) &= 90\% &\qquad P(\bar{W} \mid M_1) &= 10\% \\
P(W \mid M_2) &= 95\% &\qquad P(\bar{W} \mid M_2) &= 5\% \\
P(W \mid M_3) &= 93\% &\qquad P(\bar{W} \mid M_3) &= 7\%
\end{aligned}$$

Furthermore, we can compute using the law of total probability

$$P(W) = 93\% \qquad P(\bar{W}) = 7\%$$

and we can compute using Bayes' theorem

| Credit | Blame |
|---|---|
| $P(M_1 \mid W) = 39\%$ | $P(M_1 \mid \bar{W}) = 54\%$ |
| $P(M_2 \mid W) = 41\%$ | $P(M_2 \mid \bar{W}) = 27\%$ |
| $P(M_3 \mid W) = 20\%$ | $P(M_3 \mid \bar{W}) = 19\%$ |
| Total = 100% | Total = 100% |

At this point a very important but standard textbook exercise is completed. Given the knowledge that the item is working properly, we can apportion the credit according to the first column, and given that the item is defective, we can apportion the blame according to the second column.

While this analysis is standard and correct, we are not entirely satisfied. Which machine correlates most with a working item (direct logical correlation), and which machine correlates most with a defective item (inverse logical correlation)? To answer this, let us begin with the analogs of the above computations for the probability of a conditional.


$$\begin{aligned}
P(M_1 \to W) &= 96\% &\qquad P(M_1 \to \bar{W}) &= 64\% \\
P(M_2 \to W) &= 98\% &\qquad P(M_2 \to \bar{W}) &= 62\% \\
P(M_3 \to W) &= 99\% &\qquad P(M_3 \to \bar{W}) &= 81\%
\end{aligned}$$

We may compute the reverse probabilities of conditionals either directly or using the logical analog of Bayes' theorem.

| Credit | Blame |
|---|---|
| $P(W \to M_1) = 43\%$ | $P(\bar{W} \to M_1) = 97\%$ |
| $P(W \to M_2) = 45\%$ | $P(\bar{W} \to M_2) = 95\%$ |
| $P(W \to M_3) = 26\%$ | $P(\bar{W} \to M_3) = 94\%$ |

Although these probabilities are less intuitive, their relative ranking remains the same, as Theorem 9 guarantees. That is, we would come to the same decision as to how to rank the machines in terms of credit and blame whether we used conditional probabilities or probabilities of conditionals.

However, it is the logical correlations, or the probabilities of biconditionals, that we are most interested in. We compute them here.

Biconditionals

$$\begin{aligned}
P(W \leftrightarrow M_1) &= 39\% &\qquad P(\bar{W} \leftrightarrow M_1) &= 61\% \\
P(W \leftrightarrow M_2) &= 43\% &\qquad P(\bar{W} \leftrightarrow M_2) &= 57\% \\
P(W \leftrightarrow M_3) &= 25\% &\qquad P(\bar{W} \leftrightarrow M_3) &= 75\%
\end{aligned}$$

We can express these results equivalently in terms of their effective correlation coefficients:

Logical Correlations

$$\begin{aligned}
\rho(W, M_1) &= -0.22 &\qquad \rho(\bar{W}, M_1) &= 0.22 \\
\rho(W, M_2) &= -0.14 &\qquad \rho(\bar{W}, M_2) &= 0.14 \\
\rho(W, M_3) &= -0.5 &\qquad \rho(\bar{W}, M_3) &= 0.5
\end{aligned}$$

The fact that the logical correlations between $W$ and each machine are all negative indicates that, for a given machine, it is more likely than not that an item was either defective and produced by it, or working and produced by another machine. This does not speak well for the manufacturer. The logical correlations also lead us to rank the machines in the following order: $M_3 < M_1 < M_2$.
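The following sketch, which assumes only the six input probabilities of Example 2, recomputes the tables with exact fractions: total probability for $P(W)$, Bayes' theorem for credit and blame, Theorem 2 for the conditionals, Theorem 11 for the biconditionals, and Definition 15 for $\rho$. The labels are hypothetical. Computed from the unrounded biconditional probabilities, the correlations come out to about $-0.21$, $-0.13$, and $-0.51$ for $M_1$, $M_2$, $M_3$; the tabulated values correspond to the rounded percentages (for example $2(0.39) - 1 = -0.22$), and the ranking $M_3 < M_1 < M_2$ is the same either way.

```python
from fractions import Fraction

# Inputs from Example 2, as exact fractions: P(M_i) and P(W | M_i).
PRIORS = {"M1": Fraction(40, 100), "M2": Fraction(40, 100), "M3": Fraction(20, 100)}
WORKS_GIVEN = {"M1": Fraction(90, 100), "M2": Fraction(95, 100), "M3": Fraction(93, 100)}

def manufacturing_example():
    # Law of total probability for P(W).
    p_w = sum((PRIORS[m] * WORKS_GIVEN[m] for m in PRIORS), Fraction(0))
    p_not_w = 1 - p_w
    rows = {}
    for m, prior in PRIORS.items():
        w_and_m = prior * WORKS_GIVEN[m]         # P(W ∩ M_i)
        notw_and_m = prior - w_and_m             # P(not W ∩ M_i)
        notw_and_notm = p_not_w - notw_and_m     # P(not W ∩ not M_i)
        biconditional = w_and_m + notw_and_notm  # P(W <-> M_i), Theorem 11
        rows[m] = {
            "credit P(M|W)": w_and_m / p_w,
            "blame P(M|notW)": notw_and_m / p_not_w,
            "P(M->W)": (1 - prior) + w_and_m,     # Theorem 2
            "P(M->notW)": (1 - prior) + notw_and_m,
            "P(W->M)": p_not_w + w_and_m,
            "P(notW->M)": p_w + notw_and_m,
            "P(W<->M)": biconditional,
            "rho(W,M)": 2 * biconditional - 1,    # Definition 15
        }
    return p_w, rows

p_w, rows = manufacturing_example()
print(f"P(W) = {float(p_w):.3f}")
for m, row in rows.items():
    print(m, {key: round(float(value), 3) for key, value in row.items()})
```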

7 Conclusion

Probabilities of conditionals can be used in addition to, or even instead of, conditional probabilities in any problem involving Bayes' theorem.

The probability of a biconditional provides a highly intuitive measure of relatedness, or logical correlation, between two events. It measures the degree to which two events are simultaneously true or simultaneously false. One may also define a logical correlation coefficient for two events via the formula

$$\rho(P, Q) = 2P(P \leftrightarrow Q) - 1.$$

It is our opinion that selections from the topics we have discussed here can be incorporated into undergraduate treatments of probability once the standard material has been developed. Probabilities of conditionals, and especially probabilities of biconditionals, can then provide additional insights into many standard problems.

References

[1] Adams, E., "The Logic of Conditionals," Inquiry, Vol. 8 (1965), pp. 166-197.

[2] Copi, I. M., Symbolic Logic, Macmillan Publishing Co., Inc., New York, 1979.

[3] Fulda, J. S., "Material Implication Revisited," The American Mathematical Monthly, Vol. 96, No. 3 (Mar. 1989), pp. 247-250.

[4] Lewis, D., "Probabilities of Conditionals and Conditional Probabilities," The Philosophical Review, Vol. 85, No. 3 (Jul. 1976), pp. 297-315.

[5] Milne, P., "Bruno de Finetti and the Logic of Conditional Events," Brit. J. Phil. Sci., Vol. 48 (1997), pp. 195-232.

[6] Nguyen, Mukaidono, and Kreinovich, "Probability of Implication, Logical Version of Bayes' Theorem, and Fuzzy Logic Operations," Proceedings IEEE-FUZZ (2002), Vol. 1, pp. 530-535.
