PUBLIC

Document Version: 4.3 (14.3.00.00)–2024-03-25

Data Services Supplement for Big Data

© 2024 SAP SE or an SAP aliate company. All rights reserved.

THE BEST RUN

Content

1 About this supplement........................................................4

2 Naming conventions and variables...............................................5

3 Big data in SAP Data Services..................................................10

3.1 Apache Cassandra...........................................................10

Setting ODBC driver conguration on Linux........................................11

Data source properties for Cassandra........................................... 12

3.2 Apache Hadoop............................................................. 13

Hadoop Distributed File System (HDFS)..........................................14

Hadoop Hive .............................................................14

Upload data to HDFS in the cloud...............................................15

Google Cloud Dataproc clusters................................................15

3.3 HP Vertica................................................................. 16

Enable MIT Kerberos for HP Vertica SSL protocol................................... 17

Creating a DSN for HP Vertica with Kerberos SSL................................... 21

Creating HP Vertica datastore with SSL encryption..................................23

Increasing loading speed for HP Vertica..........................................24

HP Vertica data type conversion...............................................25

HP Vertica table source..................................................... 27

HP Vertica target table conguration............................................28

3.4 MongoDB................................................................. 32

MongoDB metadata........................................................32

MongoDB as a source...................................................... 33

MongoDB as a target.......................................................36

MongoDB template documents............................................... 39

Preview MongoDB document data..............................................41

Parallel Scan.............................................................42

Reimport schemas........................................................ 43

Searching for MongoDB documents in the repository................................44

3.5 Apache Impala..............................................................44

Download the Cloudera ODBC driver for Impala ....................................45

Creating an Apache Impala datastore ...........................................46

3.6 PostgreSQL ................................................................48

Datastore options for PostgreSQL..............................................49

Congure the PostgreSQL ODBC driver ..........................................53

Conguring ODBC driver for SSL/TLS X509 PostgresSQL.............................54

2

PUBLIC

Data Services Supplement for Big Data

Content

Import PostgreSQL metadata.................................................55

PostgreSQL source, target, and template tables ....................................56

PostgreSQL data type conversions............................................. 57

3.7 SAP HANA.................................................................58

Cryptographic libraries and global.ini settings .....................................59

X.509 authentication.......................................................61

JWT authentication........................................................62

Bulk loading in SAP HANA................................................... 63

Creating stored procedures in SAP HANA........................................ 65

SAP HANA database datastores ...............................................66

Conguring DSN for SAP HANA on Windows...................................... 72

Conguring DSN for SAP HANA on Unix ......................................... 74

Datatype conversion for SAP HANA.............................................76

Using spatial data with SAP HANA..............................................78

3.8 Amazon Athena.............................................................80

Athena data type conversions.................................................81

ODBC capacities and functions for Athena........................................82

4 Cloud computing services.................................................... 84

4.1 Cloud databases.............................................................84

Amazon Redshift database...................................................85

Azure SQL database....................................................... 96

Google BigQuery..........................................................98

Google BigQuery ODBC.....................................................99

SAP HANA Cloud, data lake database...........................................99

Snowake..............................................................103

4.2 Cloud storages............................................................. 116

Amazon S3..............................................................117

Azure Blob Storage........................................................123

Azure Data Lake Storage....................................................130

Google Cloud Storage le location.............................................134

Data Services Supplement for Big Data

Content

PUBLIC 3

1 About this supplement

This supplement contains information about the big data products that SAP Data Services supports.

The supplement contains information about the following:

• Supported big data products

• Supported cloud computing technologies including cloud databases and cloud storages.

Find basic information in the Reference Guide, Designer Guide, and some of the applicable supplement guides.

For example, to learn about datastores and creating datastores, see the Reference Guide. To learn about Google

BigQuery, refer to the Supplement for Google BigQuery.

4 PUBLIC

Data Services Supplement for Big Data

About this supplement

2 Naming conventions and variables

This documentation uses specic terminology, location variables, and environment variables that describe

various features, processes, and locations in SAP Data Services.

Terminology

SAP Data Services documentation uses the following terminology:

• The terms Data Services system and SAP Data Services mean the same thing.

• The term BI platform refers to SAP BusinessObjects Business Intelligence platform.

• The term IPS refers to SAP BusinessObjects Information platform services.

Note

Data Services requires BI platform components. However, when you don't use other SAP applications,

IPS, a scaled back version of BI, also provides these components for Data Services.

• CMC refers to the Central Management Console provided by the BI or IPS platform.

• CMS refers to the Central Management Server provided by the BI or IPS platform.

Variables

The following table describes the location variables and environment variables that are necessary when you

install and congure Data Services and required components.

Data Services Supplement for Big Data

Naming conventions and variables

PUBLIC 5

Variables Description

INSTALL_DIR

The installation directory for SAP applications such as Data

Services.

Default location:

• For Windows: C:\Program Files (x86)\SAP

BusinessObjects

• For UNIX: $HOME/sap businessobjects

Note

INSTALL_DIR isn't an environment variable. The in-

stallation location of SAP software can be dierent than

what we list for INSTALL_DIR based on the location

that your administrator sets during installation.

BIP_INSTALL_DIR

The directory for the BI or IPS platform.

Default location:

• For Windows: <INSTALL_DIR>\SAP

BusinessObjects Enterprise XI 4.0

Example

C:\Program Files

(x86)\SAP BusinessObjects\SAP

BusinessObjects Enterprise XI 4.0

• For UNIX: <INSTALL_DIR>/enterprise_xi40

Note

These paths are the same for both BI and IPS.

Note

BIP_INSTALL_DIR isn't an environment variable.

The installation location of SAP software can be dierent

than what we list for BIP_INSTALL_DIR based on the

location that your administrator sets during installation.

6

PUBLIC

Data Services Supplement for Big Data

Naming conventions and variables

Variables Description

<LINK_DIR>

An environment variable for the root directory of the Data

Services system.

Default location:

• All platforms

<INSTALL_DIR>\Data Services

Example

C:\Program Files (x86)\SAP

BusinessObjects\Data Services

Data Services Supplement for Big Data

Naming conventions and variables

PUBLIC 7

Variables Description

<DS_COMMON_DIR>

An environment variable for the common conguration di-

rectory for the Data Services system.

Default location:

• If your system is on Windows (Vista and newer):

<AllUsersProfile>\SAP

BusinessObjects\Data Services

Note

The default value of <AllUsersProfile> environ-

ment variable for Windows Vista and newer is

C:\ProgramData.

Example

C:\ProgramData\SAP

BusinessObjects\Data Services

• If your system is on Windows (Older versions such as

XP)

<AllUsersProfile>\Application

Data\SAP BusinessObjects\Data

Services

Note

The default value of <AllUsersProfile> en-

vironment variable for Windows older versions

is C:\Documents and Settings\All

Users.

Example

C:\Documents and Settings\All

Users\Application Data\SAP

BusinessObjects\Data Services

• UNIX systems (for compatibility)

<LINK_DIR>

The installer automatically creates this system environment

variable during installation.

Note

Starting with Data Services 4.2 SP6, users

can designate a dierent default location for

8

PUBLIC

Data Services Supplement for Big Data

Naming conventions and variables

Variables Description

<DS_COMMON_DIR> during installation. If you can't nd

the <DS_COMMON_DIR> in the listed default location, ask

your System Administrator to nd out where your de-

fault location is for <DS_COMMON_DIR>.

<DS_USER_DIR>

The environment variable for the user-specic conguration

directory for the Data Services system.

Default location:

• If you're on Windows (Vista and newer):

<UserProfile>\AppData\Local\SAP

BusinessObjects\Data Services

Note

The default value of <UserProfile> environment

variable for Windows Vista and newer versions is

C:\Users\{username}.

• If you're on Windows (Older versions such as XP):

<UserProfile>\Local

Settings\Application Data\SAP

BusinessObjects\Data Services

Note

The default value of <UserProfile> en-

vironment variable for Windows older ver-

sions is C:\Documents and Settings\

{username}.

Note

The system uses <DS_USER_DIR> only for Data

Services client applications on Windows. UNIX plat-

forms don't use <DS_USER_DIR>.

The installer automatically creates this system environment

variable during installation.

Data Services Supplement for Big Data

Naming conventions and variables

PUBLIC 9

3 Big data in SAP Data Services

SAP Data Services supports many types of big data through various object types and le formats.

Apache Cassandra [page 10]

Apache Cassandra is an open-source data storage system that you can access with SAP Data Services.

Apache Hadoop [page 13]

Use SAP Data Services to connect to Apache Hadoop frameworks including Hadoop Distributive File

Systems (HDFS) and Hive.

HP Vertica [page 16]

Access HP Vertica data by creating an HP Vertica database datastore in SAP Data Services Designer.

MongoDB [page 32]

To read data from MongoDB sources and load data to other SAP Data Services targets, create a

MongoDB adapter.

Apache Impala [page 44]

Create an ODBC datastore to connect to Apache Impala in Hadoop.

PostgreSQL [page 48]

To use your PostgreSQL tables as sources and targets in SAP Data Services, create a PostgreSQL

datastore and import your tables and other metadata.

SAP HANA [page 58]

Process your SAP HANA data in SAP Data Services by creating an SAP HANA database datastore.

Amazon Athena [page 80]

Use the Simba Athena ODBC driver to connect to Amazon Athena.

3.1 Apache Cassandra

Apache Cassandra is an open-source data storage system that you can access with SAP Data Services.

Data Services natively supports Cassandra as an ODBC data source with a DSN connection. Cassandra uses

the generic ODBC driver. Use Cassandra on Windows or Linux operating systems.

Use Cassandra data for the following tasks:

• Use as sources, targets, or template tables

• Preview data

• Query using distinct, where, group by, and order by

• Write scripts using functions such as math, string, date, aggregate, and ifthenelse

Before you use Cassandra with Data Services, ensure that you perform the following setup tasks:

• Add the appropriate environment variables to the al_env.sh le.

• For Data Services on Linux platforms, congure the ODBC driver using the Connection Manager.

10

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Note

For Data Services on Windows platforms, use the generic ODBC driver.

For more information about conguring database connectivity for UNIX and Linux, see the Administrator Guide.

Setting ODBC driver conguration on Linux [page 11]

Use the Connection Manager to congure the ODBC driver for Apache Cassandra on Linux.

Data source properties for Cassandra [page 12]

Complete data source properties in the Connection Manager when you congure the ODBC driver for

SAP Data Services on Linux.

3.1.1Setting ODBC driver conguration on Linux

Use the Connection Manager to congure the ODBC driver for Apache Cassandra on Linux.

Before you complete the following steps, read the topic and subtopics under “Congure database connectivity

for UNIX and Linux” in the Administrator Guide.

Use the GTK+2 library to make a graphical user interface for the Connection Manager. Connection Manager is

a command-line utility. To use it with a UI, install the GTK+2 library. For more information about obtaining and

installing GTK+2, see https://www.gtk.org/ . The following steps are for the UI for Connection Manager.

1. Open a command prompt and set $ODBCINI to a le in which the Connection Manager denes the DSN.

Ensure that the le is readable and writable.

Sample Code

$ export ODBCINI=<dir-path>/odbc.ini

touch $ODBCINI

The Connection Manager uses the $ODBCINI le and other information that you enter for data sources, to

dene the DSN for Cassandra.

Note

Do not point to the Data Services ODBC .ini le.

2. Start the Connection Manager user interface by entering the following command:

Sample Code

$ cd <LINK_DIR>/bin/

$ /DSConnectionManager.sh

Note

<LINK_DIR> is the Data Services installation directory.

3. In Connection Manager, open the Data Sources tab, and click Add to display the list of database types.

4. In the Select Database Type dialog box, select Cassandra and click OK.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 11

The Conguration for... dialog box opens. It contains the absolute location of the odbc.ini le that you set

in the rst step.

5. Provide values for additional connection properties for the Cassandra database type as applicable.

6. Provide the following properties:

• User name

• Password

Note

Data Services does not save these properties for other users.

7. To test the connection, click Test Connection.

8. Click Restart Services to restart services applicable to the Data Services installation location:

If Data Services is installed on the same machine and in the same folder as the IPS or BI platform, restart

the following services:

• EIM Adaptive Process Service

• Data Services Job Service

If Data Services is not installed on the same machine and in the same folder as the IPS or BI platform,

restart the following service:

• Data Services Job Service

Task overview: Apache Cassandra [page 10]

Related Information

Data source properties for Cassandra [page 12]

3.1.2Data source properties for Cassandra

Complete data source properties in the Connection Manager when you congure the ODBC driver for SAP Data

Services on Linux.

The Connection Manager congures the $ODBCINI le based on the property values that you enter in the Data

Sources tab. The following table lists the properties that are relevant for Apache Cassandra.

12

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Data Source settings for Apache Cassandra

Database Type Properties on Data Sources tab

Apache Cassandra

• User Name

• Database password

• Host Name

• Port

• Database

• Unix ODBC Lib Path

• Driver

• Cassandra SSL Certicate Mode [0:disabled|1:one-way|2:two-way]

Depending on the value you choose for the certicate mode, Data Services may

require you to dene some or all of the following options:

• Cassandra SSL Server Certicate File

• Cassandra SSL Client Certicate File

• Cassandra SSL Client Key File

• Cassandra SSL Client Key Password

• Cassandra SSL Validate Server Hostname? [0:disabled|1:enabled]

Parent topic: Apache Cassandra [page 10]

Related Information

Setting ODBC driver conguration on Linux [page 11]

3.2 Apache Hadoop

Use SAP Data Services to connect to Apache Hadoop frameworks including Hadoop Distributive File Systems

(HDFS) and Hive.

Data Services supports Hadoop on both the Linux and Windows platform. For Windows support, Data Services

uses Hortonworks Data Platform (HDP) only. HDP allows data from many sources and formats. See the latest

Product Availability Matrix (PAM) on the SAP Support Portal for the supported versions of HDP.

For information about deploying Data Services on a Hadoop MapR cluster machine, see SAP Note 2404486

.

For information about accessing your Hadoop in the administered SAP Big Data Services, see the Supplement

for SAP Big Data Services.

For complete information about how Data Services supports Apache Hadoop, see the Supplement for Hadoop.

Hadoop Distributed File System (HDFS) [page 14]

Connect to your HDFS data using an HDFS le format or an HDFS le location in SAP Data Services

Designer.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 13

Hadoop Hive [page 14]

Use a Hive adapter datastore or a Hive database datastore in SAP Data Services Designer to connect to

the Hive remote server.

Upload data to HDFS in the cloud [page 15]

Upload data processed with Data Services to your HDFS that is managed by SAP Big Data Services.

Google Cloud Dataproc clusters [page 15]

To connect to an Apache Hadoop web interface running on Google Cloud Dataproc clusters, use a Hive

database datastore and a WebHDFS le location.

3.2.1Hadoop Distributed File System (HDFS)

Connect to your HDFS data using an HDFS le format or an HDFS le location in SAP Data Services Designer.

Create an HDFS le format and le location with your HDFS connection information, such as the account

name, password, and security protocol. Data Services uses this information to access HDFS data during Data

Services processing.

For complete information about how Data Services supports your HDFS, see the Supplement for Hadoop.

Parent topic: Apache Hadoop [page 13]

Related Information

Hadoop Hive [page 14]

Upload data to HDFS in the cloud [page 15]

Google Cloud Dataproc clusters [page 15]

3.2.2Hadoop Hive

Use a Hive adapter datastore or a Hive database datastore in SAP Data Services Designer to connect to the

Hive remote server.

Use the Hive adapter datastore when Data Services is installed within the Hadoop cluster. Use the Hive

adapter datastore for server-named (DSN-less) connections. Also include SSL (or the newer Transport Layer

Security TLS) for secure communication over the network.

Use a Hive database datastore when Data Services is installed on a machine either within or outside of the

Hadoop cluster. Use the Hive database datastore for either a DSN or a DSN-less connection. Also include

SSL/TLS for secure communication over the network.

For complete information about how Data Services supports Hadoop Hive, see the Supplement for Hadoop.

Parent topic: Apache Hadoop [page 13]

14

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Related Information

Hadoop Distributed File System (HDFS) [page 14]

Upload data to HDFS in the cloud [page 15]

Google Cloud Dataproc clusters [page 15]

3.2.3Upload data to HDFS in the cloud

Upload data processed with Data Services to your HDFS that is managed by SAP Big Data Services.

Big Data Services is a Hadoop distribution in the cloud. Big Data Services performs all Hadoop upgrades and

patches for you and provides Hadoop support. SAP Big Data Services was formerly known as Altiscale.

Upload your big data les directly from your computer to Big Data Services. Or, upload your big data les from

your computer to an established cloud account, and then to Big Data Services.

Example

Access data from S3 (Amazon Simple Storage Service) and use the data as a source in Data Services. Then

upload the data to your HDFS that resides in Big Data Service in the cloud.

How you choose to upload your data is based on your use case.

For complete information about accessing your Hadoop account in Big Data Services and uploading big data,

see the Supplement for SAP Big Data Services.

Parent topic: Apache Hadoop [page 13]

Related Information

Hadoop Distributed File System (HDFS) [page 14]

Hadoop Hive [page 14]

Google Cloud Dataproc clusters [page 15]

3.2.4Google Cloud Dataproc clusters

To connect to an Apache Hadoop web interface running on Google Cloud Dataproc clusters, use a Hive

database datastore and a WebHDFS le location.

Use a Hive datastore to browse and view metadata from Hadoop and to import metadata for use in data ows.

To upload processed data, use a Hadoop le location and a Hive template table. Implement bulk loading in the

target editor in a data ow where you use the Hive template table as a target.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 15

For complete information about how SAP Data Services supports Google Cloud Dataproc clusters, see the

Supplement for Hadoop.

Parent topic: Apache Hadoop [page 13]

Related Information

Hadoop Distributed File System (HDFS) [page 14]

Hadoop Hive [page 14]

Upload data to HDFS in the cloud [page 15]

3.3 HP Vertica

Access HP Vertica data by creating an HP Vertica database datastore in SAP Data Services Designer.

Use HP Vertica data as sources or targets in data ows. Implement SSL secure data transfer with MIT Kerberos

to access HP Vertica data securely. Additionally, congure options in the source or target table editors to

enhance HP Vertica performance.

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

SAP Data Services uses MIT Kerberos 5 authentication to securely access an HP Vertica database

using SSL protocol.

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

To enable SSL for HP Vertica database datastores, rst create a data source name (DSN).

Creating HP Vertica datastore with SSL encryption [page 23]

To enable SSL encryption for HP Vertica datastores, you must create a Data Source Name (DSN)

connection.

Increasing loading speed for HP Vertica [page 24]

SAP Data Services doesn't support bulk loading for HP Vertica, but there are settings you can make to

increase loading speed.

HP Vertica data type conversion [page 25]

SAP Data Services converts incoming HP Vertica data types to native data types, and outgoing native

data types to HP Vertica data types.

HP Vertica table source [page 27]

Congure options for an HP Vertica table as a source by opening the source editor in the data ow.

HP Vertica target table conguration [page 28]

Congure options for an HP Vertica table as a target by opening the target editor in the data ow.

16

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

3.3.1Enable MIT Kerberos for HP Vertica SSL protocol

SAP Data Services uses MIT Kerberos 5 authentication to securely access an HP Vertica database using SSL

protocol.

You must have Database Administrator permissions to install MIT Kerberos 5 on your Data Services client

machine. Additionally, the Database Administrator must establish a Kerberos Key Distribution Center (KDC)

server for authentication. The KDC server must support Kerberos 5 using the Generic Security Service (GSS)

API. The GSS API also supports non_MIT Kerberos implementations, such as Java and Windows clients.

Note

Specic Kerberos and HP Vertica database processes are required before you can enable SSL protocol in

Data Services. For complete explanations and processes for security and authentication, consult your HP

Vertica user documentation and the MIT Kerberos user documentation.

MIT Kerberos authorizes connections to the HP Vertica database using a ticket system. The ticket system

eliminates the need for users to enter a password.

Edit conguration or initialization le [page 18]

After you install MIT Kerberos, dene the specic Kerberos properties in the Kerberos conguration or

initialization le.

Generate secure key with kinit command [page 21]

After you've updated the conguration or initialization le and saved it to the client domain, execute the

kinit command to generate a secure key.

Parent topic: HP Vertica [page 16]

Related Information

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Creating HP Vertica datastore with SSL encryption [page 23]

Increasing loading speed for HP Vertica [page 24]

HP Vertica data type conversion [page 25]

HP Vertica table source [page 27]

HP Vertica target table conguration [page 28]

Edit conguration or initialization le [page 18]

Generate secure key with kinit command [page 21]

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 17

3.3.1.1 Edit conguration or initialization le

After you install MIT Kerberos, dene the specic Kerberos properties in the Kerberos conguration or

initialization le.

After you dene Kerberos properties, save the conguration or initialization le to your domain.

Example

Save the initialization le named krb5.ini to C:\Windows.

See the MIT Kerberos documentation for information about completing the Unix krb5.conf property le or

the Windows krb5.ini property le.

Log le locations for Kerberos

The following table describes log le names and locations for the Kerberos log les.

Log le

Property File name and location

Kerberos library log le

default = <value>

krb5libs.log.

Example

default = FILE:/var/log/

krb5libs.log

Kerberos Data Center log le

kdc = <value>

krb5kdc.log.

Example

kdc = FILE:/var/log/

krb5kdc.log

Administrator log le

admin_server = <value>

kadmind.log.

Example

admin_server

= FILE:/var/log/

kadmind.log

Kerberos 5 library settings

The following table describes the Kerberos 5 library settings.

18

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Property Description

default_realm = <VALUE> <VALUE> = the location of your domain.

Example

default_realm = EXAMPLE.COM

Domain location value must be in all capital letters.

dns_lookup_realm = <value>

Set to False: dns_lookup_realm = false

dns_lookup_kdc = <value>

Set to False: dns_lookup_kdc = false

ticket_lifetime = <value> Set number of hours for the initial ticket request.

Example

ticket_lifetime = 24h

The default is 24h.

renew_lifetime = <value> Set number of days a ticket can be renewed after the ticket

lifetime expiration.

Example

renew_lifetime = 7d

The default is 0.

forwardable = <value>

Set to True to forward initial tickets: forwardable =

true

Kerberos realm

The following table describes the Kerberos realm property.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 19

Property Description

<kerberos_realm> = {<subsection_property =

value>}

Location for each property of the Kerberos realm.

Example

EXAMPLE.COM = {kdc=<location>

admin_server=<location>

kpasswd_server=<location>}

Properties include the following:

• KDC location

• Admin Server location

• Kerberos Password Server location

Note

Enter host and server names in lowercase.

Kerberos domain realm

The following table describes the property for the Kerberos domain realm.

Property Description

<server_host_name>=<kerberos_realm> Maps the server host name to the Kerberos realm name. If

you use a domain name, prex the name with a period (.).

Parent topic: Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Related Information

Generate secure key with kinit command [page 21]

20

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

3.3.1.2 Generate secure key with kinit command

After you've updated the conguration or initialization le and saved it to the client domain, execute the kinit

command to generate a secure key.

Example

Enter the following command using your own information for the variables: kinit

<user_name>@<realm_name>

The following table describes the keys that the command generates.

Key Description

-k

Precedes the service name portion of the Kerberos principal.

The default is vertica.

-K

Precedes the instance or host name portion of the Kerberos

principal.

-h

Precedes the machine host name for the server.

-d

Precedes the HP Vertica database name with which to con-

nect.

-U

Precedes the user name of the administrator user.

For complete information about using the kinit command to obtain tickets, see the MIT Kerberos Ticket

Management documentation.

Parent topic: Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Related Information

Edit conguration or initialization le [page 18]

3.3.2Creating a DSN for HP Vertica with Kerberos SSL

To enable SSL for HP Vertica database datastores, rst create a data source name (DSN).

This procedure is for HP Vertica users who have database administrator permissions to perform these steps, or

who have been associated with an authentication method through a GRANT statement.

Note

DSN for HP Vertica is available in SAP Data Services version 4.2 SP7 Patch 1 (14.2.7.1) or later.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 21

Before you perform the following steps, install MIT Kerberos 5 and perform all of the required steps for MIT

Kerberos authentication for HP Vertica. See your HP Vertica documentation in the security and authentication

sections for details.

To create a DSN for HP Vertica with Kerberos SSL, perform the following steps:

1. Open the ODBC Data Source Administrator.

Access the ODBC Data Source Administrator either from the Datastore Editor in Data Services Designer or

directly from your Start menu.

2. Open the System DSN tab and select Add.

3. Choose the applicable HP Vertica driver from the list and select Finish.

4. Open the Basic Settings tab and complete the options as described in the following table.

HP Vertica ODBC DSN Conguration Basic Settings tab

Option Value

DSN Enter the HP Vertica data source name.

Description Optional. Enter a description for this data source.

Database Enter the name of the database that is running on the

server.

Server Enter the server name.

Port Enter the port number on which HP Vertica listens for

ODBC connections.

The default port is 5433.

User Name Enter the database user name.

The database user must have DBADMIN permission

or must be associated with the authentication method

through a GRANT statement.

5. Optional: Select Test Connection.

If the connection fails, either continue with the conguration and x the connection issue later, or

recongure the connection information and test the connection again.

6. Open the Client Settings tab and complete the options as described in the following table.

HP Vertica ODBC DSN Conguration Client Settings tab

Option Value

Kerberos Host Name Enter the name of the host computer where Kerberos is

installed.

Kerberos Service Name Enter the applicable value.

SSL Mode Select Require.

22 PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Option Value

Address Family Preference Select None.

Autocommit Select this option.

Driver String Conversions Select Output.

Result Buer Size (bytes) Enter the applicable value in bytes.

The default value is 131072.

Three Part Naming Select this option.

Log Level Select No logging from the dropdown list.

7. Select Test Connection.

When the connection test is successful, select OK and close the ODBC Data Source Administrator.

Now the HP Vertica DSN that you just created is included in the DSN option in the datastore editor.

Create the HP Vertica database datastore in Data Services Designer and select the DSN that you created.

Task overview: HP Vertica [page 16]

Related Information

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Creating HP Vertica datastore with SSL encryption [page 23]

Increasing loading speed for HP Vertica [page 24]

HP Vertica data type conversion [page 25]

HP Vertica table source [page 27]

HP Vertica target table conguration [page 28]

3.3.3Creating HP Vertica datastore with SSL encryption

To enable SSL encryption for HP Vertica datastores, you must create a Data Source Name (DSN) connection.

Before you perform the following steps, an administrator must install MIT Kerberos 5, and enable Kerberos for

HP Vertica SSL protocol.

Additionally, an administrator must create an SSL DSN using the ODBC Data Source Administrator. For more

information about conguring SSL DSN with ODBC drivers, see Congure drivers with data source name

(DSN) connections in the Administrator Guide.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 23

Note

SSL encryption for HP Vertica is available in SAP Data Services version 4.2 Support Package 7 Patch 1

(14.2.7.1) or later. Enabling SSL encryption slows down job performance.

Note

An HP Vertica database datastore requires that you choose DSN as a connection method. DSN-less

connections aren't allowed for HP Vertica datastore with SSL encryption.

To create an HP Vertica datastore with SSL encryption, perform the following steps in Data Services Designer:

1. Select Project New Datastore.

The Datastore editor opens.

2. Complete the regular HP Vertica database datastore options.

Choose the HP Vertica client version from the Database version list, and enter your user name and

password.

3. Select Use Data Source Name (DSN).

4. Choose the HP Vertica SSL DSN that you created from the Data Source Name list.

5. Complete the applicable Advanced options and save your datastore.

Task overview: HP Vertica [page 16]

Related Information

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Increasing loading speed for HP Vertica [page 24]

HP Vertica data type conversion [page 25]

HP Vertica table source [page 27]

HP Vertica target table conguration [page 28]

3.3.4Increasing loading speed for HP Vertica

SAP Data Services doesn't support bulk loading for HP Vertica, but there are settings you can make to increase

loading speed.

For complete details about connecting to HP Vertica, see Connecting to Vertica in the Vertica

documentation. Make sure to select the correct version.

When you load data to an HP Vertica target in a data ow, the software automatically executes an HP Vertica

statement that contains a COPY Local statement. This statement makes the ODBC driver read and stream

the data le from the client to the server.

24

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

You can further increase loading speed by increasing rows per commit and enable use native connection load

balancing:

1. when you congure the ODBC driver for HP Vertica, enable the option to use native connection load

balancing.

2. In Designer, open the applicable data ow.

3. In the workspace, double-click the HP Vertica datastore target object to open it.

4. Open the Options tab in the lower pane.

5. Increase the number of rows in the Rows per commit option.

Task overview: HP Vertica [page 16]

Related Information

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Creating HP Vertica datastore with SSL encryption [page 23]

HP Vertica data type conversion [page 25]

HP Vertica table source [page 27]

HP Vertica target table conguration [page 28]

3.3.5HP Vertica data type conversion

SAP Data Services converts incoming HP Vertica data types to native data types, and outgoing native data

types to HP Vertica data types.

The following table contains HP Vertica data types and the native data types to which Data Services converts

them.

HP Vertica data type Data Services data type

Boolean Int

Integer, INT, BIGINT, INT8, SMALLINT, TINYINT Decimal

FLOAT Double

Money Decimal

Numeric Decimal

Number Decimal

Decimal Decimal

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 25

HP Vertica data type Data Services data type

Binary, Varbinary, Long Varbinary Blob

Long Varchar Long

Char Varchar

Varchar Varchar

Char(n), Varchar(n) Varchar(n)

DATE Date

TIMESTAMP Datetime

TIMESTAMPTZ Varchar

Time Time

TIMETZ Varchar

INTERVAL Varchar

The following table contains native data types and the HP Vertica data types to which Data Services outputs

them. Data Services outputs the converted data types to HP Vertica template tables or Data_Transfer

transform tables.

Data Services data type HP Vertica data type

Blob Long Varbinary

Date Date

Datetime Timestamp

Decimal Decimal

Double Float

Int Int

Interval Float

Long Long Varchar

Real Float

Time Time

Varchar Varchar

26 PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Data Services data type HP Vertica data type

Timestamp Timestamp

Parent topic: HP Vertica [page 16]

Related Information

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Creating HP Vertica datastore with SSL encryption [page 23]

Increasing loading speed for HP Vertica [page 24]

HP Vertica table source [page 27]

HP Vertica target table conguration [page 28]

3.3.6HP Vertica table source

Congure options for an HP Vertica table as a source by opening the source editor in the data ow.

HP Vertica source table options

Option Description

Table name Species the table name for the source table.

Table owner Species the table owner.

You cannot edit the value. Data Services automatically popu-

lates with the name that you entered when you created the

HP Vertica table.

Datastore name Species the name of the related HP Vertica datastore.

Database type Species the database type.

You cannot edit this value. Data Services automatically pop-

ulates with the database type that you chose when you cre-

ated the datastore.

Parent topic: HP Vertica [page 16]

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 27

Related Information

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Creating HP Vertica datastore with SSL encryption [page 23]

Increasing loading speed for HP Vertica [page 24]

HP Vertica data type conversion [page 25]

HP Vertica target table conguration [page 28]

3.3.7HP Vertica target table conguration

Congure options for an HP Vertica table as a target by opening the target editor in the data ow.

Options tab General options

Option Description

Column comparison Species how the software maps input columns to output

columns:

• Compare by position: Maps source columns to target

columns by position, and ignores column names.

• Compare by name: Maps source columns to target col-

umns by column name. Compare by name is the default

setting.

Data Services issues validation errors when the data types of

the columns do not match.

28 PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Option Description

Number of loaders Species the number of loaders Data Services uses to load

data to the target.

Enter a positive integer. The default is 1.

There are dierent types of loading:

• Single loader loading: Loading with one loader.

• Parallel loading: Loading with two or more loaders.

With parallel loading, each loader receives the number of

rows indicated in the Rows per commit option, and proc-

esses the rows in parallel with other loaders.

Example

For example, if Rows per commit = 1000 and Number of

Loaders = 3:

• First 1000 rows go to the rst loader

• Second 1000 rows go to the second loader

• Third 1000 rows go to the third loader

• Fourth 1000 rows go to the rst loader

Options tab Error handling options

Option Description

Use overow le Species whether Data Services uses a recovery le for rows

that it could not load.

• No: Data Services does not save information about un-

loaded rows. The default setting is No.

• Yes: Data Services loads data to an overow le when it

cannot load a row. When you select Yes, also complete

File Name and File Format.

File name

File format

Species the le name and le format for the overow le.

Applicable only when you select Yes for Use overow le.

Enter a le name or specify a variable

The overow le can include the data rejected and the oper-

ation being performed (write_data) or the SQL command

used to produce the rejected operation (write_sql).

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 29

Update control

Option Description

Use input keys Species whether Data Services uses the primary keys from

the input table when the target table does not have a pri-

mary key.

• Yes: Uses the primary keys from the input table when

the target table does not have primary keys.

• No: Does not use primary keys from the input table

when the target table does not have primary keys. No is

the default setting.

Update key columns Species whether Data Services updates key column values

when it loads data to the target table.

• Yes: Updates key column values when it loads data to

the target table.

• No: Does not update key column values when it loads

data to the target table. No is the default setting.

Auto correct load Species whether Data Services uses auto correct loading

when it loads data to the target table. Auto correct loading

ensures that Data Services does not duplicate the same row

in a target table. Auto correct load is useful for data recovery

operations.

• Yes: Uses auto correct loading.

Note

Not applicable for targets in real time jobs or target

tables that contain LONG columns.

• No: Does not use auto correct loading. No is the default

setting.

For more information about auto correct loading, read about

recovery mechanisms in the Designer Guide.

Ignore columns with value Species a value that might appear in a source column and

that you do not want updated in the target table during auto

correct loading.

Enter a string excluding single or double quotation marks.

The string can include spaces.

When Data Services nds the string in the source column,

it does not update the corresponding target column during

auto correct loading.

30 PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Transaction control

Option Description

Include in transaction Species that this target table is included in the transaction

processed by a batch or real-time job.

• No: This target table is not included in the transaction

processed by a batch or real-time job. No is the default

setting

• Yes: The target table is included in the transaction proc-

essed by a batch or real-time job. Selecting Yes enables

Data Services to commit data to multiple tables as part

of the same transaction. If loading fails for any of the

tables, Data Services does not commit any data to any

of the tables.

Note

Ensure that the tables are from the same datastore.

Data Services does not push down a complete opera-

tion to the database when transactional loading is ena-

bled.

Data Services may buer rows to ensure the correct load

order. If the buered data is larger than the virtual memory

available, Data Services issues a memory error.

If you choose to enable transactional loading, the following

options are not available:

• Rows per commit

• Use overow le and overow le specication

• Number of loaders

Parent topic: HP Vertica [page 16]

Related Information

Enable MIT Kerberos for HP Vertica SSL protocol [page 17]

Creating a DSN for HP Vertica with Kerberos SSL [page 21]

Creating HP Vertica datastore with SSL encryption [page 23]

Increasing loading speed for HP Vertica [page 24]

HP Vertica data type conversion [page 25]

HP Vertica table source [page 27]

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 31

3.4 MongoDB

To read data from MongoDB sources and load data to other SAP Data Services targets, create a MongoDB

adapter.

MongoDB is an open-source document database, which has JSON-like documents called BSON. MongoDB has

dynamic schemas instead of traditional schema-based data.

Data Services needs metadata to gain access to MongoDB data for task design and execution. Use Data

Services processes to generate schemas by converting each row of the BSON le into XML and converting XML

to XSD.

Data Services uses the converted metadata in XSD les to access MongoDB data.

To learn more about Data Services adapters, see the Supplement for Adapters.

MongoDB metadata [page 32]

Use metadata from a MongoDB adapter datastore to create sources, targets, and templates in a data

ow.

MongoDB as a source [page 33]

Use MongoDB as a source in Data Services and atten the nested schema by using the XML_Map

transform.

MongoDB as a target [page 36]

Congure options for MongoDB as a target in your data ow using the target editor.

MongoDB template documents [page 39]

Use template documents as a target in one data ow or as a source in multiple data ows.

Preview MongoDB document data [page 41]

Use the data preview feature in SAP Data Services Designer to view a sampling of data from a

MongoDB document.

Parallel Scan [page 42]

SAP Data Services uses the MongoDB Parallel Scan process to improve performance while it generates

metadata for big data.

Reimport schemas [page 43]

When you reimport documents from your MongoDB datastore, SAP Data Services uses the current

datastore settings.

Searching for MongoDB documents in the repository [page 44]

SAP Data Services enables you to search for MongoDB documents in your repository from the object

library.

3.4.1MongoDB metadata

Use metadata from a MongoDB adapter datastore to create sources, targets, and templates in a data ow.

MongoDB represents its embedded documents and arrays as nested data in BSON les. SAP Data Services

converts MongoDB BSON les to XML and then to XSD. Data Services saves the XSD le to the following

location: <LINK_DIR>\ext\mongo\mcache.

32

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Restrictions and limitations

Data Services has the following restrictions and limitations for working with MongoDB:

• In the MongoDB collection, the tag name can't contain special characters that are invalid for the XSD le.

Example

The following special characters are invalid for XSD les: >, <, &,/, \, #, and so on.

If special characters exist, Data Services removes them.

• Because MongDB data is always changing, the XSD doesn't always reect the entire data structure of all

the documents in the MongoDB.

• Data Services doesn't support projection queries on adapters.

• Data Services ignores any new elds that you add after the metadata schema creation that aren't present

in the common documents.

• Data Services doesn't support push down operators when you use MongoDB as a target.

For more information about formatting XML documents, see the Nested Data section in the Designer Guide. For

more information about source and target objects, see the Data ows section of the Designer Guide.

Parent topic: MongoDB [page 32]

Related Information

MongoDB as a source [page 33]

MongoDB as a target [page 36]

MongoDB template documents [page 39]

Preview MongoDB document data [page 41]

Parallel Scan [page 42]

Reimport schemas [page 43]

Searching for MongoDB documents in the repository [page 44]

3.4.2MongoDB as a source

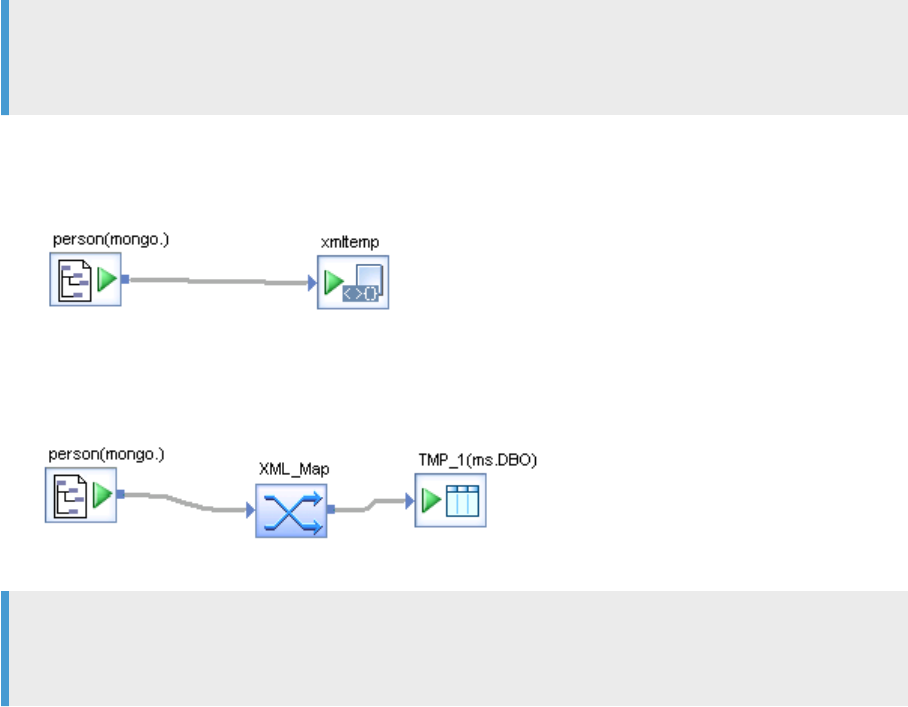

Use MongoDB as a source in Data Services and atten the nested schema by using the XML_Map transform.

The following examples illustrate how to use various objects to process MongoDB sources in data ows.

Example 1: Change the schema of a MongoDB source using the Query transform, and load output to an XML

target.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 33

Note

Specify conditions in the Query transform. Some conditions can be pushed down and others are processed

by Data Services.

Example 2: Set a dataow where Data Services reads the schema and then loads the schema directly into an

XML template le.

Example 3: Flatten a schema using the XML_Map transform and then load the data to a table or at le.

Note

Specify conditions in the XML_Map transform. Some conditions can be pushed down and others are

processed by Data Services.

MongoDB query conditions [page 35]

Use query criteria to retrieve documents from a MongoDB collection.

Push down operator information [page 35]

SAP Data Services processes push down operators with a MongoDB source in specic ways based on

the circumstance.

Parent topic: MongoDB [page 32]

Related Information

MongoDB metadata [page 32]

MongoDB as a target [page 36]

MongoDB template documents [page 39]

Preview MongoDB document data [page 41]

Parallel Scan [page 42]

Reimport schemas [page 43]

Searching for MongoDB documents in the repository [page 44]

MongoDB query conditions [page 35]

34

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Push down operator information [page 35]

3.4.2.1 MongoDB query conditions

Use query criteria to retrieve documents from a MongoDB collection.

Use query criteria as a parameter of the db.<collection>.find() method. Add MongoDB query conditions

to a MongoDB table as a source in a data ow.

To add a MongoDB query format, enter a value next to the Query criteria parameter in the source editor

Adapter Source tab. Ensure that the query criteria is in MongoDB query format. For example, { type:

{ $in: [‘food’, ’snacks’] } }

Example

Given a value of {prize:100}, MongoDB returns only rows that have a eld named “prize” with a value of

100. If you don’t specify the value 100, MongoDB returns all the rows.

Congure a Where condition so that Data Services pushes down the condition to MongoDB. Specify a Where

condition in a Query or XML_Map transform, and place the Query or XML_Map transform after the MongoDB

source object in the data ow. MongoDB returns only the rows that you want.

For more information about the MongoDB query format, consult the MongoDB Web site.

Note

If you use the XML_Map transform, it may have a query condition with a SQL format. Data Services

converts the SQL format to the MongoDB query format and uses the MongoDB specication to push down

operations to the source database. In addition, be aware that Data Services does not support push down of

query for nested arrays.

Parent topic: MongoDB as a source [page 33]

Related Information

Push down operator information [page 35]

Push down operator information [page 35]

3.4.2.2 Push down operator information

SAP Data Services processes push down operators with a MongoDB source in specic ways based on the

circumstance.

Push down behavior:

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 35

• Data Services does not push down Sort by conditions.

• Data Services pushes down Where conditions.

• Data Services does not push down the nested array when you use a nested array in a Where condition.

• Data Services does not support push down operators when you use MongoDB as a target.

Data Services supports the following operators when you use MongoDB as a source:

• Comparison operators: =, !=, >, >=, <, <=, like, and in.

• Logical operators: and and or in SQL query.

Parent topic: MongoDB as a source [page 33]

Related Information

MongoDB query conditions [page 35]

3.4.3MongoDB as a target

Congure options for MongoDB as a target in your data ow using the target editor.

About the <_id> eld

SAP Data Services considers the <_id> eld in MongoDB data as the primary key. If you create a new

MongoDB document and include a eld named <_id>, Data Services recognizes that eld as the unique

BSON ObjectID. If a MongoDB document contains more than one <_id> eld at dierent levels, Data Services

considers only the <_id> eld at the rst level as the BSON Object Id.

The following table contains descriptions for options in the Adapter Target tab of the target editor.

Adapter Target tab options

Option

Description

Use auto correct Species the mode Data Services uses for MongoDB as a target datastore.

• True: Uses Upsert mode for the writing behavior. Updates the document with the same

<_id> eld or it inserts a new <_id> eld.

Note

Selecting True may slow the performance of writing operations.

• False: Uses Insert mode for writing behavior. If documents have the same <_id> eld in

the MongoDB collection, then Data Services issues an error message.

36 PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Option Description

Write concern level

Species the MongoDB write concern level that Data Services uses for reporting the success

of a write operation. Enable or disable dierent levels of acknowledgement for writing opera-

tions.

• Acknowledged: Provides acknowledgment of write operations on a standalone mongod or

the primary in a replica set. Acknowledged is the default setting.

• Unacknowledged: Disables the basic acknowledgment and only returns errors of socket

exceptions and networking errors.

• Replica Set Acknowledged: Guarantees that write operations have propagated success-

fully to the specied number of replica set members, including the primary.

• Journaled: Acknowledges the write operation only after MongoDB has committed the

data to a journal.

• Majority: Conrms that the write operations have propagated to the majority of voting

nodes.

Use bulk

Species whether Data Services executes writing operations in bulk. Bulk may provide better

performance.

• True: Runs write operation in bulk for a single collection to optimize the CRUD eciency.

If the write operation in a bulk is more than 1000, MongoDB automatically splits into

multiple bulk groups.

• False: Does not run write operation in bulk.

For more information about bulk, ordered bulk, and bulk maximum rejects, see the Mon-

goDB documentation at http://help.sap.com/disclaimer?site=http://docs.mongodb.org/man-

ual/core/bulk-write-operations/.

Use ordered bulk

Species the order in which Data Services executes write operations: Serial or Parallel.

• True: Executes write operations in serial.

• False: Executes write operations in parallel. False is the default setting. MongoDB proc-

esses the remaining write operations even when there are errors.

Documents per commit

Species the maximum number of documents that are loaded to a target before the software

saves the data.

• Blank: Uses the maximum of 1000 documents. Blank is the default setting.

• Enter any integer to specify a number other than 1000.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 37

Option Description

Bulk maximum rejects

Species the maximum number of acceptable errors before Data Services fails the job.

Note

Data Services continues to load to the target MongoDB even when the job fails.

Enter an integer. Enter -1 so that Data Services ignores and does not log bulk loading errors.

If the number of actual errors is less than, or equal to the number you specify here, Data

Services allows the job to succeed and logs a summary of errors in the adapter instance trace

log.

Applicable only when you select True for Use ordered bulk.

Delete data before loading

Deletes existing documents in the current collection before loading occurs. Retains all the

conguration, including indexes, validation rules, and so on.

Drop and re-create

Species whether Data Services drops the existing MongoDB collection and creates a new one

with the same name before loading occurs.

• True: Drops the existing MongoDB collection and creates a new one with the same name

before loading. Ignores the value of Delete data before loading. True is the default setting.

• False: Does not drop the existing MongoDB collection and create a new one with the same

name before loading.

This option is available for template documents only.

Use audit

Species whether Data Services creates audit les that contain write operation information.

• True: Creates audit les that contain write operation information. Stores audit les

in the <DS_COMMON_DIR>/adapters/audits/ directory. The name of the le is

<MongoAdapter_instance_name>.txt.

• False: Does not create and store audit les.

Data Services behaves in the following way when a regular load fails:

• Use audit = False: Data Services logs loading errors in the job trace log.

• Use audit = True: Data Services logs loading errors in the job trace log and in the audit log.

Data Services behaves in the following way when a bulk load fails:

• Use audit = False: Data Services creates a job trace log that provides only a summary. It

does not contain details about each row of bad data. There is no way to obtain details

about bad data.

• Use audit = True: Data Services creates a job trace log that provides only a summary but

no details. However, the job trace log provides information about where to nd details

about each row of bad data in the audit le.

Parent topic: MongoDB [page 32]

38

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Related Information

MongoDB metadata [page 32]

MongoDB as a source [page 33]

MongoDB template documents [page 39]

Preview MongoDB document data [page 41]

Parallel Scan [page 42]

Reimport schemas [page 43]

Searching for MongoDB documents in the repository [page 44]

3.4.4MongoDB template documents

Use template documents as a target in one data ow or as a source in multiple data ows.

Template documents are useful in early application development when you design and test a project. After you

import data for the MongoDB datastore, Data Services stores the template documents in the object library.

Find template documents in the Datastore tab of the object library.

When you import a template document, the software converts it to a regular document. You can use the regular

document as a target or source in your data ow.

Note

Template documents are available in Data Services 4.2.7 and later. If you upgrade from a previous version,

open an existing MongoDB datastore and then click OK to close it. Data Services updates the datastore so

that you see the Template Documents node and any other template document related options.

Template documents are similar to template tables. For information about template tables, see the Data

Services User Guide and the Reference Guide.

Creating MongoDB template documents [page 40]

Create MongoDB template documents as targets in data ows, then use the target as a source in a

dierent data ow.

Convert a template document into a regular document [page 41]

SAP Data Services enables you to convert an imported template document into a regular document.

Parent topic: MongoDB [page 32]

Related Information

MongoDB metadata [page 32]

MongoDB as a source [page 33]

MongoDB as a target [page 36]

Preview MongoDB document data [page 41]

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 39

Parallel Scan [page 42]

Reimport schemas [page 43]

Searching for MongoDB documents in the repository [page 44]

3.4.4.1 Creating MongoDB template documents

Create MongoDB template documents as targets in data ows, then use the target as a source in a dierent

data ow.

To use a MongoDB template as the target or source in a data ow, rst use the template as a target. To add a

MongoDB template as a target in a data ow, perform the following steps to create the target:

1. Click the template icon from the tool palette.

2. Click inside a data ow in the workspace.

The Create Template dialog box opens.

3. Enter a name for the template in Template name.

Note

Use the MongoDB collection namespace format: database.collection. Don’t exceed 120 bytes.

4. Select the related MongoDB datastore from the In datastore dropdown list.

5. Click OK.

6. To use the template document as a target in the data ow, connect the template document to the object

that comes before the template document.

Data Services automatically generates a schema based on the object directly before the template

document in the data ow.

Restriction

The eld <_id> is the default primary key of the MongoDB collection. Therefore, make sure that you

correctly congure the <_id> eld in the output schema of the object that comes directly before

the target template. If you don't include <_id> in the output schema, the following error appears

when you view the data: “An element named <_id> present in the XML data input does

not exist in the XML format used to set up this XML source in the data flow

<dataflow>. Validate your XML data.”

7. Click Save.

The template document icon in the data ow changes, and Data Services adds the template document to

the object library. Find the template document in the applicable database node under Templates.

Convert the template document into a regular document by selecting to import the template document in the

object library. Then you can use the template document as a source or a target document in other data ows.

Task overview: MongoDB template documents [page 39]

40

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Related Information

Convert a template document into a regular document [page 41]

3.4.4.2 Convert a template document into a regular

document

SAP Data Services enables you to convert an imported template document into a regular document.

Use one of the following methods to import a MongoDB template document:

• Open a data ow and select one or more template target documents in the workspace. Right-click, and

choose Import Document.

• Select one or more template documents in the Local Object Library, right-click, and choose Import

Document.

The icon changes and the document appears under Documents instead of Template Documents in the object

library.

Note

The Drop and re-create target conguration option is available only for template target documents.

Therefore it is not available after you convert the template target into a regular document.

Parent topic: MongoDB template documents [page 39]

Related Information

Creating MongoDB template documents [page 40]

3.4.5Preview MongoDB document data

Use the data preview feature in SAP Data Services Designer to view a sampling of data from a MongoDB

document.

Choose one of the following methods to preview MongoDB document data:

• Expand an applicable MongoDB datastore in the object library. Right-click the MongoDB document and

select View Data from the dropdown menu.

• Right-click the MongoDB document in a data ow and select View Data from the dropdown menu.

• Click the magnifying glass icon in the lower corner of either a MongoDB source or target object in a data

ow.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 41

Note

By default, Data Services displays a maximum of 100 rows. Change this number by setting the Rows To

Scan option in the applicable MongoDB datastore editor. Entering -1 displays all rows.

For more information about viewing data, see the Designer Guide.

Parent topic: MongoDB [page 32]

Related Information

MongoDB metadata [page 32]

MongoDB as a source [page 33]

MongoDB as a target [page 36]

MongoDB template documents [page 39]

Parallel Scan [page 42]

Reimport schemas [page 43]

Searching for MongoDB documents in the repository [page 44]

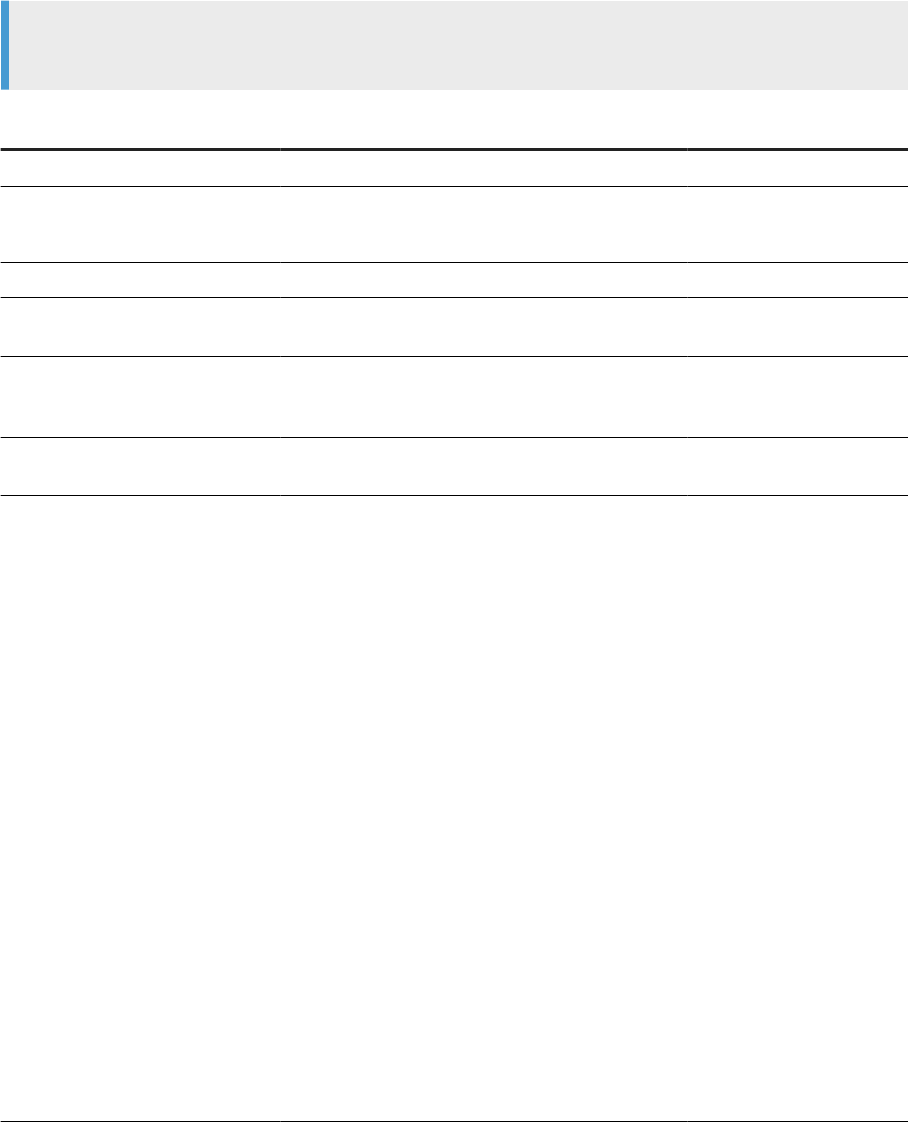

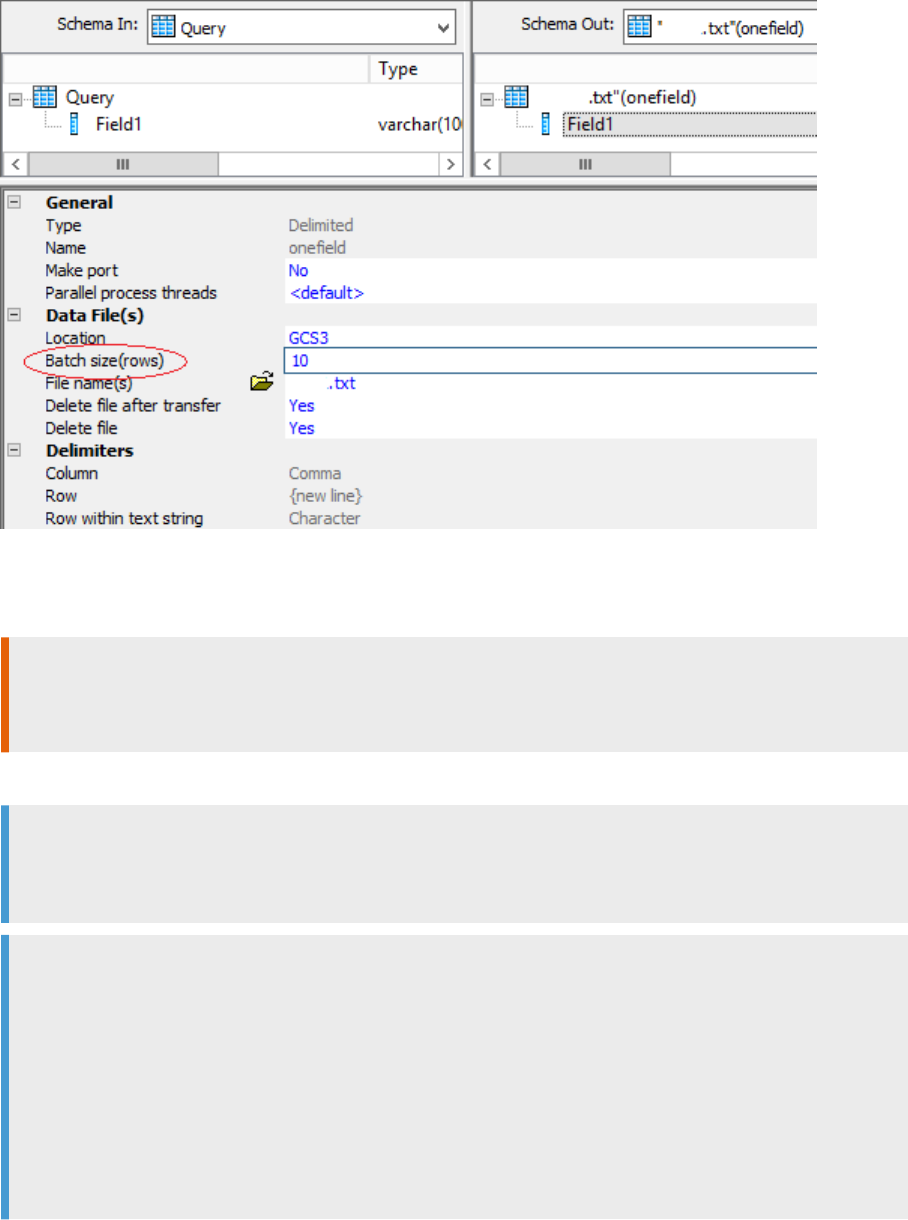

MongoDB adapter datastore conguration options

3.4.6Parallel Scan

SAP Data Services uses the MongoDB Parallel Scan process to improve performance while it generates

metadata for big data.

To generate metadata, Data Services rst scans all documents in the MongoDB collection. This

scanning can be time consuming. However, when Data Services uses the Parallel Scan command

parallelCollectionScan, it uses multiple parallel cursors to read all the documents in a collection. Parallel

Scan can increase performance.

Note

Parallel Scan works with MongoDB server version 2.6.0 and above.

For more information about the parallelCollectionScan command, consult your MongoDB

documentation.

For more information about Mongo adapter datastore conguration options, see the Supplement for Adapters.

Parent topic: MongoDB [page 32]

42

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

Related Information

MongoDB metadata [page 32]

MongoDB as a source [page 33]

MongoDB as a target [page 36]

MongoDB template documents [page 39]

Preview MongoDB document data [page 41]

Reimport schemas [page 43]

Searching for MongoDB documents in the repository [page 44]

3.4.7Reimport schemas

When you reimport documents from your MongoDB datastore, SAP Data Services uses the current datastore

settings.

Reimport a single MongoDB document by right-clicking the document and selecting Reimport from the

dropdown menu.

To reimport all documents, right-click an applicable MongoDB datastore or right-click on the Documents node

and select Reimport All from the dropdown menu.

Note

When you enable Use Cache, Data Services uses the cached schema.

When you disable Use Cache, Data Services looks in the sample directory for a sample BSON le with the

same name. If there is a matching le, the software uses the schema from the BSON le. If there isn't a

matching BSON le in the sample directory, the software reimports the schema from the database.

Parent topic: MongoDB [page 32]

Related Information

MongoDB metadata [page 32]

MongoDB as a source [page 33]

MongoDB as a target [page 36]

MongoDB template documents [page 39]

Preview MongoDB document data [page 41]

Parallel Scan [page 42]

Searching for MongoDB documents in the repository [page 44]

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 43

3.4.8Searching for MongoDB documents in the repository

SAP Data Services enables you to search for MongoDB documents in your repository from the object library.

1. Right-click in any tab in the object library and choose Search from the dropdown menu.

The Search dialog box opens.

2. Select the applicable MongoDB datastore name from the Look in dropdown menu.

The datastore is the one that contains the document for which you are searching.

3. Select Local Repository to search the entire repository.

4. Select Documents from the Object Type dropdown menu.

5. Enter the criteria for the search.

6. Click Search.

Data Services lists matching documents in the lower pane of the Search dialog box. A status line at the

bottom of the Search dialog box shows statistics such as total number of items found, amount of time to

search, and so on.

For more information about searching for objects, see the Objects section of the Designer Guide.

Task overview: MongoDB [page 32]

Related Information

MongoDB metadata [page 32]

MongoDB as a source [page 33]

MongoDB as a target [page 36]

MongoDB template documents [page 39]

Preview MongoDB document data [page 41]

Parallel Scan [page 42]

Reimport schemas [page 43]

3.5 Apache Impala

Create an ODBC datastore to connect to Apache Impala in Hadoop.

Before you create an Apache Impala datastore, download the Cloudera ODBC driver and create a data source

name (DSN). Use the datastore to connect to Hadoop and import Impala metadata. Use the metadata as a

source or target in a data ow.

Before you work with Apache Impala, be aware of the following limitations:

• SAP Data Services supports Impala 2.5 and later.

• SAP Data Services supports only Impala scalar data types. Data Services does not support complex types

such as ARRAY, STRUCT, or MAP.

44

PUBLIC

Data Services Supplement for Big Data

Big data in SAP Data Services

For more information about ODBC datastores, see the Datastores section in the Designer Guide.

For descriptions of common datastore options, see the Designer Guide.

Download the Cloudera ODBC driver for Impala [page 45]

For Linux users. Before you create an Impala database datastore, connect to Apache Impala using the

Cloudera OBDC driver.

Creating an Apache Impala datastore [page 46]

To connect to your Hadoop les and access Impala data, create an ODBC datastore in SAP Data

Services Designer.

3.5.1Download the Cloudera ODBC driver for Impala

For Linux users. Before you create an Impala database datastore, connect to Apache Impala using the Cloudera

OBDC driver.

Perform the following high-level steps to download a Cloudera ODBC driver and create a data source name

(DSN). For more in-depth information, consult the Cloudera documentation.

1. Enable Impala Services on the Hadoop server.

2. Download and install the Cloudera ODBC driver (https://www.cloudera.com/downloads/connectors/

impala/odbc/2-5-26.html

):

Select the driver that is compatible with your platform. For information about the correct driver versions,

see the SAP Product Availability Matrix (PAM).

3. Start DSConnectionManager.sh.

Either open the le or run the following command:

cd $LINK_DIR/bin/

$ ./DSConnectionManager.sh

Example

The following shows prompts and values in DS Connection Manager that includes Kerberos and SSL:

The ODBC ini file is <path to the odbc.ini file>

There are available DSN names in the file:

[DSN name 1]

[DSN name 2]

Specify the DSN name from the list or add a new one:

<New DSN file name>

Specify the User Name:

<Hadoop user name>

Type database password:(no echo)

*Type the Hadoop password. Password does not appear after you type it for security.

Retype database password:(no echo)

Specify the Host Name:

<host name/IP address>

Specify the Port:'21050'

<port number>

Specify the Database:

default

Specify the Unix ODBC Lib Path:

*The Unix ODBC Lib Path is based on where you install the driver.

*For example, /build/unixODBC-2.3.2/lib.

Data Services Supplement for Big Data

Big data in SAP Data Services

PUBLIC 45

Specify the Driver:

/<path>/lib/64/libclouderaimpalaodbc64.so

Specify the Impala Auth Mech [0:noauth|1:kerberos|2:user|3:user-

password]:'0':

1

Specify the Kerberos Host FQDN:

<hosts fully qualified domain name>

Specify the Kerberos Realm:

<realm name>

Specify the Impala SSL Mode [0:disabled | 1:enabled]:'0'

1

Specify the Impala SSL Server Certificate File:

<path to certificate.pem>

Testing connection...

Successfully added database source.

Task overview: Apache Impala [page 44]

Related Information

Creating an Apache Impala datastore [page 46]

3.5.2Creating an Apache Impala datastore

To connect to your Hadoop les and access Impala data, create an ODBC datastore in SAP Data Services

Designer.

Before performing the following steps, enable Impala Services on your Hadoop server. Then download the

Cloudera driver for your platform.

Note

If you didn't create a DSN (data source name) in Windows ODBC Data Source application, you can create a

DSN in the following process.

To create an ODBC datastore for Apache Impala, perform the following steps in Designer:

1. Select

Tools New Datastore.

The datastore editor opens.