HPE Nimble Storage dHCI Deployment Guide for New Installations

HPE Alletra 6000 Series

HPE Nimble Storage Flash Arrays

Published March 2023

Version 6.0.x

Legal Notices

Copyright

© 2019 - 2023 by Hewlett Packard Enterprise Development LP

Notices

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise website.

Hewlett Packard Enterprise believes in being unconditionally inclusive. If terms in this document are recognized as offensive or noninclusive, they are used only for consistency within the product. When the product is updated to remove the terms, this document will be updated.

Acknowledgments

Intel®, Itanium®, Pentium®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation in the United States and other countries.

Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

Adobe® and Acrobat® are trademarks of Adobe Systems Incorporated.

Java® and Oracle® are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

All third-party marks are property of their respective owners.

Publication Date

Monday March 6, 2023 16:22:14

Document ID

vkp1597781985217

Support

All documentation and knowledge base articles are available on HPE InfoSight at https://infosight.hpe.com. To register for HPE InfoSight, click the Create Account link on the main page.

Email: [email protected]

For all other general support contact information, go to https://www.hpe.com/us/en/services/nimble-storage.html.


Documentation Feedback: [email protected]

Contents

HPE Alletra dHCI Overview.............................................................................................................7

HPE Alletra dHCI and HPE Nimble Storage dHCI Solution................................................................................................................................................7

Using This Document................................................................................................................................................................................................................................7

Finding More Information.......................................................................................................................................................................................................................8

Setup Checklist..................................................................................................................................9

Setting up the System...................................................................................................................11

Verifying Your Configuration ...........................................................................................................................................................................................................11

Deploying the HPE ProLiant Servers............................................................................................................................................................................................11

Deploying Switches.................................................................................................................................................................................................................................11

Deploying the HPE Storage Arrays................................................................................................................................................................................................12

HPE ProLiant Network Card Configuration..............................................................................................................................................................................12

Cabling for Array OS Versions 6.0.0.0 and Later ................................................................................................................................................12

Cabling for Array OS Versions Before 6.0.0.0........................................................................................................................................................14

HPE Nimble Storage Network Card Configuration...............................................................................................................................................................15

Server, Array, and Switch Cabling Example..............................................................................................................................................................................16

Network Requirements for Configuring the Ethernet Switch .......................................................................................................................................17

MTU.................................................................................................................................................................................................................................................17

Flow Control...............................................................................................................................................................................................................................17

Jumbo Frame.............................................................................................................................................................................................................................18

VLAN..............................................................................................................................................................................................................................................18

LLDP...............................................................................................................................................................................................................................................18

Network Configuration Example for Using Peer Persistence.........................................................................................................................................18

Configuration Examples................................................................................................................20

HPE M-Series Configuration Example..........................................................................................................................................................................................20

Task 1: Set Up the Initial HPE M-Switch Configuration...................................................................................................................................20

Task 2: Set up the IPL Port-Channel and the MLAG Configuration ........................................................................................................21

Task 3: Configure NTP.........................................................................................................................................................................................................23

Task 4: Create the VLANs ................................................................................................................................................................................................23

Task 5: Add Individual Port Descriptions for Troubleshooting ..................................................................................................................24

Task 6: Assign the VLANs and Configure Jumbo Frames and Flow Control.......................................................................................25

Task 7: Configure Spanning Tree for HPE M-Series..........................................................................................................................................26

Task 8: Uplink into the Existing Network Infrastructure.................................................................................................................................27

Task 9: Secure the Remaining Interfaces for the HPE M-Series.................................................................................................................27

HPE Aruba 8320 or 8325 Configuration Example to Prepare for Network Automation..............................................................................28


Task 1: Set Up the Initial Configuration on the HPE Aruba 83xx Switches.........................................................................................28

Task 2: Set up the VSX Configuration for the HPE Aruba Switches.........................................................................................................30

Task 3: Configure a Management Network VLAN on Ports for HPE Aruba 83xx Switches.......................................................31

Task 4: Interconnect the HPE Aruba Switches with the Customer Network.......................................................................................32

HPE Aruba 8320 or 8325 Configuration Example Using Manual Steps.................................................................................................................32

Task 1: Set Up the Initial Configuration on the HPE Aruba 83xx Switches ........................................................................................33

Task 2: Set Up the VSX Configuration on HPE Aruba 83xx Switches.....................................................................................................34

Task 3: Configure NTP on HPE Aruba 83xx Switches......................................................................................................................................36

Task 4: Create the VLANs needed................................................................................................................................................................................36

Task 5: Add Individual Port Descriptions for Troubleshooting ..................................................................................................................37

Task 6: Assign the VLANs and Configure Jumbo Frames and Flow Control for HPE Aruba 83xx Switches...................38

Task 7: Configure the Spanning Tree.........................................................................................................................................................................40

Task 8: Interconnect the Aruba Switches with the Customer Network..................................................................................................41

Task 9: Secure the Remaining Interfaces for HPE Aruba 83xx Switches..............................................................................................41

HPE Aruba 6300 Configuration Example..................................................................................................................................................................................42

Task 1: Set Up the Initial Configuration on HPE Aruba 6300 Switches ................................................................................................42

Task 2: Set Up the VSF Configuration for HPE Aruba 6300 Switches ..................................................................................................43

Task 3: Configure NTP for HPE Aruba 6300 Switches....................................................................................................................................44

Task 4: Create the VLANs Needed for HPE Aruba 6300 Switches...........................................................................................................44

Task 5: Add Individual Port Descriptions for Troubleshooting HPE Aruba 6300 Switches.......................................................45

Task 6: Assign the VLANs and Configure Jumbo Frames and Flow Control for HPE Aruba 6300 Switches..................46

Task 7: Configure Spanning Tree for HPE Aruba 6300 Switches..............................................................................................................49

Task 8: Secure the Remaining Interfaces for HPE Aruba 6300 Switches.............................................................................................49

HPE FlexFabric Configuration Example......................................................................................................................................................................................50

Task 1: Set up the Initial Configuration on the HPE FlexFabric Switches.............................................................................................50

Task 2: Set Up the IRF Configuration on the HPE FlexFabric Switches.................................................................................................51

Task 3: Configure MAD and Remote Access to the HPE FlexFabric Switches...................................................................................52

Task 4: Configure the IRF Priority for the HPE FlexFabric Switches........................................................................................................53

Task 5: Configure a Local User and Enable SSH for the HPE FlexFabric Switches.........................................................................53

Task 6: Configure NTP for the HPE FlexFabric Switches................................................................................................................................54

Task 7: Create the VLANs for HPE FlexFabric Switches.................................................................................................................................54

Task 8: Configure the Management Interface for the HPE FlexFabric Switches .............................................................................55

Task 9: Specify the HPE FlexFabric Switch Name ..............................................................................................................................................55

Task 10: Convert the Chassis Working Mode for the HPE FlexFabric Switches ..............................................................................55

Task 11: Add Individual Port Descriptions for Troubleshooting HPE FlexFabric Switches........................................................56

Task 12: Assign the VLANs and Configure Jumbo Frames and Flow Control for HPE FlexFabric Switches...................57

Task 13: Configure the Spanning Tree for the HPE FlexFabric Switches.............................................................................................59

Task 14: Secure the Remaining Interfaces for the HPE FlexFabric Switches......................................................................................60

Task 15: Uplink into the Existing Network Infrastructure for FlexFabric Switches.........................................................................60

Cisco Nexus Configuration Example.............................................................................................................................................................................................60

Task 1: Set Up the Initial Configuration on Cisco Nexus Switches............................................................................................................61

Task 2: Enable the License for the Cisco Nexus Switches..............................................................................................................................62

Task 3: Configure NTP for Cisco Nexus Switches................................................................................................................................................63

Task 4: Create the VLANs Needed for Cisco Nexus Switches......................................................................................................................63

Task 5: Add Individual Port Descriptions for Troubleshooting Cisco Nexus Switches..................................................................64


Task 6: Assign the VLANs and Configure Jumbo Frames and Flow Control for Cisco Nexus Switches..............................66

Task 7: Create Port-Channels for Cisco Nexus Switches.................................................................................................................................68

Task 8: Configure Virtual Port-Channels for Cisco Nexus Switches.........................................................................................................68

Task 9: Confirm that the vPC is Active for Cisco Nexus Switches..............................................................................................................69

Task 10: Secure the Remaining Interfaces for Cisco Nexus Switches......................................................................................................69

Task 11: Uplink Cisco Nexus Switches Into the Existing Network Infrastructure.............................................................................70

Installing and Configuring dHCI ..................................................................................................71

FQDN versus IP Addresses.................................................................................................................................................................................................................71

DNS...................................................................................................................................................................................................................................................................71

NTP...................................................................................................................................................................................................................................................................71

DHCP Server................................................................................................................................................................................................................................................71

IP Address Range.....................................................................................................................................................................................................................................72

Hardware iSCSI...........................................................................................................................................................................................................................................72

VMware vCenter Server License......................................................................................................................................................................................................72

Firewall............................................................................................................................................................................................................................................................72

ESXi Servers Discovery.........................................................................................................................................................................................................................73

Enable SSH...................................................................................................................................................................................................................................................73

HPE Storage Connection Manager for VMware.....................................................................................................................................................................74

Deploying dHCI ..............................................................................................................................75

Discover the Array...................................................................................................................................................................................................................................75

Configure the dHCI Solution..............................................................................................................................................................................................................76

Managing the HPE Alletra dHCI Solution...................................................................................79

Add a New Server.....................................................................................................................................................................................................................................79

Create a New VMFS Datastore.........................................................................................................................................................................................................81

Grow a VMFS Datastore.......................................................................................................................................................................................................................82

Clone a VMFS datastore.......................................................................................................................................................................................................................82

Create a snapshot of a VMFS datastore......................................................................................................................................................................................83

Create a vVol datastore........................................................................................................................................................................................................................83

Using HPE InfoSight with dHCI....................................................................................................85

The dHCI Dashboards on HPE InfoSight....................................................................................................................................................................................85

Create an HPE Storage Account......................................................................................................................................................................................................85

Register Your HPE Storage Assets................................................................................................................................................................................................86

Enable Streaming for HPE InfoSight and Cross-Stack Analytics..................................................................................................................................86

Appendix A: Configuration Worksheets....................................................................................87


Appendix B: Resources for Automation.....................................................................................91

Appendix C: Configure Static IP Addresses on the ESXi Hypervisor....................................92

Appendix D: Configure Peer Persistence with dHCI.................................................................93

Limitations When Using Peer Persistence with dHCI..........................................................................................................................................................93

Network Configuration Example for Using Peer Persistence.........................................................................................................................................93

Appendix E: Configure vSphere Enhanced Linked Mode with dHCI.....................................94

Appendix F: HPE FlexFabric 5700 Ethernet Networking Cabling.........................................95

Appendix G: HPE M-Series SN2010M Ethernet Networking Cabling...................................97

Appendix H: Resources...............................................................................................................100

HPE Alletra dHCI Overview

The HPE Alletra dHCI and HPE Nimble Storage dHCI solutions provided by Hewlett Packard Enterprise are disaggregated hyperconverged infrastructure (HCI) platforms that deliver the flexibility of converged infrastructure and the simplicity of HCI. dHCI is a highly available, scalable solution that incorporates a range of products into a portfolio of repeatable and composable technologies.

This guide describes how dHCI combines HPE ProLiant servers with HPE arrays and HPE and Cisco switches to deploy and run a VMware vSphere environment. You can leverage this solution to support a variety of enterprise workloads:

• Datacenter server consolidation and cloud solutions

• Business-critical applications, such as Oracle, Microsoft, and SAP databases and applications

• Workforce-enablement applications, such as virtual desktop infrastructure (VDI), Microsoft Exchange Server, SharePoint Server, and Lync Server

The configuration covered in this deployment guide includes the following components:

• Storage: New HPE Storage all-flash or adaptive flash storage (for iSCSI only)

• Computing resources: New HPE ProLiant servers in one of the following models:

• HPE ProLiant DL380 Gen10

• HPE ProLiant DL360 Gen10

• HPE ProLiant DL325 Gen10

• HPE ProLiant DL325 Gen10 Plus

• HPE ProLiant DL385 Gen10

• HPE ProLiant DL385 Gen10 Plus

• HPE ProLiant DL560 Gen10

• HPE ProLiant DL580 Gen10

• Hypervisor: VMware vSphere Hypervisor (VMware ESXi) 6.7

Note: For the most current list of supported products, refer to the QuickSpecs for HPE Alletra dHCI or the Validated Configuration Matrix, which is available on HPE InfoSight: https://infosight.hpe.com/resources/nimble/validated-configuration-matrix.

This guide helps you understand:

• What you need to do to set up dHCI

• Basic instructions for setting up dHCI

• General information for using dHCI

HPE Alletra dHCI and HPE Nimble Storage dHCI Solution

Unless otherwise specified, the instructions in this guide apply to both HPE Alletra dHCI and the HPE Nimble Storage dHCI solution.

To make this guide easier to use, it refers to the product as "HPE Alletra dHCI" (or just "dHCI") when the information and instructions apply to both HPE Alletra dHCI and the HPE Nimble Storage dHCI solution.

Using This Document

This document provides examples of how you can set up your dHCI configuration. It includes information about setting up switches as well as installing and using dHCI.


Keep in mind that these are basic examples and overviews. Different configurations can require different setup steps. You should always consult the documentation specific to your switches and other components.

You must tailor these instructions to your system. The examples provided can help guide you as you do that.

Finding More Information

The HPE Alletra 6000, Alletra 5000, and Nimble Storage Documentation Portal on HPE InfoSight and the HPE Support Center contain numerous documents to help you set up dHCI to work with HPE Storage arrays.

Note: You can also access this portal by logging on to HPE InfoSight and selecting HPE Alletra 6000, Alletra 5000, Nimble Storage > Resources > Documentation.

This guide focuses on deploying dHCI.

Note: These are general examples and overviews. They are not definitive examples because different configurations can require different setup steps. You should always consult the documentation specific to your switches and other components.

In addition to this document, you might want to review the following documents:

• HPE Nimble Storage dHCI Solution Network Considerations Guide

• HPE Nimble Storage dHCI Solution Security Guide

• HPE Integrated Lights Out (iLO 4) – Configuring the NIC Settings

• Getting Started with dHCI Guide

For system requirements, refer to the QuickSpecs for HPE Alletra dHCI and the Validated Configuration Matrix.

The HPE Support Center contains instructions and reference information for iLO, HPE Aruba switches, HPE FlexFabric switches, and Cisco Nexus switches.

For details about dHCI components, such as switches or servers, refer to the documentation provided for those components.

Information about other resources is provided in Appendix H: Resources on page 100.


Setup Checklist

The following information serves as a checklist that you can use to confirm that everything is in place before you start the deployment.

Table 1: Setup checklist

Task: Configuration worksheet
Section in this Guide: Appendix A: Configuration Worksheets on page 87
Comments: Be sure to fill out your worksheets before starting deployment.

Task: Solution cabling (cabling the network, storage, and server components)
Section in this Guide:
• HPE ProLiant Network Card Configuration on page 12
• HPE Nimble Storage Network Card Configuration on page 15
• Server, Array, and Switch Cabling Example on page 16
Comments: Cabling is the source of the most common problems. Before deploying the solution, make sure that you have reviewed your cabling and that your solution is cabled appropriately. For more information, visit the HPE InfoSight Welcome Center.

Task: LLDP
Section in this Guide: Network Requirements for Configuring the Ethernet Switch on page 17
Comments: Make sure that LLDP is enabled on your switch.

Task: Switch configuration
Section in this Guide: Network Requirements for Configuring the Ethernet Switch on page 17
Comments: The HPE Storage dHCI solution does not support VLAN tagging for iSCSI and management traffic:
• iSCSI traffic must be untagged for array and ESXi iSCSI interfaces.
• Management traffic must be untagged for array, ESXi management interfaces, and iLO.
If you plan to use jumbo frames, you must configure them on your switch.

Task: DNS/NTP
Section in this Guide:
• DNS on page 71
• NTP on page 71
Comments: DNS and NTP are required for deploying the HPE Storage dHCI solution.

Task: DHCP server
Section in this Guide: DHCP Server on page 71
Comments: A DHCP server is not required, but Hewlett Packard Enterprise strongly recommends that you use one. Each ESXi host must have a temporary IP address in the management VLAN in order to be discovered. If you do not have a DHCP server in the management VLAN, you can manually configure a temporary IP address on each ESXi host. For more information, see Appendix C: Configure Static IP Addresses on the ESXi Hypervisor on page 92.

Task: ESXi servers discovery
Section in this Guide: ESXi Servers Discovery on page 73
Comments: The array uses SLP to discover the ESXi servers. If no servers are discovered during deployment, make sure that:
• IGMP snooping is turned off on the dHCI-management VLAN of the switches.
• Each ESXi host has an IP address on the management VLAN.
• You have not cabled any 1 Gb ports on ESXi hosts for the deployment.
• The VMNIC for the management interface is the first port connected at 10 Gb.
Afterward, restart the SLP server on each ESXi host.

Task: Firewall
Section in this Guide: Firewall on page 72
Comments: If you are using an existing vCenter, ensure that the firewall rules allow the following types of communication:
• HPE Storage array communication to vCenter through port 443
• vCenter communication to the HPE Storage array through port 443
• vCenter communication with ESXi management traffic (for more information, see VMware KB 1005189)

Setup Checklist 10

Documentation Feedback: [email protected]

Setting up the System

To prepare for deployment and then deploy the solution, you must complete the following tasks:

• Understand the physical infrastructure layout.

• Verify that your system meets the dHCI requirements.

• Fill out the configuration worksheets.

• Configure the network.

• Deploy the dHCI solution:

• Initialize and configure the HPE arrays.

• (New installation) Deploy a new VMware vCenter Server instance when you are creating a new dHCI configuration.

• (Existing installation) Use an existing VMware vCenter instance when you are adding to an existing dHCI configuration.

• Add HPE ProLiant servers into the dHCI environment.

• Create a VMware vSphere Virtual Machine File System (VMFS) or VMware vSphere Virtual Volumes (vVols).

• (Existing Configurations Only) Migrate virtual machines (VMs) to the newly created datastores.

Keep in mind:

• HPE supports the use of an iLO shared connection instead of an iLO dedicated port.

• HPE recommends that you reserve a dedicated pair of 10 Gbps ports for iSCSI traffic only. If your servers do not have a pair of

10 Gbps ports available for iSCSI traffic, you must trunk the iSCSI VLAN on your existing 10 Gbps ports.

Verifying Your Configuration

Before you set up dHCI, it is a good practice to verify your configuration.

You can use the QuickSpecs for HPE Alletra dHCI to verify your configuration.

In addition, the HPE Storage Validated Configuration Matrix on HPE InfoSight also contains information about supported

configurations and infrastructure components used in the solution and described in this document, including specific software

and firmware versions.

After you install dHCI, you can use the One-Click Update feature included with it to automatically update the array OS, ESXi,

HPE Storage Connection Manager for VMware, and Gen10 Service Pack for ProLiant (SPP) when new versions of those

components are available. The dHCI catalogs provide information about the supported software versions. Instructions for

using One-Click Update are in Getting Started with dHCI.

Deploying the HPE ProLiant Servers

The HPE Support Center provides information about site requirements, installation instructions, and other general reference

materials for HPE ProLiant Servers.

Note: Although this guide does not cover iLO shared connections, HPE supports the use of an iLO shared connection

instead of an iLO dedicated port. For more information, see HPE Integrated Lights Out (iLO 4) – Configuring the NIC

Settings, which is available from the HPE Support Center website.

Deploying Switches

The HPE Support Center contains site requirements, installation instructions, and reference materials for the following switches:


• HPE M-Series

• HPE Aruba 83xx and 6300

• HPE FlexFabric 57x0 and 594x Series

If you are using Cisco Nexus switches, refer to the Cisco Nexus Series on the Cisco Nexus website for site requirements,

installation instructions, and reference material.

For general examples of setting up these switches, see Configuration Examples on page 20.

Deploying the HPE Storage Arrays

The HPE Alletra 6000, Alletra 5000, Nimble Storage Documentation Portal and HPE Support Center contain information to

help you deploy and work with HPE Storage arrays. You can find site requirements, installation instructions, and other general

reference materials on the portal.

If you are using an HPE Alletra array and Data Services Cloud Console, you must set up a cloud account. Information about

setting up a cloud account for Data Services Cloud Console can be found in the Data Services Cloud Console Getting Started

Guide, which is available on the Documentation Portal (https://infosight.hpe.com/resources/nimble/docs ). The path to this

guide is HPE InfoSight > HPE Alletra 6000, Alletra 5000, Nimble Storage > Documentation > Getting Started.

HPE ProLiant Network Card Configuration

You must follow the dHCI cabling methodology when you set up the HPE ProLiant network card configuration.

Note: The QuickSpecs for HPE Alletra dHCI contains the most current information about supported HPE ProLiant

DL server models.

When you deploy HPE Alletra dHCI, the stack setup wizard configures the compute nodes so that there are four network ports

for management and iSCSI traffic. It also configures the iLO port when iLO is running in dedicated mode. These four ports

must be 10 Gbps or faster. HPE recommends that all the ports be configured to the same speed.

The ProLiant DL servers discover the network cards based on the server model. Models that use a FlexLOM card discover that

FlexLOM card before discovering any of the installed PCI network cards. Models that use an OCP card discover that card after

discovering all the other installed PCI cards.

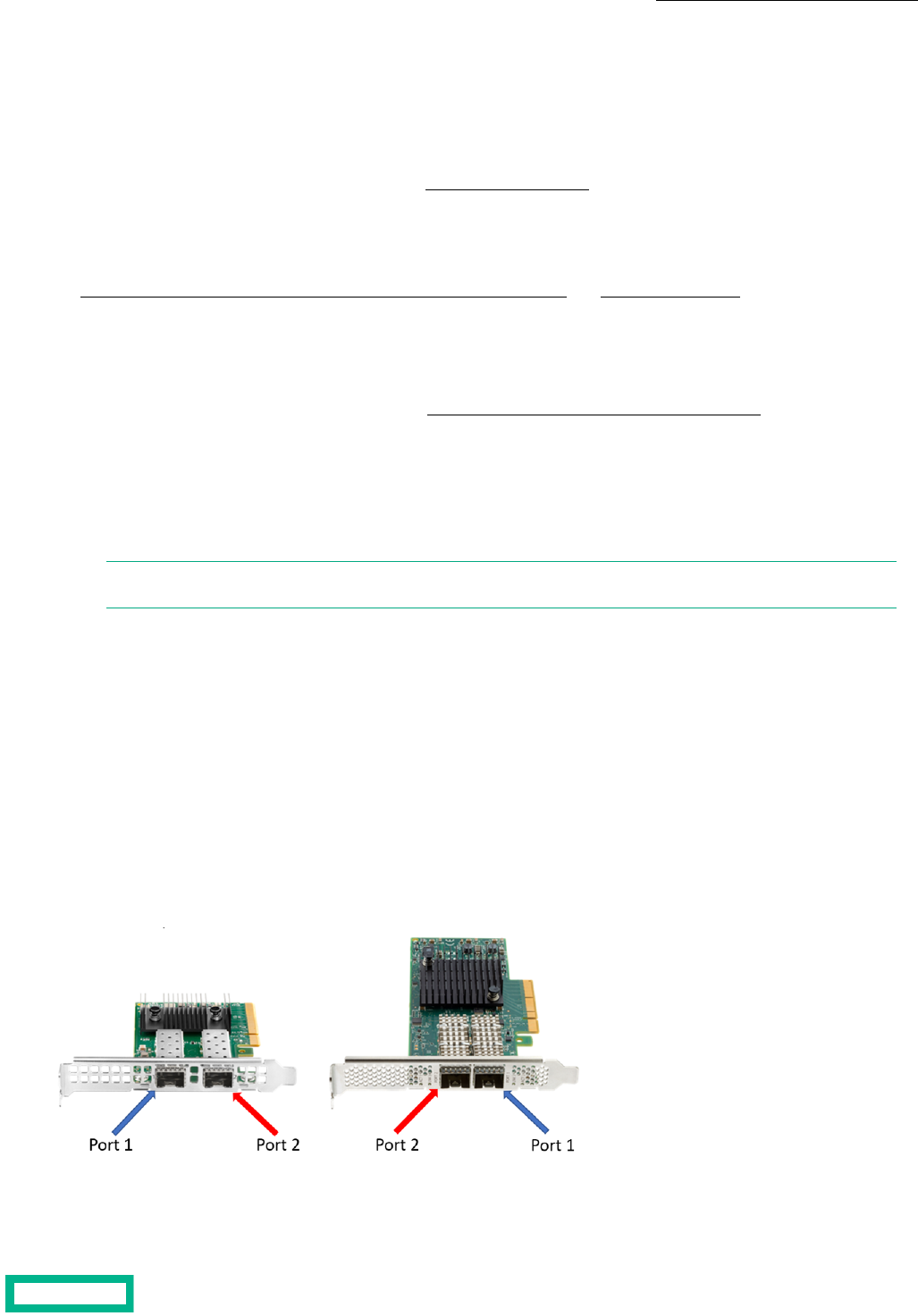

The ports are discovered based on the models of network cards that are installed. The order of ports varies by network

interface card. The ports should be discovered in the order in which they are labeled.

Because the order of the ports on a NIC can vary from model to model, you should record the specific port labeling to ensure

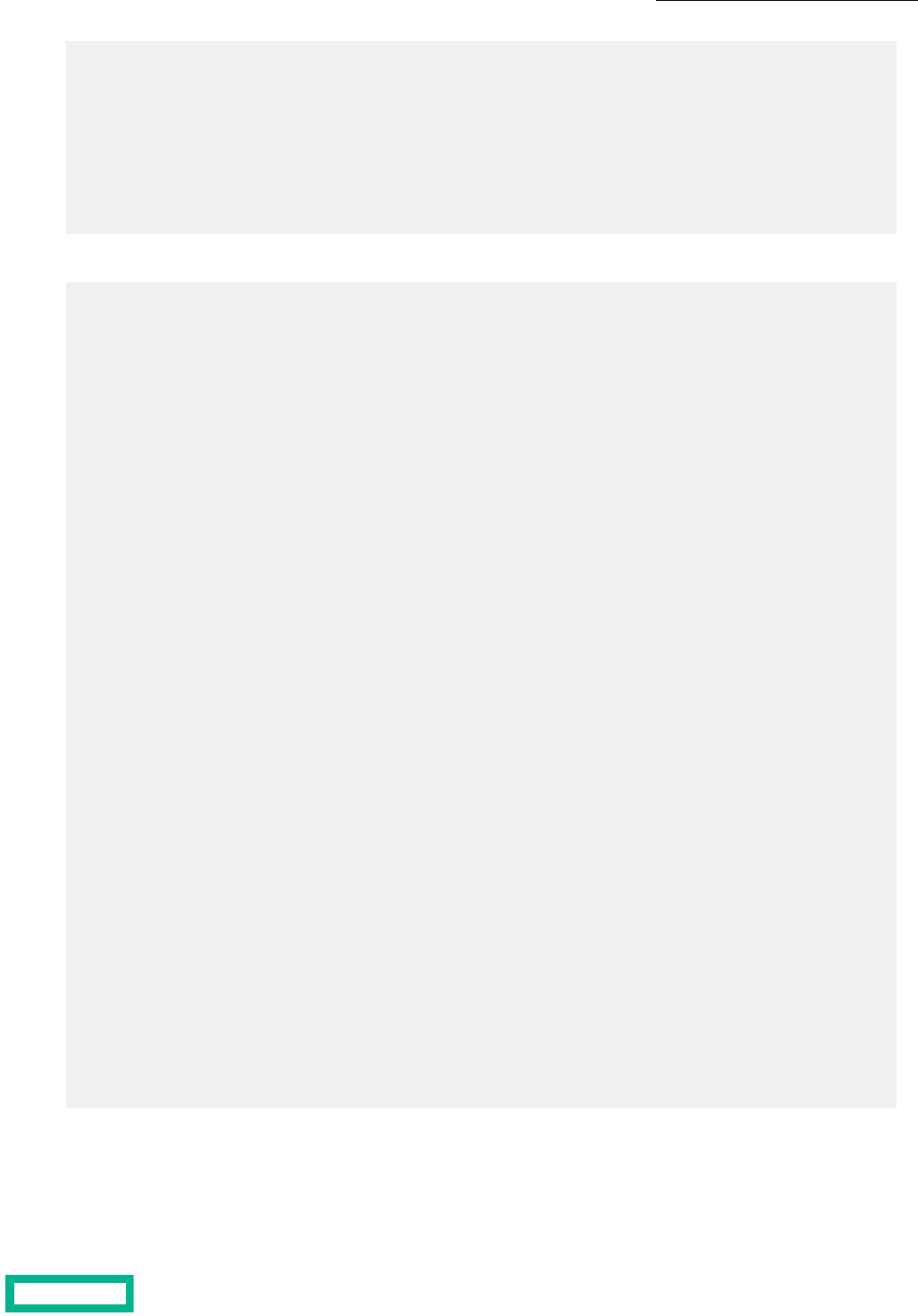

that you cable it correctly. For example, compare the port order on the following two 10/25 GbE NICs:

Figure 1: Example of port differences on 10/25 GbE NICs
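The discovery rules above can be modeled in a few lines of Python to help you plan which physical ports become ports 1 through 4. This is a minimal sketch under stated assumptions, not an HPE tool: the `Nic` class and the `discovery_order` and `deployment_ports` functions are hypothetical, `ports` counts only the ports you cable for deployment, and PCI cards are assumed to be discovered in slot order, which you should confirm for your server model.

```python
from dataclasses import dataclass

# Hypothetical model of a server network card (illustrative only).
@dataclass
class Nic:
    name: str        # label used in this sketch, e.g. "FlexLOM" or "PCI NIC"
    form: str        # "flexlom", "pci", or "ocp"
    slot: int        # assumption: PCI cards are discovered in slot order
    ports: int       # number of ports cabled for deployment
    speed_gbps: int  # port speed in Gbps

# FlexLOM cards are discovered first, then PCI cards, then OCP cards.
FORM_RANK = {"flexlom": 0, "pci": 1, "ocp": 2}

def discovery_order(nics):
    """Return (card name, port label) pairs in discovery order."""
    ordered = sorted(nics, key=lambda n: (FORM_RANK[n.form], n.slot))
    return [(n.name, port) for n in ordered for port in range(1, n.ports + 1)]

def deployment_ports(nics, max_ports=4):
    """Return the first four discovered ports; ports slower than 10 Gbps are ignored."""
    fast = [n for n in nics if n.speed_gbps >= 10]
    return discovery_order(fast)[:max_ports]

gen10_plus = [Nic("OCP", "ocp", 0, 2, 25), Nic("PCI NIC", "pci", 1, 2, 25)]
print(deployment_ports(gen10_plus))
```

For a Gen10 Plus server with one PCI NIC and one OCP card, the sketch lists the PCI NIC ports first and the OCP ports last, which matches the Gen10 Plus port tables in this guide.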

Cabling for Array OS Versions 6.0.0.0 and Later


When dHCI is deployed on an array running release 6.0.0.0 or later, the deployment tool uses ports 1 and 3 for management

and ports 2 and 4 for iSCSI 1 and iSCSI 2.

Note: When the ports are discovered in VMware ESXi and your array is running array OS 6.1.0.0 or later, the deployment

tool uses ports 1 and 3 for management (MGMT) and network (VM Network), port 2 for iSCSI 1, and port 4 for iSCSI 2.

The following tables provide information about the default port assignments and the switch they must connect to. This

information depends on the server generation.

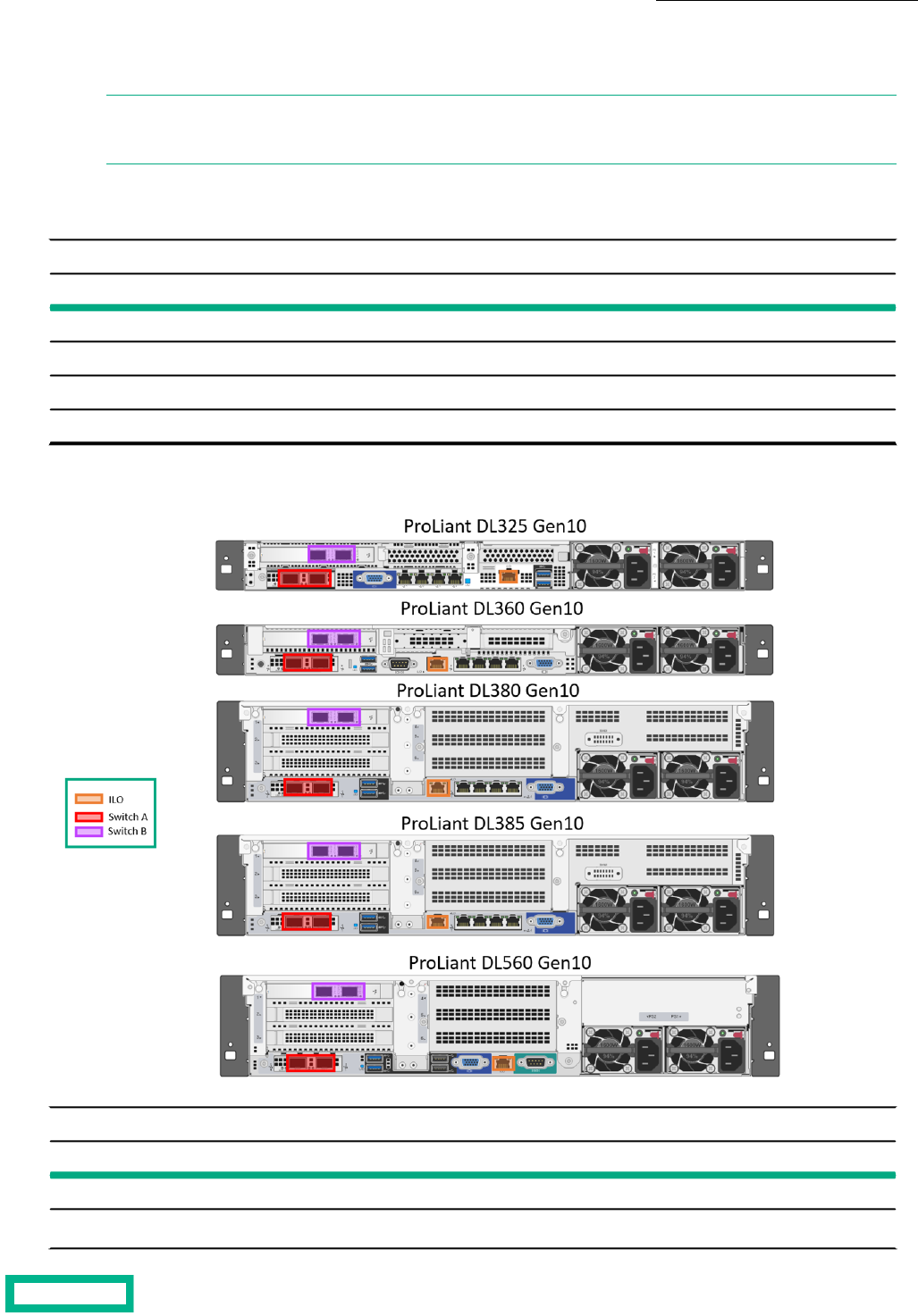

HPE ProLiant DL Gen10 Servers for Array OS Versions 6.0.x and Later

| Network Card and Port | Port Order | Role | Switch |
|---|---|---|---|
| FlexLOM Port 1 | Port 1 | Management | Switch A |
| FlexLOM Port 2 | Port 2 | iSCSI 1 | Switch A |
| PCI NIC Port 1 | Port 3 | Management | Switch B |
| PCI NIC Port 2 | Port 4 | iSCSI 2 | Switch B |

Figure 2: Example 1 of Port Assignments for HPE ProLiant DL Servers for Array OS Versions 6.0.x and Above

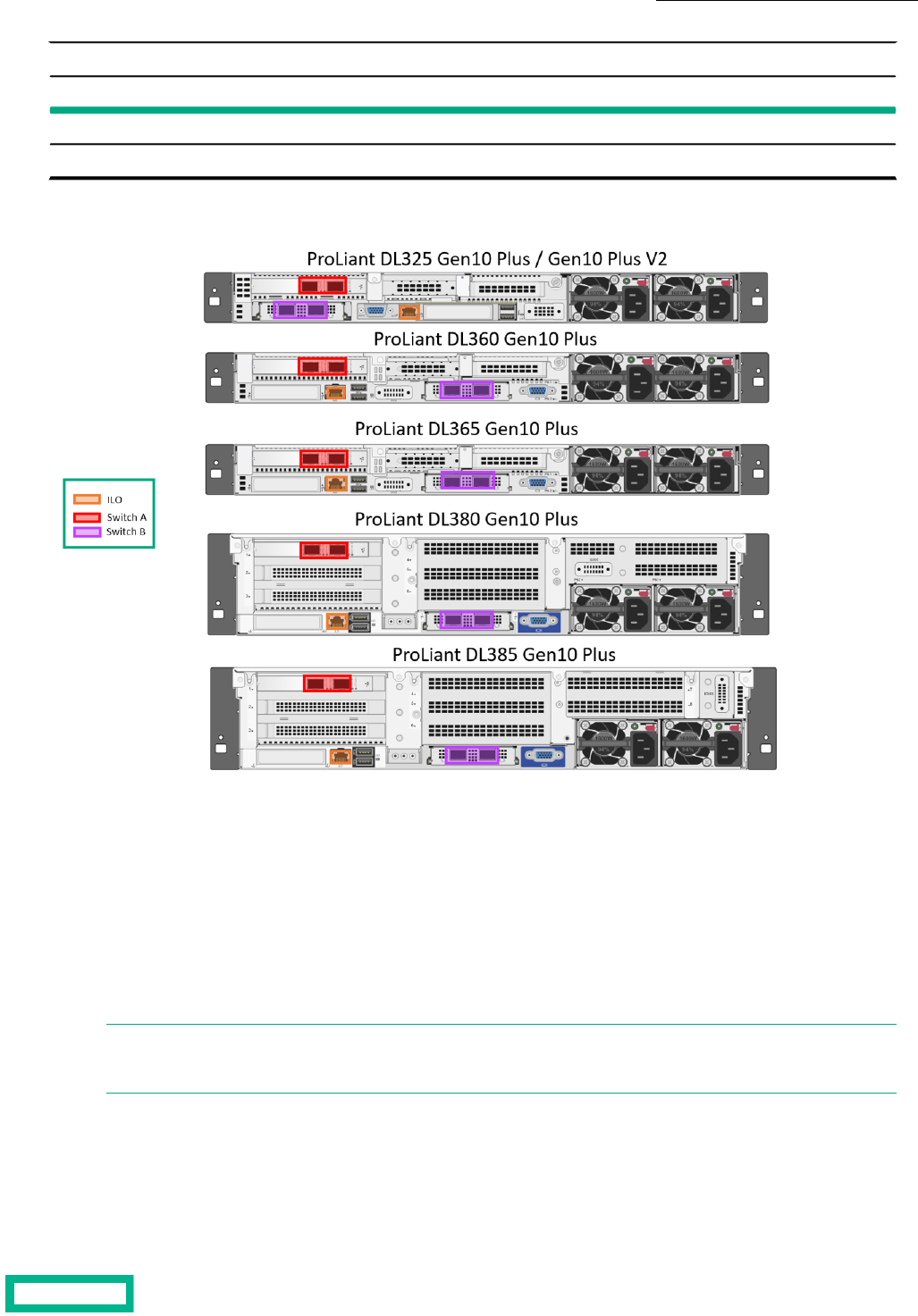

HPE ProLiant DL Gen10 Plus / Gen10 Plus v2 Servers for Array OS Versions 6.0.0.0 and Later

| Network Card and Port | Port Order | Role | Switch |
|---|---|---|---|
| PCI NIC Port 1 | Port 1 | Management | Switch A |
| PCI NIC Port 2 | Port 2 | iSCSI 1 | Switch A |
| OCP Port 1 | Port 3 | Management | Switch B |
| OCP Port 2 | Port 4 | iSCSI 2 | Switch B |

Figure 3: Example 2 of Port Assignments for HPE ProLiant DL Servers for Array OS Versions 6.0.x and Above

You do not need to use the FlexLOM or OCP network cards for deployment. Installing and deploying on multiple PCI NICs that

run at 10 Gbps or faster is supported, and the same port assignment scheme is used for deployment. Plan carefully which

ports on which NICs you connect to ensure maximum resiliency.

If the first discovered card that runs at 10 Gbps or faster has more than two ports, connect only two ports on each of the two

cards at the time of deployment. The best practice is to connect only the two ports on each card that dHCI will use for

management and iSCSI traffic. Any additional 10 Gbps ports beyond the first four are not configured during deployment, but

you can configure them after you deploy dHCI.

dHCI does not support 1 Gbps ports. It does not use the four 1 Gbps ports that are embedded in the HPE ProLiant series.

These ports will be ignored if they are active.

Note: When you add servers to an existing dHCI deployment, the original cabling schema is used. This means that if the

array was running an OS version prior to 6.0.0.0 when dHCI was deployed, and you later update it to 6.1.x or later, dHCI

continues to use the pre-6.0.0.0 cabling methodology.

Cabling for Array OS Versions Before 6.0.0.0

If the operating system version for your array is prior to 6.0.0.0, the dHCI deployment tool uses ports 1 and 2 for Management

and ports 3 and 4 for iSCSI 1 and iSCSI 2.

Note: If your storage network card configuration differs from the one described in HPE Nimble Storage Network Card

Configuration on page 15, remember that, if your array is running a version of the array OS prior to 6.0.0.0, the deployment

tool uses ports 1 and 2 for Management (MGMT) and ports 3 and 4 for iSCSI 1 and iSCSI 2 when the ports are discovered

in VMware ESXi.

The following table provides the default values:

HPE ProLiant DL Gen10 Servers for Array OS Versions Prior to 6.0.0.0

| Network Card and Port | Port | Role | Switch |
|---|---|---|---|
| FlexLOM Port 1 | Port 1 | Management | Switch A |
| FlexLOM Port 2 | Port 2 | Management | Switch B |
| PCI NIC Port 1 | Port 3 | iSCSI 1 | Switch A |
| PCI NIC Port 2 | Port 4 | iSCSI 2 | Switch B |

HPE ProLiant DL Gen10 Plus / Gen10 Plus v2 Servers for Array OS Versions Prior to 6.0.0.0

| Network Card and Port | Port | Role | Switch |
|---|---|---|---|
| PCI NIC Port 1 | Port 1 | Management | Switch A |
| PCI NIC Port 2 | Port 2 | Management | Switch B |
| OCP Port 1 | Port 3 | iSCSI 1 | Switch A |
| OCP Port 2 | Port 4 | iSCSI 2 | Switch B |
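Because the cabling scheme depends on the array OS version that was running when dHCI was first deployed, it can help to encode the port tables as data. The following Python sketch is illustrative only: the dictionary and function names are hypothetical, and it assumes purely numeric dotted version strings such as `6.0.0.0`.

```python
# Port order -> (role, switch), taken from the port assignment tables in this guide.
ROLES_6_0_AND_LATER = {
    1: ("Management", "Switch A"),
    2: ("iSCSI 1", "Switch A"),
    3: ("Management", "Switch B"),
    4: ("iSCSI 2", "Switch B"),
}
ROLES_BEFORE_6_0 = {
    1: ("Management", "Switch A"),
    2: ("Management", "Switch B"),
    3: ("iSCSI 1", "Switch A"),
    4: ("iSCSI 2", "Switch B"),
}

def parse_version(text):
    """Convert "6.0.0.0" to (6, 0, 0, 0), padding short versions with zeros."""
    parts = [int(p) for p in text.split(".")]
    parts += [0] * (4 - len(parts))
    return tuple(parts)

def port_roles(version_at_deployment):
    """Select the cabling scheme from the array OS version used for the original deployment."""
    if parse_version(version_at_deployment) >= (6, 0, 0, 0):
        return ROLES_6_0_AND_LATER
    return ROLES_BEFORE_6_0

# An array first deployed on a 5.x release keeps the pre-6.0.0.0 scheme after an update.
print(port_roles("5.2.1.0")[2])
```

When you add servers to an existing deployment, pass the version that was running at the original deployment rather than the current version.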


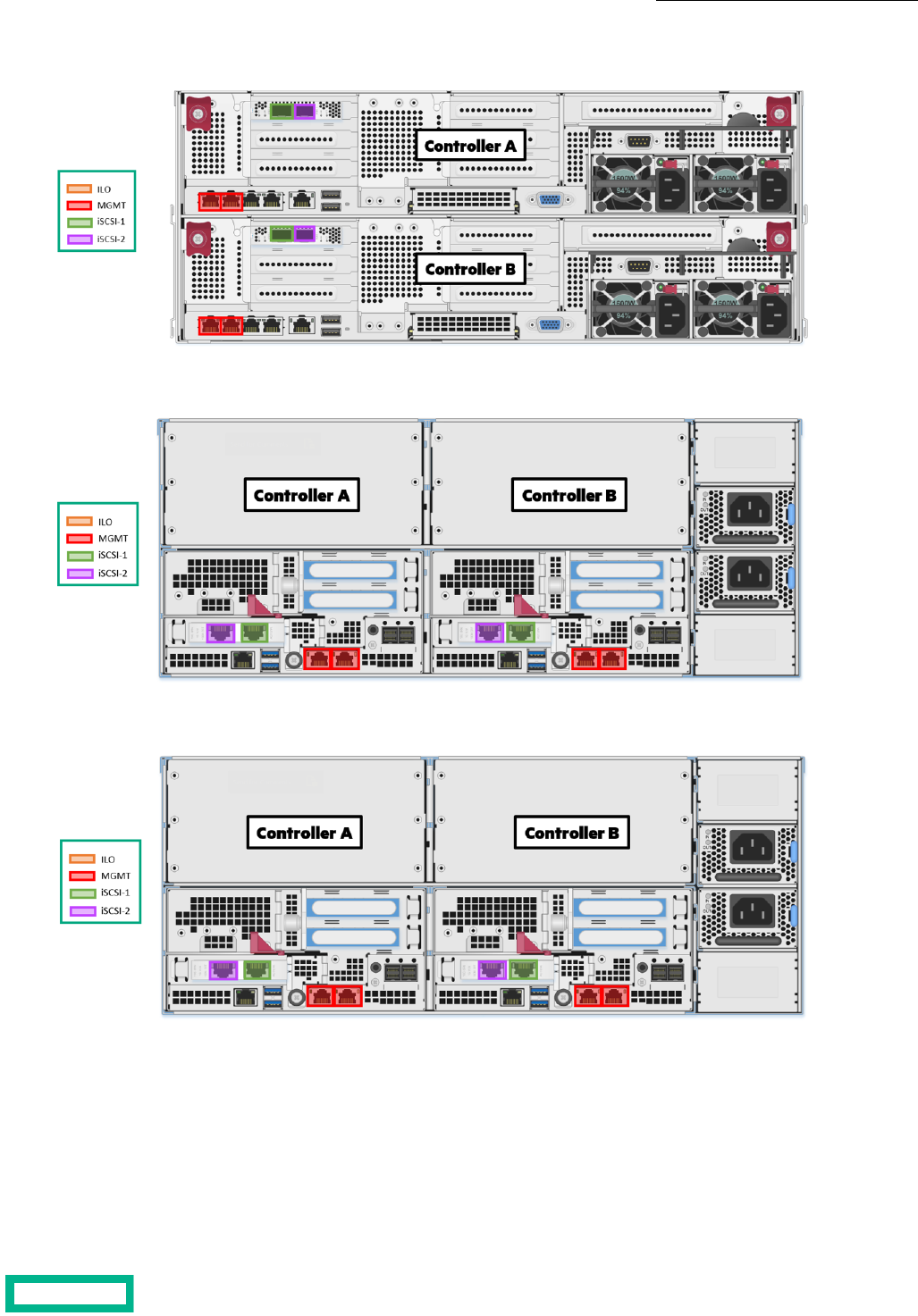

HPE Nimble Storage Network Card Configuration

You must make sure your HPE Storage network card is correctly configured for the Management (MGMT) and iSCSI 1 and

iSCSI 2 ports.

The MGMT and iSCSI ports are shown in the following figures. Your array might differ from the ones shown. The installation

guide for your array provides instructions for cabling.

Note: The HPE Alletra 6000, Alletra 5000, Nimble Storage Documentation Portal on HPE InfoSight and the HPE

Support Center contain the installation guides and numerous other documents to help you as you set up dHCI to

work with HPE Storage arrays.


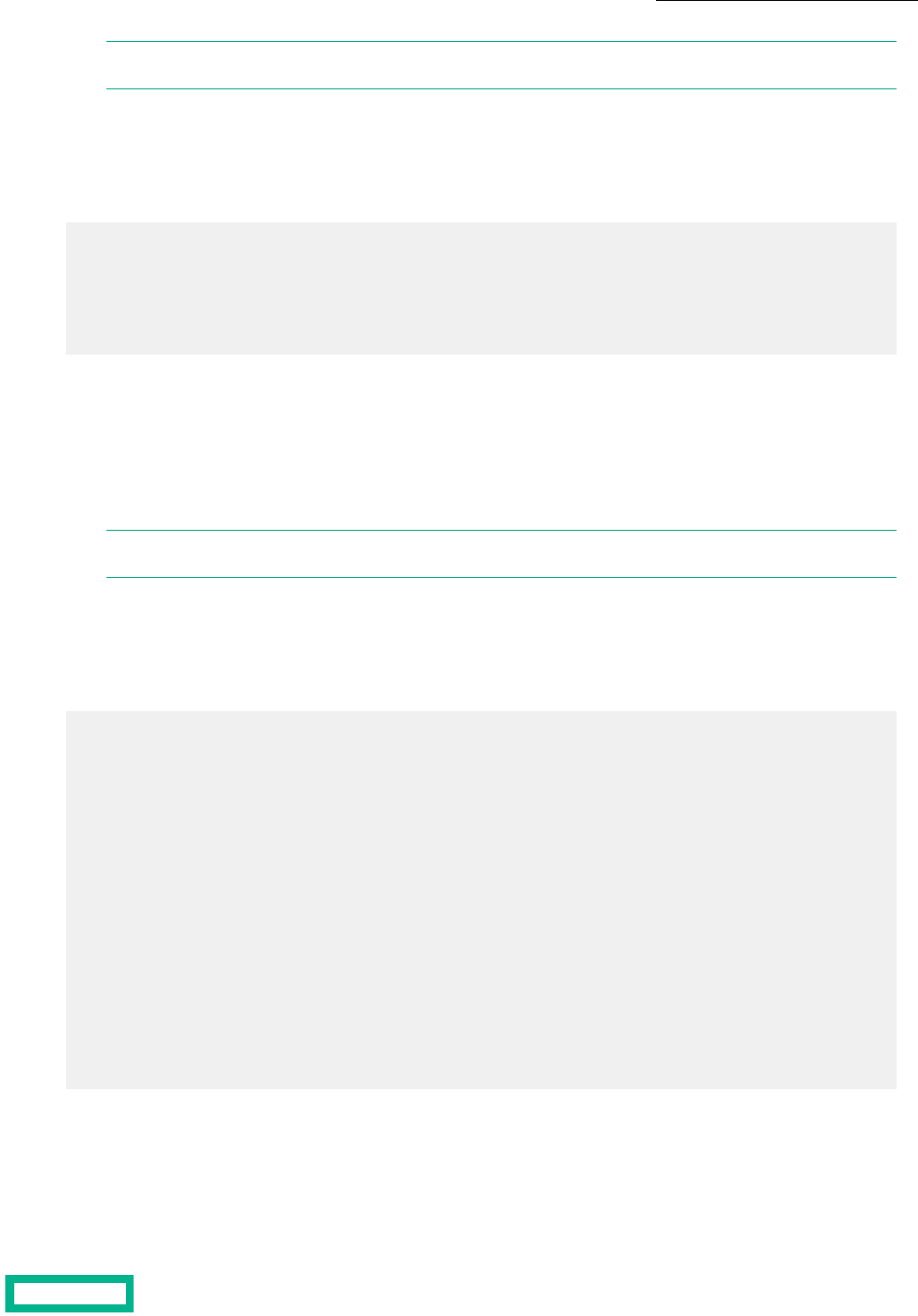

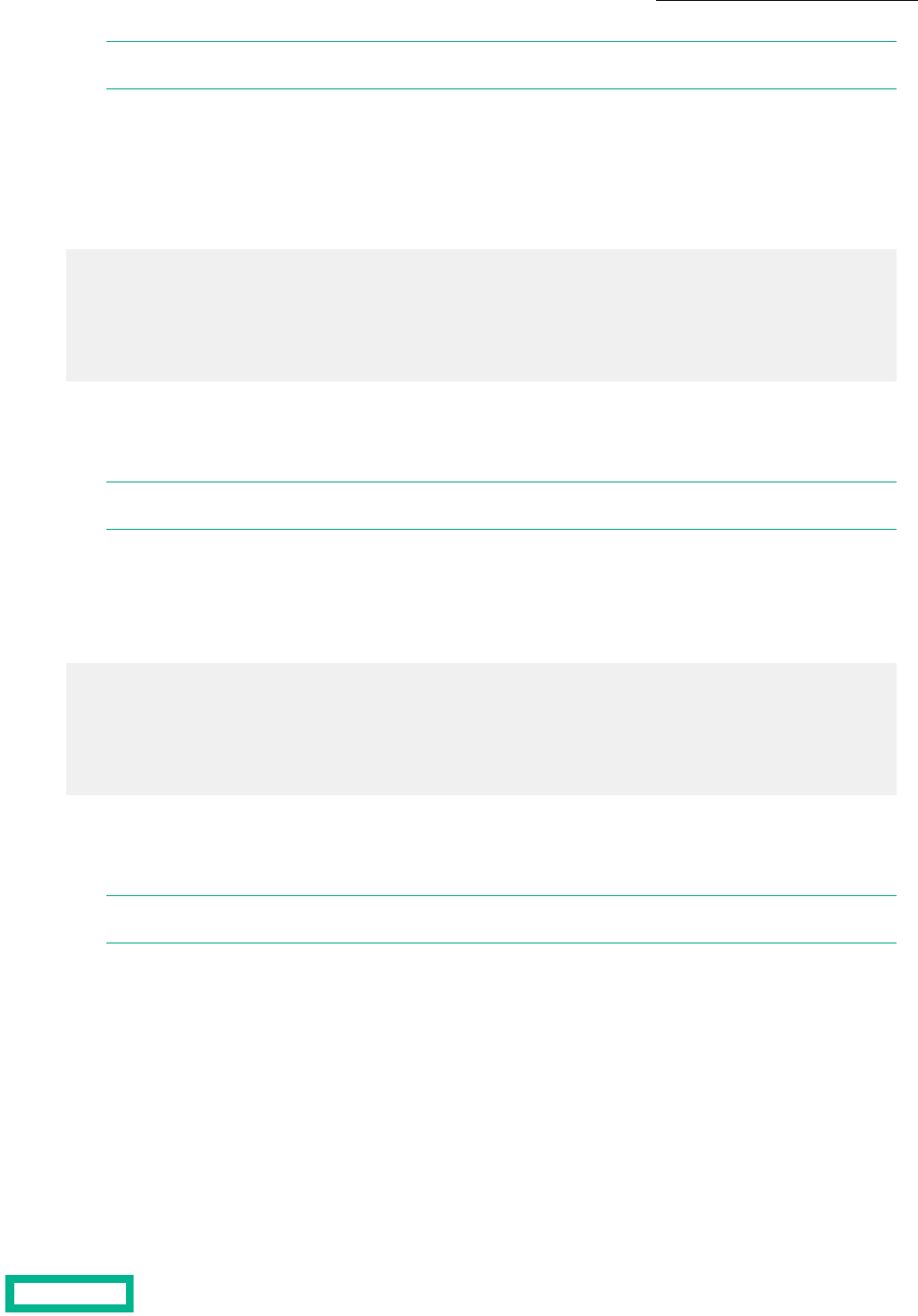

Figure 4: An example of an HPE Alletra 6000 array with two 10 Gbps iSCSI ports on each controller

Figure 5: An example of an HPE Alletra 5000 array with two 10 Gbps iSCSI ports on each controller

Figure 6: An example of an HPE Nimble Storage HF or AF array with two 10 Gbps iSCSI ports on each controller

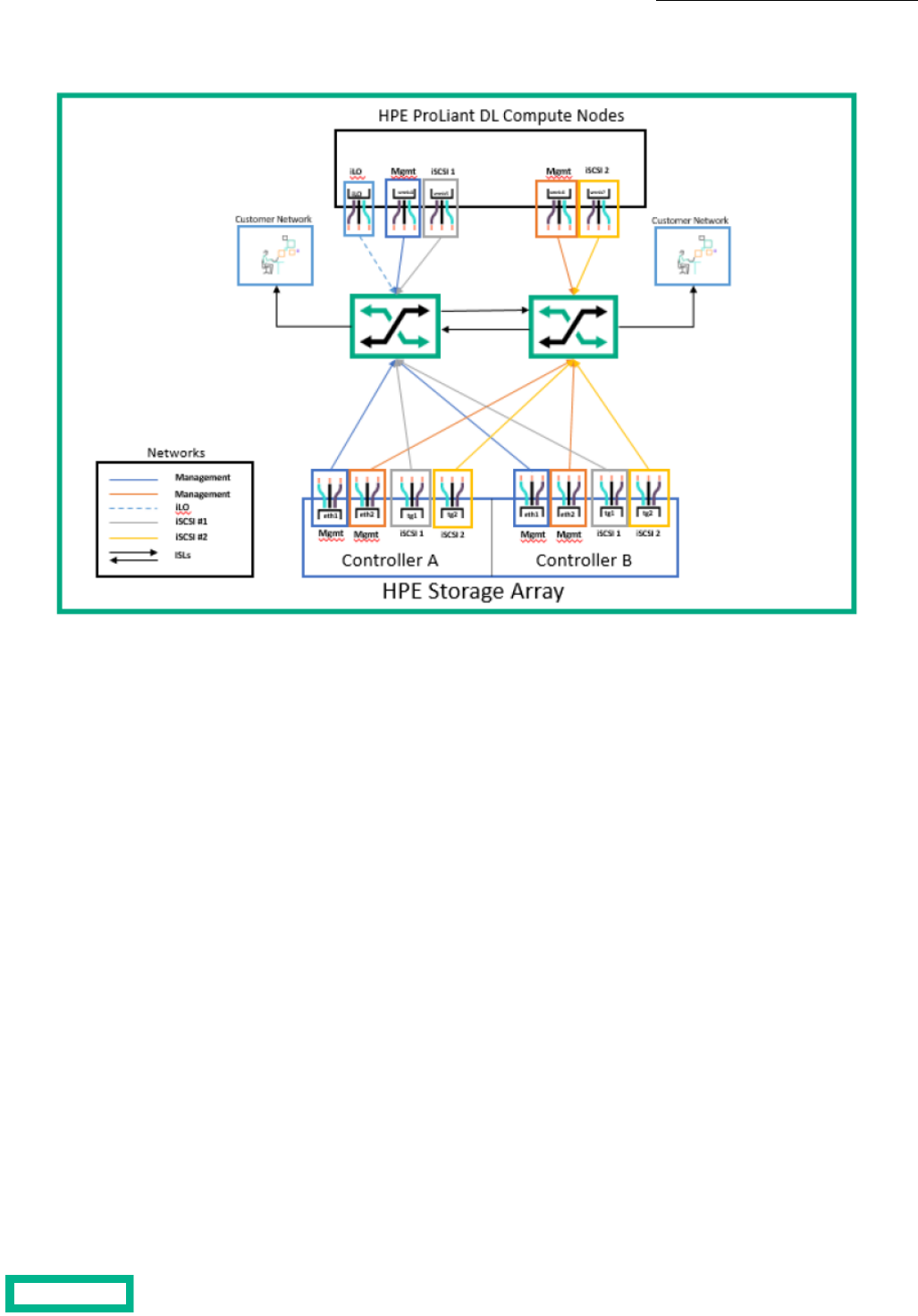

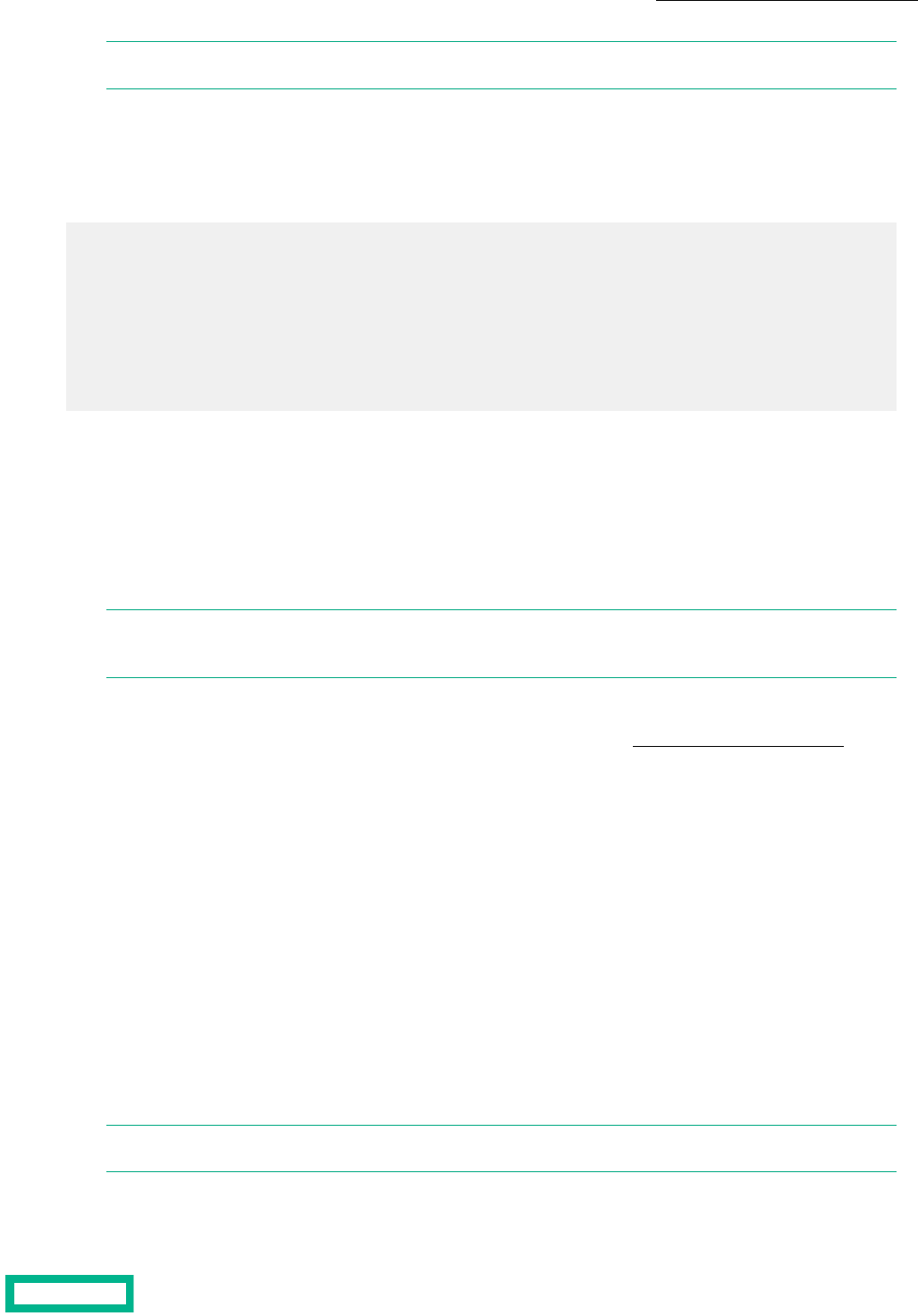

Server, Array, and Switch Cabling Example

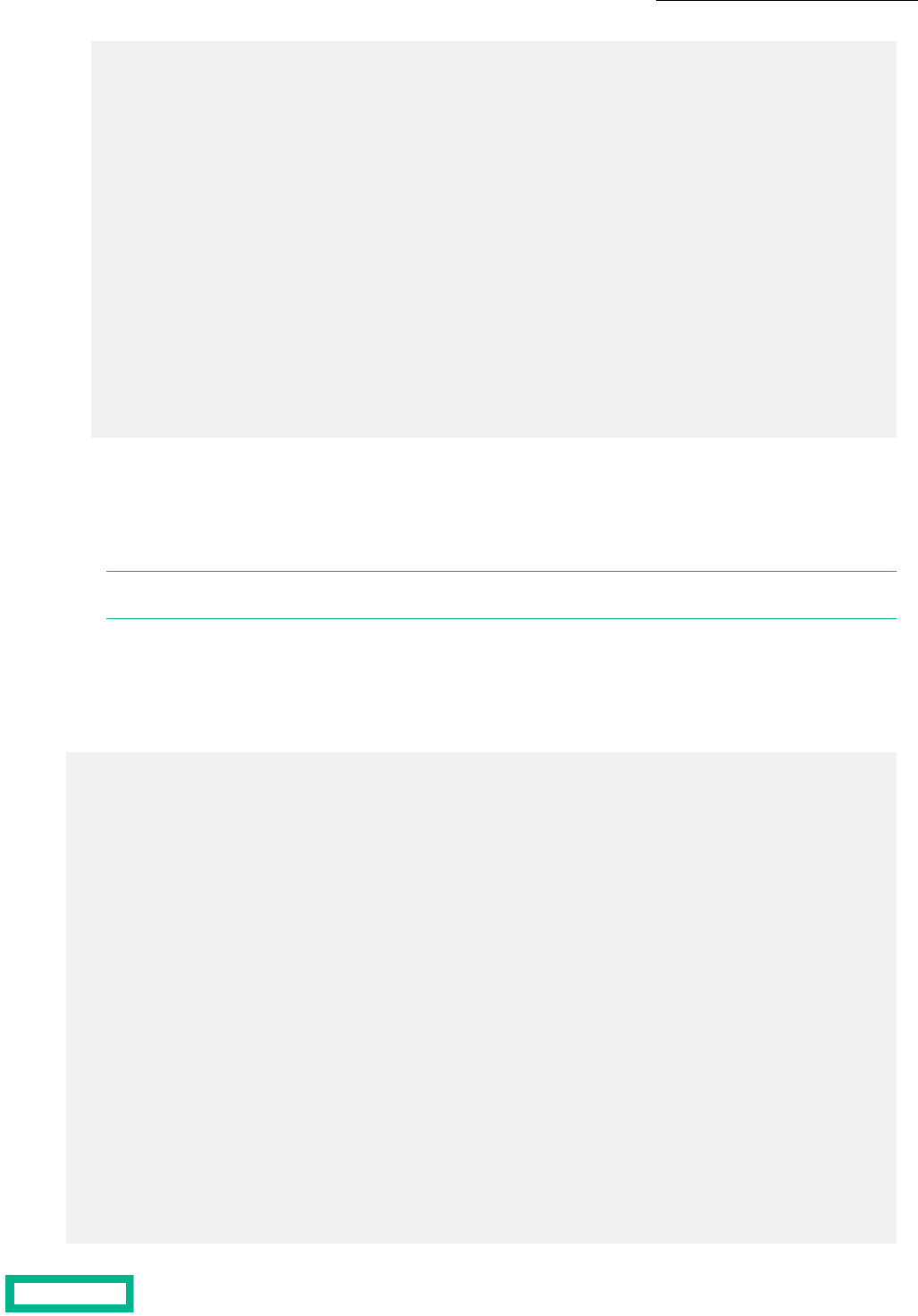

Use the methodology shown in the following figure to cable your HPE ProLiant server, HPE Storage array, and network switch.

Additional configuration examples are in the HPE Alletra dHCI Solution Network Considerations Guide, which is available on

the Documentation Portal on HPE InfoSight.


Figure 7: Cabling example: HPE ProLiant server with four 10 Gbps ports

Network Requirements for Configuring the Ethernet Switch

While different Ethernet switches can have different requirements, in general you must set up the following features:

• Maximum transmission unit (MTU)

• Flow control

• Jumbo frames

• VLAN

• Link Layer Discovery Protocol (LLDP)

MTU

Many switches define MTU differently from the way the initiator or target defines it. Switches often define MTU as the frame

size. End hosts almost universally define MTU as the packet size. The configured frame size on the switch might need to be

larger than the packet size or the MTU value defined on the host and the array. For example, a value of 9000 on the host

might require a value of 9014 or higher on the switch. This difference might vary by manufacturer.

Setting the switch MTU value to a number that is higher than the MTU value on the host or initiator does not cause problems.

The switch MTU setting causes problems only when the MTU value on the intermediate device (the switch) is set to a number

that is lower than the MTU value on one or both of the end devices.
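The frame-size arithmetic in the example above can be expressed as a short Python check. This is a sketch under assumptions you should verify against your switch documentation: it assumes a 14-byte Ethernet II header, adds 4 bytes for an 802.1Q tag and 4 bytes for the frame check sequence (FCS) only when you specify that the switch counts them, and the function names are hypothetical. The `mtu 9216` value used in the HPE M-Series examples in this guide clears every case shown.

```python
ETHERNET_HEADER = 14  # destination MAC, source MAC, EtherType
VLAN_TAG = 4          # 802.1Q tag, present only on tagged frames
FCS = 4               # frame check sequence

def min_switch_mtu(host_mtu, vlan_tagged=False, counts_fcs=False):
    """Smallest switch MTU (frame size) that carries a host packet of host_mtu bytes.

    Whether a switch counts the 802.1Q tag and the FCS in its MTU setting is vendor-specific.
    """
    size = host_mtu + ETHERNET_HEADER
    if vlan_tagged:
        size += VLAN_TAG
    if counts_fcs:
        size += FCS
    return size

def switch_mtu_ok(switch_mtu, host_mtu, **frame_options):
    """A switch value at or above the minimum is safe; a lower value causes problems."""
    return switch_mtu >= min_switch_mtu(host_mtu, **frame_options)

print(min_switch_mtu(9000))                                     # 9014
print(min_switch_mtu(9000, vlan_tagged=True, counts_fcs=True))  # 9022
```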

Flow Control

Flow control provides a mechanism for temporarily pausing the transmission of data on Ethernet networks if a sending node

transmits data faster than the receiving node can accept it. Whenever possible, you should enable flow control on all host,

switch, and array ports to ensure graceful communication between network nodes. HPE Storage array network interface cards

(NICs) support flow control by default.


Jumbo Frame

Standard Ethernet frames carry up to 1500 bytes of data. Anything over 1514 bytes (or 1518 bytes with VLAN tagging)

in the Ethernet frame is typically referred to as a jumbo frame. Jumbo frames, which are typically configured for a 9000-byte

MTU, are generally better suited to handling iSCSI SAN traffic. Enabling jumbo frames can help improve storage throughput

and reduce latency.

HPE recommends using jumbo frames with new server deployments of dHCI.
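The benefit comes from per-frame overhead: every frame carries its own headers and needs its own processing, so carrying the same data in fewer, larger frames reduces both. The following Python arithmetic is a simplified illustration, not a performance model: it assumes 20-byte IPv4 and 20-byte TCP headers without options and ignores iSCSI PDU headers and Ethernet framing.

```python
import math

IP_TCP_HEADERS = 40  # assumption: 20-byte IPv4 header + 20-byte TCP header, no options

def frames_needed(payload_bytes, mtu):
    """Frames required to carry payload_bytes of TCP data when each packet is at most mtu bytes."""
    data_per_frame = mtu - IP_TCP_HEADERS
    return math.ceil(payload_bytes / data_per_frame)

one_mib = 1024 * 1024
print(frames_needed(one_mib, 1500))  # 719 frames at the standard MTU
print(frames_needed(one_mib, 9000))  # 118 frames with jumbo frames
```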

VLAN

dHCI requires at least three VLANs:

• A management VLAN

The management VLAN must be the native VLAN in the trunk port (untagged).

• Two iSCSI VLANs

You should have one per switch. The best practice is to use two different VLANs for iSCSI traffic:

• One VLAN should map to one 10 Gbps port of your server.

• The other should map to the second 10 Gbps port.

If your server has four 10 Gbps ports, you should dedicate two ports to iSCSI traffic only. Configure these two ports in access

mode (no VLAN tag), with each port mapped to one iSCSI VLAN only.

If your server has two 10 Gbps ports, you must trunk your iSCSI VLANs onto your existing ports and tag the VLANs

accordingly.

A dedicated VLAN for iLO is recommended based on the deployment option you use.

The following table lists the requirements:

| VLAN Description | VLAN ID | VLAN / Mode | Note |
|---|---|---|---|
| Management/vMotion | mgmt_vlan | mgmt_vlan = native | Management/vMotion VLAN must be the native VLAN. |
| iSCSI 1 IP address range | iscsi1_vlan | iscsi1_vlan = access | Native VLAN only |
| iSCSI 2 IP address range | iscsi2_vlan | iscsi2_vlan = access | Native VLAN only |
| VM network | vm_network | vm_network = trunk | VM Network can be trunked on the Management interface. |
| iLO Network | ilo_vlan | ilo_vlan = access | Optional VLAN. Only required if Split Management is selected during deployment; that is, a separate management network for iLO. |
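Before you configure the switches, you can check a planned VLAN layout against the VLAN requirements table. The following Python sketch is hypothetical: the plan format (a mapping from the VLAN names in the table to a `(VLAN ID, mode)` pair) and the `check_vlan_plan` function are illustrative. It treats the VM network and iLO VLANs as optional, because dHCI requires only the management VLAN and the two iSCSI VLANs, and it flags identical iSCSI VLANs because using two different VLANs is the stated best practice.

```python
# Expected port mode for each VLAN; names match the VLAN requirements table.
REQUIRED_MODE = {
    "mgmt_vlan": "native",
    "iscsi1_vlan": "access",
    "iscsi2_vlan": "access",
    "vm_network": "trunk",
    "ilo_vlan": "access",
}
# dHCI requires only the management VLAN and the two iSCSI VLANs.
OPTIONAL = {"vm_network", "ilo_vlan"}

def check_vlan_plan(plan):
    """Return a list of problems found in a {name: (vlan_id, mode)} plan."""
    problems = []
    for name, expected in REQUIRED_MODE.items():
        if name not in plan:
            if name not in OPTIONAL:
                problems.append(f"{name}: missing")
            continue
        vlan_id, mode = plan[name]
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        if mode != expected:
            problems.append(f"{name}: mode {mode}, expected {expected}")
    iscsi = [plan[n][0] for n in ("iscsi1_vlan", "iscsi2_vlan") if n in plan]
    if len(iscsi) == 2 and iscsi[0] == iscsi[1]:
        problems.append("iSCSI 1 and iSCSI 2 should use different VLANs")
    return problems

plan = {
    "mgmt_vlan": (100, "native"),
    "iscsi1_vlan": (201, "access"),
    "iscsi2_vlan": (202, "access"),
    "vm_network": (300, "trunk"),
}
print(check_vlan_plan(plan))  # []
```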

LLDP

Link Layer Discovery Protocol (LLDP) must be enabled on each switch. dHCI uses LLDP during deployment to verify your

environment.

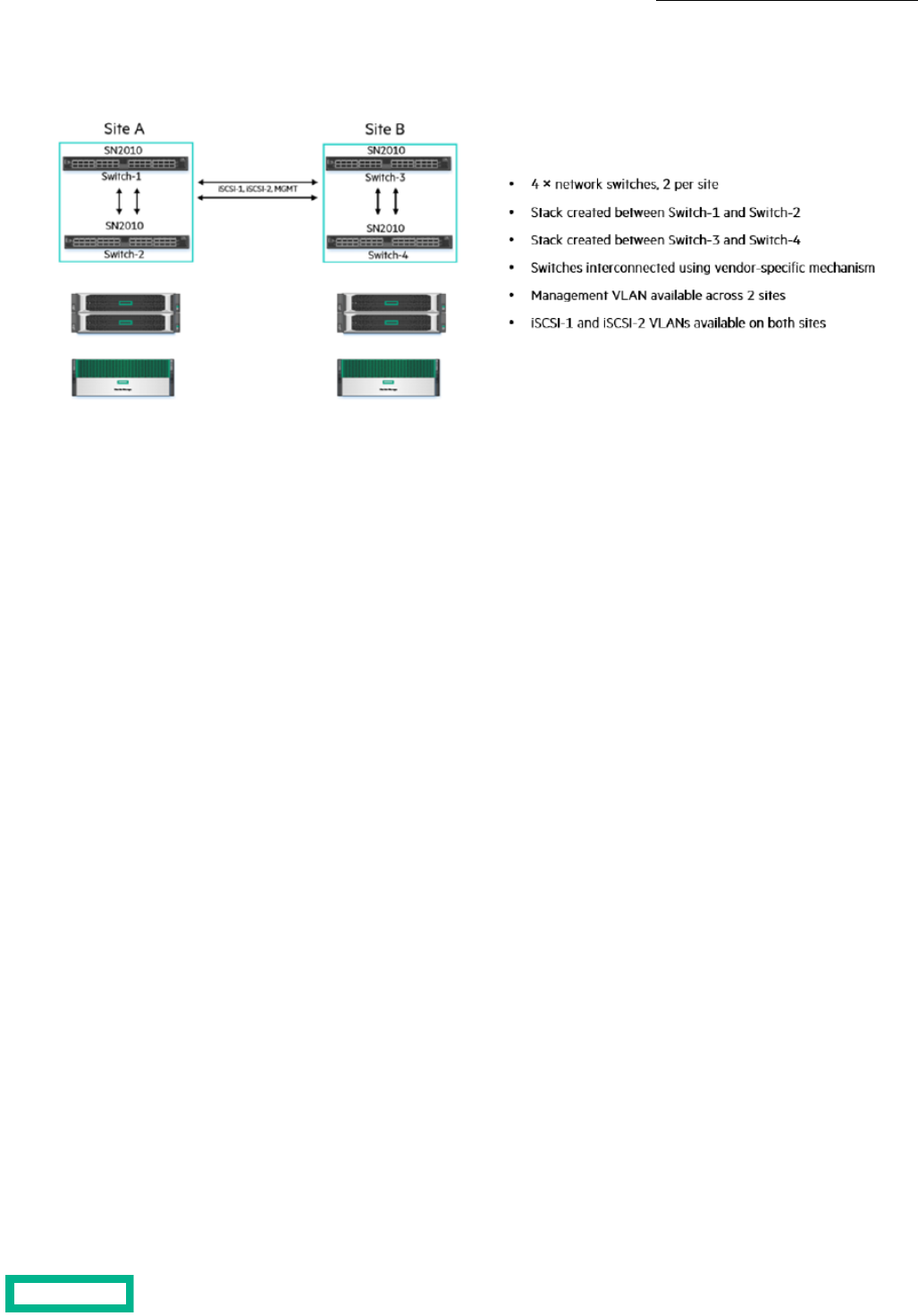

Network Configuration Example for Using Peer Persistence

Before you deploy dHCI, you must complete the network configuration between your sites. Peer Persistence with dHCI requires

that you configure the Management VLAN and both iSCSI VLANs so that they are available between the two solutions.

Your networking configuration should be similar to the one shown in the following figure.

Figure 8: Network Configuration Example


Configuration Examples

The following sections contain examples of setting up HPE M-Series, HPE Aruba, HPE FlexFabric, and Cisco Nexus switches.

Keep in mind that these are just examples. They are not the exact scripts required to set up your configuration. You must

tailor your deployment based on your configuration. Follow the best practices and documentation provided with your switch.

HPE M-Series Configuration Example

The following tasks step you through the process of configuring HPE M-Series ONYX switches to work in a dHCI environment.

Note: The steps in these tasks are examples. The steps you perform depend on the switches you use and your system

configuration. You should follow the best practices and documentation provided by your switch vendor.

Before you configure the switches, make sure that they are running a supported version of HPE ONYX (ONYX-3.8.2204 or

later revisions). Refer to the Validated Configuration Matrix on HPE InfoSight for information about system requirements.

A base dHCI deployment requires a minimum of two network switches. The switches must be the same model. The task

examples in this guide use HPE M-Series SN2010M switches.

Note: In addition to two switch MGMT0 IP addresses, you must also have a third IP address for the MLAG VIP. This

address must be on the same subnet as the MGMT0 ports. MLAG requires that the MGMT0 ports on the switches

communicate. It will not work if you use MGMT1. For more information about the HPE M-Series Switch Series, see the

HPE Support Center website.

To set up the switches, you must perform the following tasks:

1 Set up the initial configuration on new and factory-default HPE M-Series switches.

2 Update both switches to the latest ONYX release.

3 Set up the inter-peer link (IPL) port-channel and the MLAG configuration.

4 Configure Network Time Protocol (NTP).

5 Create the VLANs needed.

6 Add individual port descriptions for troubleshooting.

7 Assign the VLANs and configure jumbo frames and flow control.

8 Configure spanning tree.

9 Configure an MLAG port-channel uplink for the VM hosts (the example uses one 2x40 Gbps MLAG port-channel).

10 Secure the remaining interfaces.

Task 1: Set Up the Initial HPE M-Switch Configuration

The HPE M-Series switches come with the default factory configuration. You must configure them for your environment.

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the

values you provide may differ based on your configuration.

You must be in configuration-terminal mode when you perform the switch commands.

Procedure

1. With a host connected to the serial or console port on the switch, verify that the HPE M-Series setup wizard started

automatically after the initial boot and attempted to enter automatic configuration.

2. Log in as admin. Use the password admin.

You must enter a password, even if it is the default password.


3. Run the following commands on HPE M-Series switch 1.

Note: The following example uses [0.0.0.0/0] for the IPv4 address and mask length. You must enter the values

that are correct for your system, not the values used in this example.

Do you want to use the wizard for initial configuration? y

Step 1: Hostname? [switch-5256f0] net_switch1_mgmt

Step 2: Use DHCP on mgmt0 interface? [yes] no

Step 3: Use zeroconf on mgmt0 interface? [no]

Step 4: Primary IPv4 address and masklen? [0.0.0.0/0]

Step 5: Default gateway? mgmt_net_gw_IP

Step 6: Primary DNS server? mgmt_net_DNS_IP

Step 7: Domain name? mgmt_net_domain_name

Step 8: Enable IPv6? [yes]

Step 9: Enable IPv6 autoconfig (SLAAC) on mgmt0 interface? [no]

Step 10: Enable DHCPv6 on mgmt0 interface? [yes]

Step 11: Admin password (Must be typed)? net_switch_admin_password

Step 11: Confirm admin password? net_switch_admin_password

Step 12: Monitor password (Must be typed)? net_switch_admin_password

Step 12: Confirm monitor password? net_switch_admin_password

The startup wizard should prompt you. If it does not, you might need to perform a factory reset.

4. Run the following commands on HPE M-Series switch 2.

Note: The following example uses [0.0.0.0/0] for the IPv4 address and mask length. You must enter the values

that are correct for your system, not the values used in this example.

Do you want to use the wizard for initial configuration? y

Step 1: Hostname? [switch-525710] net_switch2_mgmt

Step 2: Use DHCP on mgmt0 interface? [yes] no

Step 3: Use zeroconf on mgmt0 interface? [no]

Step 4: Primary IPv4 address and masklen? [0.0.0.0/0]

Step 5: Default gateway? mgmt_net_gw_IP

Step 6: Primary DNS server? mgmt_net_DNS_IP

Step 7: Domain name? mgmt_net_domain_name

Step 8: Enable IPv6? [yes]

Step 9: Enable IPv6 autoconfig (SLAAC) on mgmt0 interface? [no]

Step 10: Enable DHCPv6 on mgmt0 interface? [yes]

Step 11: Admin password (Must be typed)? net_switch_admin_password

Step 11: Confirm admin password? net_switch_admin_password

Step 12: Monitor password (Must be typed)? net_switch_admin_password

Step 12: Confirm monitor password? net_switch_admin_password

The startup wizard should prompt you. If it does not, you might need to perform a factory reset.

Task 2: Set up the IPL Port-Channel and the MLAG Configuration

After you perform the initial configuration for the HPE M-Series switches, you must set up the IPL port-channel and configure

the MLAG and buffer traffic pools to segregate iSCSI data from the network data.

You must have two switch MGMT0 IP addresses and a third IP address for the MLAG VIP. The MLAG VIP must be on the

same subnet as the MGMT0 addresses, and MLAG requires that the MGMT0 ports on the switches can communicate.
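You can sanity-check the three addresses before you run the commands. The following sketch uses Python's standard `ipaddress` module; the function name and the example addresses, taken from the 192.0.2.0/24 documentation range, are hypothetical.

```python
import ipaddress

def mlag_addresses_ok(mgmt0_switch1, mgmt0_switch2, mlag_vip, prefix_len):
    """True if the two MGMT0 addresses and the MLAG VIP are distinct and share one subnet."""
    interfaces = [
        ipaddress.ip_interface(f"{addr}/{prefix_len}")
        for addr in (mgmt0_switch1, mgmt0_switch2, mlag_vip)
    ]
    distinct = len({i.ip for i in interfaces}) == 3
    same_subnet = len({i.network for i in interfaces}) == 1
    return distinct and same_subnet

print(mlag_addresses_ok("192.0.2.11", "192.0.2.12", "192.0.2.10", 24))  # True
```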

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the

values you provide may differ based on your configuration.

You must be in configuration-terminal mode when you perform these commands.


Procedure

1. Run the following commands on HPE M-Series switch 1:

enable

configure terminal

ping net_switch2_mgmt ##– verify responses and enter <ctrl> c

ip routing vrf default

ip igmp snooping

protocol mlag

lacp

lldp

logging monitor events notice

cli default auto-logout 60

traffic pool TCP type lossless

traffic pool iscsi type lossless

traffic pool TCP map switch-priority 0

traffic pool iscsi map switch-priority 4

interface ethernet 1/21-1/22 shutdown

interface ethernet 1/21-1/22 speed 40G force #speed 100G is the default

interface ethernet 1/21 description mlag ipl

interface ethernet 1/22 description mlag ipl

dcb priority-flow-control enable force

dcb application-priority tcp iscsi 4

dcb priority-flow-control priority 4 enable

interface ethernet 1/15-1/16 qos trust port

interface ethernet 1/15-1/16 qos default switch-priority 4

interface ethernet 1/15-1/16 qos rewrite pcp

interface ethernet 1/2 qos trust port

interface ethernet 1/2 qos default switch-priority 4

interface ethernet 1/2 qos rewrite pcp

interface ethernet 1/4 qos trust port

interface ethernet 1/4 qos default switch-priority 4

interface ethernet 1/4 qos rewrite pcp

interface port-channel 10 ipl 1

interface ethernet 1/21-1/22 channel-group 10 mode active

interface port-channel 10 dcb priority-flow-control mode on force

vlan 4094 name "MLAG ipl VLAN"

exit

interface vlan 4094 ip address mlag_private_ip1/mlag_private_netmask

interface vlan 4094 ipl 1 peer-address mlag_private_ip2

mlag-vip MLAG-FOO1 ip mlag-vip /mlag-netmask force

interface ethernet 1/21-1/22 no shutdown

no mlag shutdown

write memory

show interface port-channel summary

show mlag

show traffic pool

show buffer pool

2. Run the following commands on HPE M-Series switch 2:

enable

configure terminal

ip routing vrf default

ip igmp snooping

protocol mlag

lacp

lldp

logging monitor events notice

cli default auto-logout 60

traffic pool TCP type lossless

traffic pool iscsi type lossless


traffic pool TCP map switch-priority 0

traffic pool iscsi map switch-priority 4

interface ethernet 1/21-1/22 shutdown

interface ethernet 1/21-1/22 speed 40G force #speed 100G is the default

interface ethernet 1/21 description mlag ipl

interface ethernet 1/22 description mlag ipl

dcb priority-flow-control enable force

dcb application-priority tcp iscsi 4

dcb priority-flow-control priority 4 enable

interface ethernet 1/15-1/16 qos trust port

interface ethernet 1/15-1/16 qos default switch-priority 4

interface ethernet 1/15-1/16 qos rewrite pcp

interface ethernet 1/2 qos trust port

interface ethernet 1/2 qos default switch-priority 4

interface ethernet 1/2 qos rewrite pcp

interface ethernet 1/4 qos trust port

interface ethernet 1/4 qos default switch-priority 4

interface ethernet 1/4 qos rewrite pcp

interface port-channel 10 ipl 1

interface ethernet 1/21-1/22 channel-group 10 mode active

interface port-channel 10 dcb priority-flow-control mode on force

vlan 4094 name "MLAG ipl VLAN"

exit

interface vlan 4094 ip address mlag_private_ip2/mlag_private_netmask

interface vlan 4094 ipl 1 peer-address mlag_private_ip1

mlag-vip MLAG-FOO1 ip mlag-vip /mlag-netmask force

interface ethernet 1/21-1/22 no shutdown

no mlag shutdown

write memory

show interface port-channel summary

show mlag

show traffic pool

show buffer pool
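The two command sets differ only in which private address is local and which is the peer. The following hypothetical Python helper generates the VLAN 4094 IPL lines for either switch in the same format as the commands above, which makes the mirrored addressing explicit. It assumes that `mlag_private_netmask` is expressed as a prefix length, and it checks that both addresses fall in the same subnet.

```python
import ipaddress

def ipl_vlan_lines(switch, ip1, ip2, prefix_len):
    """Return the VLAN 4094 IPL lines for switch 1 or 2 (hypothetical helper)."""
    if switch not in (1, 2):
        raise ValueError("switch must be 1 or 2")
    net1 = ipaddress.ip_interface(f"{ip1}/{prefix_len}").network
    net2 = ipaddress.ip_interface(f"{ip2}/{prefix_len}").network
    if ip1 == ip2 or net1 != net2:
        raise ValueError("IPL addresses must be distinct and in the same subnet")
    # Switch 1 owns ip1 and peers with ip2; switch 2 is the mirror image.
    local, peer = (ip1, ip2) if switch == 1 else (ip2, ip1)
    return [
        f"interface vlan 4094 ip address {local}/{prefix_len}",
        f"interface vlan 4094 ipl 1 peer-address {peer}",
    ]

for line in ipl_vlan_lines(2, "10.255.255.1", "10.255.255.2", 30):
    print(line)
```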

Task 3: Configure NTP

You must configure the local time and date and enable NTP.

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the

values you provide may differ based on your configuration.

You must be in configuration-terminal mode when you perform these commands.

Procedure

Run the following commands on both switches. Adjust the values as needed:

enable

configure terminal

ntp server <<mgmt_net_ntp1>>

ntp enable

write memory

show clock

Task 4: Create the VLANs

You must create the required VLANs and the necessary VLAN interfaces.

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the

values you provide may differ based on your configuration.


You must be in configuration-terminal mode when you perform these commands.

Procedure

Run the following commands on both switches. Adjust the VLAN IDs and names as needed.

enable

configure terminal

vlan <<mgmt_net_vlan>> name MGMT-VLAN

exit

vlan <<iscsi_san_a_vlan>> name iSCSI-SAN-A-VLAN # Both iSCSI VLANs should be defined in both M-Series switches

exit

vlan <<iscsi_san_b_vlan>> name iSCSI-SAN-B-VLAN # Both iSCSI VLANs should be defined in both M-Series switches

exit

vlan <<vm_production_net_1_vlan>> name VM-Production-VLAN1

exit

vlan <<dead_net_vlan>> name Dead-Network #Dead-Network for unused ports

exit

write memory
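If you keep your VLAN plan in a script, you can generate these commands and catch conflicts before you paste them. The following Python sketch is hypothetical: it rejects IDs outside 1-4094, duplicate IDs, and VLAN 4094 (because this example already uses VLAN 4094 for the MLAG IPL in Task 2), and it emits the same `vlan ... name ...` and `exit` pattern shown above.

```python
IPL_VLAN = 4094  # already used for the MLAG IPL in this example

def vlan_commands(vlans):
    """Build VLAN creation lines in the format shown above from (vlan_id, name) pairs."""
    seen = set()
    lines = []
    for vlan_id, name in vlans:
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN {vlan_id} is outside 1-4094")
        if vlan_id == IPL_VLAN:
            raise ValueError(f"VLAN {vlan_id} is reserved for the MLAG IPL")
        if vlan_id in seen:
            raise ValueError(f"VLAN {vlan_id} is defined twice")
        seen.add(vlan_id)
        lines += [f"vlan {vlan_id} name {name}", "exit"]
    lines.append("write memory")
    return lines

print("\n".join(vlan_commands([(100, "MGMT-VLAN"), (201, "iSCSI-SAN-A-VLAN")])))
```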

Task 5: Add Individual Port Descriptions for Troubleshooting

You should include individual port descriptions for troubleshooting activity and verification.

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the

values you provide may differ based on your configuration.

You must perform all these steps in configuration-terminal mode.

Procedure

1. Run the following commands on HPE M-Series switch 1:

enable

configure terminal

interface ethernet 1/15 description <hpe1_system_name>-CA-tg1a

interface ethernet 1/16 description <hpe1_system_name>-CB-tg1a

interface Ethernet 1/21 description MLAG DO NOT MODIFY

interface Ethernet 1/22 description MLAG DO NOT MODIFY

interface ethernet 1/1 description <mgmt_server_1_hostname>-Port1

interface ethernet 1/2 description <mgmt_server_1_hostname>-iSCSI-Port1

interface ethernet 1/3 description <mgmt_server_2_hostname>-Port1

interface ethernet 1/4 description <mgmt_server_2_hostname>-iSCSI-Port1

interface ethernet 1/5 description <mgmt_server_1_hostname>-ILO

interface ethernet 1/7 description <hpe_system_name>-MGMT-CA-Port1

interface ethernet 1/8 description <hpe_system_name>-MGMT-CB-Port1

write memory

2. Run the following commands on HPE M-Series switch 2:

enable

configure terminal

interface ethernet 1/15 description <hpe1_system_name>-CA-tg1b

interface ethernet 1/16 description <hpe1_system_name>-CB-tg1b

interface Ethernet 1/21 description MLAG DO NOT MODIFY

interface Ethernet 1/22 description MLAG DO NOT MODIFY


interface ethernet 1/1 description <mgmt_server_1_hostname>-Port2

interface ethernet 1/2 description <mgmt_server_1_hostname>-iSCSI-Port2

interface ethernet 1/3 description <mgmt_server_2_hostname>-Port2

interface ethernet 1/4 description <mgmt_server_2_hostname>-iSCSI-Port2

interface ethernet 1/5 description <mgmt_server_2_hostname>-ILO

interface ethernet 1/7 description <hpe_system_name>-MGMT-CA-Port2

interface ethernet 1/8 description <hpe_system_name>-MGMT-CB-Port2

write memory
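The server descriptions follow a simple pattern: each server uses a pair of adjacent ports, and the suffix reflects the switch (Port1 on switch 1, Port2 on switch 2). The following hypothetical Python helper reproduces the server entries above. It assumes that server n uses ports 1/(2n-1) and 1/2n, as in this two-server example; with more servers that scheme would collide with the iLO and array ports used here, so adapt it to your own port plan.

```python
def server_port_descriptions(switch, hostnames):
    """Return description lines for each server's management and iSCSI ports."""
    if switch not in (1, 2):
        raise ValueError("switch must be 1 or 2")
    lines = []
    for n, host in enumerate(hostnames, start=1):
        mgmt_port, iscsi_port = 2 * n - 1, 2 * n  # assumption: adjacent port pairs
        lines.append(f"interface ethernet 1/{mgmt_port} description {host}-Port{switch}")
        lines.append(f"interface ethernet 1/{iscsi_port} description {host}-iSCSI-Port{switch}")
    return lines

for line in server_port_descriptions(2, ["esx-01", "esx-02"]):
    print(line)
```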

Task 6: Assign the VLANs and Configure Jumbo Frames and Flow Control

You must configure the Management and VM Network VLANs for each HPE ProLiant server in your environment.

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the

values you provide may differ based on your configuration.

You must perform all these steps in configuration-terminal mode.

Procedure

1. (Management and VM Network VLANs for each HPE ProLiant server) Run the following commands on both switches,

adjusting the ports as needed:

enable

configure terminal

interface ethernet 1/1-1/18 speed 10G force #speed 25G is the default

interface ethernet 1/5 speed 1G force #iLO port runs at 1G

interface ethernet 1/1 switchport mode hybrid

interface ethernet 1/1 switchport hybrid allowed-vlan none

interface ethernet 1/1 switchport hybrid allowed-vlan add <vm_production_net_1_vlan>

interface ethernet 1/1 switchport access vlan <mgmt_net_vlan>

interface ethernet 1/3 switchport mode hybrid

interface ethernet 1/3 switchport hybrid allowed-vlan none

interface ethernet 1/3 switchport hybrid allowed-vlan add <vm_production_net_1_vlan>

interface ethernet 1/3 switchport access vlan <mgmt_net_vlan>

write memory

2. (Management VLANs for the HPE Storage management interface and server iLO VLAN) Run the following commands

on both switches, adjusting the ports as needed:

interface ethernet 1/7-1/8 switchport access vlan <mgmt_net_vlan>

interface ethernet 1/5 switchport access vlan <ilo_vlan> #iLO connection to M-Series switch assumed

write memory

3. (iSCSI VLANs, flow control, and jumbo frames for each HPE ProLiant server) Run the following commands on HPE

M-Series switch 1:

interface ethernet 1/2

switchport access vlan <iscsi_san_a_vlan>

flowcontrol receive on force

flowcontrol send on force

mtu 9216 force

no shutdown

exit


interface ethernet 1/4

switchport access vlan <iscsi_san_a_vlan>

flowcontrol receive on force

flowcontrol send on force

mtu 9216 force

no shutdown

exit

write memory

4. (iSCSI VLANs, flow control, and jumbo frames for each HPE ProLiant server) Run the following commands on HPE

M-Series switch 2:

interface ethernet 1/2

switchport access vlan <iscsi_san_b_vlan>

flowcontrol receive on force

flowcontrol send on force

mtu 9216 force

no shutdown

exit

interface ethernet 1/4

switchport access vlan <iscsi_san_b_vlan>

flowcontrol receive on force

flowcontrol send on force

mtu 9216 force

no shutdown

exit

write memory

5. (iSCSI VLANs, flow control, and jumbo frames for each array port) Run the following commands on HPE M-Series switch 1:

interface ethernet 1/15-1/16

switchport access vlan <iscsi_san_a_vlan>

flowcontrol receive on force

flowcontrol send on force

mtu 9216 force

no shutdown

exit

write memory

6. (iSCSI VLANs, flow control, and jumbo frames for each array port) Run the following commands on HPE M-Series switch 2:

interface ethernet 1/15-1/16

speed 10G force

switchport access vlan <iscsi_san_b_vlan>

flowcontrol receive on force

flowcontrol send on force

mtu 9216 force

no shutdown

exit

write memory
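The per-port commands in steps 1 and 3 through 6 follow a fixed pattern, so you might prefer to generate them from a port plan rather than type them by hand. The following Python sketch shows one way to do that. The function names, the port lists, and the integer VLAN IDs standing in for the <mgmt_net_vlan>, <vm_production_net_1_vlan>, <iscsi_san_a_vlan>, and <iscsi_san_b_vlan> placeholders are all hypothetical. The sketch leaves out the speed settings and step 2, and it emits a single write memory at the end instead of one per step.

```python
"""Render the per-port HPE M-Series commands from steps 1 and 3 through 6.

Hypothetical helper: integer VLAN IDs stand in for the <...vlan> placeholders.
"""


def check_vlan(vlan: int) -> int:
    """Reject IDs outside 1-4094 (0 and 4095 are reserved by 802.1Q)."""
    if not 1 <= vlan <= 4094:
        raise ValueError(f"VLAN ID out of range: {vlan}")
    return vlan


def hybrid_port(port: str, mgmt_vlan: int, vm_vlan: int) -> list[str]:
    """Step 1: hybrid port with the VM VLAN allowed and the management VLAN as access VLAN."""
    prefix = f"interface ethernet {port}"
    return [
        f"{prefix} switchport mode hybrid",
        f"{prefix} switchport hybrid allowed-vlan none",
        f"{prefix} switchport hybrid allowed-vlan add {check_vlan(vm_vlan)}",
        f"{prefix} switchport access vlan {check_vlan(mgmt_vlan)}",
    ]


def iscsi_port(port: str, vlan: int, mtu: int = 9216) -> list[str]:
    """Steps 3-6: iSCSI access port with flow control and jumbo frames."""
    return [
        f"interface ethernet {port}",
        f"switchport access vlan {check_vlan(vlan)}",
        "flowcontrol receive on force",
        "flowcontrol send on force",
        f"mtu {mtu} force",
        "no shutdown",
        "exit",
    ]


def iscsi_vlan_for(switch_number: int, san_a_vlan: int, san_b_vlan: int) -> int:
    """Switch 1 carries iSCSI SAN A; switch 2 carries iSCSI SAN B."""
    if switch_number == 1:
        return san_a_vlan
    if switch_number == 2:
        return san_b_vlan
    raise ValueError("switch_number must be 1 or 2")


def switch_config(switch_number: int, server_ports: list[str], iscsi_ports: list[str],
                  mgmt_vlan: int, vm_vlan: int, san_a_vlan: int, san_b_vlan: int) -> list[str]:
    """Concatenate the hybrid-port and iSCSI-port blocks for one switch."""
    lines: list[str] = []
    for port in server_ports:
        lines += hybrid_port(port, mgmt_vlan, vm_vlan)
    vlan = iscsi_vlan_for(switch_number, san_a_vlan, san_b_vlan)
    for port in iscsi_ports:
        lines += iscsi_port(port, vlan)
    lines.append("write memory")
    return lines


if __name__ == "__main__":
    # Hypothetical VLAN IDs; substitute your own values.
    for line in switch_config(2, ["1/1", "1/3"], ["1/2", "1/4", "1/15-1/16"],
                              mgmt_vlan=100, vm_vlan=200,
                              san_a_vlan=301, san_b_vlan=302):
        print(line)
```

Keeping the SAN A/SAN B choice in one function makes it harder to put both switches on the same iSCSI VLAN by mistake.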

Task 7: Configure Spanning Tree for HPE M-Series

You must configure spanning tree on each HPE ProLiant and HPE Storage interface that is used for iSCSI. When you set these interfaces as edge ports and enable spanning tree on the HPE M-Series switch, the ports transition directly to the forwarding state in the spanning tree topology.


Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the values you provide may differ based on your configuration.

You must be in configuration-terminal mode when you perform these commands.

Procedure

Run the following commands on both switches. Adjust the ports as needed.

spanning-tree mode rpvst

spanning-tree port type edge default

interface ethernet 1/1-1/5 spanning-tree port type edge

interface ethernet 1/7-1/8 spanning-tree port type edge

interface ethernet 1/15-1/16 spanning-tree port type edge

write memory
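To confirm that every iSCSI-facing port falls inside one of the edge-port ranges above, you could expand the ranges and compare the resulting sets. This Python sketch assumes the slot/port range notation used in this guide (for example, 1/1-1/5). The function names are hypothetical, and the sketch works only on the text of the ranges; it does not query the switch.

```python
def expand_range(spec: str) -> list[str]:
    """Expand "1/1-1/5" into ["1/1", ..., "1/5"]; a single port such as "1/7" is returned as is."""
    if "-" not in spec:
        return [spec]
    start, end = spec.split("-")
    slot_a, first = start.split("/")
    slot_b, last = end.split("/")
    if slot_a != slot_b:
        raise ValueError(f"range crosses slots: {spec}")
    return [f"{slot_a}/{n}" for n in range(int(first), int(last) + 1)]


def port_key(port: str) -> tuple[int, ...]:
    # Compare numerically so that "1/10" sorts after "1/9".
    return tuple(int(part) for part in port.split("/"))


def missing_edge_ports(edge_specs: list[str], iscsi_specs: list[str]) -> list[str]:
    """Return the iSCSI ports that no edge-port range covers, in port order."""
    edge = {p for spec in edge_specs for p in expand_range(spec)}
    iscsi = {p for spec in iscsi_specs for p in expand_range(spec)}
    return sorted(iscsi - edge, key=port_key)


if __name__ == "__main__":
    edge = ["1/1-1/5", "1/7-1/8", "1/15-1/16"]  # ranges from the commands above
    iscsi = ["1/2", "1/4", "1/15-1/16"]         # server and array iSCSI ports in this example
    print(missing_edge_ports(edge, iscsi))       # → []
```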

Task 8: Uplink into the Existing Network Infrastructure

Depending on your network infrastructure and connectivity requirements, you might use various layer 2 or layer 3 methods to connect dHCI to the network.

This is an example of how to create an MLAG port-channel to uplink the HPE M-Series switches to your existing switch environment. You must configure one uplink per HPE M-Series switch; the two uplinks are aggregated in an MLAG port-channel.

Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the values you provide may differ based on your configuration.

Make sure you are in configuration-terminal mode when you perform the commands.

Procedure

Run the following commands on both switches. Adjust the ports as needed.

interface mlag-port-channel 80

interface ethernet 1/20 speed 40G force

interface ethernet 1/20 lacp rate fast #use "no lacp rate fast" (slow rate) if the upstream switch requires it

interface ethernet 1/20 mlag-channel-group 80 mode active #use "mode on" (static, no LACP) if the upstream switch requires it

interface mlag-port-channel 80 spanning-tree port type edge

interface mlag-port-channel 80 spanning-tree bpdufilter enable

interface mlag-port-channel 80 switchport mode hybrid

interface mlag-port-channel 80 switchport hybrid allowed-vlan none

interface mlag-port-channel 80 switchport hybrid allowed-vlan add <vm_production_net_1_vlan>

interface mlag-port-channel 80 switchport access vlan <mgmt_net_vlan>

interface mlag-port-channel 80 flowcontrol send on force

interface mlag-port-channel 80 flowcontrol receive on force

interface mlag-port-channel 80 mtu 9216 force #mtu 1520 is the default; the value depends on the upstream switch

interface mlag-port-channel 80 no shutdown

show interfaces mlag-port-channel summary
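Two of the comments above defer to the upstream switch, and both choices come down to simple arithmetic. The following Python sketch uses the IEEE 802.1AX LACP timers (LACPDUs every 1 second at the fast rate or every 30 seconds at the slow rate, with the partner timed out after three missed intervals) and standard Ethernet framing overhead. The 9000-byte host MTU in the example is an assumption, not a value from this guide, and the timeout that actually applies on a link depends on the rate each end requests from its partner.

```python
# IEEE 802.1AX LACP timers: LACPDUs every 1 s (fast) or 30 s (slow); the
# partner is timed out after three intervals without an LACPDU.
FAST_PERIODIC_S = 1
SLOW_PERIODIC_S = 30
TIMEOUT_MULTIPLIER = 3

# Ethernet overhead around the IP payload, in bytes.
ETH_HEADER = 14  # destination MAC, source MAC, EtherType
VLAN_TAG = 4     # 802.1Q tag, present only on tagged frames
FCS = 4          # frame check sequence


def lacp_timeout_s(fast: bool) -> int:
    """Seconds of silence after which LACP times out the partner."""
    period = FAST_PERIODIC_S if fast else SLOW_PERIODIC_S
    return period * TIMEOUT_MULTIPLIER


def frame_size(ip_mtu: int, tagged: bool) -> int:
    """Largest Ethernet frame that carries ip_mtu bytes of payload."""
    return ip_mtu + ETH_HEADER + (VLAN_TAG if tagged else 0) + FCS


def fits(ip_mtu: int, port_mtu: int = 9216, tagged: bool = True) -> bool:
    """True if the frame fits, whether the port MTU counts only the payload or the whole frame."""
    return frame_size(ip_mtu, tagged) <= port_mtu


if __name__ == "__main__":
    print(lacp_timeout_s(fast=True), lacp_timeout_s(fast=False))  # → 3 90
    print(frame_size(9000, tagged=True), fits(9000))               # → 9022 True
```

Counting the 802.1Q tag by default is deliberately conservative: the iSCSI ports in this example are access ports, but the uplink carries tagged traffic.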

Task 9: Secure the Remaining Interfaces for the HPE M-Series

The final task in setting up the HPE M-Series switches is to secure the rest of the switch by shutting down the unused ports and putting them in your <dead_net_vlan>.


Note: Keep in mind that this is an example of setting up HPE M-Series switches. The steps you perform and the values you provide may differ based on your configuration.

Make sure you are in configuration-terminal mode when you perform the commands.

Procedure

1. Run the following commands on both switches. Adjust the ports as needed.

interface ethernet 1/6 shutdown

interface ethernet 1/9-1/14 shutdown

interface ethernet 1/17-1/19 shutdown

interface ethernet 1/6 switchport mode access

interface ethernet 1/9-1/14 switchport mode access

interface ethernet 1/17-1/19 switchport mode access

interface ethernet 1/6 switchport access vlan <dead_net_vlan>

interface ethernet 1/9-1/14 switchport access vlan <dead_net_vlan>

interface ethernet 1/17-1/19 switchport access vlan <dead_net_vlan>

2. Save a copy of the configuration file, or capture the show running-config output to a text file, from each switch for future reference.
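The port ranges in step 1 are the ports that the earlier tasks leave unassigned. The following Python sketch derives those ranges and renders the step 1 commands in the same order. It assumes, as in this example, that ports 1/1 through 1/20 are in scope; the dead VLAN ID 999 and the function names are hypothetical. If your switch has more ports, raise last so that those ports are secured too.

```python
def to_ranges(ports: list[int], slot: int = 1) -> list[str]:
    """Collapse port numbers into range strings: [6, 9, 10] -> ["1/6", "1/9-1/10"]."""
    ordered = sorted(ports)
    ranges: list[str] = []
    i = 0
    while i < len(ordered):
        j = i
        # Extend the run while the next port number is consecutive.
        while j + 1 < len(ordered) and ordered[j + 1] == ordered[j] + 1:
            j += 1
        first, last = ordered[i], ordered[j]
        ranges.append(f"{slot}/{first}" if first == last else f"{slot}/{first}-{slot}/{last}")
        i = j + 1
    return ranges


def secure_unused(used: set[int], first: int, last: int, dead_vlan: int, slot: int = 1) -> list[str]:
    """Shut down every unused port in first..last and place it in the dead VLAN."""
    unused = to_ranges([p for p in range(first, last + 1) if p not in used], slot)
    lines = [f"interface ethernet {r} shutdown" for r in unused]
    lines += [f"interface ethernet {r} switchport mode access" for r in unused]
    lines += [f"interface ethernet {r} switchport access vlan {dead_vlan}" for r in unused]
    return lines


if __name__ == "__main__":
    # Ports used in this example: servers and iLO 1-5, storage management 7-8,
    # array iSCSI 15-16, uplink 20. VLAN 999 is a hypothetical dead VLAN.
    used = {1, 2, 3, 4, 5, 7, 8, 15, 16, 20}
    for line in secure_unused(used, first=1, last=20, dead_vlan=999):
        print(line)
```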

HPE Aruba 8320 or 8325 Configuration Example to Prepare for Network Automation

If you are creating a new cluster, you can use the dHCI network automation feature to complete your switch setup. This feature uses the dHCI wizard to gather information from your array about the connected HPE Aruba 8320 or 8325 switches and then prompts you for information to complete the setup.

Note: If you do not set up the initial cabling correctly, you will not be able to use the dHCI network automation feature. The dHCI wizard checks that you have a management port and a data port connected to one switch and a second management port and data port connected to another switch.
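The cabling rule in the note above can be written as a simple check that you run against your own cabling records. This Python sketch is not the wizard's implementation; it assumes a hypothetical list of (array port type, switch name) pairs that you record while cabling.

```python
from collections import defaultdict


def cabling_ok(links: list[tuple[str, str]]) -> bool:
    """links holds (array_port_kind, switch_name) pairs, where kind is "mgmt" or "data".

    Returns True when at least two different switches each receive both a
    management port and a data port from the array.
    """
    kinds_by_switch: dict[str, set[str]] = defaultdict(set)
    for kind, switch in links:
        kinds_by_switch[switch].add(kind)
    complete = [s for s, kinds in kinds_by_switch.items() if {"mgmt", "data"} <= kinds]
    return len(complete) >= 2


if __name__ == "__main__":
    good = [("mgmt", "sw1"), ("data", "sw1"), ("mgmt", "sw2"), ("data", "sw2")]
    bad = [("mgmt", "sw1"), ("mgmt", "sw2"), ("data", "sw1"), ("data", "sw1")]
    print(cabling_ok(good), cabling_ok(bad))  # → True False
```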

To use the network automation feature, you must have:

• Made sure that the switches are running the HPE firmware version specified in the Validated Configuration Matrix. A base HPE Storage dHCI deployment must use a minimum of two network switches of the same model that are running the same version of firmware.

• Created a new cluster. This feature does not work with existing clusters.

• Provided a password for the dHCI switch administrator, which has the user name switch_admin.

• Completed the following manual steps on the switches before you start the dHCI setup. The dHCI wizard uses this information when you select the dHCI network automation feature.

1. Set up the initial configuration on HPE Aruba 83xx switches 1 and 2.

2. Set up the virtual switching extension (VSX) configuration.

3. Configure a management network VLAN on all remaining ports as access ports (do not configure tagged or trunk ports).

4. Interconnect the HPE Aruba 83xx switches with the customer network.
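Before you start the wizard, you could record the switch details and check them against the requirements above. In this Python sketch, the Switch class, the field values, and the function name are hypothetical. It only compares the switches with each other; it does not check the firmware version against the Validated Configuration Matrix.

```python
from dataclasses import dataclass

SUPPORTED_MODELS = {"8320", "8325"}  # models covered by this example


@dataclass
class Switch:
    name: str
    model: str     # for example "8325"
    firmware: str  # version string as reported by the switch


def automation_blockers(switches: list[Switch], new_cluster: bool,
                        switch_admin_password: str) -> list[str]:
    """Return the unmet prerequisites; an empty list means none were found."""
    problems = []
    if len(switches) < 2:
        problems.append("at least two switches are required")
    if len({s.model for s in switches}) > 1:
        problems.append("switches must be the same model")
    if any(s.model not in SUPPORTED_MODELS for s in switches):
        problems.append("this example covers HPE Aruba 8320 and 8325 switches only")
    if len({s.firmware for s in switches}) > 1:
        problems.append("switches must run the same firmware version")
    if not new_cluster:
        problems.append("network automation does not work with existing clusters")
    if not switch_admin_password:
        problems.append("set a password for the switch_admin user")
    return problems


if __name__ == "__main__":
    pair = [Switch("sw1", "8325", "fw-a"), Switch("sw2", "8325", "fw-a")]
    print(automation_blockers(pair, new_cluster=True, switch_admin_password="example"))
```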

Task 1: Set Up the Initial Configuration on the HPE Aruba 83xx Switches

To use the dHCI network automation feature, you must perform the initial configuration of the HPE Aruba 8320 or HPE Aruba 8325 switch pair before you start the dHCI setup.

Note: The steps in these tasks are examples. The steps you perform depend on the switches you use and your system configuration. You should follow the best practices and documentation provided by your switch vendor.

You must be in configuration-terminal mode when you perform the commands on the switches.


Procedure

1. Prepare both HPE Aruba 83xx switches:

• If you are using a serial cable to connect to the console, specify 115,200 baud as the speed.

• Interconnect your two switches by using QSFP+ or SFP+ connections, depending on the switch model.

• Reserve at least three ports: two for the VSX link aggregation group and one for the VSX keepalive connection.

2. Configure switch 1. Log in as admin. Press Enter when prompted for a password.

Run the following commands:

config

user admin password

interface mgmt

no shutdown

ip static net_switch1_mgmt_ip/mgmt_net_netmask

default-gateway mgmt_net_gw

exit

write memory

3. Configure switch 2. Log in as admin. Press Enter when prompted for a password.

Note: If you are using a serial cable to connect switch 2 to the console, specify 115,200 baud as the speed.

Run the following commands:

config

user admin password

interface mgmt

no shutdown

ip static net_switch2_mgmt_ip/mgmt_net_netmask

default-gateway mgmt_net_gw

exit

write memory

4. Set the interface group speed.

Both switches: Run the following commands, adjusting the interface groups as needed:

config

system interface-group 1 speed 10g

system interface-group 2 speed 10g

system interface-group 3 speed 10g

system interface-group 4 speed 10g

write memory

Your interface group might differ from what is shown in the example. For more information, see the user's guide for your HPE Aruba switch.

5. Set the LLDP management IPv4 address on both switches.

Both switches: Run the following commands, adjusting the address as needed:

config terminal

lldp management-ipv4-address <switch_ip_address>

write memory
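Steps 2 and 3 give both switches addresses on the same management network with the same gateway. The following Python sketch, which uses only the standard ipaddress module, checks those values and renders the interface mgmt block with the netmask converted to prefix-length form (for example, 192.0.2.11/24). The function names and the example addresses are hypothetical; confirm the address format that your switch firmware accepts.

```python
import ipaddress


def mgmt_commands(switch_ip: str, netmask: str, gateway: str) -> list[str]:
    """Check one switch's management values and render its interface mgmt block."""
    # IPv4Interface accepts a dotted netmask and converts it to a prefix length.
    iface = ipaddress.IPv4Interface(f"{switch_ip}/{netmask}")
    gw = ipaddress.IPv4Address(gateway)
    if gw not in iface.network:
        raise ValueError(f"gateway {gw} is outside {iface.network}")
    if gw == iface.ip:
        raise ValueError("the switch address and the gateway must differ")
    return [
        "interface mgmt",
        "no shutdown",
        f"ip static {iface.with_prefixlen}",
        f"default-gateway {gw}",
        "exit",
    ]


def check_pair(ip1: str, ip2: str, netmask: str, gateway: str) -> None:
    """Both switches share the management subnet and gateway but need distinct addresses."""
    if ip1 == ip2:
        raise ValueError("switch 1 and switch 2 need different management addresses")
    mgmt_commands(ip1, netmask, gateway)
    mgmt_commands(ip2, netmask, gateway)


if __name__ == "__main__":
    check_pair("192.0.2.11", "192.0.2.12", "255.255.255.0", "192.0.2.1")
    for line in mgmt_commands("192.0.2.11", "255.255.255.0", "192.0.2.1"):
        print(line)
```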


Task 2: Set up the VSX Configuration for the HPE Aruba Switches

When you use the automation feature, dHCI checks for information about the virtual switching extension (VSX) configuration. You must have it configured on the HPE Aruba 83xx switch pair that you are using.
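In the procedure that follows, each switch gets a keepalive address on interface 1/1/43, and each switch then names the other as its keepalive peer. The following Python sketch checks that the two addresses are distinct, usable addresses in the same subnet and renders the mirrored keepalive peer and source lines. The function name and the 192.168.255.0/30 addresses are hypothetical.

```python
import ipaddress


def keepalive_plan(sw1_if: str, sw2_if: str) -> dict[str, str]:
    """Check the two keepalive addresses and return each switch's keepalive line.

    sw1_if and sw2_if are "address/prefix" strings such as "192.168.255.1/30",
    standing in for net_switchN_vsx_ip/vsx_net_netmask.
    """
    a = ipaddress.IPv4Interface(sw1_if)
    b = ipaddress.IPv4Interface(sw2_if)
    if a.network != b.network:
        raise ValueError(f"{a} and {b} are not in the same subnet")
    if a.ip == b.ip:
        raise ValueError("the two keepalive addresses must differ")
    for iface in (a, b):
        net = iface.network
        # With a prefix shorter than /31, the network and broadcast addresses are not usable hosts.
        if net.prefixlen < 31 and iface.ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{iface.ip} is the network or broadcast address of {net}")
    # Each switch names the other switch as peer and its own address as source.
    return {
        "switch1": f"keepalive peer {b.ip} source {a.ip}",
        "switch2": f"keepalive peer {a.ip} source {b.ip}",
    }


if __name__ == "__main__":
    for switch, line in keepalive_plan("192.168.255.1/30", "192.168.255.2/30").items():
        print(switch, line)
```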

Note: The steps in these tasks are examples. The steps you perform depend on the switches you use and your system configuration. You should follow the best practices and documentation provided by your switch vendor.
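The VSX role lines in the procedure are easy to mistype, especially when two commands end up on one line. This Python sketch, with hypothetical function names, parses the role line from each switch's list of commands and confirms that one switch is primary and the other is secondary. It checks only the text you plan to enter, not the running switch.

```python
VALID_ROLES = {"primary", "secondary"}


def parse_role(lines: list[str]) -> str:
    """Return the VSX role set by a list of CLI lines, rejecting malformed role lines."""
    for line in lines:
        words = line.split()
        if words and words[0] == "role":
            # A line that runs two commands together, such as "role secondaryexit", is rejected.
            if len(words) != 2 or words[1] not in VALID_ROLES:
                raise ValueError(f"malformed role line: {line!r}")
            return words[1]
    raise ValueError("no role line found")


def check_vsx_roles(commands_by_switch: dict[str, list[str]]) -> list[str]:
    """Exactly one switch of the pair must be primary and the other secondary."""
    problems = []
    roles = []
    for switch, lines in commands_by_switch.items():
        try:
            roles.append(parse_role(lines))
        except ValueError as exc:
            problems.append(f"{switch}: {exc}")
    if not problems and sorted(roles) != ["primary", "secondary"]:
        problems.append("one switch must be primary and the other secondary")
    return problems


if __name__ == "__main__":
    plan = {
        "switch1": ["config", "vsx", "role primary", "exit", "write memory"],
        "switch2": ["config", "vsx", "role secondary", "exit", "write memory"],
    }
    print(check_vsx_roles(plan))
```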

You must be in configuration-terminal mode when you perform the following commands.

Procedure

1. Configure the link aggregation group (LAG) that will be used for VSX.

Both switches: Run the following commands, adjusting the ports as needed:

config

interface lag 99

no shutdown

no routing

lacp mode active

vlan trunk native 1 tag

vlan trunk allowed all

int 1/1/48

lag 99

int 1/1/49

lag 99

2. Configure an interface to be used for the VSX keepalive connection.

Switch 1: Run the following commands:

interface 1/1/43

no routing

ip address net_switch1_vsx_ip/vsx_net_netmask

Switch 2: Run the following commands:

interface 1/1/43

no routing

ip address net_switch2_vsx_ip/vsx_net_netmask

3. Configure the VSX role.

Switch 1: Run the following commands:

config

vsx

role primary

exit

write memory

Switch 2: Run the following commands:

config

vsx

role secondary

exit

write memory

4. Enable the VSX keepalive interface.


Switch 1: Run the following commands:

config

vsx

keepalive peer net_switch2_vsx_ip source net_switch1_vsx_ip

exit

write memory

Switch 2: Run the following commands:

config

vsx

keepalive peer net_switch1_vsx_ip source net_switch2_vsx_ip

exit