Interactive Audience Measurement and

Advertising Campaign Reporting and Audit Guidelines


September 2004, Version 6.0b

United States Version


Background

Consistent and accurate measurement of Internet advertising is critical for acceptance of the

Internet and is an important factor in the growth of Internet advertising spending.

This document establishes a detailed definition for ad-impressions, which are a critical component of Internet measurement, and provides certain guidelines for Internet advertising sellers (herein referred to as “media companies” or “sites”) and ad serving organizations (including third-party ad servers and organizations that serve their own ads) for establishing consistent and accurate measurements.

Additionally, this document is intended to provide information to users of Internet measurements

on the origin of key metrics, a roadmap for evaluating the quality of procedures applied by media

companies and/or ad serving organizations, and certain other definitions of Internet measurement

metrics, which are in various stages of discussions (Appendix B).

The definitions included in this document and the applicable project efforts resulted from

requests from the American Association of Advertising Agencies (AAAA) and other members of

the buying community, who asked for establishment of consistent counting methods and defini-

tions and for improvement in overall counting accuracy.

The definitions and guidelines contained

in this document originated from a two-phase project led by the Interactive Advertising Bureau

(IAB) and facilitated by the Media Rating Council (MRC), with the participation of the Advertising

Research Foundation (ARF), as a result of these requests. Phase 1 was conducted from May

through December 2001, and Phase 2, which resulted in the current Version 2.0, was conducted

during 2003 and 2004. Both phases are described in more detail below.

FAST Definitions (dated September 3, 1999) were considered in preparation of this document. FAST was an organization, no longer active, formed several years ago by Procter & Gamble and the media industry to address Internet measurement issues. The original FAST language was maintained wherever possible.

Definitions of terms used in this document can be found in the IAB’s Glossary of Interactive Terms.

The IAB’s Ad Campaign Measurement Project

In May 2001 the IAB initiated a project intended to determine the comparability of online advertis-

ing measurement data provided by a group of Internet organizations. The MRC, ABC Interactive,

and the ARF also participated in the project, with the MRC initially designing the project approach

and acting as facilitator of many of the project discussions.

The project had two important phases:

1. Identification and Categorization of measurement methods used by the project participants,

and

2. Analysis of the numeric differences in counts arising from certain measurement options for

Ad Impressions, as well as the numeric differences between client and server-initiated

counting of Ad Impressions.

Information gathered in both phases was used to create the measurement metric definitions and

other guidelines contained herein.

The IAB, MRC and ARF, in subsequent phases of this project, plan to further refine the counting

metrics beyond Ad Impressions – i.e., Clicks, Page Impressions, Unique Visitors and Browsers,

and other emerging media delivery vehicles – which are included in Appendix B of this document.

Additionally, when the follow-up phases of this project are executed (for example, the next phase


“Clicks” will be initiated later in 2004), the project participants plan to re-assess the applicability of

the ad-impression guidance contained herein and make such modifications as new technology or

methodology may dictate.

Phase 1 – Establishment of Initial Guidelines and Metrics

The IAB commissioned PricewaterhouseCoopers LLP (PwC) to perform the testing and data gath-

ering required by Phase 1 of the project, which included identifying common measurement met-

rics, definitions and reporting practices, as well as highlighting areas of measurement diversity

among the project participants. Additionally, PwC prepared a report (available to the IAB, MRC,

ARF and project participants) that aggregated the findings, identified common trends and metrics

and proposed an initial draft of a common set of industry definitions for several of the project met-

rics.

PwC’s report was used as a basis for later participant discussions and in deriving the definitions

and guidelines contained herein. Ten Internet organizations were chosen by the IAB and requested

to participate in the project as follows:

• Three Ad Networks or Ad Serving Organizations

• Four Destination Sites

• Four Portal Sites

The following organizations participated in the project: AOL, Avenue A, CNET Networks Inc., Walt Disney Internet Group, DoubleClick, Forbes.com, MSN, New York Times Digital, Terra Lycos and Yahoo!

When combined, the participants’ ad revenues represent nearly two-thirds of total industry rev-

enue.

All of the participating organizations supplied information to PwC on their measurement criteria

and practices and cooperated in necessary interviews and testing used as the basis for PwC’s

report.

PwC’s procedures included: (1) interviews with employees of participating organizations, (2)

reviews of policies, definitions and procedures of each participating organization, (3) execution of

scripted testing to assess the collection and reporting systems of the participating organizations,

and (4) analyses of results for differences and for the purpose of suggesting consistent definitions.

Phase 2 – Refinement of Guidelines and Specific Ad Impression Counting Guideline

Phase 2 of the project included data analysis and discussion among an extended group of participants, including: (1) the Phase 1 team (now called the “Measurement Task Force” of the IAB), (2) additional ad-serving organizations, and (3) the MRC. The project team for Phase 2 did not include ABC Interactive or PwC.

Additionally, the Interactive Committee of the American Association of Advertising Agencies was

provided with updates and periodic status checks to assure that project directions and findings

were consistent with the expectations of the buying marketplace.

Certain analyses, used in assessing the changes proposed to the filtration guidelines, were performed by IM Services.


Project Participants

International Ad Servers

AdTech (Germany)

ALLYES (China)

Aufeminin (France)

CheckM8 (US/UK/Israel)

Cossette/Fjord Interactive (Canada)

Falk AG (Germany)

JNJ Interactive (Korea)

Iprom (Slovenia)

Predicta (Brazil)

Other Participants

ABCE/IFABC (Europe)

Advertising Research Foundation (U.S.)

Amer. Assoc. of Ad Agencies (U.S.)

Association of National Advertisers (U.S.)

EACA (Europe)

EIAA (Europe)

ESOMAR (Europe)

IAB Argentina

IAB Europe

IM Services (U.S.)

Interactive Media Association (Brazil)

Media Rating Council (U.S.)

PricewaterhouseCoopers LLP

JIAA (Japan)

U.S. ( * = non-publisher)

24/7 Real Media

About.com

Accipiter*

Advertising.com

AOL

Atlas DMT*

BlueStreak*

CentrPort*

CheckM8*

CNET Networks

Disney Internet Group

DoubleClick*

Fastclick

Falk North America*

Focus Interactive/Excite Network

Forbes.com

Google

I/PRO*

Klipmart*

MSN

NY Times Digital

Overture

Poindexter Systems*

Red Sheriff*/Nielsen NetRatings

Value Click

Weather Channel Interactive

Yahoo!

Zedo.com*


Scope and Applicability

These guidelines are intended to cover on-line browser or browser-equivalent based Internet activity.

Wireless, off-line cached media and Interactive-based television were not addressed in these

guidelines due to differences in infrastructure and/or delivery method. Additionally, newer extended metrics that are just beginning to be captured by media companies, such as “flash tracking” or flash sites, are not addressed in this document and will be addressed at a later time.

This document is principally applicable to Internet media companies and ad-serving organizations

and is intended as a guide to accepted practice, as developed by the IAB, MRC and ARF.

Additionally, Internet planners and buyers can use this document to assist in determining the

quality of measurements.


Contents

This document contains the following sections:

1. Measurement Definitions

a. Ad Impressions

2. Caching Guidelines

3. Filtration Guidelines

4. Auditing Guidelines

a. General

b. US Certification Recommendation

5. General Reporting Parameters

6. Disclosure Guidance

7. Conclusion and Contact Information

Appendix A – Different but Valid Implementation Options for Ad-Impressions

Appendix B – Initial Measurement Definitions Arising from Phase 1 of Project

a. Clicks

b. Visits

c. “Unique” Measurements — Browsers, Visitors and Users

d. Page Impressions

Appendix C – Brief Explanation of U.S. Associations Involved in this Project

1. Measurement Definitions

The following presents the guidance for “Ad Impression” counting resulting from Phase 2 of the

Project, which is considered finalized:

Ad Impression – A measurement of responses from an ad delivery system to an ad request from

the user's browser, which is filtered from robotic activity and is recorded at a point as late as pos-

sible in the process of delivery of the creative material to the user's browser — therefore closest to

actual opportunity to see by the user (see specifics below).

Two methods are used to deliver ad content to the user – server-initiated and client-initiated.

Server-initiated ad counting uses the site's web content server for making requests, formatting and

re-directing content. Client-initiated ad counting relies on the user's browser to perform these

activities (in this case the term “client” refers to an Internet user’s browser).

This Guideline requires ad counting to use a client-initiated approach. Server-initiated ad counting methods (the configuration in which ad impressions are counted at the same time the underlying page content is served) are not acceptable for counting ad impressions because they are the furthest away from the user actually seeing the ad.

The following details are key components of the Guideline:

1. A valid ad impression may only be counted when an ad counter receives and responds to

an HTTP request for a tracking asset from a client. The count must happen after the initia-

tion of retrieval of underlying page content. Permissible implementation techniques include

(but are not limited to) HTTP requests generated by <IMG>, <IFRAME>, or <SCRIPT SRC>.

For client-side ad serving, the ad content itself could be treated as the tracking asset and


the ad server itself could do the ad counting.

2. The response by the ad counter includes but is not limited to:

a. Delivery of a “beacon,” which may be defined as any piece of content designated as

a tracking asset. Beacons will commonly be in the form of a 1x1 pixel image, but the

Guideline does not apply any restrictions to the actual media-type or content-type

employed by a beacon response.

b. Delivery of a “302” redirect or html/javascript (which doubles as a tracking asset) to

any location, and

c. Delivery of ad content

3. Measurement of any ad delivery may be accomplished by measuring the delivery of a

tracking asset associated with the ad.

4. The ad counter must employ standard headers on the response, in order to minimize the

potential of caching. The standard headers will include the following:

• Expires

• Cache-Control

• Pragma

See Section 2 of this document, entitled Caching Guidelines, for further information.

5. One tracking asset may register impressions for multiple ads that are in separate locations on the page, as long as reasonable precautions are taken to assure that all ads recorded in this fashion have loaded prior to the tracking asset being called (for example, the count is made after loading of the final ad). This technique can be referred to as “compound tracking.” Under compound tracking, the ad group can only be counted if reasonable assurance exists that all grouped ads load prior to counting, for example through placing the tracking asset at the end of the HTML string.

As a recommendation, sites should ensure that every measured ad call is unique to the browser. There are many valid techniques available to do this, including the generation of random strings directly by the server or the use of JavaScript statements to generate random values in beacon calls.
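The counting step described in items 1 to 4 can be sketched as follows. This is a minimal illustration, not a reference implementation: the function name `beacon_response`, the in-memory `counter` dictionary and the specific header values are assumptions chosen for the example. The headers listed in item 4 are sent here as the HTTP `Expires`, `Cache-Control` and `Pragma` response headers. Robot filtration, which the definition also requires, is omitted.

```python
from email.utils import formatdate

# A minimal transparent 1x1 GIF used as the beacon body. The Guideline does not
# restrict the media type of a beacon; a pixel image is simply the common case.
PIXEL_GIF = (
    b"GIF89a\x01\x00\x01\x00\x80\x00\x00\x00\x00\x00\xff\xff\xff"
    b"!\xf9\x04\x01\x00\x00\x00\x00,\x00\x00\x00\x00\x01\x00\x01\x00"
    b"\x00\x02\x02D\x01\x00;"
)


def beacon_response(counter, ad_id):
    """Count one impression for ad_id and build the beacon response.

    Called when the ad counter receives the client's HTTP request for the
    tracking asset, i.e. after retrieval of the page content has begun.
    Returns (status, headers, body).
    """
    counter[ad_id] = counter.get(ad_id, 0) + 1  # hypothetical in-memory counter
    headers = {
        "Content-Type": "image/gif",
        "Content-Length": str(len(PIXEL_GIF)),
        # A date in the past marks the response as already expired.
        "Expires": formatdate(0, usegmt=True),
        "Cache-Control": "no-cache, no-store, must-revalidate, private",
        "Pragma": "no-cache",
    }
    return 200, headers, PIXEL_GIF
```

In practice the counter would be a log record written by the ad server rather than a dictionary, and filtration would be applied to those records before reporting.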

Other Ad-Impression Considerations

Robot filtration guidelines are presented later in this document. Appropriate filtration of robotic

activity is critical to accurate measurement of ad impressions.

Media companies and ad serving organizations should fully disclose their ad impression recording

process to buyers and other users of the ad impression count data.

2. Caching Guidelines

Cache busting techniques are required for all sites and ad-serving organizations. The following

techniques are acceptable:

1. HTTP Header Controls

2. Random Number assignment techniques to identify unique serving occurrences of

pages/ads.

Publishers and ad serving organizations should fully disclose their cache busting techniques to

buyers and other users of their data.
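As an illustration of the second technique, the sketch below appends a random number to a tracking URL so that each serving occurrence produces a distinct request that intermediate caches cannot satisfy. The parameter name `ord` and the example host are hypothetical; the Guideline does not prescribe a parameter name or a random number range.

```python
import secrets
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_cache_buster(url, param="ord"):
    """Return url with a fresh random value in the query parameter `param`.

    Any existing value for `param` is replaced, so the function can be
    applied again each time the page or ad is served.
    """
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k != param]
    query.append((param, str(secrets.randbelow(10**12))))
    return urlunsplit(parts._replace(query=urlencode(query)))
```

For example, `add_cache_buster("http://ads.example.com/beacon.gif?ad=42")` yields the same URL with an added `ord=<random digits>` parameter. The same effect can be achieved in the browser with a JavaScript-generated random value.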


3. Filtration Guidelines

Filtration of site or ad-serving transactions to remove non-human activity is critical to accurate, consistent counting. Filtration guidelines consist of two approaches: (1) filtration based on specific identification of suspected non-human activity, and (2) activity-based filtration (sometimes referred to as “pattern analysis”). Each organization should employ both techniques in combination.

Organizations are encouraged to adopt the strongest possible filtration techniques.

Minimum Requirements

The following explains minimum filtration activity acceptable for compliance with this guideline:

Specific Identification Approach:

• Robot Instruction Files are used.

• URL, user agent, and client browser information is used to exclude robots based on exact matches with a combination of two sources: (1) the IAB Industry Robot List and (2) a list of known Browser-Types published by the IAB. Matches with (1) are excluded from measurements; matches with (2) are included in measurements. (Note that filtration occurring in third party activity audits is sufficient to meet this requirement.)

• Disclose company-internal traffic on a disaggregated basis. If company-internal traffic is material to reported metrics and does not represent exposure to ads or content that is qualitatively similar to that of non-internal users, remove this traffic. Additionally, remove all robotic or non-human traffic arising from internal sources, for example IT personnel performing testing of web-pages. A universal or organizational identification string for all internally generated traffic or testing activity is recommended to facilitate assessment, disclosure or removal of this activity as necessary.
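The specific identification approach can be sketched as a classification of each request's user agent string. Everything named here is an assumption for illustration: the list entries are invented placeholders (the actual IAB Industry Robot List and Browser-Type list are not reproduced in this document), the internal identification string is hypothetical, and case-insensitive substring matching stands in for whatever matching rule those lists specify.

```python
# Placeholder entries only; real deployments would load the IAB lists.
ROBOT_ENTRIES = ["examplebot", "samplespider"]
KNOWN_BROWSER_ENTRIES = ["mozilla/", "opera/"]
# Hypothetical organizational identification string for internal traffic.
INTERNAL_TAG = "example-internal-test"


def classify_request(user_agent):
    """Classify a request as 'internal', 'robot', 'browser' or 'unknown'.

    'robot' matches are excluded from measurement and 'browser' matches are
    included. 'internal' traffic is disclosed separately or removed.
    'unknown' is an assumption of this sketch: the request matched neither
    list and is left for activity-based filtration or manual follow-up.
    """
    ua = user_agent.lower()
    if INTERNAL_TAG in ua:
        return "internal"
    # The robot list is checked first, so a robot that also presents a
    # browser-like token is still excluded.
    if any(entry in ua for entry in ROBOT_ENTRIES):
        return "robot"
    if any(entry in ua for entry in KNOWN_BROWSER_ENTRIES):
        return "browser"
    return "unknown"
```

Checking the robot list before the browser list is a design choice of the sketch; it errs toward exclusion when a user agent matches both.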

Activity-based Filtration:

• In addition to the specific identification technique described above, organizations are

required to use some form of activity-based filtration to identify new robot-suspected activ-

ity. Activity-based filtration identifies likely robot/spider activity in log-file data through the

use of one or more analytical techniques. Specifically, organizations can analyze log files

for:

o Multiple sequential activities – a certain number of ads, clicks or pages over a speci-

fied time period from one user,

o Outlier activity – users with the highest levels of activity among all site visitors or with

page/ad impressions roughly equal to the total pages on the site,

o Interaction attributes – consistent intervals between clicks or page/ad impressions from a user,

o Other suspicious activity – users accessing the robot instruction file without identifying themselves as robots.

Each suspected robot/spider arising from this analysis requires follow-up to verify the assumption that its activity is non-human.

Sites should apply all of these types of techniques, unless in the judgment of the auditor and management (after running the techniques at least once to determine their impact), a specific technique is not necessary for materially accurate reporting. If only a subset of these techniques is used, that choice should be re-challenged periodically to assure the appropriateness of the approach.

• Activity-based filtration must be applied on a periodic basis, with a minimum frequency of once per quarter. Additionally, activity-based filtration should be run on an exception basis in order to check questionable activity. In all cases, organizations must have defined procedures surrounding the schedule and application of this filtering.

The intent of activity-based filtration is to use analytics and judgment to identify likely non-human

activity for deletion (filtration) while not discarding significant real visitor activity. Activity-based fil-

tration is critical to provide an on-going “detective” internal control for identifying new types or

sources of non-human activity.
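A minimal sketch of three of the activity-based checks listed above (multiple sequential activities, consistent intervals, and access to the robot instruction file) is shown below. The thresholds, the event tuple layout and the function name are all assumptions; the Guideline leaves the decision rules to each organization. The sketch assumes the events have already passed specific identification, so any remaining user that requests `/robots.txt` has not identified itself as a robot. Flagged users are candidates for follow-up, not automatic deletions.

```python
from collections import defaultdict
from statistics import pstdev


def suspected_robots(events, max_events=100, window=60.0,
                     min_regular=5, jitter=0.5):
    """Flag users whose log activity looks non-human.

    events: iterable of (user_id, timestamp_seconds, path).
    Returns {user_id: sorted list of reasons}; unflagged users are absent.
    """
    times_by_user = defaultdict(list)
    reasons = defaultdict(set)
    for user, ts, path in events:
        times_by_user[user].append(ts)
        if path == "/robots.txt":
            reasons[user].add("robot-file")

    for user, times in times_by_user.items():
        times.sort()
        # Multiple sequential activities: more than max_events within any
        # span of `window` seconds (sliding window over sorted timestamps).
        start = 0
        for end in range(len(times)):
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 > max_events:
                reasons[user].add("volume")
                break
        # Interaction attributes: near-constant gaps between events.
        gaps = [b - a for a, b in zip(times, times[1:])]
        if len(gaps) >= min_regular and pstdev(gaps) <= jitter:
            reasons[user].add("regular-interval")

    return {user: sorted(r) for user, r in reasons.items()}
```

Outlier analysis (users with the highest activity among all visitors) is not shown; it would compare per-user totals against the site-wide distribution.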

An organization should periodically monitor its pattern analysis decision rule(s) to assure measure-

ments are protected from robot/spider inflationary activity with a minimal amount of lost real visitor

activity. Additionally, publishers and ad serving organizations should fully disclose the significant

components of their filtration process to buyers and other users of their data.

4. Auditing Guidelines

General – Third-party independent auditing is encouraged for all ad-serving applications used in

the buying and selling process. This auditing is recommended to include both counting methods

and processing/controls as follows:

1. Counting Methods: Independent verification of activity for a defined period. Counting

method procedures generally include a basic process review and risk analysis to under-

stand the measurement methods, analytical review, transaction authentication, validation of

filtration procedures and measurement recalculations. Activity audits can be executed at

the campaign level, verifying the activity associated with a specific ad creative being deliv-

ered for performance measurement purposes.

2. Processes/Controls: Examination of the internal controls surrounding the ad delivery,

recording and measurement process. Process auditing includes examination of the ade-

quacy of site or ad-server applied filtration techniques.

Although audit reports can be issued as infrequently as once per year, some audit testing should

extend to more than one period during the year to assure internal controls are maintained. Audit

reports should clearly state the periods covered by the underlying audit testing and the period

covered by the resulting certification.

US Certification Recommendation – All ad-serving applications used in the buying and selling

process are recommended to be certified as compliant with these guidelines at minimum annually.

This recommendation is strongly supported by the AAAA and other members of the buying com-

munity, for consideration of measurements as “currency.” Currently this certification recommenda-

tion is for ad-impressions only, since this counting guideline is finalized through phase 2 of the

project.

Special Auditing Guidance for Outsourced Ad-Serving Software

Ad serving organizations that market ad-serving/delivery software to publishers for use on the

publisher’s IT infrastructure (i.e., “outsourced”) should consider the following additional guidance:

1. The standardized ad-serving software should be certified on a one-time basis at the ad-

serving organization, and this certification is applied to each customer. This centralized cer-

tification is required at minimum annually.

2. Each customer’s infrastructure (and any modifications that customer has made to the ad-serving software) should be individually audited to assure continued functioning of the software and the presence of appropriate internal controls. Processes performed in the centralized certification applicable to the outsourced software are generally not re-performed. The assessment of customer internal controls (and modifications made to outsourced software, if any) is also recommended to be at minimum an annual procedure.

These certification procedures are only necessary for outsource clients who wish to present their

measurements for use by buyers.

Special Auditing Guidance for Advertising Agencies or Other Buying Organizations

If buying organizations modify or otherwise manipulate measurements from certified publishers or

ad-servers after receipt, auditing of these activities should be considered.

In addition to the MRC and its congressionally supported certification process for the broadcast industry, a number of other certifiers, and types and levels of certification, are available to ad serving organizations.

5. General Reporting Parameters

In order to provide for more standardization in Internet Measurement reporting, the following gen-

eral reporting parameters are recommended:

Day — 12:00 midnight to 12:00 midnight

Time Zone – Full disclosure of the time-zone used to produce the measurement report is required.

It is preferable, although not a current compliance requirement, for certified publishers or ad-

servers to have the ability to produce audience reports in a consistent time-zone so buyers can

assess activity across measurement organizations. For US-based reports it is recommended that reports be available on the basis of the Eastern time-zone; for non US-based reports this is recommended to be GMT.

Week — Monday through Sunday

Weekparts — M-F, M-Sun, Sat, Sun, Sat-Sun

Month – Three reporting methods: (1) TV Broadcast month definition, in which the Month begins on the Monday of the week containing the first full weekend of the month, (2) 4-week periods (13 per year), consistent with media planning for other media, or (3) a calendar month. For financial reporting purposes, a month is defined as a calendar month.

Additional Recommendation: Dayparts – Internet usage patterns need further analysis to determine

effective and logical reporting day parts. We encourage standardization of this measurement

parameter.
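The week, weekpart and month parameters above can be expressed as simple date arithmetic. The sketch below follows the wording of this section literally; the function names are hypothetical. In particular, `broadcast_month_start` implements "the Monday of the week containing the first full weekend of the month" as written here, which is an interpretation and may differ from other published broadcast calendars in months whose first day is a Sunday.

```python
from datetime import date, timedelta


def reporting_week(day):
    """Return (Monday, Sunday) of the Monday-through-Sunday week containing day."""
    monday = day - timedelta(days=day.weekday())  # weekday(): Monday == 0
    return monday, monday + timedelta(days=6)


def weekparts(day):
    """Return the weekpart labels (from the list above) that include day."""
    wd = day.weekday()
    if wd <= 4:
        return ["M-F", "M-Sun"]
    return ["M-Sun", "Sat" if wd == 5 else "Sun", "Sat-Sun"]


def broadcast_month_start(year, month):
    """Monday of the week containing the first full weekend of the month.

    The first Saturday of a month always falls on or before the 7th, so the
    following Sunday is in the same month; that pair is the first full weekend.
    """
    first = date(year, month, 1)
    first_saturday = first + timedelta(days=(5 - first.weekday()) % 7)
    return first_saturday - timedelta(days=5)
```

For September 2004, the month of this document, `broadcast_month_start(2004, 9)` returns Monday, August 30, 2004.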

6. Disclosure Guidance

An organization’s methodology for accumulating Internet measurements should be fully described

to users of the data.

Specifically, the nature of Internet measurements, methods of sampling used (if applicable), data

collection methods employed, data editing procedures or other types of data adjustment or pro-

jection, calculation explanations, reporting standards (if applicable), reliability of results (if applica-

ble) and limitations of the data should be included in the disclosure.

The following presents examples of the types of information disclosed.

Nature of Internet Measurements

• Name of Property, Domain, Site, Included in the Measurement

• Name of Measurement Report

• Type of Measurements Reported


o Time Periods Included

o Days Included

o Basis for Measurement

o Geographic Areas

o Significant Sub-Groupings of Data

• Formats of Reported Data

• Special Promotions Impacting Measurements

• Nature of Auditing Applied and Directions to Access to Audit Report

• Sampling/Projections Used

o Sampling Methods Used for Browsers not Accepting Cookies or Browsers with New

Cookies

o Explanation of Projection Methods

Data Collection Methods Employed

• Method of Data Collection

o Logging Method

o Logging Frequency

o Logging Capture Point

• Types of Data Collected

o Contents of Log Files

o Cookie Types

• Contacts with Users (if applicable)

• Research on Accuracy of Basic Data

o Cookie Participation Percentages

o Latency Estimates

• Rate of Response (if applicable)

Editing or Data Adjustment Procedures

• Checking Records for Completeness

• Consistency Checks

• Accuracy Checks

• Rules for Handling Inconsistencies

• Circumstances for Discarding Data

• Handling of Partial Data Records

o Ascription Procedures

Computation of Reported Results

• Description of How Estimates are Calculated

o Illustrations are desirable

• Weighting Techniques (if applicable)

• Verification or Quality Control Checks in Data Processing Operations

• Pre-Release Quality Controls

• Reprocessing or Error Correction Rules


Reporting Standards (if applicable)

• Requirements for Inclusion in Reports, Based on Minimum Activity Levels

Reliability of Results

• Sampling Error (if applicable)

Limitations on Data Use

• Non-sampling Error

• Errors or Unusual Conditions Noted in Reporting Period

• Limitations of Measurement, such as Caching, Multiple Users per Browser, Internet latency

7. Conclusion and Contact Information

This document represents the combined effort of the IAB (with PwC and ABCi in Phase 1), the

project participants, MRC and ARF to bring consistency and increased accuracy to Internet meas-

urements. We encourage adoption of these guidelines by all organizations that measure Internet

activity and wish to have their measurements included for consideration by buyers.

For further information or questions please contact the following individuals:

Interactive Advertising Bureau:

Greg Stuart

President & CEO

200 Park Avenue South, Suite 501

New York, NY 10003

212-949-9033

Media Rating Council:

George Ivie

Executive Director

370 Lexington Ave., Suite 902

New York, NY 10017

(212) 972-0300

Advertising Research Foundation:

Bob Barocci

President

641 Lexington Avenue

New York, NY 10022

212-751-5656

Interactive Audience Measurement and Advertising

Campaign Reporting and Audit Guidelines

12U.S. Version

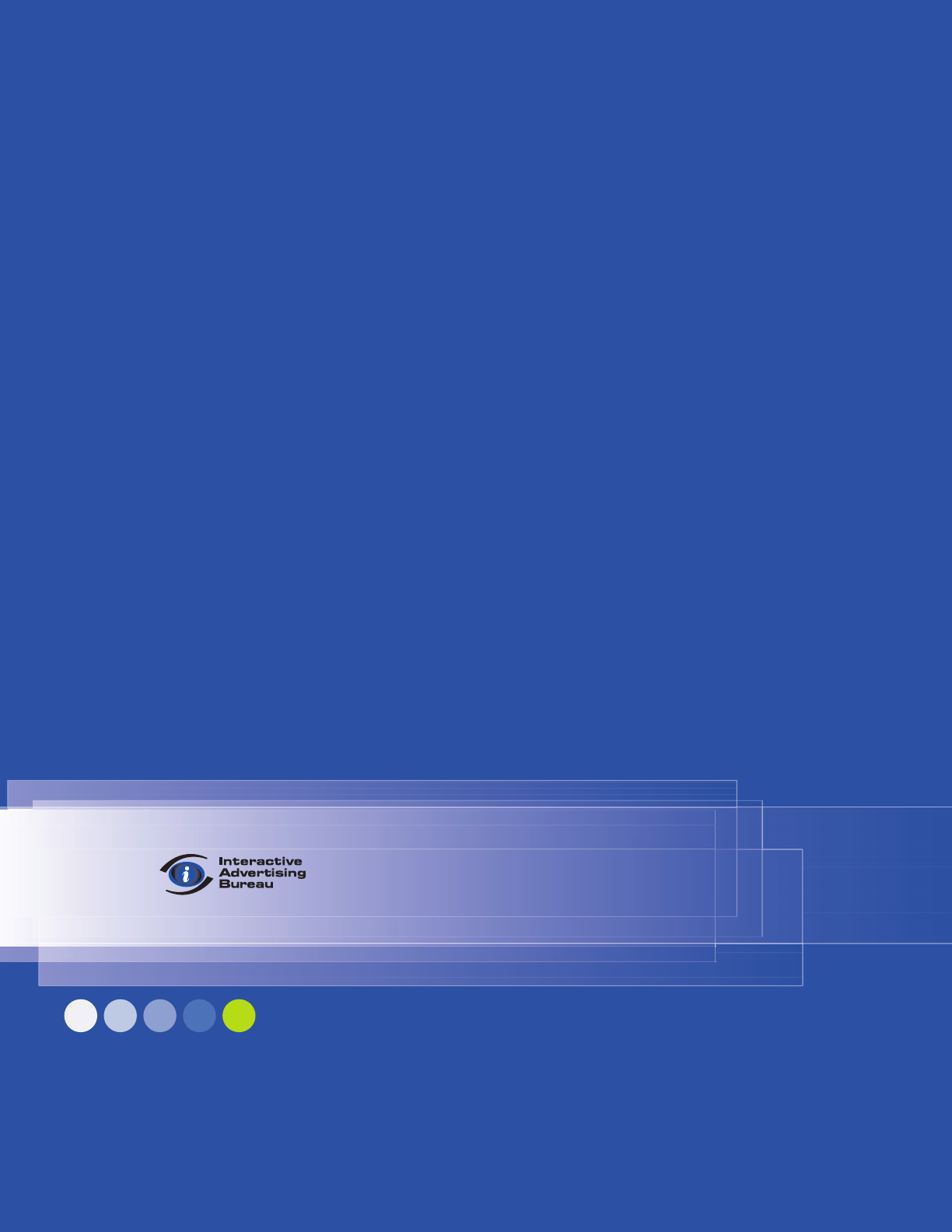

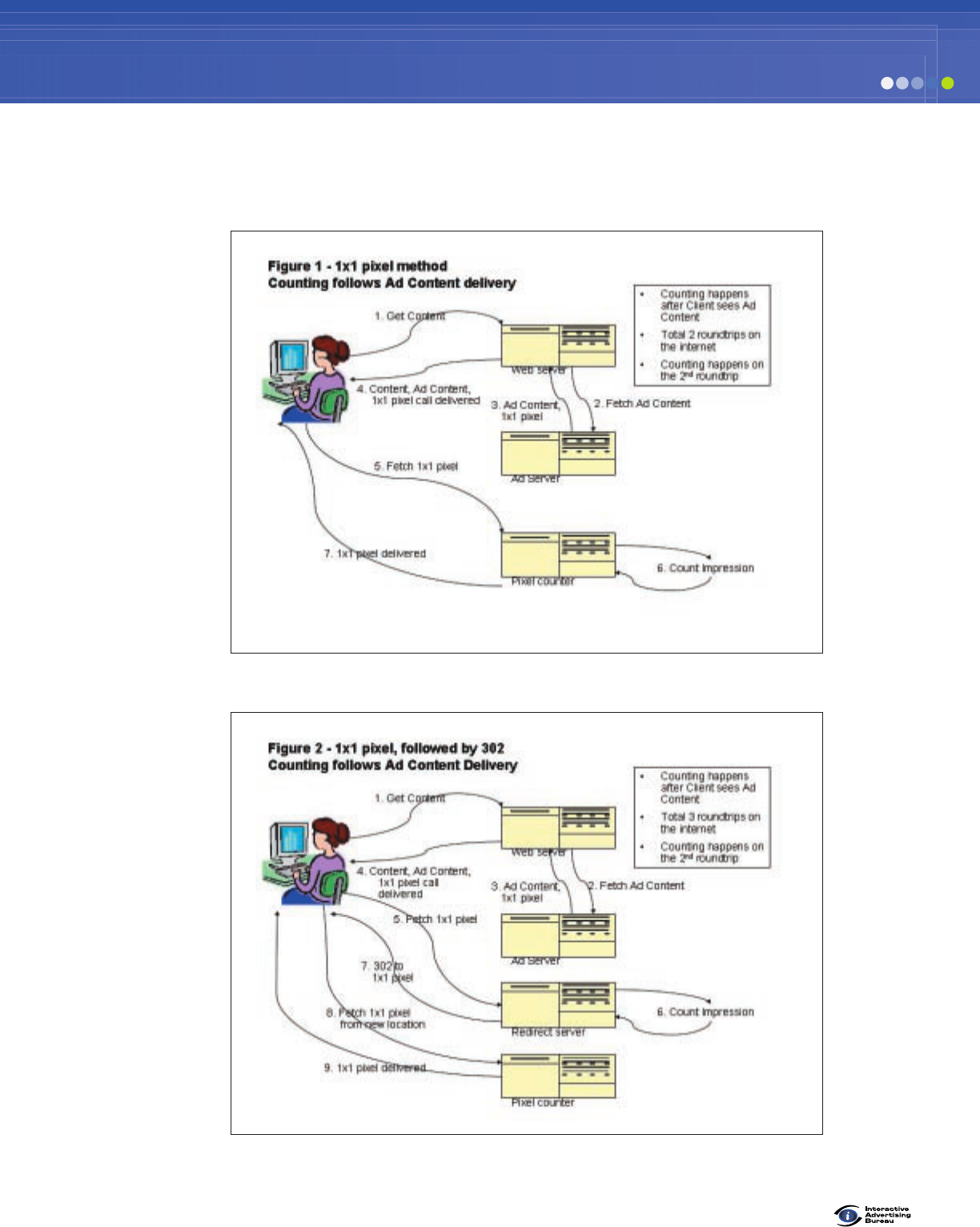

APPENDIX A

Figures – Different but Valid Implementation Options for Ad-Impressions


APPENDIX B

Initial Measurement Definitions Arising from Phase 1 of Project

The following measurement definitions, resulting from Phase 1 of the Project, are considered “ini-

tial” guidance and are presented for consideration by interested organizations. The IAB, MRC, ARF

and the Measurement Task Force will be finalizing these definitions in later phases of the Project.

Click – There are three types of user reactions to Internet content or advertising – click-through, in-

unit click and mouse-over. All of these reactions are generally referred to as “clicks.” A click-

through is the measurement of a user-initiated action of clicking on an ad element, causing a redi-

rect to another web location. Click-throughs are tracked and reported at the ad server, and gener-

ally include the use of a 302 redirect. This measurement is filtered for robotic activity.

In-unit clicks and mouse-overs (mouse-overs are a form of ad interaction) result in server log events and new content being served and are generally measured using 302s; however, they may not necessarily include a redirect to another web location. Certain in-unit clicks and mouse-overs may be recorded in a batch mode and reported on a delayed basis. Organizations using a batch processing method should have proper controls over establishing cut-off of measurement periods.

Clicks can be reported in total; however, significant types of clicks should be presented with disaggregated detail. If, due to ad-counting software limitations, an organization cannot report the disaggregated detail of click-types, only click-throughs should be reported.

Robot filtration guidelines are presented in Section 3 of this document. Appropriate filtration of robotic activity is critical to accurate measurement of clicks. Media companies and ad serving organizations should fully disclose their click recording process to buyers and other users of the click count data.

It is important to note that clicks are not equivalent to web-site referrals measured at the destination site. Even if an organization complies with the guidelines specified herein, there will still be measurement differences between the originating server and the destination site (advertiser). The use of 302 redirects helps to mitigate this difference because of its easy and objective quantification; however, differences will remain from measurements taken at the destination site because of various issues such as latency, user aborts, etc. The magnitude of this difference may be a subject for future phases of this project.

Additionally, this guideline does not cover measurement specifics of post-click activity, which also

may be a subject of future phases of this project.
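Click-through measurement with a 302 redirect, as described in the Click definition, can be sketched as follows. The query parameter names `ad` and `dest`, the function name and the in-memory `click_log` are assumptions made for the example. Robot filtration of the logged clicks is not shown, and a production ad server would also validate the destination against its booked campaigns rather than redirect to an arbitrary URL.

```python
from urllib.parse import parse_qs


def handle_click(query_string, click_log):
    """Record a click-through at the ad server and build the redirect.

    query_string: the raw query of the click URL, for example
        "ad=42&dest=http%3A%2F%2Fadvertiser.example.com%2Flanding"
    Returns (status, headers). The click is logged before the 302 is
    issued, so it is counted at the originating server regardless of
    whether the browser completes the request to the destination.
    """
    params = parse_qs(query_string)
    ad_id = params.get("ad", [""])[0]
    dest = params.get("dest", [""])[0]
    if not ad_id or not dest.startswith(("http://", "https://")):
        return 400, {}
    click_log.append(ad_id)  # hypothetical log; real systems write a log record
    return 302, {"Location": dest, "Cache-Control": "no-cache"}
```

Because the count is taken before the redirect is followed, latency and user aborts account for part of the gap between this count and referrals measured at the destination site.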

Visit – One or more text and/or graphics downloads from a site qualifying as at least one page,

without 30 consecutive minutes of inactivity, which can be reasonably attributed to a single brows-

er for a single session. A browser must “pull” text or graphics content to be considered a visit.

This measurement is filtered for robotic activity prior to reporting and is determined using one of

two acceptable methods (presented in preferred order):

1. Unique Registration: When access to a site is restricted solely to registered visitors (visitors

who have completed a survey on the first visit to identify themselves and supply a user-id

and password on subsequent visits), that site can determine visits using instances of

unique registered visitors.

2. Unique Cookie with a Heuristic: The site’s web server can store a small piece of information

with a browser that uniquely identifies that browser. For browsers that accept cookies, vis-

its can be approximated using the page and/or graphics downloads identifiable to a

unique-cookie (recognizing that this is not perfect because it merely measures unique

“browsers”). For browsers that do not accept a cookie, a heuristic (decision rule) can be


used to count visits using a unique IP address and user agent string, which would be

added to the cookie-based counts. For these cases, using the full user agent string is rec-

ommended.

The unique cookie should be identified as unique using a technique such as a cookie number. Additionally, sites with multiple domains or properties should consider special sharing rules for cookie information to increase accuracy and provide for greater leveraging of unique cookies. Permanent cookies should be established with a lengthy expiration time, meant to approximate the useful life (at minimum) of the browser technology.

Registration, cookies and unique IP/User Agent String measurement methods can be used in combination.

Certain organizations rely on unique IP address and user agent string with a heuristic as a

sole measurement technique for visits. This method should not be used as the sole technique because of

inherent inaccuracies arising from dynamic IP addressing, which distorts these measures significantly.

Robot filtration guidelines are presented later in this document. Appropriate filtration of robotic

activity is critical to accurate measurement of Visits. Media companies and ad serving organizations

should fully disclose their visit recording process, including the scope of measurement and

measurement method, to buyers and other users of the visit count data.

“Unique” Measurements (Browsers, Visitors and Users)

Unique Users (and Unique Visitors)

Unique Users represent the number of actual individual people, within a designated reporting timeframe,

with activity consisting of one or more visits to a site or the delivery of pushed content. A

unique user can be either (1) an actual individual that accessed a site (referred to as a unique

visitor), or (2) an actual individual that is pushed content and/or ads such as e-mail, newsletters,

interstitials and pop-under ads. Each individual is counted only once in the unique user or visitor

measures for the reporting period.

The unique user and visitor measures are filtered for robotic activity prior to reporting and these

measures are determined using one of two acceptable methods (presented in preferred order) or a

combination of these methods:

1. Registration-Based Method: For sites that qualify for and use unique registration to deter-

mine visits (using a user-id and password in accordance with method 1 under “Visits” above)

or recipients of pushed content, this information can be used to determine unique users

across a reporting period. Best efforts should be made to avoid multiple counting of single

users registered more than once as well as multiple users using the same registration.

2. Cookie-Based Method: For sites that utilize the unique cookie approach to determine visits

(method 2 under “Visits” above) or recipients of pushed content, this information can be used

as a basis to determine unique users across a reporting period. The use of persistent cookies

is generally necessary for this measure. An algorithm (data model) is used to estimate the

number of unique users on the basis of the number of unique cookies. The underlying basis

for this algorithm should be a study of users based on direct contact and/or observation of

people using the browser at the time of accessing site content with the unique cookie and

the number of browsers in use by these users. This study should be projectable to the users

of the site and periodically re-performed at reasonable intervals. If only registered users are

used for this study, an assessment should be made as to the projectability of this group. The

algorithm should adjust the unique cookie number, thereby accounting for multiple browser

usage by individuals and multiple individuals using a single browser.
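
One simple form the cookie-to-user adjustment could take is sketched below. The two factors, `browsers_per_person` and `persons_per_browser`, are hypothetical inputs assumed to come from the kind of user study described above; this guideline does not prescribe a specific formula, so the linear adjustment shown is an assumption for illustration only.

```python
def estimate_unique_users(unique_cookies, browsers_per_person, persons_per_browser):
    """Adjust a unique-cookie count toward an estimate of unique people.

    browsers_per_person: average browsers used by one individual (study-derived,
        hypothetical); values above 1 reduce the estimate.
    persons_per_browser: average individuals sharing one browser (study-derived,
        hypothetical); values above 1 increase the estimate.
    """
    if browsers_per_person <= 0 or persons_per_browser <= 0:
        raise ValueError("study factors must be positive")
    return unique_cookies * persons_per_browser / browsers_per_person


# 1,000 unique cookies, 1.25 browsers per person, no browser sharing.
print(estimate_unique_users(1000, 1.25, 1.0))  # 800.0
```

If neither factor is applied, the figure remains a count of unique cookies rather than an estimate of people.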

Unique Browsers

For organizations using the cookie-based method for determining “Uniques,” if no adjustments are

made to the unique cookie number of the site to adjust to actual people (adjusting to unique users

from unique cookies), the number should be referred to as “Unique Browsers.” The fact that no

adjustment has been made to reflect unique users should be fully disclosed by the media company.

Other Guidance for “Unique” Measurements

For sites utilizing cookie-based techniques, a method should be used to attribute unique user or

visitor counts to those browsers that do not accept cookies or those browsers accepting cookies

that have “first use” or new cookies (essentially those that cannot reasonably be determined to be

repeat visitors).

A site can use either a census-based projection technique or a sampling method to estimate this

activity. These methods are explained below:

For census-based projection, the site uses its log to accumulate visits for browsers not accepting

cookies and browsers with new cookies. Using this information, the site can:

(1) Assume no unique user activity from new cookies and cookie rejecting browsers, and then proj-

ect unique user activity levels using a common measure (page impressions per visit, etc.) based on

cookie-accepting repeat visitor activity; or

(2) Use a specific identification method (unique IP and user agent string) to assist in identifying the

Unique Users represented in this group. Using the full user agent string is recommended.
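
Option (1) leaves the exact projection open. One possible reading, sketched here under stated assumptions, is to derive an activity rate from cookie-accepting repeat visitors and apply it to the activity logged for browsers with new cookies or no cookies. The function name, its parameters, and the choice of page impressions per unique user as the common measure are all assumptions made for illustration.

```python
def project_unattributed_uniques(repeat_uniques, repeat_page_impressions,
                                 unattributed_page_impressions):
    """Project unique users for new-cookie and cookie-rejecting browsers.

    Assumes the unattributed group behaves like cookie-accepting repeat
    visitors, i.e. generates the same page impressions per unique user.
    """
    if repeat_uniques <= 0 or repeat_page_impressions <= 0:
        raise ValueError("repeat-visitor counts must be positive")
    impressions_per_unique = repeat_page_impressions / repeat_uniques
    return unattributed_page_impressions / impressions_per_unique


# Repeat visitors: 1,000 uniques generating 5,000 page impressions (5 each).
# Unattributed browsers generated 2,000 page impressions.
print(project_unattributed_uniques(1000, 5000, 2000))  # 400.0
```

Whatever measure is chosen, the projection is only as good as the assumption that the unattributed group resembles repeat visitors, which is one reason the projection method should be disclosed.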

Census-based projection is generally preferred. However, for sites with unusually high volume

(making census-based techniques infeasible) or other extenuating circumstances, a random sampling

technique is acceptable.

For sample-based projection, the site log continues to be used; however, a sample of log data is

used to build activity measures of non-cookied users and users with new cookies. The sampling

method must be a known probability technique of adequate design and sample-size to provide

estimates at the 95% or greater confidence level.
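
As an illustration of a known probability technique, the sketch below draws a simple random sample of per-browser activity values and reports the sample mean with an approximate 95% confidence interval. The function name and inputs are hypothetical, and the normal approximation (z = 1.96, no finite-population correction) is an assumption that holds reasonably only for adequately large samples.

```python
import math
import random

Z_95 = 1.96  # two-sided normal critical value for 95% confidence


def sample_mean_interval(values, sample_size, seed=None):
    """Return (mean, lower, upper) for a simple random sample of `values`.

    `values` is a list of per-browser activity measures (for example, visits
    per non-cookied browser); every element has equal selection probability.
    """
    if not 2 <= sample_size <= len(values):
        raise ValueError("sample_size must be between 2 and len(values)")
    sample = random.Random(seed).sample(values, sample_size)
    mean = sum(sample) / sample_size
    # Unbiased sample variance, then the standard error of the mean.
    variance = sum((x - mean) ** 2 for x in sample) / (sample_size - 1)
    half_width = Z_95 * math.sqrt(variance / sample_size)
    return mean, mean - half_width, mean + half_width
```

A wider interval signals that a larger sample is needed before the estimate is precise enough to report.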

The burden of proof is on the measurement provider to establish the sufficiency of the sampling

methods used. Media companies and ad serving organizations should fully disclose their unique

user measurement process, including projection methods and/or sampling methods, to buyers and

other users of the unique user data.

Robot filtration guidelines are presented later in the document. Appropriate filtration of robotic

activity is critical to accurate measurement of unique users.

Page Impressions – In addition to the metrics defined above, several organizations internally

and/or externally report page impressions. For purposes of this document, page impression meas-

urement needs further analysis to determine best practices and address certain industry issues.

The IAB’s Ad Campaign Measurement project included evaluation of page impression measure-

ment, and the following is presented to provide a page-impression measurement definition:

Page Impressions are defined as a measurement of responses from a web server to a page request

from the user browser, which is filtered to remove robotic activity and error codes prior to reporting,

and is recorded at a point as close as possible to the user’s opportunity to see the page.

Much of this activity is recorded at the content server level.

Good filtration procedures are critical to page-impression measurement. Additionally, consistent

handling of auto-refreshed pages and other pseudo-page content (surveys, pop-ups, etc.) in defin-

ing a “page” and establishing rules for the counting process is also critical. These page-like items

should be counted as follows:

• Pop-ups: ad impressions

• Interstitials: ad impressions

• Pop-unders: ad impressions

• Surveys: page impressions

• HTML Newsletters (if opened): page impressions if not solely advertising content, otherwise

ad impressions

• Auto-Refreshed Pages: Site-set auto-refresh – page impressions, subject to the following

criteria. The measuring organization and user should consider: (1) whether the page is

likely to be in the background or minimized, thereby diminishing the opportunity to view (if the

content type is likely to be in the background or minimized while in use, or the organization

cannot determine whether minimization has occurred, these auto-refreshed pages may be

assessed and/or valued differently); and (2) whether the refresh rate is reasonable based on

content type. User-set auto-refresh – generally counted as page impressions.

• Frames: page impressions; organizational rules should be developed for converting frame

loads into page impressions and these rules should be disclosed. One acceptable method

is to identify a frame which contains the majority of content and count a page impression

only when this dominant frame is loaded. These items should be separately identified and

quantified within page-impression totals. Significant disaggregated categories should be

prominently displayed.
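
The counting rules in the list above can be expressed as a small lookup, sketched here for illustration. The item-type strings, flag names, and the choice to return `None` for items that are not counted (an unopened newsletter, a non-dominant frame) are assumptions of this example; the auto-refresh criteria are not modeled.

```python
PAGE_IMPRESSION = "page impression"
AD_IMPRESSION = "ad impression"

# Item types whose category does not depend on any further condition.
_FIXED_RULES = {
    "pop-up": AD_IMPRESSION,
    "interstitial": AD_IMPRESSION,
    "pop-under": AD_IMPRESSION,
    "survey": PAGE_IMPRESSION,
    "auto-refresh": PAGE_IMPRESSION,  # background and refresh-rate criteria not modeled
}


def classify_item(item_type, opened=True, solely_advertising=False,
                  dominant_frame=True):
    """Return the impression category for a page-like item, or None if not counted."""
    if item_type in _FIXED_RULES:
        return _FIXED_RULES[item_type]
    if item_type == "html-newsletter":
        if not opened:
            return None  # only opened newsletters are counted
        return AD_IMPRESSION if solely_advertising else PAGE_IMPRESSION
    if item_type == "frame":
        # One acceptable method: count only the frame holding most of the content.
        return PAGE_IMPRESSION if dominant_frame else None
    raise ValueError(f"unknown item type: {item_type}")
```

Keeping the category alongside the item type makes it straightforward to report these items separately within page-impression totals.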

Ads not served by an ad-serving system (i.e., ads embedded in page content) are generally count-

ed by the same systems that derive page impressions or through the use of “beacon” technolo-

gies. In all cases, ads not served by ad-serving systems should be disaggregated for reporting

purposes from other ad impressions.

Media companies and ad serving organizations should fully disclose their page impression count

process to buyers and other users of the page impression count data.

APPENDIX C

Brief Explanation of U.S. Associations Involved in this Project

Advertising Research Foundation (ARF)

Founded in 1936 by the Association of National Advertisers and the American Association of

Advertising Agencies, the Advertising Research Foundation (ARF) is a nonprofit corporate-mem-

bership association which is today the preeminent professional organization in the field of advertis-

ing, marketing and media research. Its combined membership represents more than 400 advertis-

ers, advertising agencies, research firms, media companies, educational institutions and interna-

tional organizations.

The principal mission of the ARF is to improve the practice of advertising, marketing and media

research in pursuit of more effective marketing and advertising communications.

American Association of Advertising Agencies (AAAA)

Founded in 1917, the American Association of Advertising Agencies (AAAA) is the national trade

association representing the advertising agency business in the United States. Its membership

produces approximately 75 percent of the total advertising volume placed by agencies nationwide.

Although virtually all of the large, multi-national agencies are members of the AAAA, more than 60

percent of AAAA membership bills less than $10 million per year.

The AAAA is not a club. It is a management-oriented association that offers its members the

broadest possible services, expertise and information regarding the advertising agency business.

The average AAAA agency has been a member for more than 20 years.

Interactive Advertising Bureau (IAB)

The IAB is the only association dedicated to helping online, Interactive broadcasting, email, wire-

less and Interactive television media companies increase their revenues.

The quality of the IAB leadership, membership and industry initiatives, such as standards,

research, advocacy and education, benefits the membership as well as the industry as a whole.

IAB Objectives

• To increase the share of advertising and marketing dollars that Interactive media captures

in the marketplace

• To organize the industry to set standards and guidelines that make Interactive an easier

medium for agencies and marketers to buy and capture value

• To prove and promote the effectiveness of Interactive advertising to advertisers, agencies,

marketers & press

• To be the primary advocate for the Interactive marketing and advertising industry

• To expand the breadth and depth of IAB membership while increasing direct value to mem-

bers.

Media Rating Council (MRC)

The Media Rating Council, Inc. (MRC) is an independent, non-profit organization guided and

empowered by its members, authorized by Congressional action, to be the verifier of syndicated

audience measurement of media in the U.S. The MRC Board grants Accreditation to research

studies and ancillary services whose methodologies and disclosures meet the Minimum Standards

for Media Rating Research. Accreditation decisions of the MRC Board are based on the scrutiny of

annual, confidential, neutral MRC-designed third-party audits.

The MRC membership represents companies from all major media, advertising agencies, mar-

keters and media-specific trade associations, excluding measurement services. The U.S. Media

Industry recognizes the MRC to be the authoritative body for monitoring, critiquing and seeking

continuous improvement in syndicated media research studies. In addition, the MRC membership

actively pursues research issues it considers priorities in an effort to improve the quality of

research in the marketplace.
